Differential privacy

Anonymized data isn't.

Successful de-anonymization incidents:

- Netflix Prize: Arvind Narayanan and Vitaly Shmatikov linked the anonymized Netflix training database with IMDb (using the dates of ratings) to re-identify some users.

- William Weld’s medical record: Latanya Sweeney linked the anonymized GIC database with voter registration records and was able to identify the medical record of William Weld, then governor of Massachusetts.

- Homer et al.: Nils Homer and colleagues argued that composite statistics across cohorts do not mask individual identity within genome-wide association studies (GWAS).

Providing aggregate statistical information about the data may reveal information about the individuals.

Formalizing privacy in statistical databases protects against these kinds of de-anonymization techniques.

Dwork & Roth 2014

Differential privacy

- Requirement: the outcome of any analysis is essentially equally likely, regardless of whether any individual joins, or refrains from joining, the dataset.

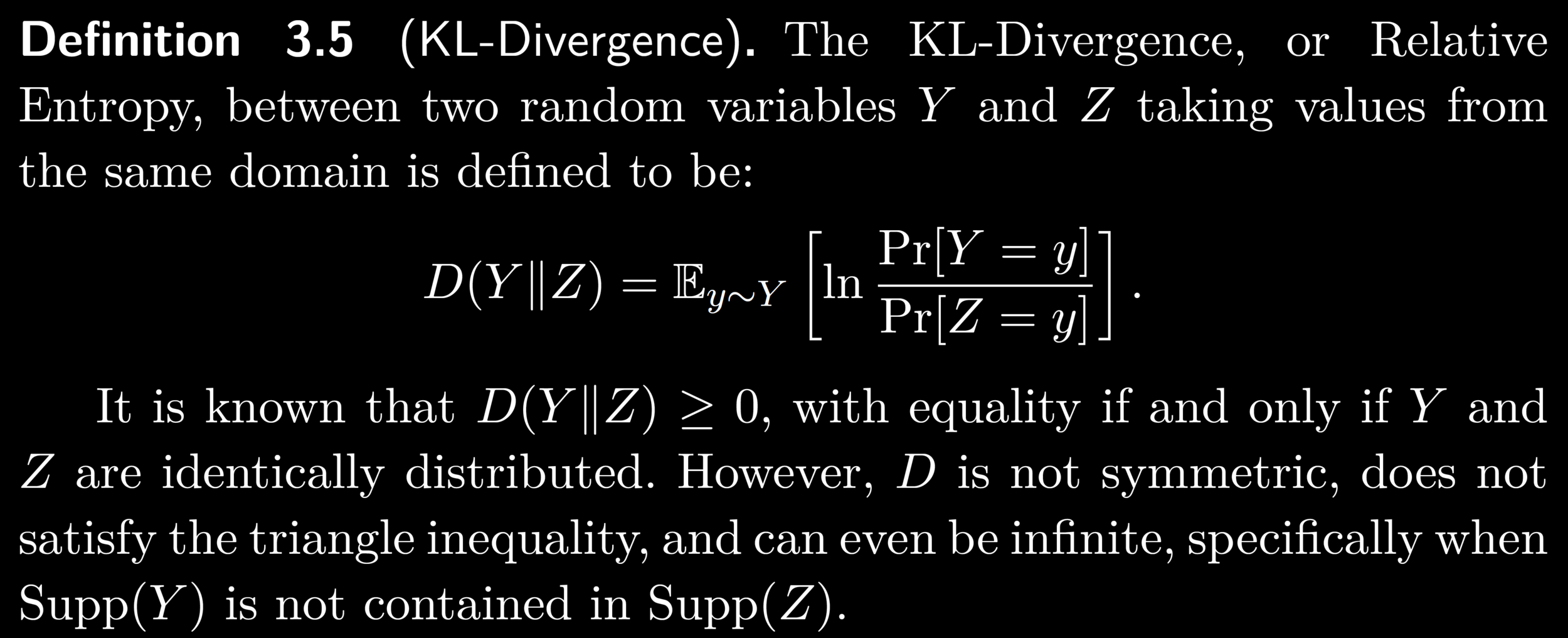

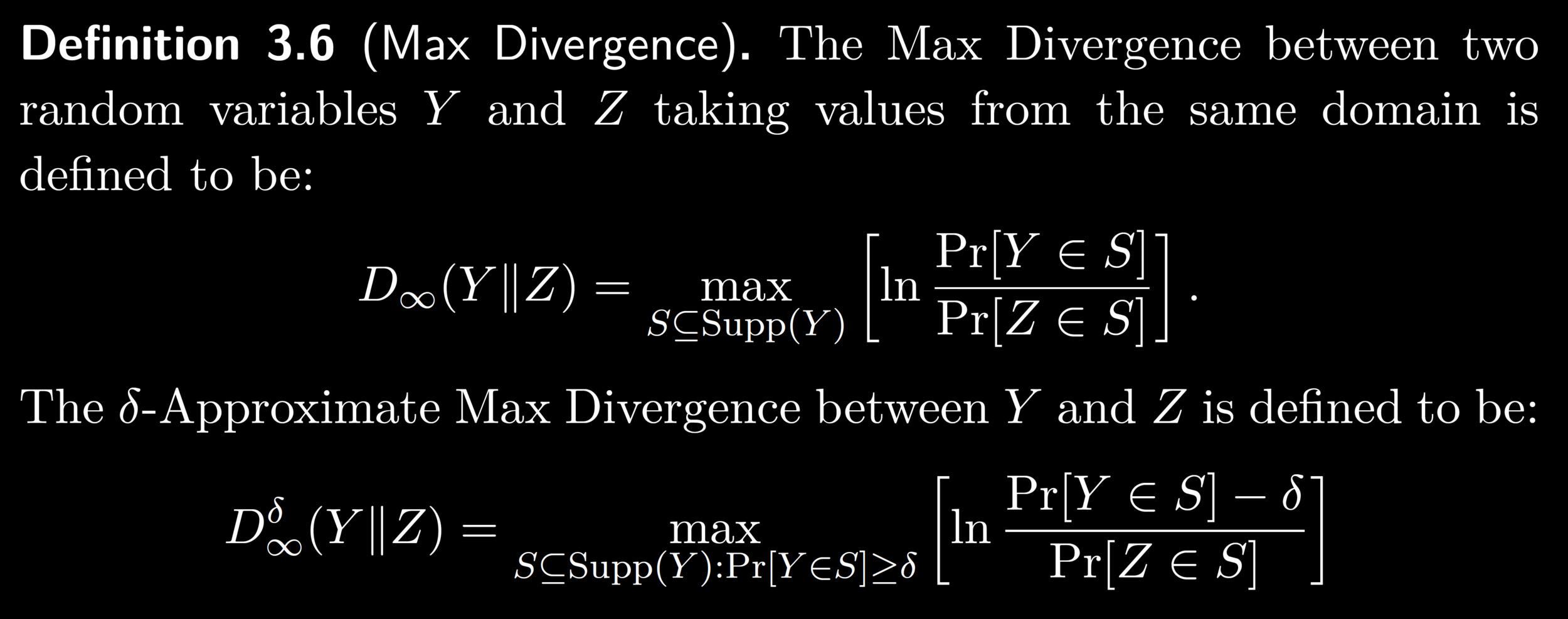

- Definition: a randomized mechanism \(M\) gives \((\epsilon, \delta)\)-differential privacy if, for all pairs of adjacent data sets \((x,y)\) and all subsets \(S\) of possible outputs, \[P(M_x \in S) \leq e^\epsilon P(M_y \in S) + \delta\] (when \(\delta = 0\), this bounds the ratio of the two probabilities by \(e^\epsilon\)).

Illustration: two possible worlds and the same result R.

- Possible world where I submit a survey: Prob(R) = A

- Possible world where I do not submit a survey: Prob(R) = B

\[A \approx B\]
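The definition can be checked by hand on a small mechanism. The sketch below uses coin-flip randomized response, which is an illustrative choice and not something these slides prescribe: the respondent reports the truth if a first fair coin lands heads, and otherwise reports the outcome of a second fair coin. The function names are hypothetical. Because the output space is finite and \(\delta = 0\), it is enough to compare the two worlds outcome by outcome.

```python
import math
from fractions import Fraction


def randomized_response_distribution(truth: bool) -> dict:
    """Exact output distribution of coin-flip randomized response.

    First fair coin heads: report the truth.
    First fair coin tails: report the result of a second fair coin.
    """
    half = Fraction(1, 2)
    p_yes = half * (1 if truth else 0) + half * half
    return {True: p_yes, False: 1 - p_yes}


def worst_case_epsilon(dist_x: dict, dist_y: dict) -> float:
    """Smallest epsilon with P_x(o) <= e^epsilon * P_y(o) for every
    outcome o, checked in both directions (delta = 0)."""
    ratios = []
    for outcome in dist_x:
        ratios.append(dist_x[outcome] / dist_y[outcome])
        ratios.append(dist_y[outcome] / dist_x[outcome])
    return math.log(float(max(ratios)))


# Two adjacent "worlds": the respondent's true answer is yes or no.
world_a = randomized_response_distribution(True)
world_b = randomized_response_distribution(False)
epsilon = worst_case_epsilon(world_a, world_b)
print(f"randomized response satisfies (epsilon, 0)-DP with epsilon = {epsilon:.4f}")
```

Here A = 3/4 and B = 1/4 for the answer "yes", so the ratio is 3 and the privacy loss is \(\epsilon = \ln 3\).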

Differential privacy

- immune to auxiliary information

- concrete measure of privacy loss: \(\epsilon\)

- bounds on cumulative privacy loss over multiple analyses
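The last property can be made concrete with the basic composition bound, stated here as a sketch. The indexed mechanisms \(M_1, \dots, M_k\) and their parameters \((\epsilon_i, \delta_i)\) are notation introduced for this illustration. If each \(M_i\) gives \((\epsilon_i, \delta_i)\)-differential privacy, then releasing all \(k\) outputs together gives

\[\left(\sum_{i=1}^{k} \epsilon_i,\ \sum_{i=1}^{k} \delta_i\right)\text{-differential privacy.}\]

For example, answering ten queries, each with \(\epsilon_i = 0.1\) and \(\delta_i = 0\), incurs a total privacy loss of at most \(\epsilon = 1\).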

Differential privacy mechanisms

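- Laplace mechanism: to achieve \((\epsilon, 0)\)-differential privacy for a numeric query \(f\), it suffices to add symmetric Laplace noise with scale \(\frac{\Delta f}{\epsilon}\), where the sensitivity \(\Delta f\) is the largest difference \(|f(x) - f(y)|\) over adjacent data sets \((x,y)\); the noise obscures that difference.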

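- Exponential mechanism: if \(M(D,q)\) returns \(r\) with probability \(\propto e^{\frac{\epsilon q(D,r)}{2\Delta q}}\), where \(q(D,r)\) scores the quality of output \(r\) on data set \(D\) and \(\Delta q\) is the sensitivity of \(q\), then \(M\) maintains \((\epsilon, 0)\)-differential privacy.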
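Both mechanisms fit in a few lines of Python. This is a minimal sketch under stated assumptions, not a production implementation: the function names, the toy data, and the choice of a counting query (sensitivity 1 when adjacent data sets differ in one record) are all hypothetical, and floating-point sampling of this kind is not hardened against known precision attacks.

```python
import math
import random


def laplace_mechanism(true_value: float, sensitivity: float,
                      epsilon: float, rng: random.Random) -> float:
    """Return true_value plus Laplace noise with scale sensitivity / epsilon."""
    scale = sensitivity / epsilon
    # The difference of two i.i.d. exponentials with mean `scale`
    # is Laplace-distributed with location 0 and that scale.
    noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
    return true_value + noise


def exponential_mechanism(candidates, quality, sensitivity: float,
                          epsilon: float, rng: random.Random):
    """Sample r with probability proportional to
    exp(epsilon * quality(r) / (2 * sensitivity))."""
    scores = [quality(r) for r in candidates]
    top = max(scores)
    # Subtracting the best score rescales all weights by the same factor,
    # so the distribution is unchanged but exp() cannot overflow.
    weights = [math.exp(epsilon * (s - top) / (2 * sensitivity)) for s in scores]
    return rng.choices(candidates, weights=weights, k=1)[0]


rng = random.Random(0)  # seeded only to make the sketch repeatable

# Hypothetical data: a counting query changes by at most 1 per record.
ages = [34, 45, 29, 61, 52, 38]
true_count = sum(age >= 40 for age in ages)
noisy_count = laplace_mechanism(true_count, sensitivity=1.0, epsilon=0.5, rng=rng)

# Hypothetical data: pick a popular color; quality is the vote count.
votes = ["red", "blue", "blue", "green", "blue", "red"]
colors = ["red", "blue", "green"]
winner = exponential_mechanism(colors, votes.count, sensitivity=1.0,
                               epsilon=0.5, rng=rng)

print(f"true count = {true_count}, noisy count = {noisy_count:.2f}")
print(f"privately selected color: {winner}")
```

A smaller \(\epsilon\) widens the Laplace noise and flattens the selection probabilities, trading accuracy for privacy.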