Journal club discussion

Aaron R. Caldwell, Samuel N. Cheuvront

Disclosure

falsifiability in principle

- coined by Karl Popper

- a claim is falsifiable if you can imagine an observation that would show it to be false

- falsifiability is a strength of a theory

Scientific theories must be falsifiable in principle. Of course, not all justified beliefs are falsifiable in principle, but you need strong reasons for such unfalsifiable beliefs.

Paul Stearns, Lucid Philosophy

p-values

given that H_0 is true, the probability of obtaining the observed (or a more extreme) result
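Here is a minimal sketch of this definition, using the coin example that appears later in these slides. It assumes an exact binomial test of a fair coin (H_0: p = 0.5). The function name `binom_two_sided_p` is hypothetical. The two-sided p-value adds up the H_0 probabilities of every outcome that is no more likely than the observed one.

```python
import math

def binom_two_sided_p(k, n, p=0.5):
    """Exact two-sided binomial p-value for k successes in n trials under H_0: P(success) = p."""
    # probability of every possible outcome j = 0..n under H_0
    pmf = [math.comb(n, j) * p**j * (1 - p)**(n - j) for j in range(n + 1)]
    observed = pmf[k]
    # sum outcomes at least as unlikely as the observed one; tiny tolerance guards against rounding ties
    return min(1.0, sum(q for q in pmf if q <= observed * (1 + 1e-9)))

print(binom_two_sided_p(8, 10))  # 0.109375: 8 heads in 10 tosses
```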

instead: effect size and confidence intervals
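The sketch below reports an effect size and a confidence interval rather than a p-value alone. It makes two assumptions. First, Cohen's d uses the pooled s.d. Second, the interval for the raw mean difference uses a normal critical value, which is a large-sample approximation; small samples would call for a t quantile instead. The function names and data are hypothetical.

```python
import math
from statistics import NormalDist, mean, variance

def cohens_d(a, b):
    """Standardized mean difference (mean(b) - mean(a)) / pooled s.d."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(b) - mean(a)) / math.sqrt(pooled_var)

def mean_diff_ci(a, b, level=0.95):
    """Approximate CI for mean(b) - mean(a): unpooled s.e. with a normal critical value."""
    diff = mean(b) - mean(a)
    se = math.sqrt(variance(a) / len(a) + variance(b) / len(b))
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return diff - z * se, diff + z * se

control = [1, 2, 3]    # made-up data
treatment = [2, 3, 4]
print(cohens_d(control, treatment))  # 1.0
print(mean_diff_ci(control, treatment))
```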

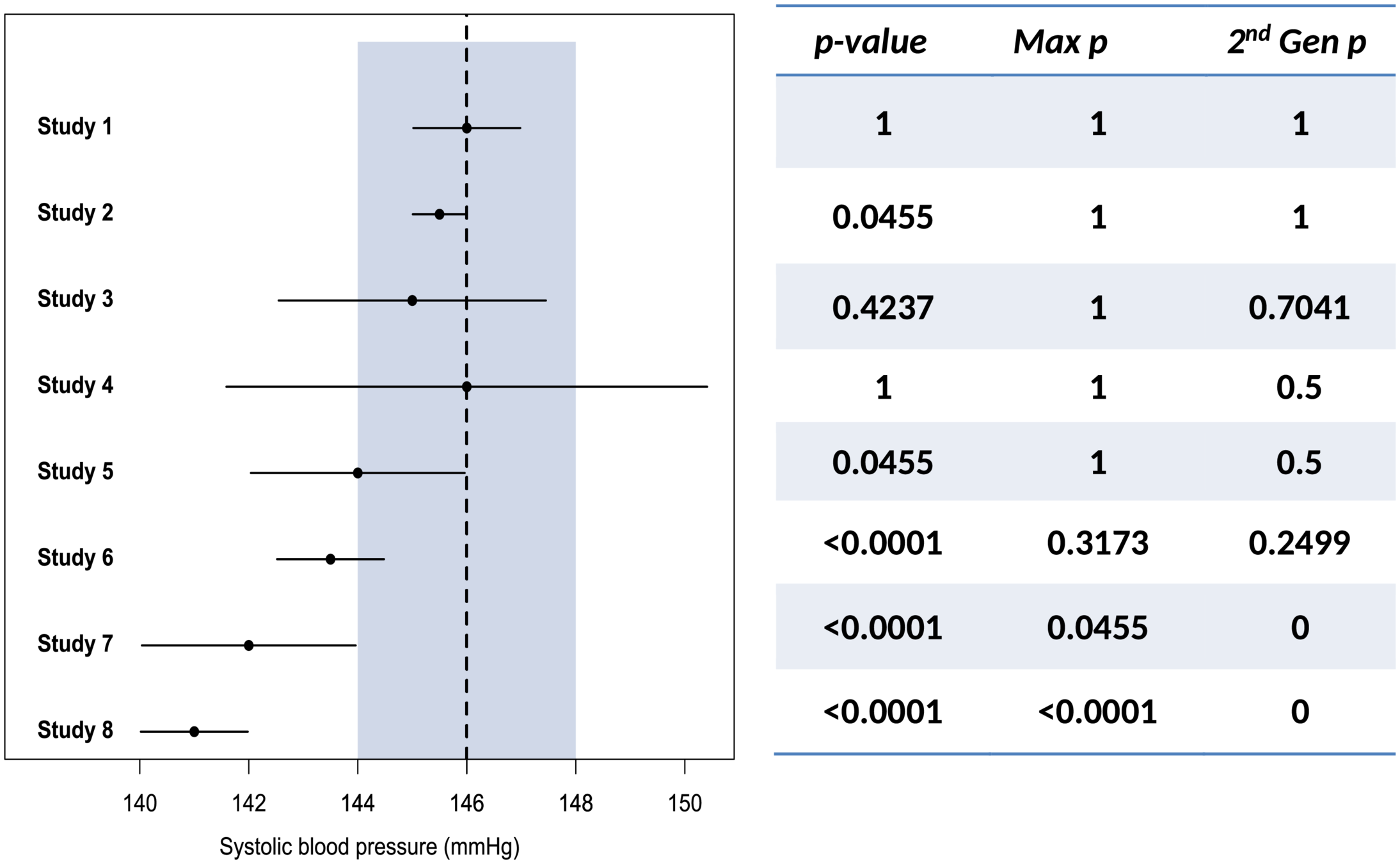

Blume et al. 2018

My understanding of the second-generation p-value (SGPV)

- a summary statistic for a simplified version of frequentist equivalence testing (or of the Bayesian region of practical equivalence, ROPE; of course, Bayesian statisticians would not care about p-values)

- caveat: the bounds around H_0 (the interval null hypothesis) must be preregistered. The confidence level of the interval around the estimate (e.g., 95%) is another parameter to set.

- no citation of a very similar method by Lakens (equivalence testing with TOST)
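As we read Blume et al. (2018), the SGPV compares an interval estimate I with an interval null H_0 using the formula |I ∩ H_0| / |I| × max{|I| / (2|H_0|), 1}. This gives 0 when the interval excludes the null region and 1 when the interval lies entirely inside it. When the interval is more than twice as wide as the null region, the value is capped at 1/2. Below is a minimal sketch that assumes finite intervals with nonzero width; `sgpv` is a hypothetical name.

```python
def sgpv(est_lo, est_hi, null_lo, null_hi):
    """Second-generation p-value for interval estimate [est_lo, est_hi]
    and interval null [null_lo, null_hi]; both widths assumed > 0."""
    est_len = est_hi - est_lo
    null_len = null_hi - null_lo
    # length of the overlap between the two intervals (0 if disjoint)
    overlap = max(0.0, min(est_hi, null_hi) - max(est_lo, null_lo))
    # the max(...) factor caps the value at 1/2 for intervals wider than twice the null region
    return (overlap / est_len) * max(est_len / (2 * null_len), 1.0)

print(sgpv(0.5, 1.5, -0.3, 0.3))   # 0.0: estimate excludes the null region
print(sgpv(-0.1, 0.1, -0.3, 0.3))  # 1.0: estimate lies inside the null region
```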

http://doingbayesiandataanalysis.blogspot.com/2016/12/bayesian-assessment-of-null-values.html

Lakens' preprint comparing the two methods

When introducing a new statistical method, it is important to compare it to existing approaches and specify its relative strengths and weaknesses.

Blume et al. (2018) claim that when using the SGPV “Adjustments for multiple comparisons are obviated” (p. 15). However, this is not correct. Given the direct relationship between TOST and SGPV highlighted in this manuscript [], not correcting for multiple comparisons will inflate the probability of concluding the absence of a meaningful effect based on the SGPV in exactly the same way as it will for equivalence tests.
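A short calculation makes the multiple-comparisons point concrete. If m independent tests are each run at level α, the probability of at least one erroneous conclusion is 1 - (1 - α)^m. This holds for TOST equivalence tests, and, per the quoted argument, it applies equally to conclusions drawn from the SGPV. The sketch assumes independent tests, each with an error rate of exactly α; the function names are hypothetical.

```python
def familywise_error(alpha, m):
    """P(at least one erroneous conclusion) across m independent tests at level alpha."""
    return 1 - (1 - alpha) ** m

def bonferroni_alpha(alpha, m):
    """Per-test level that keeps the family-wise error rate at or below alpha."""
    return alpha / m

print(familywise_error(0.05, 10))                        # about 0.40
print(familywise_error(bonferroni_alpha(0.05, 10), 10))  # just under 0.05
```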

standard deviation

measure of variation/dispersion

biased (divide by n) vs. unbiased (divide by n - 1) estimators
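The two estimators differ only in the divisor, and Python's `statistics` module provides both. The data below are made up for illustration. Dividing by n - 1 makes the *variance* estimator unbiased, but the resulting s.d. is still slightly biased.

```python
import statistics

data = [2, 4, 4, 4, 5, 5, 7, 9]  # made-up example data, mean 5

biased_sd = statistics.pstdev(data)   # sum of squared deviations divided by n
unbiased_sd = statistics.stdev(data)  # divided by n - 1 (Bessel's correction)

print(biased_sd)    # 2.0
print(unbiased_sd)  # sqrt(32 / 7), about 2.14
```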

standard error

the s.d. of a statistic's sampling distribution

s.e.: a property of a statistic

s.d.: of a population (σ) or a sample (s)

s.e. of the mean = s.d. of the sample mean = s.d. of the error in the sample mean, estimated as s/√n
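This sketch shows s/√n and what it estimates, assuming normally distributed data. If you take the s.d. of many simulated sample means, it should come out close to σ/√n. The function names, σ = 10, n = 25, and the seed are illustrative assumptions.

```python
import math
import random
import statistics

def standard_error_of_mean(sample):
    """Estimated s.e. of the mean: sample s.d. divided by sqrt(n)."""
    return statistics.stdev(sample) / math.sqrt(len(sample))

def simulated_sd_of_means(mu, sigma, n, reps=5000, seed=1):
    """s.d. of `reps` sample means, each computed from n draws of Normal(mu, sigma)."""
    rng = random.Random(seed)
    means = [statistics.fmean(rng.gauss(mu, sigma) for _ in range(n))
             for _ in range(reps)]
    return statistics.stdev(means)

print(standard_error_of_mean([2, 4, 4, 4, 5, 5, 7, 9]))
print(simulated_sd_of_means(0, 10, 25))  # close to 10 / sqrt(25) = 2
```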

error bar

sample mean

± 1 s.e. of the mean

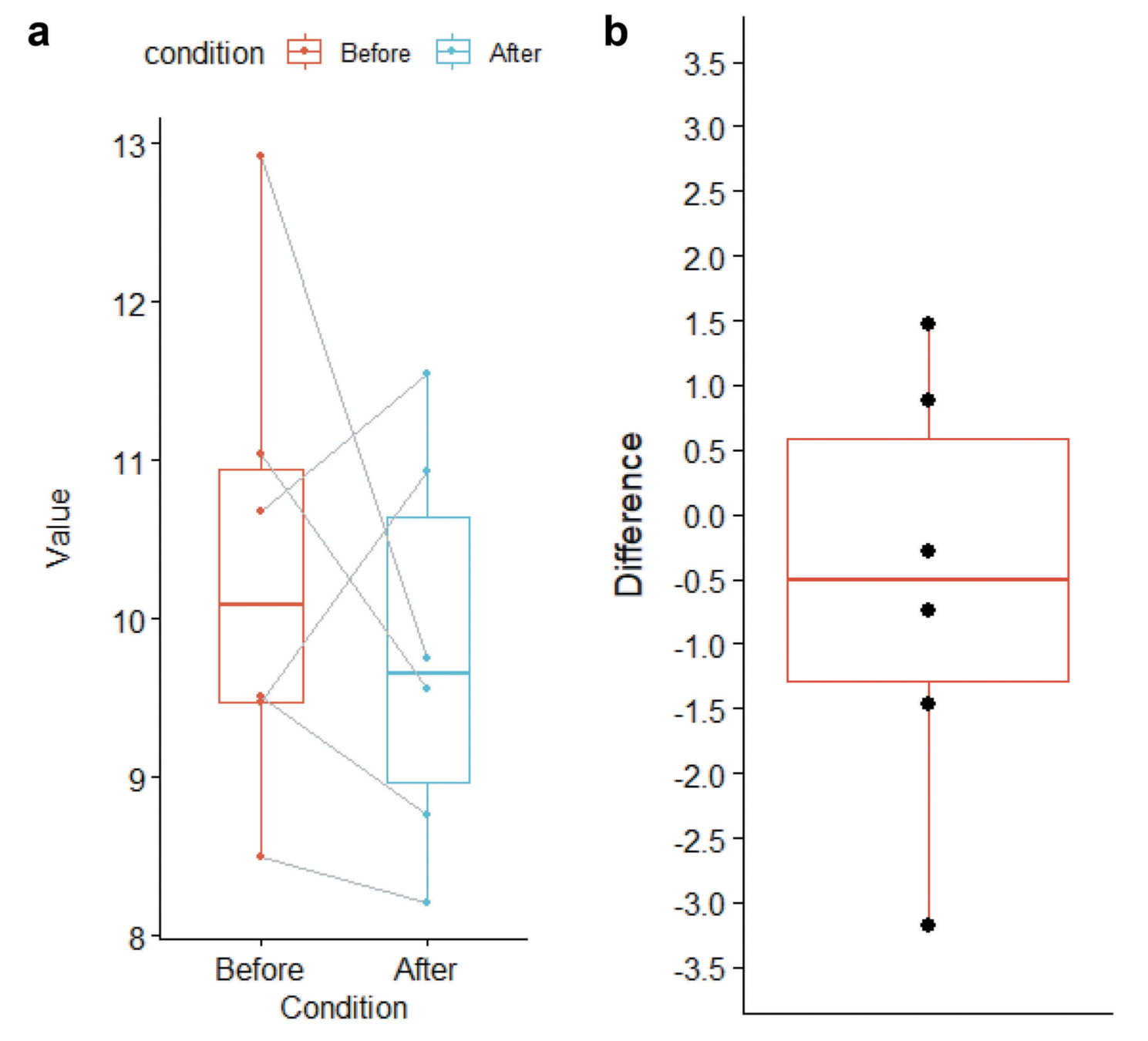

box plot

median

interquartile range
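This sketch computes the numbers behind a box plot. It makes two assumptions: quartiles use the default ('exclusive') method of Python's `statistics.quantiles`, and whiskers use Tukey's 1.5 × IQR fences. Plotting software varies in both choices, so quartiles can differ slightly between tools. The function name and data are hypothetical.

```python
import statistics

def box_plot_summary(data, k=1.5):
    """Median, quartiles, IQR, whisker ends, and outliers beyond k * IQR fences."""
    xs = sorted(data)
    q1, median, q3 = statistics.quantiles(xs, n=4)  # default 'exclusive' method
    iqr = q3 - q1
    lo_fence, hi_fence = q1 - k * iqr, q3 + k * iqr
    inside = [x for x in xs if lo_fence <= x <= hi_fence]
    return {
        "median": median, "q1": q1, "q3": q3, "iqr": iqr,
        "whisker_low": min(inside),   # most extreme points within the fences
        "whisker_high": max(inside),
        "outliers": [x for x in xs if x < lo_fence or x > hi_fence],
    }

print(box_plot_summary([2, 4, 4, 4, 5, 5, 7, 9, 30]))  # 30 is flagged as an outlier
```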

assumptions

- normal

- independent

- homoscedastic

- no outliers/leverage points

- transform

- remove outliers

- robust methods

- permutations
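This sketch implements the last option, an exact permutation test for a difference in means. Under H_0 the group labels are exchangeable, so every relabelling of the pooled data is equally likely. Enumerating all splits is only feasible for small samples; larger samples would use random permutations instead. `permutation_test` is a hypothetical name.

```python
from itertools import combinations
from statistics import fmean

def permutation_test(a, b):
    """Exact two-sided permutation p-value for a difference in group means."""
    pooled = list(a) + list(b)
    n_a = len(a)
    observed = abs(fmean(b) - fmean(a))
    count = total = 0
    # every way of assigning n_a of the pooled values to group a
    for idx in combinations(range(len(pooled)), n_a):
        chosen = set(idx)
        ga = [pooled[i] for i in idx]
        gb = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        total += 1
        # small tolerance so splits tying with the observed difference are counted
        if abs(fmean(gb) - fmean(ga)) >= observed - 1e-12:
            count += 1
    return count / total

print(permutation_test([1, 2, 3], [4, 5, 6]))  # 0.1 (2 of 20 splits)
```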

alternatives

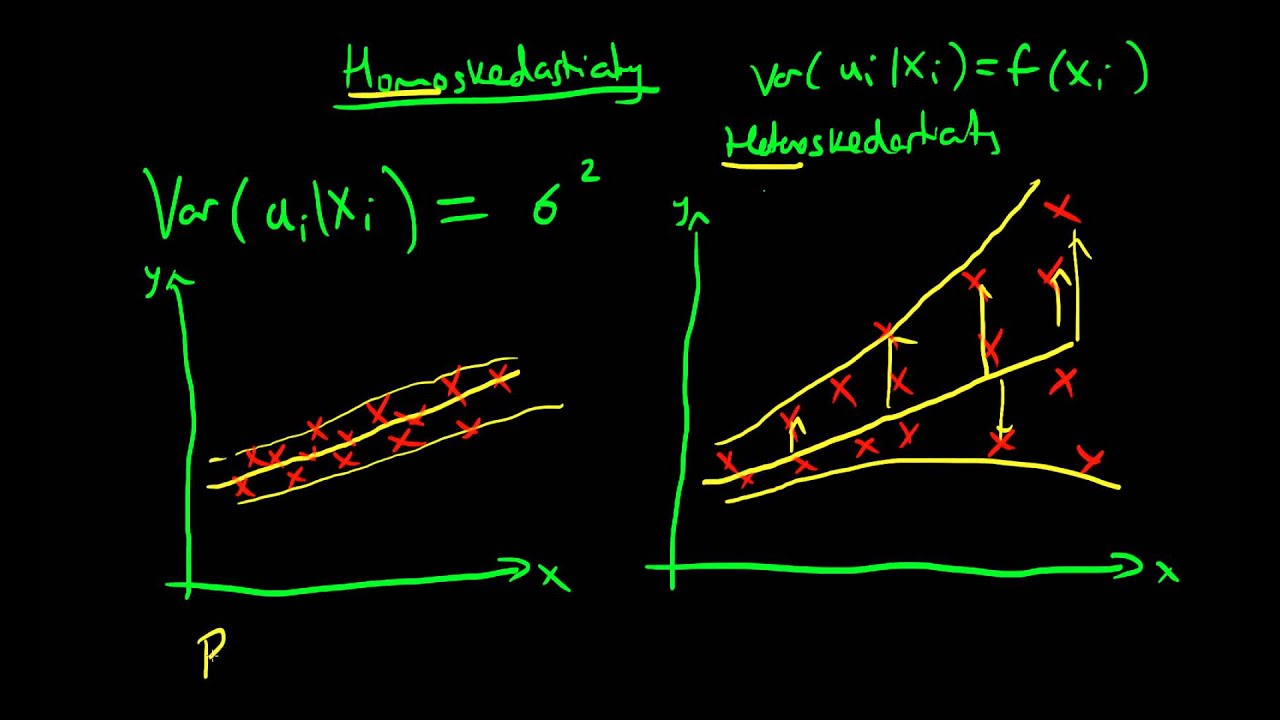

homoscedasticity

variance of the error term is constant across groups or values of the predictor variables

heteroscedasticity

variance of the error term is NOT constant across groups or values of the predictor variables
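In a one-way design, one rough check for heteroscedasticity is Hartley's F_max: the largest group variance divided by the smallest. Group variances equal the residual variances here. Values far above 1 hint at unequal variances, although formal tests such as Levene's test are the more common choice. Below is a minimal sketch with made-up data; `variance_ratio` is a hypothetical name.

```python
from statistics import variance

def variance_ratio(groups):
    """Hartley's F_max: largest group variance divided by the smallest."""
    variances = [variance(g) for g in groups]
    return max(variances) / min(variances)

print(variance_ratio([[1, 2, 3], [2, 4, 6]]))  # 4.0
```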

probability of rejecting a false null hypothesis

Pr(reject H_0 | H_1 is true)

statistical power

a priori

prior to data collection

post hoc

How many times do I need to toss a coin to conclude (with 80% power) that it is rigged by a certain amount?

I tossed a coin 10 times and it seemed to not be rigged by a certain amount. What was the power of my test?

also called retrospective, a posteriori, or observed power
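This sketch answers the a priori coin question. It assumes an exact two-sided binomial test at α = 0.05 and a hypothetical rigged coin with P(heads) = 0.6, since the slide leaves the amount unspecified. Power is Pr(reject H_0 | H_1), which is the H_1 probability of the rejection region. All names are hypothetical.

```python
import math

def binom_pmf(k, n, p):
    return math.comb(n, k) * p**k * (1 - p)**(n - k)

def critical_lower(n, alpha):
    """Largest c with 2 * P(X <= c | fair coin) <= alpha, or -1 if no such c."""
    cdf, c = 0.0, -1
    for k in range(n // 2 + 1):
        cdf += binom_pmf(k, n, 0.5)
        if 2 * cdf > alpha:
            break
        c = k
    return c

def binomial_power(n, p1, alpha=0.05):
    """Pr(reject H_0: p = 0.5 | true p = p1); rejection region X <= c or X >= n - c."""
    c = critical_lower(n, alpha)
    if c < 0:
        return 0.0  # no outcome can reach significance with this few tosses
    lower = sum(binom_pmf(k, n, p1) for k in range(c + 1))
    upper = sum(binom_pmf(k, n, p1) for k in range(n - c, n + 1))
    return lower + upper

def smallest_n(p1, target=0.8, alpha=0.05, n_max=1000):
    """Smallest number of tosses whose power reaches the target (None if not found)."""
    for n in range(1, n_max + 1):
        if binomial_power(n, p1, alpha) >= target:
            return n
    return None

print(smallest_n(0.6))
print(binomial_power(10, 0.6))
```

For the 10-toss scenario, `binomial_power(10, 0.6)` is about 0.048, so a design with 10 tosses had almost no chance of detecting this amount of rigging. This power comes from the design and an assumed effect, not from the observed data.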

Because you will always have low observed power when you report non-significant effects, you should never perform an observed or post-hoc power analysis, even if an editor requests it. Instead, you should explain how likely it was to observe a significant effect, given your sample, and given an expected or small effect size.

For example, say you collected 500 participants for an independent t-test and did not observe an effect. You had more than 90% power to detect a small effect of d = 0.3. It is always possible that the true effect size is even smaller, or that your no-effect conclusion is a Type II error, and you should acknowledge this. At the same time, given your sample size, and assuming a certain true effect size, it might be most probable that there is no effect.
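Here is a rough check of the example. It assumes the 500 participants were split into two groups of 250 and uses a normal approximation to the two-sided independent t-test at α = 0.05: power ≈ Φ(d√(n/2) - z_{1-α/2}) + Φ(-d√(n/2) - z_{1-α/2}), with n per group. `two_sample_power` is a hypothetical name.

```python
from statistics import NormalDist

def two_sample_power(d, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample test of standardized effect d
    (normal approximation to the independent t-test)."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    ncp = d * (n_per_group / 2) ** 0.5  # expected test statistic under H_1
    return z.cdf(ncp - z_crit) + z.cdf(-ncp - z_crit)

print(two_sample_power(0.3, 250))
```

This gives roughly 0.92 for d = 0.3, which is consistent with the "more than 90%" in the example.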

open science

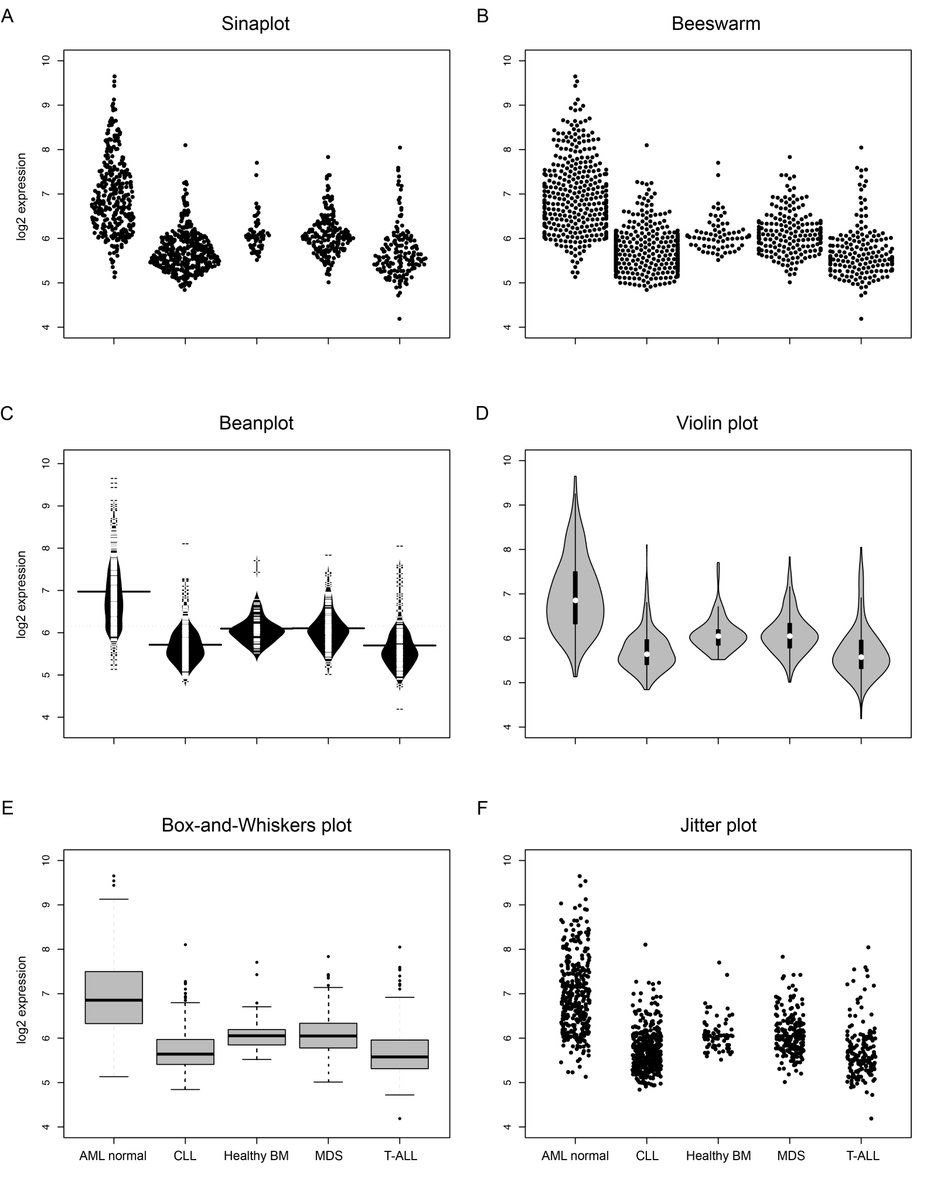

Plots of large samples can become cluttered and hard to read when every data point is shown. In these situations, it is advisable to present summary statistics together with a visualization of the distribution rather than the individual data points.