Canary Release com Docker, Kubernetes, Gitlab e Traefik

#startup #docker #k8s #traefik #gitlab #ci/cd #helm #kops #aws #help

About me

From Fortaleza - CE

10 years+ coding

CTO/Co-founder @Agendor

Java, Javascript, Android, PHP, Node.js, Ruby, React, Infra, Linux, AWS, MySQL, Business, Product.

Tulio Monte Azul

@tuliomonteazul

tulio@agendor.com.br

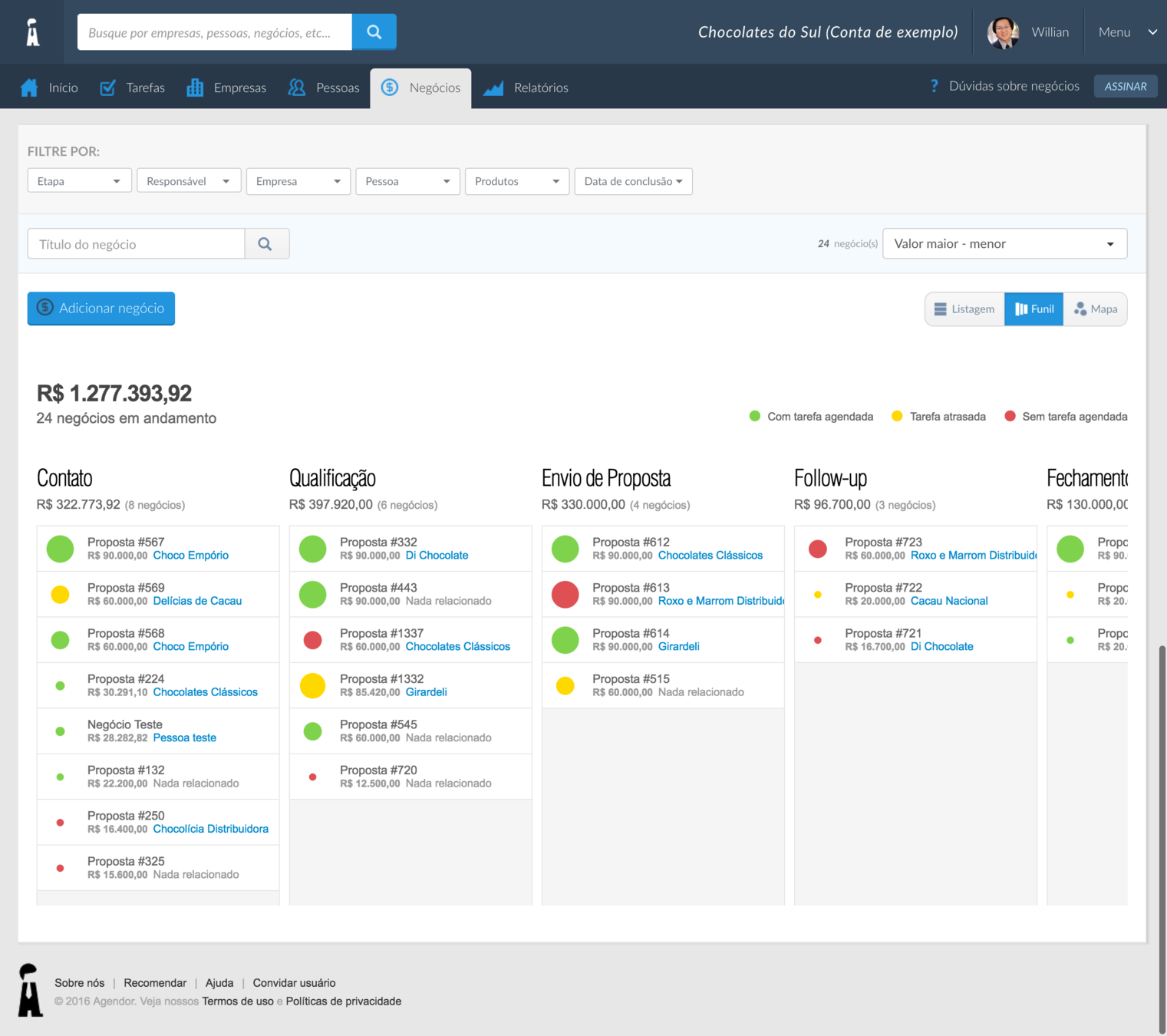

Agendor é a ferramenta principal do vendedor.

(Github do Vendedor)

Versão Web e Mobile com plano gratuito e pago.

O que esperar dessa talk?

"o quê" vs "como"

"release" vs "implementação"

Agenda

- Contexto

- Motivação

- Estratégia

- Desafios

- Lições aprendidas

Contexto

- Projeto em PHP (legado)

- Migração pra SPA com Rails + React

- Equipe enxuta (6 devs)

- Mercado competitivo

- Churn (desistências)

- 25k usuários ativos

- 5k rpm

Motivação

Problemas

Solução

-

Deploy com bug = caos

-

Medo de arriscar e atualizar versões

-

Processos manuais (ssh, yum install)

-

Ambiente dev != Outro dev != Prod

-

Falta de testes automatizados

-

Downtime

-

Improdutividade

-

Deploy com rollback

-

Deploy com ambientes isolados (containers)

-

Infrastructure as Code

-

Infrastructure as Code

-

Canary Release

-

Monitoramento e auto recover

-

CI/CD

Soluções

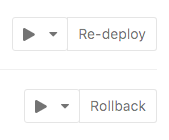

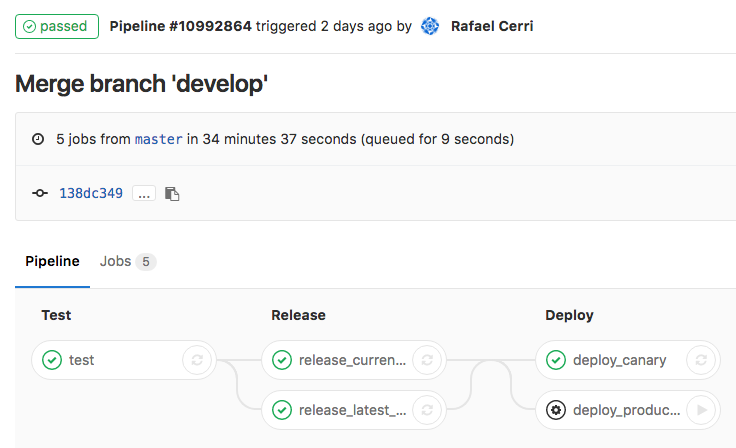

CI/CD

Rollback fácil

muito fácil

Automação

Custo

stages:

- test

- release

- deploy

image: azukiapp/docker-kubernetes-ci:17.04

services:

- docker:dind

variables:

DOCKER_DRIVER: overlay

before_script:

- docker info

- docker-compose --version

# Install kubectl and generate configurations

# More info in https://gitlab.com/agendorteam/agendor-infra#configure-kubectl-context-in-ci

- kube-config-generator

- kubectl cluster-info

- helm version

# Test

###########

test:

stage: test

tags:

- docker

script:

- docker-compose build test

- docker-compose run test

# Release

###########

.release: &release

stage: release

script:

# REFERENCES: https://docs.gitlab.com/ce/ci/variables/

# - ./scripts/release.sh agendor-web master

- ./scripts/release.sh $CI_PROJECT_NAME $IMAGE_TAG

release_current_staging:

<<: *release

tags:

- docker

variables:

DEPLOY_TYPE: staging

IMAGE_TAG: stg-$CI_BUILD_REF_SLUG-$CI_BUILD_REF

except:

- master

release_current_test:

<<: *release

tags:

- docker

variables:

DEPLOY_TYPE: test

IMAGE_TAG: tst-$CI_BUILD_REF_SLUG-$CI_BUILD_REF

except:

- master

release_current_production:

<<: *release

tags:

- docker

variables:

DEPLOY_TYPE: production

IMAGE_TAG: $CI_BUILD_REF_SLUG-$CI_BUILD_REF

only:

- master

release_latest_production:

<<: *release

tags:

- docker

variables:

DEPLOY_TYPE: production

IMAGE_TAG: latest

only:

- master

# Deploy

###########

.deploy_template: &deploy_definition

stage: deploy

tags:

- docker

script:

# ./scripts/deploy.sh [PROJECT_NAME [REF_NAME [HELM_NAME]]]

- ./scripts/deploy.sh $CI_PROJECT_NAME $IMAGE_TAG

- ./scripts/deploy.sh $CI_PROJECT_NAME $IMAGE_TAG agendor-api-mobile

deploy_staging:

<<: *deploy_definition

environment:

name: staging

variables:

DEPLOY_TYPE: staging

IMAGE_TAG: stg-$CI_BUILD_REF_SLUG-$CI_BUILD_REF

only:

- develop

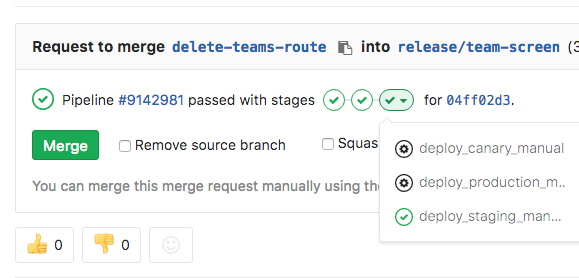

deploy_canary:

<<: *deploy_definition

environment:

name: canary

variables:

DEPLOY_TYPE: canary

IMAGE_TAG: $CI_BUILD_REF_SLUG-$CI_BUILD_REF

only:

- master

# manuals

deploy_staging_manual:

<<: *deploy_definition

environment:

name: staging

variables:

DEPLOY_TYPE: staging

IMAGE_TAG: stg-$CI_BUILD_REF_SLUG-$CI_BUILD_REF

when: manual

except:

- develop

- master

deploy_test_manual:

<<: *deploy_definition

environment:

name: test

variables:

DEPLOY_TYPE: test

IMAGE_TAG: tst-$CI_BUILD_REF_SLUG-$CI_BUILD_REF

when: manual

except:

- master

deploy_production_manual:

<<: *deploy_definition

environment:

name: production

variables:

DEPLOY_TYPE: production

when: manual

only:

- mastergitlab-ci.yaml

Soluções

Usado em produção

Google, Red Hat, CoreOs

Monitoramento, Rolling Updates, Auto recovery

Comunidade

Documentação

Soluções

Usado em produção

Múltiplos ambientes

Deploy por ambiente

Hipster

Infrastructure as Code

Ambiente dev = prod

Host mapping

Fast

Less code = less bug

Canary Release

Soluções

frontends:

# agendor-web

web:

rules:

- "Host:web.agendor.com.br"

web_canary:

rules:

- "Host:web.agendor.com.br"

- "HeadersRegexp:Cookie,_AUSER_TYPE=canary"backends:

## agendor-web

web:

- name: k8s

url: "http://agendor-web.production.svc.cluster.local"

web_canary:

- name: k8s

url: "http://agendor-web-canary.production.svc.cluster.local"Estratégia

Estratégia - Fase 1

web.agendor.com.br

production-legacy

web.agendor.com.br

cookie: canary

production-canary

web.agendor.com.br

Estratégia - Fase 1

frontends:

web:

rules:

- "Host:web.agendor.com.br"

web_canary:

rules:

- "Host:web.agendor.com.br"

- "HeadersRegexp:Cookie,_AUSER_TYPE=canary"backends:

web:

- name: legacy_1

url: http://ec2-54-10-XXX-XXX.sa-east-1.compute.amazonaws.com

- name: legacy_2

url: http://ec2-54-20-XXX-XXX.sa-east-1.compute.amazonaws.com

web_canary:

- name: k8s

url: "http://agendor-web-canary.production.svc.cluster.local"aws.yaml

helm upgrade --kube-context k8s.agendor.com.br agendor-infra -f aws.yaml ./

Estratégia - Fase 2

web.agendor.com.br

production-legacy

web.agendor.com.br

cookie: canary

production-canary

90%

web.agendor.com.br

10%

Estratégia - Fase 2

backends:

web:

- name: legacy_1

values:

- weight = 5

- url = "http://ec2-54-10-XXX-XXX.sa-east-1.compute.amazonaws.com"

- name: legacy_2

values:

- weight = 5

- url = "http://ec2-54-20-XXX-XXX.sa-east-1.compute.amazonaws.com"

- name: k8s

values:

- weight = 1

- url = "http://agendor-web-canary.production.svc.cluster.local"

web_canary:

- name: k8s

url: "http://agendor-web-canary.production.svc.cluster.local"

aws.yaml

helm upgrade --kube-context k8s.agendor.com.br agendor-infra -f aws.yaml ./

Estratégia - Fase 3

web.agendor.com.br

production

web.agendor.com.br

cookie: canary

production-canary

production-legacy

Estratégia - Fase 3

backends:

web:

- name: k8s

url: "http://agendor-web.production.svc.cluster.local"

web_canary:

- name: k8s

url: "http://agendor-web-canary.production.svc.cluster.local"

aws.yaml

helm upgrade --kube-context k8s.agendor.com.br agendor-infra -f aws.yaml ./

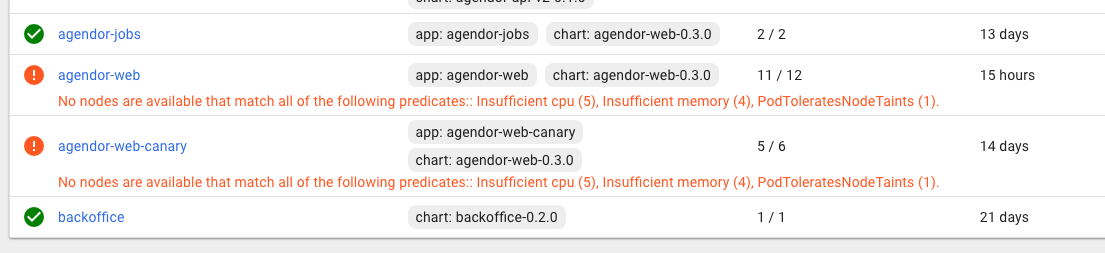

Desafios

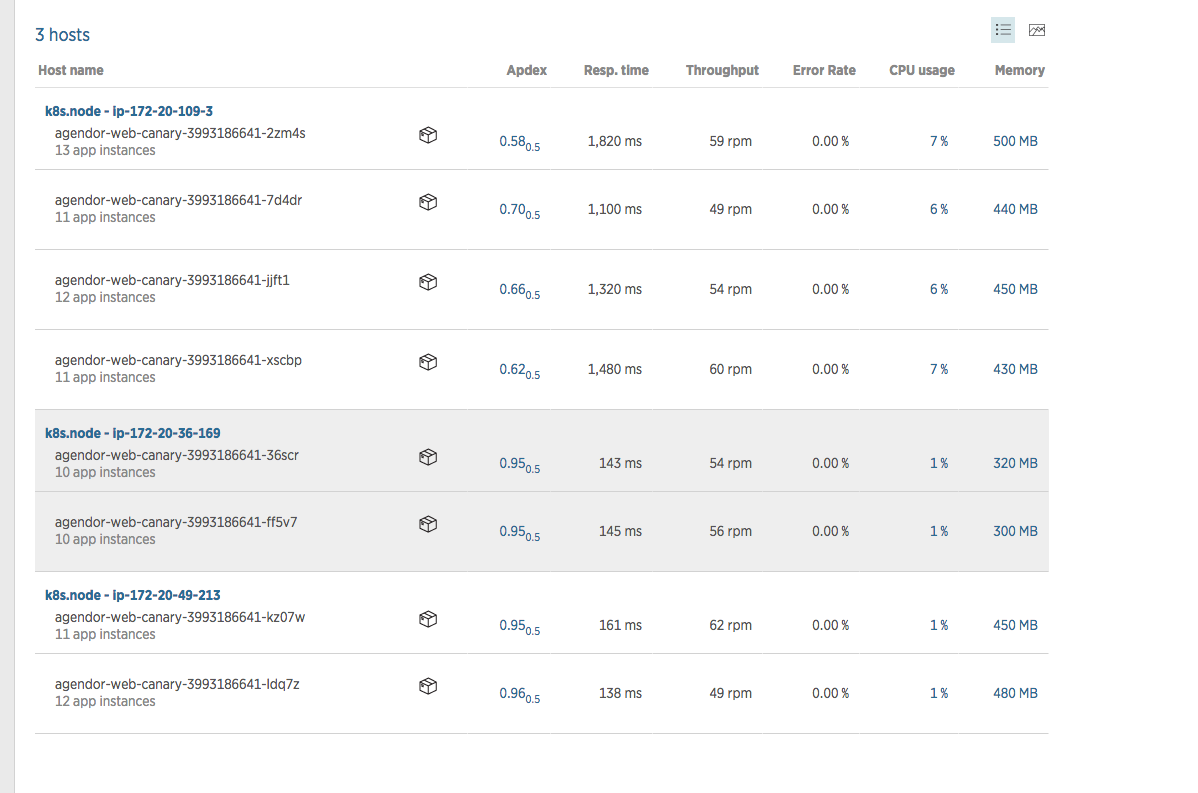

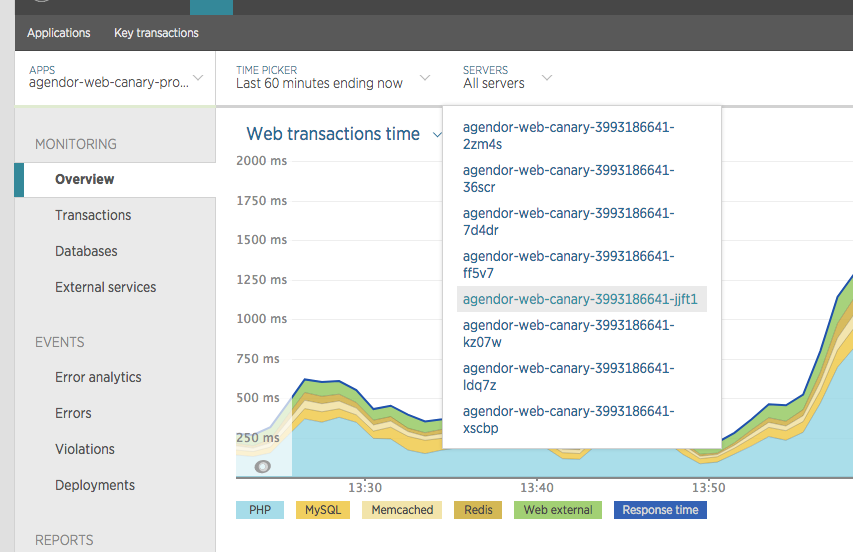

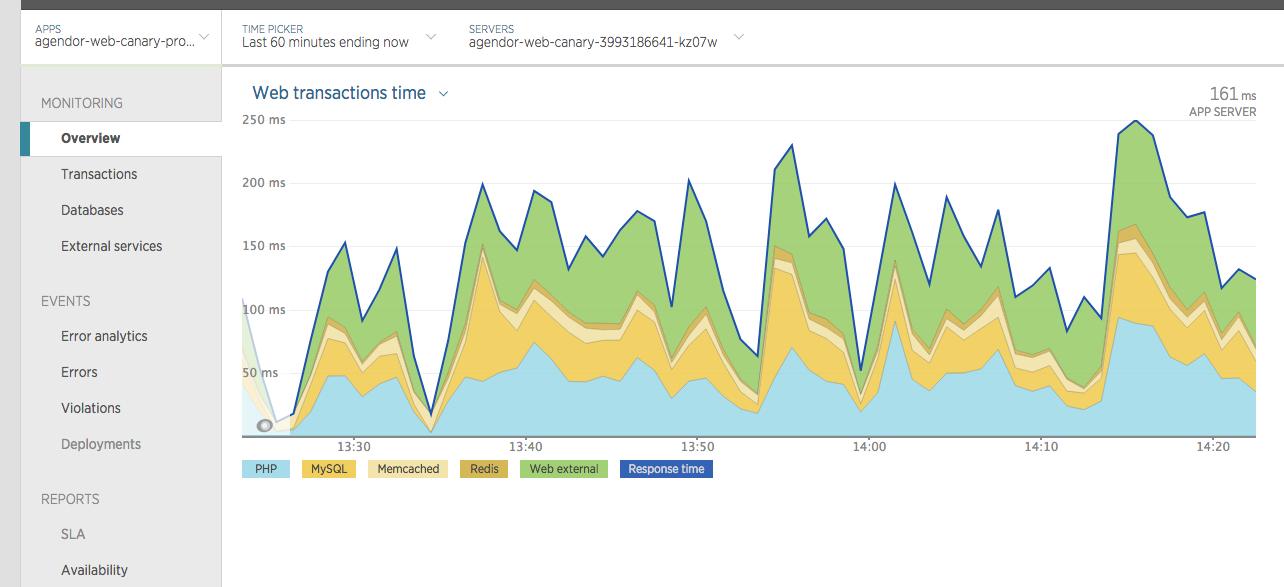

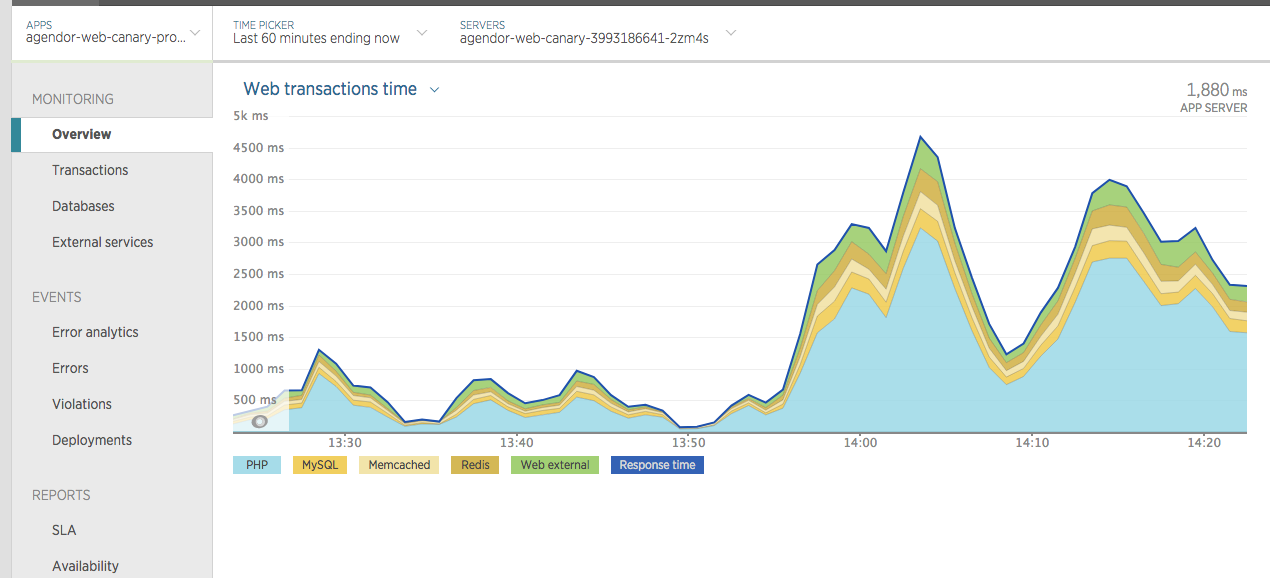

Pods Scaling

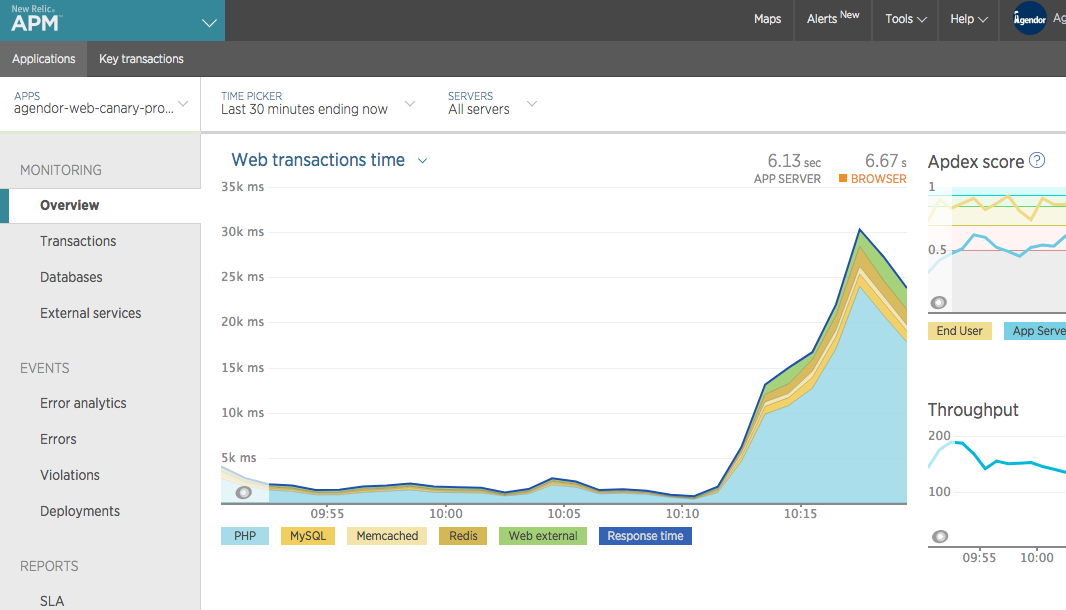

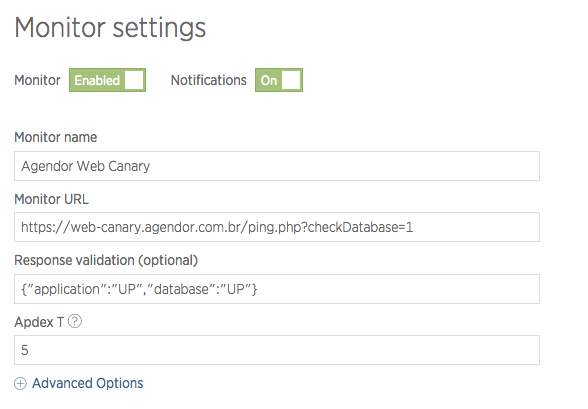

NewRelic FTW

# back-end

web_canary:

- name: k8s

url: "http://agendor-web-canary.production.svc.cluster.local"

# front-end

web_canary:

rules:

- "Host:web.agendor.com.br"

- "HeadersRegexp:Cookie,_AUSER_TYPE=canary"# back-end

web_canary:

- name: k8s

url: "http://agendor-web-canary.production.svc.cluster.local"

web_canary_host:

- name: k8s

url: "http://agendor-web-canary.production.svc.cluster.local"

# front-end

web_canary:

rules:

- "Host:web.agendor.com.br"

- "HeadersRegexp:Cookie,_AUSER_TYPE=canary"

web_canary_host:

rules:

- "Host:web-canary.agendor.com.br"Kubectl FTW

$ kubectl --namespace=production scale --replicas=8 deployment/agendor-web-canary$ kubectl --namespace=production get pods -l=app=agendor-web-canary

NAME READY STATUS RESTARTS AGE

agendor-web-canary-1388324162-2qqgr 1/1 Running 0 5h

agendor-web-canary-1388324162-38xlb 1/1 Running 0 1m

agendor-web-canary-1388324162-3g815 1/1 Running 0 5h

agendor-web-canary-1388324162-89j6k 1/1 Running 0 5h$ kubectl --namespace=production get pods -l=app=agendor-web-canary

NAME READY STATUS RESTARTS AGE

agendor-web-canary-1388324162-2qqgr 1/1 Running 0 5h

agendor-web-canary-1388324162-38xlb 1/1 Running 0 1m

agendor-web-canary-1388324162-3g815 1/1 Running 0 5h

agendor-web-canary-1388324162-89j6k 1/1 Running 0 5h

agendor-web-canary-1388324162-gjndp 0/1 ContainerCreating 0 4s

agendor-web-canary-1388324162-n57wc 0/1 ContainerCreating 0 4s

agendor-web-canary-1388324162-q0pcv 0/1 ContainerCreating 0 4s

agendor-web-canary-1388324162-t8v4s 0/1 ContainerCreating 0 4sLimits tunning

# Default values for agendor-web.

# This is a YAML-formatted file.

# Declare variables to be passed into your templates.

name: agendor-web

namespace: default

app_env: staging

# autoscale configurations

minReplicas: 2

maxReplicas: 10

targetCPUUtilizationPercentage: 50

...

resources:

limits:

cpu: 100m

memory: 256Mi

requests:

cpu: 100m

memory: 256Mi

# Default values for agendor-web.

# This is a YAML-formatted file.

# Declare variables to be passed into your templates.

name: agendor-web

namespace: default

app_env: staging

# autoscale configurations

minReplicas: 2

maxReplicas: 10

targetCPUUtilizationPercentage: 30 # decreased

...

resources:

limits:

cpu: 200m # increased

memory: 512Mi # increased

requests:

cpu: 100m

memory: 256Mi

Pods Scaling + Deploy

Rolling Update Fail

Deployment vs HPA

kind: Deployment

apiVersion: extensions/v1beta1

metadata:

name: {{ .Values.name }}

...

spec:

replicas: {{ .Values.minReplicas }}

revisionHistoryLimit: 10

selector:

matchLabels:

app: {{ .Values.name }}apiVersion: autoscaling/v1

kind: HorizontalPodAutoscaler

metadata:

name: {{ .Values.name }}

...

spec:

scaleTargetRef:

apiVersion: extensions/v1beta1

kind: Deployment

name: {{ .Values.name }}

minReplicas: {{ .Values.minReplicas }}

maxReplicas: {{ .Values.maxReplicas }}

targetCPUUtilizationPercentage: {{ .Values.targetCPUUtilizationPercentage }}

# values.yaml

minReplicas: 1

maxReplicas: 10

targetCPUUtilizationPercentage: 30

strategy:

rollingUpdate:

maxSurge: 3

maxUnavailable: 0Rolling Update Win

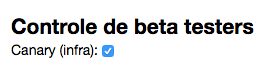

Canary Bug Tracking

Canary Bug Tracking

Traefik redireciona aleatoriamente

Cliente reclama de bug: problema na aplicação ou na nova infra?

Redirecionamento aleatório:

Bom para testar escalabilidade e performance

Ruim se mantido por muito tempo

Canary Bug Tracking

# aws.yml (traefik)

backends:

web:

- name: legacy_1

values:

- weight = 5

- url = "http://ec2-54-94-240-102.sa-east-1.compute.amazonaws.com"

- name: legacy_2

values:

- weight = 5

- url = "http://ec2-54-233-74-185.sa-east-1.compute.amazonaws.com"

- name: k8s

values:

- weight = 1

- url = "http://agendor-api-v2-canary.production.svc.cluster.local"

web_canary:

- name: k8s

url: "http://agendor-web-canary.production.svc.cluster.local"# aws.yml (traefik)

backends:

web:

- name: legacy_1

url = "http://ec2-54-94-240-102.sa-east-1.compute.amazonaws.com"

- name: legacy_2

url = "http://ec2-54-233-74-185.sa-east-1.compute.amazonaws.com"

web_canary:

- name: k8s

url: "http://agendor-web-canary.production.svc.cluster.local"# PHP

public function addBetaTesterCookie($accountId = NULL) {

if ($this->isCanaryBetaTester($accountId)) { // check on Redis

$this->setCookie(BetaController::$BETA_COOKIE_NAME,

BetaController::$BETA_COOKIE_VALUE);

} else {

$this->setCookie(BetaController::$BETA_COOKIE_NAME,

BetaController::$LEGACY_COOKIE_VALUE);

}

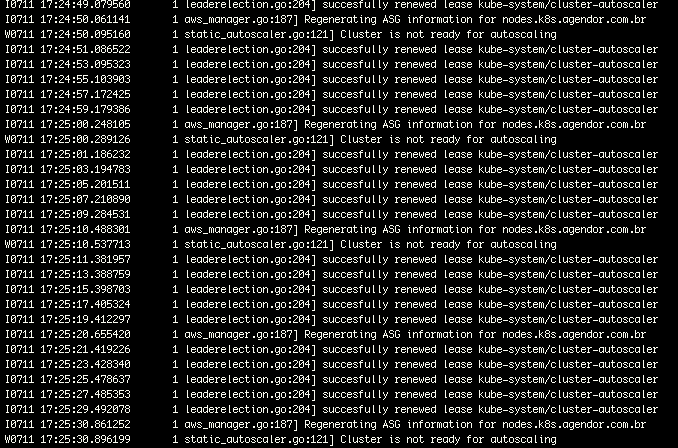

}Cluster Scaling #1

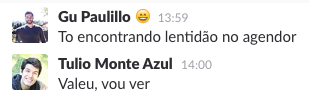

Aviso de lentidão no sistema

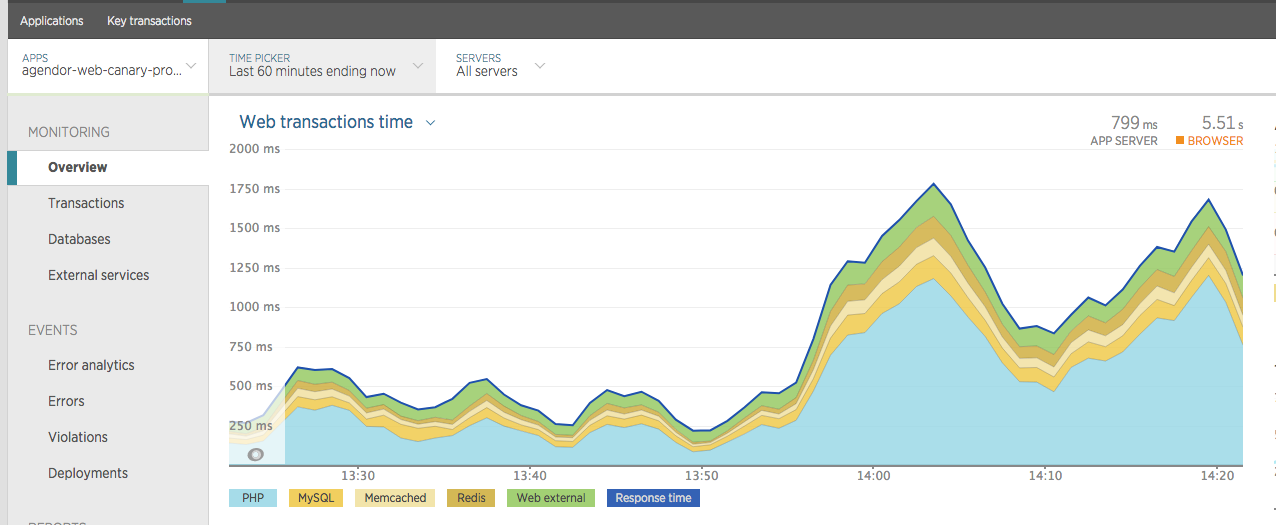

Análise da lentidão

Análise da lentidão

SSH into Server

$ ssh -i ~/.ssh/kube_aws_rsa

admin@ec2-XX-XXX-XXX-XXX.sa-east-1.compute.amazonaws.com// Deveria ter usado:

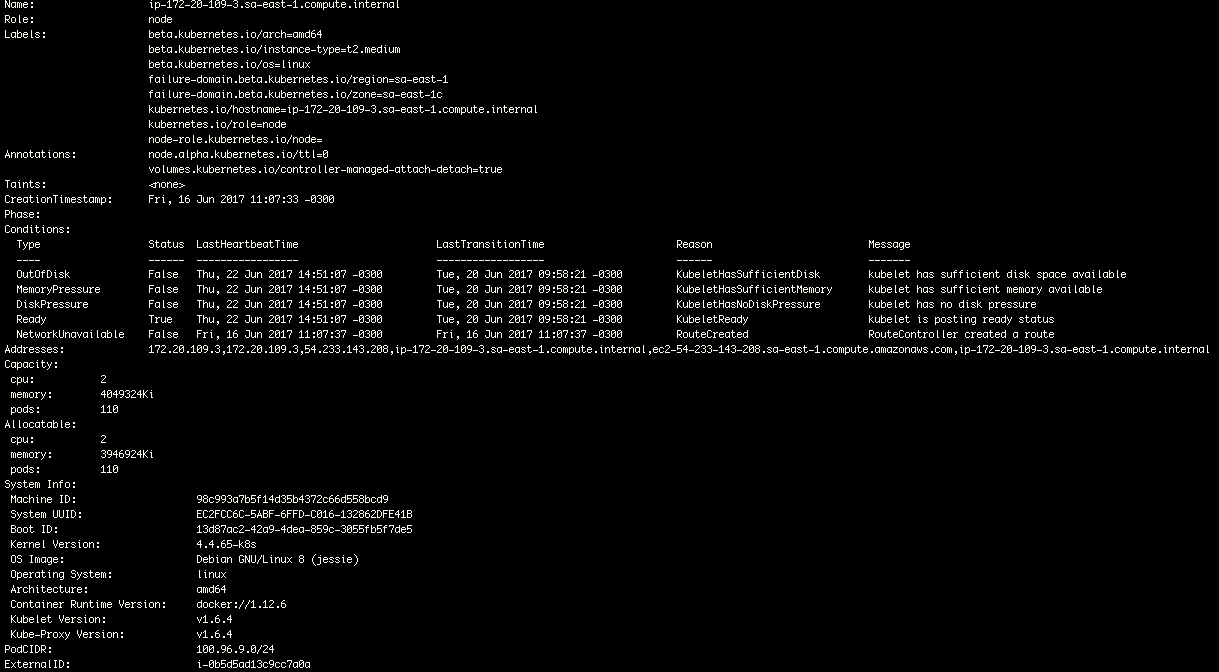

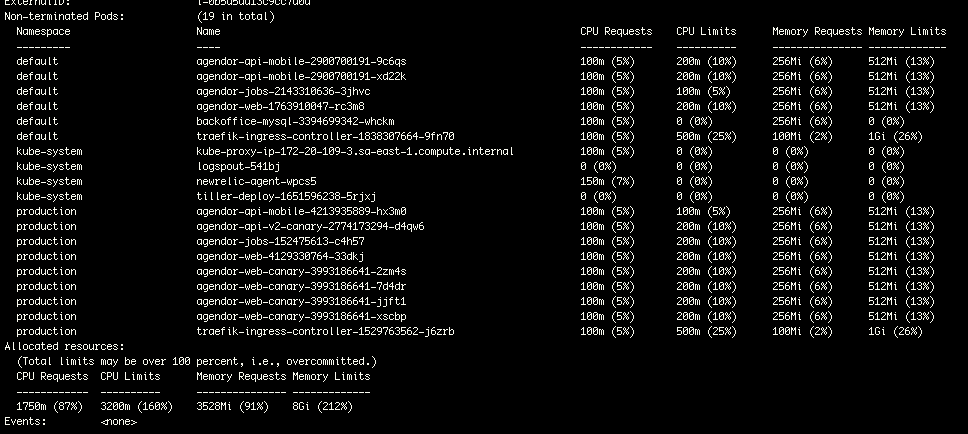

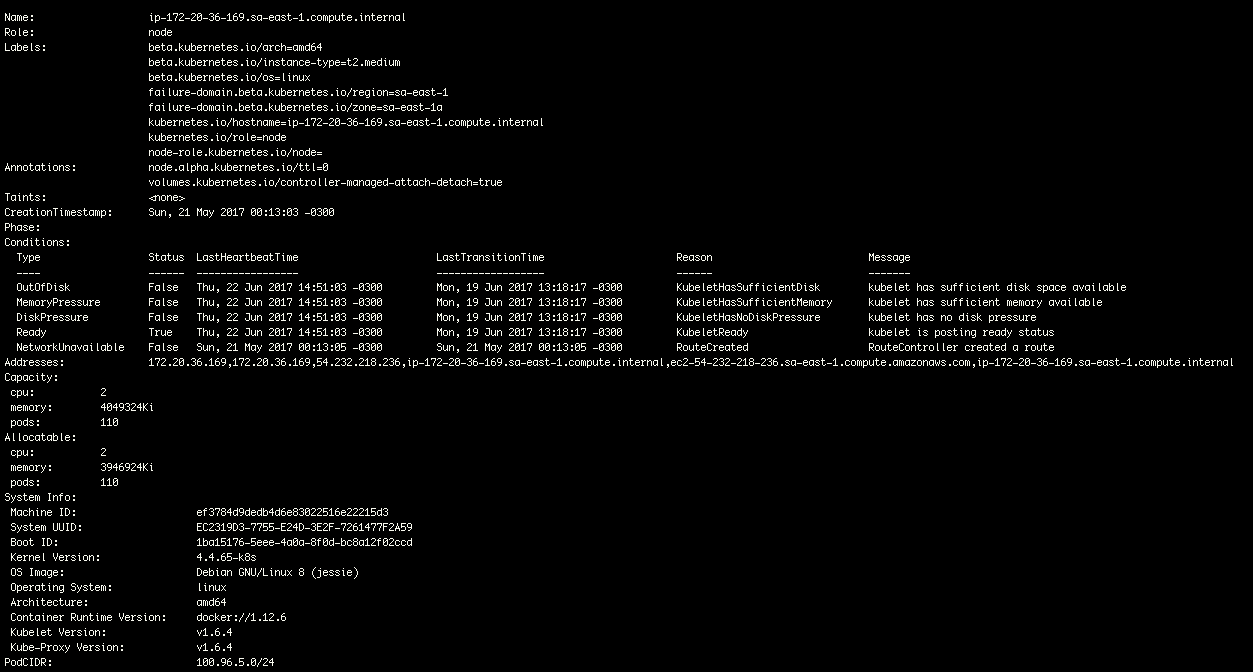

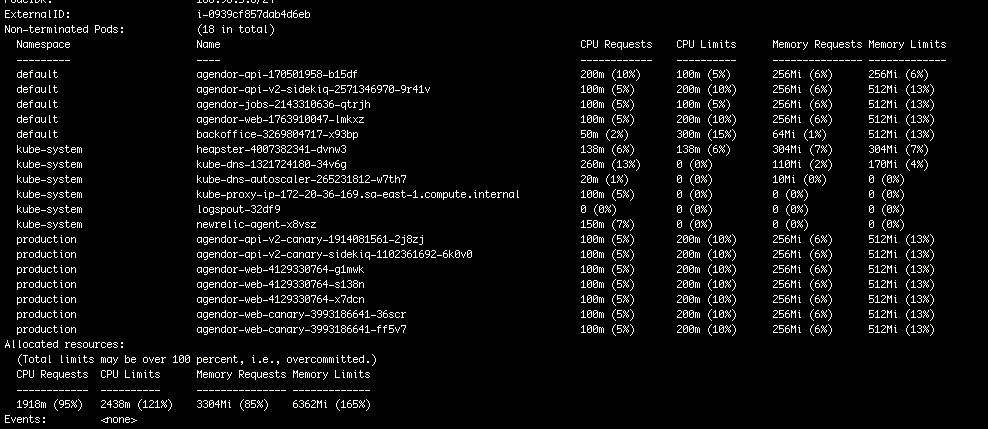

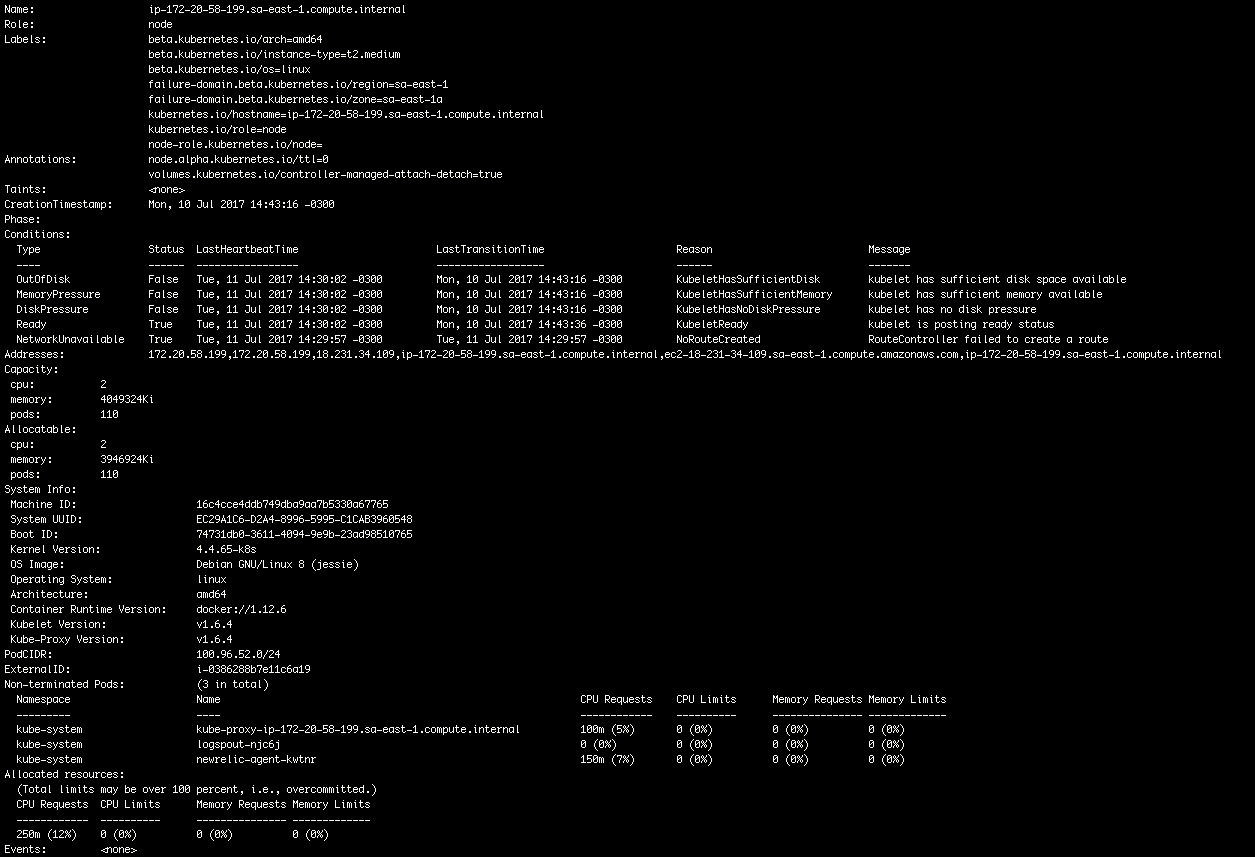

$ kubectl top nodesDebugging Nodes

$ kubectl describe nodes

Debugging auto scaler

I0622 18:08:59 scale_up.go:62] Upcoming 1 nodes

I0622 18:08:59 scale_up.go:81] Skipping node group nodes.k8s.agendor.com.br - max size reached

I0622 18:08:59 scale_up.go:132] No expansion optionskubectl logs -f --tail=100 --namespace=kube-system cluster-autoscaler-2462627368-c1hhlcontainers:

- name: cluster-autoscaler

image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"

imagePullPolicy: "{{ .Values.image.pullPolicy }}"

command:

- ./cluster-autoscaler

- --cloud-provider=aws

{{- range .Values.autoscalingGroups }}

- --nodes={{ .minSize }}:{{ .maxSize }}:{{ .name }}

{{- end }}autoscalingGroups:

- name: nodes.k8s.agendor.com.br

minSize: 1

maxSize: 15values.yaml

deployment.yaml

Change instance groups

$ kops get ig

Using cluster from kubectl context: k8s.agendor.com.br

NAME ROLE MACHINETYPE MIN MAX SUBNETS

master-sa-east-1a Master t2.medium 1 1 sa-east-1a

nodes Node t2.medium 2 6 sa-east-1a,sa-east-1capiVersion: kops/v1alpha2

kind: InstanceGroup

metadata:

creationTimestamp: "2017-02-04T07:30:23Z"

labels:

kops.k8s.io/cluster: k8s.agendor.com.br

name: nodes

spec:

image: kope.io/k8s-1.6-debian-jessie-amd64-hvm-ebs-2017-05-02

machineType: t2.medium

maxSize: 20

minSize: 2

role: Node

rootVolumeSize: 50

subnets:

- sa-east-1a

- sa-east-1c$ kops edit ig nodesapiVersion: kops/v1alpha2

kind: InstanceGroup

metadata:

creationTimestamp: "2017-02-04T07:30:23Z"

labels:

kops.k8s.io/cluster: k8s.agendor.com.br

name: nodes

spec:

image: kope.io/k8s-1.6-debian-jessie-amd64-hvm-ebs-2017-05-02

machineType: t2.medium

maxSize: 6

minSize: 2

role: Node

rootVolumeSize: 50

subnets:

- sa-east-1a

- sa-east-1cChange instance groups

$ kops update cluster

Using cluster from kubectl context: k8s.agendor.com.br

*********************************************************************************

A new kubernetes version is available: 1.6.6

Upgrading is recommended (try kops upgrade cluster)

More information: https://github.com/kubernetes/kops/blob/master/permalinks/upgrade_k8s.md#1.6.6

*********************************************************************************

I0623 10:13:07.893227 35173 executor.go:91] Tasks: 0 done / 60 total; 27 can run

I0623 10:13:10.226305 35173 executor.go:91] Tasks: 27 done / 60 total; 14 can run

I0623 10:13:10.719476 35173 executor.go:91] Tasks: 41 done / 60 total; 17 can run

I0623 10:13:11.824240 35173 executor.go:91] Tasks: 58 done / 60 total; 2 can run

I0623 10:13:11.933869 35173 executor.go:91] Tasks: 60 done / 60 total; 0 can run

Will modify resources:

AutoscalingGroup/nodes.k8s.agendor.com.br

MaxSize 6 -> 20

Must specify --yes to apply changesFrom kops update cluster docs:

This change will apply to new instances only; if you'd like to roll it out immediately to all the instances you have to perform a rolling update.

See a preview with: kops rolling-update cluster

Then restart the machines with: kops rolling-update cluster --yes

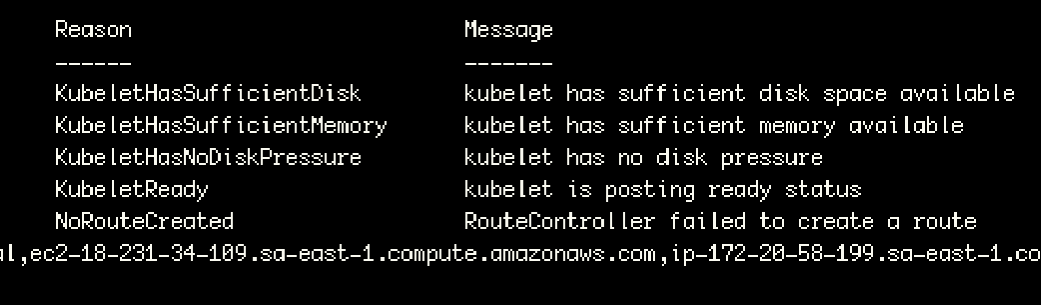

Cluster Scaling #2

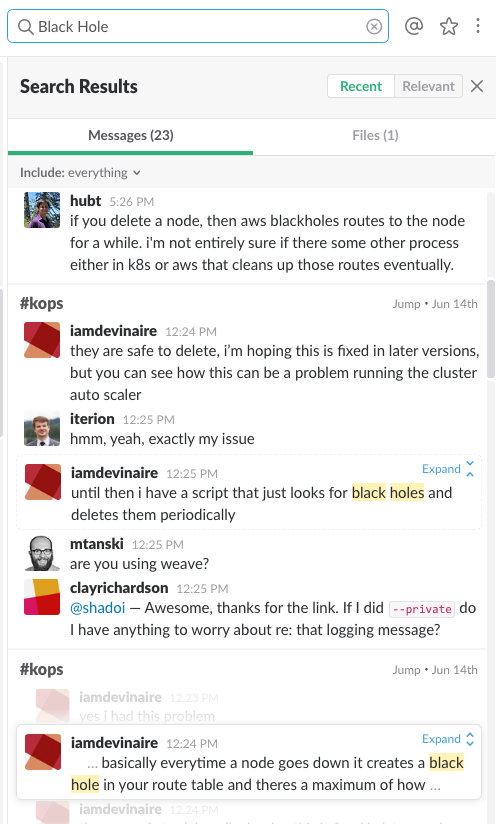

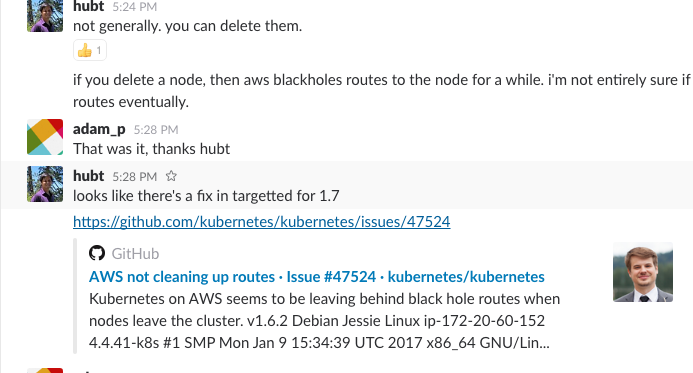

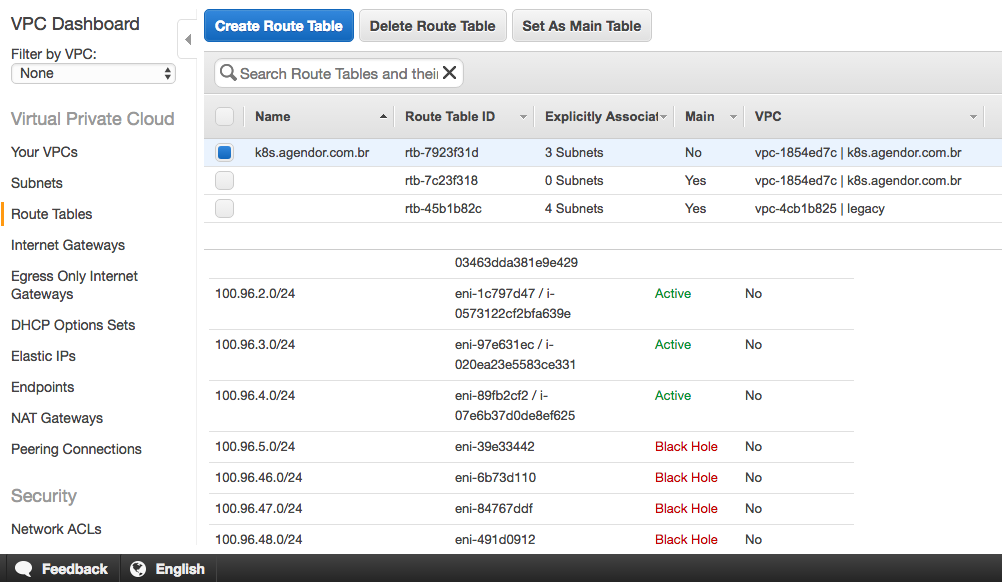

Black Hole

kubectl logs -f --tail=200 --namespace=kube-system \

$(kubectl get pods --namespace=kube-system \

| grep cluster-autoscaler \

| awk '{print $1}' \

| head -1)kubectl describe nodes

$ aws ec2 describe-route-tables \

--filters "Name=route-table-id, Values=rtb-7923f31d" \

| grep blackhole \

| awk '{print $2}' \

| xargs -n 1 \

aws ec2 delete-route --route-table-id rtb-7923f31d --destination-cidr-block12:51:26 scale_up.go:62] Upcoming 0 nodes

12:51:26 scale_up.go:145] Best option to resize: nodes.k8s.agendor.com.br

12:51:26 scale_up.go:149] Estimated 1 nodes needed in nodes.k8s.agendor.com.br

12:51:26 scale_up.go:169] Scale-up: setting group nodes.k8s.agendor.com.br size to 6

12:51:26 aws_manager.go:124] Setting asg nodes.k8s.agendor.com.br size to 6

12:51:26 event.go:217] Event(v1.ObjectReference{Kind:"ConfigMap", Namespace:"kube-system", Name:"cluster-autoscaler-status", UID:"3d1fa43e-59e8-11e7-b0ba-02fccc543533", APIVersion:"v1", ResourceVersion:"22154083", FieldPath:""}): type: 'Normal' reason: 'ScaledUpGroup' Scale-up: group nodes.k8s.agendor.com.br size set to 6

12:51:26 event.go:217] Event(v1.ObjectReference{Kind:"Pod", Namespace:"production", Name:"agendor-web-canary-3076992955-95b3p", UID:"2542369b-5a6e-11e7-b0ba-02fccc543533", APIVersion:"v1", ResourceVersion:"22154097", FieldPath:""}): type: 'Normal' reason: 'TriggeredScaleUp' pod triggered scale-up, group: nodes.k8s.agendor.com.br, sizes (current/new): 5/6

...

12:51:37 scale_up.go:62] Upcoming 1 nodes

12:51:37 scale_up.go:124] No need for any nodes in nodes.k8s.agendor.com.br

12:51:37 scale_up.go:132] No expansion options

12:51:37 static_autoscaler.go:247] Scale down status: unneededOnly=true lastScaleUpTime=2017-06-26 12:51:26.777491768 +0000 UTC lastScaleDownFailedTrail=2017-06-25 20:52:45.172500628 +0000 UTC schedulablePodsPresent=false

12:51:37 static_autoscaler.go:250] Calculating unneeded nodesDeletar rotas em status "Black Hole"

$ kubectl logs -f --tail=100 --namespace=kube-system

$(kubectl get pods --namespace=kube-system |

grep cluster-autoscaler | awk '{print $1}' | head -1)Lições Aprendidas

Lições Aprendidas

Múltiplas versões do código simultâneas

Rolling Update

Canary Release

Todos devem desenvolver pensando no deploy/release desde o começo

Lições Aprendidas

Muitas tecnologias novas de uma vez

Helm

Traefik

Docker

AWS

Kubernetes

Quando usadas em conjunto, o risco aumenta

Kops

Cluster Auto Scaler

Assim como a curva de aprendizado

Lições Aprendidas

Helm

--recreate-pods para corrigir o problema dos pods não serem re-criados

Impacto: Rolling Update fail

env:

- name: APP_NAME

value: "{{ .Values.name }}"

- name: APP_NAMESPACE

value: "{{ .Values.namespace }}"

- name: APP_ENV

value: "{{ .Values.app_env }}"

- name: APP_FULLNAME

value: "{{ .Values.name }}-{{ .Values.app_env }}"

# Force recreate pods

- name: UPDATE_CONFIG

value: {{ $.Release.Time }}Obrigado!

Dúvidas?

linkedin.com/in/tuliomonteazul

@tuliomonteazul

tulio@agendor.com.br

Everton Ribeiro

Gullit Miranda

Marcus Gadbem

Special thanks to