Intro to Kubernetes

What and Why?

What is Kubernetes (K8S)?

- Kubernetes is a portable, extensible open-source platform for managing containerized workloads and services

- Basically, an environment for running applications in containers (typically Docker, but other container engines are supported)

Why K8S?

- It's a uniform platform for running containers

- It's portable (runs on clouds and bare metal)

- Makes managing and deploying infrastructure easy

- Uses a declarative approach (no scripting)

- It's a self-healing system

- It's very well suited for microservices

- Flexible: you could run your own serverless stack!

Why containers?

Basically: they make environments more consistent & manageable

Where does it come from?

- Open-sourced by Google in 2014

- Based on their internal orchestrator: Borg

- Now managed by the Cloud Native Computing Foundation

Architecture

K8S architecture

A Kubernetes cluster contains:

- 1+ Nodes: the workers, which run containerized apps

- 1 Master: runs the K8S API and administers changes to the cluster; it can be replicated

K8S architecture: Nodes

Nodes are the basic worker machines in K8S

- On cloud providers, Nodes are typically VMs

- Each Node runs a Kubelet process, for k8s related communication

- They also run Docker, for managing containers (or an equivalent container engine)

K8S architecture: Masters

Masters run a set of components:

- API server: a REST API for operating the cluster

- The state store (etcd): stores configuration data

- Controller manager: runs the controllers that move the cluster toward the declared state (lifecycles and business logic)

- Scheduler: schedules containers on the cluster

Masters can be replicated, to ensure High Availability

A cluster with a failed Master can keep working (Nodes will still execute containers), but it can't apply changes or self-heal until the Master is back

Basic Concepts

The Pod

The basic building block of K8S

- It encapsulates a containerized application

- Each pod has a network IP

- Pods can have a volume assigned

- Normally one container, but can be used to run multiple

You rarely run Pods directly; a Controller does it for you!

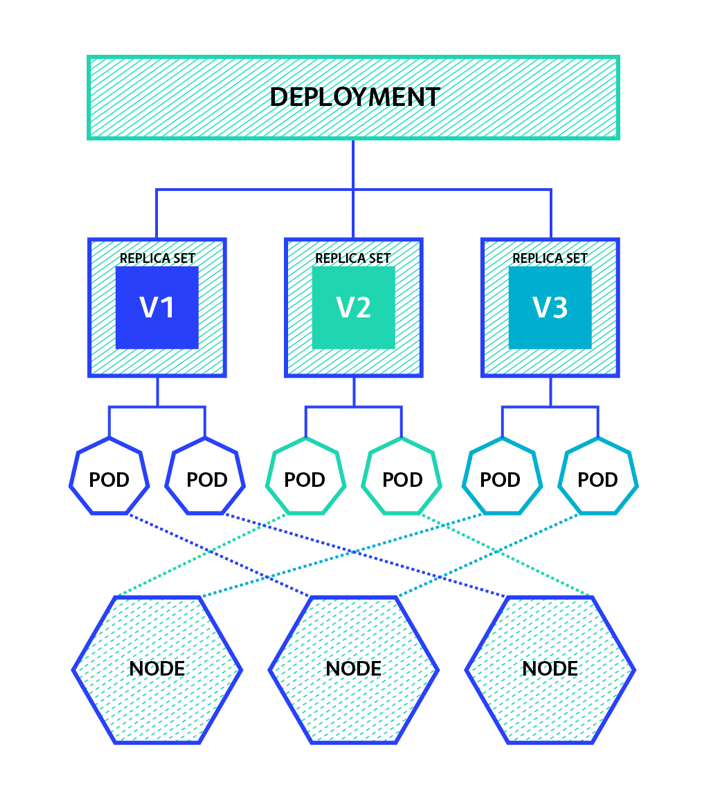
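
A minimal sketch of the points above, assuming a hypothetical Pod named `pod-example`: two containers that share one volume (and, implicitly, the Pod's single IP). The names, the busybox image, and the paths are illustrative and not taken from the slides.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-example          # hypothetical name
  labels:
    app: pod-example
spec:
  volumes:
  - name: shared-data        # scratch volume that lives as long as the Pod
    emptyDir: {}
  containers:
  - name: web                # main container, serves files from the shared volume
    image: nginx:1.7.9
    ports:
    - containerPort: 80
    volumeMounts:
    - name: shared-data
      mountPath: /usr/share/nginx/html
  - name: content-writer     # second container, writes into the same volume
    image: busybox
    command: ["sh", "-c", "while true; do date > /data/index.html; sleep 5; done"]
    volumeMounts:
    - name: shared-data
      mountPath: /data
```

In practice a manifest like this would usually sit inside a Deployment's `template` rather than being applied on its own.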

The Deployment

A Deployment is used to manage Pods

- It's declarative: describes what you want to deploy

- Allows rolling deploys (no downtime)

- Manages application restarts in case of crashes

- Manages scaling up (or down) your Pods

To be more specific:

Deployments manage ReplicaSets, which in turn are responsible for managing Pods

The Deployment: cont'd

When you change a Deployment:

- The Deployment updates its spec/status

- It creates a new ReplicaSet (if needed)

- Each set is a group of Pods of the same version

- As the new ReplicaSet becomes ready, the old one is scaled down to zero (it is kept around so you can roll back)
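
How the old and new ReplicaSets are swapped can be tuned in the Deployment's spec. A sketch of the relevant fragment follows; the numbers are illustrative assumptions, not recommendations from the slides.

```yaml
# Fragment of a Deployment spec (not a complete manifest)
spec:
  replicas: 3
  revisionHistoryLimit: 5    # number of old ReplicaSets retained for rollbacks
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1            # at most 1 extra Pod above "replicas" during the rollout
      maxUnavailable: 0      # never go below "replicas" available Pods
```

With `maxUnavailable: 0` the rollout only removes an old Pod once a new one is ready, which is what makes the "no downtime" claim hold.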

The Deployment: example

An example Deployment (yaml file)

- Runs the public nginx:1.7.9 image

- Will maintain 3 replicas

- Exposes port 80

But your apps are not reachable from outside the cluster yet...

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.7.9
            ports:
            - containerPort: 80

Service Discovery

The Service

Pods are mortal:

- They have an IP, but it can change

- They can be restarted/recreated

- They can be scaled up or down

To make sure you can always reach Pods from the same group, Kubernetes uses Services

The Service: cont'd

Services offer:

- An external or internal IP to reach your Pods

- An internal DNS name for Pods

- A port on your Node's IP

- Lightweight load balancing of requests

- In cloud environments they can provision Load Balancers
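
As a sketch, a Service in front of the nginx Pods from the Deployment example could look like this. The name `nginx-service` is an assumption made for illustration.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service        # hypothetical name; it also becomes the internal DNS name
spec:
  type: ClusterIP            # internal IP only
  selector:
    app: nginx               # requests go to Pods carrying this label
  ports:
  - port: 80                 # port the Service listens on
    targetPort: 80           # containerPort on the Pods
```

Changing `type` to `NodePort` opens a port on every Node's IP, and `LoadBalancer` asks the cloud provider for an external load balancer.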

Ingresses

Service IPs and names are only routable by the cluster network

In the real world we want things like:

- externally reachable URLs

- properly load balanced traffic (get a cloud LB per service)

- name-based virtual hosting and path-based routing (e.g. /billing goes to the billing service)

- and more routing features...

This can be done with Ingresses (using an IngressController)

Ingresses: an example

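    # networking.k8s.io/v1 replaces extensions/v1beta1, which was removed in Kubernetes 1.22
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: test
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
    spec:
      rules:
      - host: k8s.io
        http:
          paths:
          - path: /foo
            pathType: Prefix
            backend:
              service:
                name: foo-service
                port:
                  number: 80
          - path: /bar
            pathType: Prefix
            backend:
              service:
                name: bar-service
                port:
                  number: 80

With this Ingress:

- http://k8s.io/foo is routed to foo-service

- http://k8s.io/bar is routed to bar-service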

IngressControllers are usually implemented with reverse proxies (e.g. nginx)

More features

ConfigMaps

ConfigMaps are used to decouple parameters from containers

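- They hold key-value pairs; each value is a string, which can be a single parameter or a whole config file (JSON, YAML, etc.)

- They are namespaced: Pods can only reference ConfigMaps in their own namespace

- Changing a ConfigMap does not restart Pods by itself: mounted files are refreshed after a while, but environment variables only change when the Pods are recreated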

- Useful for keeping configuration parameters centralized

An example: a map containing global environment variables (Deployments can reference it)

    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: environment-variables
      labels:
        name: environment-variables
    data:
      ENV: "test"
      PORT: "8080"  # values must be strings, so numbers are quoted
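
One way a Deployment could reference that map is `envFrom`, sketched below as a fragment of the Pod template. The container name `app` is a placeholder.

```yaml
# Fragment of a Deployment's Pod template (not a complete manifest)
spec:
  containers:
  - name: app                         # hypothetical container name
    image: nginx:1.7.9
    envFrom:
    - configMapRef:
        name: environment-variables   # every key in the map becomes an environment variable
```

Inside the container, `ENV` and `PORT` would then be available as ordinary environment variables.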

Secrets

- Like ConfigMaps, but meant to share sensitive data

- Normally used for tokens, passwords, keys, etc.

- They can be exposed as environment variables or mounted as volumes (files)

Definition:

    apiVersion: v1
    kind: Secret
    metadata:
      name: mysecret
    type: Opaque
    data:
      password: MWYyZDFlMmU2N2Rm

Usage as an environment variable:

    apiVersion: v1
    kind: Pod
    metadata:
      name: secret-env-pod
    spec:
      containers:
      - name: mycontainer
        image: redis
        env:
        - name: SECRET_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysecret
              key: password
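
The other option, mounting the Secret as files, could be sketched like this. The Pod name and mount path are assumptions chosen for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: secret-volume-pod    # hypothetical name
spec:
  volumes:
  - name: secret-volume
    secret:
      secretName: mysecret   # the Secret from the definition above
  containers:
  - name: mycontainer
    image: redis
    volumeMounts:
    - name: secret-volume
      mountPath: /etc/secret # hypothetical path; each key becomes a file in it
      readOnly: true
```

Here the container would read the decoded password from the file `/etc/secret/password`.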

DaemonSets

Like a Deployment, but for infrastructure

- Will run a copy of a Pod on every Node in the cluster

- When Nodes are added, Pods will automatically run

- Used for log collectors, monitoring, cluster storage, etc.

Example: running fluentd to collect logs on every Node and send them to Elasticsearch

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: fluentd-elasticsearch
      namespace: kube-system
      labels:
        k8s-app: fluentd-logging
    spec:
      selector:
        matchLabels:
          name: fluentd-elasticsearch
      template:
        metadata:
          labels:
            name: fluentd-elasticsearch
        spec:
          containers:
          - name: fluentd-elasticsearch
            image: k8s.gcr.io/fluentd-elasticsearch:1.20
    # pretty much, looks like a deployment

AutoScaling

Can be done with HorizontalPodAutoscalers

- References an existing Deployment

- Defines target metrics (CPU, memory, etc.) to achieve

- When metrics are above the target, it scales up (and back down when they drop below it)

Example: scale up the "php-apache" Deployment whenever average CPU usage is above 50%. It will scale up to 10 replicas and go back down to 1 when possible.

    # autoscaling/v2 replaces autoscaling/v2beta1, which was removed in Kubernetes 1.25
    apiVersion: autoscaling/v2
    kind: HorizontalPodAutoscaler
    metadata:
      name: php-apache
      namespace: default
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: php-apache
      minReplicas: 1
      maxReplicas: 10
      metrics:
      - type: Resource
        resource:
          name: cpu
          target:
            type: Utilization
            averageUtilization: 50

And lots more!

- Cron jobs: can schedule containers to run periodically

- Persistent volume claims: can define data volumes that persist and can be attached to Pods

- Cluster Autoscaling: can add more Nodes (instances on clouds) when the cluster needs more resources

- StatefulSets: like Deployments, but will maintain stable IDs for each Pod (Pod identity)
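
To give a feel for two of these, here is a sketch of a CronJob and a PersistentVolumeClaim. All names, the schedule, and the storage size are assumptions; `batch/v1` for CronJob assumes Kubernetes 1.21 or newer.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: hello-cron           # hypothetical name
spec:
  schedule: "*/5 * * * *"    # standard cron syntax: every 5 minutes
  jobTemplate:
    spec:
      template:
        spec:
          restartPolicy: OnFailure
          containers:
          - name: hello
            image: busybox
            command: ["sh", "-c", "date; echo hello from the cluster"]
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: data-claim           # hypothetical name
spec:
  accessModes:
  - ReadWriteOnce            # mountable read-write by a single Node
  resources:
    requests:
      storage: 1Gi           # illustrative size
```

A Pod would use the claim by listing it under `volumes` with `persistentVolumeClaim.claimName: data-claim`.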

What's next?

Support and tooling

- Major cloud providers are offering managed K8S

  - Easier provisioning of Nodes

  - Easier to have High Availability on Masters

  - Better integration with Identity and cloud provider resources

- Helm: the K8S package manager

  - Can define charts to deploy common apps

  - A chart can include multiple definitions

  - Makes it easy to add/remove features to the cluster
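
For reference, a Helm 3 chart is a directory containing a `Chart.yaml` metadata file plus a `templates/` folder holding the Kubernetes definitions. A minimal sketch of the metadata file, with a made-up chart name and versions:

```yaml
# Chart.yaml (Helm 3 chart metadata)
apiVersion: v2
name: my-app                 # hypothetical chart name
description: A sketch of a chart bundling a Deployment, a Service and an Ingress
type: application
version: 0.1.0               # version of the chart itself
appVersion: "1.7.9"          # version of the app being deployed
```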

Want more?

- Deep dive on K8S concepts: https://kubernetes.io/docs/concepts

- Official tutorials: https://kubernetes.io/docs/tutorials

- My own tutorial :) https://github.com/valep27/kubernetes-tutorial