Embedding Entities and Relations for Learning and Inference in Knowledge Bases

Yang et al., 2015

1. Learning representations for entities and relations in KBs

* Triplets representing a fact (e1, r, e2)

* Earlier methods: tensor factorization, neural embeddings

* Can be tested on the link-prediction task

Examples:

"...Berlin, the capital of Germany..."

IsTheCapitalOf(Berlin, Germany)

"Jack sells car"

HasTheProfession(Jack, dealer)
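The link-prediction test mentioned above can be sketched as ranking candidate entities for an incomplete triplet (e1, r, ?) and checking where the true entity lands. This is a minimal sketch: the function name `rank_of_true_tail`, the toy scores and the candidate list are assumptions made for illustration, and any learned scoring function could be plugged in instead.

```python
def rank_of_true_tail(score, e1, r, true_e2, entities):
    """Rank all candidate entities for (e1, r, ?) and return the
    1-based position of the true entity (1 = best)."""
    ranked = sorted(entities, key=lambda e: score(e1, r, e), reverse=True)
    return ranked.index(true_e2) + 1


# Toy scores standing in for a learned model (hypothetical values).
toy_scores = {"Germany": 0.9, "France": 0.4, "Berlin": 0.1}


def toy_score(e1, r, e2):
    # A real model would use e1 and r; this stand-in only looks at e2.
    return toy_scores[e2]


rank = rank_of_true_tail(
    toy_score, "Berlin", "IsTheCapitalOf", "Germany", list(toy_scores)
)
print(rank, 1.0 / rank)  # rank and reciprocal rank of the true entity
```

Averaging the reciprocal rank over many test triplets, or counting how often the rank is at most 10, gives the usual summary numbers for this task.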

2. Using representations to find new relations

* Find so-called Horn rules

Example:

BornInCity(Jack, Berlin) ^ CityOfCountry(Berlin, Germany) => HasNationality(Jack, Germany)
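As a rough illustration of how such a rule yields a new fact, the sketch below applies a length-2 rule to a toy set of (e1, r, e2) triplets. The function name `apply_rule` and the two-fact KB are assumptions made for this example, not something taken from the paper.

```python
def apply_rule(triplets, body1, body2, head):
    """Infer head(a, c) whenever body1(a, b) and body2(b, c) both hold."""
    # Index the second body relation by its first argument for quick joins.
    by_subject = {}
    for e1, r, e2 in triplets:
        if r == body2:
            by_subject.setdefault(e1, set()).add(e2)

    inferred = set()
    for a, r, b in triplets:
        if r == body1:
            for c in by_subject.get(b, ()):
                inferred.add((a, head, c))
    # Only report facts that are not already stored in the KB.
    return inferred - set(triplets)


kb = {
    ("Jack", "BornInCity", "Berlin"),
    ("Berlin", "CityOfCountry", "Germany"),
}
new_facts = apply_rule(kb, "BornInCity", "CityOfCountry", "HasNationality")
print(new_facts)  # {('Jack', 'HasNationality', 'Germany')}
```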

Knowledge Bases used

WordNet (WN)

* 150K triplets

* 40K entities

* 18 relations

FreeBase (FB15k)

* 590K triplets

* 14K entities

* 1345 relations

Entity & Relation representation

Representation used by most methods:

* One-hot vector (each entity is a unit vector)

* Average of word vectors (used by NTN)

Neural model with two layers:

1. Projects entities to low-dimensional vectors

2. Combines these vectors, together with a relation-specific parameter, to calculate a score
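The two layers can be sketched in plain Python as follows. This is a minimal sketch under assumptions: entities are one-hot inputs, so the projection reduces to picking a column of a weight matrix `W`; the `tanh` non-linearity, the toy sizes and the random weights are illustrative choices; and the score is the bilinear form y1^T M_r y2, with a diagonal M_r shown as one simpler variant.

```python
import math
import random

random.seed(0)
N_ENTITIES, DIM = 5, 4  # toy sizes (assumed)

# Layer 1: W has one column per entity, so a one-hot input selects a column.
W = [[random.gauss(0, 1) for _ in range(N_ENTITIES)] for _ in range(DIM)]


def embed(entity_id):
    """Project a one-hot entity to a low-dimensional vector y = tanh(W x)."""
    return [math.tanh(row[entity_id]) for row in W]


def score_bilinear(e1, M_r, e2):
    """Layer 2: bilinear score y1^T M_r y2 with a full relation matrix M_r."""
    y1, y2 = embed(e1), embed(e2)
    return sum(y1[i] * M_r[i][j] * y2[j]
               for i in range(DIM) for j in range(DIM))


def score_diagonal(e1, d_r, e2):
    """Simpler variant: M_r restricted to a diagonal, stored as a vector d_r."""
    y1, y2 = embed(e1), embed(e2)
    return sum(a * d * b for a, d, b in zip(y1, d_r, y2))


# A diagonal relation gives the same score in both formulations.
d_r = [1.0, 2.0, 3.0, 4.0]
M_r = [[d_r[i] if i == j else 0.0 for j in range(DIM)] for i in range(DIM)]
assert math.isclose(score_bilinear(0, M_r, 1), score_diagonal(0, d_r, 1))
```

Note that the diagonal variant scores (e1, r, e2) and (e2, r, e1) identically, so it cannot distinguish the direction of a relation; the full matrix can.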

Task 2: Rule Extraction

Why is this useful?

* Recognize new, implicit facts in KBs

* Optimize data storage

* Complex reasoning and explanation (a is b, because c)

Horn rule (length 2):

B1(a, b) ^ B2(b, c) => H(a, c)

a and c are in relation H if there is a b which satisfies B1(a, b) and B2(b, c).
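One way embeddings can propose such rules, sketched here under stated assumptions: if every relation is represented by a matrix, the composition of B1 and B2 can be modelled as the product of their matrices, and candidate heads H can be ranked by how close their matrix is to that product. The toy matrices, the Frobenius distance and the function names below are illustrative choices and should not be read as the EMBEDRULES implementation itself.

```python
import math


def matmul(A, B):
    """Product of two square matrices given as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def frobenius_distance(A, B):
    """Frobenius norm of A - B."""
    return math.sqrt(sum((a - b) ** 2
                         for row_a, row_b in zip(A, B)
                         for a, b in zip(row_a, row_b)))


def rank_heads(relations, body1, body2):
    """Rank candidate head relations H for the rule B1 ^ B2 => H."""
    composed = matmul(relations[body1], relations[body2])
    candidates = [(frobenius_distance(composed, M), name)
                  for name, M in relations.items()
                  if name not in (body1, body2)]
    return sorted(candidates)  # smallest distance first


# Toy relation matrices, made up so that one candidate matches the composition.
relations = {
    "BornInCity": [[0.0, 1.0], [1.0, 0.0]],
    "CityOfCountry": [[2.0, 0.0], [0.0, 0.5]],
    "HasNationality": [[0.0, 0.5], [2.0, 0.0]],
    "HasTheProfession": [[1.0, 0.0], [0.0, 1.0]],
}
ranked = rank_heads(relations, "BornInCity", "CityOfCountry")
print(ranked[0])  # (0.0, 'HasNationality')
```

With learned embeddings the distances are never exactly zero, so in practice a threshold or a top-k cut-off decides which candidate rules are kept.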


Implementation & results:

* Adapted version of FreeBase (FB15k-401)

* EMBEDRULES found 60K length-2 rules

* AMIE found 47K

Examples:

TVProgramCountry(a,b) ^ CountryLang(b,c) => TVProgramLang(a,c)

AthleteInTeam(a,b) ^ TeamPlaysSport(b,c) => AthletePlaysSport(a,c)

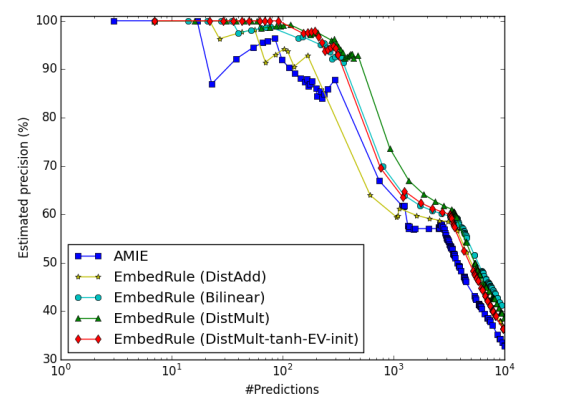

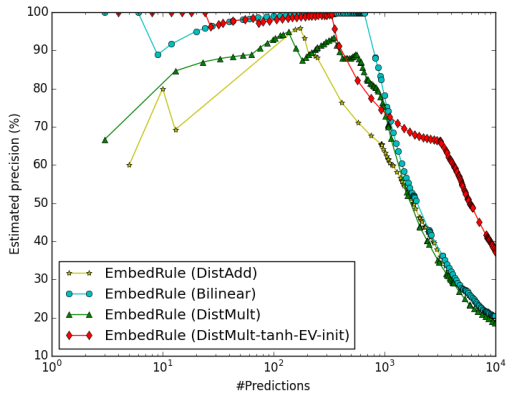


Evaluation:

Top length-2 rules

Top length-3 rules

Conclusion

* Representation of entities and relations in KBs.

* Can be evaluated on different inference tasks

* Simpler, bilinear models can outperform state-of-the-art models

* Learned embeddings can be used to extract new relations from KBs, i.e. they can capture semantic relations and perform compositional reasoning
