An Alternative to EM for Gaussian Mixture Models

Victor Sanches Portella

MLRG @ UBC

August 11, 2021

Why this paper?

Interesting application of Riemannian optimization to an important problem in ML

Details of the technicalities are not important

Yet, we have enough to sense the flavor of the field

Gaussian Mixture Models


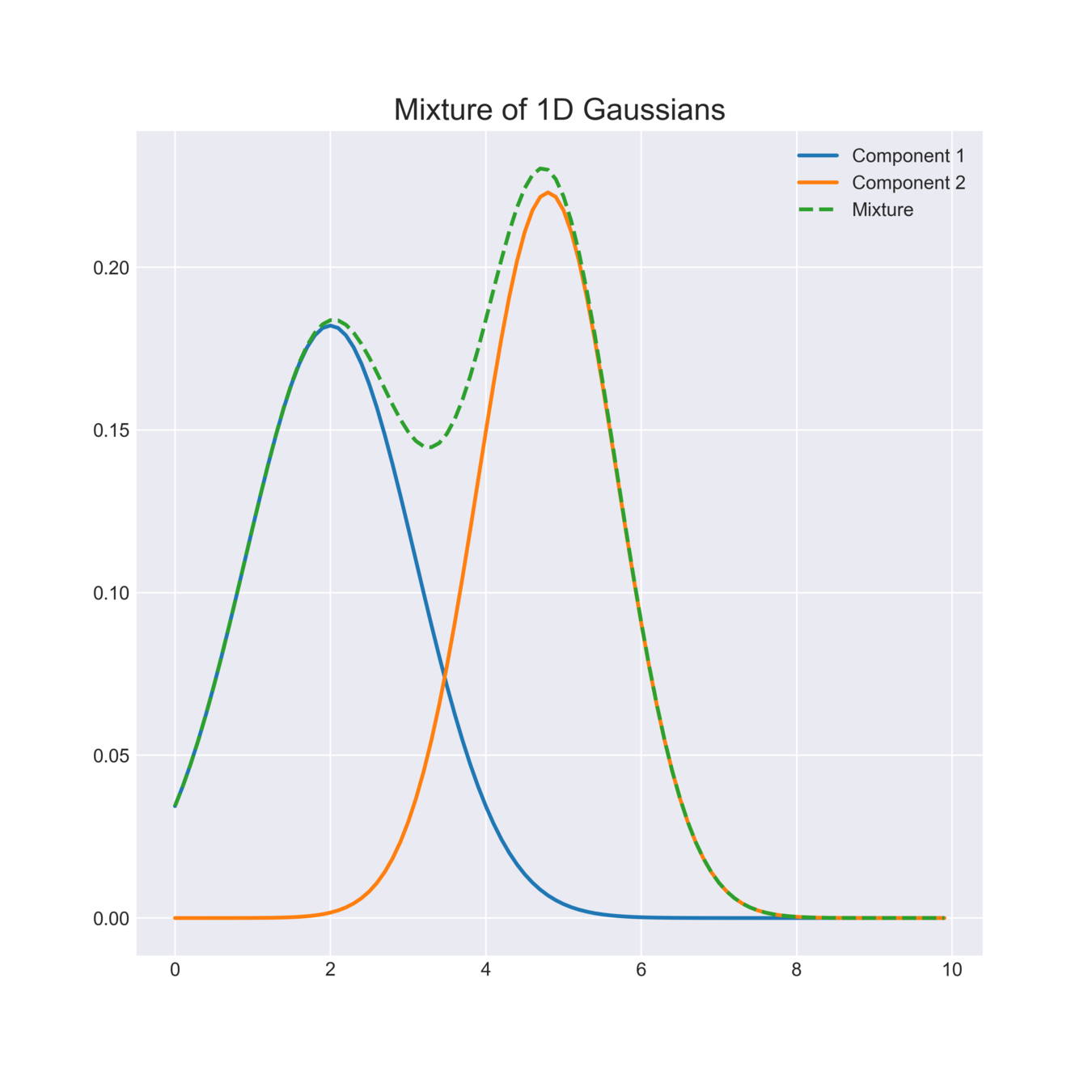

A GMM is a convex combination of a fixed number of Gaussians

Image source: https://angusturner.github.io/assets/images/mixture.png


Density of a \(K\)-mixture on \(\mathbb{R}^d\):

Mean and Covariance of \(i\)-th Gaussian

Gaussian density

Weight of \(i\)-th Gaussian

(Must sum to 1)
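For reference, the standard form of this density. The symbol names \(\alpha_i\) (weights) and \(p_{\mathcal{N}}\) (Gaussian density) are an assumption, chosen to match the \(\alpha_j\) used later in the talk:

\[
p(x) = \sum_{i=1}^{K} \alpha_i \, p_{\mathcal{N}}(x; \mu_i, \Sigma_i),
\qquad
p_{\mathcal{N}}(x; \mu, \Sigma) = \frac{\exp\!\big(-\tfrac{1}{2}(x-\mu)^\top \Sigma^{-1} (x-\mu)\big)}{\sqrt{(2\pi)^d \det \Sigma}},
\]

with \(\alpha_i \geq 0\) and \(\sum_{i=1}^{K} \alpha_i = 1\).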

Find GMM most likely to generate data points \(x_1, \dotsc, x_n \)

Fitting a GMM

Image source: https://towardsdatascience.com/gaussian-mixture-models-d13a5e915c8e

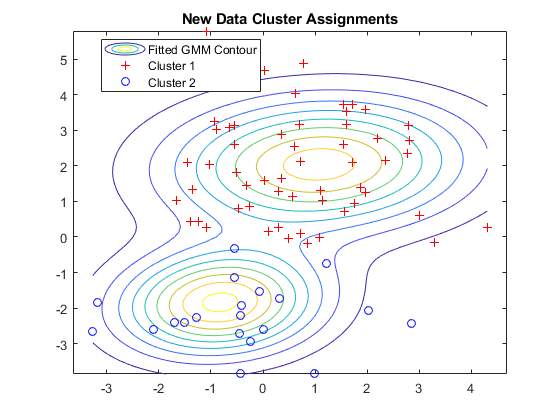

Find GMM most likely to generate data points \(x_1, \dotsc, x_n \)

Fitting a GMM

Idea: use means \(\mu_i\) and covariances \(\Sigma_i\) that maximize log-likelihood

The constraint set \(\Sigma_i \succ 0\) is hard to describe

It is open, so there is no clear projection onto it
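A minimal sketch of the objective being maximized, assuming NumPy. The function and argument names (`log_gaussian`, `gmm_log_likelihood`, `alphas`) are hypothetical and only for illustration. Nothing in this code enforces \(\Sigma_i \succ 0\); keeping the iterates inside that set is the difficulty discussed here.

```python
import numpy as np

def log_gaussian(X, mu, Sigma):
    """Row-wise log N(x; mu, Sigma) for X of shape (n, d)."""
    d = X.shape[1]
    diff = X - mu
    # Solve Sigma z = diff^T rather than forming the inverse explicitly.
    sol = np.linalg.solve(Sigma, diff.T).T
    maha = np.sum(diff * sol, axis=1)
    _, logdet = np.linalg.slogdet(Sigma)
    return -0.5 * (d * np.log(2 * np.pi) + logdet + maha)

def gmm_log_likelihood(X, alphas, mus, Sigmas):
    """Sum over data points of log sum_i alpha_i N(x_j; mu_i, Sigma_i)."""
    log_terms = np.stack(
        [np.log(a) + log_gaussian(X, m, S) for a, m, S in zip(alphas, mus, Sigmas)],
        axis=1,
    )
    # Log-sum-exp over components for numerical stability.
    top = log_terms.max(axis=1, keepdims=True)
    return float(np.sum(top[:, 0] + np.log(np.exp(log_terms - top).sum(axis=1))))
```

With two identical standard-normal components, the value at a single point \(x = 0\) in one dimension reduces to \(-\tfrac{1}{2}\log(2\pi)\), which is a quick sanity check.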

Classical optimization methods (Newton, CG, IPM) struggle

Optimization vs "\(\succ 0\)"

Slowdown when iterates get closer to boundary

IPM works, but it is slow

Force positive definiteness via "Cholesky decomposition"

Adds spurious maxima/minima

Slow
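A small sketch of the Cholesky idea, assuming NumPy; the function name `sigma_from_unconstrained` is hypothetical. An unconstrained vector fills a lower-triangular factor \(L\), and \(\Sigma = L L^\top\) is then positive semidefinite by construction (positive definite when the diagonal of \(L\) is nonzero).

```python
import numpy as np

def sigma_from_unconstrained(theta, d):
    """Map d*(d+1)/2 free parameters to a symmetric PSD matrix L @ L.T."""
    L = np.zeros((d, d))
    # Fill the lower triangle row by row.
    L[np.tril_indices(d)] = theta
    return L @ L.T
```

Note that the map is not one-to-one: `theta` and `-theta` give the same \(\Sigma\), so the optimization landscape in `theta` differs from the one in \(\Sigma\).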

Expectation Maximization guarantees \(\succ 0\) "for free"

Easy for GMMs

Fast in practice

EM in one slide

EM: Iterative method to find (local) maximum-likelihood parameters

E-step: Fix parameters, find "weighted log-likelihood"

M-step: Find parameters by maximizing the "weighted log-likelihood"

For GMMs:

E-step: Given \(\mu_i, \Sigma_i\), compute \(P(x_j \in \mathcal{N}_i)\)

M-step: Max weighted log-likelihood with weights \(P(x_j \in \mathcal{N}_i)\)

\(= p(x_j ; \mu_i, \Sigma_i)\)

CLOSED FORM SOLUTION FOR GMMs!

Guarantees \(\succ 0\) for free
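A minimal sketch of one EM iteration for a GMM, assuming NumPy and the standard textbook updates. The weights here are the normalized posteriors \(\alpha_i \, p(x_j; \mu_i, \Sigma_i) / \sum_k \alpha_k \, p(x_j; \mu_k, \Sigma_k)\), and all function names are hypothetical. The M-step covariance is a positively weighted sum of outer products, which is why positive definiteness comes for free (barring degenerate data).

```python
import numpy as np

def log_gaussian(X, mu, Sigma):
    """Row-wise log N(x; mu, Sigma) for X of shape (n, d)."""
    d = X.shape[1]
    diff = X - mu
    sol = np.linalg.solve(Sigma, diff.T).T
    _, logdet = np.linalg.slogdet(Sigma)
    return -0.5 * (d * np.log(2 * np.pi) + logdet + np.sum(diff * sol, axis=1))

def em_step(X, alphas, mus, Sigmas):
    """One EM iteration; returns updated (alphas, mus, Sigmas)."""
    n, _ = X.shape
    K = len(alphas)
    # E-step: responsibilities r[j, i], computed in the log domain.
    log_r = np.stack(
        [np.log(alphas[i]) + log_gaussian(X, mus[i], Sigmas[i]) for i in range(K)],
        axis=1,
    )
    log_r -= log_r.max(axis=1, keepdims=True)
    r = np.exp(log_r)
    r /= r.sum(axis=1, keepdims=True)
    # M-step: closed-form weighted means and covariances.
    Nk = r.sum(axis=0)
    new_alphas = Nk / n
    new_mus = (r.T @ X) / Nk[:, None]
    new_Sigmas = []
    for i in range(K):
        diff = X - new_mus[i]
        new_Sigmas.append((r[:, i, None] * diff).T @ diff / Nk[i])
    return new_alphas, new_mus, np.array(new_Sigmas)
```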

Riemannian Optimization

Manifold of PD Matrices


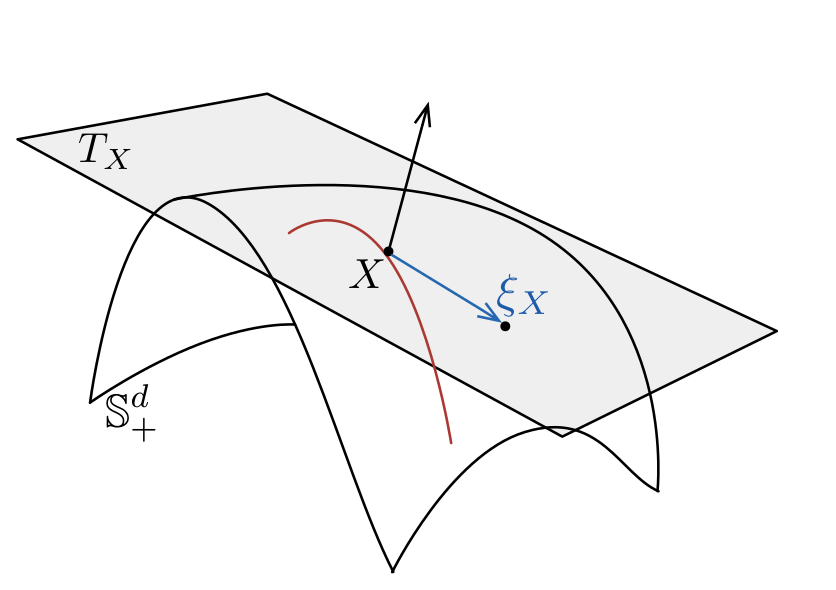

Riemannian metric at \(\Sigma\)

Tangent space at a point

All symmetric matrices

Remark: Blows up when \(\Sigma\) is close to the boundary
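For concreteness, assuming the usual affine-invariant metric on positive definite matrices (possibly up to a constant factor; the notation \(g_\Sigma\) is mine), the inner product of two symmetric matrices \(X, Y\) in the tangent space at \(\Sigma\) is

\[
g_\Sigma(X, Y) = \operatorname{tr}\!\big(\Sigma^{-1} X \, \Sigma^{-1} Y\big).
\]

As \(\Sigma\) approaches a singular matrix, \(\Sigma^{-1}\) grows without bound, which matches the remark about the boundary.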

Optimization Structure

General steps:

1 - Find descent direction

2 - Perform line-search and step with retraction

Retraction: a way to move on a manifold along a tangent direction

Does not necessarily follow a geodesic!

In this paper, we will only use the exponential map

The Exponential Map

\[ \mathrm{Exp}_\Sigma(D) = \gamma_D(1), \]

where \(\gamma_D(t)\) is the geodesic starting at \(\Sigma\) with direction \(D\)

Geodesic between \(\Sigma\) and \(\Gamma\)

\(\Sigma\) at \(t = 0\) and \(\Gamma\) at \(t = 1\)

From this (?), we get the form of the exponential map
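A sketch of both maps in NumPy, assuming the affine-invariant metric, for which the geodesic is \(\gamma(t) = \Sigma^{1/2} (\Sigma^{-1/2} \Gamma \Sigma^{-1/2})^t \Sigma^{1/2}\) and the exponential map is \(\Sigma^{1/2} \exp(\Sigma^{-1/2} D \Sigma^{-1/2}) \Sigma^{1/2}\). The function names are hypothetical.

```python
import numpy as np

def sym_fun(A, fun):
    """Apply a scalar function to a symmetric matrix through its eigenvalues."""
    w, V = np.linalg.eigh(A)
    return (V * fun(w)) @ V.T

def exp_map(Sigma, D):
    """Exponential map at PD matrix Sigma along symmetric direction D."""
    root = sym_fun(Sigma, np.sqrt)
    inv_root = sym_fun(Sigma, lambda w: 1.0 / np.sqrt(w))
    return root @ sym_fun(inv_root @ D @ inv_root, np.exp) @ root

def geodesic(Sigma, Gamma, t):
    """Point at time t on the geodesic from Sigma (t=0) to Gamma (t=1)."""
    root = sym_fun(Sigma, np.sqrt)
    inv_root = sym_fun(Sigma, lambda w: 1.0 / np.sqrt(w))
    return root @ sym_fun(inv_root @ Gamma @ inv_root, lambda w: w ** t) @ root
```

Because the matrix exponential of a symmetric matrix is positive definite, `exp_map` returns a positive definite matrix for any symmetric direction and any step length, so no projection is needed.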

Gradient Descent

Traditional gradient descent (GD):

\[ x_{t+1} = x_t - \eta_t \nabla f(x_t) \]

GD on the Riemannian manifold \(\mathbb{P}^d\):

\[ \Sigma_{t+1} = \mathrm{Exp}_{\Sigma_t}\big(-\eta_t \operatorname{grad} f(\Sigma_t)\big) \]

\(\operatorname{grad} f\) is the Riemannian gradient of \(f\).

Depends on the metric!

The methods the authors use are Conjugate Gradient and LBFGS.

Defining those depends on the idea of vector transport and Hessians. I'll skip these descriptions.

If we have time, we shall see conditions for convergence of SGD
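To get a feel for Riemannian GD, here is a toy sketch in NumPy. It is not the authors' method (they use CG and LBFGS), and the objective is my choice: \(f(\Sigma) = \operatorname{tr}(\Sigma^{-1} S) + \log\det\Sigma\), whose minimizer over positive definite matrices is \(\Sigma = S\). Under the affine-invariant metric the Riemannian gradient is \(\Sigma \, \nabla f(\Sigma) \, \Sigma\), which here simplifies to \(\Sigma - S\). Function names and the default step size are hypothetical.

```python
import numpy as np

def sym_fun(A, fun):
    """Apply a scalar function to a symmetric matrix through its eigenvalues."""
    w, V = np.linalg.eigh(A)
    return (V * fun(w)) @ V.T

def exp_map(Sigma, D):
    """Exponential map on PD matrices under the affine-invariant metric."""
    root = sym_fun(Sigma, np.sqrt)
    inv_root = sym_fun(Sigma, lambda w: 1.0 / np.sqrt(w))
    return root @ sym_fun(inv_root @ D @ inv_root, np.exp) @ root

def riemannian_gd(S, Sigma0, step=0.5, iters=100):
    """Minimize tr(Sigma^{-1} S) + log det(Sigma) with fixed-step Riemannian GD."""
    Sigma = Sigma0.copy()
    for _ in range(iters):
        Sigma_inv = np.linalg.inv(Sigma)
        egrad = Sigma_inv - Sigma_inv @ S @ Sigma_inv  # Euclidean gradient
        rgrad = Sigma @ egrad @ Sigma                  # Riemannian gradient
        Sigma = exp_map(Sigma, -step * rgrad)          # step along the geodesic
    return Sigma
```

Every iterate stays positive definite without any explicit constraint handling, in contrast with a Euclidean step \(\Sigma - \eta \nabla f(\Sigma)\), which can leave the cone.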

Riemannian opt for GMMs

We have a geometric structure on \(\mathbb{P}^d\). The other variable can stay in the traditional Euclidean space.

We can apply methods for Riemannian optimization!

... but they are way slower than traditional EM

GMMs and Geodesic Convexity

Single Gaussian Estimation

Maximum Likelihood estimation for a single Gaussian:

\(\mathcal{L}\) is concave!

In fact, the above has a closed form solution
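For reference, the standard single-Gaussian problem and its closed-form maximizer (the notation \(p_{\mathcal{N}}\) for the Gaussian density is an assumption):

\[
\mathcal{L}(\mu, \Sigma) = \sum_{i=1}^{n} \log p_{\mathcal{N}}(x_i; \mu, \Sigma),
\qquad
\mu^* = \frac{1}{n}\sum_{i=1}^{n} x_i,
\qquad
\Sigma^* = \frac{1}{n}\sum_{i=1}^{n} (x_i - \mu^*)(x_i - \mu^*)^\top .
\]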

On a Riemannian manifold, the meaning of convexity changes

Geodesic convexity (g-convexity):

Geodesic from \(\Sigma_1\) to \(\Sigma_2\)

\(\mathcal{L}\) is not g-concave
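For reference, the usual definition: a function \(f\) is g-convex if, for every pair \(\Sigma_1, \Sigma_2\) and the geodesic \(\gamma\) with \(\gamma(0) = \Sigma_1\) and \(\gamma(1) = \Sigma_2\),

\[
f(\gamma(t)) \leq (1 - t)\, f(\Sigma_1) + t\, f(\Sigma_2) \qquad \text{for all } t \in [0, 1],
\]

and \(f\) is g-concave if \(-f\) is g-convex. The only change from ordinary convexity is that the straight segment between the two points is replaced by the geodesic.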


Solution: lift the function to a higher dimension!

\(\hat{\mathcal{L}}\) is g-concave
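A numerical sketch of the lifting, under my reading of the construction: augment each point to \(y = (x, 1) \in \mathbb{R}^{d+1}\), collect the parameters into \(S = \begin{pmatrix} \Sigma + \mu\mu^\top & \mu \\ \mu^\top & 1 \end{pmatrix}\), and use a zero-mean Gaussian in \(y\) rescaled by \(\sqrt{2\pi}\, e^{1/2}\). The function names are hypothetical. The check below confirms that, for an \(S\) of this form, the lifted density equals the original one.

```python
import numpy as np

def gaussian_density(x, mu, Sigma):
    """Density of N(mu, Sigma) at a single point x."""
    d = x.shape[0]
    diff = x - mu
    maha = diff @ np.linalg.solve(Sigma, diff)
    return np.exp(-0.5 * maha) / np.sqrt((2 * np.pi) ** d * np.linalg.det(Sigma))

def lift(mu, Sigma):
    """Build the (d+1) x (d+1) matrix S from (mu, Sigma)."""
    d = mu.shape[0]
    S = np.empty((d + 1, d + 1))
    S[:d, :d] = Sigma + np.outer(mu, mu)
    S[:d, d] = mu
    S[d, :d] = mu
    S[d, d] = 1.0
    return S

def lifted_density(x, S):
    """Rescaled zero-mean Gaussian density evaluated at y = (x, 1)."""
    y = np.append(x, 1.0)
    return np.sqrt(2 * np.pi) * np.exp(0.5) * gaussian_density(y, np.zeros_like(y), S)
```

The identity follows from the Schur complement: \(\det S = \det \Sigma\) and \(y^\top S^{-1} y = (x - \mu)^\top \Sigma^{-1} (x - \mu) + 1\), so the extra factor cancels exactly.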


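Theorem If \(\mu^*, \Sigma^*\) maximize \(\mathcal{L}\) and \(S^*\) maximizes \(\hat{\mathcal{L}}\), then

\[ S^* = \begin{pmatrix} \Sigma^* + \mu^* {\mu^*}^\top & \mu^* \\ {\mu^*}^\top & 1 \end{pmatrix} \]

and

\[ \hat{\mathcal{L}}(S^*) = \mathcal{L}(\mu^*, \Sigma^*) \]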

Proof idea:

Write

Optimal choice of \(s\) is 1 \(\implies\) both \(\mathcal{L}\) and \(\hat{\mathcal{L}}\) agree

PS: \(\Sigma^* \succ 0\) by the Schur complement lemma

Back to Many Gaussians

For now, fix the weights \(\alpha_1, \dotsc, \alpha_K\)

Theorem The local maxima of \(\mathcal{L}\) and of \(\hat{\mathcal{L}}\) agree

What about \(\alpha_1, \dotsc, \alpha_K\)? Reparameterize to use unconstrained opt

I think there are few guarantees on the final \(\alpha_j\)'s
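One common way to reparameterize the weights is a softmax over unconstrained logits. This is a sketch of that idea, not necessarily the exact parameterization used in the paper, and the name `weights_from_logits` is hypothetical.

```python
import numpy as np

def weights_from_logits(eta):
    """Map unconstrained logits to positive weights that sum to 1."""
    eta = np.asarray(eta, dtype=float)
    # Subtract the max before exponentiating to avoid overflow.
    z = np.exp(eta - eta.max())
    return z / z.sum()
```

Any unconstrained optimizer can then update `eta` freely, and the simplex constraint on the weights holds automatically.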

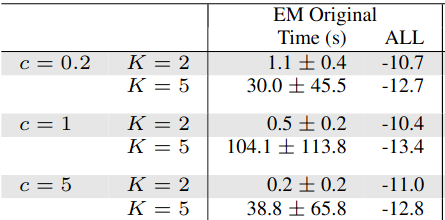

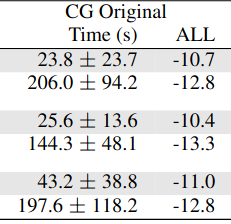

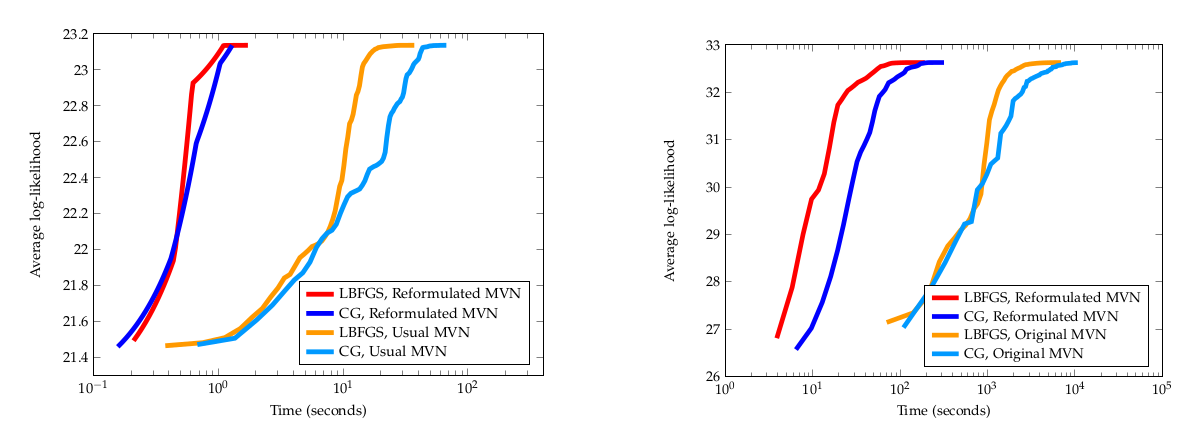

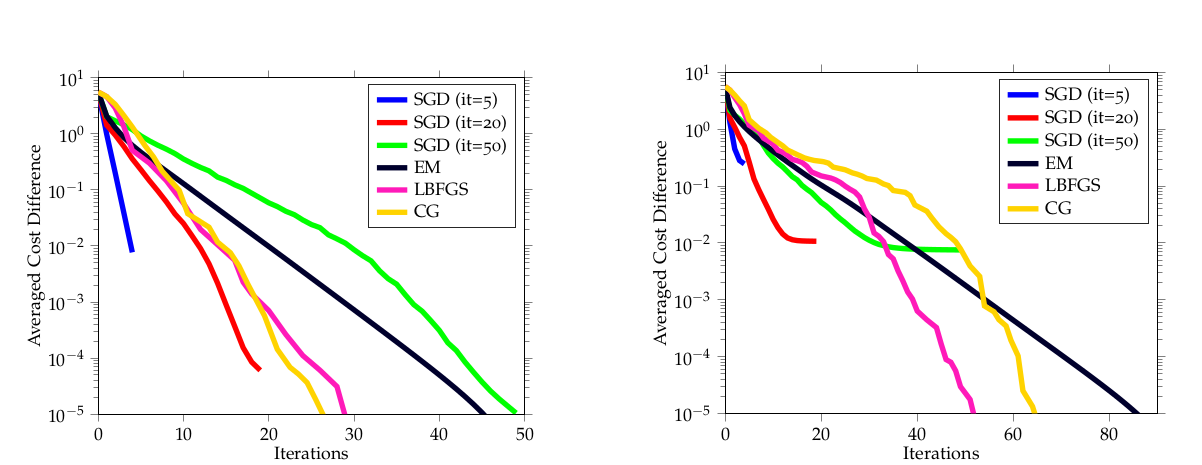

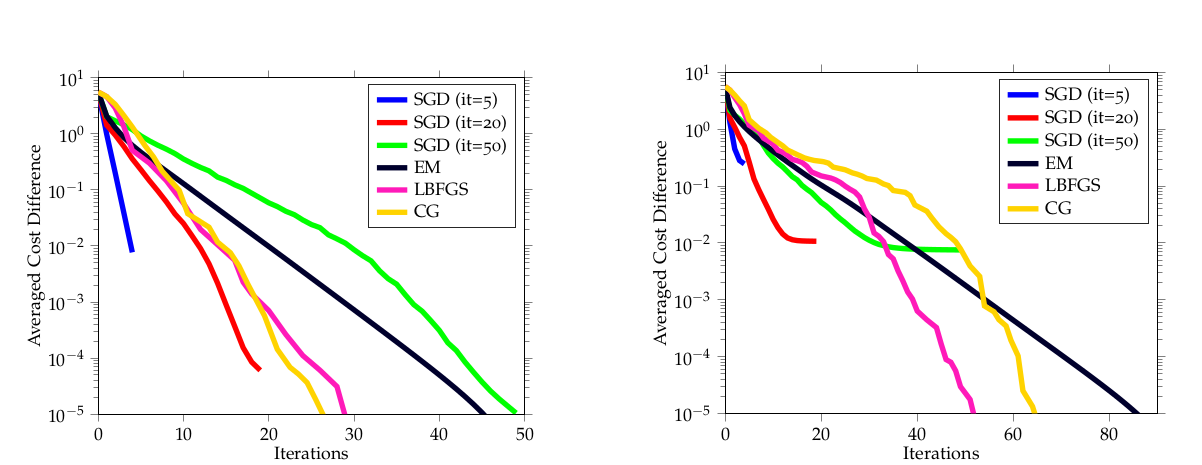

Plots!

The effect of the lifting on convergence

Plots!

EM vs Riemannian Opt

PS: Reformulation helps Cholesky as well, but this information disappeared from the final version

Remarks on What I Skipped

Step-size Line Search

Lifting with Regularization

Details on vector transport and 2nd order methods

Riemannian SGD

We want to minimize

Riemannian Stochastic Gradient Descent:

\(i_t\) a random index

Assumptions on \(f\):

Riemann Lip. Smooth

Unbiased Gradients

Bounded Variance
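In symbols, a standard finite-sum formulation consistent with the labels above. The manifold \(\mathcal{M}\), the retraction \(R\), and the component functions \(f_i\) are my notation and may differ from the paper's:

\[
\min_{x \in \mathcal{M}} \; f(x) = \frac{1}{n}\sum_{i=1}^{n} f_i(x),
\qquad
x_{t+1} = R_{x_t}\!\big(-\eta_t \operatorname{grad} f_{i_t}(x_t)\big),
\]

where \(i_t\) is drawn uniformly at random from \(\{1, \dotsc, n\}\), so that \(\operatorname{grad} f_{i_t}(x_t)\) is an unbiased estimate of \(\operatorname{grad} f(x_t)\).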

Riemannian SGD

Theorem If one of the following holds:

i) Bounded gradients:

ii) Outputs a uniformly random iterate

Then

\(\tau\) is unif. random if (ii)

\(\tau\) is best iter. if (i)

(No need for bdd var)

Does SGD work for GMM?

Do the conditions for SGD to converge hold for GMMs?

Theorem If \(\eta_t \leq 1\), then iterates stay in a compact set.

(For some reason they analyze SGD with a different retraction...)

Remark Objective function is not Riemannian Lip. smooth outside of a compact set

Fact Iterates stay in a compact set \(\implies\)

Bounded Gradients

\(\implies\)

Classical Lip. smoothness

\(\implies\)

Thm from Riemannian Geometry

Riemann Lip. smoothness

It works!

With a different retraction and at a slower rate...?