Efficient and Optimal Fixed-Time Regret with Two Experts

Research Proficiency Exam

Victor Sanches Portella

PhD Student in Computer Science - UBC

October, 2020

The Two-Experts' Problem

Prediction with Expert Advice

Player: probabilities 0.5, 0.1, 0.3, 0.1 over the \(n\) experts

Adversary: costs 1, 0, 0.5, 0.3 for the \(n\) experts

Player's loss: \(\langle p_t, \ell_t \rangle\), the expected cost under the player's probabilities

Goal: regret sublinear in \(T\) in the worst case
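As a worked instance of the numbers on this slide, the following computes the player's loss for one round and states the usual definition of regret. The symbols \(p_t\), \(\ell_t\), and \(\mathrm{Regret}(T)\) are notation assumed here, and pairing the probabilities with the costs in the order shown is also an assumption.

```latex
\[
  \langle p_t, \ell_t \rangle
  = 0.5 \cdot 1 + 0.1 \cdot 0 + 0.3 \cdot 0.5 + 0.1 \cdot 0.3
  = 0.68,
\]
\[
  \mathrm{Regret}(T)
  = \sum_{t=1}^{T} \langle p_t, \ell_t \rangle
  - \min_{i \in \{1, \dotsc, n\}} \sum_{t=1}^{T} \ell_t(i).
\]
```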

Known Results

Multiplicative Weights Update method:

Optimal for \(n,T \to \infty\) !
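A minimal sketch of Multiplicative Weights for a known horizon \(T\), assuming costs in \([0,1]\) and the standard step size \(\eta = \sqrt{8 \ln n / T}\), for which the textbook guarantee is regret at most \(\sqrt{(T/2) \ln n}\). The function name `mwu_regret` and the example cost sequence are hypothetical, chosen only for illustration.

```python
import math

def mwu_regret(costs, n):
    """Run Multiplicative Weights on cost vectors in [0, 1]^n and return the regret."""
    T = len(costs)
    eta = math.sqrt(8.0 * math.log(n) / T)  # step size tuned for a known horizon T
    weights = [1.0] * n
    totals = [0.0] * n                      # cumulative cost of each expert
    player_loss = 0.0
    for cost in costs:
        z = sum(weights)
        probs = [w / z for w in weights]    # player's probabilities this round
        player_loss += sum(q * c for q, c in zip(probs, cost))
        for i in range(n):
            totals[i] += cost[i]
            weights[i] *= math.exp(-eta * cost[i])
    return player_loss - min(totals)

# Hypothetical two-expert sequence: expert 0 pays on two rounds out of three.
costs = [(1.0, 0.0) if t % 3 else (0.0, 1.0) for t in range(300)]
regret = mwu_regret(costs, n=2)
bound = math.sqrt(300 / 2 * math.log(2))
print(regret, bound)
```

The bound holds for every cost sequence, so the printed regret should not exceed the printed bound.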

If \(n\) is fixed, we can do better

Minmax regret in some cases (player knows \(T\)!): \(n = 2\), \(n = 3\), \(n = 4\)

What if \(T\) is not known?

Minmax regret for \(n = 2\) [Harvey, Liaw, Perkins, Randhawa FOCS 2020]

They give an efficient algorithm!

Minmax regret for 2 experts:

Player knows \(T\) (fixed-time): [Cover '67], Dynamic Programming, \(O(T)\) time per round

Player doesn't know \(T\) (anytime): [Harvey, Liaw, Perkins, Randhawa FOCS 2020], Stochastic Calculus, \(O(1)\) time per round

[Greenstreet]

Our results:

A complete theoretical analysis of the fixed-time algorithm

The case of 2 Experts

Player knows \(T\) (fixed-time): minmax regret, \(O(T)\) time per round, dynamic programming [Cover '67]

Player doesn't know \(T\) (anytime): minmax regret, \(O(1)\) time per round, stochastic calculus and discretization techniques [Harvey et al. '20]

[Greenstreet]: \(O(1)\) time per round, ?

Our results:

An efficient and optimal algorithm for two experts

regret

Gaps and Cover's Algorithm

Binary costs

We consider only costs equal to 0 or 1 (no fractional costs!). This is enough for the worst case.

Cost vectors: (1, 0), (0, 1), (0, 0), (1, 1)

Equal costs are a "waste of time", so we do not consider those

Cover's algorithm strongly relies on these assumptions

Gap between experts

Thought experiment: how much probability mass to put on each expert?

Cumulative loss on round \(t\): (2, 2) in one case, (42, 42) in the other

\(\frac{1}{2}\) in both cases seems reasonable!

Takeaway: player's decision could depend on the gap between experts

Example: cumulative losses 42 (Lagging Expert) and 20 (Leading Expert), so Gap = |42 - 20| = 22

Cover's Dynamic Program

Path-independent player:

Choice on round \(t\) depends only on the gap \(g_{t-1}\) of round \(t-1\)

Choice doesn't depend on the specific past costs

Probability \(p(t, g_{t-1})\) on the Lagging expert, \(1 - p(t, g_{t-1})\) on the Leading expert

\(V_p[t,g]\): maximum regret-to-be-suffered on rounds \(t+1, \dotsc, T\) if gap at round \(t\) is \(g\)

Path-independent player \(\implies\) \(V_p[t,g]\) depends only on \(\ell_{t+1}, \dotsc, \ell_T\) and \(g_t, \dotsc, g_{T}\)

We can compute \(V_p\) backwards in time!

\(V_p[0,0]\): maximum regret of \(p\)

We then choose \(p^*\) that minimizes \(V_p[0,0]\), and write \(V^*[0,0] = V_{p^*}[0,0]\)

Regret and Player in terms of the Gap

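Path-independent player: on round \(t\), with gap \(g_{t-1}\) on round \(t-1\), put probability \(p(t, g_{t-1})\) on the Lagging expert and \(1 - p(t, g_{t-1})\) on the Leading expert

Choice doesn't depend on the specific past costs

A formula for the regret: if \(p(t, 0) = \frac{1}{2}\) for all \(t\), then

\[ \mathrm{Regret}(T) = \sum_{t=1}^{T} p(t, g_{t-1}) \, (g_t - g_{t-1}), \]

where \(g_t\) is the gap on round \(t\)

A discrete analogue of a Riemann-Stieltjes integral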
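The sketch below checks the gap-based view of regret numerically. It assumes the identity \(\mathrm{Regret}(T) = \sum_t p(t, g_{t-1})(g_t - g_{t-1})\), where \(p(t, g)\) is the probability on the lagging expert and \(p(t, 0) = 1/2\). The function `regret_two_ways` and the example player `p_example` are hypothetical names used only for illustration.

```python
def regret_two_ways(costs, p):
    """Return (regret from losses, regret from the gap sum) for a path-independent player.

    costs: list of (c0, c1) with exactly one expert paying 1 each round.
    p(t, g): probability on the lagging expert; p(t, 0) must be 1/2.
    """
    cum = [0, 0]          # cumulative losses of the two experts
    player_loss = 0.0
    gap_sum = 0.0
    for t, c in enumerate(costs, start=1):
        g_prev = abs(cum[0] - cum[1])
        lag = 0 if cum[0] > cum[1] else 1   # on a tie the choice is irrelevant since p = 1/2
        q = p(t, g_prev)
        probs = [0.0, 0.0]
        probs[lag], probs[1 - lag] = q, 1.0 - q
        player_loss += probs[0] * c[0] + probs[1] * c[1]
        cum[0] += c[0]
        cum[1] += c[1]
        gap_sum += q * (abs(cum[0] - cum[1]) - g_prev)
    return player_loss - min(cum), gap_sum

def p_example(t, g):
    # Hypothetical player: 1/2 at gap zero, less mass on the lagging expert as the gap grows.
    return 0.5 / (g + 1)

costs = [(1, 0), (1, 0), (0, 1), (0, 1), (0, 1)]
direct, via_gaps = regret_two_ways(costs, p_example)
print(direct, via_gaps)
```

Both numbers should agree up to floating-point error for any sequence of unequal binary costs, which is the sense in which the regret is a discrete integral of \(p\) against the gap process.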

A Dynamic Programming View

Maximum regret-to-be-suffered on rounds \(t+1, \dotsc, T\) when gap on round \(t\) is \(g\)

Path-independent player \(\implies\) \(V_p[t,g]\) depends only on \(\ell_{t+1}, \dotsc, \ell_T\) and \(g_t, \dotsc, g_{T}\)

Regret suffered on round \(t+1\)

A Dynamic Programming View

Optimal player

For \(g > 0\)

For \(g = 0\)

Optimal regret (\(V^* = V_{p^*}\))

For \(g > 0\)

For \(g = 0\)
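A minimal sketch of the backward computation, under an assumed form of the recursion: \(V^*[T, g] = 0\); for \(g > 0\), \(p^*(t, g) = \frac{1}{2}(V^*[t, g-1] - V^*[t, g+1])\) and \(V^*[t-1, g] = \frac{1}{2}(V^*[t, g-1] + V^*[t, g+1])\); for \(g = 0\), \(p^*(t, 0) = \frac{1}{2}\) and \(V^*[t-1, 0] = \frac{1}{2} + V^*[t, 1]\). These follow from equalizing the two branches of the adversary's choice, but they are a reconstruction rather than a quotation, and the function name `cover_tables` is hypothetical.

```python
import math

def cover_tables(T):
    """Backward induction for V*[t][g] and p*[t][g] under the assumed recursion."""
    # V[t][g]: maximum regret still to be suffered after round t when the gap is g.
    V = [[0.0] * (T + 2) for _ in range(T + 1)]   # V[T][g] = 0: no rounds left
    # p[t][g]: probability on the lagging expert on round t when the previous gap is g.
    p = [[0.0] * (T + 1) for _ in range(T + 1)]
    for t in range(T, 0, -1):
        for g in range(t):                        # the gap after t-1 rounds is at most t-1
            if g == 0:
                p[t][0] = 0.5
                V[t - 1][0] = 0.5 + V[t][1]
            else:
                p[t][g] = 0.5 * (V[t][g - 1] - V[t][g + 1])
                V[t - 1][g] = 0.5 * (V[t][g - 1] + V[t][g + 1])
    return V, p

V, p = cover_tables(400)
print(V[0][0], math.sqrt(400 / (2 * math.pi)))
```

The table has \(O(T^2)\) entries, which is the source of the \(O(T)\) amortized time per round for the dynamic programming approach. The two printed numbers should be close, in line with the \(\sqrt{T/2\pi}\) scale of the optimal regret.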

Connection to Random Walks

Maximum regret of \(p^*\)

Theorem

Expected number of zeros of a symmetric random walk of length \(T\)

Theorem

For any player, if the gaps are random and distributed like a reflected symmetric random walk,

Expected number of zeros of a symmetric random walk of length \(T - 1\)
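To get a feel for the quantity in these theorems, the sketch below computes the expected number of zeros of a symmetric random walk exactly, using \(P(S_s = 0) = \binom{s}{s/2} 2^{-s}\) for even \(s\). Counting time 0 as a zero and the factor \(\frac{1}{2}\) in the comparison are assumptions made here for illustration, and `expected_zeros` is a hypothetical helper name.

```python
import math

def expected_zeros(length):
    """Expected number of times s in {0, ..., length} with S_s = 0 for a symmetric random walk."""
    # P(S_s = 0) = C(s, s/2) / 2^s for even s, and 0 for odd s.
    return sum(math.comb(s, s // 2) / 2 ** s for s in range(0, length + 1, 2))

T = 1000
half_zeros = 0.5 * expected_zeros(T - 1)
print(half_zeros, math.sqrt(T / (2 * math.pi)))
```

For large \(T\) the two printed values should nearly coincide, which matches the \(\sqrt{T/2\pi}\) scale that appears later in the talk.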

Continuous Regret

A Probabilistic View of Regret Bounds

Formula for the regret based on the gaps

Discrete stochastic integral of \(p\) with respect to the reflected RW \(g\)

Moving to continuous time:

Random walk \(\longrightarrow\) Brownian Motion

Insight:

Regret bound \(\equiv\) almost sure bound on the integral

Gaps are on the support of a reflected random walk

Reflected Brownian motion

Conditions on the continuous player \(p\)

Continuous on \([0,T) \times \mathbb{R}\)

\(p(t, 0) = \frac{1}{2}\) for all \(t \geq 0\)

Stochastic Integrals and Itô's Formula

How to work with stochastic integrals?

Itô's Formula:

Different from classic FTC!

\(\overset{*}{\Delta} f(t, g) = 0\) everywhere

ContRegret doesn't depend on the path of \(B_t\)
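For reference, a sketch of the standard statement of Itô's formula for a smooth \(f(t, g)\) and a standard Brownian motion \(B_t\). Reading \(\overset{*}{\Delta}\) as the backwards heat operator \(\partial_t + \frac{1}{2} \partial_{gg}\) is an assumption about the slide's notation, and the reflected case \(|B_t|\) needs additional care at \(g = 0\) that is not shown here.

```latex
\[
  f(T, B_T) - f(0, B_0)
  = \int_0^T \partial_g f(t, B_t) \, \mathrm{d}B_t
  + \int_0^T \Big( \partial_t f + \tfrac{1}{2} \partial_{gg} f \Big)(t, B_t) \, \mathrm{d}t .
\]
% If \partial_t f + (1/2) \partial_{gg} f = 0 everywhere, the last integral vanishes and
\[
  \int_0^T \partial_g f(t, B_t) \, \mathrm{d}B_t = f(T, B_T) - f(0, B_0),
\]
% so the stochastic integral depends only on the endpoints, not on the path of B_t.
```

The extra \(\frac{1}{2} \partial_{gg} f\) term is what distinguishes this from the classical fundamental theorem of calculus.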

Backwards Heat Equation

Goal:

Find a "potential function" \(R\) such that

(1) \(p = \partial_g R\) is a valid continuous player

(2) \(R\) satisfies the Backwards Heat Equation

A Solution Inspired by Cover's Algorithm

For Cover's algorithm, we can show

Lagging expert finishes leading

Gaps ~ Reflected RW

Law of Large Numbers:

Itô's Formula \(\implies\)

\(Q\) satisfies BHE

But we wanted a potential \(R\) satisfying BHE

Calculus trick: \(R(t,g)\) such that

\(R\) satisfies BHE

\(\partial_g R = Q\)

\(R(t,g) \leq \sqrt{T/2\pi}\)
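A numerical sanity check of the claim that \(Q\) satisfies the Backwards Heat Equation. The closed form used here, \(Q(t, g) = P(N(0, T - t) > g)\), is an assumption suggested by the random-walk picture and not a formula taken from the slides. The helper names are hypothetical.

```python
import math

def Q(t, g, T):
    """Assumed form: P(N(0, T - t) > g), the Gaussian tail at g with variance T - t."""
    return 0.5 * math.erfc(g / math.sqrt(2.0 * (T - t)))

def bhe_residual(f, t, g, T, h=1e-3):
    """Central finite-difference estimate of d/dt f + (1/2) d^2/dg^2 f at (t, g)."""
    dt = (f(t + h, g, T) - f(t - h, g, T)) / (2 * h)
    dgg = (f(t, g + h, T) - 2 * f(t, g, T) + f(t, g - h, T)) / h ** 2
    return dt + 0.5 * dgg

residual = bhe_residual(Q, t=3.0, g=1.5, T=10.0)
print(residual, Q(3.0, 0.0, 10.0))
```

The residual should be zero up to discretization error, and \(Q(t, 0) = \frac{1}{2}\) is consistent with a player that splits evenly when the gap is zero.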

Discretization

Discrete Derivatives

\(V^*\) satisfies the "discrete" Backwards Heat Equation!
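One way to see this, assuming backward differences in \(t\) and central second differences in \(g\) (a natural choice of discrete derivatives, not necessarily the one on the slide), and assuming the averaging recursion \(V^*[t-1, g] = \frac{1}{2}(V^*[t, g-1] + V^*[t, g+1])\) for \(g > 0\):

```latex
\[
  f_t(t, g) = f(t, g) - f(t-1, g),
  \qquad
  f_{gg}(t, g) = f(t, g+1) - 2 f(t, g) + f(t, g-1).
\]
\[
  f_t(t, g) + \tfrac{1}{2} f_{gg}(t, g) = 0
  \iff
  f(t-1, g) = \tfrac{1}{2} \big( f(t, g-1) + f(t, g+1) \big).
\]
```

Under these assumptions, the recursion for \(V^*\) at positive gaps is exactly the discrete equation on the right.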

Discretized player:

Bound regret with a discrete analogue of Itô's Formula

Hopefully, \(R\) satisfies the discrete BHE

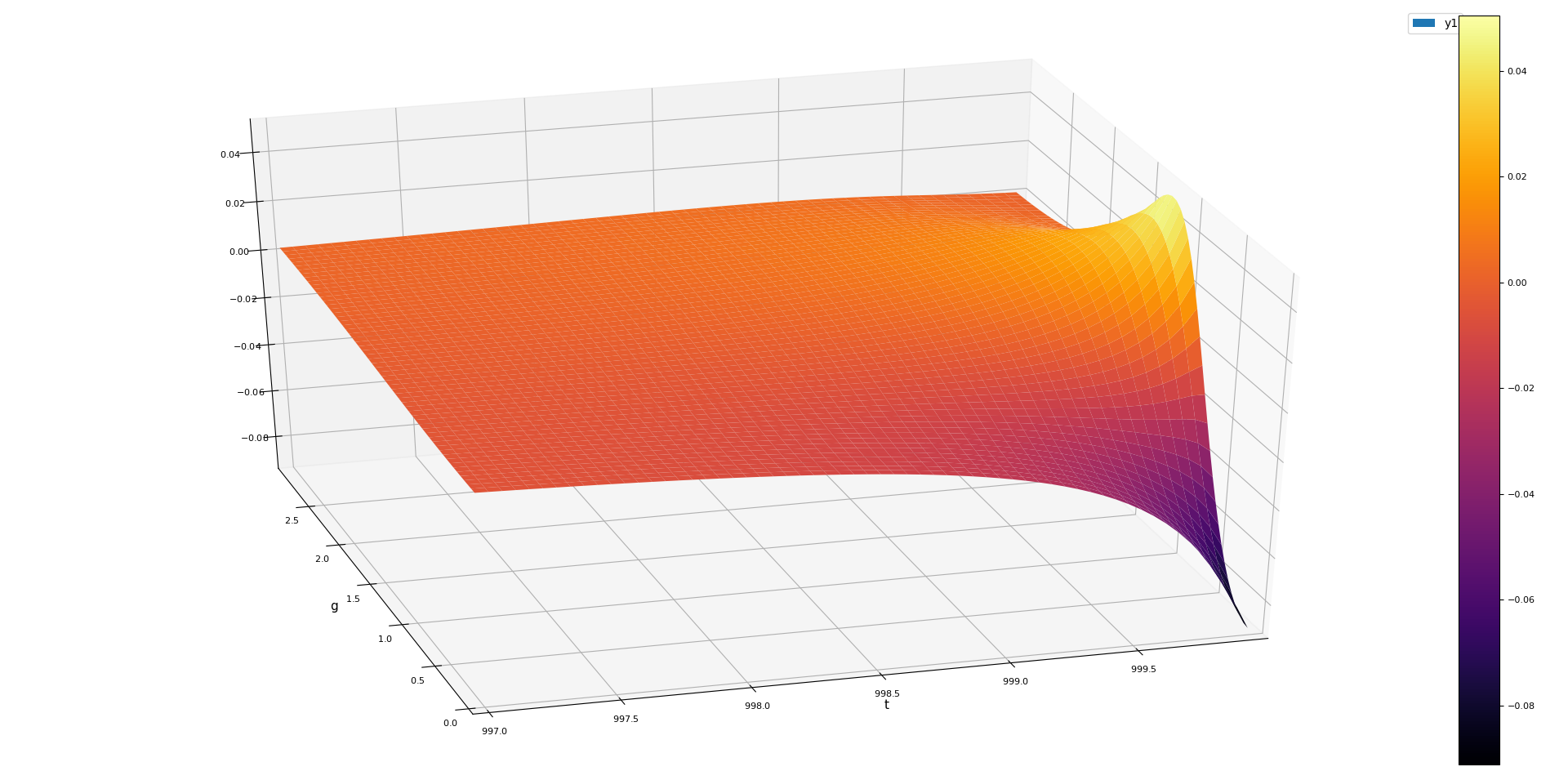

Bounding the Discretization Error

In the work of Harvey et al., they had

In this fixed-time solution, we are not as lucky

Negative discretization error!

Bounding the Discretization Error

Main idea

\(R\) satisfies the continuous BHE

Approximation error of the derivatives

Lemma
