Proposal:

Use off-the-shelf image models to predict the "educational value" of chest x-rays and route high educational value studies to residents

Mentored by the Walter Witschey Lab

Research Year Project Goals:

Explore and document the capabilities of artificial intelligence in radiology

Explore how humans interact with artificial intelligence in a deployed scenario

Why?

Using off-the-shelf image models to predict the "educational value" of chest x-rays, and routing high educational value studies to residents, serves both goals:

- Explore and document the capabilities of artificial intelligence in radiology

- Explore how humans interact with artificial intelligence in a deployed scenario

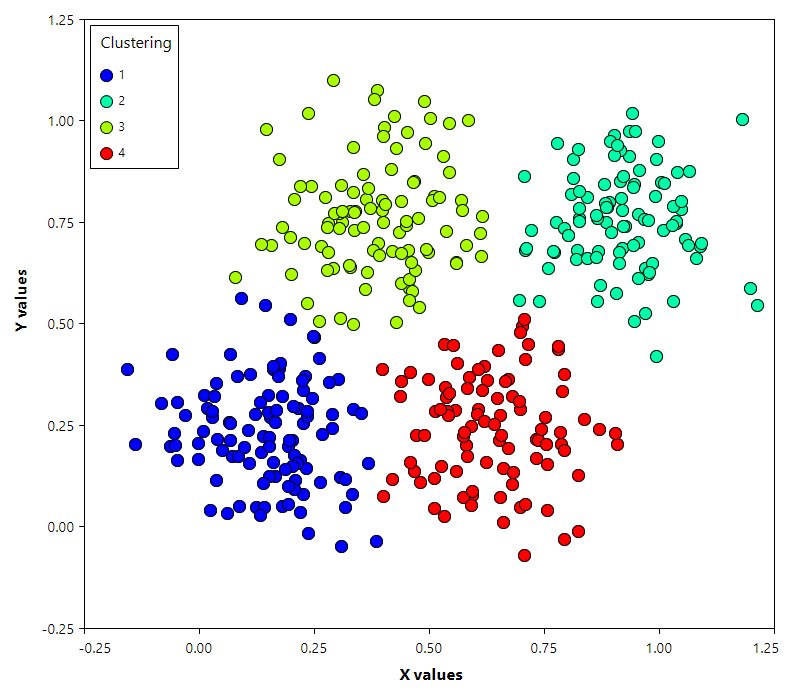

Stage 1: Retrospective analysis of PMBB data to determine the capabilities of open models

Stage 2: Depending on the capabilities of the open models, work with the chest department and AI Insights Infra to deploy the routing platform

Stage 3: Create HCI/HAII experiments making use of the platform

Stage 1: Model Retrospective Analysis

How to define "educational value"

- By list of important diagnoses

- By list of rare diagnoses

- By list of diagnoses, individualized to each resident, that the resident has not yet seen

- By diagnosis/finding, individualized to each resident, that the resident has missed in the past
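The four candidate definitions above can be sketched as simple set checks. This is only an illustration: the diagnosis lists, the `ResidentHistory` record, and the `educational_criteria` function are hypothetical names, and the example diagnoses are placeholders rather than the lists the project would actually use.

```python
from dataclasses import dataclass, field

# Hypothetical placeholder lists; the real lists are not defined in this proposal.
IMPORTANT_DIAGNOSES = {"pneumothorax"}
RARE_DIAGNOSES = {"situs inversus"}


@dataclass
class ResidentHistory:
    """Hypothetical per-resident record of diagnoses seen and missed so far."""
    seen: set = field(default_factory=set)
    missed: set = field(default_factory=set)


def educational_criteria(study_diagnoses, history):
    """Return the names of the candidate criteria that a study satisfies."""
    dx = {d.lower() for d in study_diagnoses}
    matched = []
    if dx & IMPORTANT_DIAGNOSES:      # on the list of important diagnoses
        matched.append("important")
    if dx & RARE_DIAGNOSES:           # on the list of rare diagnoses
        matched.append("rare")
    if dx - history.seen:             # at least one diagnosis the resident has not seen
        matched.append("unseen_by_resident")
    if dx & history.missed:           # a diagnosis the resident missed in the past
        matched.append("previously_missed")
    return matched


history = ResidentHistory(seen={"pneumothorax", "pneumonia"}, missed={"pneumothorax"})
print(educational_criteria(["Pneumothorax", "Situs inversus"], history))
```

Returning the matched criteria, rather than a single score, leaves open how the definitions would be weighted against each other.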

Take advantage of our Macro-X system to filter for studies marked "Great Call", "Major Change", "Minor Change", or "Notification"

| Class | Last Year | All Time | PMBB |
|---|---|---|---|
| All | 583,468 | 6,877,112 | ~2K |
| Notify | 6,044 | 86,901 | 1,693 |
| Major | 204 | 1,409 | 221 |
| Minor | 6,337 | 28,754 | 2,548 |
| Great | 228 | 1,918 | 347 |
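To make the class imbalance in these counts concrete, the short sketch below computes each class as a percentage of all studies, using only the "Last Year" and "All Time" columns. The PMBB column is left out because its total is only given approximately. The variable and function names are illustrative.

```python
# Counts copied from the "Last Year" and "All Time" columns of the table.
ALL_STUDIES = {"last_year": 583_468, "all_time": 6_877_112}
CLASS_COUNTS = {
    "Notify": {"last_year": 6_044, "all_time": 86_901},
    "Major": {"last_year": 204, "all_time": 1_409},
    "Minor": {"last_year": 6_337, "all_time": 28_754},
    "Great": {"last_year": 228, "all_time": 1_918},
}


def prevalence_percent(count, total):
    """Share of all studies that carry a given class, in percent."""
    return 100.0 * count / total


for name, counts in CLASS_COUNTS.items():
    for period, total in ALL_STUDIES.items():
        pct = prevalence_percent(counts[period], total)
        print(f"{name:<7}{period:<10}{pct:.4f}%")
```

"Major" and "Great" each account for well under 0.1% of studies in both periods, so any evaluation of a model on these classes would need to account for heavy imbalance.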

| Model | Output | License |
|---|---|---|
| RAD-DINO | Embeddings | MIT |
| MAIRA-2 | VLM | MSRLA + disclaimer |
| MedSigLIP | Embeddings | HAI-DEF |
| MedGemma-27B | VLM | HAI-DEF |
| ARK+ | Embeddings | ASU academic |

[Diagram labels: Model, AI Insights Infra, Router, AI, Resident, Extender]

$0.493 per hour for an NC4as T4 v3 (16 GB VRAM)

- Embedding models

  - RAD-DINO

  - MedSigLIP

  - ARK+

- 4B VLMs

  - MedGemma-4B

$3.505 per hour for an NV36ads A10 v5 (24 GB VRAM)

- 7B VLMs

  - MAIRA-2

Note: 27B VLMs (MedGemma-27B) would require 80 GB of VRAM and are therefore too expensive for deployment.
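The hourly prices and VRAM tiers above can be turned into a rough budget and sizing check. The sketch below is a back-of-the-envelope estimate under assumptions that are not from the slides: an instance running continuously for a 30-day month, 16-bit weights (2 bytes per parameter), and a 20% memory overhead on top of the weights. All function names are illustrative.

```python
# Hourly prices and VRAM sizes as quoted above.
HOURLY_RATE_USD = {"NC4as T4 v3": 0.493, "NV36ads A10 v5": 3.505}
VRAM_GB = {"NC4as T4 v3": 16, "NV36ads A10 v5": 24}

HOURS_PER_MONTH = 24 * 30  # assumption: always-on instance, 30-day month


def monthly_cost_usd(instance):
    """Cost of running one instance continuously for a 30-day month."""
    return HOURLY_RATE_USD[instance] * HOURS_PER_MONTH


def estimated_vram_gb(params_billion, bytes_per_param=2, overhead=1.2):
    """Rough VRAM need: weight size times an assumed 20% overhead."""
    return params_billion * bytes_per_param * overhead


def smallest_fitting_instance(params_billion):
    """Smallest listed instance whose VRAM covers the estimate, else None."""
    need = estimated_vram_gb(params_billion)
    for name in sorted(VRAM_GB, key=VRAM_GB.get):
        if VRAM_GB[name] >= need:
            return name
    return None


for name in HOURLY_RATE_USD:
    print(f"{name}: ${monthly_cost_usd(name):,.2f} per month")

for label, size in [("MedGemma-4B", 4), ("MAIRA-2 (7B)", 7), ("MedGemma-27B", 27)]:
    need = estimated_vram_gb(size)
    print(f"{label}: ~{need:.1f} GB -> {smallest_fitting_instance(size)}")
```

Under these assumptions the estimate reproduces the grouping above: a 4B model fits in 16 GB, a 7B model needs the 24 GB tier, and a 27B model fits neither listed instance.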