Node Core #2

Async node

Plan

- Event loop

- setImmediate(fn) vs setTimeout(fn, 0)

- process.nextTick()

- macrotasks vs microtasks

- Worker threads and child processes

Event loop!

setTimeout(cb, 0)

When will the cb be executed?

Event-loop phases

Phases Overview

timers: this phase executes callbacks scheduled by setTimeout() and setInterval().

pending callbacks: executes I/O callbacks deferred to the next loop iteration.

idle, prepare: only used internally.

poll: retrieves new I/O events and executes I/O-related callbacks.

check: setImmediate() callbacks are invoked here.

close callbacks: some close callbacks, e.g. socket.on('close', ...).

Microtasks

setTimeout(() => console.log("timeout"));

Promise.resolve()

.then(() => console.log("promise"));

console.log("main");

Explain this!

The event loop picks up the next macrotask only after every task in the microtask queue has completed.
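As a rough illustration of that rule, the sketch below records the order in which a synchronous statement, a promise callback, a microtask queued from inside another microtask, and a timer run. The `order` array is only there for the demonstration.

```javascript
const order = [];

// Macrotask: runs in the timers phase of a later loop iteration.
setTimeout(() => order.push('timeout'), 0);

// Microtask: runs as soon as the current synchronous code finishes.
Promise.resolve().then(() => {
  order.push('promise');
  // A microtask queued by a microtask still runs before any macrotask.
  queueMicrotask(() => order.push('nested microtask'));
});

order.push('main');

// Print the recorded order once everything above has run.
setTimeout(() => console.log(order.join(' -> ')), 10);
// main -> promise -> nested microtask -> timeout
```

The nested microtask shows that the queue is drained completely, including entries added while it is being drained, before the timer callback gets a turn.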

function logA() { console.log('A') };

function logB() { console.log('B') };

function logC() { console.log('C') };

function logD() { console.log('D') };

function logE() { console.log('E') };

function logF() { console.log('F') };

function logG() { console.log('G') };

function logH() { console.log('H') };

function logI() { console.log('I') };

function logJ() { console.log('J') };

logA();

setTimeout(logG, 0);

Promise.resolve()

.then(logC)

.then(setTimeout(logH))

.then(logD)

.then(logE)

.then(logF);

setTimeout(logI);

setTimeout(logJ);

logB();

queueMicrotask

const messageQueue = [];

let sendMessage = message => {

messageQueue.push(message);

if (messageQueue.length === 1) {

queueMicrotask(() => {

const json = JSON.stringify(messageQueue);

messageQueue.length = 0;

fetch("url-of-receiver", { method: "POST", body: json }); // the second argument of fetch() is an options object

});

}

};

console.log(1)

setTimeout(() => {

queueMicrotask(() => {

console.log(2)

});

console.log(3)

});

Promise.resolve().then(() => console.log(4))

queueMicrotask(() => {

console.log(5)

queueMicrotask(() => {

console.log(6)

});

});

console.log(7)

Just remember: sync tasks → microtasks → macrotasks

What will be the output of this snippet?

When to use microtasks

Generally, it's about capturing or checking results, or performing cleanup, after the main body of a JavaScript execution context exits, but before any event handlers, timeouts and intervals, or other callbacks are processed.
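One common case is keeping a callback's timing consistent when the result is sometimes available immediately. The sketch below is a minimal illustration, not a real API: `getData`, the `cache` map, and the `setTimeout` standing in for an asynchronous lookup are all hypothetical.

```javascript
const cache = new Map();

// Hypothetical loader: the setTimeout stands in for a real asynchronous lookup.
function getData(key, callback) {
  if (cache.has(key)) {
    // Cache hit: defer with a microtask so the callback never runs
    // before getData() itself has returned.
    queueMicrotask(() => callback(cache.get(key)));
  } else {
    setTimeout(() => {
      const value = key.toUpperCase();
      cache.set(key, value);
      callback(value);
    }, 10);
  }
}

const log = [];
getData('a', () => {
  log.push('first result');
  // This second call hits the cache, yet its callback still runs later.
  getData('a', value => log.push(`cached result: ${value}`));
  log.push('after second call');
});

setTimeout(() => console.log(log), 50);
// [ 'first result', 'after second call', 'cached result: A' ]
```

Without `queueMicrotask`, the cached branch would call back synchronously and the caller would see a different ordering depending on whether the value was cached.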

setImmediate vs. nextTick

setImmediate()

setImmediate(callback[, ...args]) takes a callback and adds it to the event queue (specifically, the immediate queue, which is processed in the check phase).

console.log('Start')

setImmediate(() => console.log('Queued using setImmediate'))

console.log('End')

setTimeout(() => {

console.log('timeout');

}, 0);

setImmediate(() => {

console.log('immediate');

});

What will be the output of this snippet?
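When both calls are made from the main module, the order of the two callbacks is not guaranteed, because it depends on how quickly the process reaches the timers phase. Inside an I/O callback the order is fixed, since the check phase comes right after the poll phase. A small sketch of that second case, where `fs.stat('.')` is used only as a convenient way to get into an I/O callback:

```javascript
const fs = require('fs');

const order = [];

// The callback of fs.stat runs in the poll phase.
fs.stat('.', () => {
  // Scheduled from the poll phase: the check phase comes next,
  // so the immediate runs before the loop gets back to the timers phase.
  setTimeout(() => order.push('timeout'), 0);
  setImmediate(() => order.push('immediate'));
});

setTimeout(() => console.log(order), 50);
// [ 'immediate', 'timeout' ]
```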

process.nextTick()

process.nextTick(callback[, ...args]) also takes a callback and optional args, like setImmediate(). But instead of the "immediate queue", the callbacks are queued in the "next tick queue".

console.log('Start')

process.nextTick(() => console.log('Queued using process.nextTick'))

console.log('End')

It allows you to "starve" your I/O by making recursive process.nextTick() calls, which prevent the event loop from reaching the poll phase.
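A bounded version of that recursion makes the effect visible without hanging the process. In this sketch the `remaining` counter and the `order` array are only for the demonstration: the immediate is scheduled first, yet it has to wait until the whole chain of nextTick callbacks has finished.

```javascript
const order = [];

// Scheduled first, but it cannot run until the next tick queue is empty.
setImmediate(() => order.push('immediate'));

let remaining = 3;
function tick() {
  order.push(`tick ${remaining}`);
  remaining -= 1;
  // Each callback re-queues itself; with no limit the loop would never advance.
  if (remaining > 0) process.nextTick(tick);
}
process.nextTick(tick);

setTimeout(() => console.log(order), 10);
// [ 'tick 3', 'tick 2', 'tick 1', 'immediate' ]
```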

Why use process.nextTick()?

There are two main reasons:

- Allow users to handle errors, clean up any resources that are no longer needed, or perhaps try the request again before the event loop continues.

- At times it's necessary to allow a callback to run after the call stack has unwound but before the event loop continues.
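The second reason can be sketched with an event emitter that wants to emit from its constructor. The class name `MyEmitter` and the `received` array are made up for this illustration. Emitting synchronously in the constructor would happen before the caller has had a chance to attach a listener, so the emit is deferred with process.nextTick().

```javascript
const EventEmitter = require('events');

class MyEmitter extends EventEmitter {
  constructor() {
    super();
    // Defer the emit until the current call stack has unwound,
    // so code that runs right after `new MyEmitter()` can subscribe first.
    process.nextTick(() => this.emit('event'));
  }
}

const received = [];
const emitter = new MyEmitter();
emitter.on('event', () => received.push('event'));

setImmediate(() => console.log(received));
// [ 'event' ]
```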

Promise.resolve().then(() => { console.log('1'); });

process.nextTick(() => {

console.log('2');

process.nextTick(() => {

console.log('3');

process.nextTick(() => {

console.log('4');

process.nextTick(() => {

console.log('5');

})

})

})

})

Unlike process.nextTick(), recursive calls to setImmediate() won't block the event loop.

let count = 0

const cb = () => {

console.log(`Processing setImmediate cb ${++count}`)

setImmediate(cb)

}

setImmediate(cb)

setTimeout(() => console.log('setTimeout executed'), 100)

console.log('Start')

We recommend developers use setImmediate() in all cases because it's easier to reason about (and it leads to code that's compatible with a wider variety of environments, like browser JS).

Always use setImmediate.
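Because each setImmediate() callback runs in its own loop iteration, it can be used to split long work into chunks and let timers and I/O run in between. The following is a minimal sketch under that assumption; `sumInChunks`, its parameters, and the chunk size are hypothetical names chosen for the example.

```javascript
// Sums an array in slices, yielding to the event loop between slices.
function sumInChunks(numbers, chunkSize, done) {
  let index = 0;
  let total = 0;

  function processChunk() {
    const end = Math.min(index + chunkSize, numbers.length);
    for (; index < end; index++) {
      total += numbers[index];
    }
    if (index < numbers.length) {
      // More work left: continue in the next check phase.
      setImmediate(processChunk);
    } else {
      done(total);
    }
  }

  processChunk();
}

const numbers = Array.from({ length: 1000 }, (_, i) => i + 1);
let result;
sumInChunks(numbers, 100, total => {
  result = total;
  console.log(`sum: ${total}`); // sum: 500500
});

// The work spans several loop iterations, so nothing is available yet.
const resultRightAfterCall = result;
```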

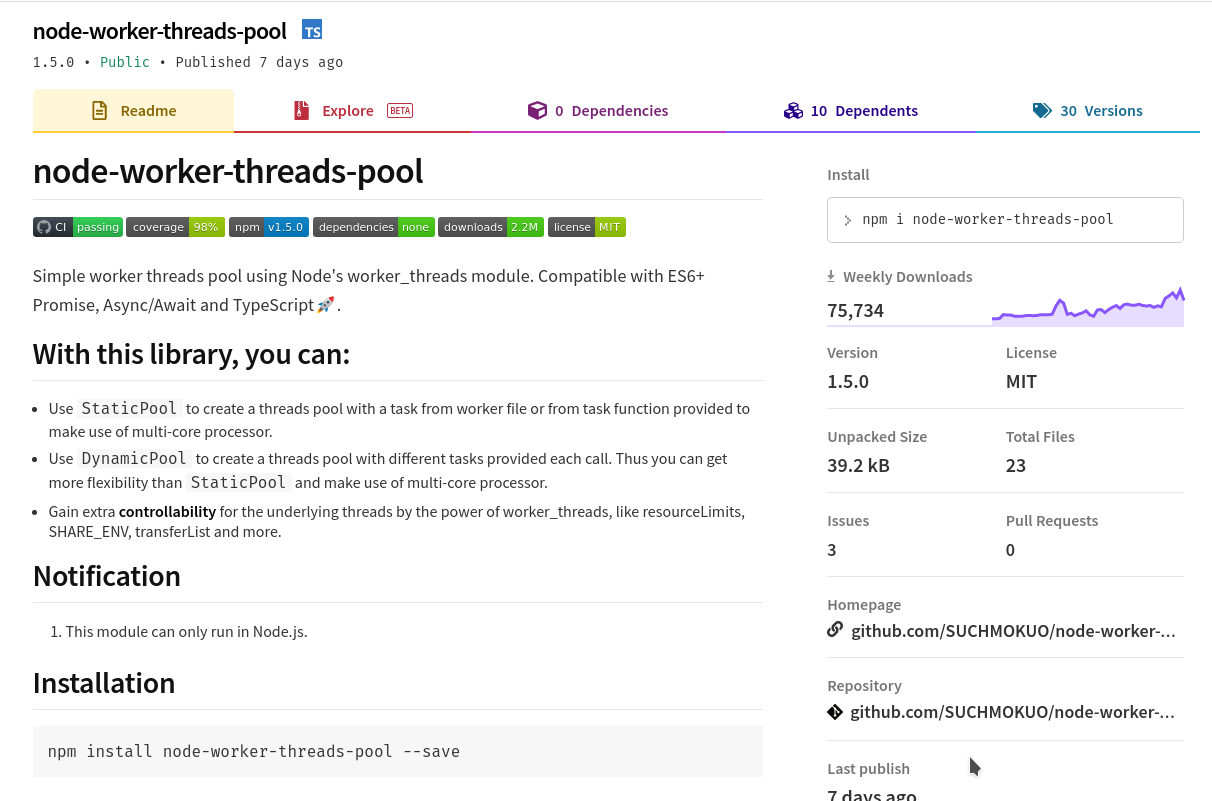

Worker threads and child processes

If we run a heavy CPU-bound operation, such as the Fibonacci calculation below, inside a web application, Node's single thread is blocked and the web server cannot respond to any other request because it is busy calculating the result.

const express = require("express")

const app = express()

app.get("/getfibonacci", (req, res) => {

const startTime = new Date()

const result = fibonacci(parseInt(req.query.number)) //parseInt is for converting string to number

const endTime = new Date()

res.json({

number: parseInt(req.query.number),

fibonacci: result,

time: endTime.getTime() - startTime.getTime() + "ms",

})

})

const fibonacci = n => {

if (n <= 1) {

return 1

}

return fibonacci(n - 1) + fibonacci(n - 2)

}

app.listen(3000, () => console.log("listening on port 3000"))

Wait, can't Promises solve this problem?
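In short, no: a Promise changes when a callback is scheduled, not which thread does the work. The sketch below reuses the recursive fibonacci from the example above with a small input and records the order of events; the `events` array is only for illustration. The executor runs synchronously on the main thread, so the timer cannot fire until the calculation is over.

```javascript
const fibonacci = n => (n <= 1 ? 1 : fibonacci(n - 1) + fibonacci(n - 2));

const events = [];

setTimeout(() => events.push('timer'), 0);

const promise = new Promise(resolve => {
  // The executor runs immediately, on the same thread as everything else.
  events.push('executor start');
  resolve(fibonacci(25));
  events.push('executor end');
});

events.push('after promise created');
promise.then(() => events.push('then'));

setTimeout(() => console.log(events), 20);
// [ 'executor start', 'executor end', 'after promise created', 'then', 'timer' ]
```

With a larger input the timer would simply be delayed for as long as the calculation takes, which is why the heavy work has to move to another process or thread.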

Child process

const express = require("express")

const app = express()

const { fork } = require("child_process")

app.get("/isprime", (req, res) => {

const childProcess = fork("./forkedchild.js") //the first argument to fork() is the name of the js file to be run by the child process

childProcess.send({ number: parseInt(req.query.number) }) //send method is used to send message to child process through IPC

const startTime = new Date()

childProcess.on("message", message => {

//on("message") method is used to listen for messages sent by the child process

const endTime = new Date()

res.json({

...message,

time: endTime.getTime() - startTime.getTime() + "ms",

})

})

})

// forkedchild.js (the file passed to fork() above)

process.on("message", message => {

//child process is listening for messages from the parent process

const result = isPrime(message.number)

process.send(result, () => process.exit()) // exit once the message has been sent, so the child does not linger after its work is done

})

function isPrime(number) {

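let isPrime = number > 1 // 0, 1 and negative numbers are not prime

for (let i = 2; i < number; i++) { // start at 2 so that even numbers are rejected too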

if (number % i === 0) {

isPrime = false

break

}

}

return {

number: number,

isPrime: isPrime,

}

}

Worker threads

const {

Worker, isMainThread, parentPort, workerData

} = require('worker_threads');

if (isMainThread) {

module.exports = function parseJSAsync(script) {

return new Promise((resolve, reject) => {

const worker = new Worker(__filename, {

workerData: script

});

worker.on('message', resolve);

worker.on('error', reject);

worker.on('exit', (code) => {

if (code !== 0)

reject(new Error(`Worker stopped with exit code ${code}`));

});

});

};

} else {

const { parse } = require('some-js-parsing-library');

const script = workerData;

parentPort.postMessage(parse(script));

}

import { parentPort } from 'worker_threads';

parentPort.on('message', () => {

const numberOfElements = 100;

const sharedBuffer = new SharedArrayBuffer(Int32Array.BYTES_PER_ELEMENT * numberOfElements);

const arr = new Int32Array(sharedBuffer);

for (let i = 0; i < numberOfElements; i += 1) {

arr[i] = Math.round(Math.random() * 30);

}

parentPort.postMessage({ arr });

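});

For memory to be shared rather than copied, a SharedArrayBuffer (or a typed array backed by one) must be sent to the other thread as the data argument or inside the data argument. A plain ArrayBuffer is copied by default; it can be moved through the transfer list, but it then becomes unusable in the sending thread.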

SharedArrayBuffer
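A minimal sketch of both sides in one file, assuming an inline worker created with the `eval: true` option so the example stays self-contained. The buffer size and the values written are arbitrary. The main thread creates the buffer, the worker writes into it, and the main thread reads the values from its own view without receiving them in a message.

```javascript
const { Worker } = require('worker_threads');

// Memory visible to both threads.
const shared = new SharedArrayBuffer(Int32Array.BYTES_PER_ELEMENT * 4);
const view = new Int32Array(shared);

// Worker code kept inline for the sketch; normally it would live in its own file.
const workerSource = `
  const { parentPort, workerData } = require('worker_threads');
  const arr = new Int32Array(workerData); // same memory as in the main thread
  for (let i = 0; i < arr.length; i++) {
    Atomics.store(arr, i, i * 10);
  }
  parentPort.postMessage('done');
`;

const worker = new Worker(workerSource, { eval: true, workerData: shared });

const finished = new Promise((resolve, reject) => {
  worker.once('message', resolve);
  worker.once('error', reject);
});

finished.then(() => console.log(Array.from(view)));
// [ 0, 10, 20, 30 ]
```

Only the short 'done' string travels through the message channel; the numbers themselves are read directly from the shared memory.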

Cluster

const cluster = require("cluster")

const http = require("http")

const cpuCount = require("os").cpus().length //number of logical CPU cores on this machine

if (cluster.isMaster) { // deprecated since Node 16; newer code uses cluster.isPrimary

masterProcess()

} else {

childProcess()

}

function masterProcess() {

console.log(`Master process ${process.pid} is running`)

//fork workers.

for (let i = 0; i < cpuCount; i++) {

console.log(`Forking process number ${i}...`)

cluster.fork() //creates new node js processes

}

cluster.on("exit", (worker, code, signal) => {

console.log(`worker ${worker.process.pid} died`)

cluster.fork() //forks a new process if any process dies

})

}

function childProcess() {

const express = require("express")

const app = express()

//all workers share the same listening port

app.get("/", (req, res) => {

res.send(`hello from server ${process.pid}`)

})

app.listen(5555, () =>

console.log(`server ${process.pid} listening on port 5555`)

)

}