Speech Project

Week 12 Report

b02901085 徐瑞陽

b02901054 方為

Paper Study

Hierarchical Neural Autoencoders

Introduction

LSTM captures local compositions (the way neighboring words are combined semantically and syntactically)

Words→Sentence

Sentence encoder→Paragraph encoder
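The two-level flow above (words to sentence vector, sentence vectors to paragraph vector) can be sketched in a few lines. This is only a structural illustration: `rnn_encode` is a hypothetical toy recurrent cell with no learned weights standing in for an LSTM, and `embed` is an assumed word-to-vector lookup table. A real model would use separate trained LSTMs at each level.

```python
import math

def rnn_encode(vectors, dim):
    """Fold a sequence of vectors into one state with a toy recurrent cell."""
    h = [0.0] * dim
    for x in vectors:
        # Stand-in for an LSTM step (assumption): mix state and input, then squash.
        h = [math.tanh(0.5 * h_i + 0.5 * x_i) for h_i, x_i in zip(h, x)]
    return h

def encode_paragraph(paragraph, embed, dim):
    """paragraph: list of sentences, each a list of word strings."""
    # Level 1: the word vectors of each sentence -> one sentence vector.
    sentence_vectors = [
        rnn_encode([embed[word] for word in sentence], dim)
        for sentence in paragraph
    ]
    # Level 2: the sequence of sentence vectors -> one paragraph vector.
    return rnn_encode(sentence_vectors, dim)

# Hypothetical 2-dimensional embeddings for illustration only.
embed = {"good": [1.0, 0.0], "food": [0.0, 1.0], "slow": [-1.0, 0.0], "service": [0.0, -1.0]}
paragraph = [["good", "food"], ["slow", "service"]]
paragraph_vector = encode_paragraph(paragraph, embed, dim=2)
```

The point of the hierarchy is that the paragraph-level encoder sees one vector per sentence rather than one long word sequence.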

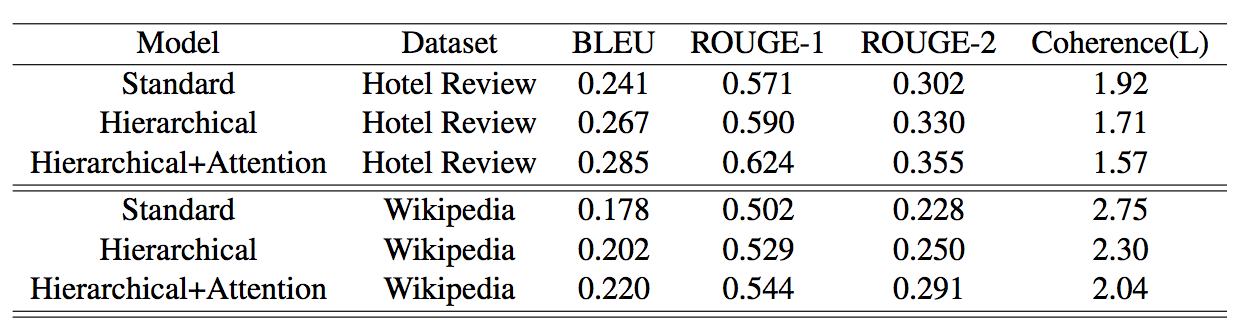

Paragraph Autoencoder Models

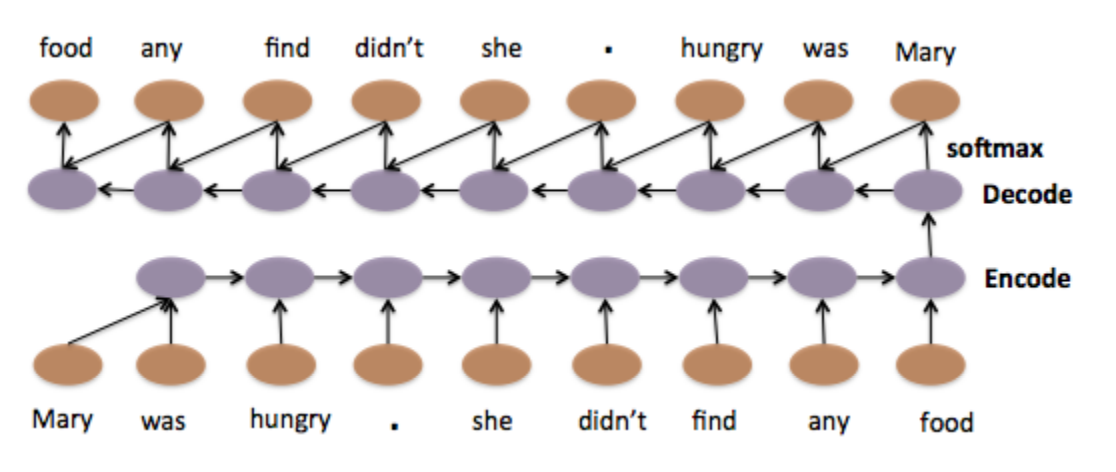

Model 1: Standard LSTM

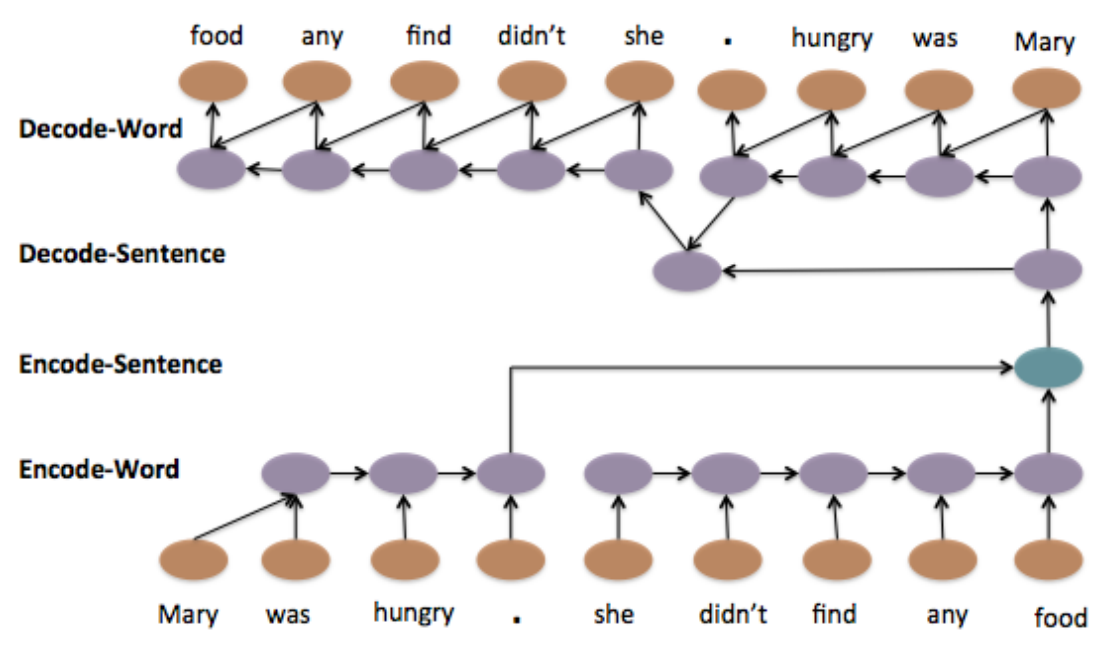

Model 2: Hierarchical LSTM

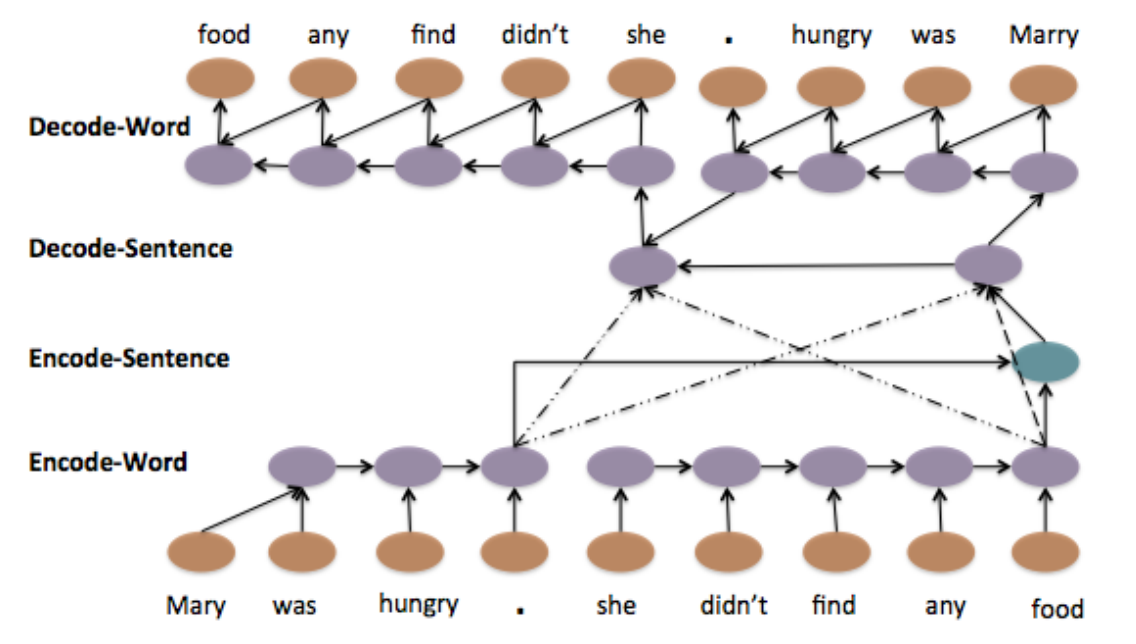

Model 3: Hierarchical LSTM with Attention
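As a rough illustration of the attention step in Model 3, the sketch below weights the sentence-level encoder states by their relevance to the current decoder state and returns their weighted sum as a context vector. It uses a plain dot-product score for simplicity, which is an assumption made here and not necessarily the scoring function of the paper; the function name `attend` is also hypothetical.

```python
import math

def attend(decoder_state, encoder_states):
    """Return (weights, context) for one decoding step."""
    # Dot-product relevance score between the decoder state and each sentence state.
    scores = [sum(d * e for d, e in zip(decoder_state, state)) for state in encoder_states]
    # Numerically stable softmax over the scores.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Context vector: attention-weighted sum of the encoder states.
    dim = len(decoder_state)
    context = [sum(w * state[i] for w, state in zip(weights, encoder_states)) for i in range(dim)]
    return weights, context

weights, context = attend([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
```

The decoder can then combine `context` with its own state when generating the next sentence, so that each output sentence can focus on the most relevant input sentences.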

Evaluation - Summarization

- ROUGE: recall-oriented score

- BLEU: precision-oriented score
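To make the recall/precision distinction concrete, here is a minimal unigram-only sketch. It is a simplification under stated assumptions: real ROUGE has several variants, and real BLEU combines n-gram precisions up to a chosen order with a brevity penalty. The function names are illustrative.

```python
from collections import Counter

def unigram_overlap(candidate, reference):
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Clipped overlap: a word counts at most as often as it appears in both texts.
    overlap = sum((cand & ref).values())
    return overlap, sum(cand.values()), sum(ref.values())

def rouge1_recall(candidate, reference):
    # Recall: fraction of reference words recovered by the candidate.
    overlap, _, ref_len = unigram_overlap(candidate, reference)
    return overlap / ref_len if ref_len else 0.0

def bleu1_precision(candidate, reference):
    # Precision: fraction of candidate words that appear in the reference.
    overlap, cand_len, _ = unigram_overlap(candidate, reference)
    return overlap / cand_len if cand_len else 0.0

reference = "the cat sat on the mat"
candidate = "the cat sat"
recall = rouge1_recall(candidate, reference)       # 3 of 6 reference words covered
precision = bleu1_precision(candidate, reference)  # 3 of 3 candidate words correct
```

A short candidate can score high precision while missing much of the reference, which is why the two scores are reported together.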

Task 5: Aspect Based Sentiment Analysis (ABSA)

Subtask 1: Sentence-level ABSA

Given a review text about a target entity (laptop, restaurant, etc.), identify the following information:

- Slot 1: Aspect Category

- ex. “It is extremely portable and easily connects to WIFI at the library and elsewhere”

→ {LAPTOP#PORTABILITY}, {LAPTOP#CONNECTIVITY}

- Slot 2: Opinion Target Expression (OTE)

- an expression used in the given text to refer to the reviewed entity#attribute (E#A) pair

- ex. “The fajitas were delicious, but expensive”

→ {FOOD#QUALITY, “fajitas”}, {FOOD#PRICES, “fajitas”}

- Slot 3: Sentiment Polarity

- label: positive, negative, or neutral
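The three slots can be held in one small record per opinion. The sketch below is one possible representation, not the official annotation format: the class name `Opinion` and its field names are assumptions, and the polarity labels in the example are our own reading of the fajitas sentence, since the slide only gives Slots 1 and 2 for it.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Opinion:
    category: str                   # Slot 1: "ENTITY#ATTRIBUTE", e.g. "FOOD#QUALITY"
    target: Optional[str]           # Slot 2: OTE, or None when no target is annotated
    polarity: Optional[str] = None  # Slot 3: "positive", "negative", or "neutral"

    def entity_attribute(self):
        # Split the E#A pair into its entity and attribute parts.
        entity, attribute = self.category.split("#", 1)
        return entity, attribute

# "The fajitas were delicious, but expensive"
# Polarity values below are assumed for illustration.
opinions = [
    Opinion("FOOD#QUALITY", "fajitas", "positive"),
    Opinion("FOOD#PRICES", "fajitas", "negative"),
]
```

Keeping the polarity optional lets the same record describe inputs where only Slots 1 and 2 are given and Slot 3 must be predicted.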

Subtask 2: Text-level ABSA

Given a set of customer reviews about a target entity (ex. a restaurant), identify a set of {aspect, polarity} tuples that summarize the opinions expressed in each review.
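One simple way to get from sentence-level predictions to a text-level summary is to vote per aspect. The sketch below assumes majority voting over (aspect, polarity) pairs; this aggregation rule and the function name `summarize_review` are our assumptions for illustration, not something the task definition prescribes.

```python
from collections import Counter, defaultdict

def summarize_review(sentence_opinions):
    """sentence_opinions: iterable of (aspect, polarity) pairs from all sentences of one review."""
    votes = defaultdict(Counter)
    for aspect, polarity in sentence_opinions:
        votes[aspect][polarity] += 1
    # Keep the most frequent polarity for each aspect (assumed rule).
    return {aspect: counts.most_common(1)[0][0] for aspect, counts in votes.items()}

summary = summarize_review([
    ("FOOD#QUALITY", "positive"),
    ("FOOD#QUALITY", "positive"),
    ("FOOD#QUALITY", "negative"),
    ("SERVICE#GENERAL", "negative"),
])
```

Each key/value pair in `summary` corresponds to one {aspect, polarity} tuple for the review.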

Subtask 3: Out-of-domain ABSA

Test the system on a previously unseen domain (hotel reviews in SemEval 2015) for which no training data was made available. The gold annotations for Slots 1 and 2 were provided, and the teams had to return only the sentiment polarity values (Slot 3).

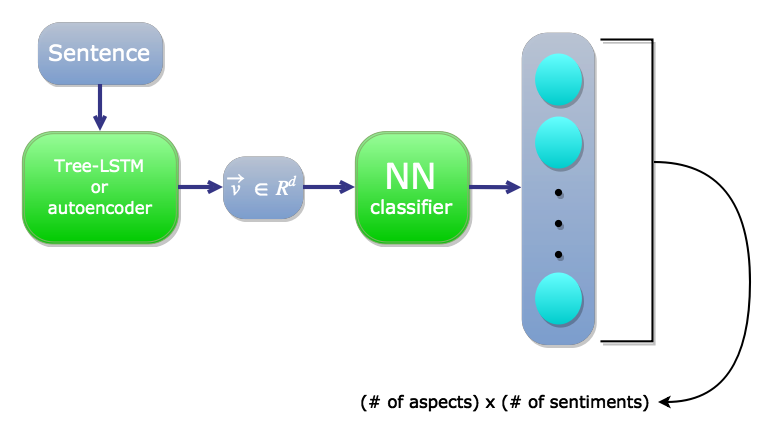

Our Framework

Framework 1

Encode with a Tree-LSTM or an autoencoder

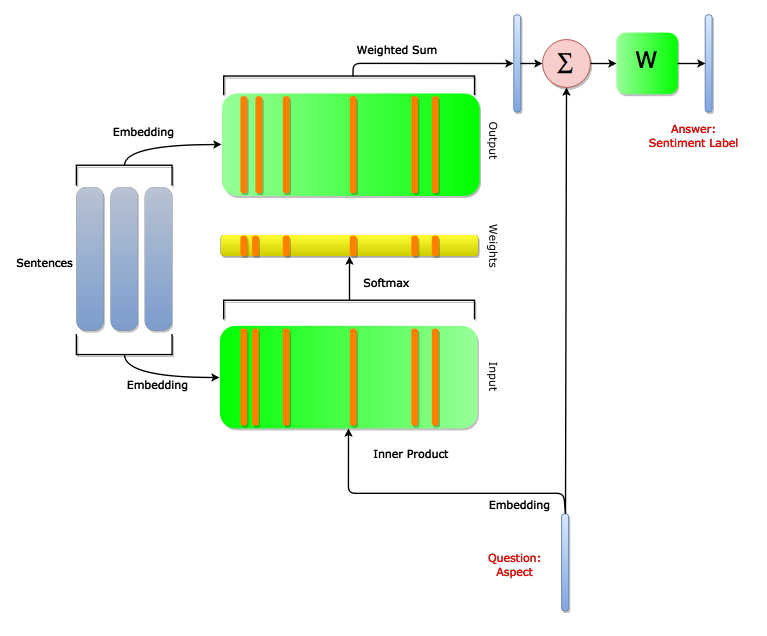

Framework 2

End-to-end Memory Network (MemNN)