Goh Wei Wen

24 Oct 2020

Introduction

What is Docker?

- Containerize apps

- Isolated environment for launching processes

- Clean separation of environments

- Sandbox with defined resources

- Simple interface for launching applications

Why should I use Docker?

- Compatibility/dependency management

- Quick dev environment setup

- Easily convert dev environment to staging/prod environment

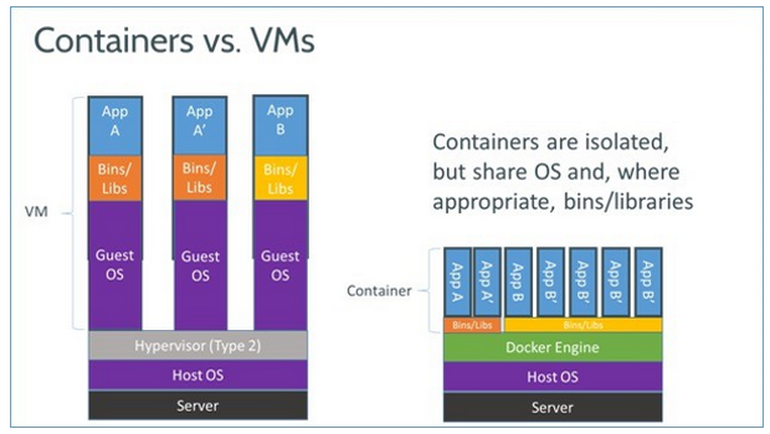

What is a container?

- Containers run on the host's kernel, so any Linux host can run any Linux distro in Docker (e.g. CentOS or Alpine on an Ubuntu host)

Hello World

    docker run --rm ubuntu echo "Hello world"

- Hey docker,

  - start a container

  - using the image ubuntu

  - and run the command echo "Hello world"

  - oh, and clean up after you're done

- Docker will

  - look for a local image called ubuntu with tag latest

  - otherwise, look in the default registry (docker.io) and pull it

  - start the container using the specified command

  - remove the container after it terminates

First run:

    $ docker run --rm ubuntu echo "Hello world"
    Unable to find image 'ubuntu:latest' locally
    latest: Pulling from library/ubuntu
    6a5697faee43: Pull complete
    ba13d3bc422b: Pull complete
    a254829d9e55: Pull complete
    Digest: sha256:b709186f32a3ae7d0fa0c217b8be150ef1ce4049bf30645a9de055f54a6751fb
    Status: Downloaded newer image for ubuntu:latest
    Hello world

Subsequent runs:

    $ docker run --rm ubuntu echo "Hello world"
    Hello world

Images and Containers

- Docker Image

  - Immutable template for containers

  - Can be pulled from and pushed to a registry

  - Fixed naming convention:

    - [registry/][user/]name[:tag]

    - Tag defaults to latest

  - Identified by an image digest (SHA256)

- Docker Container

  - Instance of an image

  - Can be started, stopped, restarted

  - Keeps the changes made to its file system until it is removed
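The naming convention above can be made concrete with a small parser. This is only a minimal sketch, not Docker's own reference parser: `parse_image_ref` is a hypothetical helper, it ignores digest references (`name@sha256:...`), and it assumes the usual defaults (registry `docker.io`, user `library` for official images, tag `latest`).

```python
def parse_image_ref(ref):
    """Split an image reference of the form [registry/][user/]name[:tag]."""
    # A ':' is a tag separator only if it comes after the last '/';
    # otherwise it belongs to a registry port such as localhost:5000.
    last_slash = ref.rfind("/")
    last_colon = ref.rfind(":")
    if last_colon > last_slash:
        path, tag = ref[:last_colon], ref[last_colon + 1:]
    else:
        path, tag = ref, "latest"  # default tag

    parts = path.split("/")
    first = parts[0]
    # Assumption: the first component names a registry only if it looks like
    # a host name (contains '.' or ':') or is exactly 'localhost'.
    if len(parts) > 1 and ("." in first or ":" in first or first == "localhost"):
        registry, parts = first, parts[1:]
    else:
        registry = "docker.io"  # default registry (Docker Hub)

    name = parts[-1]
    user = "/".join(parts[:-1])
    if not user and registry == "docker.io":
        user = "library"  # official images on Docker Hub live under 'library'
    return {"registry": registry, "user": user, "name": name, "tag": tag}


if __name__ == "__main__":
    for ref in ("ubuntu", "prologic/todo",
                "registry.gitlab.com/kiasufoodies/kiasubot:0.10.0"):
        print(ref, "->", parse_image_ref(ref))
```

Under these assumptions, `ubuntu` expands to `docker.io/library/ubuntu:latest`, which matches the "Pulling from library/ubuntu" line in the first-run output.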

Docker commands

$ tldr docker

Manage Docker containers and images.

- List currently running docker containers:

docker ps

- List all docker containers (running and stopped):

docker ps -a

- Start a container from an image, with a custom name:

docker run --name {{container_name}} {{image}}

- Start or stop an existing container:

docker {{start|stop}} {{container_name}}

- Pull an image from a docker registry:

docker pull {{image}}

- Open a shell inside of an already running container:

docker exec -it {{container_name}} {{sh}}

- Remove a stopped container:

docker rm {{container_name}}

- Fetch and follow the logs of a container:

docker logs -f {{container_name}}$ docker run --rm prologic/todo- By default, will run with STDOUT attached to your terminal

- Use CTRL+C to stop

- Alternatively, use -d to run in detached mode

Attach & Detach

$ docker run --rm -d prologic/todo

a0849c33ede8c97c30d5db7ca31bcaed01212c33dd84aca9ac96402300ff005c

$ docker attach a0849$ docker run --rm -i python

print(1 + 1)

2Interactive Terminal

- --interactive: Keep STDIN open even if not attached.

- --tty: Allocate a pseudo-TTY and attach to STDIN.

$ docker run --rm -it python

Python 3.9.0 (default, Oct 13 2020, 20:14:06)

[GCC 8.3.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

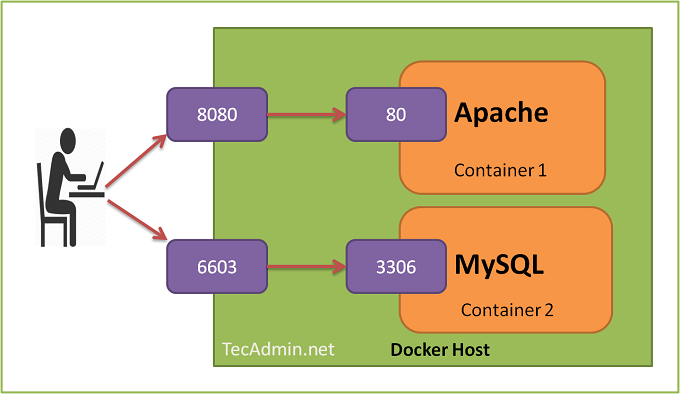

>>>Port mapping

    docker run --rm -d -p 5432:5432 postgres
    docker run --rm -d -p 5433:5432 postgres
    docker run --rm -d -p 5434:5432 postgres

- Maps a port on the host (localhost:5432) to a port in the docker container

- Can have multiple containers using the same ports, but mapped to different host ports
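The rule in the bullets above (container ports may repeat, host ports may not) can be sketched as a small check. `find_host_port_conflicts` is a hypothetical helper, and it only understands the plain `host:container` form of `-p`, not the variants with an IP address or protocol.

```python
def find_host_port_conflicts(mappings):
    """Return the host ports claimed by more than one 'host:container' mapping."""
    claimed = {}
    for mapping in mappings:
        host_port, container_port = (int(part) for part in mapping.split(":"))
        # Several containers may listen on the same container port,
        # but each host port can be bound only once.
        claimed.setdefault(host_port, []).append(container_port)
    return sorted(port for port, targets in claimed.items() if len(targets) > 1)


if __name__ == "__main__":
    # Three Postgres containers, each on its own host port: no conflict.
    print(find_host_port_conflicts(["5432:5432", "5433:5432", "5434:5432"]))
    # Two containers both asking for host port 5432: conflict.
    print(find_host_port_conflicts(["5432:5432", "5432:5432"]))
```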

Port mapping

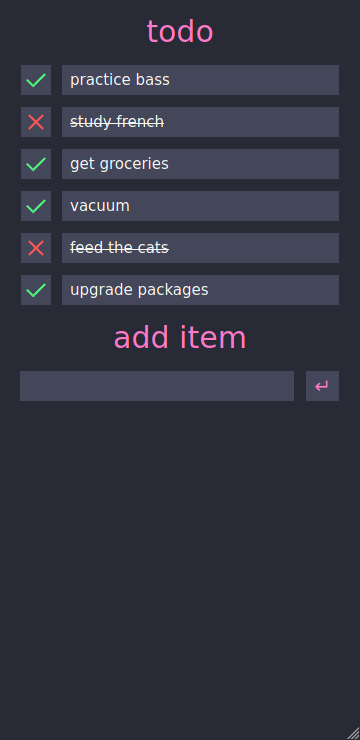

    docker run --rm -d -p 8000:8000 prologic/todo

- todo is a self-hosted todo web app that lets you keep track of your todos in an easy and minimal way. 📝

- Listens on port 8000

Volume mapping

    docker run --name todo -d -p 8000:8000 \
      -v /tmp/todo.db:/usr/local/go/src/todo/todo.db \
      prologic/todo

- Docker containers are ephemeral. When a container is removed, all data inside it is lost

- We can mount volumes to persist parts of the file system. The mount hides whatever the image has at that path

- Use an absolute path for a local volume

Environment Variables

    docker stop todo
    docker rm todo
    docker run --name todo -d -p 8000:8000 \
      -v /tmp/todo.db:/usr/local/go/src/todo/todo.db \
      -e THEME=ayu \
      prologic/todo

- We can configure containers using environment variables. This is useful for configuring different deployments, e.g. staging/prod environments

- todo supports changing the theme through the THEME variable (here set to ayu)
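On the application side, reading such variables usually comes down to a lookup with a fallback. The sketch below shows that pattern for a hypothetical app; it is not todo's actual code, and the default values are assumptions made up for illustration.

```python
import os

# Hypothetical defaults, used when the container is started without -e flags.
DEFAULTS = {
    "THEME": "default",
    "DATABASE_URL": "sqlite:///todo.db",
}


def load_config(environ=None):
    """Build the app configuration from environment variables, falling back to DEFAULTS."""
    environ = os.environ if environ is None else environ
    return {key: environ.get(key, default) for key, default in DEFAULTS.items()}


if __name__ == "__main__":
    # Equivalent to starting the container with: -e THEME=ayu
    print(load_config({"THEME": "ayu"}))
```

The same image can then serve staging and production: only the values passed with `-e` differ.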

Logs

    docker logs -f todo
    [todo] 2020/10/23 23:56:00 (172.17.0.1:35904) "GET / HTTP/1.1" 200 842 684.422µs
    [todo] 2020/10/23 23:56:00 (172.17.0.1:35904) "GET /css/color-theme.css HTTP/1.1" 200 166 196.807µs

- When running in detached mode, we lose access to STDOUT

- We can use docker logs to look at the logs

- Use the -f flag to follow the logs

Inspect

    docker inspect todo

- Useful for troubleshooting

- View information

  - status (running/paused/OOMKilled/dead)

  - image

  - command and args

  - env variables

  - port bindings

  - volume mounts

  - etc.
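Since `docker inspect` prints JSON, the fields listed above are easy to pull out with a script. The sketch below assumes the usual layout of that JSON (an array with one object per container, with `State`, `Config`, `HostConfig` and `Mounts` keys). The `SAMPLE` document is hand-written and heavily trimmed for illustration, not captured output, and `summarize` is a hypothetical helper.

```python
import json

# Hand-written, trimmed sample in the shape of `docker inspect` output.
# The values are illustrative only.
SAMPLE = """
[{
  "Name": "/todo",
  "State": {"Status": "running", "OOMKilled": false},
  "Config": {"Image": "prologic/todo", "Env": ["THEME=ayu"]},
  "HostConfig": {"PortBindings": {"8000/tcp": [{"HostIp": "", "HostPort": "8000"}]}},
  "Mounts": [{"Type": "bind", "Source": "/tmp/todo.db",
              "Destination": "/usr/local/go/src/todo/todo.db"}]
}]
"""


def summarize(inspect_json):
    """Pick the troubleshooting basics out of `docker inspect` JSON for one container."""
    container = json.loads(inspect_json)[0]
    port_bindings = container["HostConfig"]["PortBindings"]
    return {
        "name": container["Name"].lstrip("/"),
        "status": container["State"]["Status"],
        "oom_killed": container["State"]["OOMKilled"],
        "image": container["Config"]["Image"],
        "env": container["Config"]["Env"],
        # Map each container port to the host ports bound to it.
        "ports": {
            port: [binding["HostPort"] for binding in bindings or []]
            for port, bindings in port_bindings.items()
        },
        "mounts": [(m["Source"], m["Destination"]) for m in container["Mounts"]],
    }


if __name__ == "__main__":
    print(json.dumps(summarize(SAMPLE), indent=2))
```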

Building your own containers

Why create my own image?

- Cannot find a pre-built image

- Existing images don't match your requirements

  - e.g. PHP applications that carry their own reverse proxies

- Want to deploy your own applications with Docker

How to create my own image?

- Plan how to get a working environment from scratch

  - What OS or base image?

  - What dependencies to install?

  - What services does it depend on?

- Write a Dockerfile using that recipe

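Layered architecture

    FROM ubuntu:latest
    # -y answers apt's confirmation prompt, which would otherwise abort the non-interactive build
    RUN apt-get update \
      && apt-get install -y nodejs npm
    WORKDIR /app
    COPY . .
    CMD ["npm", "run", "start"]

- Each instruction creates a new layer that builds on the previous one

- Helps with incremental builds: unchanged layers are reused from the cache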
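One way to picture why layering helps incremental builds is to give every layer a key that depends on its instruction and on all layers before it. The sketch below is a simplified model under that assumption, not Docker's actual cache implementation (which, for example, also checksums the files used by COPY). `layer_keys` and `reusable_layers` are hypothetical helpers.

```python
import hashlib


def layer_keys(instructions):
    """Give each instruction a key derived from itself and every layer before it."""
    keys = []
    parent = ""
    for instruction in instructions:
        parent = hashlib.sha256((parent + "\n" + instruction).encode()).hexdigest()
        keys.append(parent)
    return keys


def reusable_layers(old, new):
    """Count how many leading layers of `new` match a previous build of `old`."""
    count = 0
    for old_key, new_key in zip(layer_keys(old), layer_keys(new)):
        if old_key != new_key:
            break  # every later layer depends on this one, so stop here
        count += 1
    return count


if __name__ == "__main__":
    before = [
        "FROM ubuntu:latest",
        "RUN apt-get update && apt-get install -y nodejs npm",
        "WORKDIR /app",
        "COPY . .",
        'CMD ["npm", "run", "start"]',
    ]
    # Changing only the last instruction keeps the four layers before it.
    after = before[:-1] + ['CMD ["npm", "test"]']
    print(reusable_layers(before, after))
```

In this model, editing an early instruction invalidates everything after it, which is why slow, rarely changing steps such as package installation are usually placed near the top of a Dockerfile.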

Choose the right base image

    FROM node:latest
    WORKDIR /app
    COPY . .
    CMD ["npm", "run", "start"]

- Reduce build times

- Save disk space if you have multiple images with a similar setup

Build it!

    docker build . -t goweiwen/docker-workshop

- Build the image with a specified tag

    docker history goweiwen/docker-workshop

- View the layers using docker history

    docker run --rm goweiwen/docker-workshop

- Run it

More commands

    ENV <key>=<value>

- Default environment variables

    VOLUME ["/data"]

- Declare volumes for mounts

    EXPOSE <port>

- Declare ports to expose

Docker Compose

The Problem

- Deploying a stack of containers with commands is verbose and difficult to maintain

  - Specify all mounts and ports (if bridging to host)

  - Networking between containers

  - Specifying all environment variables

  - How to do version control?

- One solution is to put the configuration into a YAML file

  - e.g. docker-compose.yaml, Kubernetes manifests

  - Check that YAML into a git repository maintained by the DevOps team

voting-app

- Python web app for serving front-end for voting

- Node.js web app for showing results

- Redis in-memory DB

- Postgres filesystem DB

- .NET Core worker for consuming votes

    docker run -d --name=redis redis
    docker run -d --name=db postgres:9.4
    docker run -d --name=vote -p 5000:80 voting-app
    docker run -d --name=result -p 5001:80 result-app
    docker run -d --name=worker worker

Have to link the containers

- Resolves hosts using /etc/hosts

- If "vote" looks for a host called "redis", the containers need to be linked for the name to resolve

    docker run -d --name=redis redis
    docker run -d --name=db postgres:9.4
    docker run -d --name=vote -p 5000:80 \
      --link redis:redis voting-app
    docker run -d --name=result \
      --link db:db -p 5001:80 result-app
    docker run -d --name=worker \
      --link db:db --link redis:redis worker

docker-compose.yml

    version: "3"
    services:
      redis:
        image: redis
      db:
        image: postgres:9.4
      vote:
        image: voting-app
        ports:
          - "5000:80"
      result:
        image: result-app
        ports:
          - "5001:80"
      worker:
        image: worker

- No need to link

- Docker Compose creates a default network and attaches every service to it, so services can reach each other by name

    docker-compose up
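To see what the Compose file saves us from typing, the sketch below expands a Compose-style mapping into roughly equivalent plain docker commands: one shared network, then one `docker run` per service attached to it. This is a conceptual illustration only, not how Compose is implemented. `compose_to_commands` is a hypothetical helper, and real Compose also prefixes container names with the project name and handles many more options.

```python
def compose_to_commands(project, services):
    """Expand a Compose-like mapping into roughly equivalent docker commands."""
    network = f"{project}_default"  # Compose names its default network <project>_default
    commands = [["docker", "network", "create", network]]
    for name, spec in services.items():
        # Containers on the same user-defined network can resolve each other by name,
        # which is why no --link flags are needed.
        command = ["docker", "run", "-d", "--name", name, "--network", network]
        for port in spec.get("ports", []):
            command += ["-p", port]
        command.append(spec["image"])
        commands.append(command)
    return commands


if __name__ == "__main__":
    services = {
        "redis": {"image": "redis"},
        "db": {"image": "postgres:9.4"},
        "vote": {"image": "voting-app", "ports": ["5000:80"]},
        "result": {"image": "result-app", "ports": ["5001:80"]},
        "worker": {"image": "worker"},
    }
    for command in compose_to_commands("voting-app", services):
        print(" ".join(command))
```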

Orchestration

    docker stack deploy voting-app \
      -c docker-compose.yml

- Manage and scale containerized applications across multiple machines

- Abstracts away individual machines: think in terms of total resources (CPU, memory) instead

- Containers are expected to behave the same on every machine

- Automatically scale applications up, replace failed containers, perform rolling updates

- e.g.

  - Kubernetes

  - Docker Swarm

Kubernetes

- a.k.a. k8s

- Most commonly used orchestration solution

- Uses YAML manifests to declare deployment requirements

  - Complicated

  - Tools such as Helm and Ksonnet manage these manifests and allow rolling back entire deployment configs

- Enterprise-grade, offered as a managed service by cloud providers

  - e.g. Google Kubernetes Engine, Anthos, Amazon Elastic Kubernetes Service, DigitalOcean Kubernetes

- Not covered today

Docker Swarm

- Docker's solution to orchestration

- Allow using multiple Docker hosts as a single virtual host

- Can use docker-compose.yml almost without change

- Much lighter than k8s, quicker to deploy and lower overhead

- Less complex

Private Registries

- Default registry is Docker Hub (docker.io)

- Sometimes, you don't want your images to be public

- e.g. GitLab's container registry (FREE!)

    docker login registry.gitlab.com
    docker pull registry.gitlab.com/kiasufoodies/kiasubot:0.10.0

Using Docker for Development

Quickly set up a database

- Set up a disposable Postgres or MySQL database quickly

- Restore a database dump if necessary

    docker run -p 5432:5432 --name onimadb \
      -e POSTGRES_USER='onima' \
      -e POSTGRES_PASSWORD='password' \
      -d postgres
    docker exec -i onimadb pg_restore -U onima -d onima < ~/onima.dump
    DB_HOST=0.0.0.0 DB_USER=onima DB_PASSWORD=password yarn start

Mock an S3 endpoint

- MinIO is an S3-compatible object storage solution

    services:
      minio:
        image: minio/minio
        command: server /data
        ports:
          - "9000:9000"
        volumes:
          - minio:/data
        environment:
          MINIO_ACCESS_KEY: minio_access_key
          MINIO_SECRET_KEY: minio_secret_key
    # Named volumes must be declared at the top level
    volumes:
      minio:

- Point the app at the MinIO endpoint instead of AWS

    AWS_S3_ENDPOINT=http://0.0.0.0:9000 \
    AWS_ACCESS_KEY_ID=minio_access_key \
    AWS_SECRET_ACCESS_KEY=minio_secret_key \
    yarn start

Help frontend developers

- No need to understand how the backend is set up

- Just keep a docker-compose.yml in the repository

- Remember to keep secrets out of version control!

    version: '3.1'
    services:
      api:
        image: registry.gitlab.com/kiasufoodies/kiasubot:0.5.2
        ...
        environment:
          - TZ=Asia/Singapore
          - DATABASE_URL=${DATABASE_URL}
          - KIASU_API_KEY=${KIASU_API_KEY}
          - AWS_REGION=${AWS_REGION}
          - AWS_S3_BUCKET_NAME=${AWS_S3_BUCKET_NAME}
          - AWS_S3_KEY_PREFIX=${AWS_S3_KEY_PREFIX}
          - GOOGLE_PLACES_API_KEY=${GOOGLE_PLACES_API_KEY}

Using Docker for Deployment

Reverse Proxies

- Route HTTP(S) requests to containers based on the requested host name

- Just add labels to your containers

- Automatically create configs as containers are added/removed

- Automatically request and renew Let's Encrypt SSL/TLS certs!

- For nginx:

  - jwilder/nginx-proxy

  - jrcs/letsencrypt-nginx-proxy-companion

- For Caddy:

  - lucaslorentz/caddy-docker-proxy

Using GitLab's CI

- GitLab's CI is powerful

- Use it to build your projects

- Deploy to GitLab's container registry

- Even automatically deploy the containers!

...

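    build:
      stage: build
      rules:
        - if: "$CI_COMMIT_TAG"
      script:
        # Pull the previous image to seed the build cache; ignore a failure on the very first run
        - docker pull "$CI_REGISTRY_IMAGE:latest" || true
        # Tag the build with both names that are pushed below
        - docker build --cache-from "$CI_REGISTRY_IMAGE:latest"
            --tag "$CI_REGISTRY_IMAGE:$CI_COMMIT_REF_NAME"
            --tag "$CI_REGISTRY_IMAGE:latest" .
        - docker push "$CI_REGISTRY_IMAGE:$CI_COMMIT_REF_NAME"
        - docker push "$CI_REGISTRY_IMAGE:latest"

Backing up databases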

- Don't reinvent the wheel

- Remember to test your backups: untested backups are worthless

- For Postgres:

  - prodrigestivill/postgres-backup-local

  - schickling/postgres-backup-s3

- For MariaDB:

  - tiredofit/mariadb-backup

Monitoring Docker

- cAdvisor

  - Collects and exports data about running containers

    - Resource usage

    - Network statistics

- Prometheus

  - Polling time series database, can poll cAdvisor

- Grafana

  - Data visualization and alert system, can use data from Prometheus