3D Gaussian Splatting

About me

- I'm Mitya / Митя

- 3.5 years in ML

- 1.5 years in 3D reconstruction specifically

- I'm into:

  - 3D Generative models

  - 3D reconstruction tricks

  - Computer Graphics stuff

  - 3D modeling (sometimes)

  - Math!

  - Computer Vision in general

About today

- Prerequisites

- NeRF for dummies

- 3D Gaussian Splatting intro

- Rendering speed

- Practical notes & demo

- Dynamic reconstruction

- Comparison with NeRFs

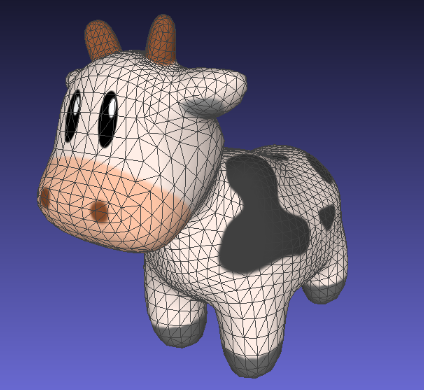

What is: Triangular Mesh

The surface of any solid object can be approximated by a mesh of triangles

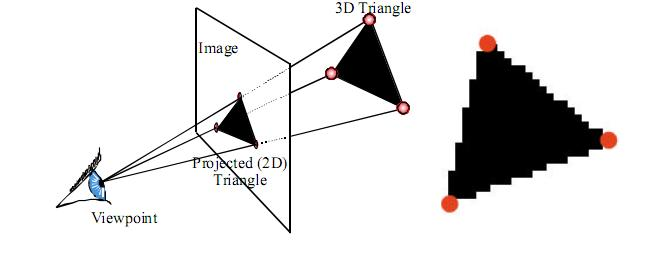

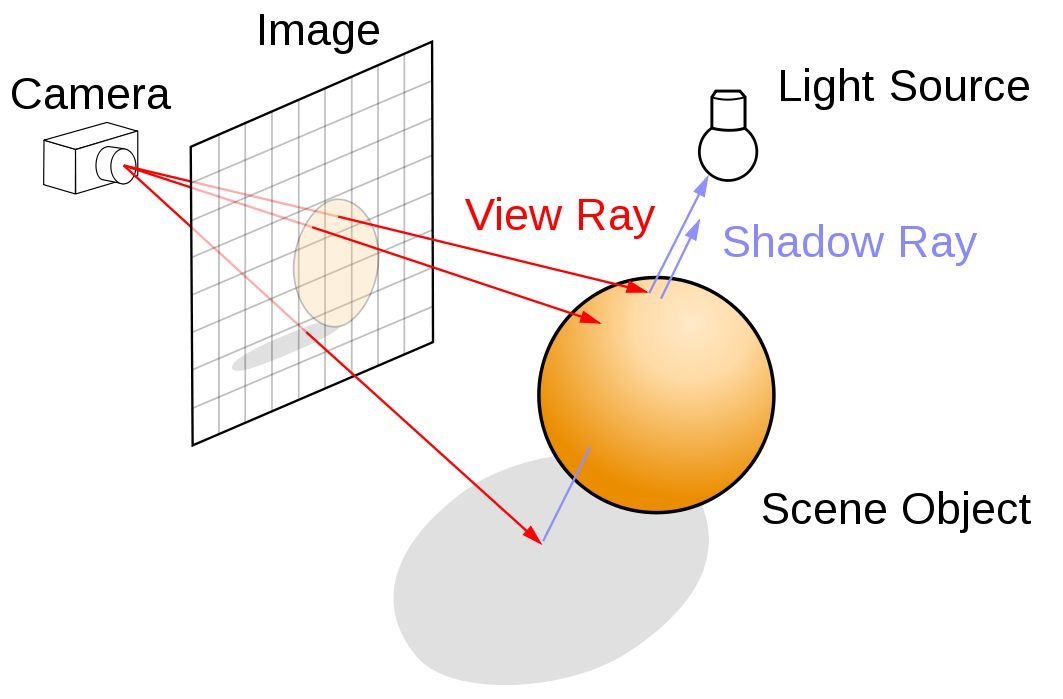
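A minimal sketch of the usual mesh layout: a list of vertex positions plus a list of triangles, each stored as three indices into that list. The tetrahedron and the helper names (`triangle_area`, `surface_area`) are made up for illustration; mesh libraries such as PyTorch3D use the same "verts + faces" idea.

```python
import math

# Vertex positions (x, y, z): a right-angled unit tetrahedron
vertices = [
    (0.0, 0.0, 0.0),
    (1.0, 0.0, 0.0),
    (0.0, 1.0, 0.0),
    (0.0, 0.0, 1.0),
]

# Each face is a triangle given by three indices into `vertices`
faces = [
    (0, 2, 1),  # face in the z = 0 plane
    (0, 1, 3),  # face in the y = 0 plane
    (0, 3, 2),  # face in the x = 0 plane
    (1, 2, 3),  # slanted face
]


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def cross(a, b):
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def triangle_area(p0, p1, p2):
    # Half the length of the cross product of two edge vectors
    n = cross(sub(p1, p0), sub(p2, p0))
    return 0.5 * math.sqrt(sum(c * c for c in n))


def surface_area(vertices, faces):
    # Look up each face's three vertices and sum the triangle areas
    return sum(triangle_area(*(vertices[i] for i in face)) for face in faces)


print(surface_area(vertices, faces))
```

Everything a renderer needs (positions, connectivity) lives in these two arrays; per-vertex colors or normals would just be extra arrays indexed the same way as `vertices`.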

What is: Rendering

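The two most widely used approaches:

Rasterization

Projects each object (e.g. each triangle) onto screen space and fills in the pixels it covers

Ray Tracing

Traces a ray from the camera through each pixel and finds what it hits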

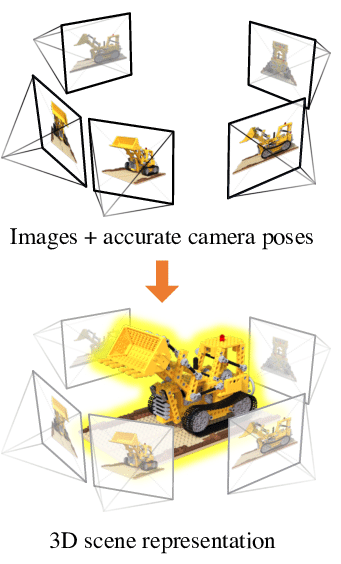
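To make the two approaches concrete, here is a sketch of the core geometric step of each for a simple pinhole camera at the origin looking down +z. The focal length `f` (in pixels) and principal point `(cx, cy)` are assumed values, and `project` / `pixel_ray` are hypothetical helpers: rasterization maps a 3D point to a pixel, ray tracing maps a pixel back to a ray.

```python
import math


def project(point, f, cx, cy):
    """Rasterization step: camera-space 3D point -> pixel coordinates (u, v)."""
    x, y, z = point
    return (f * x / z + cx, f * y / z + cy)


def pixel_ray(u, v, f, cx, cy):
    """Ray tracing step: pixel (u, v) -> unit ray direction from the camera origin."""
    d = ((u - cx) / f, (v - cy) / f, 1.0)
    n = math.sqrt(sum(c * c for c in d))
    return tuple(c / n for c in d)


f, cx, cy = 500.0, 320.0, 240.0  # hypothetical 640x480 camera
p = (0.2, -0.1, 2.0)             # a point 2 units in front of the camera

u, v = project(p, f, cx, cy)
d = pixel_ray(u, v, f, cx, cy)

# The ray through the projected pixel points back at p (up to scale)
dist = math.sqrt(sum(c * c for c in p))
print(u, v, [dist * c for c in d])
```

A full rasterizer would project the three vertices of every triangle and fill the covered pixels; a ray tracer would intersect every pixel's ray with the scene geometry.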

What is: Photogrammetry

Input: many, many photos of an object/scene

Output: a point cloud of the object/scene

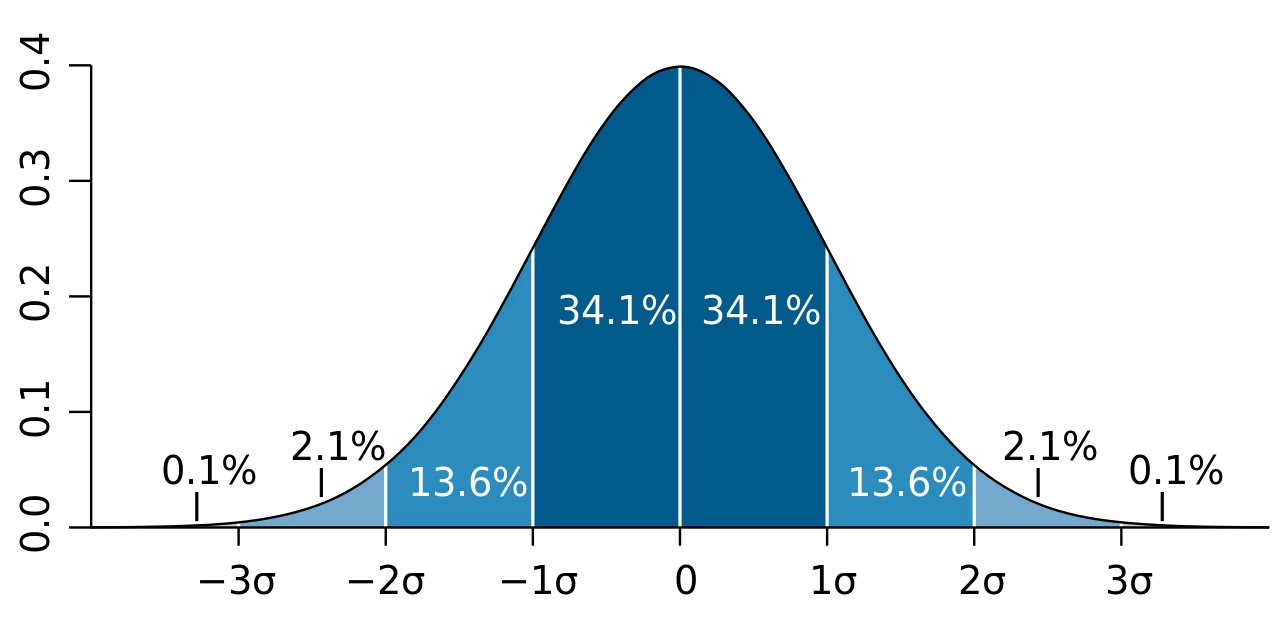

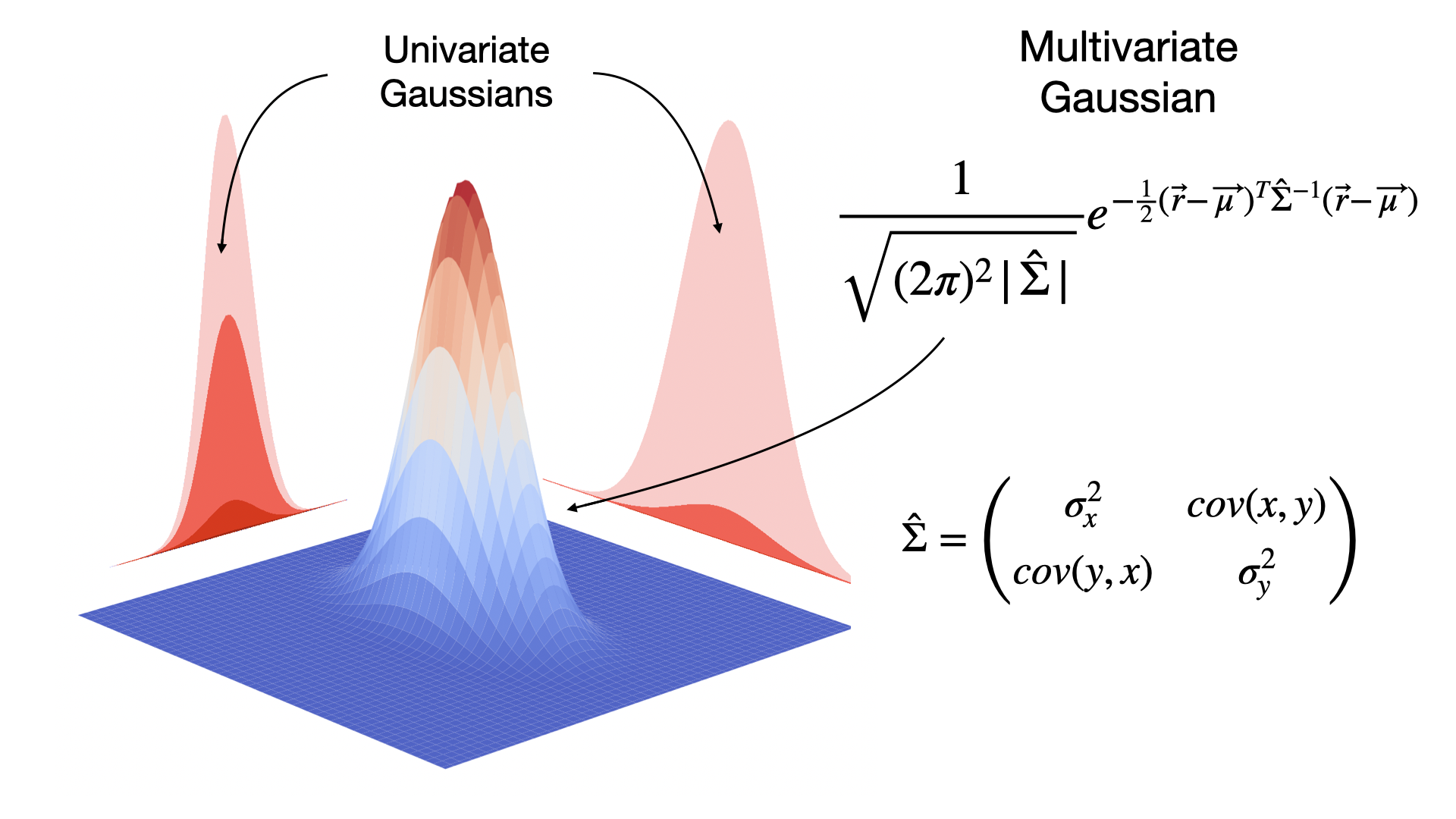

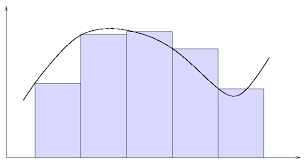
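Under the hood, a photogrammetry pipeline (e.g. COLMAP) matches features across photos, estimates the camera poses, and then triangulates each matched feature into a 3D point. The sketch below shows only that last step, linear (DLT) triangulation of one point from two views, assuming NumPy and already-known 3x4 camera matrices; the intrinsics `K` and the camera placement are made-up values.

```python
import numpy as np


def triangulate(P1, P2, uv1, uv2):
    """Linear (DLT) triangulation of one 3D point from two views.

    P1, P2: 3x4 projection matrices; uv1, uv2: pixel coordinates of the
    same feature in each image.
    """
    u1, v1 = uv1
    u2, v2 = uv2
    # Each view gives two linear equations in the homogeneous point X:
    # u * (P[2] . X) - P[0] . X = 0 and v * (P[2] . X) - P[1] . X = 0
    A = np.stack([
        u1 * P1[2] - P1[0],
        v1 * P1[2] - P1[1],
        u2 * P2[2] - P2[0],
        v2 * P2[2] - P2[1],
    ])
    # Least-squares solution: right singular vector with the smallest singular value
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]


def project(P, X):
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


K = np.array([[500.0, 0, 320], [0, 500.0, 240], [0, 0, 1]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])               # camera centre at the origin
P2 = K @ np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])  # camera centre at x = 1

X_true = np.array([0.3, -0.2, 4.0])
X_est = triangulate(P1, P2, project(P1, X_true), project(P2, X_true))
print(X_est)
```

With real, noisy matches the same system is solved for thousands of features at once, and the resulting points form the point cloud.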

What is: Gaussian/Normal distribution

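This function:

$$\mathcal{N}(x \mid \mu, \sigma^2) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right)$$

With nice properties

There is also a multivariate version (for $\mathbf{x} \in \mathbb{R}^k$):

$$\mathcal{N}(\mathbf{x} \mid \boldsymbol{\mu}, \Sigma) = \frac{1}{\sqrt{(2\pi)^k \det \Sigma}} \exp\left(-\frac{1}{2}(\mathbf{x}-\boldsymbol{\mu})^\top \Sigma^{-1} (\mathbf{x}-\boldsymbol{\mu})\right)$$

If we linearly project the distribution to a lower dimension, it stays Gaussian: if $\mathbf{x} \sim \mathcal{N}(\boldsymbol{\mu}, \Sigma)$ and $A$ is a matrix, then $A\mathbf{x} \sim \mathcal{N}(A\boldsymbol{\mu}, A \Sigma A^\top)$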

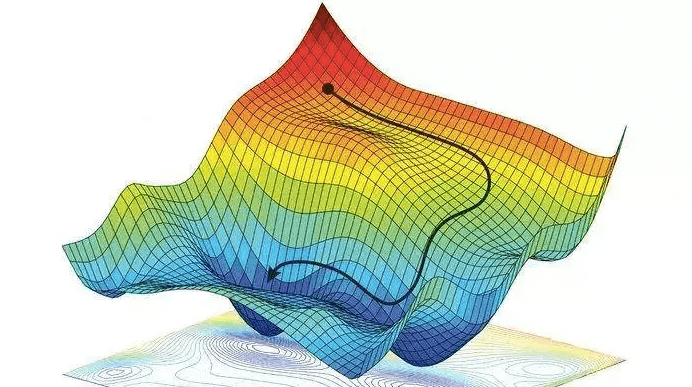
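A quick numerical check of the projection property, assuming NumPy: sample a 3D Gaussian, project the samples with a 2x3 matrix `A` (here an orthographic projection that simply drops z), and compare the empirical mean and covariance with the closed-form $A\boldsymbol{\mu}$ and $A\Sigma A^\top$. The specific `mu` and `Sigma` are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)

# A 3D Gaussian (arbitrary example values)
mu = np.array([1.0, -2.0, 0.5])
L = np.array([[1.0, 0.0, 0.0], [0.5, 0.8, 0.0], [-0.3, 0.2, 0.6]])
Sigma = L @ L.T  # any L @ L.T with full-rank L is a valid covariance

# Linear projection 3D -> 2D: drop the z axis (orthographic camera)
A = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])

# Closed-form parameters of the projected Gaussian
mu_2d = A @ mu
Sigma_2d = A @ Sigma @ A.T

# Empirical check: project samples and measure their mean and covariance
samples = rng.multivariate_normal(mu, Sigma, size=200_000)
proj = samples @ A.T
print(mu_2d, proj.mean(axis=0))
print(Sigma_2d)
print(np.cov(proj, rowvar=False))
```

This is the property that lets a 3D Gaussian be drawn on screen as a 2D Gaussian.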

What is: Gradient Descent

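Gradient Descent optimizes the parameters $\theta$ of a function $f$ by repeatedly stepping against its gradient, with learning rate $\eta$:

$$\theta_{k+1} = \theta_k - \eta \nabla_\theta f(\theta_k)$$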

Some function of 2 variables

Example for Neural Networks

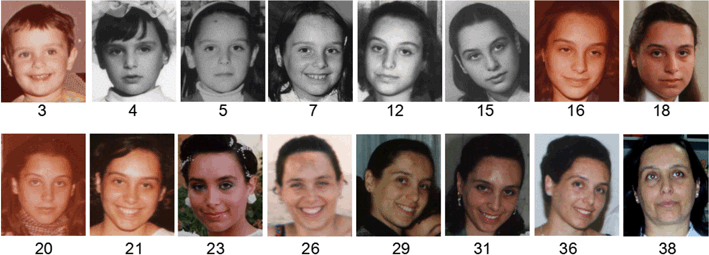

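Dataset of (image, age) pairs $\{(x_i, y_i)\}_{i=1}^{N}$

$\mathcal{L}(\theta) = \frac{1}{N}\sum_{i=1}^{N} \ell\big(f_\theta(x_i), y_i\big)$: loss function (e.g. $\ell$ = squared error between the predicted and the true age)

Optimization of the Neural Network $f_\theta$ via Gradient Descent: $\theta \leftarrow \theta - \eta \nabla_\theta \mathcal{L}(\theta)$

We only need the gradient $\nabla_\theta \mathcal{L}$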

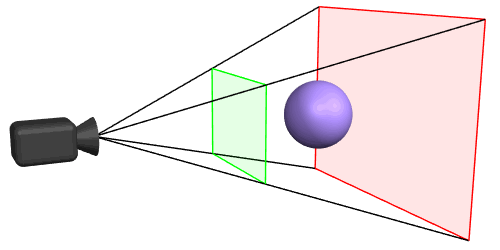
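A minimal sketch of Gradient Descent on a hand-picked function of 2 variables, $f(x, y) = (x-3)^2 + 10(y+1)^2$, with its gradient written out by hand. The learning rate and step count are assumed values chosen so the example converges.

```python
def f(x, y):
    # Some function of 2 variables with its minimum at (3, -1)
    return (x - 3) ** 2 + 10 * (y + 1) ** 2


def grad_f(x, y):
    # Analytic gradient (df/dx, df/dy)
    return 2 * (x - 3), 20 * (y + 1)


def gradient_descent(x, y, lr=0.04, steps=200):
    for _ in range(steps):
        gx, gy = grad_f(x, y)
        # Step against the gradient: theta <- theta - lr * grad
        x, y = x - lr * gx, y - lr * gy
    return x, y


x_min, y_min = gradient_descent(0.0, 0.0)
print(x_min, y_min, f(x_min, y_min))
```

For a neural network the loop is the same, except $\theta$ is every weight of the network and the gradient of the loss comes from backpropagation (e.g. PyTorch autograd) instead of a hand-derived formula.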

What is: Differentiable Rendering

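Our rendering is a function of:

- camera parameters $\pi$

- the object $O$

so the rendered image is $I = R(\pi, O)$.

And what if our object is the output of an NN, $O = f_\theta(\cdot)$, and the loss $\mathcal{L}(I)$ is a function of the render?

Chain rule:

$$\frac{\partial \mathcal{L}}{\partial \theta} = \frac{\partial \mathcal{L}}{\partial I} \cdot \frac{\partial I}{\partial O} \cdot \frac{\partial O}{\partial \theta}$$

The middle term $\partial I / \partial O$ (the gradient of the renderer) is usually not available, but PyTorch3D (and some other libraries) provide it.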
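To see the chain rule in action, here is a deliberately tiny, hypothetical setup: the "object" is a single number `mu` (the position of a blob), the "renderer" draws that blob into a 1D image, and the loss compares the render to a target image. Because this renderer is a simple formula, $\partial I / \partial O$ can be written by hand; for real meshes, that is the part a library like PyTorch3D provides.

```python
import math

PIXELS = [float(k) for k in range(32)]  # pixel centres of a 1D "image"
WIDTH = 2.0                             # fixed blob width (not optimized)


def render(mu):
    # Toy differentiable renderer: one Gaussian blob centred at mu
    return [math.exp(-((x - mu) ** 2) / (2 * WIDTH**2)) for x in PIXELS]


def loss_and_grad(mu, target):
    image = render(mu)
    loss = sum((i - t) ** 2 for i, t in zip(image, target))
    # Chain rule: dL/dmu = sum_k dL/dI_k * dI_k/dmu
    #   dL/dI_k  = 2 * (I_k - T_k)
    #   dI_k/dmu = I_k * (x_k - mu) / WIDTH^2
    grad = sum(
        2 * (i - t) * i * (x - mu) / WIDTH**2
        for i, t, x in zip(image, target, PIXELS)
    )
    return loss, grad


target = render(12.0)  # "photo" of the object at its true position
mu = 9.0               # initial guess
for _ in range(100):
    loss, grad = loss_and_grad(mu, target)
    mu -= 0.5 * grad   # gradient descent on the object parameter
print(mu, loss)
```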

What is: Neural Radiance Fields (NeRF)

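A point in empty space: color = (1, 1, 1), density = 0

A point on the object: color = (0.6, 0.4, 0.23), density = 1

Any object can be described with a function $F(\mathbf{x}, \mathbf{d}) = (\mathbf{c}, \sigma)$ that maps a 3D point $\mathbf{x}$ and a ray direction $\mathbf{d}$ to a color $\mathbf{c}$ and a density $\sigma$

In NeRF, this function is a NN $F_\theta$

Ray direction is also passed because the color may depend on the point of view (e.g. reflections)

Density $\sigma$: how likely a light particle is to be stopped at this point (within a small segment around it)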
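A hand-written stand-in for such a function, using the example values above: a solid sphere (assumed radius 1 at the origin) in empty space. In NeRF the body of `field` would be a neural network $F_\theta$ and the color could also depend on `direction`; here the direction is ignored to keep the sketch short.

```python
import math

SPHERE_CENTER = (0.0, 0.0, 0.0)  # hypothetical object placement
SPHERE_RADIUS = 1.0


def field(point, direction):
    """Hand-written radiance field: (x, d) -> (color, density).

    `direction` is unused here (view-independent color); a NeRF would feed
    it into the network to model view-dependent effects.
    """
    if math.dist(point, SPHERE_CENTER) <= SPHERE_RADIUS:
        return (0.6, 0.4, 0.23), 1.0  # inside the object
    return (1.0, 1.0, 1.0), 0.0       # empty space


print(field((0.0, 0.0, 0.5), (0.0, 0.0, 1.0)))
print(field((0.0, 3.0, 0.0), (0.0, 0.0, 1.0)))
```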

What is: Neural Radiance Fields (NeRF)

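Camera ray $\mathbf{r}(t) = \mathbf{o} + t\mathbf{d}$, sampled at points $t_1 < t_2 < \dots < t_N$ with spacing $\delta_i = t_{i+1} - t_i$

$\alpha_i = 1 - \exp(-\sigma_i \delta_i)$: probability of the ray stopping at point $i$

$T_i = \prod_{j<i} (1 - \alpha_j) = \exp\left(-\sum_{j<i} \sigma_j \delta_j\right)$: probability of the ray reaching point $i$

$C(\mathbf{r}) = \sum_{i=1}^{N} T_i \, \alpha_i \, \mathbf{c}_i$: expected color of the ray $\mathbf{r}$

That is how we render a ray. This is called Volume Rendering: rendering one ray per pixel gives the whole image.

And it's differentiable.

Training a NeRF means optimizing the network weights $\theta$.

Loss function: $\mathcal{L}(\theta) = \sum_{\mathbf{r}} \left\lVert \hat{C}_\theta(\mathbf{r}) - C_{\text{gt}}(\mathbf{r}) \right\rVert_2^2$, the squared difference between the rendered and the photographed pixel colors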
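A minimal sketch of rendering one ray with the discrete formula, in plain Python. `render_ray` takes per-sample colors $\mathbf{c}_i$, densities $\sigma_i$ and spacings $\delta_i$ (which a real NeRF would get by querying its network at the sample points) and returns $C(\mathbf{r})$; `mse_loss` is the per-ray term of the loss. The helper names and sample values are made up.

```python
import math


def render_ray(colors, densities, deltas):
    """Discrete volume rendering of one ray: C = sum_i T_i * alpha_i * c_i."""
    transmittance = 1.0  # T_1 = 1: the ray always reaches the first sample
    out = [0.0, 0.0, 0.0]
    for c, sigma, delta in zip(colors, densities, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # prob. of stopping in segment i
        weight = transmittance * alpha          # prob. of reaching i AND stopping there
        out = [o + weight * ci for o, ci in zip(out, c)]
        transmittance *= 1.0 - alpha            # prob. of reaching segment i + 1
    return out


def mse_loss(predicted, target):
    # Photometric loss for one ray: ||C_pred - C_gt||^2
    return sum((p - t) ** 2 for p, t in zip(predicted, target))


# Three samples: empty space, then a dense brown surface, then more empty space
colors = [(1.0, 1.0, 1.0), (0.6, 0.4, 0.23), (1.0, 1.0, 1.0)]
densities = [0.0, 50.0, 0.0]
deltas = [0.1, 0.1, 0.1]

pixel = render_ray(colors, densities, deltas)
print(pixel, mse_loss(pixel, [0.6, 0.4, 0.23]))
```

Training sums this loss over a batch of rays from the training photos and backpropagates through the rendering into the network weights.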

What is: 3D Gaussian Splatting

The same rendering formula along a camera ray $\mathbf{r}$:

$$C(\mathbf{r}) = \sum_{i} T_i \, \alpha_i \, \mathbf{c}_i, \qquad T_i = \prod_{j<i} (1 - \alpha_j)$$

In NeRF, we use the NN to get the density (and therefore $\alpha_i$) and the color at each sampled point.

In 3DGS, we instead use many colored 3D Gaussians: the $i$-th term comes from the $i$-th Gaussian along the ray (in depth order), with $\alpha_i$ given by that Gaussian's opacity and falloff, and $\mathbf{c}_i$ by its color.
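A sketch of what one "colored 3D Gaussian" holds, assuming NumPy. Following the 3DGS paper, the covariance is built from a per-axis scale and a rotation quaternion as $\Sigma = R S S^\top R^\top$, which keeps it a valid covariance while gradient descent updates the parameters. The paper stores view-dependent color as spherical harmonics; plain RGB is a simplification here, and the class and method names are hypothetical.

```python
import numpy as np


def quat_to_rotmat(q):
    # Unit quaternion (w, x, y, z) -> 3x3 rotation matrix
    w, x, y, z = q / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])


class Gaussian3D:
    def __init__(self, mean, scale, quat, opacity, color):
        self.mean = np.asarray(mean, dtype=float)    # centre in world space
        self.scale = np.asarray(scale, dtype=float)  # std-dev along local axes
        self.quat = np.asarray(quat, dtype=float)    # orientation
        self.opacity = float(opacity)                # peak alpha in [0, 1]
        self.color = np.asarray(color, dtype=float)  # plain RGB (simplification)

    def covariance(self):
        # Sigma = R S S^T R^T is symmetric positive semi-definite by construction
        R = quat_to_rotmat(self.quat)
        S = np.diag(self.scale)
        return R @ S @ S.T @ R.T

    def alpha_at(self, x):
        # Opacity times the unnormalized Gaussian falloff at 3D point x
        d = np.asarray(x, dtype=float) - self.mean
        return self.opacity * np.exp(-0.5 * d @ np.linalg.solve(self.covariance(), d))


g = Gaussian3D(mean=[0, 0, 5], scale=[1, 2, 3], quat=[1, 0, 0, 0],
               opacity=0.8, color=[0.6, 0.4, 0.23])
print(g.covariance())
print(g.alpha_at([0, 0, 5]), g.alpha_at([1, 0, 5]))
```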

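The expected-color sum $C(\mathbf{r}) = \sum_i T_i \alpha_i \mathbf{c}_i$ used by NeRF is actually a numerical estimate, obtained by sampling points along the ray. The true Volume Rendering formula is an integral over the ray:

$$C(\mathbf{r}) = \int_{t_n}^{t_f} T(t)\, \sigma(\mathbf{r}(t))\, \mathbf{c}(\mathbf{r}(t), \mathbf{d})\, dt, \qquad T(t) = \exp\left(-\int_{t_n}^{t} \sigma(\mathbf{r}(s))\, ds\right)$$

And due to nice Gaussian properties, the integral of each Gaussian along the ray can be solved in closed form: since a projected Gaussian stays Gaussian, it reduces to evaluating the Gaussian's 2D projection at the pixel (using a local affine approximation of the perspective projection).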
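A numerical sanity check of the closed-form claim, assuming NumPy and the simplest case of a ray parallel to the z axis (an orthographic camera): integrating a 3D Gaussian density along the ray gives exactly the 2D Gaussian obtained by dropping z, evaluated at the pixel. The particular `mu`, `Sigma` and pixel are arbitrary.

```python
import numpy as np


def gaussian_pdf(X, mu, Sigma):
    """Multivariate normal density N(x | mu, Sigma) for each row x of X."""
    D = np.atleast_2d(X) - mu
    m = np.einsum("ni,ij,nj->n", D, np.linalg.inv(Sigma), D)  # Mahalanobis^2
    k = len(mu)
    return np.exp(-0.5 * m) / np.sqrt((2 * np.pi) ** k * np.linalg.det(Sigma))


mu = np.array([0.2, -0.1, 5.0])
Sigma = np.array([[0.5, 0.1, 0.2], [0.1, 0.3, -0.1], [0.2, -0.1, 1.0]])

# Ray parallel to the z axis through pixel (x, y): points (x, y, t)
x, y = 0.6, 0.1
ts = np.linspace(-5.0, 15.0, 20001)
dt = ts[1] - ts[0]
points = np.column_stack([np.full_like(ts, x), np.full_like(ts, y), ts])
numeric = gaussian_pdf(points, mu, Sigma).sum() * dt  # brute-force integral

# Closed form: the 2D marginal (drop z) evaluated at the pixel
closed = gaussian_pdf(np.array([x, y]), mu[:2], Sigma[:2, :2])[0]
print(numeric, closed)
```

For a perspective camera the projection isn't linear, which is why the local affine approximation is needed to get the same shortcut.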

How to render

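(Figure: the image split into 16x16 blocks, with a camera ray through one pixel)

- Split the image into 16x16 blocks (tiles)

- For each block, keep only the Gaussians whose 99% confidence region intersects it (culling)

- Load each block B into a separate GPU thread block and:

  - Load the Gaussians allocated to B

  - Radix Sort them in depth order (the paper does this with one global GPU Radix Sort keyed by tile ID and depth)

  - Compute each pixel in its own thread: accumulate the colors of the Gaussians front to back until the ray has stopped with probability 0.9999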
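A sketch of the per-Gaussian preprocessing behind the tiling step, assuming NumPy and a Gaussian already expressed in camera coordinates. `project_gaussian` approximates the perspective projection by its Jacobian at the mean, giving the 2D covariance $J \Sigma J^\top$; `tiles_touched` then uses a conservative 3σ square around the projected centre (an assumed stand-in for the roughly-99% confidence test) to list the 16x16 tiles the Gaussian overlaps. Both helpers and the camera values are hypothetical.

```python
import math

import numpy as np

TILE = 16  # tile size in pixels


def project_gaussian(mean_cam, cov_cam, f, cx, cy):
    """Camera-space 3D Gaussian -> image-space 2D Gaussian (mean, covariance).

    Local affine approximation: Sigma_2d = J Sigma J^T, where J is the
    Jacobian of the pinhole projection evaluated at the mean.
    """
    x, y, z = mean_cam
    J = np.array([
        [f / z, 0.0, -f * x / z**2],
        [0.0, f / z, -f * y / z**2],
    ])
    mean_2d = np.array([f * x / z + cx, f * y / z + cy])
    return mean_2d, J @ cov_cam @ J.T


def tiles_touched(mean_2d, cov_2d, width, height):
    """(tile_x, tile_y) of every tile overlapped by a 3-sigma square."""
    radius = 3.0 * math.sqrt(np.linalg.eigvalsh(cov_2d).max())
    x0 = max(int((mean_2d[0] - radius) // TILE), 0)
    x1 = min(int((mean_2d[0] + radius) // TILE), (width - 1) // TILE)
    y0 = max(int((mean_2d[1] - radius) // TILE), 0)
    y1 = min(int((mean_2d[1] + radius) // TILE), (height - 1) // TILE)
    return [(tx, ty) for ty in range(y0, y1 + 1) for tx in range(x0, x1 + 1)]


# Hypothetical 64x64 image; isotropic Gaussian 4 units in front of the camera
mean_2d, cov_2d = project_gaussian(np.array([0.0, 0.0, 4.0]), 0.01 * np.eye(3),
                                   f=80.0, cx=32.0, cy=32.0)
print(mean_2d, cov_2d, tiles_touched(mean_2d, cov_2d, 64, 64))
```

Each (tile, Gaussian) pair would then be sorted by tile and depth, so that every tile ends up with its own front-to-back list of Gaussians.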


Culling and iterating over objects (instead of sampling points along each ray) are properties of Rasterization, not Volume Rendering
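What each pixel thread does can be sketched in plain Python: evaluate every Gaussian's 2D falloff at the pixel, then alpha-composite front to back, stopping once the ray has stopped with probability above 0.9999 (transmittance below 1e-4). The dictionary layout, the 0.99 cap on alpha, and the helper names are assumptions for this sketch; for clarity it sorts per pixel rather than once per tile.

```python
import math


def gaussian_alpha(px, py, g):
    # Opacity times the 2D Gaussian falloff at pixel (px, py)
    dx, dy = px - g["mean"][0], py - g["mean"][1]
    (a, b), (_, c) = g["cov"]  # symmetric 2x2 covariance [[a, b], [b, c]]
    det = a * c - b * b
    # Squared Mahalanobis distance via the closed-form 2x2 inverse
    m = (c * dx * dx - 2 * b * dx * dy + a * dy * dy) / det
    return g["opacity"] * math.exp(-0.5 * m)


def shade_pixel(px, py, gaussians, t_min=1e-4):
    """Front-to-back alpha compositing of depth-sorted Gaussians for one pixel."""
    color = [0.0, 0.0, 0.0]
    T = 1.0  # transmittance: probability the ray has not stopped yet
    for g in sorted(gaussians, key=lambda item: item["depth"]):
        alpha = min(gaussian_alpha(px, py, g), 0.99)
        color = [ci + T * alpha * gi for ci, gi in zip(color, g["color"])]
        T *= 1.0 - alpha
        if T < t_min:  # ray has stopped with probability > 0.9999: early exit
            break
    return color, T


gaussians = [
    {"mean": (8.0, 8.0), "cov": ((4.0, 0.0), (0.0, 4.0)), "opacity": 0.99,
     "color": (0.6, 0.4, 0.23), "depth": 2.0},
    {"mean": (8.0, 8.0), "cov": ((9.0, 0.0), (0.0, 9.0)), "opacity": 0.5,
     "color": (1.0, 1.0, 1.0), "depth": 1.0},
]
print(shade_pixel(8.0, 8.0, gaussians))
```

The loop walks over objects (the Gaussians) rather than over sample points along the ray, which is exactly the rasterization-style structure.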

How to train

Adaptive density control during training:

- Gaussians with small opacity are removed (a nearly transparent Gaussian contributes almost nothing to the render, so it is useless)

- Gaussians with large gradients are cloned; in the paper, small ones are cloned and large ones are split in two (large positional gradients mean this area still has a large loss, therefore we need more components there)
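A rough sketch of one adaptive density control step, assuming NumPy and per-Gaussian arrays. All thresholds below are assumed, illustrative values, and the function name is hypothetical. Clones here are exact copies and split children are sampled from an axis-aligned version of the parent; the paper describes moving clones along the positional gradient and sampling children from the full (rotated) parent Gaussian.

```python
import numpy as np

# Assumed thresholds (illustrative only)
MIN_OPACITY = 0.005      # prune nearly transparent Gaussians
GRAD_THRESHOLD = 0.0002  # "large" average view-space position gradient
SCALE_THRESHOLD = 0.01   # "small" vs "large" Gaussian (scene-dependent)
SPLIT_FACTOR = 1.6       # split children are this many times smaller


def densify_and_prune(means, scales, opacities, grad_norms, rng):
    """One clone / split / prune step over arrays of per-Gaussian parameters."""
    big_grad = grad_norms > GRAD_THRESHOLD
    is_small = scales.max(axis=1) <= SCALE_THRESHOLD

    clone = big_grad & is_small   # small + under-reconstructed: duplicate
    split = big_grad & ~is_small  # large + under-reconstructed: replace by 2
    n_split = int(split.sum())

    # Children centres sampled from the (axis-aligned) parent Gaussian
    parent_means = np.repeat(means[split], 2, axis=0)
    parent_scales = np.repeat(scales[split], 2, axis=0)
    children = parent_means + rng.normal(size=(2 * n_split, 3)) * parent_scales

    keep = ~split  # split parents are removed
    means = np.concatenate([means[keep], means[clone], children])
    scales = np.concatenate([scales[keep], scales[clone], parent_scales / SPLIT_FACTOR])
    opacities = np.concatenate([opacities[keep], opacities[clone],
                                np.repeat(opacities[split], 2)])

    # Prune: drop Gaussians that are nearly transparent
    alive = opacities > MIN_OPACITY
    return means[alive], scales[alive], opacities[alive]


rng = np.random.default_rng(0)
means = np.zeros((4, 3))
scales = np.array([[0.005] * 3, [0.005] * 3, [0.1] * 3, [0.1] * 3])
opacities = np.array([0.9, 0.001, 0.9, 0.9])
grad_norms = np.array([0.001, 0.0, 0.001, 0.0])
m, s, o = densify_and_prune(means, scales, opacities, grad_norms, rng)
print(len(m))
```

In this example the first Gaussian is cloned, the second is pruned, the third is split in two, and the fourth is left alone.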


A good visualization

Practical notes

Tested 3DGS and NeRFStudio on 380 images of my cat (frames from a video)

A5000 GPU (on a rented VPS)

| | 3D Gaussian Splatting | NeRFStudio |
|---|---|---|
| VRAM | 11GB | 5GB |
| Training time | ~1 hour | ~80 minutes |
| Default viewer | A pain in the ass to get working | Great |

Practical notes

Step-by-step tutorial for Windows

NeRFStudio Viewer plugin for 3D Gaussians!

https://github.com/yzslab/nerfstudio/tree/gaussian_splatting

Literally came out this week

Web viewer in the works


DEMO

Dynamic Reconstruction

Unlike in a NeRF, where the scene is stored implicitly in the network weights, all the Gaussians are individual objects we can move/rotate

Therefore animating them is a lot easier, and they can be used to reconstruct moving scenes.

Dynamic Reconstruction algorithm:

- Reconstruct the first frame

- For each of the next frames:

  - Initialize this frame's Gaussian parameters with the previous frame's

  - Run optimization only on positions and rotations
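A toy version of the algorithm above, in plain Python: a 1D "scene" of two Gaussian blobs whose amplitudes stay frozen, tracked across frames by optimizing only their positions (1D has no rotations), with each frame warm-started from the previous frame's result. Everything here, including the motion, blob width and learning rate, is hypothetical.

```python
import math

PIXELS = [float(k) for k in range(64)]  # pixel centres of a 1D "image"
WIDTH = 2.0                             # blob width, frozen


def blob(x, p):
    # Unnormalized Gaussian falloff of a blob centred at p
    return math.exp(-((x - p) ** 2) / (2 * WIDTH**2))


def render(positions, amplitudes):
    return [sum(a * blob(x, p) for p, a in zip(positions, amplitudes)) for x in PIXELS]


def fit_positions(positions, amplitudes, target, lr=0.5, steps=100):
    """Gradient descent on positions only; amplitudes are never updated."""
    positions = list(positions)
    for _ in range(steps):
        residual = [i - t for i, t in zip(render(positions, amplitudes), target)]
        # dL/dp_j = sum_k 2 * r_k * a_j * blob(x_k, p_j) * (x_k - p_j) / WIDTH^2
        grads = [
            sum(2 * r * a * blob(x, p) * (x - p) / WIDTH**2
                for r, x in zip(residual, PIXELS))
            for p, a in zip(positions, amplitudes)
        ]
        positions = [p - lr * g for p, g in zip(positions, grads)]
    return positions


amplitudes = [1.0, 0.8]                                  # frozen "colors"
true_tracks = [(20.0 + t, 44.0 - t) for t in range(5)]   # blobs move 1 px/frame

# Frame 0: pretend it was already reconstructed perfectly
estimate = list(true_tracks[0])
for frame, truth in enumerate(true_tracks[1:], start=1):
    target = render(truth, amplitudes)                      # this frame's "photo"
    estimate = fit_positions(estimate, amplitudes, target)  # warm start
    print(frame, [round(p, 3) for p in estimate])
```

Because each frame starts from the previous solution, the Gaussians only need to move a little per frame, which keeps the per-frame optimization short and keeps each Gaussian attached to the same part of the scene over time.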


NeRF vs Gaussian Splatting

Previously, the state of the art was this NeRF-based method

3DGS doesn't beat it, but is pretty much equal in quality

+ Gaussian Splatting renders HD in real time

+ Much easier to manipulate (animation / dynamic reconstruction)

- No conditional generation (EpiGRAF / NeRF in the Wild)

- Extracted meshes are worse than with current NeRF methods (Neuralangelo)

- Can't dynamically reconstruct scenes with changing topology (NeRFPlayer)

🎊

Thank you for coming!

Follow me on:

Twitter: https://twitter.com/Xallt_

Telegram: https://t.me/boring_dont_look

Github: https://github.com/Xallt