ExAIS: Executable AI Semantics

Richard Schumi, Jun Sun

Singapore Management University

ICSE'22

- Semantics

- Applications

- Test Case Generation

- Model Validation

TensorFlow in Prolog

Background

- Prolog

- TensorFlow

layer(average).

layer(flatten).

ai_components(X) :- layer(X).

?- ai_components(flatten).

?- ai_components(X).

# Prolog

Prolog

- declarative: describes what to compute rather than the control flow; pure Prolog has no side effects

- logic programming: based on first-order logic

- Prolog = knowledge base (rules & facts) + queries
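For readers who do not know Prolog, the "knowledge base + query" idea can be mimicked in Python. This is only a rough sketch: `LAYER_FACTS` and the use of `None` for an unbound variable are illustrative choices, and real Prolog enumerates answers one at a time by backtracking instead of returning a sorted list.

```python
# Facts of the knowledge base: layer(average). layer(flatten).
LAYER_FACTS = {"average", "flatten"}


def ai_components(x=None):
    """Mimic the rule ai_components(X) :- layer(X).

    With a concrete atom, report whether the goal holds.
    With x=None (standing in for an unbound variable), return all bindings.
    """
    if x is not None:
        return x in LAYER_FACTS
    return sorted(LAYER_FACTS)


print(ai_components("flatten"))  # ground query that matches a fact
print(ai_components("dense"))    # ground query with no matching fact
print(ai_components())           # query with a variable
```

A ground query is answered with true or false, while a query containing a variable is answered with the bindings that make it true.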

Term

- atom: no inherent meaning

- number: float or integer

- variable: starts with an upper-case letter or an underscore

- compound term

- list / string

model = tf.keras.models.Sequential([

tf.keras.layers.Flatten(input_shape=(28, 28)),

tf.keras.layers.Dense(128, activation='relu'),

tf.keras.layers.Dropout(0.2),

tf.keras.layers.Dense(10)

])

predictions = model(x_train[:1]).numpy()

# TensorFlow

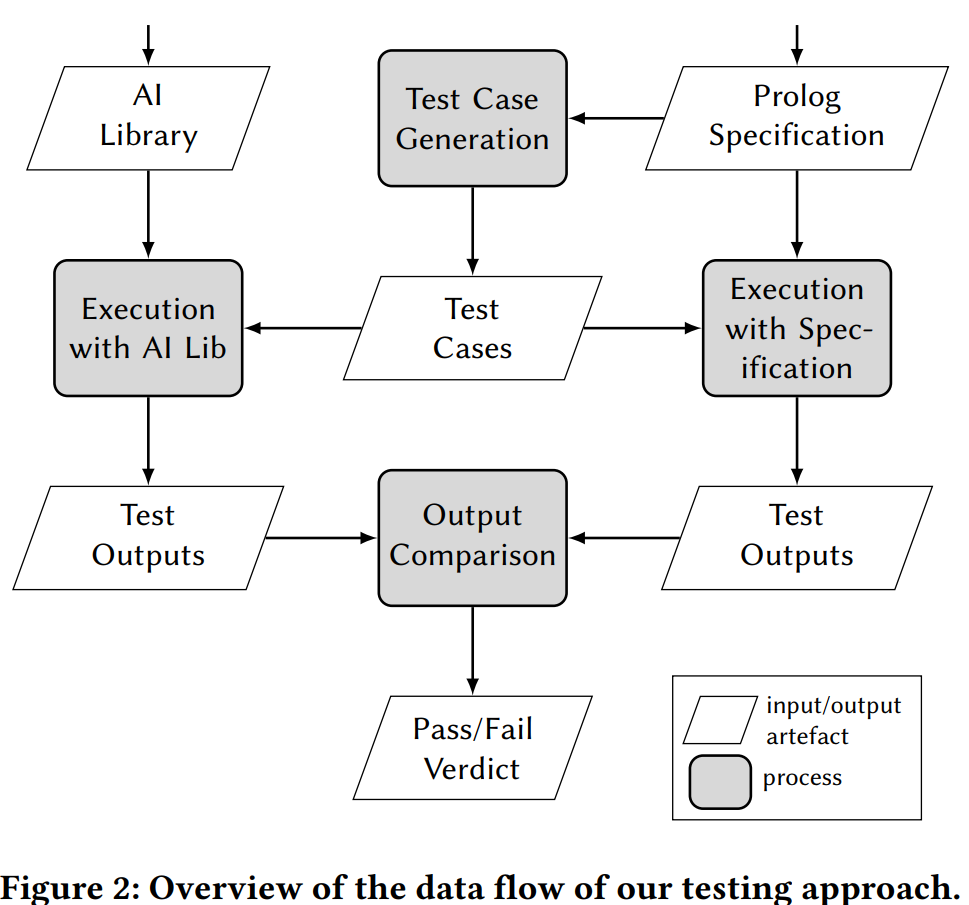

TensorFlow

- popular DL framework from Google (OSDI'16)

- computational graph (model)

- inference / training

Semantics

- Overview

- Ex: dense

- Ex: conv1D

- Ex: dropout

- Recap

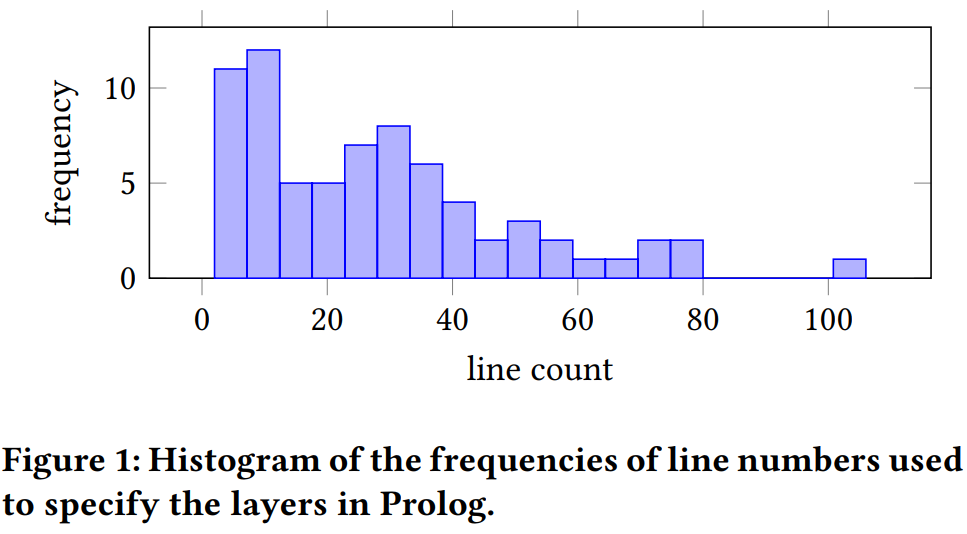

# Semantics

Semantics

- 72 layers (nearly all)

- 3200 lines of Prolog

- correctness checked by:

  - code review

  - manual testing (examples from the documentation)

  - automated testing (own fuzzing engine)

# Def: Dense

Definition: Dense

For 1-D input and output, a dense layer is an affine transformation.

\mathbf{y} = W^\top \mathbf{x} + \mathbf{b}

- X: n-d tensor

  - the first dimension indexes the samples

- W: m-d tensor

- B: (m-1)-d tensor

- Y: (n+m-2)-d tensor

y_{i_2 \cdots i_{n - 1} j_2 \cdots j_m} = b_{j_2 \cdots j_m} + \sum_{k} x_{i_2 \cdots i_{n - 1} k} w_{k j_2 \cdots j_m}

dense_layer([I|Is], IWs, Bs, [O|Os]) :-

depth([I|Is], 2),

dense_node_comp(I, IWs, Bs, O),

dense_layer(Is, IWs, Bs, Os).

dense_layer([I|Is], IWs, Bs, [O|Os]) :-

depth([I|Is], D), D > 2,

dense_layer(I, IWs, Bs, O),

dense_layer(Is, IWs, Bs, Os).

dense_layer([], _, _, []).

dense_node_comp([I|Is], [IW|IWs], Res0, Res) :-

multiply_list_with(IW, I, Res1),

add_lists(Res0, Res1, Res2),

dense_node_comp(Is, IWs, Res2, Res).

dense_node_comp([], [], Res, Res).

# Semantics: Dense

Semantics: Dense

y_{i_2 \cdots i_{n - 1} j_2 \cdots j_m} = b_{j_2 \cdots j_m} + \sum_{k} x_{i_2 \cdots i_{n - 1} k} w_{k j_2 \cdots j_m}

\mathbf{y}_{i_2 \cdots i_{n - 1}} = \mathbf{b} + \sum_{k} x_{i_2 \cdots i_{n - 1} k} \mathbf{w}_{k}
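The vector form above starts from the bias and adds one scaled weight row per input entry. Here is a minimal Python sketch of that accumulation, assuming tensors are nested lists, the weights are 2-D and the bias is 1-D. The names `depth`, `dense_node` and `dense_layer` are chosen for illustration; this is not a transcription of the ExAIS code.

```python
def depth(t):
    """Nesting depth of a list-based tensor (a scalar has depth 0)."""
    return 1 + depth(t[0]) if isinstance(t, list) and t else 0


def dense_node(x, weights, bias):
    """Compute y = b + sum_k x[k] * w[k] for a 1-D input x."""
    y = list(bias)
    for x_k, w_k in zip(x, weights):
        # Add the k-th weight row, scaled by the k-th input entry.
        y = [y_j + x_k * w_kj for y_j, w_kj in zip(y, w_k)]
    return y


def dense_layer(x, weights, bias):
    """Apply dense_node to every innermost vector of a nested-list tensor."""
    if depth(x) == 1:
        return dense_node(x, weights, bias)
    return [dense_layer(sub, weights, bias) for sub in x]


W = [[1.0, 0.0, 2.0],
     [0.0, 1.0, 3.0]]   # 2 inputs -> 3 outputs
b = [0.5, 0.5, 0.5]
print(dense_layer([[1.0, 2.0]], W, b))
```

All leading dimensions are handled by the recursion, so only the last axis of the input is contracted with the weights.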

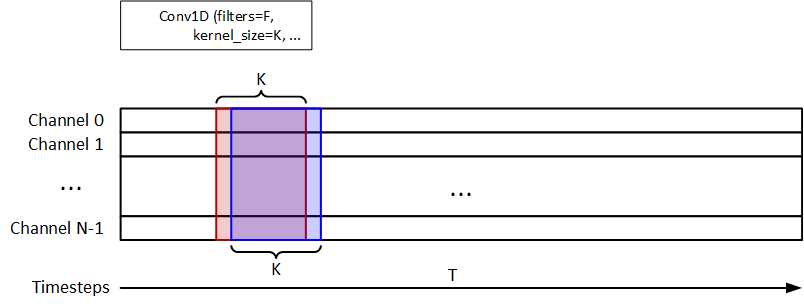

# Def: Conv1D

Definition: Conv1D

- I: (N, L, C)

- W: (K, C, F)

- B: (F,)

- O: (N, L - K + 1, F)

O_{i, j, k} = B_k + \sum_{0 \le j' < K, l} W_{j', l, k} I_{i, j + j', l}

arguments:

- stride: slide by a certain step (default 1)

- padding: add 0s to left and right
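The definition above can be written as a few nested loops. The sketch below is a plain-Python illustration under simplifying assumptions: tensors are nested lists, no padding is applied, and the output length is (L - K) // stride + 1. The function name `conv1d` is illustrative and the code is not taken from ExAIS or TensorFlow.

```python
def conv1d(inputs, weights, bias, stride=1):
    """Conv1D without padding on nested lists.

    inputs:  shape (N, L, C)
    weights: shape (K, C, F)
    bias:    shape (F,)
    returns: shape (N, (L - K) // stride + 1, F)
    """
    k_size = len(weights)
    n_filters = len(bias)
    outputs = []
    for sample in inputs:
        rows = []
        # Slide the kernel window over the sequence axis.
        for start in range(0, len(sample) - k_size + 1, stride):
            row = list(bias)
            for dj in range(k_size):
                for c, value in enumerate(sample[start + dj]):
                    for f in range(n_filters):
                        row[f] += weights[dj][c][f] * value
            rows.append(row)
        outputs.append(rows)
    return outputs


x = [[[1.0], [2.0], [3.0], [4.0]]]   # N=1, L=4, C=1
w = [[[1.0]], [[1.0]]]               # K=2, C=1, F=1
print(conv1d(x, w, [0.0]))            # stride 1: three windows
print(conv1d(x, w, [0.0], stride=2))  # stride 2: two windows
```

With a kernel of ones and zero bias, each output entry is simply the sum of the two inputs in its window.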

conv1D_layer(Is, KernelSize, IWs, Bs, Strides, Padding, Os) :-

check_dimensions(Is, 3),

check_valid_kernel(Is, KernelSize, Padding),

check_valid_weight_shapes(Is, KernelSize, IWs, Bs),

pool1D(sum, Is, KernelSize, Strides, Padding, IWs, Bs, false, Os).

pool1D(Poolfunc, [I|Is], PoolSize, Strides, Padding, IWs, Bs, MultiLayerPool, [O|Os]) :-

pool1D(Poolfunc, I, 0, 0, PoolSize, Strides, Padding, IWs, Bs, MultiLayerPool, [], O),

pool1D(Poolfunc, Is, PoolSize, Strides, Padding, IWs, Bs, MultiLayerPool, Os).

pool1D(_, [], _, _, _, _, _, _, []).

pool1D(Poolfunc, [[I|Is0]|Is], 0, 0, PoolSize, Strides, true, IWs, Bs, MultiLayerPool, [], Os) :-

atomic(I), length([[I|Is0]|Is], L),

calc_padding(L, PoolSize, Strides, LeftP, RightP),

padding1D([[I|Is0]|Is], x, LeftP, RightP, Is1),

pool1D(Poolfunc, Is1, 0, 0, PoolSize, Strides, false, IWs, Bs, MultiLayerPool, [], Os).

pool1D(Poolfunc, [[I|Is0]|Is], X, 0, PoolSize, Strides, false, IWs, Bs, false, Os0, Os) :-

atomic(I), length([[I|Is0]|Is], LX),

get_pool_res1D(Poolfunc, [[I|Is0]|Is], X, Y, PoolSize, Strides, IWs, Bs, false, O),

insert_pool_field(Os0, O, true, X, Y, Strides, Os1),

(X + Strides + PoolSize =< LX -> X1 is X + Strides ; X1 is LX + 1),

pool1D(Poolfunc, [[I|Is0]|Is], X1, 0, PoolSize, Strides, false, IWs, Bs, false, Os1, Os).

pool1D(Poolfunc, [[I|Is0]|Is], X, Y, PoolSize, Strides, Padding, IWs, Bs, true, Os0, Os) :-

atomic(I), length([[I|Is0]|Is], LX),

get_pool_res1D(Poolfunc, [[I|Is0]|Is], X, Y, PoolSize, Strides, IWs, Bs, true, O),

insert_pool_field(Os0, O, true, X, Y, Strides, Os1),

(X + Strides + PoolSize =< LX -> X1 is X + Strides, Y1 is Y ; X1 is 0, Y1 is Y + 1),

pool1D(Poolfunc, [[I|Is0]|Is], X1, Y1, PoolSize, Strides, Padding, IWs, Bs, true, Os1, Os).

pool1D(_, [[I|Is0]|Is], X, Y, _, _, false, _, _, _, Os, Os) :-

atomic(I),

( length([[I|Is0]|Is], LX), X >= LX ;

length([I|Is0], LY), Y >= LY ).

# Semantics: Conv1D

Semantics: Conv1D

\mathbf{O}_{i, j} = \mathbf{B} + \sum_{0 \le j' < K, l} \mathbf{W}_{j', l} I_{i, j + j', l}

# Def: Dropout

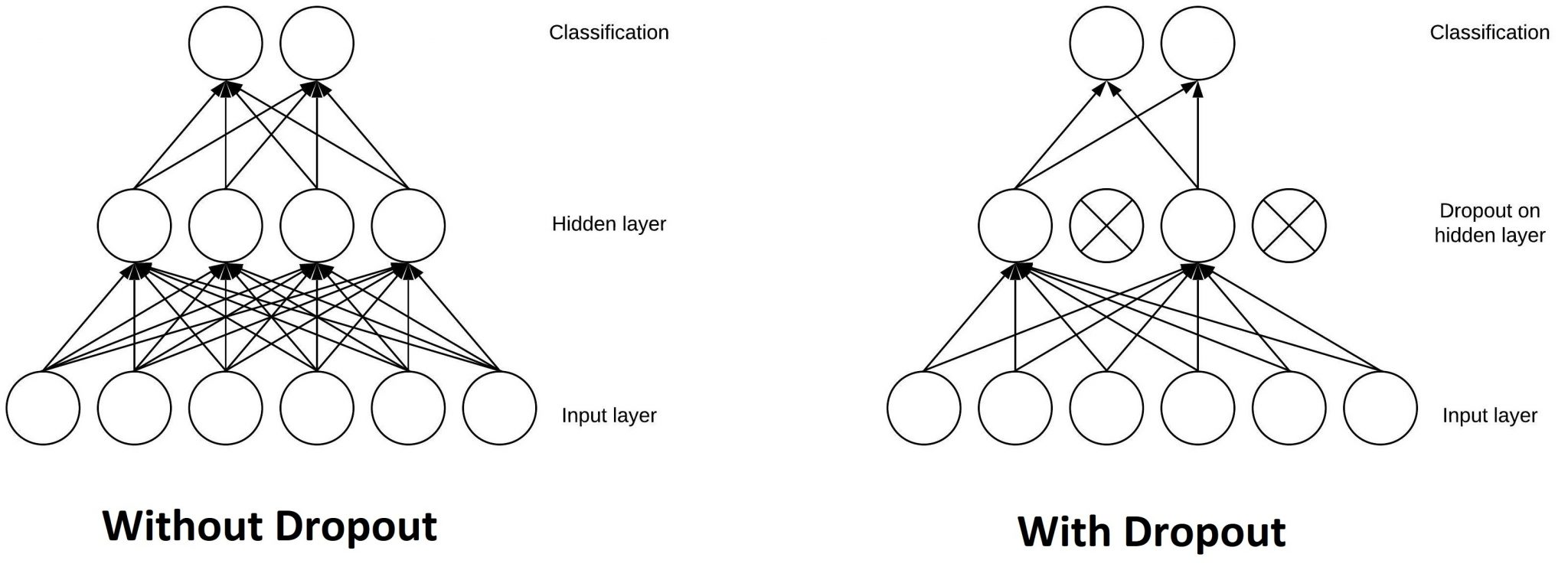

Definition: Dropout

- randomly ignores some nodes with a certain probability

- shown to reduce overfitting when training a large neural network on a small dataset

- only affects training

- characteristic: non-determinism

dropout_layer(Is, Os, Rate, AcceptedRateDiff) :-

count_atoms(Is, N), count_atoms(Os, NO), NO = N,

count_occurrences(Is, 0, NZeroOrig),

count_occurrences(Os, 0, NZeroNew),

RealRate is (NZeroNew - NZeroOrig) / (N - NZeroOrig),

Diff is abs(Rate - RealRate),

( Diff > AcceptedRateDiff ->

( write("Expected Rate: "), writeln(Rate),

write("Actual Rate: "), writeln(RealRate), false )

; true ).

# Semantics: Dropout

Semantics: Dropout

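- Note (from Xingyu): this predicate only checks whether a given output is consistent with the dropout rate; it does not compute an output, so it is not an executable way to handle a non-deterministic layer such as dropout.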
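The property check performed by `dropout_layer` can be restated in Python to make the note concrete: it compares an observed output against the expected rate and never produces an output itself. This is a sketch only. The names `flatten` and `dropout_check` are illustrative, and the guard for an all-zero input is an added assumption that the Prolog rule does not have.

```python
def flatten(t):
    """Yield the scalar entries of a nested-list tensor."""
    if isinstance(t, list):
        for sub in t:
            yield from flatten(sub)
    else:
        yield t


def dropout_check(inputs, outputs, rate, accepted_rate_diff):
    """Return True if the share of newly zeroed entries is close to rate."""
    xs, ys = list(flatten(inputs)), list(flatten(outputs))
    if len(xs) != len(ys):
        return False
    zeros_in = sum(1 for v in xs if v == 0)
    zeros_out = sum(1 for v in ys if v == 0)
    non_zero_in = len(xs) - zeros_in
    if non_zero_in == 0:
        # Assumption of this sketch: nothing can be dropped from an all-zero input.
        return zeros_out == zeros_in
    real_rate = (zeros_out - zeros_in) / non_zero_in
    return abs(rate - real_rate) <= accepted_rate_diff


observed = [0.0, 4.0, 0.0, 8.0]  # two of four entries were dropped
print(dropout_check([1.0, 2.0, 3.0, 4.0], observed, 0.5, 0.1))
print(dropout_check([1.0, 2.0, 3.0, 4.0], observed, 0.1, 0.1))
```

The first call accepts the output because the observed rate is exactly 0.5; the second rejects it because 0.5 is too far from the expected 0.1.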

# Recap

Recap

- Identified 79 unique non-abstract, non-wrapper layers.

- Implemented 72 of them.

- The remaining 7 layers take inputs that are too generic or flexible, such as functions or other layers.

- 7 layers are non-deterministic; for these, the semantics only checks that the described properties hold.

- The effort was 8 work months.

- The major advantage of Prolog is that it is declarative and high-level, which enables a compact and straightforward implementation.

- 3.2k lines of semantics vs. 3M lines of source

- Only 7 layers had detailed descriptions in the documentation; 44 were explained with examples or a reference paper; the remaining 21 were under-specified or had very few examples.

Applications

- Testing

- Model Validation

- Evaluation

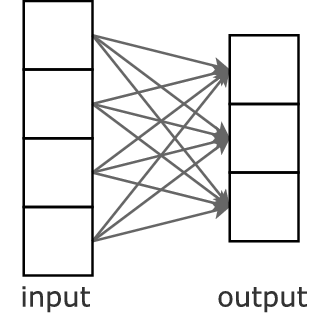

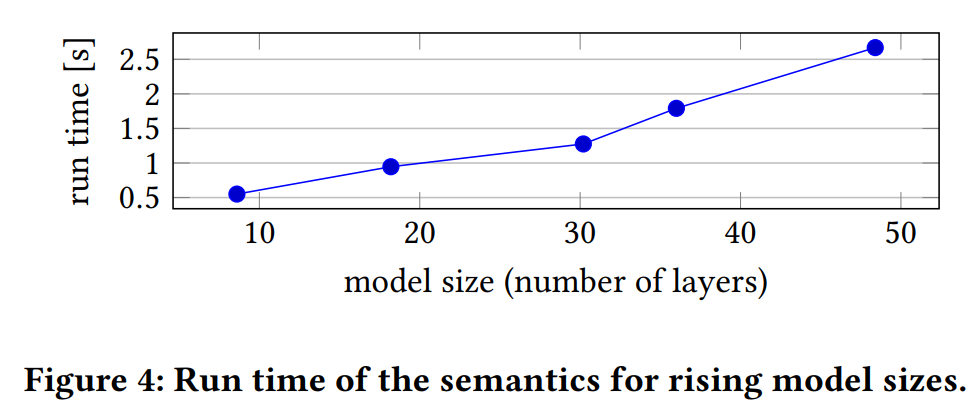

# Testing

Testing Approach

- A fuzzing method that uses feedback from our semantics

- The test case generator produces test data in the form of input tensors and a deep learning model

Model Validation

A = max_pool1D_layer([[[1.313,1.02],[1.45,1.92]]], 1, 1, false, Max),

B = conv1D_layer([[[0.9421,0.7879],[0.809,0.855]]], 1, [[[0.572,0.621],[0.5388,0.5741]]], [0,0], 1, false, 1, Con),

C = cropping1D_layer(Con, 5, 5, Cro),

D = concatenate_layer([Max,Cro], 1, Con1),

exec_layers([A,B,C,D], ["Max","Con","Cro","Con1"], Con1, "Con1")

# Model Validation

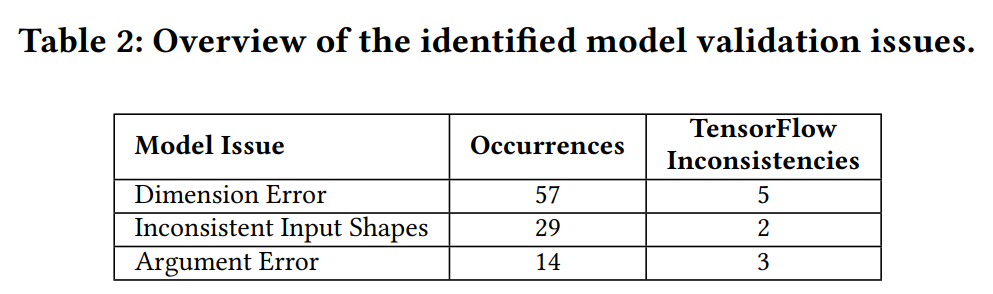

- Check whether a model is valid, i.e. executable: detect errors such as wrong shapes of parameters or input data, or invalid arguments.

- AI libraries already produce error messages for such issues. However, the messages can be difficult to understand, and in rare cases no error is reported at all.

- By converting a TensorFlow model into our Prolog representation, we can validate the model.
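Validation of this kind can be pictured as propagating shapes through the layers and stopping at the first violated precondition with a readable message. The Python sketch below illustrates the idea only. The function names, the message wording and the rule that cropping must leave at least one step are assumptions of the sketch, not the ExAIS checks.

```python
def conv1d_shape(shape, kernel_size, filters, stride=1):
    """Output shape of an unpadded Conv1D, or ValueError if the kernel does not fit."""
    n, length, _ = shape
    if kernel_size > length:
        raise ValueError(
            f"conv1D: kernel size {kernel_size} exceeds input length {length}")
    return (n, (length - kernel_size) // stride + 1, filters)


def cropping1d_shape(shape, left, right):
    """Output shape of Cropping1D, or ValueError if nothing would be left."""
    n, length, channels = shape
    if left + right >= length:
        raise ValueError(
            f"cropping1D: cannot crop {left}+{right} steps from length {length}")
    return (n, length - left - right, channels)


def validate(shape, layers):
    """Return (True, final_shape) or (False, message) for a chain of layers."""
    for layer in layers:
        try:
            shape = layer(shape)
        except ValueError as err:
            return False, str(err)
    return True, shape


# A length-2 input that is convolved and then cropped by 5 steps on each side.
model = [lambda s: conv1d_shape(s, 1, 2), lambda s: cropping1d_shape(s, 5, 5)]
print(validate((1, 2, 2), model))
```

The message names the failing layer and the offending arguments, which is the kind of feedback the slides contrast with hard-to-read library errors.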

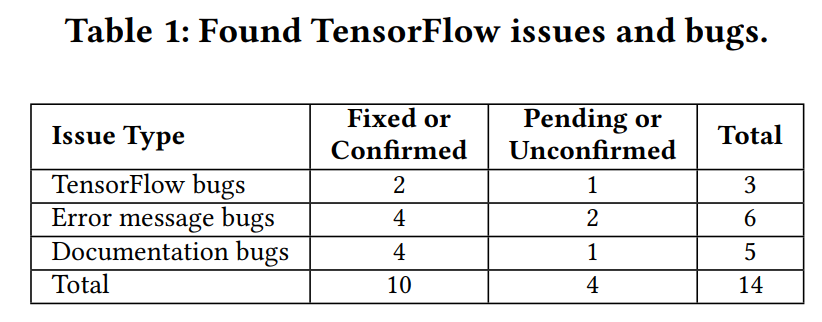

- In the evaluation, invalid models produced by the test generator are used to look for bugs in TensorFlow.

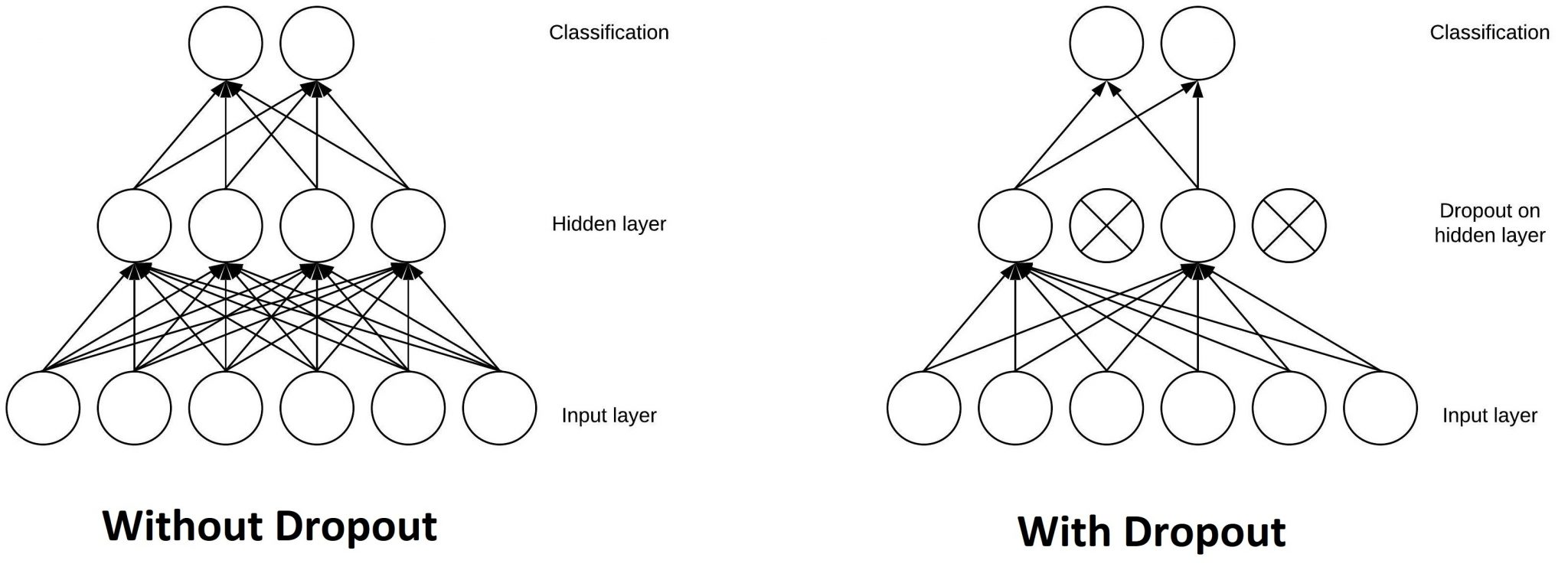

Evaluation

10,000 test cases generated in 35 h

100 invalid models generated

Conclusion

- TensorFlow semantics in Prolog

  - deterministic behavior

- Testing

  - some issues were found

- https://github.com/rschumi0/ExAIS