Big Data & Storm

A Tiny Session

What is big data?

Volume

- Pooling, allocating and coordinating resources from a group of computers is a challenge.

- Cluster management and algorithms capable of breaking tasks into smaller pieces become increasingly important.

Velocity

- The speed at which information moves through the system matters to the business.

- Data is constantly being added, massaged, processed and analyzed in order to keep up and surface the valuable information early.

Variety

- Data comes from applications, system logs, social media feeds and other sources, with different durations and frequencies.


Activities of Big Data

- Ingesting data into the system

- Persisting the data in storage

- Computing and analyzing data

- Visualizing the results

Let's talk about Splunk?


Computing and Analyzing

Batch Processing

- Deals with very large datasets that require quite a bit of computation

- Often breaks the work into individual steps such as splitting, mapping, shuffling and reducing

- Do we have a batch processing system?

Stream Processing

- Real-time processing that operates on a continuous stream of data

- Sometimes works on the data in the cluster's memory to avoid writing back to disk

- Do we have a stream processing system?
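To make the contrast concrete, here is a minimal sketch in plain Python (no framework involved). The function names and the sample lines are made up for illustration. The batch version sees the whole dataset and walks through split, map, shuffle and reduce; the stream version keeps counts in memory and updates them one item at a time.

```python
from collections import Counter, defaultdict


def batch_word_count(lines):
    """Batch style: the whole dataset is available up front."""
    # Split + map: turn every line into (word, 1) pairs.
    mapped = [(word, 1) for line in lines for word in line.split()]
    # Shuffle: bring all pairs with the same key together.
    shuffled = defaultdict(list)
    for word, one in mapped:
        shuffled[word].append(one)
    # Reduce: collapse each group into a single total.
    return {word: sum(ones) for word, ones in shuffled.items()}


def stream_word_count(line_stream):
    """Stream style: update in-memory state per item, yield a snapshot each time."""
    counts = Counter()
    for line in line_stream:
        counts.update(line.split())
        yield dict(counts)


# Hypothetical sample input.
lines = ["the cow jumped", "the moon"]
batch_result = batch_word_count(lines)
stream_snapshots = list(stream_word_count(iter(lines)))
```

The batch call returns one answer at the end, while the stream version has a usable (partial) answer after every item; the last snapshot matches the batch result.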


Frameworks

- Batch-only frameworks:

  - Apache Hadoop

- Stream-only frameworks:

  - Apache Storm

  - Apache Samza

- Hybrid frameworks:

  - Apache Spark

  - Apache Flink

https://www.digitalocean.com/community/tutorials/hadoop-storm-samza-spark-and-flink-big-data-frameworks-compared

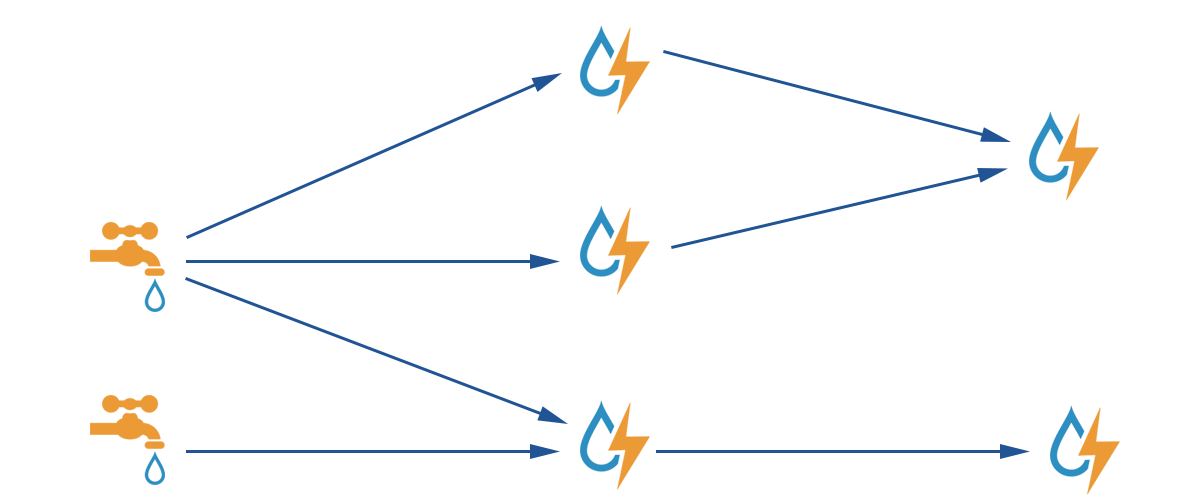

Apache Storm

spout

bolt

tuple

Topology


We are going to build...

Spout (sentences) -> Bolt (split) -> Bolt (count words) -> Bolt (report)
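Before touching the real Storm API, the shape of this topology can be sketched with plain Python generators. This is only a single-process analogy, not Storm code: the function names and the sample sentences are made up, and there is no parallelism or networking here.

```python
from collections import Counter


def sentence_spout(sentences):
    """Spout: the source of the stream, emits one sentence tuple at a time."""
    for sentence in sentences:
        yield (sentence,)


def split_bolt(tuples):
    """Bolt: splits each sentence tuple into one tuple per word."""
    for (sentence,) in tuples:
        for word in sentence.split():
            yield (word,)


def count_bolt(tuples):
    """Bolt: keeps a running count and emits (word, count) on every update."""
    counts = Counter()
    for (word,) in tuples:
        counts[word] += 1
        yield (word, counts[word])


def report_bolt(tuples):
    """Bolt: remembers the latest count seen for each word."""
    latest = {}
    for word, count in tuples:
        latest[word] = count
    return latest


# Hypothetical sample input.
SENTENCES = ["my dog has fleas", "my dog ate homework"]
report = report_bolt(count_bolt(split_bolt(sentence_spout(SENTENCES))))
```

Each stage only knows about the tuples flowing into it, which is the property that lets Storm run many copies of a bolt in parallel.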


Concurrency Mechanism

Nodes: The servers in the cluster; each one executes part of the topology's work.

Workers: JVM processes running on a node; each node can run one or more workers.

Executor: A thread inside a worker that runs tasks; by default, Storm assigns one task to each executor.

Task: An instance of a spout or bolt; the executor calls its nextTuple() or execute() method.
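The nesting of tasks inside executors inside workers can be pictured with a toy model. This is a sketch only: the round-robin placement and the names `assign_round_robin` and `layout` are assumptions made for illustration, not Storm's actual scheduler.

```python
def assign_round_robin(items, bucket_count):
    """Spread items over bucket_count buckets, one at a time in turn."""
    buckets = [[] for _ in range(bucket_count)]
    for i, item in enumerate(items):
        buckets[i % bucket_count].append(item)
    return buckets


def layout(component, num_tasks, num_executors, num_workers):
    """Return workers -> executors -> tasks as nested lists (toy model)."""
    tasks = [f"{component}-task-{i}" for i in range(num_tasks)]
    executors = assign_round_robin(tasks, num_executors)   # threads
    workers = assign_round_robin(executors, num_workers)   # JVM processes
    return workers


# Hypothetical settings: 4 tasks of a "split" bolt, 2 executors, 2 workers.
plan = layout("split", num_tasks=4, num_executors=2, num_workers=2)
```

With these numbers each worker holds one executor and each executor runs two tasks; with as many executors as tasks, the model collapses to the default of one task per executor.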

Grouping

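Shuffle: tuples are distributed randomly across the bolt's tasks

Fields: tuples with the same values in the chosen fields go to the same task

All: every tuple is copied to all of the bolt's tasks

Global: all tuples go to a single task; use with care, since that task can become a bottleneck

Direct: the emitter declares which task receives each tuple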
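The groupings above boil down to one question: given a tuple, which task indexes receive it? The following sketch answers it in plain Python. It is not Storm's implementation; the function names are made up, and CRC32 is used as the hash only because it gives the same answer on every run.

```python
import random
import zlib


def shuffle_grouping(tup, num_tasks, rng):
    """Shuffle: pick any task at random."""
    return [rng.randrange(num_tasks)]


def fields_grouping(tup, num_tasks, field_index=0):
    """Fields: hash the chosen field so equal values land on the same task."""
    key = str(tup[field_index]).encode("utf-8")
    return [zlib.crc32(key) % num_tasks]


def all_grouping(tup, num_tasks):
    """All: every task gets a copy."""
    return list(range(num_tasks))


def global_grouping(tup, num_tasks):
    """Global: everything goes to one task (here, the lowest index)."""
    return [0]


def direct_grouping(tup, num_tasks, target_task):
    """Direct: the emitter names the receiving task."""
    return [target_task]


rng = random.Random(7)  # seeded only to keep the sketch repeatable
shuffle_target = shuffle_grouping(("storm", 1), 4, rng)
same_word_targets = (fields_grouping(("storm", 1), 4),
                     fields_grouping(("storm", 99), 4))
```

Fields grouping is what makes the word-count bolt correct when it runs as several tasks: every occurrence of a given word reaches the same counter.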


Reliability

nextTuple()

ack()

fail()

At-least-once or exactly-once processing? RTFM (read the friendly manual)

http://storm.apache.org/releases/1.1.0/Guaranteeing-message-processing.html
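The at-least-once idea can be sketched without Storm. In the toy below, a spout remembers every emitted message until it is acked and re-queues it when it fails. The class `ReplayingSpout`, the `run` driver and the sample messages are all hypothetical; in real Storm the framework calls ack() and fail() on the spout, and replaying is up to the spout implementation.

```python
from collections import deque


class ReplayingSpout:
    """Toy spout: keeps emitted messages pending until they are acked."""

    def __init__(self, messages):
        self.queue = deque(enumerate(messages))  # (msg_id, payload)
        self.pending = {}

    def next_tuple(self):
        if not self.queue:
            return None
        msg_id, payload = self.queue.popleft()
        self.pending[msg_id] = payload
        return msg_id, payload

    def ack(self, msg_id):
        # Fully processed: safe to forget.
        self.pending.pop(msg_id, None)

    def fail(self, msg_id):
        # Not fully processed: put it back so it is emitted again.
        self.queue.append((msg_id, self.pending.pop(msg_id)))


def run(spout, flaky_ids):
    """Process everything; ids in flaky_ids fail on their first attempt."""
    processed, failed_once = [], set()
    while (emitted := spout.next_tuple()) is not None:
        msg_id, payload = emitted
        processed.append(payload)  # the side effect happens before the failure
        if msg_id in flaky_ids and msg_id not in failed_once:
            failed_once.add(msg_id)
            spout.fail(msg_id)
        else:
            spout.ack(msg_id)
    return processed


spout = ReplayingSpout(["a", "b", "c"])
processed = run(spout, flaky_ids={1})
```

Nothing is lost, but "b" is processed twice. That duplicate is the gap between at-least-once and exactly-once.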

More - Trident

Trident is a high-level abstraction for doing realtime computing on top of Storm: transactions and state


More - Distributed RPC

DRPC parallelizes the computation of really intense functions on the fly using Storm: request-response through a topology
