Safety and Efficiency for Autonomous Vehicles through Online Learning

Zachary Sunberg

Postdoctoral Scholar

University of California

How do we deploy autonomy with confidence?

Safety and Efficiency through Online Learning

Waymo Image By Dllu - Own work, CC BY-SA 4.0, https://commons.wikimedia.org/w/index.php?curid=64517567

Safety and efficiency through planning with drivers' internal states

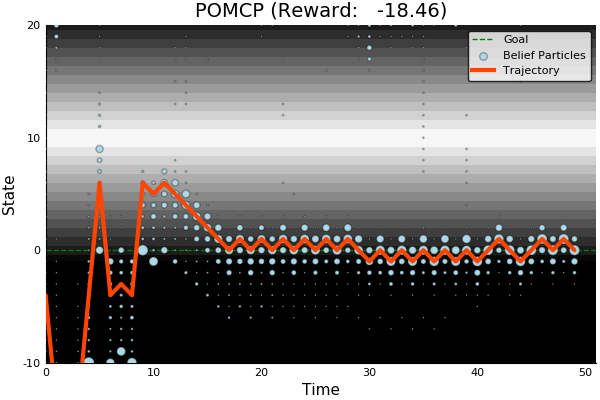

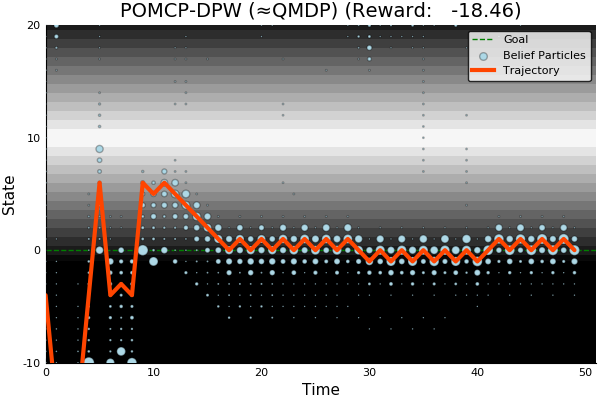

Pioneering online algorithm for POMDPs with continuous observation spaces

POMDP software with first-class speed and unprecedented flexibility

Driving

POMCPOW

POMDPs.jl

POMDPs

UAV Collision Avoidance

Space Situational Awareness

Dual Control

Autorotation

Successful flight test of automatic controller

Future

Autorotation

Driving

POMDPs

POMCPOW

POMDPs.jl

Future

Autorotation

"Expert System" Autorotation Controller

Example Controller: Flare

\[\alpha \equiv \frac{KE_{\text{available}} - KE_{\text{flare exit}}}{KE_{\text{flare entry}} - KE_{\text{flare exit}}}\]

\[TTLE = TTLE_{max} \times \alpha\]

\[\ddot{h}_{des} = -\frac{2}{TTI_F^2}h - \frac{2}{TTI_F}\dot{h}\]

[Sunberg, 2015]
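The three flare equations can be evaluated directly. The following is a minimal Python sketch, not the flight code from [Sunberg, 2015]; the function names and all numeric values are assumptions chosen for illustration. It also checks one property that follows from the third equation: if the commanded acceleration were held constant, the height would reach zero exactly at time \(TTI_F\).

```python
def flare_alpha(ke_available, ke_entry, ke_exit):
    """Fraction of the flare's kinetic-energy budget still available (alpha)."""
    return (ke_available - ke_exit) / (ke_entry - ke_exit)


def ttle(ttle_max, alpha):
    """TTLE scaled linearly by alpha, as in the second equation."""
    return ttle_max * alpha


def desired_vertical_accel(h, h_dot, tti_f):
    """Desired vertical acceleration from height h and descent rate h_dot."""
    return -2.0 / tti_f**2 * h - 2.0 / tti_f * h_dot


# Hypothetical numbers, for illustration only.
alpha = flare_alpha(ke_available=75.0, ke_entry=100.0, ke_exit=50.0)
h, h_dot, tti_f = 20.0, -8.0, 4.0
a_des = desired_vertical_accel(h, h_dot, tti_f)

# Constant-acceleration kinematics: height after tti_f seconds.
h_at_tti = h + h_dot * tti_f + 0.5 * a_des * tti_f**2
print(alpha, a_des, h_at_tti)
```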

Autorotation Simulation Results

[Sunberg, 2015]

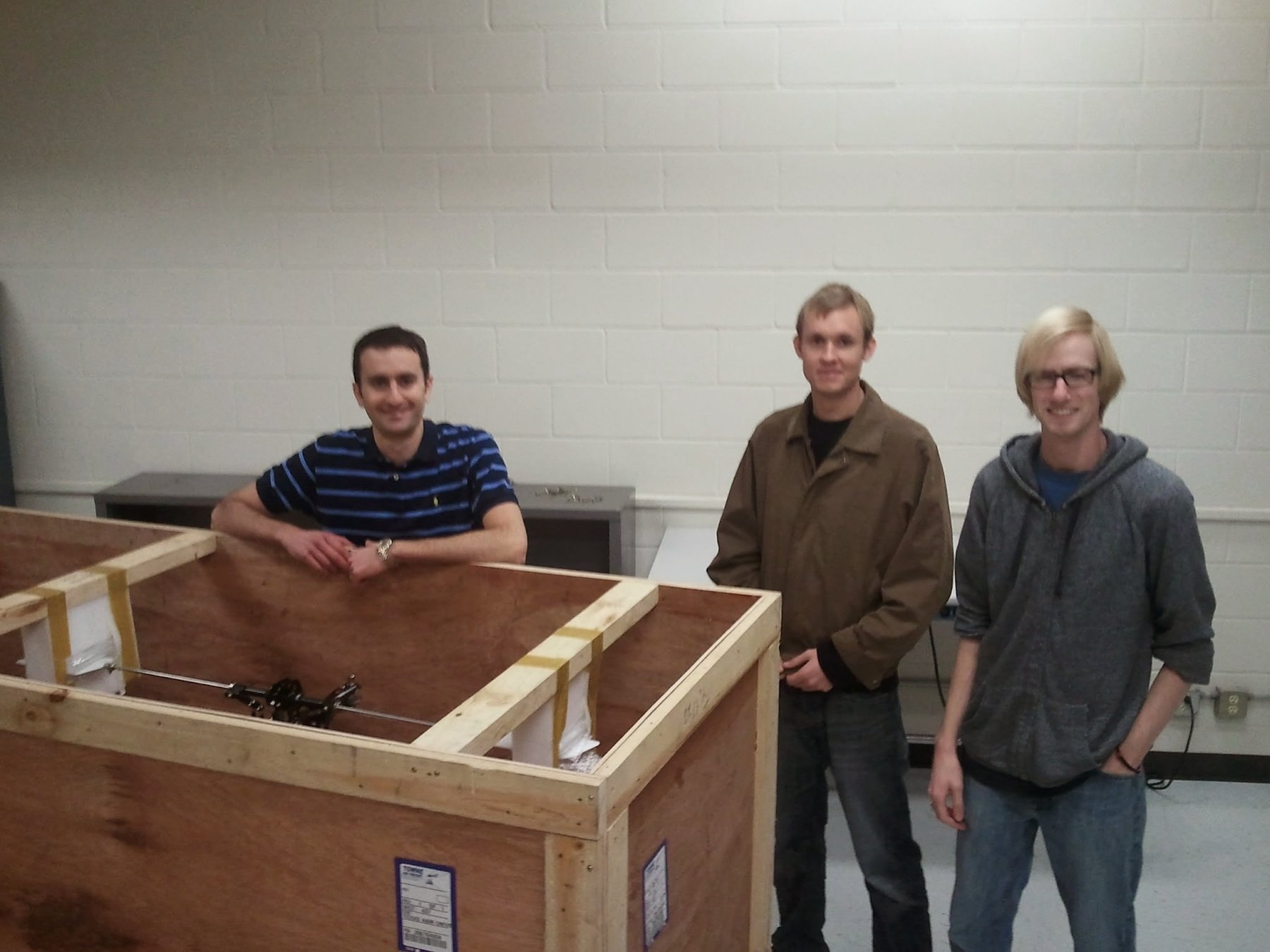

Flight Tests

[Sunberg, 2015]

"Expert System" Autorotation Controller

Problems with this approach:

- No optimality justification

- Controller must be adjusted by hand to accommodate any changes

- Difficult to communicate about

- Parameters not intuitive

[Sunberg, 2015]

Tweet by Nitin Gupta

29 April 2018

https://twitter.com/nitguptaa/status/990683818825736192

Two Objectives for Autonomy

EFFICIENCY

SAFETY

Minimize resource use

(especially time)

Minimize the risk of harm to oneself and others

Safety often opposes Efficiency

Pareto Optimization

Safety

Better Performance

Model \(M_2\), Algorithm \(A_2\)

Model \(M_1\), Algorithm \(A_1\)

Efficiency

$$\underset{\pi}{\mathop{\text{maximize}}} \, V^\pi = V^\pi_\text{E} + \lambda V^\pi_\text{S}$$

Safety

Weight

Efficiency
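One way to read the weighted objective: each value of \(\lambda\) selects one point on the Pareto front, and sweeping \(\lambda\) traces the front out. The sketch below assumes a small, made-up set of candidate policies with known \((V_\text{E}, V_\text{S})\) pairs; the policy names and numbers are hypothetical.

```python
# Hypothetical candidate policies: name -> (V_E, V_S).
candidates = {
    "aggressive": (10.0, -4.0),
    "balanced": (8.0, -1.0),
    "cautious": (5.0, -0.2),
    "dominated": (6.0, -3.0),  # worse than "balanced" on both objectives
}


def best_policy(lam):
    """Return the candidate maximizing V_E + lam * V_S."""
    return max(candidates, key=lambda n: candidates[n][0] + lam * candidates[n][1])


# Sweep the safety weight; each value picks one Pareto-optimal candidate.
sweep = {lam: best_policy(lam) for lam in (0.0, 1.0, 10.0)}
print(sweep)
```

A policy that is worse on both objectives is never selected for any \(\lambda \ge 0\), which is why a better model or algorithm shows up as a shift of the whole curve rather than of a single point.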

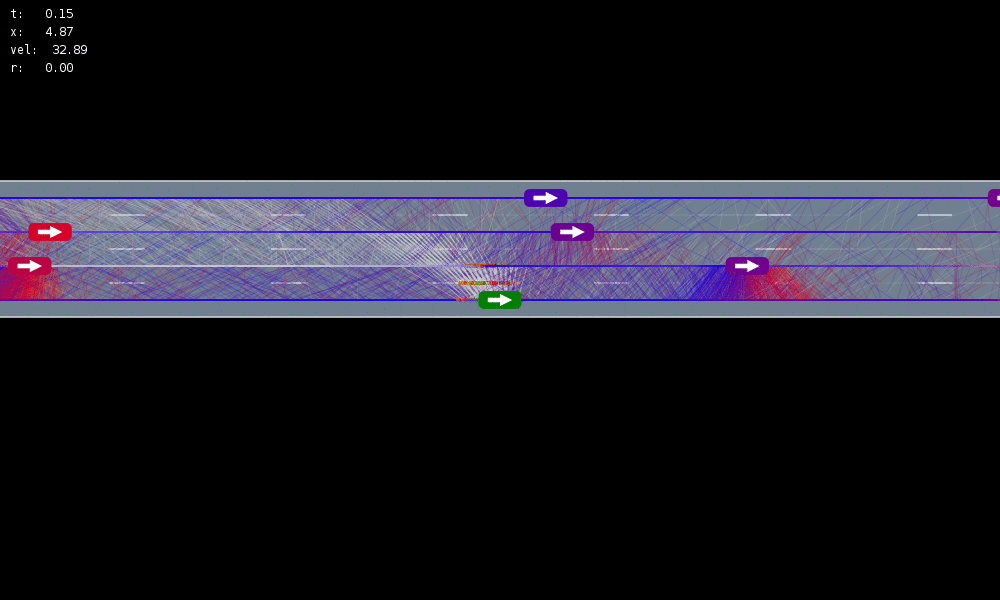

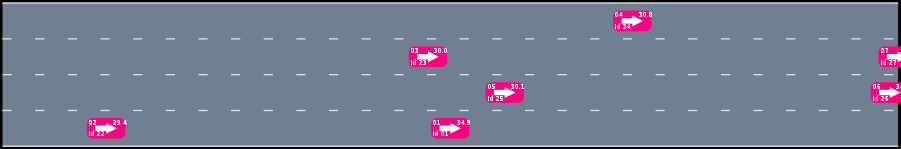

Intelligent Driver Model (IDM)

[Treiber, et al., 2000] [Kesting, et al., 2007] [Kesting, et al., 2009]
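For reference, the IDM car-following law in its commonly cited form is \(\dot{v} = a\left[1 - (v/v_0)^\delta - (s^*/s)^2\right]\) with \(s^* = s_0 + vT + v\,\Delta v / (2\sqrt{ab})\). The sketch below implements that form; the default parameter values are illustrative assumptions, not the values used in the experiments.

```python
import math


def idm_acceleration(v, gap, dv, v0=30.0, T=1.5, a_max=1.4, b=2.0, s0=2.0, delta=4.0):
    """Intelligent Driver Model acceleration.

    v: own speed (m/s), gap: distance to the leader (m),
    dv: approach rate v - v_leader (m/s).
    v0: desired speed, T: desired time headway, a_max: max acceleration,
    b: comfortable deceleration, s0: minimum gap. Defaults are assumed values.
    """
    s_star = s0 + v * T + v * dv / (2.0 * math.sqrt(a_max * b))
    return a_max * (1.0 - (v / v0) ** delta - (s_star / gap) ** 2)


print(idm_acceleration(v=0.0, gap=1e9, dv=0.0))    # free road from standstill
print(idm_acceleration(v=20.0, gap=10.0, dv=5.0))  # closing fast on a near leader
```

Parameters such as `v0` and `T` differ between drivers and cannot be observed directly, which makes them natural candidates for the internal states a planner has to infer.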

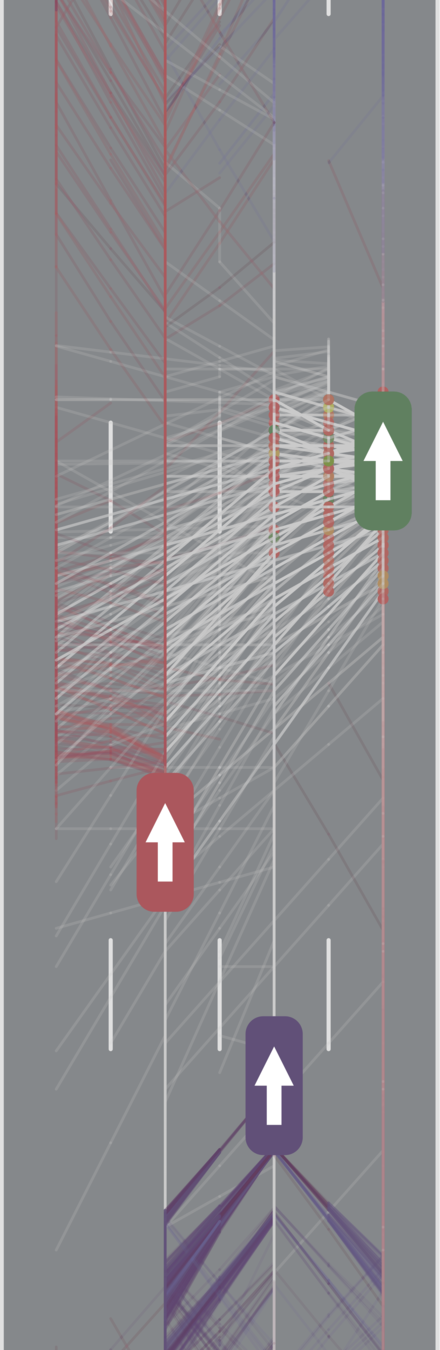

Internal States

All drivers normal

No learning (MDP)

Omniscient

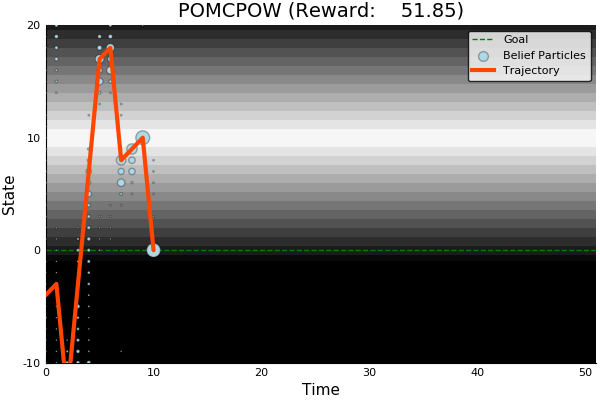

POMCPOW (Ours)

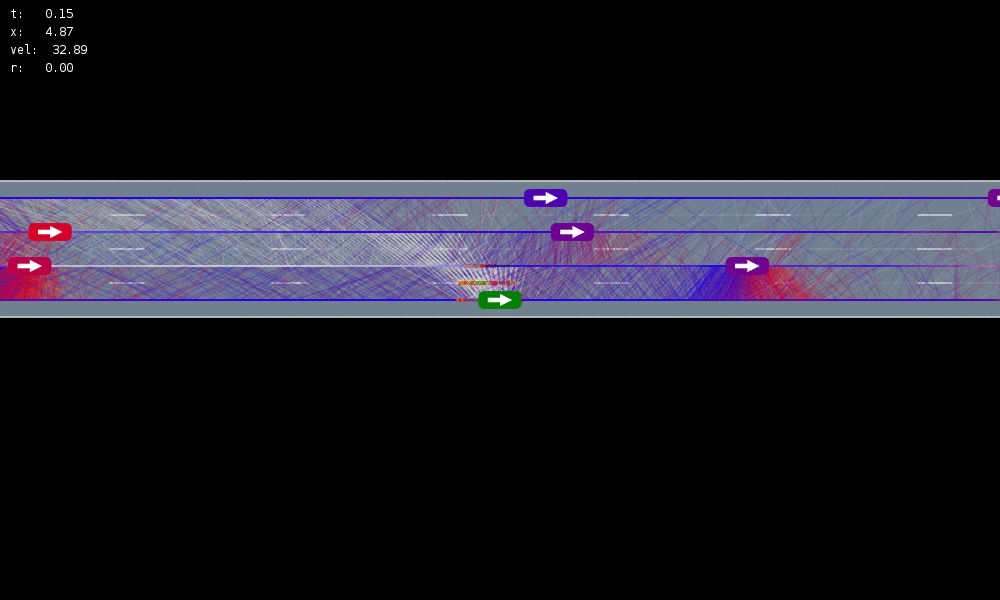

Simulation results

[Sunberg, 2017]

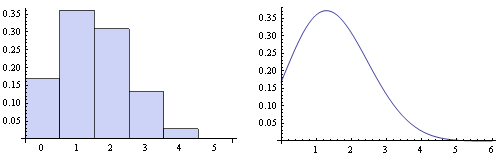

Marginal Distribution: Uniform

\(\rho=0\)

\(\rho=1\)

\(\rho=0.75\)

Internal parameter distributions

Conditional Distribution: Copula

Assume normal

No Learning (MDP)

Omniscient

Mean MPC

QMDP

POMCPOW (Ours)

[Sunberg, 2017]

Types of Uncertainty

OUTCOME

MODEL

STATE

Markov Model

- \(\mathcal{S}\) - State space

- \(T:\mathcal{S}\times\mathcal{S} \to \mathbb{R}\) - Transition probability distributions

Markov Decision Process (MDP)

- \(\mathcal{S}\) - State space

- \(T:\mathcal{S}\times \mathcal{A} \times\mathcal{S} \to \mathbb{R}\) - Transition probability distribution

- \(\mathcal{A}\) - Action space

- \(R:\mathcal{S}\times \mathcal{A} \to \mathbb{R}\) - Reward

Solving MDPs - The Value Function

$$\underset{\pi:\, \mathcal{S}\to\mathcal{A}}{\mathop{\text{maximize}}} \, V^\pi(s) = E\left[\sum_{t=0}^{\infty} \gamma^t R(s_t, \pi(s_t)) \bigm| s_0 = s \right]$$

Involves all future time

$$V^*(s) = \underset{a\in\mathcal{A}}{\max} \left\{R(s, a) + \gamma E\Big[V^*\left(s_{t+1}\right) \mid s_t=s, a_t=a\Big]\right\}$$

Involves only \(t\) and \(t+1\)

$$Q(s,a) = R(s, a) + \gamma E\Big[V^* (s_{t+1}) \mid s_t = s, a_t=a\Big]$$

Value = expected sum of future rewards
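The Bellman equation can be solved by repeated backups (value iteration). The sketch below runs it on a made-up two-state MDP; the states, actions, transition probabilities, and rewards are all assumptions for illustration.

```python
GAMMA = 0.9
STATES = (0, 1)
ACTIONS = ("stay", "move")

# T[s][a] is a list of (next_state, probability); R[s][a] is the reward.
T = {
    0: {"stay": [(0, 1.0)], "move": [(1, 0.8), (0, 0.2)]},
    1: {"stay": [(1, 1.0)], "move": [(0, 1.0)]},
}
R = {0: {"stay": 0.0, "move": -1.0}, 1: {"stay": 2.0, "move": 0.0}}


def q_value(V, s, a):
    """Q(s, a) = R(s, a) + gamma * E[V(s')]."""
    return R[s][a] + GAMMA * sum(p * V[sp] for sp, p in T[s][a])


def value_iteration(tol=1e-10):
    """Apply Bellman backups until the value function stops changing."""
    V = {s: 0.0 for s in STATES}
    while True:
        V_new = {s: max(q_value(V, s, a) for a in ACTIONS) for s in STATES}
        if max(abs(V_new[s] - V[s]) for s in STATES) < tol:
            return V_new
        V = V_new


V_star = value_iteration()
policy = {s: max(ACTIONS, key=lambda a: q_value(V_star, s, a)) for s in STATES}
print(V_star, policy)
```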

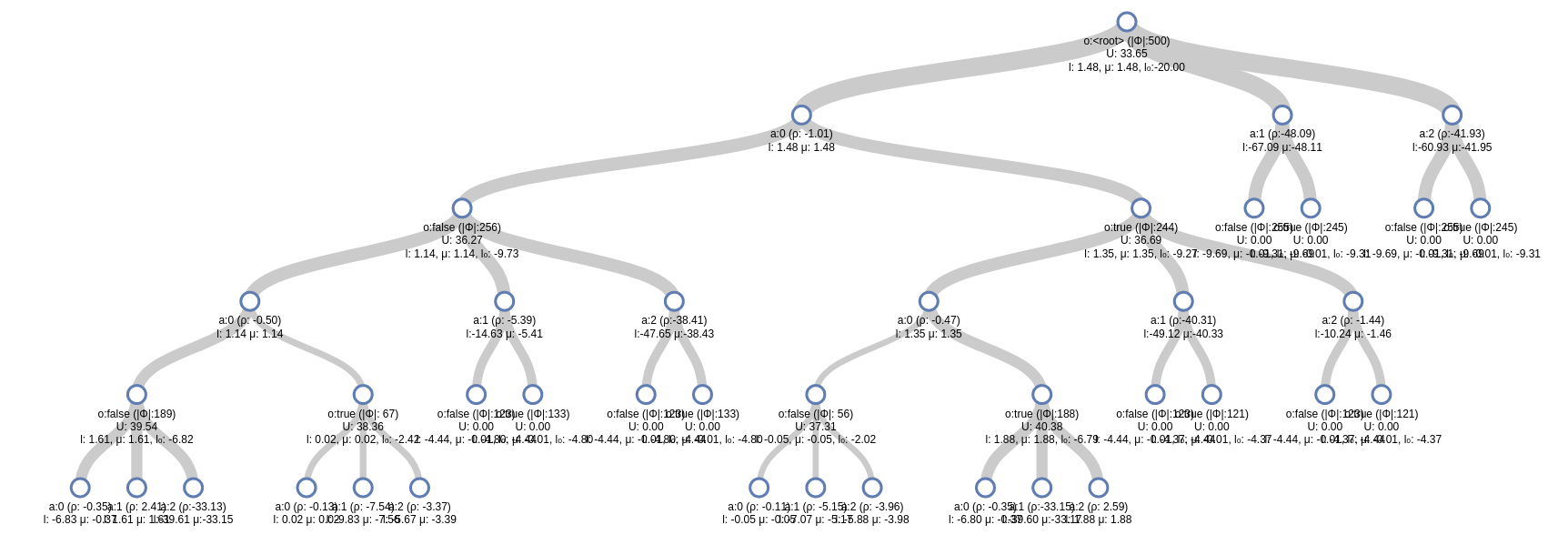

Online Decision Process Tree Approaches

Time

Estimate \(Q(s, a)\) based on children

$$Q(s,a) = R(s, a) + \gamma E\Big[V^* (s_{t+1}) \mid s_t = s, a_t=a\Big]$$

\[V(s) = \max_a Q(s,a)\]

Partially Observable Markov Decision Process (POMDP)

- \(\mathcal{S}\) - State space

- \(T:\mathcal{S}\times \mathcal{A} \times\mathcal{S} \to \mathbb{R}\) - Transition probability distribution

- \(\mathcal{A}\) - Action space

- \(R:\mathcal{S}\times \mathcal{A} \to \mathbb{R}\) - Reward

- \(\mathcal{O}\) - Observation space

- \(Z:\mathcal{S} \times \mathcal{A}\times \mathcal{S} \times \mathcal{O} \to \mathbb{R}\) - Observation probability distribution

State

Timestep

Accurate Observations

Goal: \(a=0\) at \(s=0\)

Optimal Policy

Localize

\(a=0\)

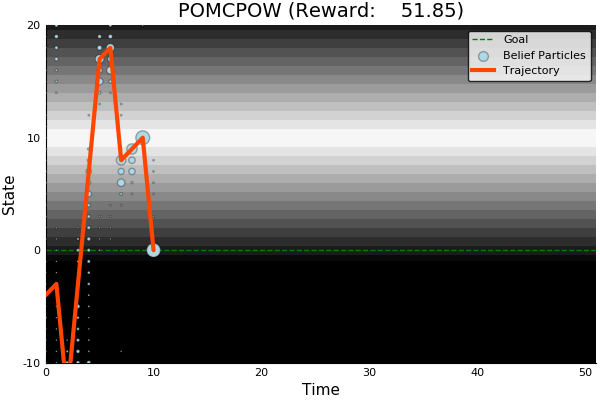

POMDP Example: Light-Dark

POMDP Sense-Plan-Act Loop

Environment

Belief Updater

Policy

\(b\)

\(a\)

\[b_t(s) = P\left(s_t = s \mid a_1, o_1 \ldots a_{t-1}, o_{t-1}\right)\]
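For a discrete problem the belief can be maintained recursively with Bayes' rule, \(b'(s') \propto Z(o \mid a, s') \sum_s T(s' \mid s, a)\, b(s)\). The sketch below is a minimal version; the two-state example and its 85% observation accuracy are assumptions, and the observation model is simplified so that it depends only on the action and the new state.

```python
def update_belief(b, a, o, T, Z):
    """Exact discrete Bayes filter.

    b: dict mapping state -> probability
    T(s, a, sp): transition probability, Z(a, sp, o): observation probability
    """
    unnormalized = {}
    for sp in b:
        predicted = sum(T(s, a, sp) * b[s] for s in b)
        unnormalized[sp] = Z(a, sp, o) * predicted
    total = sum(unnormalized.values())
    if total == 0.0:
        raise ValueError("observation has zero probability under this belief")
    return {sp: w / total for sp, w in unnormalized.items()}


# Hypothetical static two-state problem with a noisy sensor.
def T(s, a, sp):
    return 1.0 if s == sp else 0.0


def Z(a, sp, o):
    return 0.85 if o == sp else 0.15


b0 = {"left": 0.5, "right": 0.5}
b1 = update_belief(b0, "listen", "left", T, Z)
b2 = update_belief(b1, "listen", "left", T, Z)
print(b1, b2)
```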

True State

\(s = 7\)

Observation \(o = -0.21\)

Environment

Belief Updater

Policy

\(a\)

\(b = \mathcal{N}(\hat{s}, \Sigma)\)

True State

\(s \in \mathbb{R}^n\)

Observation \(o \sim \mathcal{N}(C s, V)\)

LQG Problem (a simple POMDP)

\(s_{t+1} \sim \mathcal{N}(A s_t + B a_t, W)\)

\(\pi(b) = K \hat{s}\)

Kalman Filter

\(R(s, a) = - s^T Q s - a^T R a\)
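In the LQG case the belief updater is a Kalman filter and the policy acts on the state estimate alone. The sketch below shows one filter step for a scalar system; the system constants and the feedback gain are assumed values, and the gain is simply given rather than computed from a Riccati equation.

```python
def kalman_step(s_hat, P, a, o, A, B, C, W, V):
    """One predict/update cycle of a scalar Kalman filter.

    s_hat, P: prior mean and variance; a: action taken; o: observation received.
    A, B, C: system constants; W, V: process and observation noise variances.
    """
    # Predict through the dynamics s' ~ N(A s + B a, W).
    s_pred = A * s_hat + B * a
    P_pred = A * P * A + W
    # Correct with the observation o ~ N(C s, V).
    kalman_gain = P_pred * C / (C * P_pred * C + V)
    s_new = s_pred + kalman_gain * (o - C * s_pred)
    P_new = (1.0 - kalman_gain * C) * P_pred
    return s_new, P_new


# Hypothetical scalar system and feedback gain.
A, B, C, W, V = 1.0, 1.0, 1.0, 0.1, 0.5
feedback_gain = -0.5          # plays the role of K in pi(b) = K * s_hat
s_hat, P = 0.0, 1.0

a = feedback_gain * s_hat     # policy uses only the estimate
s_hat, P = kalman_step(s_hat, P, a, o=1.0, A=A, B=B, C=C, W=W, V=V)
print(s_hat, P)
```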

Real World POMDPs

1) ACAS

2) Orbital Object Tracking

3) Dual Control

4) Medical

ACAS X

Trusted UAV

Collision Avoidance

[Sunberg, 2016]

[Kochenderfer, 2011]

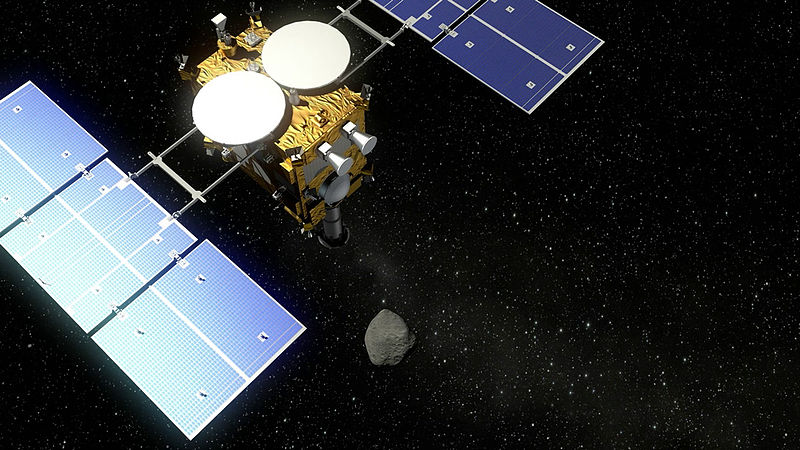

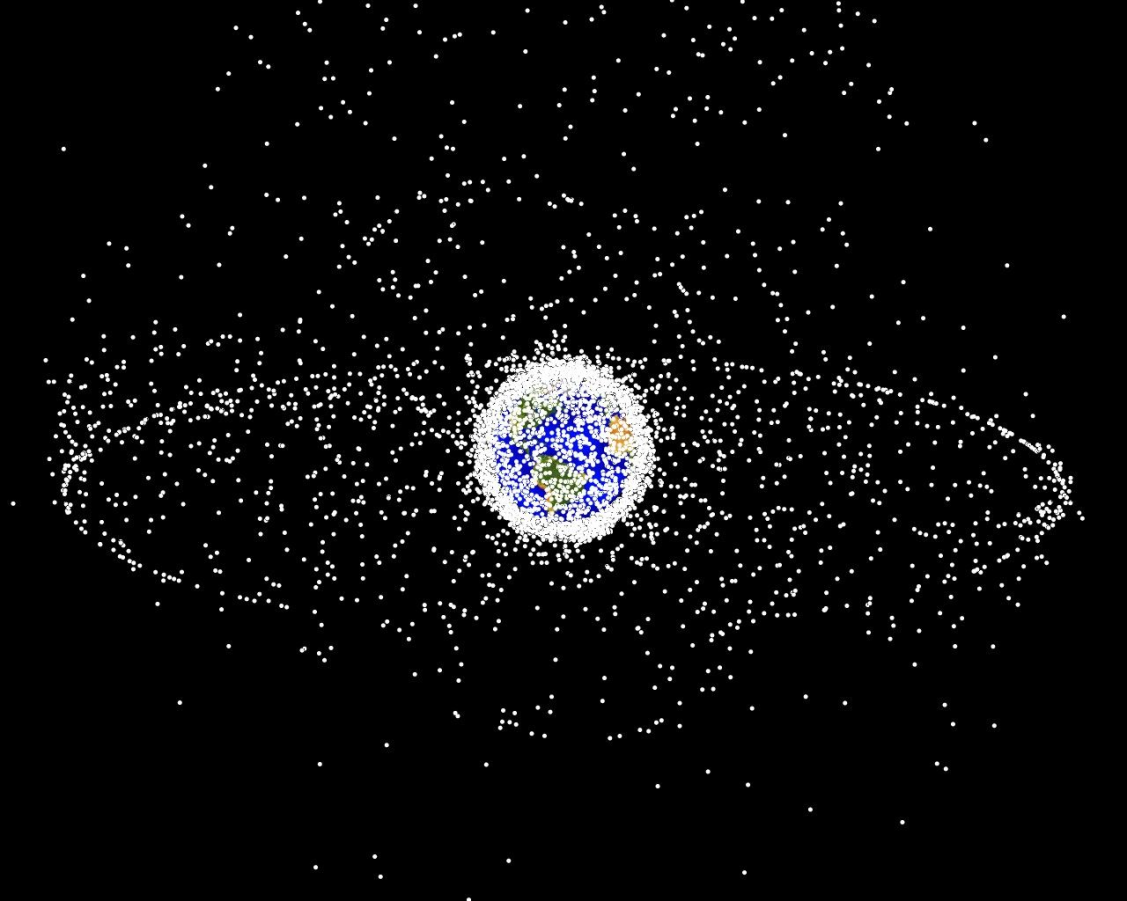

Real World POMDPs

\(\mathcal{S}\): Information space for all objects

\(\mathcal{A}\): Which objects to measure

\(R\): Negative entropy

Approximately 20,000 objects >10cm in orbit

[Sunberg, 2016]

1) ACAS

2) Orbital Object Tracking

3) Dual Control

4) Medical

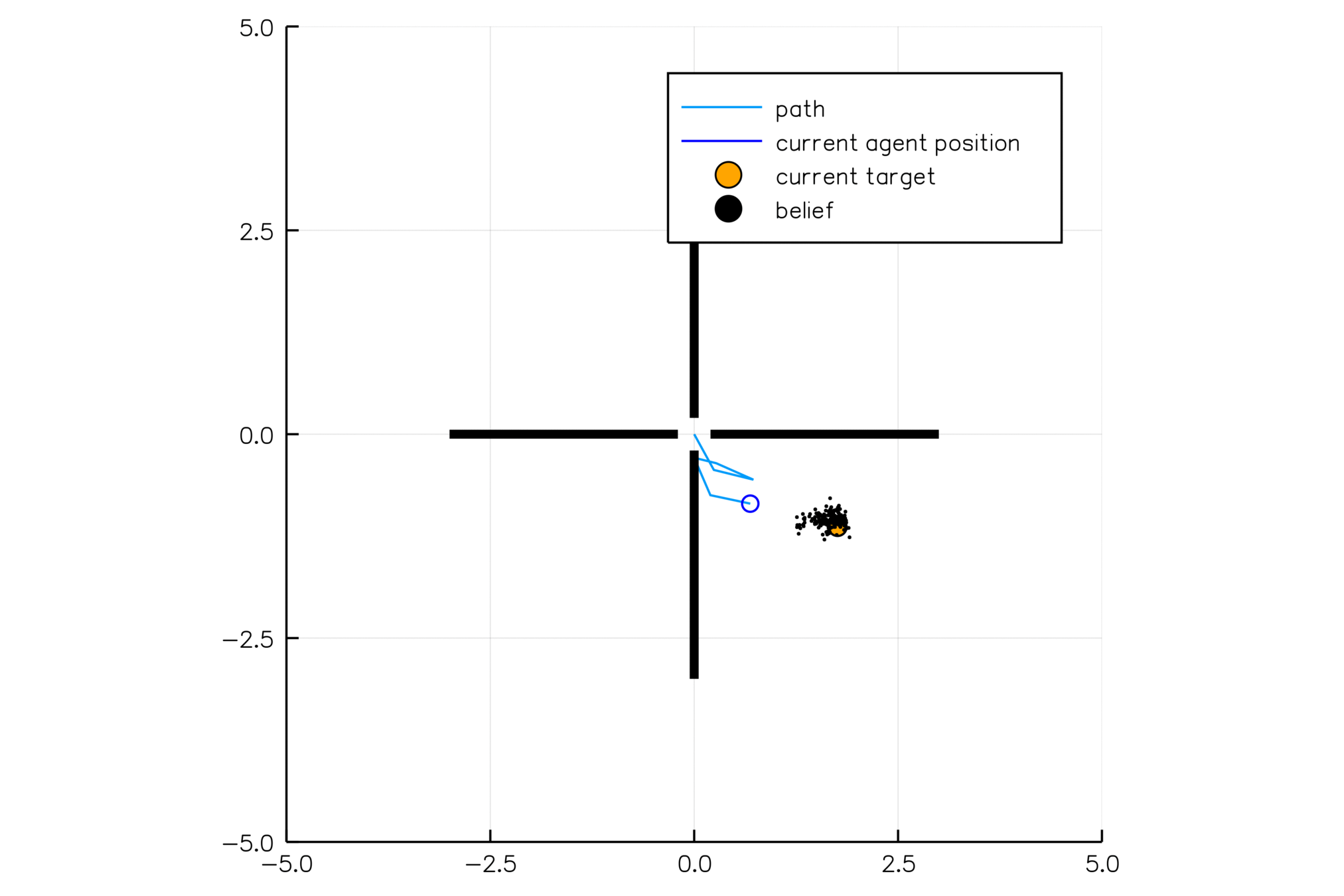

Real World POMDPs

State \(x\) Parameters \(\theta\)

\(s = (x, \theta)\) \(o = x + v\)

POMDP solution automatically balances exploration and exploitation

[Slade, Sunberg, et al. 2017]

1) ACAS

2) Orbital Object Tracking

3) Dual Control

4) Medical

Real World POMDPs

1) ACAS

2) Orbital Object Tracking

3) Dual Control

4) Medical

[Ayer 2012]

[Sun 2014]

Personalized Cancer Screening

Steerable Needle Guidance

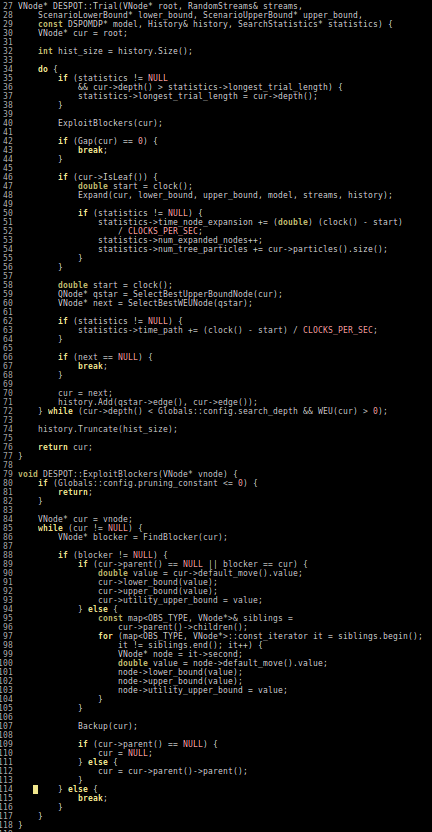

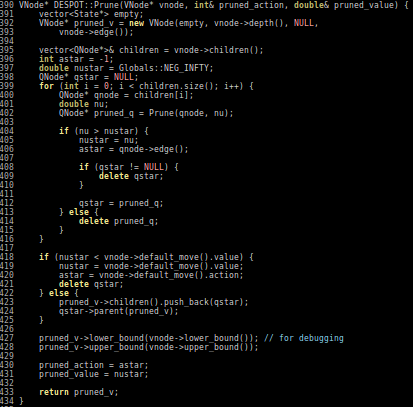

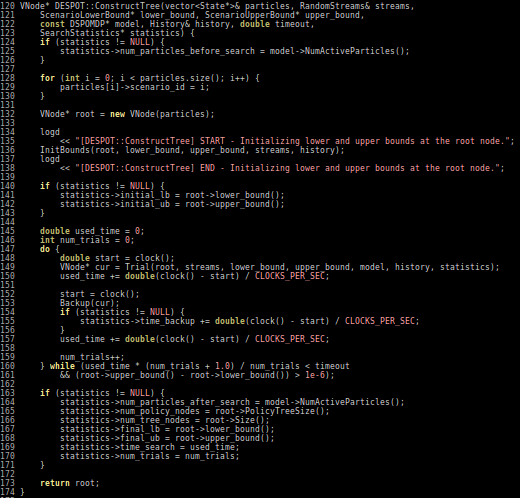

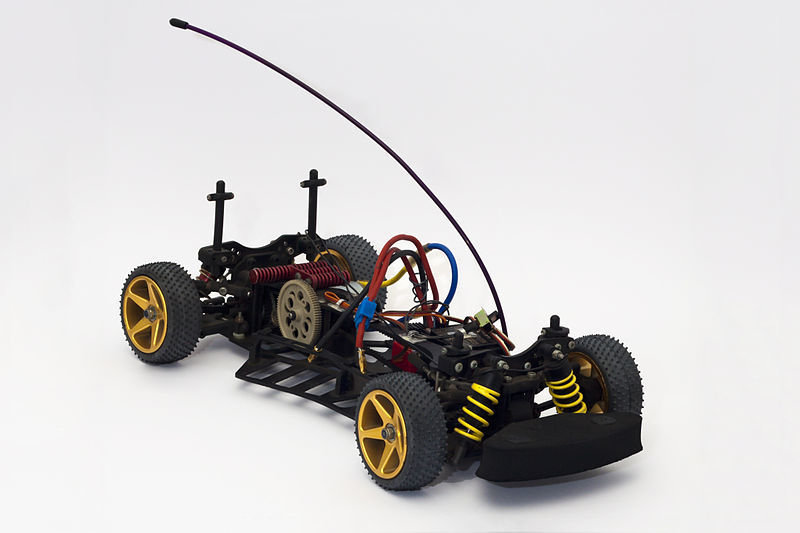

POMDP Sense-Plan-Act Loop

Environment

Belief Updater

Policy

\(o\)

\(b\)

\(a\)

- A POMDP is an MDP on the Belief Space but belief updates are expensive

- POMCP* uses simulations of histories instead of full belief updates

- Each belief is implicitly represented by a collection of unweighted particles

[Ross, 2008] [Silver, 2010]

*(Partially Observable Monte Carlo Planning)

[ ] An infinite number of child nodes must be visited

[ ] Each node must be visited an infinite number of times

Solving continuous POMDPs - POMCP fails

POMCP

✔

✔

Double Progressive Widening (DPW): Gradually grow the tree by limiting the number of children to \(k N^\alpha\)

Necessary Conditions for Consistency

[Couëtoux, 2011]
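The widening rule is a one-line test applied at each visit to a node. The sketch below is a minimal illustration, assuming the test "add a child while the child count is at most \(k N^\alpha\)"; the function name and the values \(k = 1\), \(\alpha = 0.5\) are assumptions.

```python
def should_widen(num_children, num_visits, k, alpha):
    """Progressive widening test: allow a new child only while the child
    count is at most k * N**alpha, where N is the node's visit count."""
    return num_children <= k * num_visits**alpha


# Count how many children a single node accumulates over 100 visits.
k, alpha = 1.0, 0.5
children = 0
for visits in range(100):
    if should_widen(children, visits, k, alpha):
        children += 1   # a new child would be sampled and added here
    # otherwise an existing child would be revisited

print(children)
```

Because the child count grows sublinearly in the visit count, new children keep being added without limit while each existing child is also revisited more and more often.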

POMCP

POMCP-DPW

[Sunberg, 2018]

POMDP Solution

QMDP

\[\underset{\pi: \mathcal{B} \to \mathcal{A}}{\mathop{\text{maximize}}} \, V^\pi(b)\]

\[\underset{a \in \mathcal{A}}{\mathop{\text{maximize}}} \, \underset{s \sim{} b}{E}\Big[Q_{MDP}(s, a)\Big]\]

Same as full observability on the next step
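QMDP action selection is a belief-weighted average of fully observable Q-values. The sketch below assumes \(Q_{MDP}\) is already available as a table; the states, actions, and Q-values are made up for illustration.

```python
def qmdp_action(belief, q_mdp, actions):
    """Pick argmax_a of E_{s ~ b}[Q_MDP(s, a)].

    belief: dict state -> probability
    q_mdp: dict (state, action) -> fully observable Q-value
    """
    def expected_q(a):
        return sum(p * q_mdp[(s, a)] for s, p in belief.items())

    return max(actions, key=expected_q)


# Hypothetical Q-values for a two-state problem.
ACTIONS = ("open_left", "open_right", "listen")
Q_MDP = {
    ("left", "open_left"): -100.0, ("left", "open_right"): 10.0, ("left", "listen"): 8.0,
    ("right", "open_left"): 10.0, ("right", "open_right"): -100.0, ("right", "listen"): 8.0,
}

uncertain = qmdp_action({"left": 0.5, "right": 0.5}, Q_MDP, ACTIONS)
certain = qmdp_action({"left": 1.0, "right": 0.0}, Q_MDP, ACTIONS)
print(uncertain, certain)
```

Because each \(Q_{MDP}(s, a)\) is computed as if the state were known from the next step onward, the average never credits an action for the information it gathers.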

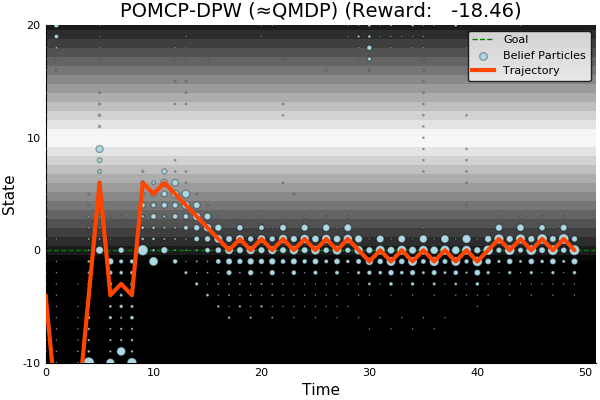

POMCP-DPW converges to QMDP

Proof Outline:

1. Observation space is continuous with finite density → w.p. 1, no two trajectories have matching observations

2. (1) → One state particle in each belief, so each belief is merely an alias for that state

3. (2) → POMCP-DPW = MCTS-DPW applied to fully observable MDP + root belief state

4. Solving this MDP is equivalent to finding the QMDP solution → POMCP-DPW converges to QMDP

[Sunberg, 2018]

POMCP-DPW

Necessary Conditions for Consistency

[✔] An infinite number of child nodes must be visited

[✔] Each node must be visited an infinite number of times

[ ] An infinite number of particles must be added to each belief node

Use \(Z\) to insert weighted particles ✔

[Sunberg, 2018]
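The weighting idea can be shown in isolation: every simulated next state is kept in the belief node, weighted by the observation density \(Z\), instead of being discarded when its observation does not match. The sketch below is only an illustration of weighted particle insertion, not the POMCPOW implementation; the class name, the Gaussian models, and all constants are assumptions.

```python
import math
import random


def gaussian_pdf(x, mean, std):
    """Density of N(mean, std^2) evaluated at x."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2.0 * math.pi))


class WeightedBelief:
    """Belief node represented by states with likelihood weights."""

    def __init__(self):
        self.states = []
        self.weights = []

    def insert(self, state, weight):
        self.states.append(state)
        self.weights.append(weight)

    def sample(self, rng):
        """Draw a state with probability proportional to its weight."""
        return rng.choices(self.states, weights=self.weights, k=1)[0]

    def mean(self):
        total = sum(self.weights)
        return sum(s * w for s, w in zip(self.states, self.weights)) / total


rng = random.Random(0)
belief = WeightedBelief()
observation = 1.0
for _ in range(2000):
    s_next = rng.gauss(0.0, 2.0)  # simulated next state (assumed prior)
    # Weight by Z: density of the observation given the simulated state.
    belief.insert(s_next, gaussian_pdf(observation, s_next, 0.5))

print(belief.mean())
```

For these Gaussian assumptions the exact posterior mean is \(4 / 4.25 \approx 0.94\), so the weighted estimate can be compared against a known answer.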

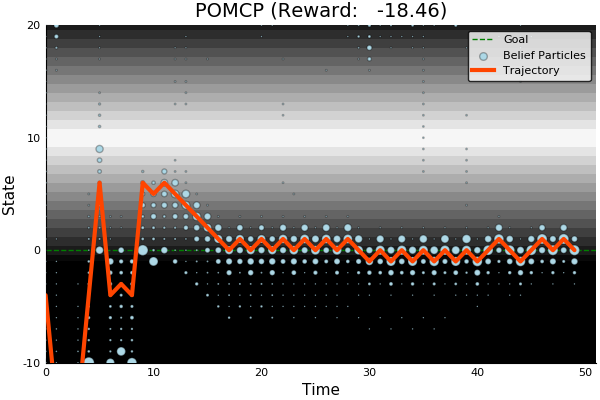

POMCP

POMCP-DPW

POMCPOW

[Sunberg, 2018]

Ours

Suboptimal

State of the Art

Discretized

[Ye, 2017] [Sunberg, 2018]

[Sunberg, 2018]

Next Step: Planning on Weighted Scenarios

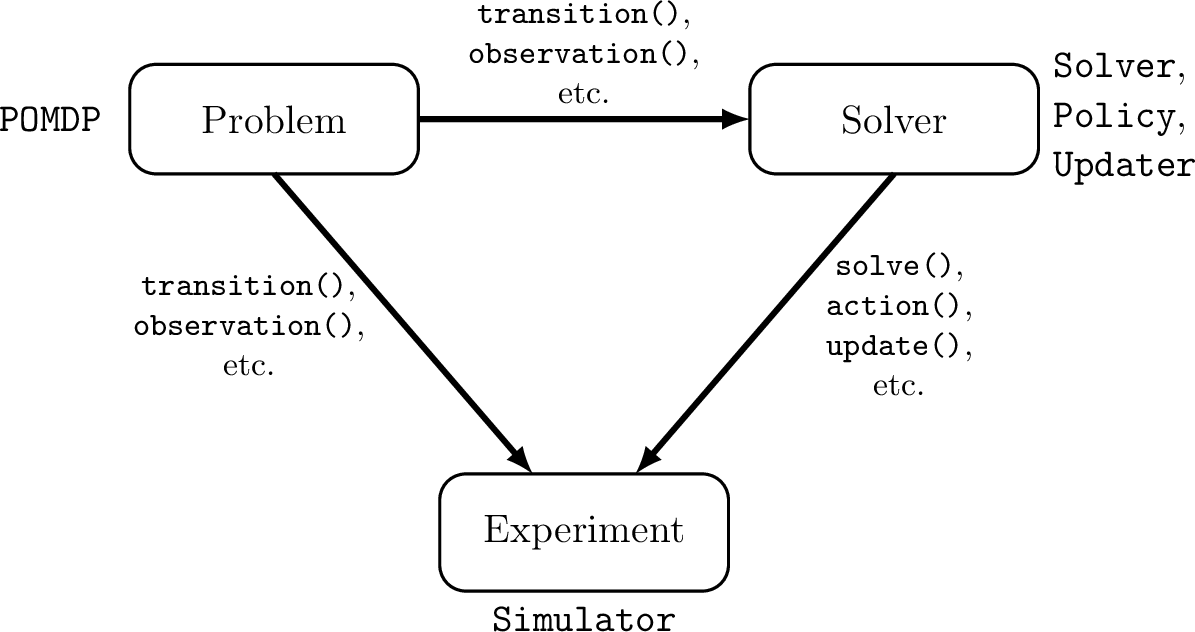

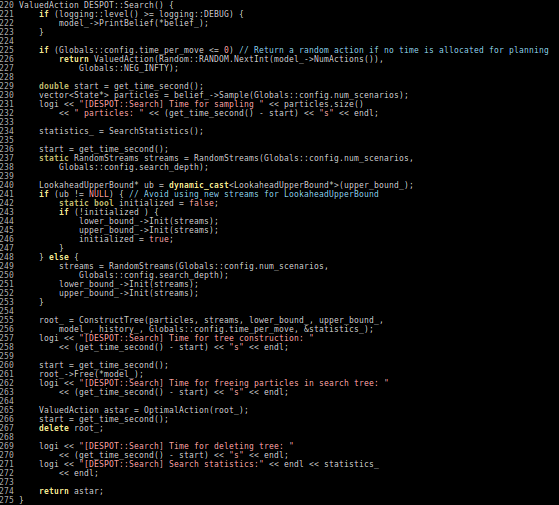

POMDPs.jl - An interface for defining and solving MDPs and POMDPs in Julia

[Egorov, Sunberg, et al., 2017]

Challenges for POMDP Software

- POMDPs are computationally difficult.

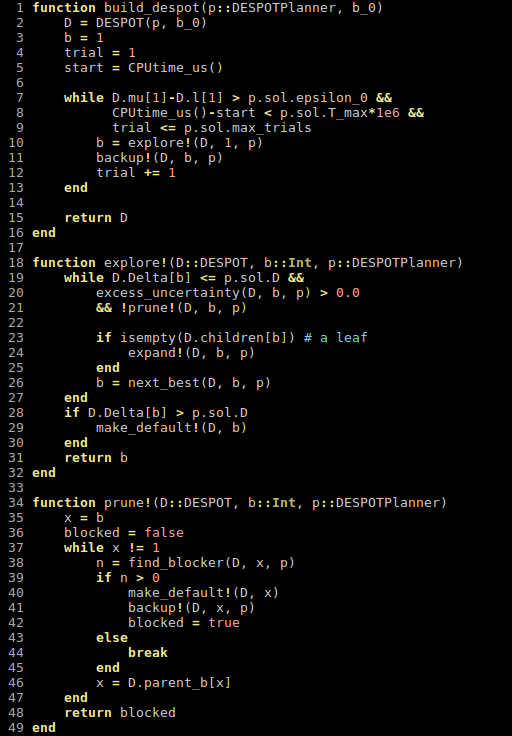

Julia - Speed

Celeste Project

1.54 Petaflops

Challenges for POMDP Software

- POMDPs are computationally difficult.

- There is a huge variety of
  - Problems
    - Continuous/Discrete
    - Fully/Partially Observable
    - Generative/Explicit
    - Simple/Complex
  - Solvers
    - Online/Offline
    - Alpha Vector/Graph/Tree
    - Exact/Approximate
    - Domain-specific heuristics

Explicit

Black Box

("Generative" in POMDP lit.)

\(s,a\)

\(s', o, r\)
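The two model styles differ in what the solver is allowed to ask. The sketch below contrasts them in Python for a made-up one-dimensional problem; it does not reproduce the POMDPs.jl interface, and every function name and constant is an assumption.

```python
import random


def transition(s, a):
    """Explicit model: return the full next-state distribution as a dict."""
    dist = {}
    for sp, p in ((s + a, 0.9), (s, 0.1)):
        dist[sp] = dist.get(sp, 0.0) + p   # merge outcomes when a == 0
    return dist


def reward(s, a):
    """Explicit reward: penalize distance from the origin."""
    return -abs(s)


def gen(s, a, rng):
    """Generative (black-box) model: return one sampled (s', o, r)."""
    dist = transition(s, a)
    sp = rng.choices(list(dist), weights=list(dist.values()), k=1)[0]
    o = sp + rng.gauss(0.0, 1.0)           # noisy observation of s'
    return sp, o, reward(s, a)


rng = random.Random(1)
print(transition(3, 1))
print(gen(3, 1, rng))
```

An explicit model can always be wrapped as a generative one, as `gen` does here, but not the reverse, so solvers that need only samples apply to a wider range of problems.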

Previous C++ framework: APPL

"At the moment, the three packages are independent. Maybe one day they will be merged in a single coherent framework."

[Egorov, Sunberg, et al., 2017]

Future Research

Deploying autonomous agents with confidence

Practical Safety Guarantees

Trusting Visual Sensors

Algorithms for Physical Problems

Physical Vehicles

Trusting Information from Visual Sensors

Environment

Belief State

Convolutional Neural Network

Control System

Architecture for Safety Assurance

Algorithms for the Physical World

1. Continuous multi-dimensional action spaces

2. Data-driven models on modern parallel hardware

CPU Image By Eric Gaba, Wikimedia Commons user Sting, CC BY-SA 4.0, https://commons.wikimedia.org/w/index.php?curid=68125990

3. Better algorithms for existing POMDPs

- Health screening

- Human-robot interaction

Practical Safety Guarantees

- Reachability-based guarantees are usually infeasible

- Probabilistic guarantees involve low-probability distribution tails

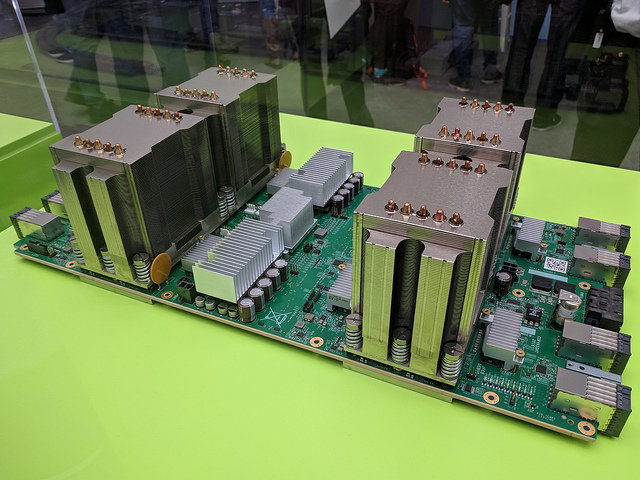

Physical Vehicles in the Real World

Texas A&M (HUSL)

RC Car with assured visual sensing

Optimized Autorotation

Active Sensing

New Course: Decision Making Under Uncertainty

Project-Centric

1. Intro to Probabilistic Models

2. Markov Decision Processes

3. Reinforcement Learning

4. POMDPs

(More focus on online POMDP solutions than Stanford course)

Acknowledgements

The content of my research reflects my opinions and conclusions, and is not necessarily endorsed by my funding organizations.

Thank You!

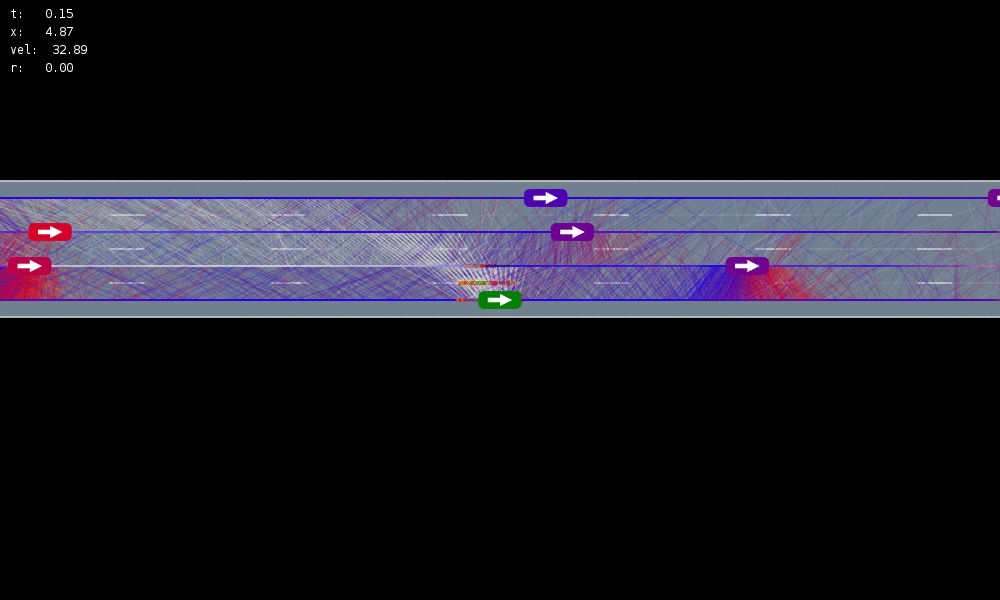

POMDP Formulation

\(s=\left(x, y, \dot{x}, \left\{(x_c,y_c,\dot{x}_c,l_c,\theta_c)\right\}_{c=1}^{n}\right)\)

\(o=\left\{(x_c,y_c,\dot{x}_c,l_c)\right\}_{c=1}^{n}\)

\(a = (\ddot{x}, \dot{y})\), \(\ddot{x} \in \{0, \pm 1 \text{ m/s}^2\}\), \(\dot{y} \in \{0, \pm 0.67 \text{ m/s}\}\)

Ego physical state

Physical states of other cars

Internal states of other cars

Physical states of other cars

- Actions filtered so they can never cause crashes

- Braking action always available

Efficiency

Safety

$$R(s, a, s') = \text{in\_goal}(s') - \lambda \left(\text{any\_hard\_brakes}(s, s') + \text{any\_too\_slow}(s')\right)$$

POMDPs in Aerospace

\(s=\left(x, y, \dot{x}, \left\{(x_c,y_c,\dot{x}_c,l_c,\theta_c)\right\}_{c=1}^{n}\right)\)

\(o=\left\{(x_c,y_c,\dot{x}_c,l_c)\right\}_{c=1}^{n}\)

\(a = (\ddot{x}, \dot{y})\)

Ego physical state

Physical states of other cars

Internal states of other cars

Physical states

Efficiency

Safety

\(R(s, a, s') = \text{in\_goal}(s') - \lambda \left(\text{any\_hard\_brakes}(s, s') + \text{any\_too\_slow}(s')\right)\)

[Sunberg, 2017]

"[The autonomous vehicle] performed perfectly, except when it had to merge onto I-395 South and swing across three lanes of traffic"

- Bloomberg

http://bloom.bg/1Qw8fjB

Monte Carlo Tree Search

Image by Dicksonlaw583 (CC 4.0)

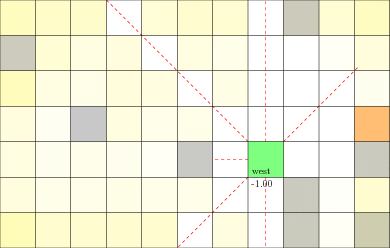

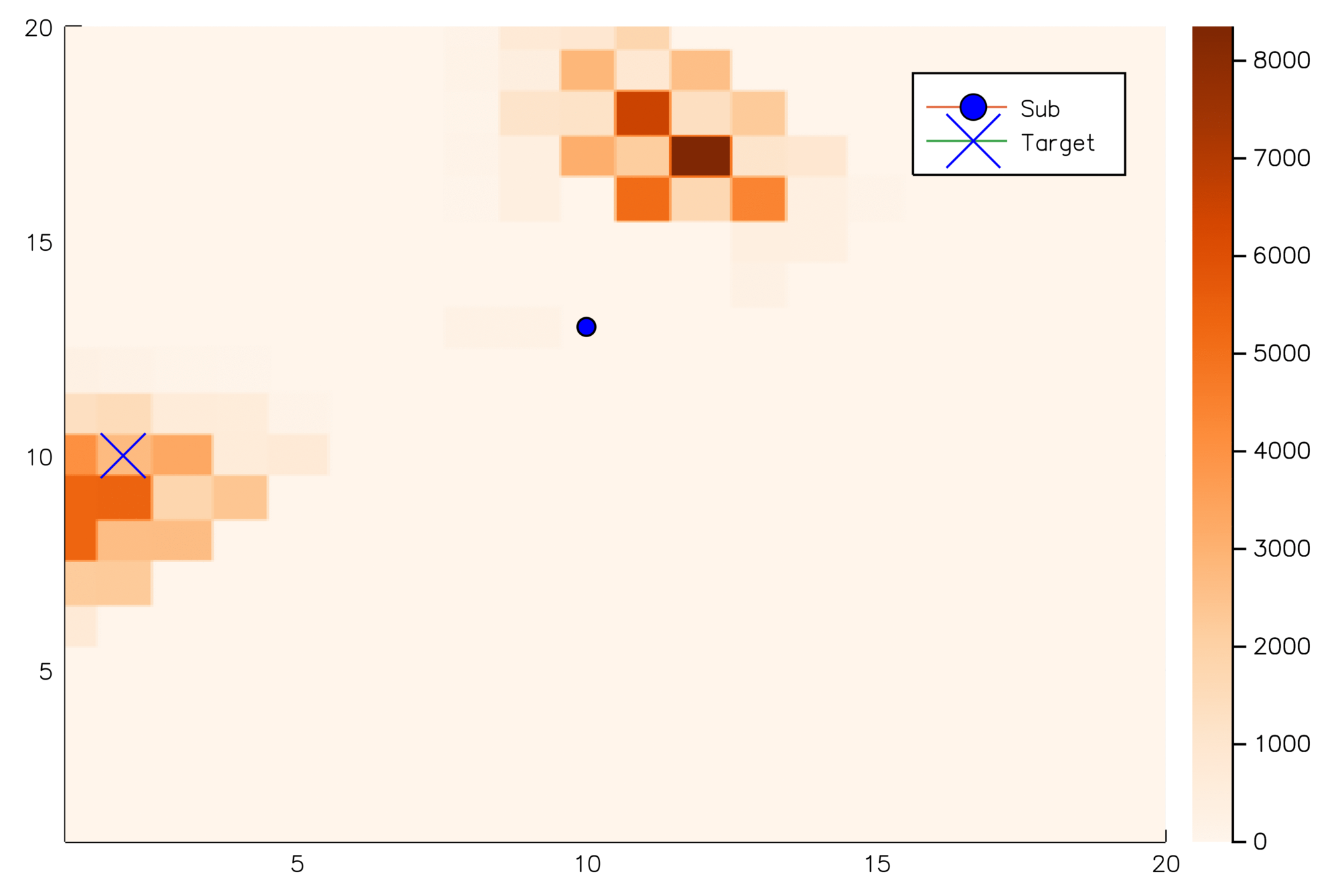

POMDP Example: Laser Tag

POMDP Sense-Plan-Act Loop

Environment

Belief Updater

Policy

\(o\)

\(b\)

\(a\)

\[b_t(s) = P\left(s_t = s \mid a_1, o_1 \ldots a_{t-1}, o_{t-1}\right)\]

Laser Tag POMDP

Online Decision Process Tree Approaches

State Node

Action Node

(Estimate \(Q(s,a)\) here)

WSU Job Talk

By Zachary Sunberg
