ModelING Litigation in USPTO patent Claims using Doc2Vec

“If you don't look/think too hard this too will make sense”

-Someone

Zachary D White Feb - 2019

Capstone

How to do a capstone

Your Problem My Project

Initial INQUIRY

Can machine learning inform and return better search results for an FTO analysis thereby decreasing costs?

Where do we begin?

Revised Definition

The scope of a patent is all the items (invented or not) that are stated directly in the claims section of the patent.

Practical Definition

The universe of inventions that infringe on the patent.

WHAT IS PATENT INFRINGEMENT?

Going Deeper

- Oldest Method - 1990 -2001

- Stanford Method 2015

- USPTO Method - 2016

- Kuhn's Methods - 2017

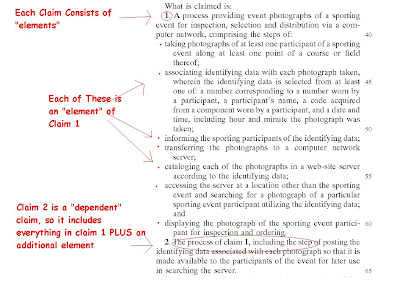

Patent Claim

Terms

ICC - Independent Claim Count is the number of independent claims made by a patent

ICL - Independent Claim Length is the number of words in said claim**.

PC - Primary Claim is the first independent Claim listed in a patent.

Project Goal

Use patent claim's probability of litigation to rank search results.

Obtain

DF.INFO()

Number of patent claims

The claim number for a particular patent

Int64Index: 8216031 entries, 0 to 8216149

Data columns (total 3 columns):

pat_no object

claim_no int64

claim_txt object

dtypes: int64(1), object(2)

memory usage: 250.7+ MBThe text contained in the claim

The Patent ID number of a particular claim

DF.head()

df = df = pd.read_csv('claim_2000_2014_v001.csv')

df.head()

pat_no claim_no claim_txt

0 8697278 17 17. Battery comprising an interior of the batt...

1 7385756 81 81. A catadioptric projection objective for im...

2 7387146 1 1. A heavy duty tire comprising a tread portio...

3 7387253 43 43. A system comprising: (a) a optical reader ...

4 7387278 17 17. A parachute ripcord pin for holding a para...

Scrub DATA &Create FEATURES

pat_no claim_no claim_txt ICL ICC litigation

8697278 17 battery comprising interior battery active ele... 106 2 0

8697278 1 battery cell casing comprising first casing el... 97 2 0

7385756 81 catadioptric projection objective imaging patt... 108 33 0

7385756 94 catadioptric projection objective imaging patt... 116 33 0

7385756 79 catadioptric projection objective imaging patt... 103 33 0-

Text Cleaning

- Removed legal stop words

- Lower cased

- Standard text cleaning

- Removed Dependent Claims

-

Computed

- Independent Claim Length (ICL)

- Independent Claim Count (ICC)

- Remove patents prior to 2000

- Merged Patent-wise Litigation Data from Stanford

explore

df['litigation'].value_counts()

0 8185679

1 30352

Name: litigation, dtype: int64

0 = number of non-litigated claims

1 = number of litigated claimsdf['litigation'].value_counts(normalize=True)

Non-Litigated Claims 99.63%

Litigated Claims 0.37%

Name: type, dtype: float64 All Claims

DF.describe()

All Claims

df.loc[:,['ICL','litigation']].groupby(['litigation']).agg(['count','min','max','mean','std'])

ICL

count min max mean std

litigation

0 8185679 1 18433 104.827044 73.717400

1 30352 1 1509 94.007380 62.130354

ICC

count min max mean std

litigation

0 2907222 1 276 2.814694 2.326064

1 7526 1 358 4.030162 7.705218

#Two Tailed Independent T-Test

statistic pvalue

ICL 30.260286 3.155879e-198

ICC -13.683259 4.067142e-42

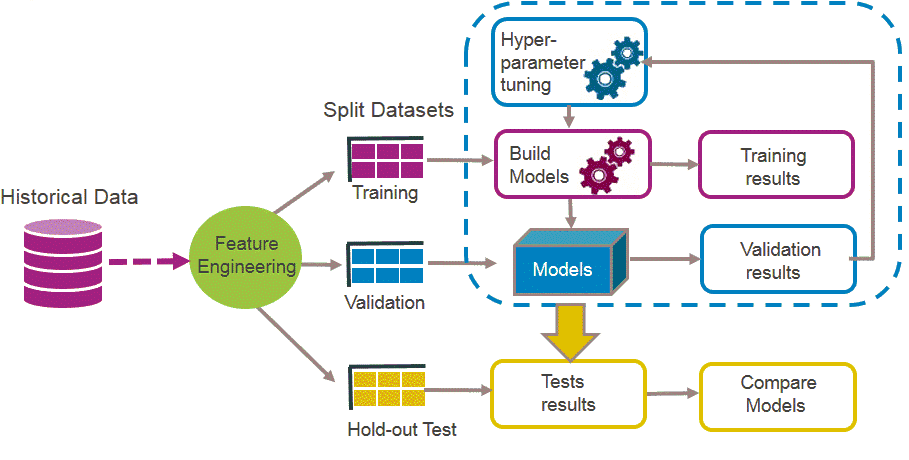

Unsupervised Learning

Supervised Learning

Modeling Techniques

Gensim Doc2Vec Language Model

Doc2vec

## Sample of a tagged document

next(corpus_for_doc2vec.__iter__())

>TaggedDocument(words=['battery', 'comprising', 'interior', 'battery', 'active', 'elements', 'battery',

'cell', 'casing', 'said', 'cell', 'casing', 'comprising', 'first', 'casing', 'element', 'first', 'contact',

'surface', 'second', 'casing', 'element', 'second', 'contact', 'surface', 'wherein', 'assembled', 'position',

'first', 'second', 'contact', 'surfaces', 'contact', 'first', 'second', 'casing', 'elements', 'encase',

'active', 'materials', 'battery', 'cell', 'interior', 'space', 'wherein', 'least', 'one', 'gas', 'tight',

'seal', 'layer', 'arranged', 'first', 'second', 'contact', 'surfaces', 'seal', 'interior', 'space',

'characterized', 'one', 'first', 'second', 'contact', 'surfaces', 'comprises', 'electrically', 'insulating',

'void', 'volume', 'layer', 'first', 'second', 'contact', 'surfaces', 'comprises', 'formable', 'material',

'layer', 'fills', 'voids', 'surface', 'void', 'volume', 'layer', 'hermetically', 'assembled', 'position',

'form', 'seal', 'layer'], tags=['8697278-17'])

Build a Language Model

import gensim

from gensim.models.doc2vec import Doc2Vec, TaggedDocument

assert gensim.models.doc2vec.FAST_VERSION > -1 #parallelize doc2vec

from gensim.utils import simple_preprocess

class MyDataframeCorpus(object):

def __init__(self, source_df, text_col, tag_col):

self.source_df = source_df

self.text_col = text_col

self.tag_col = tag_col

def __iter__(self):

for i, row in self.source_df.iterrows():

yield TaggedDocument(words=simple_preprocess(row[self.text_col]),

tags=[row[self.tag_col]])

#Model 1

corpus_for_doc2vec = MyDataframeCorpus(df, 'claim_txt', 'claim_no')

#Model 2

corpus_for_doc2vec = MyDataframeCorpus(df, 'claim_txt', 'litigation')%%time

#Params

model_smple = Doc2Vec(vector_size=100, # 100 should be fine based on the standards

window=8, #number of context words

alpha=.025, #initial learning rate

min_alpha=0.00025, #learning rate drops linearly to this

min_count=2, #ignores all words with total frequency lower than this.

dm =1, #algorith 1=distributed memory , 0=distributed bag of words (PV-DBOW)

epochs=30,

workers=workers)#cores to use

model_smple.build_vocab(corpus_for_doc2vec,progress_per=500000)

## Build vocab from streaming tagged document Show progress every 500k rows

## Should take ~ 30 minutes

# Train Language Model

model_smple.train(corpus_for_doc2vec, total_examples=model_smple.corpus_count, epochs=model_smple.epochs)

>>Wall time: 22h 02min 24s

Model In Action

def model_infer_test(df,model):

#grab a random claim from random_claim function

tagged_claim = next(random_claim(df))

#Infer a vector from that claim

inferred_vector = model.infer_vector(tagged_claim.words)

sims = model.docvecs.most_similar([inferred_vector], topn=len(model.docvecs))

#Print top 5 similar claims

print(sims[:5])

# Compare and print the most/median/least similar documents from the train corpus

print('Test Document ({}): «{}»\n'.format(tagged_claim.tags, ' '.join(tagged_claim.words)))

print(u'SIMILAR/DISSIMILAR DOCS PER MODEL %s:\n' % model)

for label, index in [('MOST', 0),('Second', 1) ,('MEDIAN', len(sims)//2), ('LEAST', len(sims) - 1)]:

print(label,index)

#print(u'%s %s: «%s»\n' % (label, sims[index], ' '.join(df[sims[index][0]].words)))

print(u'%s %s: «%s»\n' % (label, sims[index], df.loc[df['claim_no']==sims[index][0],['claim_txt']].values))

# This will let you get random tagged documents

def random_claim(df):

doc_index = np.random.randint(0,len(df))

#row = [df.iloc[doc_index,:]['claim_txt'],df.iloc[doc_index,:]['claim_no']]

yield TaggedDocument(words=simple_preprocess(df.iloc[doc_index,:]['claim_txt']),

tags=df.iloc[doc_index,:]['claim_no'])model_infer_test(df,model_smple)

[('6208475-7', 0.9243185520172119), ('6208475-3', 0.883671224117279), ('6680491-1', 0.6106572151184082),

('8913211-14', 0.5911911725997925), ('8913211-1', 0.5858227610588074)]

Test Document (6208475-7): «optical member inspection apparatus obtaining image data used inspections applying

illumination light optical member one side thereof photographing optical member side comprising holder

comprising frame shaped base portion plurality spaced optical member holding portions provided base portion

enclose space supporting optical member formed base portion said optical member holding portions receiving

face outer margin optical member mounted restriction wall restrict movement optical member mounted receiving

face contacting outer margin optical member said receiving face contacting outer margin face optical member

light passes operation optical member said restricting wall located said moving face»

SIMILAR/DISSIMILAR DOCS PER MODEL Doc2Vec(dm/m,d100,n5,w5,mc2,s0.001,t16):

MOST 0

MOST ('6208475-7', 0.9243185520172119): «[['optical-member inspection apparatus obtaining image data used

inspections applying illumination light optical member one side thereof photographing optical member side,

comprising:a holder comprising frame shaped base portion plurality spaced optical-member holding portions

provided base portion enclose space supporting optical member formed base portion, said optical-member

holding portions receiving face outer margin optical member mounted restriction wall restrict movement

optical member mounted receiving face contacting outer margin optical member, said receiving face contacting

outer margin face optical member light passes operation optical member, said restricting wall located said

moving face.']]»

#### OUTPUT CONTD

Second 1

Second ('6208475-3', 0.883671224117279): «[['holder holding outer margin optical member, comprising:a frame

shaped base portion; anda plurality spaced optical-member holding portions provided base portion enclose

space supporting optical member formed base portion, said optical-member holding portions receiving face

outer margin optical member mounted restriction wall restrict movement optical member mounted receiving face

contacting outer margin optical member, said receiving face contacting outer margin face optical member

light passes operation optical member, said restricting wall located said receiving face.']]»

MEDIAN 4108015

MEDIAN ('6592902-1', 0.08781707286834717): «[['pharmaceutical composition comprising (a) particulate

eplerenone d90 particle size 25 400 microns, amount 10 mg 1000 mg, (b) one pharmaceutically acceptable

carrier materials; said composition controlled release oral dosage form wherein 50% said eplerenone

dissolved vitro least 1.5 hours 1% sodium dodecyl sulfate solution 37� c.']]»

LEAST 8216030

LEAST ('6823942-1', -0.3702787756919861): «[['tree saver apparatus preventing excess pressure christmas tree

well, wherein christmas tree comprises tubing, master valve, top valve, second master valve, wing valve,

wherein apparatus comprises:a. hydraulic system comprising: i. piston connected piston rod; ii. cylinder

connected piston; iii. upper plunger connected piston; iv. hand wheel connected upper plunger; b. connection

least 3 outlets connected cylinder; c. frac wing valve connected connection least 3 outlets; d. frac valve

first port second port, wherein first port connected connection least 3 outlets; e. mandrel first end

second end, wherein first end connected piston rod; f. landing bowl connected top valve.']]»Data transformation

claim_no ICL ICC litigation 0 1 2 3 4 5 6 ... 90 91 92 93 94 95 96 97 98 99

8697278-17 106 2 0 -0.021915 0.124170 -0.467477 1.725972 1.353391 -0.197958 1.234415 ... -1.201108 -0.272672 0.649778 -1.023098 0.146432 0.122571 0.215042 -1.076661 -0.508896 -1.690101

8697278-1 97 2 0 -0.271349 0.260269 -0.315466 1.746054 0.874254 -0.225060 0.971804 ... -1.190130 -0.417508 0.587534 -1.157797 0.314950 0.189521 0.474607 -1.119020 -0.376173 -1.699343

7385756-81 108 33 0 1.429105 0.936555 -0.296551 -1.073354 -0.421059 -0.114407 -0.881726 ... 0.885844 0.934247 0.678008 -0.752642 -0.121980 -0.623260 -2.384536 0.471751 0.874406 1.251178

7385756-94 116 33 0 1.027478 0.484034 -0.535233 -1.124252 -0.382812 -0.101879 -0.348340 ... 0.772912 0.951951 0.901475 -0.721144 -0.304449 -0.278908 -2.655865 0.323664 0.943390 0.687369

7385756-79 103 33 0 1.396978 0.419591 -0.072397 -0.642861 -0.482944 0.496666 -1.178749 ... 0.074441 1.187986 0.909484 0.278953 -0.483475 -0.028059 -2.268470 0.986993 0.039141 0.374451

Modeling Techniques

Gensim Doc2Vec Language Model

XGBOOST Logistic Regression

Supervised Learning

XGBOOST

Multi Experiment TTS

### Test Train Split

y = df['litigation']

X = df.drop(['litigation','claim_no'],axis=1)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.30,random_state=42)

#free up memory

del df,y,X

#Class balance test and train

y_test.value_counts(normalize=True)

0 0.996355

1 0.003645

Name: litigation, dtype: float64

y_train.value_counts(normalize=True)

0 0.996285

1 0.003715

Name: litigation, dtype: float64

# Convert input data from numpy to XGBoost format

dtrain = xgb.DMatrix(X_train, label=y_train)

dtest = xgb.DMatrix(X_test, label=y_test)

#again to conserve memory

del X_train, X_test, y_train, y_testfrom sklearn.model_selection import train_test_split

import xgboost as xgb

##ICL - Independent Claim Length

##ICC - Independent Claim Count

##100F - One Hundred Length Feature Vector from Doc2Vec language model

# Model ICL_100F

df= pd.read_csv('claims_vectors_litigation.csv',

usecols=lambda x : x not in ['claim_txt','ICC'])

# Model ICC_100F

df= pd.read_csv('claims_vectors_litigation.csv',

usecols=lambda x : x not in ['claim_txt','ICL'])

# Model ICL_ICC

df= pd.read_csv('claims_vectors_litigation.csv',

usecols=['claim_no','ICL','ICC','litigation'])

#Model ICL_ICF_100F

df= pd.read_csv('claims_vectors_litigation.csv',

usecols=lambda x : x not in ['claim_txt'])

# Model 100F

df= pd.read_csv('claims_vectors_litigation.csv',

usecols=lambda x : x not in ['ICL','ICC','claim_txt'])

Training the Model

# Specify sufficient boosting iterations to reach a minimum

num_round = 5000

param = {'objective' : 'binary:logistic', # Specify binary classification

'eta' : .5,

'max_depth' : 8,

'predictor' : 'gpu_predictor',

#'verbosity' : 3,

'tree_method' : 'gpu_hist', # Use GPU accelerated algorithm

'random_state': 42

}

gpu_res = {} # Store accuracy result

tmp = time.time()

# Train model

tst_model = xgb.train(param, dtrain, num_round, evals=[(dtest, 'test')], evals_result=gpu_res)

print("GPU Training Time: %s seconds" % (str(time.time() - tmp)))

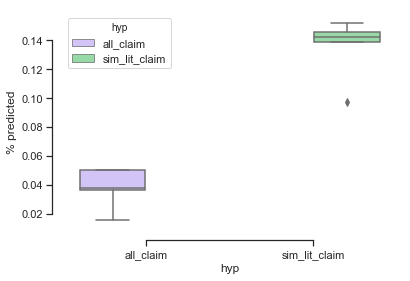

INITIAL Results

Turning water into wine

model % non-lit claims % lit claims RMSE % predicted #predicted

ICC_100F 99.6355 0.3645 0.003138 0.0507 15

ICL_ICC_100F 99.6355 0.3645 0.003142 0.0503 15

ICL_100F 99.6355 0.3645 0.003267 0.0378 11

100F 99.6355 0.3645 0.003283 0.0362 11

ICL_ICC 99.6355 0.3645 0.003490 0.0155 5

*predicted values are based on probability >.5

ICC - Independent Claim Count

ICL - Independent Claim Length

100_F - Embedded Language Vector

What do our results mean?

Text

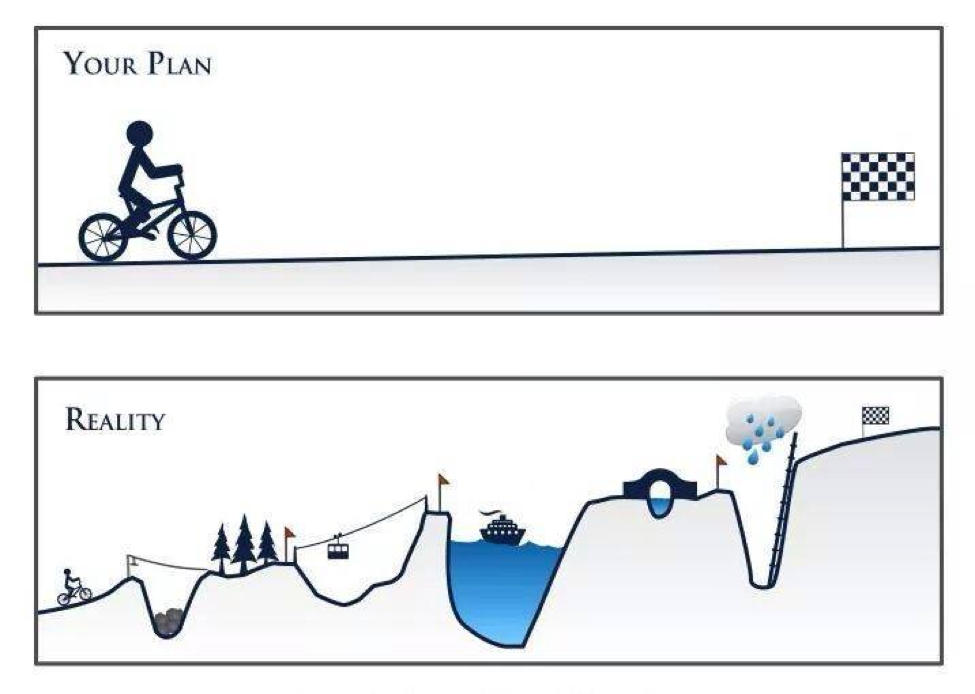

Politely laugh at the image below

Test model on Less data!

.. A LOT LESS DATA

list_of_litigated_claims = []

For each element in list of litigated claims:

Infer a vector

Calculate Similarity for all other claims

Return all Primary Claims of the 100 most similar claimsdf.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 205501 entries, 0 to 205500

Columns: 105 entries, Unnamed: 0 to 99

dtypes: float64(100), int64(4), object(1)

memory usage: 164.6+ MB

df['litigation'].value_counts()

0 197975 - 96.34%

1 7526 - 03.66%

Name: litigation, dtype: int64

Train a new model

Model prediction w/o language features

Try Try Again...one final time

Art of the Law Suit

Rich Patent Poor Patent

#Model 2

corpus_for_doc2vec = MyDataframeCorpus(df, 'claim_txt', 'litigation'):(

model % lit-predicted

0 ICL_ICC_100F_LS_NLS 0.007204

1 ICL_100F_LS_NLS 0.007104

2 ICC_100F_LS_NLS 0.006804

3 100F_LS_NLS 0.007204

4 ICL_ICC_LS_NLS -0.039496

What do we do now?

Subtitle

Lessons learned

- Medium Data is still hard to work with

- Don't be afraid to go back to the basics

- Use Joblib

- Fancy Graphs don't save money, but they make us all warm inside.

-

Read research papersDon't believe everything you read

Questions??

- Oldest Method - 1990 -2001

- Stanford Method 2015

- USPTO Method - 2016

- Kuhn's Methods - 2017

Sources listed below