Predicting spatio-temporal traffic patterns with Deep Learning

About me

Project

- Funded by the German government and DLR

- Goal: Build a mobility service platform

- Addressing small companies

- Crowdsource traffic-related data

- Explore / extend spatial data mining tools

So what is Deep Learning?

- It's machine learning with neural networks

- "Deep" refers to the many stacked hidden layers of these nets

- Mostly supervised learning

- Well suited to unstructured data (images, audio, text)

- Often outperforms other approaches on such data

- New easy-to-use frameworks for DL

Yes, it's hyped

Images

Convolutional Nets

[Figure: convolutional network; legend: input cell, kernel, convolutional cell, hidden cell, output cell. (c) Fjodor Van Veen, Asimov Institute]

Text Mining


Memory Networks

[Figure: memory network; the entire net repeated and interconnected]

Sequences?

Single values (many-to-one)

Sequences (many-to-many)

Daytime? Weekday? Holiday? Weather? Events?

Location?
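The difference between a many-to-one and a many-to-many set-up lies mainly in how training pairs are cut from a sensor's time series. A minimal NumPy sketch, assuming a single evenly sampled series; the helper `make_windows` and the window lengths are illustrative, not taken from the project:

```python
import numpy as np


def make_windows(series, n_in, n_out):
    """Cut a 1-D series into (input window, target window) pairs.

    Hypothetical helper: n_out == 1 gives many-to-one pairs (one future value),
    n_out > 1 gives many-to-many pairs (a future sequence).
    """
    X, Y = [], []
    for start in range(len(series) - n_in - n_out + 1):
        X.append(series[start:start + n_in])                 # past values
        Y.append(series[start + n_in:start + n_in + n_out])  # values that follow
    return np.array(X), np.array(Y)


series = np.arange(10.0)  # toy data standing in for one sensor's readings
X1, Y1 = make_windows(series, n_in=4, n_out=1)  # many-to-one
X2, Y2 = make_windows(series, n_in=4, n_out=3)  # many-to-many
print(X1.shape, Y1.shape)  # (6, 4) (6, 1)
print(X2.shape, Y2.shape)  # (4, 4) (4, 3)
```

Context features such as daytime, weekday or holidays could be appended to each input window as extra columns.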

Spatio-temporal traffic forecasts with neural networks

- Abdulhai et al. (1999) have done it

- Ishak et al. (2003) have done it

- Van Lint et al. (2005) have done it

- Vlahogianni et al. (2007) have done it

- Leonhardt (2008) has done it

- Zeng & Zhang (2012) have done it

- Lv et al. (2014) have done it

- Polson et al. (2015) have done it

- Ma et al. (2015) have done it

- and many more ...

Test Data

- ~130 inductive loop detectors (speed, occupancy, etc.)

- 9 years of data (currently testing with only 1 month of 2014)

- 80% training, 20% test
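How the 80/20 split was drawn is not stated. For time series a chronological cut is a common choice, because a random shuffle would let the model train on samples that lie after some test samples. A sketch under that assumption, with toy data in place of the loop measurements:

```python
import numpy as np


def chronological_split(X, y, train_fraction=0.8):
    """Split samples in time order (assumed), not by random shuffling.

    The first `train_fraction` of the samples is used for training and the
    remainder for testing, so no training sample lies after a test sample.
    """
    cut = int(len(X) * train_fraction)
    return X[:cut], X[cut:], y[:cut], y[cut:]


# Toy stand-in for the loop data: 100 time steps x 3 sensors
rng = np.random.default_rng(0)
X = rng.random((100, 3))
y = rng.random(100)
X_train, X_test, y_train, y_test = chronological_split(X, y)
print(len(X_train), len(X_test))  # 80 20
```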

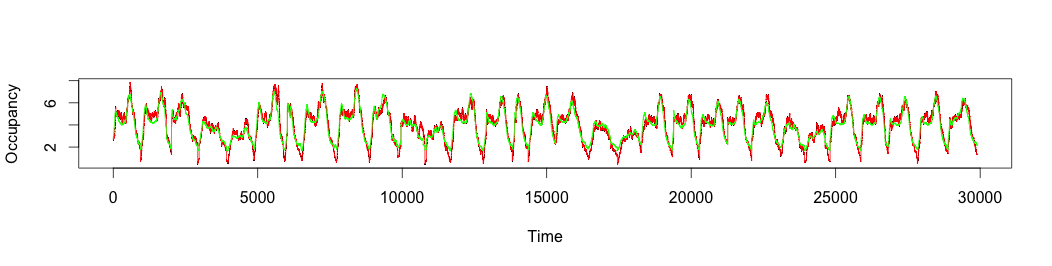

Time Series

Workflow

- Define a time window (e.g. 15 min of historical data)

- Take the moving average of each sensor as input ...

- ... to predict a future value for one sensor (e.g. 15 min ahead)

- Narrow the inputs down to neighbouring sensors

- Feed the input vectors into the network

- Use RMSE as the loss function
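The workflow above can be sketched in NumPy. This assumes one reading per minute (so 15 samples cover 15 min), a (time, sensor) array, and a hand-picked list of neighbour columns; `build_samples` and `rmse` are hypothetical helper names, not the project's code:

```python
import numpy as np


def build_samples(data, target_sensor, neighbours, window=15, horizon=15):
    """Build (input, target) pairs from a (time, sensor) array.

    Assumptions: one reading per minute, so window=15 covers 15 min of history
    and horizon=15 predicts 15 min ahead. Each input vector holds the mean over
    the window for every neighbouring sensor; the target is the reading of
    `target_sensor` `horizon` steps after the last observed time step.
    """
    X, y = [], []
    for end in range(window, len(data) - horizon + 1):
        history = data[end - window:end, neighbours]      # (window, n_neighbours)
        X.append(history.mean(axis=0))                    # moving average per sensor
        y.append(data[end - 1 + horizon, target_sensor])  # value `horizon` steps ahead
    return np.array(X), np.array(y)


def rmse(y_true, y_pred):
    """Root mean squared error, used as the loss."""
    return np.sqrt(np.mean((y_true - y_pred) ** 2))


# Toy data: 60 minutes x 4 sensors
data = np.arange(60 * 4, dtype=float).reshape(60, 4)
X, y = build_samples(data, target_sensor=0, neighbours=[0, 1, 3])
print(X.shape, y.shape)  # (31, 3) (31,)
```

Averaging each window keeps the input vector short (one value per sensor); feeding the full window instead would give the network more detail at the cost of more inputs.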

Spatial Correlation?

[Figure: sensor pairs with positive and negative correlation]
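One simple way to quantify this spatial correlation is the Pearson correlation between sensor time series. A sketch, assuming a (time, sensor) array; the helper name and the threshold of 0.5 are illustrative choices, not values from the project:

```python
import numpy as np


def correlated_sensors(data, target, threshold=0.5):
    """Indices of sensors whose Pearson correlation with `target` has |r| >= threshold.

    data: (time, sensor) array. Negative correlations are kept as well,
    since an inverse relationship can be just as informative as a positive one.
    The threshold of 0.5 is an arbitrary illustrative value.
    """
    corr = np.corrcoef(data, rowvar=False)[target]  # r between target and every sensor
    mask = np.abs(corr) >= threshold
    mask[target] = False                            # exclude the sensor itself
    return np.flatnonzero(mask), corr


# Toy series: sensor 1 follows sensor 0, sensor 2 mirrors it, sensor 3 is uncorrelated (r ~ 0)
t = np.linspace(0, 2 * np.pi, 100)
data = np.column_stack([np.sin(t), 2 * np.sin(t) + 0.1, -np.sin(t), np.cos(t)])
idx, corr = correlated_sensors(data, target=0)
print(idx)  # [1 2]
```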

Isochrones
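The slides do not detail how the isochrones were computed. Assuming they serve to find the sensors reachable from a given sensor within a travel-time budget, a Dijkstra search that stops at the budget yields such a set. The graph, travel times and `isochrone` helper below are hypothetical:

```python
import heapq


def isochrone(graph, source, budget):
    """Nodes reachable from `source` within `budget` travel time (Dijkstra with cutoff).

    graph: dict mapping node -> list of (neighbour, travel_time) pairs.
    Returns a dict node -> shortest travel time, for all times <= budget.
    """
    best = {source: 0.0}
    queue = [(0.0, source)]
    while queue:
        time, node = heapq.heappop(queue)
        if time > best.get(node, float("inf")):
            continue  # stale queue entry, a shorter path was already found
        for neighbour, cost in graph.get(node, []):
            new_time = time + cost
            if new_time <= budget and new_time < best.get(neighbour, float("inf")):
                best[neighbour] = new_time
                heapq.heappush(queue, (new_time, neighbour))
    return best


# Hypothetical directed road graph between sensors, travel times in minutes
roads = {
    "s1": [("s2", 4.0), ("s5", 6.0)],
    "s2": [("s3", 5.0)],
    "s5": [("s7", 12.0)],
}
print(isochrone(roads, "s1", budget=10.0))  # {'s1': 0.0, 's2': 4.0, 's5': 6.0, 's3': 9.0}
```

The reachable sensors could then be marked with 1 in the target sensor's row of an adjacency matrix.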

Adjacency Matrix

| s1 | s2 | s3 | s4 | s5 | s6 | s7 | s8 | ... | |

|---|---|---|---|---|---|---|---|---|---|

| s1 | 1 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | ... |

| s2 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ... |

| s3 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ... |

| s4 | 0 | 0 | 1 | 1 | 0 | 1 | 0 | 1 | ... |

| s5 | 1 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | ... |

| s6 | 1 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | ... |

| s7 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | ... |

| s8 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 1 | ... |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
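A matrix like this can be used to pick the input columns for one target sensor. The sketch copies the visible 8 x 8 block from the table; whether the matrix is meant to be directed (it is not symmetric, e.g. s2/s6) is not stated, so the sketch simply reads the target's row. The helper name and toy data are assumptions:

```python
import numpy as np

# Visible 8 x 8 block of the adjacency matrix (rows/columns s1..s8)
A = np.array([
    [1, 1, 0, 0, 1, 1, 0, 0],
    [1, 1, 1, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0, 1, 0, 1],
    [1, 0, 0, 0, 1, 0, 1, 0],
    [1, 1, 0, 1, 0, 1, 0, 1],
    [0, 0, 0, 0, 1, 0, 1, 0],
    [0, 0, 0, 1, 0, 1, 0, 1],
])
sensors = [f"s{i}" for i in range(1, 9)]


def neighbour_columns(adjacency, target):
    """Hypothetical helper: column indices marked 1 in the target's row (incl. itself)."""
    return np.flatnonzero(adjacency[target])


cols = neighbour_columns(A, target=3)  # row s4
print([sensors[c] for c in cols])      # ['s3', 's4', 's6', 's8']

# With a (time, sensor) array, keep only those columns as network input
data = np.random.default_rng(0).random((60, 8))  # toy data, 60 time steps
X = data[:, cols]
print(X.shape)  # (60, 4)
```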

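Python Code (TensorFlow)

import functools
import logging

import tensorflow as tf  # TensorFlow 1.x graph API (tf.truncated_normal)


def tf_attributeLock(function):
    # Assumed behaviour of the author's decorator (its source is not shown):
    # add the graph nodes only on first access, then return the cached result.
    attribute = "_cache_" + function.__name__

    @property
    @functools.wraps(function)
    def wrapper(self):
        if not hasattr(self, attribute):
            setattr(self, attribute, function(self))
        return getattr(self, attribute)

    return wrapper


# Method of the model class; self.data (input tensor of shape
# [batch, n_input]), self.n_input, self.n_hidden and self.n_output
# are defined elsewhere in that class.
@tf_attributeLock
def prediction(self):
    # The original logs through the author's helper c.debugInfo(__name__, ...)
    logging.getLogger(__name__).debug("Adding prediction nodes to the graph")

    # Hidden layer: sigmoid(data @ W1 + b1) -> [batch, n_hidden]
    with tf.name_scope('layer1'):
        weights = tf.Variable(tf.truncated_normal(
            (self.n_input, self.n_hidden), stddev=0.1
        ), name="lay1_weights")
        bias = tf.Variable(tf.constant(
            0.1, shape=[self.n_hidden]
        ), name="lay1_bias")
        out_layer1 = tf.nn.sigmoid(tf.matmul(
            self.data, weights
        ) + bias, name="lay1_output")

    # Output layer: sigmoid(hidden @ W2 + b2) -> [batch, n_output]
    with tf.name_scope('layer2'):
        weights = tf.Variable(tf.truncated_normal(
            (self.n_hidden, self.n_output), stddev=0.1
        ), name="lay2_weights")
        bias = tf.Variable(tf.constant(
            0.1, shape=[self.n_output]
        ), name="lay2_bias")
        out_layer2 = tf.nn.sigmoid(tf.matmul(
            out_layer1, weights
        ) + bias, name="lay2_output")

    return out_layer2

Visual Representation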

Results

- Absolute error of 0.3 for occupancy

- Plans: Extend the input dataset to see differences between months

How it can be used

- Travel time propagation for road network links

- Create more accurate messages on traffic signs

- Recommendation of alternative routes

- Improve traffic simulation software

Caveats?

- It needs A LOT of training data

- Computationally intensive: a waste of energy?

- DKWGO (don't know what's going on), but it works!?

- So many hyperparameters to tune

- How often do I need to train the net?

Alternatives?

- Autoregressive methods (STARIMA etc.)

- Support-Vector Machines
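As a point of comparison, the simplest autoregressive model predicts the next value as a linear combination of the last p values of the same sensor. STARIMA extends this with spatially lagged terms from neighbouring sensors; the sketch below covers only the plain single-sensor AR(p) case, fitted by ordinary least squares with NumPy. Helper names and the toy series are illustrative:

```python
import numpy as np


def fit_ar(series, p):
    """Fit x_t = c + a_1 x_{t-1} + ... + a_p x_{t-p} by ordinary least squares."""
    rows = [series[t - p:t][::-1] for t in range(p, len(series))]  # lags 1..p
    X = np.column_stack([np.ones(len(rows)), np.array(rows)])      # intercept + lags
    coef, *_ = np.linalg.lstsq(X, series[p:], rcond=None)
    return coef  # [c, a_1, ..., a_p]


def predict_next(series, coef):
    """One-step-ahead forecast from the last p observations."""
    p = len(coef) - 1
    lags = series[-p:][::-1]  # most recent value first, matching a_1..a_p
    return coef[0] + coef[1:] @ lags


# Toy series generated exactly by x_t = 0.5 + 0.8 * x_{t-1}
series = np.zeros(30)
for t in range(1, len(series)):
    series[t] = 0.5 + 0.8 * series[t - 1]

coef = fit_ar(series, p=1)
print(np.round(coef, 3))  # [0.5 0.8]
print(predict_next(series, coef))
```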

Thanks

Felix Kunde

Research Assistant, MAGDa

Beuth University of Applied Sciences

fkunde@beuth-hochschule.de