An End-To-End Model for Business Analysis

R.P. Churchill

CBAP, PMP, CLSSBB, CSM, CSPO, CSD

What the BAs do:

- Intended Use Statement

- Assumptions, Capabilities, and Risks and Impacts

- Conceptual Model (As Is State)

- Data Source Document

- Requirements Document (To Be State)

- Design Document (Functional and Non-Functional, including Maintenance and Governance)

- Implementation Plan

- Test Plan and Results (Verification)

- Evaluation of Outputs and Operation Plan and Results (Validation)

- Evaluation of Usability (Validation)

- Acceptance Plan and Evaluation (Accreditation)

Intended Use Statement

This defines the customer's goals for what the new or modified process or system will accomplish.

It may describe technical and performance outcomes but must ultimately be expressed in terms of business value.

Each goal can be described in terms of:

- Key Questions

- Application

- Outputs and Data

Assumptions, Capabilities, and Risks & Impacts

Define the scope of the project and what capabilities and considerations will and will not be included.

Reasons to omit features and capabilities:

- Outside of natural organizational boundaries

- Insufficient data or understanding

- Impact on results is small (benefit not worth cost)

Describe the risks inherent in the effort and the possible impacts of risk items occurring.
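The source does not prescribe a format for recording risks and impacts. One common, simple approach is a risk register that ranks each item by exposure, which is the product of its estimated probability and impact. This approach is an assumption here and is not taken from the source. The `Risk` class, the `rank_risks` function, and the sample entries are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """One entry in a simple risk register (hypothetical structure)."""
    description: str
    probability: float  # estimated likelihood of occurring, 0.0 to 1.0
    impact: float       # estimated cost if it occurs, in any consistent unit

    @property
    def exposure(self) -> float:
        # Expected impact: a common, simple way to rank risks against each other.
        return self.probability * self.impact


def rank_risks(risks: list[Risk]) -> list[Risk]:
    """Return risks ordered from highest to lowest exposure."""
    return sorted(risks, key=lambda r: r.exposure, reverse=True)


# Hypothetical register entries, for illustration only.
register = [
    Risk("Key SME unavailable during discovery", 0.3, 40_000),
    Risk("Legacy data cannot be obtained", 0.1, 200_000),
    Risk("Interface spec changes mid-project", 0.5, 10_000),
]
for risk in rank_risks(register):
    print(f"{risk.exposure:>10,.0f}  {risk.description}")
```

Probability-times-impact scoring is easy to explain. It does flatten rare, severe risks and frequent, minor ones onto the same scale, so some teams also keep the two values visible side by side.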

If an existing process is to be modified, improved, or automated, discover all operations and data items. This defines the As Is State. (In simulation this is known as building a Conceptual Model.)

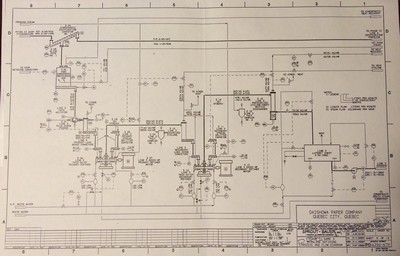

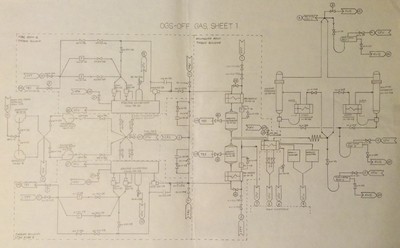

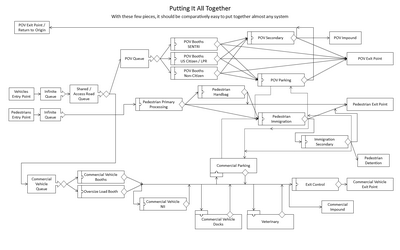

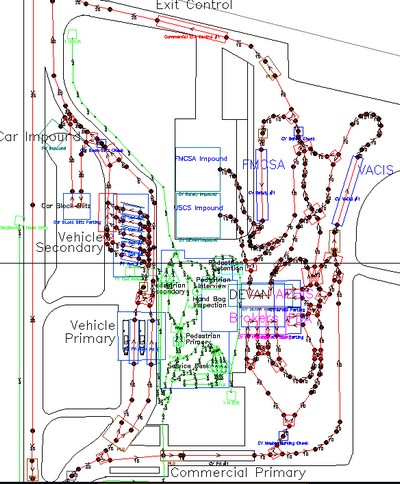

Conceptual Model / As Is State

If there is not an existing process, work backwards from the desired outcomes to determine what operations and data are required.

Map out the discovered process and document and collect data and parameters for each operation and communication.

Iteratively review the maps, data, and descriptions with customers and SMEs until all parties agree that understanding is accurate and complete!

Data Source Document

Data sources, sinks, and messages should be mapped to the Conceptual Model (As Is State representation).

Data items must be validated for accuracy, authority, and (most importantly) obtainability.

Interfaces should be identified at a high level of abstraction initially (e.g., with management and through initial discovery and scoping), and then progressively elaborated through design and implementation with process and implementation SMEs.

BAs who lack direct experience with code and databases should work closely with appropriate implementation SMEs (software engineers). This ensures that data items, flags, states, formats, and metadata are captured and defined in sufficient detail.
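A Data Source Document is often kept as a table with one row per data item. The sketch below assumes a hypothetical `DataItem` record. Its fields mirror the points above: source, format, Conceptual Model mapping, and the three validation checks. With that record, the outstanding validation work can be listed per item. The field names and the sample item are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass
class DataItem:
    """One row of a Data Source Document (hypothetical field layout)."""
    name: str
    source: str                   # system, document, or person that supplies it
    data_format: str              # e.g. "ISO 8601 timestamp", "integer count"
    maps_to: str                  # Conceptual Model element it belongs to
    accurate: bool = False        # checked against a trusted reference?
    authoritative: bool = False   # is the source the owner of record?
    obtainable: bool = False      # can it actually be obtained, on time and at cost?
    notes: list[str] = field(default_factory=list)

    def open_issues(self) -> list[str]:
        """List the validation checks this item has not yet passed."""
        checks = {
            "obtainability": self.obtainable,  # most important, so listed first
            "accuracy": self.accurate,
            "authority": self.authoritative,
        }
        return [name for name, passed in checks.items() if not passed]


item = DataItem(
    name="truck_arrival_time",
    source="Gate log (hypothetical)",
    data_format="ISO 8601 timestamp",
    maps_to="Arrival at inspection station",
    accurate=True,
    authoritative=True,
)
print(item.open_issues())  # ['obtainability']
```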

Requirements Document / To Be State

(including Acceptability Criteria)

Functional: What the system does. The operations carried out and the data consumed, transformed, and output. The decisions made or supported. The business value provided.

Non-Functional: What the system is. Expressed in terms of "-ilities," e.g., maintainability, availability, reliability, flexibility, understandability, usability, and so on.

This represents the To Be State.

All items in the requirements should map to items in the Conceptual Model in both directions. This mapping is contained in the Requirements Traceability Matrix (RTM), which can be implemented in many forms.

The requirements include the criteria by which the functional and non-functional requirements will be judged acceptable.
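The RTM can be implemented in many forms. One minimal sketch stores each RTM row as a (Conceptual Model item, requirement) pair and checks two-way traceability with set differences. The function name `trace_gaps` and the ID scheme are hypothetical.

```python
def trace_gaps(
    links: set[tuple[str, str]],
    model_items: set[str],
    requirements: set[str],
) -> dict[str, set[str]]:
    """Find items that break two-way traceability.

    links holds (model_item, requirement) pairs, one per RTM row.
    """
    traced_model = {m for m, _ in links}
    traced_reqs = {r for _, r in links}
    return {
        # Conceptual Model items that no requirement covers
        "untraced_model_items": model_items - traced_model,
        # Requirements with no source in the Conceptual Model
        "untraced_requirements": requirements - traced_reqs,
    }


model = {"CM-1 Receive order", "CM-2 Check credit", "CM-3 Ship goods"}
reqs = {"R-1", "R-2", "R-3"}
rtm = {("CM-1 Receive order", "R-1"), ("CM-2 Check credit", "R-2")}
print(trace_gaps(rtm, model, reqs))
```

Later layers (design, implementation, tests) can be checked the same way. Pass in the pairs that link each new layer to the one before it.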

Design Document

The design of the system is a description of how the system will be implemented and what resources will be required.

All elements of the design must map in both directions to all elements of the Conceptual Model and Requirements. This is accomplished by extending the Requirements Traceability Matrix (RTM).

BAs may or may not participate in the design of the system directly, but must absolutely ensure that all elements are mapped to previous (and subsequent) elements via the RTM.

The design of the system also includes plans for maintenance and governance going forward.

Implementation Plan

BAs help create the plan for how the implementation will be carried out. This involves project management methodologies and strategic decision-making for prioritizing items for implementation (according to business value and architectural logic), bringing capabilities online (including training), taking existing capabilities offline, and so on.

All elements of the implementation plan must map in both directions to all elements of the Conceptual Model and Requirements. This is accomplished by extending the Requirements Traceability Matrix (RTM).

Waterfall, Agile, and other methodologies may be used as appropriate to the scope and scale of the effort.
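One way to combine business value and architectural logic when sequencing work is to split the two jobs. Dependencies decide which items can start, and value decides among the items that are ready. The sketch below does this greedily with Python's standard `graphlib` module (3.9+). The value scores, item names, and the greedy rule itself are assumptions for illustration, not a method taken from the source.

```python
import heapq
from graphlib import TopologicalSorter


def sequence_items(values: dict[str, float], depends_on: dict[str, set[str]]) -> list[str]:
    """Order work items so prerequisites come first; among ready items, highest value first.

    Every item, including any prerequisite, must have an entry in values.
    """
    sorter = TopologicalSorter(depends_on)  # maps item -> set of prerequisites
    for item in values:
        sorter.add(item)  # also registers items that have no dependencies
    sorter.prepare()      # raises graphlib.CycleError if dependencies loop

    ready: list[tuple[float, str]] = []  # min-heap keyed on negative value
    order: list[str] = []
    while sorter.is_active():
        for item in sorter.get_ready():
            heapq.heappush(ready, (-values[item], item))
        _, item = heapq.heappop(ready)
        order.append(item)
        sorter.done(item)  # may unlock items that depended on this one
    return order


values = {"Database schema": 1, "Order entry screen": 8, "Credit check": 5, "Reports": 3}
depends_on = {
    "Order entry screen": {"Database schema"},
    "Credit check": {"Database schema"},
    "Reports": {"Order entry screen"},
}
print(sequence_items(values, depends_on))
# ['Database schema', 'Order entry screen', 'Credit check', 'Reports']
```

A greedy ordering like this is easy to explain to stakeholders. It is not guaranteed to maximize the value delivered over time, so treat its output as a starting point for discussion.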

Test Plan and Results (Verification)

The test plan describes how the system will be tested and the process for reporting, tracking, and resolving issues as they are identified.

All elements of the test plan and results must map in both directions to all previous elements in the Requirements Traceability Matrix (RTM).

Specialized test SMEs may conduct the majority of system testing, but implementers, managers, customers, maintainers, and end users should all be involved.

Provisions for testing, V&V, and quality should be built into the process from the beginning.
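The sketch below rolls up the verification status of every requirement. It assumes two things: test results are recorded per test ID, and the RTM links each requirement to its tests. All names are hypothetical.

```python
from collections import defaultdict


def verification_status(
    test_links: list[tuple[str, str]],  # (requirement_id, test_id) rows from the RTM
    results: dict[str, bool],           # test_id -> passed?
    requirements: list[str],
) -> dict[str, str]:
    """Classify each requirement as 'verified', 'failing', 'not run', or 'untested'."""
    tests_for: dict[str, list[str]] = defaultdict(list)
    for req, test in test_links:
        tests_for[req].append(test)

    status = {}
    for req in requirements:
        tests = tests_for.get(req, [])
        if not tests:
            status[req] = "untested"   # RTM gap: no test traces to this requirement
        elif any(results.get(t) is False for t in tests):
            status[req] = "failing"    # an issue to report, track, and resolve
        elif all(results.get(t) is True for t in tests):
            status[req] = "verified"
        else:
            status[req] = "not run"    # at least one linked test has no result yet
    return status


links = [("R1", "T1"), ("R1", "T2"), ("R2", "T3"), ("R3", "T4")]
results = {"T1": True, "T2": True, "T3": False}
print(verification_status(links, results, ["R1", "R2", "R3", "R4"]))
# {'R1': 'verified', 'R2': 'failing', 'R3': 'not run', 'R4': 'untested'}
```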

Evaluation of Outputs (Validation)

This process ensures that the system generates accurate results that meet the intended use of the system and provide the desired business value.

Not every element in the Requirements Traceability Matrix (RTM) needs to be addressed, but all of the stated acceptability criteria must be.

Validation of results may be objective (e.g., measured comparisons to known values in a simulation or calculation) or subjective (e.g., judged as "correct" by SMEs and managers with respect to novel situations and realizations of business value).
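In the objective case, comparing outputs to known values usually comes down to a tolerance check. The sketch below uses Python's `math.isclose`, which measures relative tolerance against the larger of the two magnitudes. The 5% tolerance and the output names are hypothetical stand-ins for the acceptability criteria the Requirements Document would actually specify.

```python
import math


def validate_outputs(
    outputs: dict[str, float],
    reference: dict[str, float],
    rel_tol: float = 0.05,  # hypothetical acceptability criterion: within 5%
) -> dict[str, bool]:
    """Compare each output with its known reference value; a missing output fails."""
    return {
        name: name in outputs and math.isclose(outputs[name], expected, rel_tol=rel_tol)
        for name, expected in reference.items()
    }


sim = {"avg_wait_min": 12.4, "throughput_per_hr": 57.0}
known = {"avg_wait_min": 12.0, "throughput_per_hr": 61.0}
print(validate_outputs(sim, known))
# {'avg_wait_min': True, 'throughput_per_hr': False}
```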

Evaluation of Usability (Validation continued)

This process ensures that the system:

- makes sense to the users

- enables manipulation of all relevant elements

- prevents and corrects errors

- maintains users' situational awareness

- includes documentation, training, and help

Acceptance Plan and Evaluation (Accreditation)

The BAs ensure that the customer's plans and criteria for acceptance are met. All of the stated acceptability criteria must be addressed.

This plan must include the process for handing the system or process over to the customer (internal or external). This process may include documentation, training, hardware, software, backups, licenses, and more.

The customer is the final judge of acceptance and may make three judgments:

- Full Acceptance

- Partial Acceptance with Limitations

- Non-Acceptance

Scope and Scale of the BA Effort

The process described here is the most detailed version. All projects and efforts perform all of these steps implicitly, but some steps may be streamlined or omitted as the size of the effort scales down.

Appropriate to processes that have a defined beginning and end. Not for ongoing, reactive, support and maintenance operations.

Works in Waterfall, Agile, and other project management environments.

The framework does not inhibit the ability to explore and test multiple options.

Context of the BA Effort

Tell 'em what you're gonna do, do it, tell 'em what you did!

Customer sets framework, BAs and Implementers work within it.

Context of the Effort with the Customer

| Accreditation Plan | V&V Plan | V&V Report | Accreditation Report |
|---|---|---|---|
| 1. Intended Use Statement | 1. Intended Use Statement | 1. Intended Use Statement | 1. Intended Use Statement |
| 2. Assumptions, Limitations, Risks & Impacts | 2. Assumptions, Limitations, Risks & Impacts | 2. Assumptions, Limitations, Risks & Impacts | 2. Assumptions, Limitations, Risks & Impacts |
| 3. Conceptual Model | 3. Conceptual Model | 3. Conceptual Model | 3. Conceptual Model |
| 4. Data Source Document | 4. Data Source Document | 4. Data Source Document | 4. Data Source Document |
| 5. Requirements Document | 5. Requirements Document | 5. Requirements Document | 5. Requirements Document |
| 6. Design Document | 6. Design Document | 6. Design Document | 6. Design Review |
| 7. Implementation Plan | 7. Implementation Plan | 7. Implementation Description | 7. Implementation Review |
| 8. Test Plan (Verification) | 8. Test Plan (Verification) | 8. Test Results (Verification) | 8. Test Results (Verification) |
| 9. Evaluation (Validation) | 9. Evaluation (Validation) | 9. Evaluation (Validation) | 9. Evaluation (Validation) |
| 10. Acceptance Plan (Accreditation) | 10. Acceptance Plan (Accreditation) | 10. Acceptance Recommendation (Accreditation) | 10. Acceptance Recommendation (Accreditation) |
| Appendices | Appendices | Appendices | Appendices |
| A. Key Participants, Distribution | A. Key Participants, Distribution | A. Key Participants, Distribution | A. Key Participants, Distribution |
| B. Traceability Matrix | B. Traceability Matrix | B. Traceability Matrix | B. Traceability Matrix |
| C. Glossary, Acronyms | C. Glossary, Acronyms | C. Glossary, Acronyms | C. Glossary, Acronyms |
| D. References | D. References | D. References | D. References |
| E. Programmatics | E. Programmatics | E. Programmatics | E. Programmatics |

Key Concepts: Discovery vs. Data Collection

Discovery is a qualitative process. It identifies nouns (things) and verbs (actions, transformations, decisions, calculations).

Data collection is a quantitative process. It identifies adjectives (colors, dimensions, rates, times, volumes, capacities, materials, properties).

Discovery comes first, so you know what data you need to collect.

Imagine you're going to simulate or automate the process. What values do you need? This is the information the software teams will need.
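To make the split concrete, the sketch below uses a hypothetical `Element` record. Discovery supplies each element's name and kind, which covers the nouns and verbs. An attribute with no value yet marks an adjective that data collection still has to gather. The inspection-process example is illustrative only.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Element:
    """A noun (entity) or verb (operation) identified during discovery."""
    name: str
    kind: str  # "entity" or "operation"
    # Adjectives to collect; None means "identified but not yet measured".
    attributes: dict[str, Optional[float]] = field(default_factory=dict)


def data_to_collect(elements: list[Element]) -> list[str]:
    """List every attribute that discovery identified but data collection has not filled."""
    return [
        f"{e.name}.{attr}"
        for e in elements
        for attr, value in e.attributes.items()
        if value is None
    ]


process = [
    Element("Passenger", "entity", {"arrival_rate_per_hr": 120.0}),
    Element("Document check", "operation", {"duration_s": None, "booths": 4.0}),
    Element("Secondary inspection", "operation", {"diversion_pct": None}),
]
print(data_to_collect(process))
# ['Document check.duration_s', 'Secondary inspection.diversion_pct']
```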

Key Concept: Discovery

- Find out what the customer needs

- Map and document the existing process (As Is), if an existing process is to be modified

- Create a new or modified process (To Be); if there is no existing process, proceed directly to this step

- Implement the Solution

- Feedback & Testing

Key Concept: Process Mapping

- Describes what comes in, where it goes, how it is transformed, and what comes out.

- Describes the movement and storage of information, material, people, and other entities.

- Maps define the scope of a process. Links to connected processes or "everything else" are called interfaces.

- Maps are presented at whatever level of detail makes sense.

- Process elements within maps can themselves be processes with their own maps.

- State, timing, and other data can be included.

- Entities in a process can be split and combined.

- Processes and entities may be continuous or discrete.
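Two of the mapping ideas above are structural: interfaces to "everything else," and process elements that carry their own maps. The sketch below models both with a hypothetical recursive `Process` record. It then drills down to the most detailed level mapped so far. The claims-handling example is made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Process:
    """A process map node; each step can itself be a process with its own map."""
    name: str
    inputs: list[str] = field(default_factory=list)   # interfaces in from "everything else"
    outputs: list[str] = field(default_factory=list)  # interfaces out to "everything else"
    steps: list["Process"] = field(default_factory=list)


def leaf_operations(p: Process, path: str = "") -> list[str]:
    """Return the lowest-level operations mapped so far, with their full paths."""
    here = f"{path}/{p.name}" if path else p.name
    if not p.steps:
        return [here]
    result = []
    for step in p.steps:
        result.extend(leaf_operations(step, here))
    return result


claims = Process(
    "Handle claim",
    inputs=["Claim form"],
    outputs=["Payment", "Denial letter"],
    steps=[
        Process("Intake"),
        Process("Review", steps=[Process("Check coverage"), Process("Assess damage")]),
        Process("Decide"),
    ],
)
print(leaf_operations(claims))
# ['Handle claim/Intake', 'Handle claim/Review/Check coverage',
#  'Handle claim/Review/Assess damage', 'Handle claim/Decide']
```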

Key Concept: Process Mapping (continued)

- Processes may be mapped differently based on needs, industry standards, and the information to be represented.

Key Concept: Process Mapping (continued)

- S-I-P-O-C vs. C-O-P-I-S

- Any number of inputs and outputs are possible

Diagram: four Sources feed four Inputs into a single Process, which produces two Outputs for two Customers.

Key Concept: Process Characterization (Data Collection)

- Captures qualitative descriptions of entity types and characteristics, process types and characteristics, and decisions made.

- Captures quantitative data:

  - physical dimensions, volumes, and storage capacities

  - arrival and departure rates and times

  - diversion percentages (what parts of outputs go where)

  - process durations

  - whatever is needed to describe transformations

  - counts or quantities of what's stored

  - velocities, frequencies, and fluxes
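Collected rates, durations, and diversion percentages feed directly into calculations. The sketch below assumes a single station with identical parallel servers and consistent units. Under those assumptions, utilization is the offered load (arrival rate times process duration) divided by the number of servers. Diversion percentages then split an output flow among destinations. The function names and station figures are hypothetical.

```python
def utilization(arrivals_per_hr: float, duration_min: float, servers: int) -> float:
    """Fraction of available server time that is busy (offered load / capacity)."""
    offered_load = arrivals_per_hr * duration_min / 60.0  # busy server-hours per hour
    return offered_load / servers


def divert(flow_per_hr: float, split_pct: dict[str, float]) -> dict[str, float]:
    """Split an output flow among destinations by diversion percentage."""
    if abs(sum(split_pct.values()) - 100.0) > 1e-9:
        raise ValueError("diversion percentages must sum to 100")
    return {dest: flow_per_hr * pct / 100.0 for dest, pct in split_pct.items()}


# Hypothetical inspection station: 60 arrivals/hr, 3 minutes each, 4 booths.
print(utilization(60, 3.0, 4))                       # 0.75
print(divert(60, {"Released": 85.0, "Secondary": 15.0}))
# {'Released': 51.0, 'Secondary': 9.0}
```

A utilization at or above 1.0 means work arrives faster than the station can process it, so a queue would grow without limit.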

Key Concept: Process Characterization (Data Collection) (continued)

Data collection corresponds to the Observation technique in the BABOK Guide. Methods include:

- Walkthroughs: guided and unguided (Waste Walk)

- Drawings, Documents, Manuals, Specifications

- Electronic Collection (real-time vs. historical, integrated vs. external sensors)

- Visual / In-Person (notes, logsheets, checklists, mobile apps)

- Interviews (with SMEs)

- Surveys

- Video

- Photos

- Calculations

- Documented Procedures and Policies

Key Concept: Domain Knowledge Acquisition

- What you need to know to capture the process details

- What you need to know to analyze the operations

- What you need to know to perform calculations

- What you need to know to make sure you don't miss anything

Diagram: three Sources feed three Inputs into a single Process, which produces two Outputs for two Customers.

Process Improvement

- Incremental improvement vs. Quantum Leap (The Big Kill!)

- Rearrangement (Lean)

- Compression (Lean) (subclass of Rearrangement)

- Substitution / Elimination / Automation

- Modify a sub-process and see how it affects the whole process

- See what the new constraints are (Theory of Constraints)

Diagram: three Sources feed three Inputs into a single Process, which produces two Outputs for two Customers.
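The sketch below is a simplified way to see what the new constraints are after modifying a sub-process. It assumes a hypothetical line whose steps run in series with no rework. In that case throughput is capped by the step with the lowest capacity, so improving that step moves the constraint elsewhere. The step names and capacities are made up for illustration.

```python
def bottleneck(capacity_per_hr: dict[str, float]) -> tuple[str, float]:
    """In a serial line, throughput is limited by the slowest step (the constraint)."""
    step = min(capacity_per_hr, key=capacity_per_hr.get)
    return step, capacity_per_hr[step]


line = {"Receive": 120.0, "Inspect": 45.0, "Repair": 60.0, "Ship": 100.0}
print(bottleneck(line))      # ('Inspect', 45.0)

# Improve the constraint (e.g. add a second inspector) and see what limits the line next.
improved = {**line, "Inspect": 90.0}
print(bottleneck(improved))  # ('Repair', 60.0)
```

Real processes with branching, rework, or variability usually need simulation rather than a simple minimum. The direction of the analysis stays the same: find the constraint, relieve it, and look again.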

This presentation and other information can be found at my website.

E-mail: bob@rpchurchill.com

LinkedIn: linkedin.com/in/robertpchurchill