The OpenHPC guide for overwhelmed sysadmins

Andrés Díaz-Gil,

Head of the HPC and IT department at:

Overview

- Motivation

- HPC in the IFT

- OpenHPC overview

- OpenHPC Demo

- Conclusions

Motivation

Once upon a time...

Spanish public research institutions used to invest in HPC hardware

But not so much in the personnel to take care of it

Now the situation is even worse:

No people and fewer machine updates

Many overwhelmed sysadmins

If you are in this situation and do not want to end up like this:

Pay attention

The IFT

Institute for Theoretical Physics, Madrid

Created in 2003, it is the only Spanish center dedicated entirely to research in Theoretical Physics.

It has twice received the most prestigious Spanish award for excellence:

The Severo Ochoa

HPC in the IFT

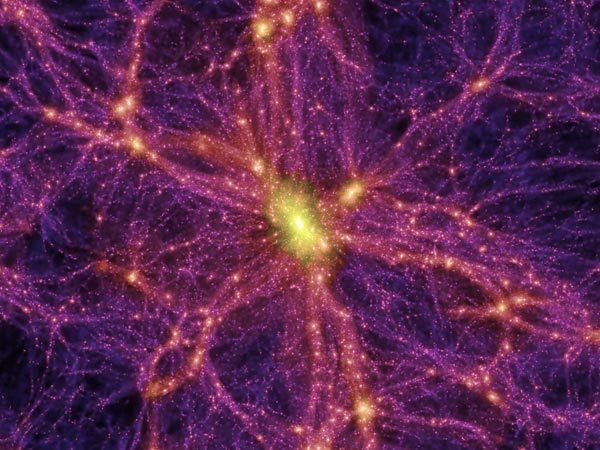

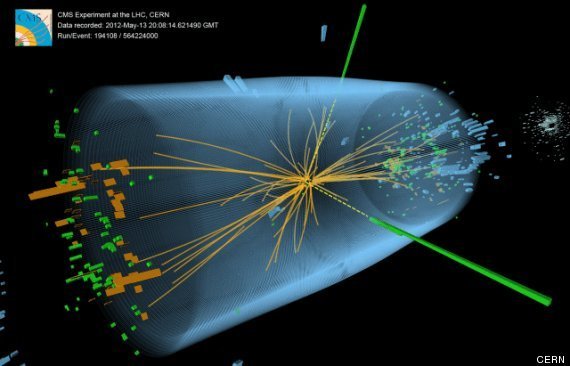

It is hard to run experiments in Theoretical Physics, so HPC simulations are becoming more important every day:

Origin and Evolution of the Universe

Elementary Particles

Dark Matter/Energy

HPC Resources

Not having a "big" cluster, but having "big" problems

The main resource of the IFT is the cluster HYDRA:

- Made of 100 compute nodes

- Infiniband network

- LUSTRE filesystem

We met all the conditions described in the Motivation:

- No full-time dedicated HPC sysadmins

- Proprietary "cluster suite"

- Heterogeneous cluster

- New hardware needed

"Do not touch it if it is working" policy

OpenHPC Overview

What it is NOT

- It is not a proprietary software stack

What is it?

- OpenHPC is a Linux Foundation Collaborative Project

- Basically a repository that:

- Provides a reference collection of open-source HPC software components

- Awesome documentation and installation guide

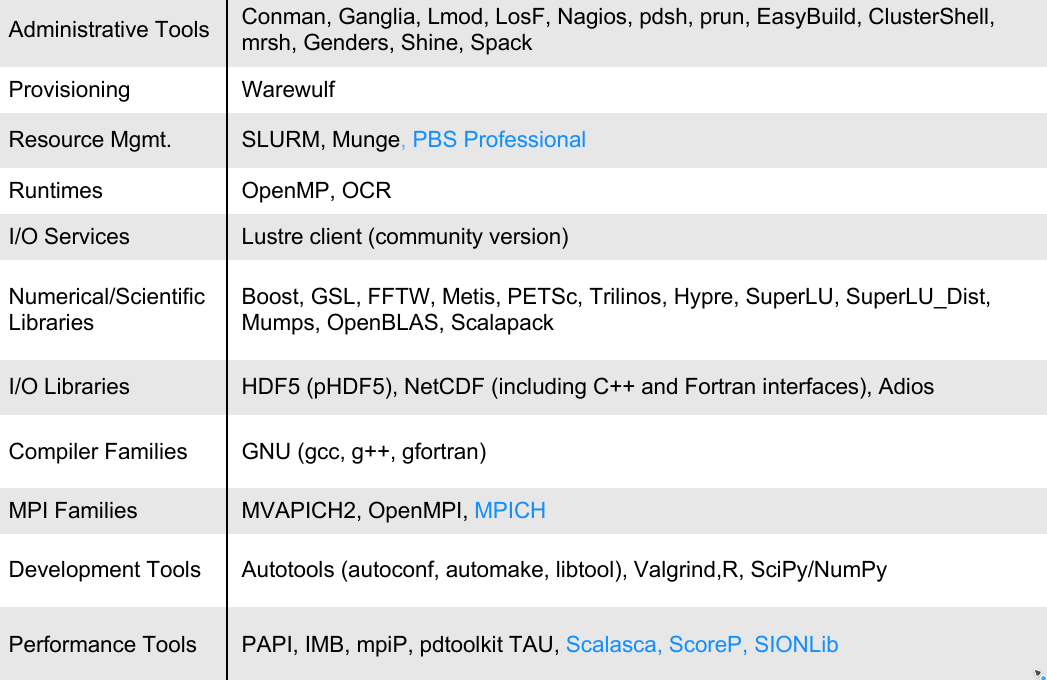

OpenHPC - S/W components

*Source: Karl W. Schulz, SC16 Community BOF

Currently at version 1.3

Supported base OS (BOS): CentOS 7.3 and SLES 12 SP2

OHPC Main Advantages

- All open-source, modern software that is well known in the community

- Installed just by enabling a repository. No compilation. Totally reversible (packages carry the ohpc suffix)

- Components can be added/replaced/skipped by the ones of your choice

- Awesome step-by-step Installation and configuration guide (Recipes) and automation scripts:

One recipe for each combination of OS flavor, resource manager (SLURM, PBSPro) and CPU architecture, each with its own automation script.

input.local + recipe.sh == installed system
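With many compute nodes, filling in the per-node entries of `input.local` by hand gets tedious. The sketch below generates those entries from a list of MAC addresses. It is a minimal illustration, not part of OpenHPC: the function `render_input_local` is hypothetical, and the variable names (`sms_name`, `sms_ip`, `num_computes`, `c_name[]`, `c_ip[]`, `c_mac[]`) are written as I recall them from the recipe template, so check them against the `input.local` shipped with your recipe before using the output.

```python
from ipaddress import IPv4Address


def render_input_local(sms_name, sms_ip, macs, first_compute_ip, prefix="c"):
    """Return shell variable assignments in the style of an input.local file.

    Hypothetical helper: variable names are assumed to match the OpenHPC
    recipe template and must be verified against your own copy.
    """
    base = IPv4Address(first_compute_ip)
    lines = [
        f'sms_name="{sms_name}"',
        f'sms_ip="{sms_ip}"',
        f'num_computes="{len(macs)}"',
    ]
    for i, mac in enumerate(macs):
        # Node names are 1-based (c1, c2, ...); the shell arrays are 0-based.
        lines.append(f'c_name[{i}]="{prefix}{i + 1}"')
        # Compute nodes get consecutive IPs starting at first_compute_ip.
        lines.append(f'c_ip[{i}]="{base + i}"')
        lines.append(f'c_mac[{i}]="{mac.lower()}"')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Example values only; replace with your own master and node data.
    print(render_input_local(
        "sms",
        "192.168.1.1",
        ["00:1A:2B:3C:4D:5E", "00:1A:2B:3C:4D:5F"],
        "192.168.1.10",
    ))
```

The generated text can be merged into your copy of `input.local` before running `recipe.sh`; the other settings the recipe expects still have to be filled in by hand.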

OpenHPC Demo

Aka: "How to have an HPC cluster working in 15 minutes from scratch"

OpenHPC Typical Architecture

SLURM Manager

Warewulf: Bootstrap+VNFS

DHCP, TFTP

NFS (/home, /opt/ohpc/pub)

Diskless clients

Stateless Cluster

Demo Part 1

Base OS -> Complete Master

Demo Part 2

Build&Deploy Compute Image

Demo Part 3

Resource Manager: Startup&Run
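Once the resource manager is up, a quick smoke test is to submit a trivial job that prints the hostname of each allocated node. The sketch below builds such a SLURM batch script and submits it if `sbatch` is available on the machine. It is only an illustration under stated assumptions: `render_job_script` is a hypothetical helper, and the job name, node count and walltime are example values, not the ones used in the demo.

```python
import shutil
import subprocess


def render_job_script(job_name, nodes, ntasks, walltime, command):
    """Return the text of a SLURM batch script (hypothetical helper)."""
    return "\n".join([
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks={ntasks}",
        f"#SBATCH --time={walltime}",
        # %j is replaced by SLURM with the job ID.
        f"#SBATCH --output={job_name}.%j.out",
        "",
        command,
        "",
    ])


if __name__ == "__main__":
    # Example values only: 2 nodes, 4 tasks, 5 minutes.
    script = render_job_script("hello", 2, 4, "00:05:00", "srun hostname")
    if shutil.which("sbatch"):
        # sbatch reads the script from stdin when no file name is given.
        subprocess.run(["sbatch"], input=script, text=True, check=True)
    else:
        # No SLURM on this machine: just show what would be submitted.
        print(script)
```

If the output file lists the expected compute node names, provisioning and the resource manager are both working.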

OHPC Pros

- All modern open-source software

- Provided with templates and scripts

- Awesome Installation Guides

- Fast and easy to install

- Stateless cluster (optional): lower energy consumption, fewer hardware failures

Conclusions

OHPC Cons

- Actually none

Some IMOs

- Warewulf documentation is a bit scarce

- Integration with a preexisting LUSTRE may be a bit more involved

- I would like NIS (or something similar) to be included in the guides/scripts
