"Crawling" JS Pages

Andrei Demian, Software Engineer @ OPSWAT. TimJS - 2015

the requirements

- make JS content available to crawlers
- make JS content shareable

prerender.io

A Node.js server that uses PhantomJS to render a JavaScript page as static HTML.

why prerender.io

- open source
- easy to set up
- well documented
- lots of plugins & middlewares
- paid service option (full compatibility)

supported middlewares

- apache
- nginx
- javascript
- php
- ...

available plugins

- basic auth
- cache (S3, MongoDB, memory, LevelDB)
- whitelist / blacklist requests
- access logger (written by me)
- ...
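To make the whitelist/blacklist and memory-cache ideas concrete, here is a minimal sketch of the logic behind them in plain JavaScript. It is not prerender's plugin API: `isAllowed`, `MemoryCache` and the example patterns are hypothetical names chosen for illustration.

```javascript
// Hypothetical helpers illustrating two plugin ideas; NOT prerender's plugin API.

// A URL may be rendered if it matches some whitelist pattern (when a
// whitelist is given) and no blacklist pattern.
function isAllowed(url, { whitelist = [], blacklist = [] } = {}) {
  if (whitelist.length > 0 && !whitelist.some((re) => re.test(url))) return false;
  return !blacklist.some((re) => re.test(url));
}

// Minimal in-memory cache: rendered HTML keyed by URL, expiring after ttlMs.
// `now` is injectable so expiry can be checked without waiting.
class MemoryCache {
  constructor(ttlMs, now = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now;
    this.entries = new Map();
  }

  get(url) {
    const entry = this.entries.get(url);
    if (!entry) return undefined;
    if (this.now() - entry.storedAt > this.ttlMs) {
      this.entries.delete(url); // expired: force a fresh render
      return undefined;
    }
    return entry.html;
  }

  set(url, html) {
    this.entries.set(url, { html, storedAt: this.now() });
  }
}

// Example: only render public pages of one site, never the admin area.
const rules = {
  whitelist: [/^https?:\/\/www\.example\.com\//],
  blacklist: [/\/admin\//],
};
console.log(isAllowed('http://www.example.com/user/1', rules));      // true
console.log(isAllowed('http://www.example.com/admin/users', rules)); // false
```

Injecting the clock keeps the cache deterministic to test; a real cache plugin would also need a size limit or eviction policy, which this sketch leaves out.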


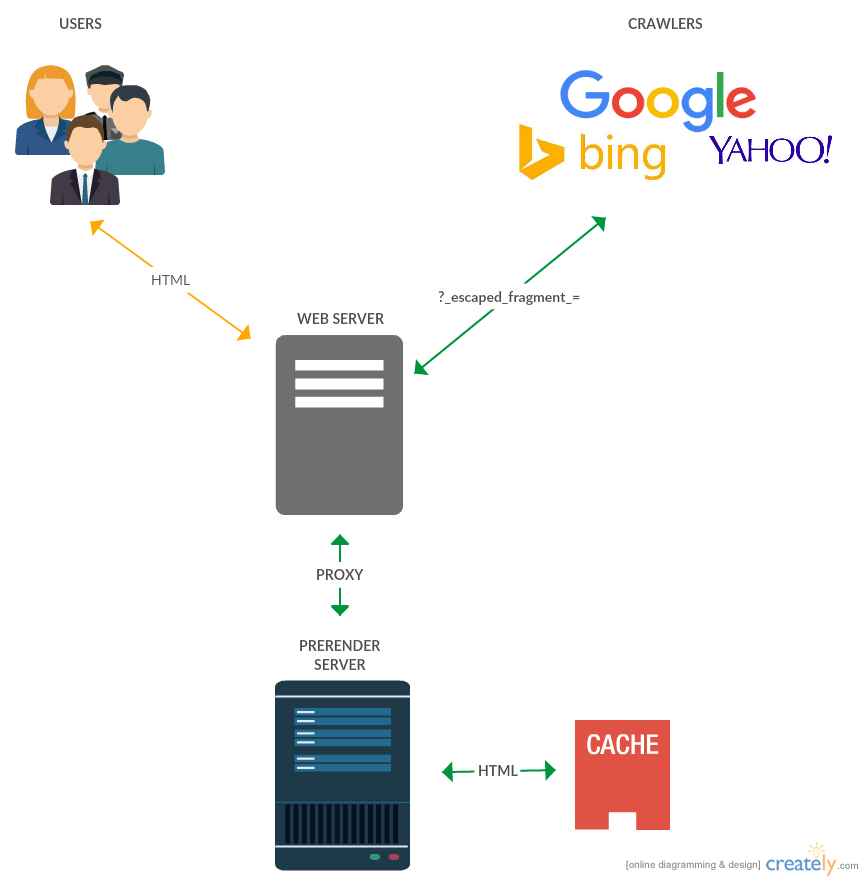

escaped fragment

If you use HTML5 pushState (recommended), put this tag in the page's <head>:

<meta name="fragment" content="!">

http://www.example.com/user/1 => http://www.example.com/user/1?_escaped_fragment_=

If you use the hashbang (#!):

http://www.example.com/#!/user/1 => http://www.example.com/?_escaped_fragment_=/user/1
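The two mappings above can be sketched as a pair of plain JavaScript functions: one producing the URL a crawler requests, one undoing it on the server side so it knows which page state to render. `toEscapedFragmentUrl` and `fromEscapedFragmentUrl` are hypothetical helpers, and the escaping is a simplified subset of the AJAX crawling scheme (control characters, space, `#`, `%`, `&`, `+` and DEL are percent-encoded; non-ASCII characters are not handled).

```javascript
// Percent-encode the characters the AJAX crawling scheme escapes in the
// fragment value (simplified: control chars, space, '#', '%', '&', '+', DEL).
function escapeFragment(fragment) {
  return fragment.replace(/[\x00-\x20#%&+\x7F]/g, (c) =>
    '%' + c.charCodeAt(0).toString(16).toUpperCase().padStart(2, '0'));
}

// Pretty URL -> URL the crawler requests.
//   hashbang:  http://host/#!/user/1 -> http://host/?_escaped_fragment_=/user/1
//   pushState: http://host/user/1    -> http://host/user/1?_escaped_fragment_=
//   (pushState pages must carry <meta name="fragment" content="!">)
function toEscapedFragmentUrl(prettyUrl) {
  const hashIndex = prettyUrl.indexOf('#');
  const base = hashIndex === -1 ? prettyUrl : prettyUrl.slice(0, hashIndex);
  const hash = hashIndex === -1 ? '' : prettyUrl.slice(hashIndex);
  // Only a "#!" fragment carries app state; a plain "#anchor" is dropped.
  const fragment = hash.startsWith('#!') ? hash.slice(2) : '';
  const separator = base.includes('?') ? '&' : '?';
  return base + separator + '_escaped_fragment_=' + escapeFragment(fragment);
}

// Crawler URL -> pretty URL, i.e. the page state the server should render.
function fromEscapedFragmentUrl(crawlerUrl) {
  const q = crawlerUrl.indexOf('?');
  if (q === -1) return crawlerUrl;
  const KEY = '_escaped_fragment_';
  const kept = [];
  let fragment = null;
  for (const param of crawlerUrl.slice(q + 1).split('&')) {
    if (param === KEY || param.startsWith(KEY + '=')) {
      fragment = decodeURIComponent(param.slice(KEY.length + 1));
    } else {
      kept.push(param);
    }
  }
  if (fragment === null) return crawlerUrl; // not a crawler request
  const url = crawlerUrl.slice(0, q) + (kept.length ? '?' + kept.join('&') : '');
  // An empty value means a pushState page: there is no hashbang to restore.
  return fragment ? url + '#!' + fragment : url;
}
```

Note that `/` is deliberately left unescaped, which is why the crawler URL keeps the readable `/user/1` form.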

.htaccess magic

RewriteEngine On
# [P] proxies the request through mod_proxy, which must be enabled
RewriteCond %{HTTP_USER_AGENT} (Google|Facebot|Googlebot|bingbot) [NC]
RewriteCond %{QUERY_STRING} _escaped_fragment_
RewriteRule ^ http://prerender.local/http://yourdomain.com%{REQUEST_URI} [P,L]
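For readers more at home in Node than in mod_rewrite, the same decision can be sketched as a plain JavaScript function, roughly what a JavaScript middleware does before forwarding a request to prerender. `prerenderTarget` is a hypothetical name; `prerender.local` and `yourdomain.com` are the placeholders from the rule, and the prerender server is assumed to take the full page URL appended to its own path, as the rule does.

```javascript
// Same conditions as the rewrite rule: crawler user agent ([NC] means
// case-insensitive) AND "_escaped_fragment_" somewhere in the query string.
const CRAWLER_UA = /(Google|Facebot|Googlebot|bingbot)/i;
const PRERENDER_BASE = 'http://prerender.local'; // prerender host from the rule
const SITE_ORIGIN = 'http://yourdomain.com';     // placeholder from the rule

// Returns the URL to proxy to, or null to serve the request normally.
// requestUri is the path ("/user/1"); queryString has no leading "?".
function prerenderTarget(userAgent, requestUri, queryString) {
  if (!CRAWLER_UA.test(userAgent || '')) return null;
  if (!/_escaped_fragment_/.test(queryString || '')) return null;
  // mod_rewrite carries the original query string over to the proxied URL.
  const pageUrl = SITE_ORIGIN + requestUri + '?' + queryString;
  return PRERENDER_BASE + '/' + pageUrl;
}

console.log(prerenderTarget('Mozilla/5.0 (compatible; Googlebot/2.1)', '/user/1', '_escaped_fragment_='));
// -> http://prerender.local/http://yourdomain.com/user/1?_escaped_fragment_=
```

Both conditions must hold, so a normal browser request, or a crawler request for a page without the fragment meta tag, is served unchanged.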

tricks

// Page is ready

window.prerenderReady = true;
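As far as I know from prerender's documentation (worth re-checking for your version), the flag only makes prerender wait if the page first sets it to `false` as early as possible, then flips it to `true` once the AJAX content is rendered. A minimal sketch of that pattern, where `loadStats` is a hypothetical stand-in for the page's real data calls and `win` is a stub so the snippet also runs under Node:

```javascript
// In a browser `window` exists; under Node this stub stands in for it.
const win = typeof window !== 'undefined' ? window : {};

// 1. As early as possible (e.g. an inline script in <head>):
//    tell prerender not to take the snapshot yet.
win.prerenderReady = false;

// Hypothetical data load standing in for the page's real AJAX calls.
function loadStats() {
  return new Promise((resolve) => setTimeout(() => resolve({ users: 42 }), 10));
}

// 2. Once the content has been fetched and rendered, flip the flag.
const pageLoaded = loadStats().then((stats) => {
  // ...render `stats` into the DOM here...
  win.prerenderReady = true;
  return stats;
});
```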

// Replace #! in shared URLs

www.mydomain.com/#!/stats => www.mydomain.com/sharer/stats
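Generating the share link from the current hashbang URL can be sketched as a small function. `toShareUrl` is a hypothetical helper, and it assumes the app lives at the site root so that the `/sharer/` prefix lines up with the rewrite rules that handle it.

```javascript
// Hypothetical helper: turn a hashbang URL into the /sharer/ URL that is
// safe to share (the /sharer/ prefix is the one used in this deck).
function toShareUrl(hashbangUrl) {
  const i = hashbangUrl.indexOf('#!');
  if (i === -1) return hashbangUrl; // no hashbang: nothing to rewrite
  const origin = hashbangUrl.slice(0, i).replace(/\/$/, ''); // drop trailing "/"
  const route = hashbangUrl.slice(i + 2).replace(/^\//, '');  // drop leading "/"
  return origin + '/sharer/' + route;
}

console.log(toShareUrl('http://www.mydomain.com/#!/stats'));
// -> http://www.mydomain.com/sharer/stats
```

Shared links then never contain `#!`, so social networks that ignore fragments still see a distinct URL per page.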

// hashbang urls in angular (inside a module config block)

$locationProvider.hashPrefix('!');

tricks

# Detect crawlers and send them (via _escaped_fragment_) to the prerender server
RewriteCond %{HTTP_USER_AGENT} (Google|Facebot|Googlebot|bingbot) [NC]
RewriteRule ^sharer/(.*) /?_escaped_fragment_=/$1 [P,L]

# Detect humans and redirect them to #!
RewriteCond %{HTTP_USER_AGENT} !(Google|Facebot|Googlebot|bingbot) [NC]
RewriteRule ^sharer/(.*) /#!/$1 [NE,L,R=301]
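The two `/sharer/` rules amount to a split on the user agent, sketched here as a plain JavaScript function. `routeSharer` and the shape of the returned object are hypothetical; the path is passed without its leading slash, as mod_rewrite sees it in `.htaccess`.

```javascript
// Same crawler pattern as the RewriteCond lines; [NC] = case-insensitive.
const CRAWLER_UA = /(Google|Facebot|Googlebot|bingbot)/i;

// path: request path without the leading "/", e.g. "sharer/stats".
// Returns what the server should do, or null for non-share URLs.
function routeSharer(path, userAgent) {
  const match = /^sharer\/(.*)/.exec(path);
  if (!match) return null; // not a share URL: leave the request alone
  if (CRAWLER_UA.test(userAgent || '')) {
    // Crawler: serve the escaped-fragment URL (proxied, like [P]).
    return { action: 'proxy', target: '/?_escaped_fragment_=/' + match[1] };
  }
  // Human: permanent redirect to the hashbang route ([R=301]; [NE] keeps "#").
  return { action: 'redirect', status: 301, location: '/#!/' + match[1] };
}
```

Keeping the redirect permanent (301) lets browsers cache it, while crawlers always get the rendered HTML at the shareable URL.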

Questions?

Thanks!

Crawling with Prerender.io

By Andrei Demian
