Tying Node.js, Socket.IO and Rails together with Redis

Why are we here?

We all enjoy fast, responsive apps that update in near real time without JavaScript long polling or manually refreshing the page.

I'm here to share some of the joy I've had working with Node.js's socket.io library to provide an easy to use WebSocket API.

JS on the server side

Now you may be thinking: why talk about JavaScript on the server side in a Rails group? Not all problems call for the same solution, and strict cookie-cutter, monkey-see-monkey-do coding will eventually land you in a world of hurt. Yes, there are high-performing Ruby solutions built on EventMachine that can push updates to the client. The main reason for sticking with Socket.IO in this discussion is that, in the benchmarks that follow, EventMachine only performed well with a fairly small number of concurrent requests.

Misleading Metrics Debunked

You may have seen references to https://gist.github.com/Evangenieur/889761 that indicate EventMachine is faster than Node.js:

    node server.js
    ab -n 10000 -c 100 http://localhost:3000/
    -> 20ms / request

    ruby em_http_serv.rb
    ab -n 10000 -c 100 http://localhost:3000/
    -> 12ms / request

Reality

Out of curiosity, I ran additional benchmarks using Apache JMeter (https://gist.github.com/ccyphers/5620898):

JMeter report against Node

No HTTP error reported after several executions

| sampler_label | aggregate_report_count | aggregate_report_error% | Request/Sec |
| --- | --- | --- | --- |
| HTTP Request | 20000 | 0 | 8952.551477171 |
| TOTAL | 20000 | 0 | 8952.551477171 |

JMeter report against EventMachine

After numerous executions I was unable to obtain a run where all 20000 HTTP requests completed without error, and many executions failed after just a few thousand requests. The best results I could produce after many executions:

| sampler_label | aggregate_report_count | aggregate_report_error% | Request/Sec |
| --- | --- | --- | --- |
| HTTP Request | 19070 | 0.0640797063 | 1323.7539913925 |
| TOTAL | 19070 | 0.0640797063 | 1323.7539913925 |

Don't Drink the Kool-Aid

Just because the cool kids are using it, should you?

Building on third-party libraries does save you time. But if you don't consider the performance ramifications at every step of development, your solution will not scale well, and even where it can be scaled by adding servers, the extra hosting can cost more than the developer hours a properly thought-out implementation would have taken.

Using what's popular at the time with performance blinders on is a little like trying to eat a civilized meal during a food fight.

<PERFORMANCE RANT>

Why bring up the performance rant?

Don't get me wrong, I am an avid Ruby developer. But by keeping a few techniques in mind, we can keep the flexibility Ruby provides for rapid application development while facing less refactoring and rewriting later if an application takes off and gains a huge user base.

Keep in Mind

- When at all possible, avoid pure-Ruby gems and libraries. Instead, use bindings to C shared objects.

- Avoid libraries that have to fork standalone binaries for performance critical areas

- Instantiating an object is one of the most expensive things you can do in Ruby. When at all possible, reduce the number of class instances you create.

- Preload as much as possible using items such as Proc and def self.method_name.

- Functional programming is usually faster performing than OOP. Try to find a balance between organized OOP and functional programming.

</PERFORMANCE RANT>

And now back to our regularly scheduled programming

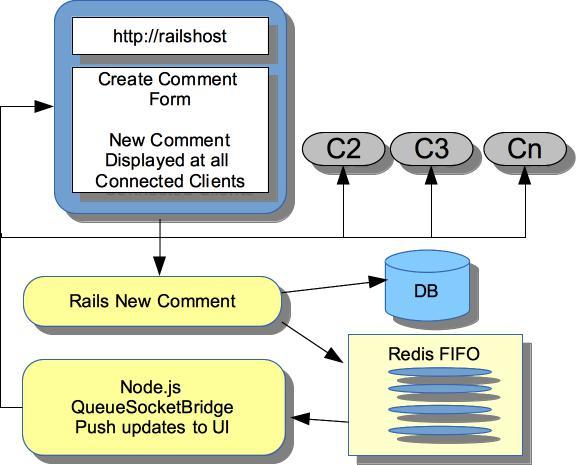

Playing Together: Tying Node.js, Socket.IO and Rails together with Redis

Keys to Structuring a WebApp Platform

- Use as few Rails-specific calls as possible when building the UI. Some of the advantages:

  - If the backend application server is ever updated or rewritten in another framework or language, the UI will continue to work as long as the same API data is returned.

  - Dynamic data within layouts and partials is harder to cache than static assets.

  - UI and backend development can more easily proceed in parallel. The front-end developer can stub, mock and use Ajax spies before the backend API is available. The backend developer can concentrate on core modeling of the data and write a solution that's more reliable, available, maintainable and testable.

Keys to Structuring a WebApp Platform

- When building data APIs, consider performing the expensive operations once and caching the results.

- Any time an HTTP request can't be answered within a reasonable time, offload the job to an agent.

- Consider usability and have a good means in place to inform the client when the job is complete.

- Don't be afraid to use queue systems for tasks other than background processing.
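The first bullet above can be sketched as a small memoizing wrapper. This is only an illustration under stated assumptions: `cacheResults` and `buildReport` are hypothetical names that do not come from the deck, and an in-process `Map` stands in for whatever cache a real deployment would use.

```javascript
// Hypothetical helper: wrap an expensive function so each distinct key is
// computed once and later calls are served from an in-memory Map.
function cacheResults(expensiveFn) {
  const cache = new Map();
  return function (key) {
    if (!cache.has(key)) {
      cache.set(key, expensiveFn(key));
    }
    return cache.get(key);
  };
}

// Stand-in for an expensive API computation; counts how often it really runs.
let computeCount = 0;
function buildReport(postId) {
  computeCount += 1;
  return { postId: postId, summary: 'report for post ' + postId };
}

const cachedReport = cacheResults(buildReport);
cachedReport(1);
cachedReport(1); // served from the cache, buildReport is not called again
cachedReport(2);
console.log(computeCount); // 2
```

A cache like this never expires entries, so a real API would also need an invalidation rule, for example clearing a post's entry whenever a new comment is saved.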

Request Lifecycle

Guts for Rails Blog New Comment

    def create
      post = Post.find(params[:post_id])
      comment = post.comments.create(params.permit(:commenter, :body, :post_id))
      res = {:action => 'new', :comment => comment.attributes.merge(:post_id => post.id)}
      # Only notify watchers when the comment was actually saved.
      # `redis` is a Redis connection set up elsewhere in the app.
      redis.publish("comments_for_post_#{post.id}", res.to_json) if comment.persisted?
      render :json => {:results => comment.errors.empty?}.to_json
    end

Anatomy for WebSocket push

QueueSocketBridge is a function taking a Redis client connection and an object of configuration data. It sets up the Socket.IO object using the Node.js http server instance defined in the configuration data. Once the Socket.IO instance is available, it registers the connection callback from the configuration data:

    this.io.sockets.on('connection', options.connect_callback);

Anatomy for WebSocket push

A subscribe method is provided that listens on a Redis pub/sub channel, with a separate channel for each blog post. Each channel carries the comments made on its post. As messages arrive on a channel, clients watching that blog post are pushed updates via the matching Socket.IO room.

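    // `self` is the bridge instance captured in the enclosing function (not shown).
    this.subscribe = function(room, push_emitter) {
      // Subscribe to each room's Redis channel only once.
      if (this.rooms.indexOf(room) === -1) {
        this.rooms.push(room);
        this.client.subscribe(room);
        this.client.on('message', function(channel, msg) {
          // Every listener sees messages from all channels, so skip other rooms.
          if (channel !== room) { return; }
          console.log("MSG: " + msg);
          console.log(room);
          console.log("EMIT: " + push_emitter);
          self.io.sockets.in(room).emit(push_emitter, msg);
        });
      }
    };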
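The subscribe method above adds one 'message' listener per room. An alternative, sketched here under stated assumptions, is a single listener that routes by channel name. `makeFakeRedis`, `makeFakeIo` and `makeBridge` are hypothetical stand-ins built on Node's `events` module so the sketch runs without Redis or Socket.IO installed; they are not the deck's QueueSocketBridge.

```javascript
const EventEmitter = require('events');

// Stand-in for a Redis subscriber client: subscribe() records the channel,
// and 'message' events carry (channel, msg) like the client used above.
function makeFakeRedis() {
  const client = new EventEmitter();
  client.channels = [];
  client.subscribe = function (channel) { client.channels.push(channel); };
  return client;
}

// Stand-in for Socket.IO rooms: records what would be emitted to each room.
function makeFakeIo() {
  const sent = [];
  return {
    sent: sent,
    sockets: {
      in: function (room) {
        return {
          emit: function (event, msg) {
            sent.push({ room: room, event: event, msg: msg });
          }
        };
      }
    }
  };
}

// Hypothetical bridge: one 'message' listener routes by channel name.
function makeBridge(client, io) {
  const emitters = new Map(); // channel name -> Socket.IO event name
  client.on('message', function (channel, msg) {
    if (emitters.has(channel)) {
      io.sockets.in(channel).emit(emitters.get(channel), msg);
    }
  });
  return {
    subscribe: function (room, pushEmitter) {
      if (!emitters.has(room)) {
        emitters.set(room, pushEmitter);
        client.subscribe(room);
      }
    }
  };
}

const fakeRedis = makeFakeRedis();
const fakeIo = makeFakeIo();
const bridge = makeBridge(fakeRedis, fakeIo);
bridge.subscribe('comments_for_post_1', 'comment_data');
bridge.subscribe('comments_for_post_1', 'comment_data'); // ignored, already subscribed
bridge.subscribe('comments_for_post_2', 'comment_data');

// Simulate Rails publishing a comment for post 2.
fakeRedis.emit('message', 'comments_for_post_2', '{"action":"new"}');
console.log(fakeIo.sent.length, fakeIo.sent[0].room);
```

With a single listener, the number of handlers stays constant no matter how many posts are being watched, and a message can only ever reach the room named after its channel.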

Anatomy for WebSocket push

Example configuration data:

    var queue_socket_bridge_options = {
      http_server: server,
      // Accept the handshake only when an express.sid cookie is present.
      authorization: function(data, accept) {
        console.log(data);
        if (data.headers.cookie) {
          var cook = data.headers.cookie.split("express.sid");
          if (cook.length == 2) {
            // Everything after the cookie name serves as the client key.
            var key = cook[1];
            data.client = key;
          } else {
            return accept(null, false);
          }
        } else {
          return accept(null, false);
        }
        return accept(null, true);
      },
      connect_callback: function (socket) {
        // Declared with var so each connection keeps its own key.
        var client_key = socket.handshake.client;
        socket.client_key = client_key;
        self.client_connections[client_key] = {'socket': socket, 'room': ''};
        socket.on('comments_for_post', function(data) {
          var room = 'comments_for_post_' + String(data);
          console.log("ROOM: " + room);
          if (self.client_connections[client_key].room != room) {
            // leave the old room
            socket.leave(self.client_connections[client_key].room);
            console.log("in another room, must leave");
            // join the new room
            socket.join(room);
            self.client_connections[client_key].room = room;
          }
          self.subscribe(room, 'comment_data');
        });
      }
    };
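The authorization function above takes everything after the text "express.sid" as the client key, which includes the "=" sign and any cookies that follow. A slightly stricter variant could read just that cookie's value. The sketch below is an assumption-laden alternative, not the deck's code: `readCookie` and `authorize` are hypothetical names, and only the `(data, accept)` callback shape is taken from the configuration above.

```javascript
// Hypothetical helper: pull one cookie's value out of a raw Cookie header.
function readCookie(cookieHeader, name) {
  if (!cookieHeader) { return null; }
  const pairs = cookieHeader.split(';');
  for (let i = 0; i < pairs.length; i++) {
    const pair = pairs[i].trim();
    const eq = pair.indexOf('=');
    if (eq === -1) { continue; }
    if (pair.slice(0, eq) === name) {
      try {
        return decodeURIComponent(pair.slice(eq + 1));
      } catch (e) {
        return null; // malformed percent-encoding is treated as no cookie
      }
    }
  }
  return null;
}

// Same accept/reject shape as the authorization function above.
function authorize(data, accept) {
  const sid = readCookie(data.headers.cookie, 'express.sid');
  if (sid === null) { return accept(null, false); }
  data.client = sid;
  return accept(null, true);
}

console.log(readCookie('theme=dark; express.sid=s%3Aabc123', 'express.sid')); // s:abc123
```

Note that this only checks that the cookie is present. It does not verify the session signature, so it is no stronger as an authentication step than the original.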

Anatomy for WebSocket push

To kick off the process:

    require('./lib/queue_socket_bridge').QueueSocketBridge(
      comment_client, queue_socket_bridge_options);
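On the browser side, a page showing a post would need to join that post's room and react to pushed comments. The deck does not show this code, so the following is a minimal sketch under assumptions: `watchPost` is a hypothetical helper, `socket` is assumed to be a connected Socket.IO client (only its `emit` and `on` methods are used), and the event names and payload fields are the ones used by the server code and Rails action above. A stub socket is included so the sketch runs without a server.

```javascript
// Hypothetical browser-side helper for watching one post's comments.
function watchPost(socket, postId, onComment) {
  // Ask the server to move this connection into the post's room.
  socket.emit('comments_for_post', postId);
  // The bridge forwards the raw JSON string that Rails published.
  socket.on('comment_data', function (msg) {
    const payload = JSON.parse(msg);
    if (payload.action === 'new') {
      onComment(payload.comment);
    }
  });
}

// Minimal stub standing in for a Socket.IO client connection.
const handlers = {};
const emitted = [];
const stubSocket = {
  emit: function (event, data) { emitted.push([event, data]); },
  on: function (event, fn) { handlers[event] = fn; }
};

const received = [];
watchPost(stubSocket, 42, function (comment) { received.push(comment); });

// Simulate the server pushing a comment published by the Rails action.
handlers['comment_data'](
  '{"action":"new","comment":{"commenter":"ann","body":"hi","post_id":42}}'
);
console.log(received[0].body); // hi
```

Calling `watchPost` again on the same socket would register a second 'comment_data' handler, so a real page would register the handler once and only re-emit 'comments_for_post' when the viewed post changes.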

By ccyphers
