ruby's hardest problem

CONCURRENCY AND PARALLELISM

WHO AM I?

CODE MONKEY

why are we here?

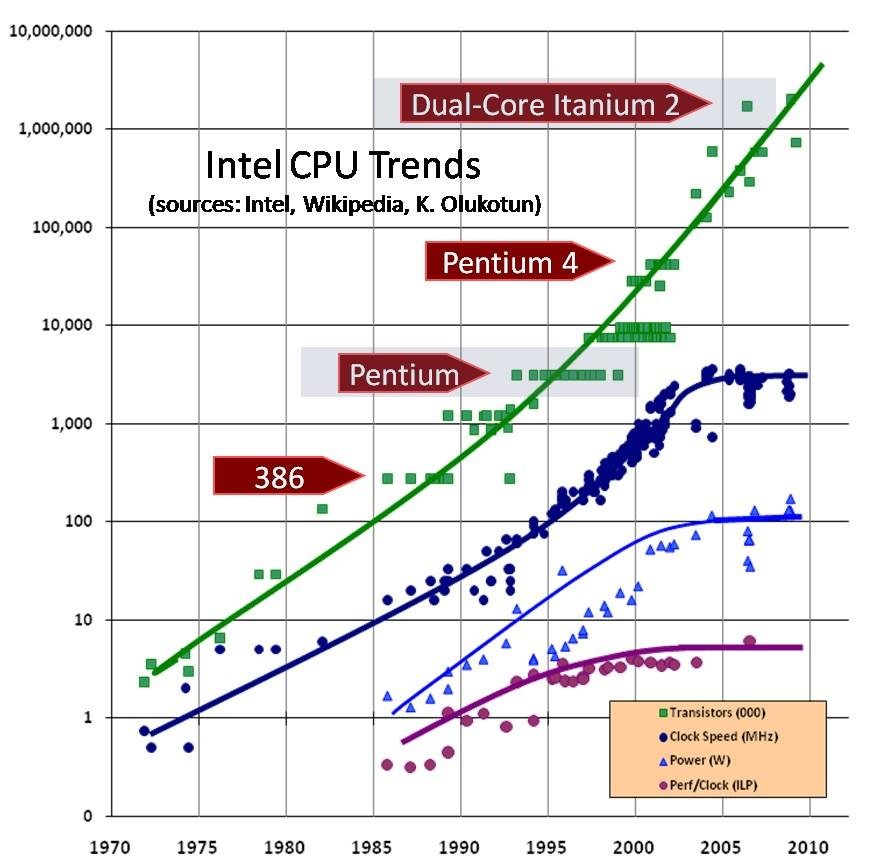

NO MORE FREE LUNCH

DARK MAGIC IS COOL

AND BECAUSE

Concurrency

A concurrent program is one with multiple threads of control. Each thread of control has effects on the world, and those threads are interleaved in some arbitrary way by the scheduler. We say that a concurrent programming language is non-deterministic, because the total effect of the program may depend on the particular interleaving at runtime. The programmer has the tricky task of controlling this non-determinism using synchronisation, to make sure that the program ends up doing what it was supposed to do regardless of the scheduling order. And that’s no mean feat, because there’s no reasonable way to test that you have covered all the cases.

Simon Marlow
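A minimal sketch of that non-determinism, using only the standard library. The method name `interleaved_log` and the labels are made up for illustration. Two threads of control write to one shared log; each thread's own entries stay in order, but how the two sequences interleave is up to the scheduler.

```ruby
# Run two threads of control that both write to one shared, thread-safe log.
def interleaved_log
  log = Queue.new
  workers = %w[a b].map do |name|
    Thread.new do
      3.times do |step|
        log << "#{name}#{step}"
        Thread.pass # hint that the scheduler may switch threads here
      end
    end
  end
  workers.each(&:join)
  Array.new(log.size) { log.pop }
end

puts interleaved_log.join(' ') # the order of entries may differ between runs
```

The set of entries is always the same; only their relative order is left to the scheduler, which is exactly what makes exhaustive testing impractical.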

parallelism

A parallel program, on the other hand, is one that merely runs on multiple processors, with the goal of hopefully running faster than it would on a single CPU.

Simon Marlow

WHY SO MUCH CONFUSION?

1. If you come with a view from the hardware and upwards toward the computing logic, then surely you may fall into the trap of equating the two terms. The reason is that to implement parallel computation in current hardware and software, you use a concurrent semantics. The pthread library for instance is writing a concurrent program which then takes advantage of multiple processors if they are available.

2. The gist of it, however, is rather simple: computing parallel is to hunt for a program speedup when adding more execution cores to the system running the program. This is a property of the machine. Computing concurrent is to write a program with multiple threads of control such that it will non-deterministically execute each thread. It is a property of the language.

So there are essentially two views: from hardware or from logic. From the perspective of logic, if you have toyed with formal semantics, is that we define concurrency-primitives in a different way than parallelism-primitives formally. That is, they are different entities. The perspective of hardware on the other hand, conflates the ideas because the only way to achieve parallelism is to use concurrency - especially in UNIX.

THANKS JESPER LOUIS!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

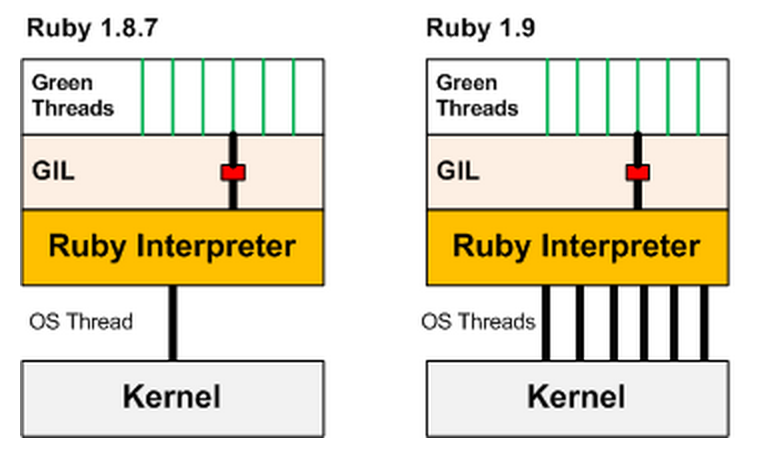

So what do we have in Ruby (MRI)?

THEN WHY SO MUCH WHINING

GIL
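A small sketch of what the GIL does and does not prevent. MRI lets only one thread execute Ruby code at a time, but the lock is released while a thread sleeps or waits on blocking I/O, so waiting overlaps even on MRI. The helper name `overlapped_sleep` is hypothetical.

```ruby
require 'benchmark'

# Hypothetical helper: start `count` threads that each sleep for `seconds`
# and return the wall-clock time until all of them have finished.
def overlapped_sleep(count, seconds)
  Benchmark.realtime do
    Array.new(count) { Thread.new { sleep(seconds) } }.each(&:join)
  end
end

elapsed = overlapped_sleep(4, 0.2)
puts format('4 threads x 0.2s of sleep took %.2fs', elapsed)
```

The total stays close to one sleep rather than four. CPU-bound loops get no such benefit on MRI, which is where the whining comes from.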

JRUBY AND RUBINIUS TO THE RESCUE

- ruby-install

- chruby

- jruby profiling

- jruby manage

- rbx profile

- rbx memory dump

threads = []

4.times do |n|

threads << Thread.new do

result = 0

100000000.times do

result += 1

end

end

end

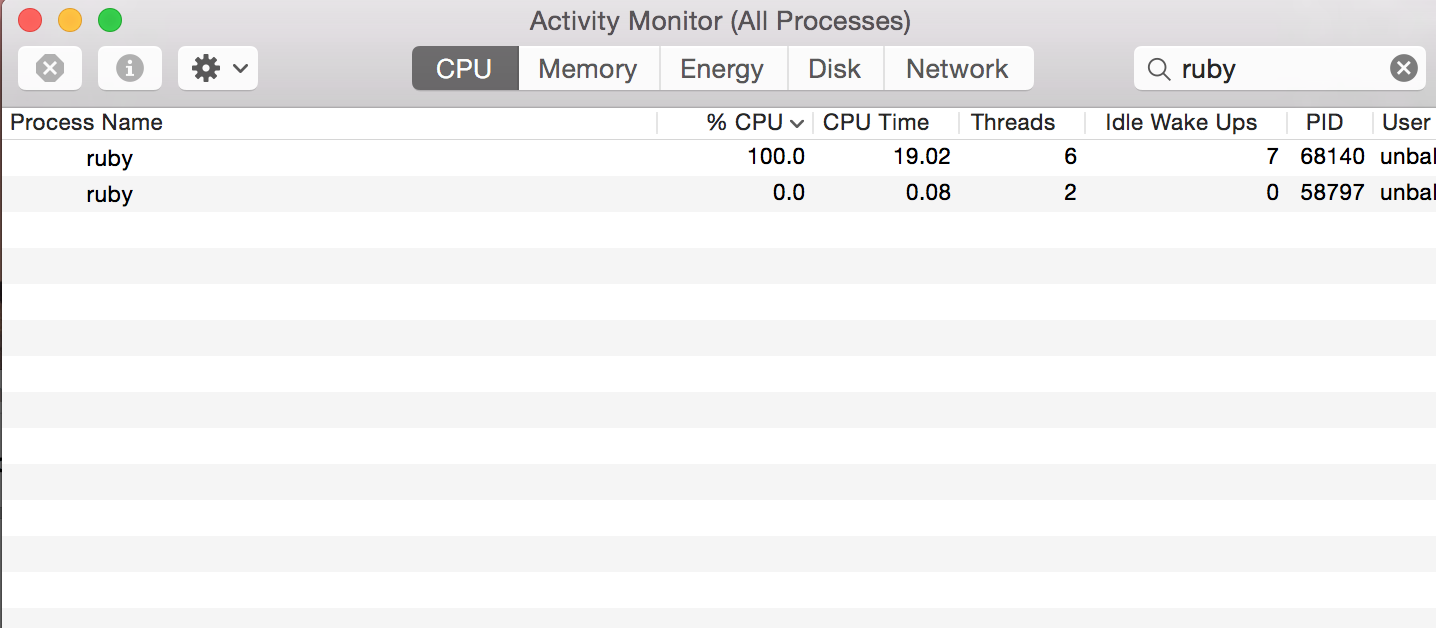

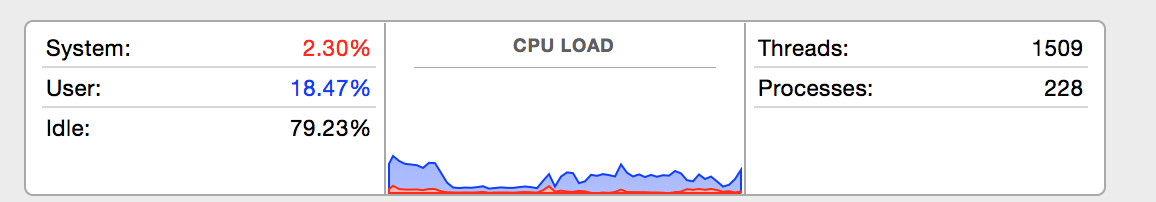

threads.map(&:join)Execution of ruby 1_counter/working_counter.rb with MRI, Rbx and Jruby with Activity Monitor/glances


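    require 'colorize' # provides String#green

    begin
      last = 0
      loop do
        Thread.new do
          sleep(1000)
        end
        last += 1
      end
    rescue
      # reached once the runtime refuses to create another thread
      puts 'We managed to launch ' + "#{last}".green + " threads"
    end

Execution of ruby maximum_threads/maximum_threads.rb with Rbx and JRuby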

NO REAL CONCURRENCY MODEL

we only got mutexes and locks...

    require 'thread'

    semaphore = Mutex.new

    a = Thread.new {
      semaphore.synchronize {
        # access shared resource
      }
    }

    b = Thread.new {
      semaphore.synchronize {
        # access shared resource
      }
    }

MUTEX
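To make "access shared resource" concrete, here is a hedged sketch with a shared counter. The method name `count_with` is invented for this example, and `Thread.pass` is placed between the read and the write only to make the lost-update race easy to trigger.

```ruby
# Ten threads each increment a shared counter 100 times.
# Pass a Mutex to serialise the read-modify-write; pass nothing to see the race.
def count_with(lock = nil)
  count = 0
  increment = lambda do
    current = count       # read
    Thread.pass           # invite a thread switch in the middle of the update
    count = current + 1   # write, possibly overwriting another thread's update
  end
  threads = Array.new(10) do
    Thread.new do
      100.times { lock ? lock.synchronize(&increment) : increment.call }
    end
  end
  threads.each(&:join)
  count
end

puts "with mutex:    #{count_with(Mutex.new)}" # always 1000
puts "without mutex: #{count_with}"            # usually less than 1000
```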

WHAT IS THE PROBLEM WITH LOCKS?

- Locks do not compose

- Locks break encapsulation (you need to know a lot!)

- Taking too few locks

- Taking too many locks

- Taking the wrong locks

- Taking locks in the wrong order

- Error recovery is hard

- It's not the nature of our world!!! (we don't ask the world to stop to do something)

Jonas Bonér
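The "wrong order" item is the classic deadlock: two threads each hold one lock and wait for the other. One common workaround, sketched here with made-up names (`Account`, `transfer`, `run_transfers`), is to always take the locks in one global order. Note how `transfer` has to know about the locks of both accounts, which is the encapsulation complaint from the list above.

```ruby
class Account
  attr_reader :name, :lock
  attr_accessor :balance

  def initialize(name, balance)
    @name = name
    @balance = balance
    @lock = Mutex.new
  end
end

# Lock both accounts in a fixed global order (here: by name), so two
# opposite transfers can never each hold one lock and wait for the other.
def transfer(from, to, amount)
  first, second = [from, to].sort_by(&:name)
  first.lock.synchronize do
    second.lock.synchronize do
      from.balance -= amount
      to.balance += amount
    end
  end
end

def run_transfers
  alice = Account.new('alice', 1_000)
  bob = Account.new('bob', 1_000)
  threads = [
    Thread.new { 500.times { transfer(alice, bob, 1) } },
    Thread.new { 500.times { transfer(bob, alice, 2) } }
  ]
  threads.each(&:join)
  [alice.balance, bob.balance]
end

p run_transfers # => [1500, 500]
```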

Concurrent ruby

- Agent

- Promise - Future

- TimerTask

- Thread Pools

    require 'concurrent'
    require 'rest-client'
    require 'json'
    require 'colorize' # provides String#green

    class Btc
      attr_accessor :price, :runs, :task

      def initialize
        @price = 0
        @runs = 0
        # Refresh the price on a background thread every 5 seconds
        @task =
          Concurrent::TimerTask.new(execution_interval: 5,
                                    run_now: true,
                                    dup_on_deref: true) do
            @price = update_price
            @runs += 1
          end
        @task.execute
      end

      def update_price
        uri = "https://api.bitcoinaverage.com/ticker/global/USD/"
        response = RestClient.get uri
        json = JSON.parse(response)
        json["ask"]
      end
    end

    btc = Btc.new

    # Print the latest price once per second while the timer task updates it
    t = Thread.new do
      loop do
        puts 'BTC price: ' + "#{btc.price}".green
        puts 'BTC timer task runs: ' + "#{btc.runs}".green
        sleep 1
      end
    end

    t.join

Timer Task

    score = Concurrent::Agent.new(10)
    score.value #=> 10

    score << proc{|current| current + 100 }
    sleep(0.1)
    score.value #=> 110

    score << proc{|current| current * 2 }
    sleep(0.1)
    score.value #=> 220

    score << proc{|current| current - 50 }
    sleep(0.1)
    score.value #=> 170

Agent

An agent is a single atomic value that represents an identity. The current value of the agent can be requested at any time (#deref). Each agent has a work queue and operates on the global thread pool. A good example of an agent is a shared incrementing counter, such as the score in a video game.
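To show the work-queue idea without the gem, here is a rough standard-library sketch. `TinyAgent` and its `shutdown` method are invented for illustration and are not concurrent-ruby's implementation: updates are queued and a single worker thread applies them one by one, so they never race with each other.

```ruby
class TinyAgent
  def initialize(initial)
    @value = initial
    @updates = Queue.new
    # One worker thread applies the queued updates in order; nil ends the loop.
    @worker = Thread.new do
      while (update = @updates.pop)
        @value = update.call(@value)
      end
    end
  end

  # The current value can be requested at any time.
  attr_reader :value

  # Queue an update; the caller does not wait for it to be applied.
  def <<(update)
    @updates << update
    self
  end

  # Assumption of this sketch: wait until every queued update has been applied.
  def shutdown
    @updates << nil
    @worker.join
  end
end

score = TinyAgent.new(10)
score << proc { |current| current + 100 }
score << proc { |current| current * 2 }
score << proc { |current| current - 50 }
score.shutdown
puts score.value # => 170
```

Waiting in `shutdown` replaces the `sleep(0.1)` calls from the slide, which only guess that the update has been applied by then.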

- Atoms on earth: 10^50

- 2^K = 10^50 => K ≈ 166

- uint32_t in C has 32 bits

- 166 / 32 = 5.1875

So 6 32-bit integers in C have more possible states than the number of atoms on earth
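The arithmetic can be checked in a few lines of Ruby, which has arbitrary-precision integers. The method names are made up for this check.

```ruby
ATOMS_ON_EARTH = 10**50

# Bits needed so that 2**bits reaches the given number of states.
def bits_for(states)
  Math.log2(states)
end

# Number of fixed-width machine words needed to hold that many bits.
def words_for(states, word_bits = 32)
  (bits_for(states) / word_bits).ceil
end

puts bits_for(ATOMS_ON_EARTH).round(1) # => 166.1
puts words_for(ATOMS_ON_EARTH)         # => 6
puts 2**(32 * 6) > ATOMS_ON_EARTH      # => true
puts 2**(32 * 5) > ATOMS_ON_EARTH      # => false
```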

Sequential code is difficult enough to test

Concurrent, non-deterministic code is even more difficult to test, since it might go through any of the possible states

We need a concurrency model

"Every sufficiently complex application/language/tool will either have to use Lisp or reinvent it the hard way."

*Where highly concurrent systems are concerned, substitute Erlang for Lisp.

Don't communicate by sharing memory... Share memory by communicating

Celluloid: a concurrent object oriented programming framework which lets you build multithreaded programs out of concurrent objects as easily as you build sequential programs out of regular objects

based upon actor model

- actors are isolated within processes

- actors communicate via async messages

- messages are buffered by a mailbox

- each actor works through its messages one at a time
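The four points above can be sketched with a thread and a `Queue` from the standard library. `TinyActor`, `tell` and `collect_greetings` are hypothetical names, and this is not how Celluloid is implemented; it only shows the mailbox idea.

```ruby
class TinyActor
  def initialize(&behaviour)
    @mailbox = Queue.new # buffers incoming messages
    # The actor's own thread works through the mailbox one message at a time.
    @thread = Thread.new do
      while (message = @mailbox.pop) != :stop
        behaviour.call(message)
      end
    end
  end

  # Asynchronous send: enqueue the message and return immediately.
  def tell(message)
    @mailbox << message
    nil
  end

  # Ask the actor to finish after the messages already queued.
  def stop
    @mailbox << :stop
    @thread.join
  end
end

def collect_greetings(names)
  replies = Queue.new
  greeter = TinyActor.new { |name| replies << "hello, #{name}" }
  names.each { |name| greeter.tell(name) }
  greeter.stop
  Array.new(replies.size) { replies.pop }
end

p collect_greetings(%w[ada grace]) # => ["hello, ada", "hello, grace"]
```

Nothing outside the actor touches its state directly; the only way in is a message, and the only way out is another message.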

"I thought of objects being like biological cells and/or individual computers on a network, only able to communicate with messages".

Alan Kay, creator of Smalltalk, on the meaning of "object oriented programming"

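    require 'celluloid'
    require 'prime' # provides Integer#prime?

    class PrimeWorker
      include Celluloid

      # Collect every prime from 1 up to limit
      def primes(limit)
        primes = []
        1.upto(limit) do |i|
          if i.prime?
            primes << i
          end
        end
        primes
      end
    end

    prime_pool = PrimeWorker.pool
    puts prime_pool.primes 100

PrimeWorker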

my own small http server

    require 'celluloid'
    require 'socket' # provides TCPServer

    class HTTPServer
      include Celluloid

      def initialize(port = 8080)
        @server = TCPServer.new(port)
        supervisor = Celluloid::SupervisionGroup.run!
        supervisor.pool(AnswerWorker, as: :answer)
        loop {
          client = @server.accept
          begin
            # hand the connection to a worker from the supervised pool
            Celluloid::Actor[:answer].start(client)
          rescue
            # a crashed worker must not take the accept loop down with it
          end
        }
      end
    end

    class AnswerWorker
      include Celluloid

      def start(client)
        headers = "HTTP/1.1 200 OK\r\n" +
                  "Date: #{Time.now}\r\n" +
                  "Content-Type: text/plain\r\n\r\n"
        client.puts headers
        if rand(3) == 0
          client.puts "The system crashed"
          client.close
          # crash on purpose so the supervisor has something to restart
          raise "ATOMIC BUG"
        else
          client.puts "Hi..bye!"
          client.close
        end
      end
    end

Answer Worker

Supervisors restart workers after a crash

    class MyGroup < Celluloid::SupervisionGroup
      supervise HTTPServer
    end

    MyGroup.run

TIME TO RUN IT!
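The demo itself needs Celluloid, but the restart idea can be approximated with the standard library. This is a loose sketch under that assumption: `supervise` and `max_restarts` are hypothetical names, not Celluloid's API. The worker runs in its own thread, and a crash there is answered by starting a fresh worker.

```ruby
# Run the given work in a worker thread and start a new worker whenever it
# crashes, giving up after max_restarts restarts.
def supervise(max_restarts: 5, &work)
  attempt = 0
  begin
    attempt += 1
    worker = Thread.new(attempt) do |n|
      Thread.current.report_on_exception = false # the supervisor reports crashes
      work.call(n)
    end
    worker.value # re-raises the worker's exception in the supervising thread
  rescue StandardError => e
    warn "worker crashed (#{e.message})"
    retry if attempt <= max_restarts
    raise
  end
end

answer = supervise do |attempt|
  raise 'ATOMIC BUG' if attempt < 3 # the first two workers crash
  "answered on attempt #{attempt}"
end
puts answer # => answered on attempt 3
```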

2014 GOTO => MUTABILITY

and let's give a big warm welcome to Hamster

Hamster collections are immutable. Whenever you modify a Hamster collection, the original is preserved and a modified copy is returned. This makes them inherently thread-safe and shareable. At the same time, they remain CPU and memory-efficient by sharing between copies.

    original = Hamster.list(1, 2, 3)
    copy = original.cons(0) # => Hamster.list(0, 1, 2, 3)

Notice how modifying a list actually returns a new list. That's because Hamster Lists are immutable. Thankfully, just like other Hamster collections, they're also very efficient at making copies!
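Why copying is cheap can be seen in a toy persistent list built from frozen nodes. `Node`, `list` and `items` are names invented for this sketch and say nothing about Hamster's internals: `cons` allocates one new node and reuses the whole original list as its tail.

```ruby
# A frozen node holding one value and a reference to the rest of the list.
# In this sketch the empty list is simply nil.
Node = Struct.new(:head, :tail) do
  # Return a new list with value in front; the receiver is shared, not copied.
  def cons(value)
    Node.new(value, self).freeze
  end

  # Walk the nodes and collect the values into an Array.
  def items
    result = []
    node = self
    while node
      result << node.head
      node = node.tail
    end
    result
  end
end

# Build a frozen list from the given values.
def list(*values)
  values.reverse.reduce(nil) { |tail, value| Node.new(value, tail).freeze }
end

original = list(1, 2, 3)
copy = original.cons(0)

p copy.items                   # => [0, 1, 2, 3]
p original.items               # => [1, 2, 3]
p copy.tail.equal?(original)   # => true
```

Because no node can change after creation, handing `original` to another thread needs no lock.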

    Hamster.interval(10_000, 1_000_000).filter do |number|
      prime?(number)
    end.take(3)
    # => 0.0009s

    (10000..1000000).select do |number|
      prime?(number)
    end.take(3)
    # => 10s
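The gap comes from laziness: the lazy version stops testing numbers as soon as three primes are found, while the eager `select` tests the whole range first. Plain Ruby can do the same with `Enumerator::Lazy`. In this sketch `prime?` is a simple trial-division helper written for the example, since the one used on the slide is not shown.

```ruby
# Trial division; a stand-in for the prime? helper, which the slide omits.
def prime?(number)
  return false if number < 2

  (2..Integer.sqrt(number)).none? { |divisor| (number % divisor).zero? }
end

# Lazy: numbers are tested one by one and the search stops after `count` hits.
def first_primes_lazy(range, count)
  range.lazy.select { |number| prime?(number) }.first(count)
end

# Eager: every number in the range is tested before take(count) runs.
def first_primes_eager(range, count)
  range.select { |number| prime?(number) }.take(count)
end

p first_primes_lazy(10_000..1_000_000, 3) # => [10007, 10009, 10037]
p first_primes_eager(10_000..10_100, 3)   # same result, but only on a small range
```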

Ruby concurrency and parallelism

By Federico Carrone
