Back-end PHP

EPISODE II

HighLoad

Dmitry Shkarbatov

Head of the web development team

at PrivatBank, pentester,

MD in information security

shkarbatov@gmail.com

https://www.linkedin.com/in/shkarbatov

131 slides to go

A callback to the beginning

HighLoad

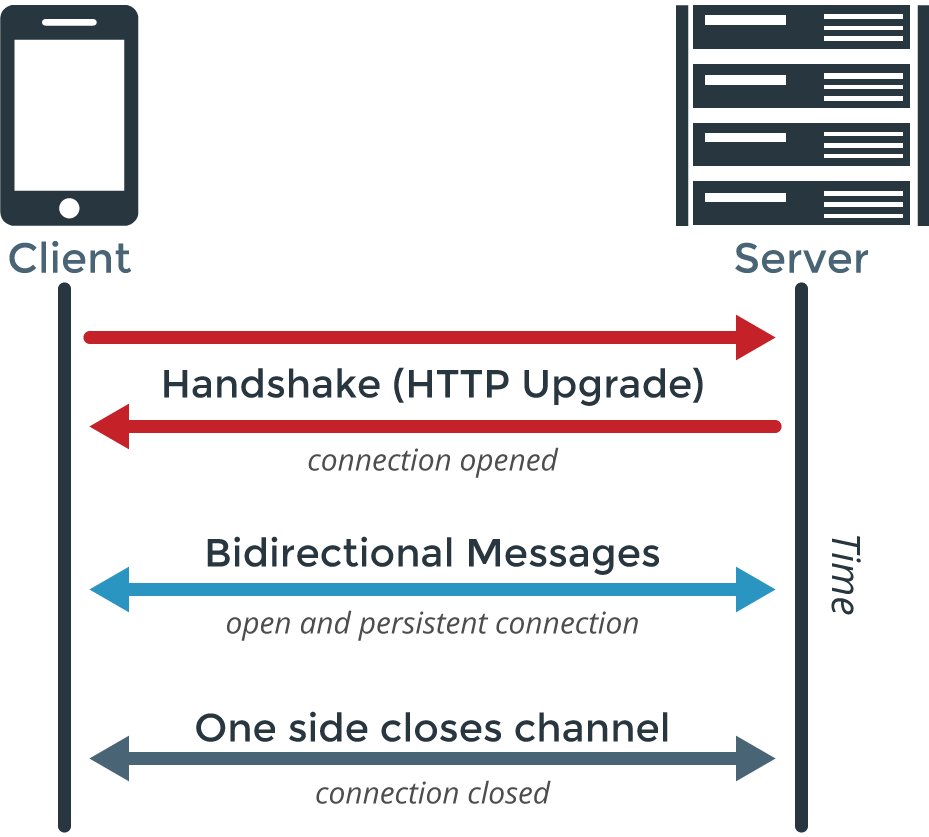

WebSocket (RFC 6455) is a full-duplex communication protocol (both sides can send and receive at the same time) that runs over a TCP connection and is designed for real-time message exchange between a browser and a web server.

It can carry any data to any domain (cross-origin connections are allowed; the server decides whether to accept them, usually by checking the Origin header), securely when run over TLS, and with very little extra network traffic.
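Where the low overhead comes from: after the handshake, every message travels in a frame whose header is only 2 bytes for a short server-to-client message (longer payloads add a 2- or 8-byte extended length, and client-to-server frames add a 4-byte masking key). A minimal decoder sketch in plain PHP, assuming the buffer holds exactly one complete frame and ignoring fragmentation and extensions; the function name is illustrative:

```php
<?php
// Minimal sketch: decode one WebSocket frame (RFC 6455, section 5.2).
// Assumptions: $buf contains exactly one complete frame; RSV bits,
// extensions and fragmented messages are not handled.
function decode_ws_frame(string $buf): array
{
    $b0 = ord($buf[0]);
    $b1 = ord($buf[1]);

    $fin    = ($b0 & 0x80) !== 0; // last fragment of a message
    $opcode = $b0 & 0x0F;         // 0x1 text, 0x2 binary, 0x8 close, 0x9 ping, 0xA pong
    $masked = ($b1 & 0x80) !== 0; // client-to-server frames must be masked
    $len    = $b1 & 0x7F;         // 7-bit length or an extended-length marker
    $offset = 2;

    if ($len === 126) {           // 16-bit extended payload length
        $len = unpack('n', substr($buf, $offset, 2))[1];
        $offset += 2;
    } elseif ($len === 127) {     // 64-bit extended payload length
        $len = unpack('J', substr($buf, $offset, 8))[1];
        $offset += 8;
    }

    $mask = '';
    if ($masked) {
        $mask = substr($buf, $offset, 4); // 4-byte masking key
        $offset += 4;
    }

    $payload = substr($buf, $offset, $len);
    if ($masked) {
        // Unmask: payload byte i is XORed with masking-key byte (i mod 4)
        for ($i = 0; $i < $len; $i++) {
            $payload[$i] = $payload[$i] ^ $mask[$i % 4];
        }
    }

    return ['fin' => $fin, 'opcode' => $opcode, 'masked' => $masked, 'payload' => $payload];
}

// Masked single-frame text message from RFC 6455, section 5.7
$frame = "\x81\x85\x37\xfa\x21\x3d\x7f\x9f\x4d\x51\x58";
echo decode_ws_frame($frame)['payload'], PHP_EOL; // Hello
```

The 11-byte frame above carries a 5-byte message, so 6 bytes are protocol overhead, and an unmasked server reply of the same text would need only 2.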

WebSocket

A WebSocket connection starts on top of HTTP.

When connecting, the browser sends an ordinary HTTP request with special headers (Upgrade: websocket, Connection: Upgrade, Sec-WebSocket-Key, Sec-WebSocket-Version) that effectively ask: "does the server support WebSocket?"

If the server answers "yes" with a 101 Switching Protocols response and the matching headers, HTTP ends there and the rest of the conversation uses the WebSocket framing protocol, which no longer has anything to do with HTTP.
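The server proves that it understood the upgrade request by returning Sec-WebSocket-Accept: the base64-encoded SHA-1 of the client's Sec-WebSocket-Key concatenated with a fixed GUID defined in RFC 6455. A minimal sketch of that step in plain PHP (the helper names are illustrative; the libraries discussed later do this internally):

```php
<?php
// Sketch of the server side of the opening handshake (RFC 6455, section 4.2.2).
// Fixed GUID defined by the RFC
const WS_GUID = '258EAFA5-E914-47DA-95CA-C5AB0DC85B11';

function websocket_accept(string $secWebSocketKey): string
{
    // base64( SHA-1( key . GUID ) ), using the raw 20-byte digest
    return base64_encode(sha1(trim($secWebSocketKey) . WS_GUID, true));
}

function handshake_response(string $secWebSocketKey): string
{
    // After this response, both sides switch to WebSocket frames
    return "HTTP/1.1 101 Switching Protocols\r\n"
         . "Upgrade: websocket\r\n"
         . "Connection: Upgrade\r\n"
         . "Sec-WebSocket-Accept: " . websocket_accept($secWebSocketKey) . "\r\n"
         . "\r\n";
}

// Sample key from RFC 6455; the expected accept value is s3pPLMBiTxAHUs0Zmo0+zCXrzRo=
echo handshake_response('dGhlIHNhbXBsZSBub25jZQ==');
```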

Establishing WebSocket connections

A WebSocket connection can be opened with the ws:// or the wss:// scheme. WSS is WebSocket over TLS, just as HTTPS is HTTP over TLS.

Besides better security, WSS has an important practical advantage over plain WS: the connection is more likely to succeed at all.

The reason is that HTTPS (TLS) encrypts the traffic between client and server, while plain HTTP does not.

If there is a proxy between the client and the server, then with plain WS all WebSocket headers and data pass through it in clear text. The proxy can read them, may treat what it sees as a violation of the HTTP protocol, and may strip headers or drop the connection. With WSS the traffic is encrypted before it reaches the proxy, which only sees an opaque TLS tunnel, so the handshake headers reach the server intact (unless the proxy terminates TLS itself).

WSS

The protocol has a built-in liveness check based on the PING and PONG control frames.

The side that wants to check the connection sends a PING frame with an arbitrary payload. The receiver must answer as soon as practical with a PONG frame carrying the same payload.

This is built into browser implementations: the browser answers the server's PINGs automatically, but JavaScript has no API to send or handle them.

In other words, the server can always check whether the visitor is still connected or has network problems.
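On the wire, PING and PONG are ordinary frames with opcodes 0x9 and 0xA. Server frames are sent unmasked, and a control frame's payload may not exceed 125 bytes, so a single length byte is always enough. A minimal sketch; the helper names control_frame() and pong_for() are illustrative, not taken from any of the libraries discussed here:

```php
<?php
// Sketch: build server-to-client control frames (RFC 6455, section 5.5).
const OP_PING = 0x9;
const OP_PONG = 0xA;

function control_frame(int $opcode, string $payload = ''): string
{
    // Control frames are limited to 125 bytes of payload
    if (strlen($payload) > 125) {
        throw new InvalidArgumentException('Control frame payload must be <= 125 bytes');
    }
    // FIN bit set (control frames cannot be fragmented) + opcode,
    // then an unmasked 7-bit length, then the payload
    return chr(0x80 | $opcode) . chr(strlen($payload)) . $payload;
}

// A received PING must be answered with a PONG carrying the same payload
function pong_for(string $pingPayload): string
{
    return control_frame(OP_PONG, $pingPayload);
}

echo bin2hex(control_frame(OP_PING, 'Hello')), PHP_EOL; // 890548656c6c6f
```

A server that sends a PING and receives no matching PONG within its own timeout can treat the client as gone and close the socket.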

PING/PONG

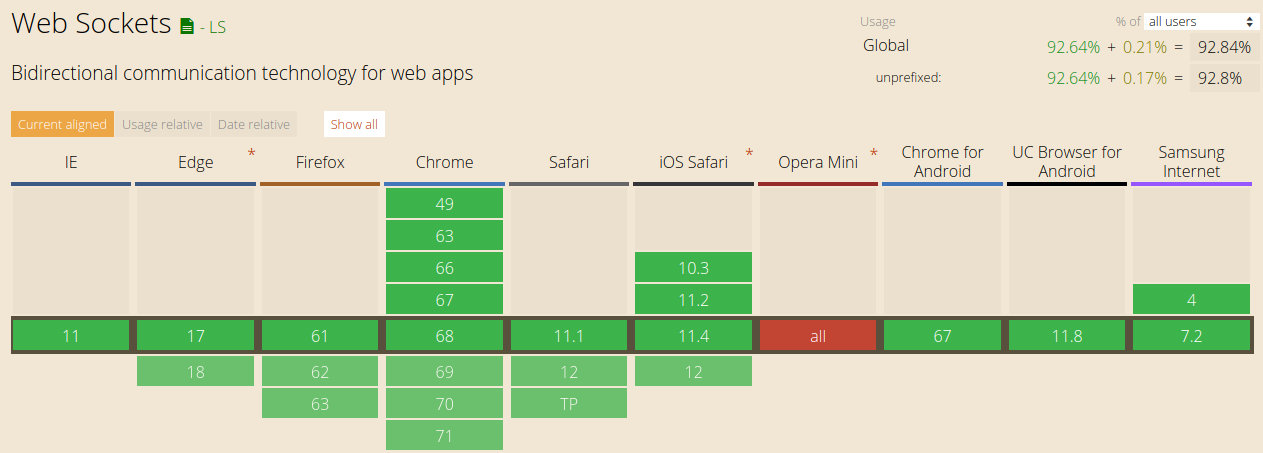

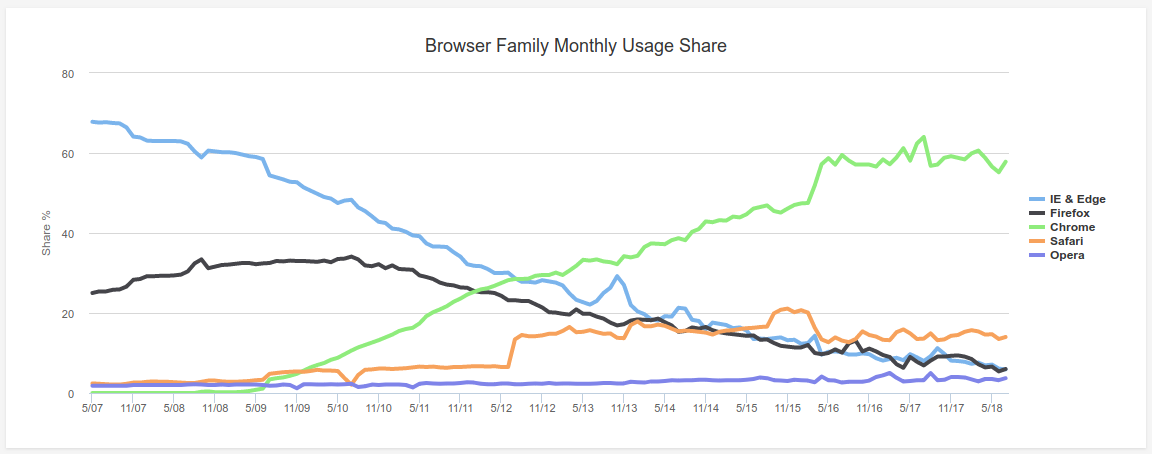

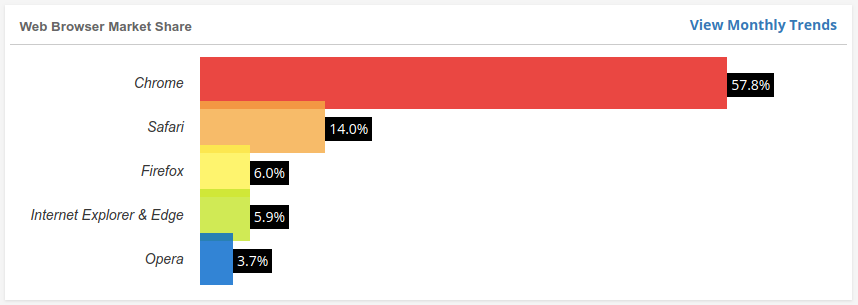

Can I use

A bit about the server

FAQ

- How many TCP connections can be opened?

- As many as the file descriptors and server resources allow

- How to check the maximum number of open file descriptors per process

- ulimit -n (there are also hard and soft limits: ulimit -Hn, ulimit -Sn)

- How to check the system-wide limit on file descriptors

- sysctl fs.file-max
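The same limits can be read from PHP without shelling out, which is handy as a startup check in a long-running worker. A Linux-only sketch (the function names are illustrative) that parses the "Max open files" line of /proc/self/limits, i.e. the soft and hard limits of the running process, and reads /proc/sys/fs/file-max, the value behind sysctl fs.file-max:

```php
<?php
// Linux-only sketch: per-process and system-wide file descriptor limits.

/** @return array|null ['soft' => int, 'hard' => int] or null if not found */
function parse_open_files_limit(string $limitsText)
{
    // Line format: "Max open files   <soft>   <hard>   files"
    if (!preg_match('/^Max open files\s+(\S+)\s+(\S+)/m', $limitsText, $m)) {
        return null;
    }
    $toInt = function (string $v) {
        return $v === 'unlimited' ? PHP_INT_MAX : (int) $v;
    };
    return ['soft' => $toInt($m[1]), 'hard' => $toInt($m[2])];
}

/** @return int|null system-wide limit, same value as `sysctl fs.file-max` */
function read_file_max()
{
    $v = @file_get_contents('/proc/sys/fs/file-max');
    return $v === false ? null : (int) trim($v);
}

$text = @file_get_contents('/proc/self/limits');
if ($text !== false) {
    print_r(parse_open_files_limit($text));
    var_dump(read_file_max());
}
```

Every open WebSocket connection holds one descriptor, so the soft limit is a hard ceiling on concurrent clients per worker process.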

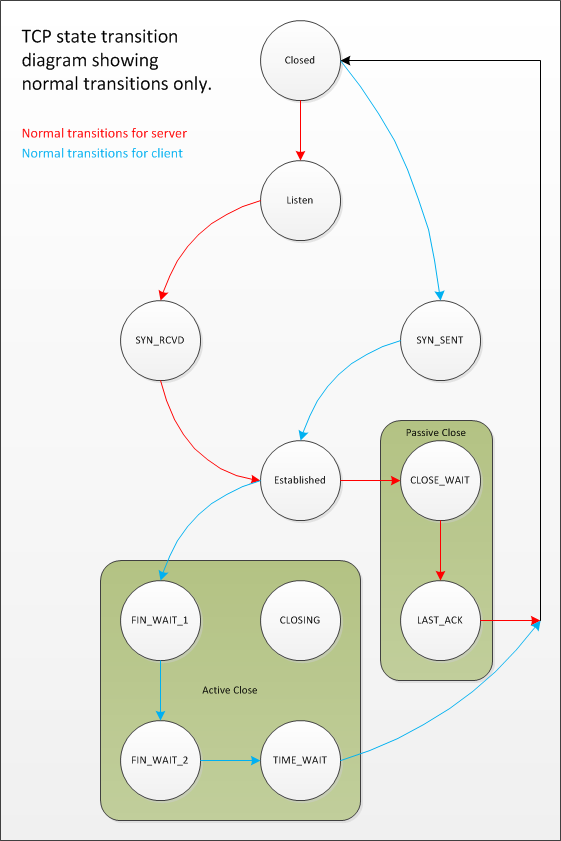

Different implementations select different values for MSL and common values are 30 seconds, 1 minute or 2 minutes. RFC 793 specifies MSL as 2 minutes and Windows systems default to this value.
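Why MSL matters here: the side that closes a TCP connection first keeps it in TIME-WAIT for 2 × MSL (RFC 793; Linux uses a fixed 60 seconds). While a connection is in TIME-WAIT, its local port cannot be reused for a new connection to the same remote IP:port unless tcp_tw_reuse allows it. For a backend that opens a fresh TCP connection to the WebSocket server for every pushed message, this caps the sustainable rate. A back-of-the-envelope sketch, assuming the common Linux default ephemeral port range 32768-60999 (net.ipv4.ip_local_port_range) and no tcp_tw_reuse; the function name is illustrative:

```php
<?php
// Upper bound on new outgoing connections per second to ONE destination
// IP:port when every closed connection holds its local port in TIME-WAIT.
function max_new_conns_per_sec(int $portLow, int $portHigh, int $timeWaitSec): int
{
    $ports = $portHigh - $portLow + 1; // usable ephemeral ports
    return intdiv($ports, $timeWaitSec);
}

// Linux: TIME-WAIT is a fixed 60 s
echo max_new_conns_per_sec(32768, 60999, 60), PHP_EOL;  // 470
// RFC 793: MSL = 2 min, so TIME-WAIT = 2 x MSL = 240 s
echo max_new_conns_per_sec(32768, 60999, 240), PHP_EOL; // 117
```

Past that rate, connect() starts failing with "Cannot assign requested address", which is why the sysctl settings below and persistent backend connections matter.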

TCP state transition

Server tuning

net.ipv4.tcp_tw_recycle = 1

Enables fast recycling of sockets in the TIME-WAIT state. Use with caution: it breaks connections from clients behind NAT, and the option was removed in Linux 4.12.

net.ipv4.tcp_tw_reuse = 1

Allows TIME-WAIT sockets to be reused for new outgoing connections when that is safe at the protocol level (requires TCP timestamps).

net.netfilter.nf_conntrack_tcp_timeout_time_wait = 10

How long netfilter connection tracking keeps the entry for a connection in the TIME_WAIT state (this shortens the life of conntrack table entries; it does not free the socket itself).

Default value: 120 s.
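Before and after tuning, it helps to check which of these settings are actually in effect: tcp_tw_recycle no longer exists on newer kernels, and the nf_conntrack keys appear only when connection tracking is loaded. The values can be read straight from /proc/sys, where a sysctl name maps to a path by replacing the dots with slashes. A small sketch; the helper names are illustrative:

```php
<?php
// Sketch: read kernel settings from /proc/sys instead of calling `sysctl`.
function sysctl_path(string $name): string
{
    // net.ipv4.tcp_tw_reuse -> /proc/sys/net/ipv4/tcp_tw_reuse
    return '/proc/sys/' . str_replace('.', '/', $name);
}

/** @return string|null current value, or null if the key does not exist */
function sysctl_get(string $name)
{
    $path = sysctl_path($name);
    if (!is_readable($path)) {
        return null; // e.g. tcp_tw_recycle on Linux >= 4.12, or conntrack not loaded
    }
    return trim(file_get_contents($path));
}

foreach ([
    'net.ipv4.tcp_tw_recycle',
    'net.ipv4.tcp_tw_reuse',
    'net.netfilter.nf_conntrack_tcp_timeout_time_wait',
] as $key) {
    $value = sysctl_get($key);
    echo $key, ' = ', $value === null ? '(not available)' : $value, PHP_EOL;
}
```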

Socket statistics

ss -s && ss -otp state time-wait | wc -l

Total: 2031 (kernel 2206)

TCP: 33958 (estab 868, closed 32848, orphaned 6, synrecv 0, timewait 32847/0), ports 17458

Transport Total IP IPv6

* 2206 - -

RAW 0 0 0

UDP 142 135 7

TCP 1110 1105 5

INET 1252 1240 12

FRAG 0 0 0

33357

What's the solution?

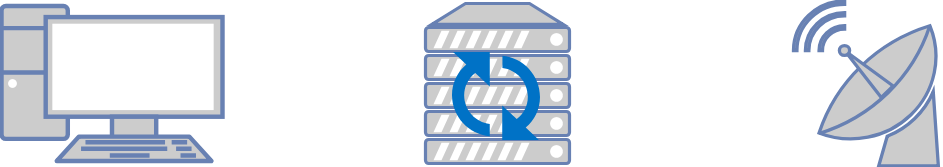

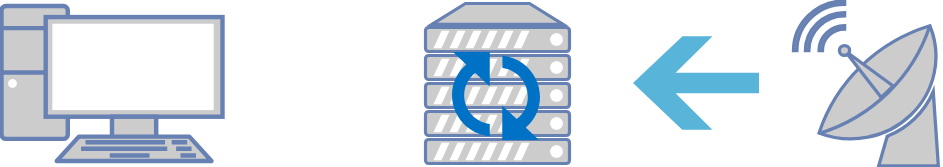

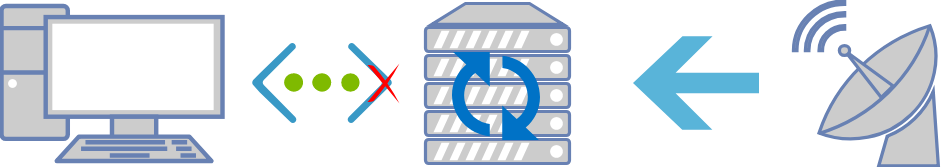

Let's look at the architecture

[Diagram: Client, Server, API]

Let's look at the options

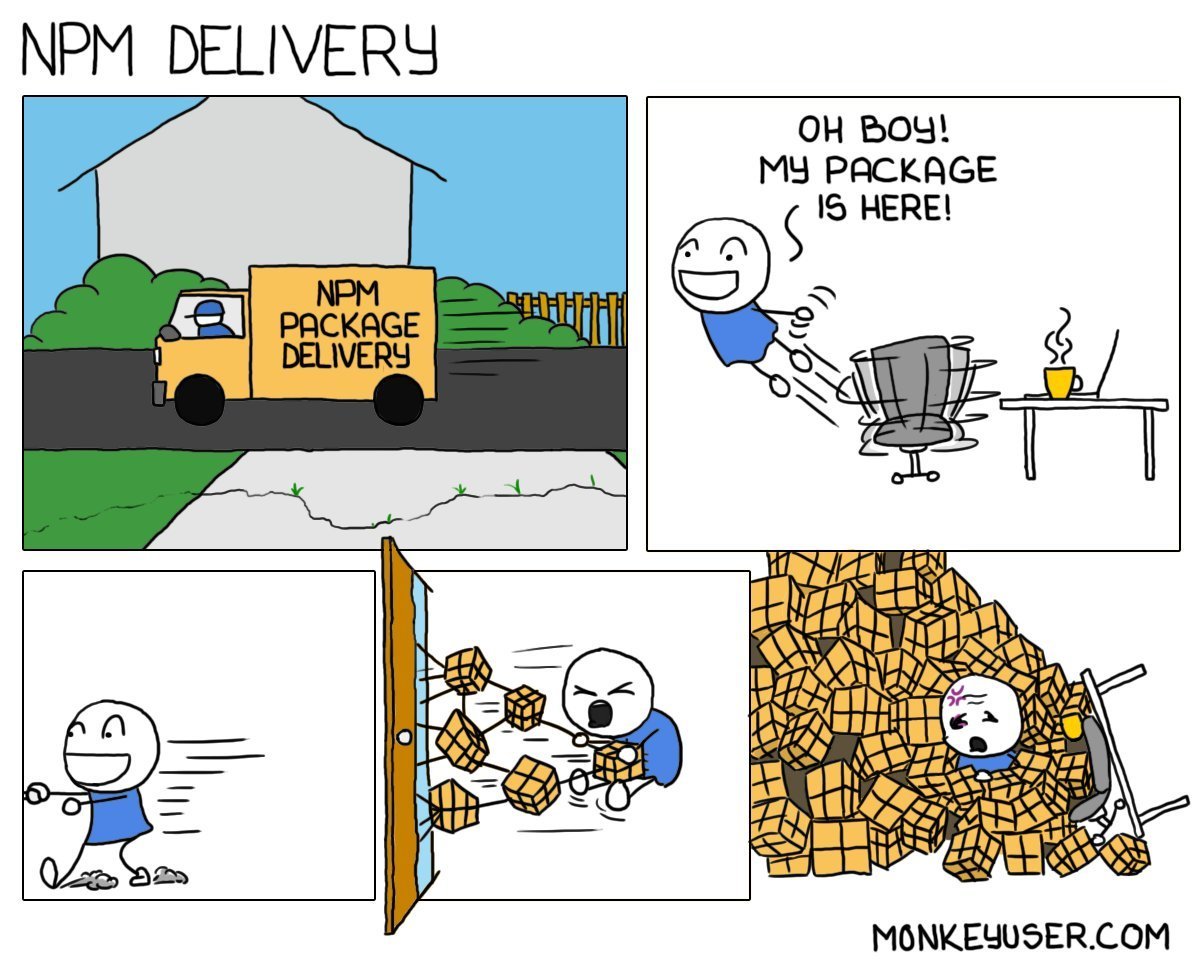

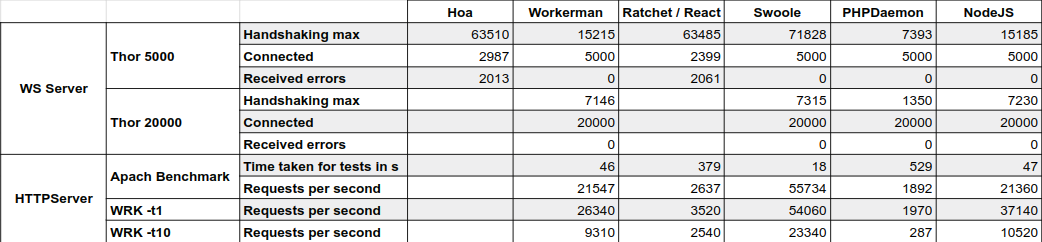

Load testing

WRK - a HTTP benchmarking tool

https://github.com/wg/wrk

AB (Apache Benchmark)

https://httpd.apache.org/docs/2.4/programs/ab.html

Apache JMeter

https://jmeter.apache.org/

Thor

https://github.com/observing/thor

Software and hardware

php -v

PHP 7.0.30-0ubuntu0.16.04.1 (cli) ( NTS )

Copyright (c) 1997-2017 The PHP Group

Zend Engine v3.0.0, Copyright (c) 1998-2017 Zend Technologies

with Zend OPcache v7.0.30-0ubuntu0.16.04.1, Copyright (c) 1999-2017, by Zend Technologies

with Xdebug v2.5.5, Copyright (c) 2002-2017, by Derick Rethans

OS: Ubuntu 16.04.1 LTS (Xenial Xerus)

CPU: Intel(R) Core(TM) i5-4690K CPU @ 3.50GHz

Capacity: 3900MHz

Memory: 15GiB

node -v

v10.9.0

Hoa 3.0

https://hoa-project.net

https://github.com/hoaproject/Websocket

// Web client

<script>

var ws = new WebSocket('ws://curex.ll:8008/?user=tester01');

ws.onmessage = function(evt) { console.log(evt); };

ws.onopen = function() { ws.send("hello"); };

ws.onerror = function(error) { console.log("Error " + error.message); };

</script>

// ========================================

// PHP client

$client = new Hoa\Websocket\Client(

new Hoa\Socket\Client('tcp://127.0.0.1:8009')

);

$client->setHost('127.0.0.1');

$client->connect();

$client->send(json_encode(['user' => 'tester01', 'command' => '111111']));

$client->close();

Hoa

// Worker

use Hoa\Websocket\Server as WebsocketServer;

use Hoa\Socket\Server as SocketServer;

use Hoa\Event\Bucket;

$subscribedTopics = array();

// =================================

$websocket_web = new WebsocketServer( new SocketServer('ws://127.0.0.1:8008') );

$websocket_web->on('open', function (Bucket $bucket) use (&$subscribedTopics) {

$subscribedTopics[substr($bucket->getSource()->getRequest()->getUrl(), 7)] =

['bucket'=>$bucket, 'node'=>$bucket->getSource()->getConnection()->getCurrentNode()];

});

$websocket_web->on('message', function (Bucket $bucket) {

$bucket->getSource()->send('Socket connected');

});

$websocket_web->on('close', function (Bucket $bucket) { });

// =================================

$websocket_php = new WebsocketServer( new SocketServer('ws://127.0.0.1:8009') );

$websocket_php->on('open', function (Bucket $bucket) { });

$websocket_php->on('message', function (Bucket $bucket) use (&$subscribedTopics) {

$data = json_decode($bucket->getData()['message'], true);

if (isset($data['user']) and isset($subscribedTopics[$data['user']])) {

$subscribedTopics[$data['user']]['bucket']->getSource()->send(

$data['command'],

$subscribedTopics[$data['user']]['node']

);

}

});

// =================================

$group = new Hoa\Socket\Connection\Group();

$group[] = $websocket_web;

$group[] = $websocket_php;

$group->run();

Source code of the example above

https://github.com/Shkarbatov/WebSocketPHPHoa

Hoa worker

https://hoa-project.net/En/Literature/Hack/Zombie.html

https://hoa-project.net/En/Literature/Hack/Worker.html
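The Hoa worker identifies a browser by cutting the first 7 characters off the request URL (substr(..., 7)), which only works when the target is exactly /?user=<id>. A sturdier way to get the same value is to parse the query string. A minimal sketch; user_from_target() is an illustrative name, and the `user` parameter matches the web client above:

```php
<?php
// Sketch: extract the `user` query parameter from a request target
// such as "/?user=tester01", regardless of path or parameter order.
/** @return string|null */
function user_from_target(string $target)
{
    $query = parse_url($target, PHP_URL_QUERY);
    if (!is_string($query)) {
        return null; // no query string at all
    }
    parse_str($query, $params);
    return isset($params['user']) && is_string($params['user']) && $params['user'] !== ''
        ? $params['user']
        : null;
}

var_dump(user_from_target('/?user=tester01'));        // string(8) "tester01"
var_dump(user_from_target('/chat?token=x&user=bob')); // string(3) "bob"
var_dump(user_from_target('/'));                      // NULL
```

A null result is a natural signal to reject the connection instead of storing it under an empty key.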

TEST

WS Server

Hoa test

use Hoa\Websocket\Server as WebsocketServer;

use Hoa\Socket\Server as SocketServer;

use Hoa\Event\Bucket;

$websocket_web = new WebsocketServer(

new SocketServer('ws://127.0.0.1:8008')

);

$websocket_web->on('open', function (Bucket $bucket) {});

$websocket_web->on('message', function (Bucket $bucket) {

$bucket->getSource()->send('test!');

});

$websocket_web->on('close', function (Bucket $bucket) {});

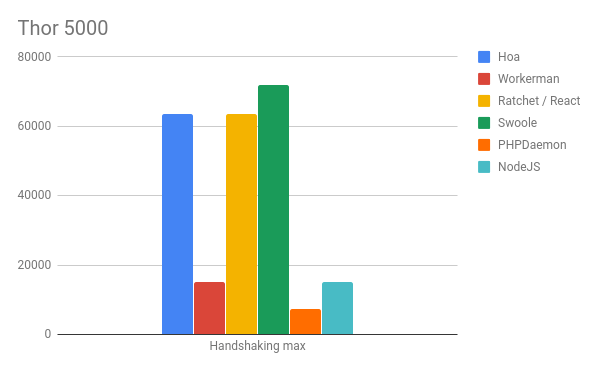

$websocket_web->run();

Hoa benchmark

thor --amount 5000 ws://curex.ll:8008/

Thou shall:

- Spawn 4 workers.

- Create all the concurrent/parallel connections.

- Smash 5000 connections with the mighty Mjölnir.

Online 128077 milliseconds

Time taken 128077 milliseconds

Connected 2987

Disconnected 0

Failed 2013

Total transferred 4.52MB

Total received 595.07kB

Durations (ms):

min mean stddev median max

Handshaking 1 8655 15512 3029 63510

Latency NaN NaN NaN NaN NaN

Percentile (ms):

50% 66% 75% 80% 90% 95% 98% 98% 100%

Handshaking 3029 7059 7202 15083 31212 63226 63314 63330 63510

Latency NaN NaN NaN NaN NaN NaN NaN NaN NaN

Received errors:

2013x connect ETIMEDOUT 127.0.0.1:8008

Workerman 3.5

https://github.com/walkor/Workerman

Workerman

<?php

require_once __DIR__ . '/vendor/autoload.php';

use Workerman\Worker;

// array that maps a user parameter to that user's connection

$users = [];

// create the ws server that all our users will connect to

$ws_worker = new Worker("websocket://0.0.0.0:8000");

// handler that runs when the ws server starts

$ws_worker->onWorkerStart = function() use (&$users)

{

// create a local server that our site's code sends messages to; the text protocol splits the stream into newline-terminated messages

$inner_tcp_worker = new Worker("text://127.0.0.1:1234");

// message handler that fires

// when a message arrives on the local tcp socket

$inner_tcp_worker->onMessage = function($connection, $data) use (&$users) {

$data = json_decode($data);

// send the message to the user identified by userId (skip malformed JSON)

if (isset($data->user, $data->message, $users[$data->user])) {

$webconnection = $users[$data->user];

$webconnection->send($data->message);

}

};

$inner_tcp_worker->listen();

};

$ws_worker->onConnect = function($connection) use (&$users)

{

$connection->onWebSocketConnect = function($connection) use (&$users)

{

// when a new user connects, store the GET parameter that we passed from the site page ourselves

$users[$_GET['user']] = $connection;

// instead of a GET parameter you can also use a cookie value, e.g. $_COOKIE['PHPSESSID']

};

};

$ws_worker->onClose = function($connection) use(&$users)

{

// remove the user's entry when they disconnect (strict search compares object identity)

$user = array_search($connection, $users, true);

if ($user !== false) {

unset($users[$user]);

}

};

// Run worker

Worker::runAll();

Workerman

<!DOCTYPE html>

<html xmlns="http://www.w3.org/1999/xhtml" xml:lang="en" lang="en">

<head>

<script>

ws = new WebSocket("ws://curex.ll:8000/?user=tester01");

ws.onmessage = function(evt) {alert(evt.data);};

</script>

</head>

</html>

<?php

$localsocket = 'tcp://127.0.0.1:1234';

$user = 'tester01';

$message = 'test';

// connect to the local tcp server

$instance = stream_socket_client($localsocket);

// send the message

fwrite($instance, json_encode(['user' => $user, 'message' => $message]) . "\n");

Source code of the example above

https://github.com/Shkarbatov/WebSocketPHPWorkerman

Workerman: monitoring child processes

// Monitor all child processes.

Workerman\Worker::monitorWorkersForLinux()

Workerman\Worker::monitorWorkersForWindows()

DTFreeman commented

$ws_worker->count = 4;

$ws_worker Master process will fork 4 child process,

and master process will never die... if child process

crash/exception/die, master will restart it!

But the master process itself remains an open question.
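One common answer is to let an external supervisor own the master process, for example systemd with Restart=always, or supervisord. Where that is not available, a cron job can act as a watchdog. A Linux-only sketch; the function names, the PID file path and the start command are hypothetical placeholders, not Workerman defaults:

```php
<?php
// Linux-only watchdog sketch for a master process, meant to run from cron.

function is_process_alive(int $pid): bool
{
    // Every existing process has a directory /proc/<pid>
    return $pid > 0 && is_dir("/proc/$pid");
}

function read_pid(string $pidFile): int
{
    $raw = @file_get_contents($pidFile);
    return $raw === false ? 0 : (int) trim($raw);
}

// Returns 'running', 'restarted' or 'failed'
function ensure_running(string $pidFile, string $startCommand): string
{
    if (is_process_alive(read_pid($pidFile))) {
        return 'running';
    }
    exec($startCommand, $output, $exitCode);
    return $exitCode === 0 ? 'restarted' : 'failed';
}

// Hypothetical deployment values:
// echo ensure_running('/var/run/ws-server.pid', 'php /srv/ws/start.php start -d'), PHP_EOL;
var_dump(is_process_alive(getmypid())); // bool(true)
```

This check is deliberately coarse: a zombie, or an unrelated process that reused the PID, also has a /proc entry, which is one reason a real supervisor is the better option.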

TEST

WS Server

Workerman test

use Workerman\Worker;

$ws_worker = new Worker("websocket://0.0.0.0:8000");

$ws_worker->onWorkerStart = function(){};

$ws_worker->onConnect = function($connection) {};

$ws_worker->onMessage = function($connection, $data) {

$connection->send('test!');

};

$ws_worker->onClose = function($connection) {};

Worker::runAll();

Workerman benchmark

thor --amount 5000 ws://curex.ll:8000/

Thou shall:

- Spawn 4 workers.

- Create all the concurrent/parallel connections.

- Smash 5000 connections with the mighty Mjölnir.

Online 15701 milliseconds

Time taken 15704 milliseconds

Connected 5000

Disconnected 0

Failed 0

Total transferred 7.27MB

Total received 888.67kB

Durations (ms):

min mean stddev median max

Handshaking 0 1903 2786 1097 15215

Latency NaN NaN NaN NaN NaN

Percentile (ms):

50% 66% 75% 80% 90% 95% 98% 98% 100%

Handshaking 1097 1211 1384 3123 3352 7217 15123 15150 15215

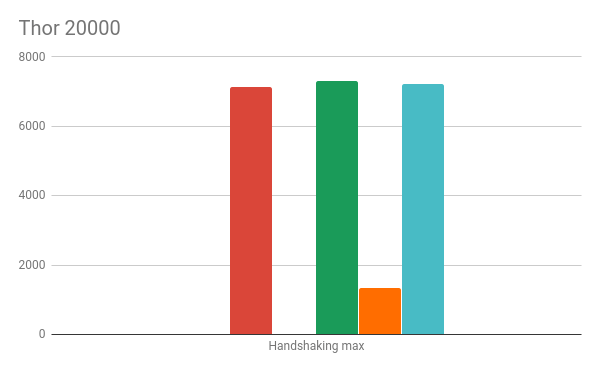

Latency NaN NaN NaN NaN NaN NaN NaN NaN NaN

Workerman benchmark 2

thor --amount 20000 ws://curex.ll:8000/

Thou shall:

- Spawn 4 workers.

- Create all the concurrent/parallel connections.

- Smash 20000 connections with the mighty Mjölnir.

Online 54970 milliseconds

Time taken 54971 milliseconds

Connected 20000

Disconnected 0

Failed 0

Total transferred 23.84MB

Total received 3.47MB

Durations (ms):

min mean stddev median max

Handshaking 0 375 860 1 7146

Latency NaN NaN NaN NaN NaN

Percentile (ms):

50% 66% 75% 80% 90% 95% 98% 98% 100%

Handshaking 1 200 372 552 1142 1380 3134 3298 7146

Latency NaN NaN NaN NaN NaN NaN NaN NaN NaN

TEST

HTTP Server

Workerman test

use Workerman\Worker;

$ws_worker = new Worker("http://0.0.0.0:1337");

$ws_worker->onMessage = function($connection) {

$connection->send('test');

};

Worker::runAll();

Workerman benchmark

ab -c 10 -n 1000000 -k http://curex.ll:1337/

This is ApacheBench, Version 2.3 <$Revision: 1706008 $>

Concurrency Level: 10

Time taken for tests: 46.410 seconds

Complete requests: 1000000

Failed requests: 0

Keep-Alive requests: 1000000

Total transferred: 131000000 bytes

HTML transferred: 4000000 bytes

Requests per second: 21547.27 [#/sec] (mean)

Time per request: 0.464 [ms] (mean)

Time per request: 0.046 [ms] (mean, across all

concurrent requests)

Transfer rate: 2756.54 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 0 0.0 0 0

Processing: 0 0 0.2 0 13

Waiting: 0 0 0.2 0 13

Total: 0 0 0.2 0 13

Percentage of the requests

served within a certain time (ms)

50% 0

66% 0

75% 0

80% 0

90% 1

95% 1

98% 1

99% 1

100% 13 (longest request)

Workerman benchmark 2

wrk -t1 -c1000 -d60s http://127.0.0.1:1337/

Running 1m test @ http://127.0.0.1:1337/

1 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 41.89ms 33.37ms 2.00s 99.80%

Req/Sec 24.42k 838.36 25.47k 85.67%

1458523 requests in 1.00m, 182.22MB read

Socket errors: connect 0, read 0, write 0, timeout 79

Requests/sec: 24281.40

Transfer/sec: 3.03MB

Thread Stats Avg Stdev Max +/- Stdev

Latency 41.26ms 34.09ms 1.99s 99.79%

Req/Sec 24.82k 0.88k 26.34k 65.33%

1482095 requests in 1.00m, 185.16MB read

Socket errors: connect 0, read 0, write 0, timeout 84

Requests/sec: 24680.37

Transfer/sec: 3.08MB

Thread Stats Avg Stdev Max +/- Stdev

Latency 42.35ms 26.78ms 1.98s 99.82%

Req/Sec 24.02k 426.16 24.86k 79.67%

1433850 requests in 1.00m, 179.13MB read

Socket errors: connect 0, read 0, write 0, timeout 59

Requests/sec: 23881.24

Transfer/sec: 2.98MB

Workerman benchmark 3

wrk -t10 -c1000 -d60s http://127.0.0.1:1337/

Running 1m test @ http://127.0.0.1:1337/

10 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 39.93ms 49.27ms 1.99s 99.57%

Req/Sec 2.43k 0.90k 9.31k 85.38%

1423948 requests in 1.00m, 177.90MB read

Socket errors: connect 0, read 0, write 0, timeout 499

Requests/sec: 23707.97

Transfer/sec: 2.96MB

Thread Stats Avg Stdev Max +/- Stdev

Latency 40.46ms 66.24ms 1.99s 99.38%

Req/Sec 2.58k 0.88k 9.33k 85.56%

1438833 requests in 1.00m, 179.76MB read

Socket errors: connect 0, read 0, write 0, timeout 402

Requests/sec: 23955.47

Transfer/sec: 2.99MB

Thread Stats Avg Stdev Max +/- Stdev

Latency 38.98ms 55.06ms 2.00s 99.51%

Req/Sec 2.44k 1.06k 15.43k 82.02%

1446220 requests in 1.00m, 180.68MB read

Socket errors: connect 0, read 0, write 0, timeout 490

Requests/sec: 24078.85

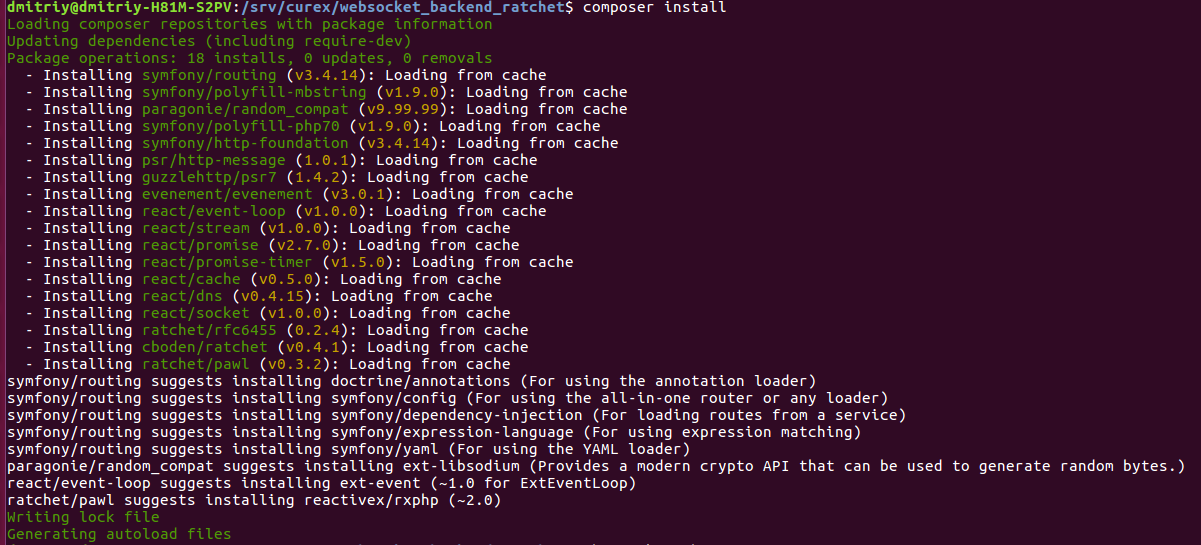

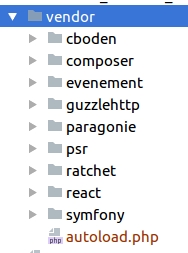

Transfer/sec: 3.01MB

Ratchet 0.4.1

https://github.com/ratchetphp/Ratchet

http://socketo.me/docs/design

+ https://github.com/ratchetphp/Pawl

Ratchet dependencies

{

"require": {

"cboden/ratchet": "^0.4.1",

"ratchet/pawl": "^0.3.2",

"react/event-loop": "^1.0",

"react/http": "^0.8.3"

}

}

Ratchet client

// Web client

<script>

// Then some JavaScript in the browser:

var ws = new WebSocket('ws://site.ll:9056/?user=tester01');

ws.onmessage = function(evt) { alert(evt.data); };

ws.onopen = function (event) { ws.send('test'); }

</script>

// ============================================

// PHP client

$connection = \Ratchet\Client\connect('ws://127.0.0.1:9057')->then(function($conn) {

$conn->on('message', function($msg) use ($conn) {

echo "Received: {$msg}\n";

$conn->close();

});

$conn->send('{"command": "update_data", "user": "tester01"}');

$conn->close();

}, function ($e) {

echo "Could not connect: {$e->getMessage()}\n";

});

Ratchet worker

$loop = React\EventLoop\Factory::create();

$pusher = new Pusher;

// PHP client

$webSockPhp = new React\Socket\Server('0.0.0.0:9057', $loop);

new Ratchet\Server\IoServer(

new Ratchet\Http\HttpServer(

new Ratchet\WebSocket\WsServer(

$pusher

)

),

$webSockPhp

);

// WebClient

$webSockClient = new React\Socket\Server('0.0.0.0:9056', $loop);

new Ratchet\Server\IoServer(

new Ratchet\Http\HttpServer(

new Ratchet\WebSocket\WsServer(

$pusher

)

),

$webSockClient

);

$loop->run();

Ratchet worker Pusher

use Ratchet\ConnectionInterface;

use Ratchet\MessageComponentInterface;

class Pusher implements MessageComponentInterface

{

/** @var array - subscribers */

protected $subscribedTopics = array();

public function onMessage(ConnectionInterface $conn, $topic)

{

$this->subscribedTopics[substr($conn->httpRequest->getRequestTarget(), 7)] = $conn;

$message = json_decode($topic, true);

if (

isset($message['command'])

and $message['command'] == 'update_data'

and isset($message['user'], $this->subscribedTopics[$message['user']])

) {

$this->subscribedTopics[$message['user']]->send('It works!');

}

}

public function onOpen(ConnectionInterface $conn) {}

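public function onClose(ConnectionInterface $conn)

{

// forget the closed connection so nothing is pushed to it later

$user = array_search($conn, $this->subscribedTopics, true);

if ($user !== false) {

unset($this->subscribedTopics[$user]);

}

}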

public function onError(ConnectionInterface $conn, \Exception $e) {}

}

Source code of the example above

https://github.com/Shkarbatov/WebSocketPHPRatchet
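The Pusher class keeps user-id → connection pairs in $subscribedTopics. If entries are not removed when a client disconnects, the map keeps growing and messages can be pushed to closed sockets. A minimal, framework-independent sketch of such a registry; SubscriberRegistry is an illustrative name, and connections are treated as opaque objects (in Ratchet they would be ConnectionInterface instances):

```php
<?php
// Sketch: user-id -> connection registry with cleanup on disconnect.
class SubscriberRegistry implements Countable
{
    /** @var array user id => connection object */
    private $byUser = [];

    public function add(string $user, $conn)
    {
        // A reconnecting user simply replaces the old connection
        $this->byUser[$user] = $conn;
    }

    public function get(string $user)
    {
        return $this->byUser[$user] ?? null;
    }

    public function remove($conn)
    {
        // Strict search compares object identity; a loose search would
        // compare properties and could remove the wrong connection
        $user = array_search($conn, $this->byUser, true);
        if ($user !== false) {
            unset($this->byUser[$user]);
        }
    }

    public function count(): int
    {
        return count($this->byUser);
    }
}

$registry = new SubscriberRegistry();
$a = new stdClass();
$registry->add('tester01', $a);
$registry->remove($a);
var_dump($registry->get('tester01')); // NULL
```

Calling remove() from onClose() keeps the map in step with the live connections; if a user reconnects before the old socket closes, the late remove() of the old connection is a harmless no-op.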

TEST

WS Server

Ratchet test

use Ratchet\MessageComponentInterface;

use Ratchet\ConnectionInterface;

use Ratchet\Server\IoServer;

use Ratchet\Http\HttpServer;

use Ratchet\WebSocket\WsServer;

class Chat implements MessageComponentInterface {

public function onOpen(ConnectionInterface $conn) {}

public function onMessage(ConnectionInterface $from, $msg) {

// reply to the client that sent the message

$from->send('test!');

}

public function onClose(ConnectionInterface $conn) {}

public function onError(ConnectionInterface $conn, \Exception $e) {}

}

$server = IoServer::factory(

new HttpServer(new WsServer(new Chat())),

9056

);

$server->run();

Ratchet benchmark

thor --amount 5000 ws://curex.ll:9056/

Thou shall:

- Spawn 4 workers.

- Create all the concurrent/parallel connections.

- Smash 5000 connections with the mighty Mjölnir.

Online 128121 milliseconds

Time taken 128121 milliseconds

Connected 2399

Disconnected 0

Failed 2601

Total transferred 3.63MB

Total received 473.24kB

Durations (ms):

min mean stddev median max

Handshaking 688 13438 17920 7045 63485

Latency NaN NaN NaN NaN NaN

Percentile (ms):

50% 66% 75% 80% 90% 95% 98% 98% 100%

Handshaking 7045 15085 15221 31122 31275 63248 63364 63417 63485

Latency NaN NaN NaN NaN NaN NaN NaN NaN NaN

Received errors:

2601x connect ETIMEDOUT 127.0.0.1:9056

TEST

HTTP Server

React test

$loop = React\EventLoop\Factory::create();

$server = new React\Http\Server(

function (Psr\Http\Message\ServerRequestInterface $request) {

return new React\Http\Response(

200,

array('Content-Type' => 'text/plain'),

"test\n"

);

}

);

$socket = new React\Socket\Server(1337, $loop);

$server->listen($socket);

$loop->run();

React benchmark

ab -c 10 -n 1000000 -k http://curex.ll:1337/

This is ApacheBench, Version 2.3 <$Revision: 1706008 $>

Concurrency Level: 10

Time taken for tests: 379.245 seconds

Complete requests: 1000000

Failed requests: 0

Keep-Alive requests: 0

Total transferred: 133000000 bytes

HTML transferred: 5000000 bytes

Requests per second: 2636.82 [#/sec] (mean)

Time per request: 3.792 [ms] (mean)

Time per request: 0.379 [ms] (mean, across all

concurrent requests)

Transfer rate: 342.48 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 0 0.0 0 11

Processing: 0 4 0.4 4 18

Waiting: 0 4 0.4 4 18

Total: 1 4 0.4 4 18

Percentage of the requests served

within a certain time (ms)

50% 4

66% 4

75% 4

80% 4

90% 4

95% 4

98% 5

99% 5

100% 18 (longest request)

React benchmark 2

wrk -t1 -c1000 -d60s http://127.0.0.1:1337/

Running 1m test @ http://127.0.0.1:1337/

1 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 135.29ms 208.40ms 1.62s 82.30%

Req/Sec 1.45k 1.18k 3.33k 60.53%

51131 requests in 1.00m, 7.41MB read

Socket errors: connect 58, read 3, write 0, timeout 28

Requests/sec: 851.01

Transfer/sec: 126.32KB

Thread Stats Avg Stdev Max +/- Stdev

Latency 103.17ms 146.03ms 1.61s 82.94%

Req/Sec 1.44k 1.06k 2.99k 44.23%

58492 requests in 1.00m, 8.48MB read

Socket errors: connect 54, read 0, write 0, timeout 39

Requests/sec: 974.66

Transfer/sec: 144.68KB

Thread Stats Avg Stdev Max +/- Stdev

Latency 122.33ms 158.59ms 1.61s 83.98%

Req/Sec 1.45k 0.98k 3.52k 40.40%

66534 requests in 1.00m, 9.64MB read

Socket errors: connect 62, read 0, write 0, timeout 40

Requests/sec: 1107.78

Transfer/sec: 164.44KB

React benchmark 3

wrk -t10 -c1000 -d60s http://127.0.0.1:1337/

Running 1m test @ http://127.0.0.1:1337/

10 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 14.54ms 33.66ms 1.61s 99.10%

Req/Sec 347.09 428.97 2.54k 86.60%

48243 requests in 1.00m, 6.99MB read

Socket errors: connect 240, read 15, write 0, timeout 23

Requests/sec: 802.86

Transfer/sec: 119.17KB

Thread Stats Avg Stdev Max +/- Stdev

Latency 14.72ms 31.84ms 1.63s 99.42%

Req/Sec 309.33 250.39 2.30k 81.33%

46635 requests in 1.00m, 6.76MB read

Socket errors: connect 208, read 7, write 0, timeout 23

Requests/sec: 776.20

Transfer/sec: 115.22KB

Thread Stats Avg Stdev Max +/- Stdev

Latency 15.16ms 39.30ms 1.61s 99.39%

Req/Sec 260.18 185.05 2.21k 72.77%

48223 requests in 1.00m, 6.99MB read

Socket errors: connect 211, read 8, write 0, timeout 29

Requests/sec: 802.68

Transfer/sec: 119.15KB

Swoole 2.0

https://github.com/swoole/swoole-src

https://github.com/swoole/swoole-wiki

http://php.net/manual/ru/book.swoole.php

Swoole

Swoole is a high-performance network framework using an event-driven, asynchronous, non-blocking I/O model, which makes it scalable and efficient. It is written in C, without third-party libraries, as a PHP extension.

It enables PHP developers to write high-performance, scalable, concurrent TCP, UDP, Unix Socket, HTTP, WebSocket services in PHP programming language without too much knowledge about non-blocking I/O programming and low-level Linux kernel.

With Swoole 2.0, developers can implement async network I/O without taking care of low-level details such as coroutine switching.

Swoole Features

- Rapid development of high performance protocol servers & clients with PHP language

- Event-driven, asynchronous programming for PHP

- Event loop API

- Processes management API

- Memory management API

- Async TCP/UDP/HTTP/WebSocket/HTTP2 client/server side API

- Async TCP/UDP client side API

- Async MySQL client side API and connection pool

- Async Redis client/server side API

- Async DNS client side API

- Message Queue API

- Async Task API

- Milliseconds scheduler

- Async File I/O API

- Golang style channels for inter-processes communication

- System locks API: Filelock, Readwrite lock, semaphore, Mutex, spinlock

- IPv4/IPv6/UnixSocket/TCP/UDP and SSL/TLS support

- Fast serializer/unserializer

Swoole Install

Install via pecl

pecl install swoole

------------------------------------------

Install from source

git clone https://github.com/swoole/swoole-src.git

cd swoole-src

phpize

./configure

make && make installSwoole

<script>

var ws = new WebSocket('ws://curex.ll:9502/?user=tester01');

ws.onmessage = function(evt) { alert(evt.data); };

ws.onopen = function (event) {

ws.send('tester01');

}

</script>

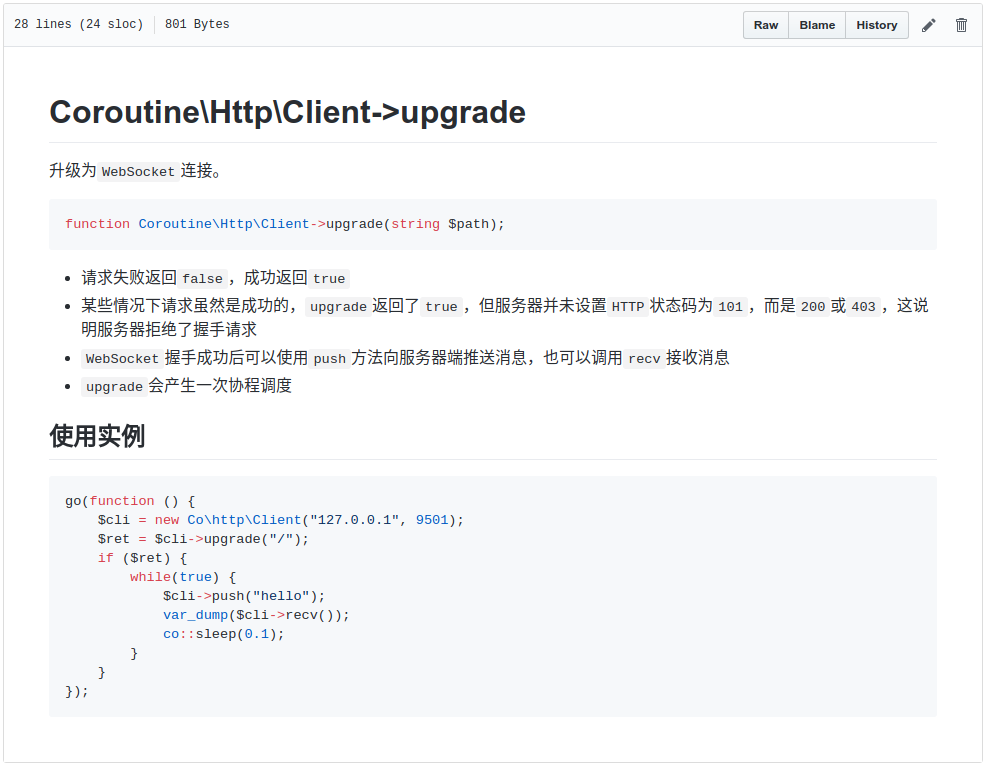

go(function() {

$cli = new Swoole\Coroutine\Http\Client("127.0.0.1", 9502);

$cli->upgrade('/');

$cli->push('{"command": "update_data", "user": "tester01"}');

$cli->close();

});Web client

PHP client

Swoole worker

$subscribedTopics = array();

$server = new swoole_websocket_server("0.0.0.0", 9502, SWOOLE_BASE);

$server->on("start", function ($server) {

echo "Swoole http server is started at http://127.0.0.1:9502\n";

});

$server->on('connect', function($server, $fd) { echo "connect: {$fd}\n"; });

$server->on('open', function($server, $req) { echo "connection open: {$req->fd}\n"; });

$server->on('message', function($server, $frame) use (&$subscribedTopics) {

if (

$message = json_decode($frame->data, true)

and isset($message['command'])

and $message['command'] == 'update_data'

and isset($message['user'], $subscribedTopics[$message['user']])

) {

$subscribedTopics[$message['user']]['server']

->push($subscribedTopics[$message['user']]['frame']->fd, $frame->data);

} else {

$subscribedTopics[$frame->data] = ['frame' => $frame, 'server' => $server];

}

});

$server->on('close', function($server, $fd) { echo "connection close: {$fd}\n"; });

$server->start();

Source code of the example above

https://github.com/Shkarbatov/WebSocketPHPSwoole

Swoole worker instance

$serv = new swoole_server("127.0.0.1", 9501);

$serv->set(array(

'worker_num' => 2,

'max_request' => 3,

'dispatch_mode'=>3,

));

$serv->on('receive', function ($serv, $fd, $from_id, $data) {

$serv->send($fd, "Server: ".$data);

});

$serv->start();

Swoole Warning

The following extensions have to be disabled to use Swoole Coroutine:

xdebug

phptrace

aop

molten

xhprof

Do not use Coroutine within these functions:

__get

__set

__call

__callStatic

__toString

__invoke

__destruct

call_user_func

call_user_func_array

ReflectionFunction::invoke

ReflectionFunction::invokeArgs

ReflectionMethod::invoke

ReflectionMethod::invokeArgs

array_walk/array_map

ob_*

TEST

WS Server

$server = new swoole_websocket_server("0.0.0.0", 9502, SWOOLE_BASE);

$server->set(['log_file' => '/dev/null']);

$server->on("start", function ($server) {});

$server->on('connect', function($server, $req) {});

$server->on('open', function($server, $req) {});

$server->on('message', function($server, $frame) {

$server->push($frame->fd, 'test!');

});

$server->on('close', function($server, $fd) {});

$server->start();

Swoole test

thor --amount 5000 ws://curex.ll:9502/

Thou shall:

- Spawn 4 workers.

- Create all the concurrent/parallel connections.

- Smash 5000 connections with the mighty Mjölnir.

Online 72117 milliseconds

Time taken 72117 milliseconds

Connected 5000

Disconnected 0

Failed 0

Total transferred 7.23MB

Total received 0.95MB

Durations (ms):

min mean stddev median max

Handshaking 0 1802 2089 1223 71828

Latency 0 59 77 22 252

Percentile (ms):

50% 66% 75% 80% 90% 95% 98% 98% 100%

Handshaking 1223 1312 3124 3167 3256 3323 7250 7274 71828

Latency 22 36 60 96 216 233 241 246 252

Swoole benchmark

thor --amount 20000 ws://curex.ll:9502/

Thou shall:

- Spawn 4 workers.

- Create all the concurrent/parallel connections.

- Smash 20000 connections with the mighty Mjölnir.

Online 97971 milliseconds

Time taken 97975 milliseconds

Connected 20000

Disconnected 0

Failed 0

Total transferred 28.5MB

Total received 3.81MB

Durations (ms):

min mean stddev median max

Handshaking 0 345 844 1 7315

Latency 0 18 56 0 342

Percentile (ms):

50% 66% 75% 80% 90% 95% 98% 98% 100%

Handshaking 1 2 115 381 1261 1487 3235 3275 7315

Latency 0 1 9 14 33 123 269 302 342

Swoole benchmark 2

TEST

HTTP Server

Swoole test

$server = new swoole_http_server("127.0.0.1", 9502, SWOOLE_BASE);

$server->set(['log_file' => '/dev/null']);

$server->on(

'request',

function(swoole_http_request $request, swoole_http_response $response) use ($server) {

$response->end('test!');

});

$server->start();

Swoole benchmark

ab -c 10 -n 1000000 -k http://curex.ll:9502/

This is ApacheBench, Version 2.3 <$Revision: 1706008 $>

Concurrency Level: 10

Time taken for tests: 17.943 seconds

Complete requests: 1000000

Failed requests: 0

Keep-Alive requests: 1000000

Total transferred: 157000000 bytes

HTML transferred: 5000000 bytes

Requests per second: 55733.55 [#/sec] (mean)

Time per request: 0.179 [ms] (mean)

Time per request: 0.018 [ms] (mean, across all

concurrent requests)

Transfer rate: 8545.09 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 0 0.0 0 0

Processing: 0 0 0.1 0 17

Waiting: 0 0 0.1 0 17

Total: 0 0 0.1 0 17

Percentage of the requests served

within a certain time (ms)

50% 0

66% 0

75% 0

80% 0

90% 0

95% 0

98% 0

99% 0

100% 17 (longest request)

Swoole benchmark 2

wrk -t1 -c1000 -d60s http://127.0.0.1:9502/

Running 1m test @ http://127.0.0.1:9502/

1 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 19.93ms 0.97ms 43.82ms 93.78%

Req/Sec 50.40k 785.03 54.06k 80.50%

3008650 requests in 1.00m, 450.48MB read

Requests/sec: 50102.71

Transfer/sec: 7.50MB

Thread Stats Avg Stdev Max +/- Stdev

Latency 20.03ms 1.17ms 44.44ms 92.82%

Req/Sec 50.12k 1.09k 53.25k 74.33%

2992896 requests in 1.00m, 448.12MB read

Requests/sec: 49833.25

Transfer/sec: 7.46MB

Swoole benchmark 3

wrk -t10 -c1000 -d60s http://127.0.0.1:9502/

Running 1m test @ http://127.0.0.1:9502/

10 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 19.19ms 2.74ms 263.12ms 93.18%

Req/Sec 5.15k 658.17 12.06k 90.18%

3073586 requests in 1.00m, 460.20MB read

Requests/sec: 51153.25

Transfer/sec: 7.66MB

Thread Stats Avg Stdev Max +/- Stdev

Latency 19.41ms 2.38ms 259.93ms 95.86%

Req/Sec 5.14k 425.07 18.59k 87.37%

3069736 requests in 1.00m, 459.62MB read

Requests/sec: 51088.02

Transfer/sec: 7.65MB

Thread Stats Avg Stdev Max +/- Stdev

Latency 19.32ms 2.60ms 273.63ms 93.98%

Req/Sec 5.14k 545.61 23.34k 87.43%

3072101 requests in 1.00m, 459.98MB read

Requests/sec: 51118.82

Transfer/sec: 7.65MB

phpDaemon 1.0-beta3

https://daemon.io/

https://github.com/kakserpom/phpdaemon

TEST

WS Server

PHPDaemon test

# /opt/phpdaemon/conf/phpd.conf

max-workers 1;

min-workers 8;

start-workers 1;

max-idle 0;

max-requests 100000000;

Pool:Servers\WebSocket

{

listen 'tcp://0.0.0.0';

port 3333;

}

WSEcho

{

enable 1;

}

include conf.d/*.conf;

PHPDaemon test

# /opt/phpdaemon/PHPDaemon/Applications/WSEcho.php

namespace PHPDaemon\Applications;

class WSEcho extends \PHPDaemon\Core\AppInstance

{

public function onReady()

{

$appInstance = $this;

\PHPDaemon\Servers\WebSocket\Pool::getInstance()

->addRoute("/", function ($client) use ($appInstance)

{

return new WsEchoRoute($client, $appInstance);

});

}

}

class WsEchoRoute extends \PHPDaemon\WebSocket\Route

{

public function onHandshake() {}

public function onFrame($data, $type)

{

$this->client->sendFrame('test!');

}

public function onFinish() {}

}

PHPDaemon benchmark

thor --amount 5000 ws://curex.ll:3333/

Thou shall:

- Spawn 4 workers.

- Create all the concurrent/parallel connections.

- Smash 5000 connections with the mighty Mjölnir.

Online 7922 milliseconds

Time taken 7924 milliseconds

Connected 5000

Disconnected 0

Failed 0

Total transferred 5.99MB

Total received 1.33MB

Durations (ms):

min mean stddev median max

Handshaking 564 1825 1235 1297 7393

Latency 0 96 71 72 246

Percentile (ms):

50% 66% 75% 80% 90% 95% 98% 98% 100%

Handshaking 1297 1491 3200 3289 3397 3465 3544 7177 7393

Latency 72 116 147 178 211 231 236 239 246

PHPDaemon benchmark 2

```
thor --amount 20000 ws://curex.ll:3333/

Thou shall:
- Spawn 4 workers.
- Create all the concurrent/parallel connections.
- Smash 20000 connections with the mighty Mjölnir.

Online               73634 milliseconds
Time taken           73655 milliseconds
Connected            20000
Disconnected         0
Failed               0
Total transferred    23.99MB
Total received       5.36MB

Durations (ms):
                     min     mean    stddev  median  max
Handshaking          0       93      263     2       1350
Latency              0       19      46      0       276

Percentile (ms):
                     50%    66%    75%    80%    90%    95%    98%    98%    100%
Handshaking          2      2      3      4      258    1051   1154   1177   1350
Latency              0      1      1      2      89     121    161    231    276
```

TEST

HTTP Server

```
# /opt/phpdaemon/conf/conf.d/HTTPServer.conf
Pool:HTTPServer {
    enable 1;
    listen "0.0.0.0";
    port 3333;
    expose 1;
}
```

```
# /opt/phpdaemon/conf/phpd.conf
max-workers 1;
min-workers 8;
start-workers 1;
max-idle 0;
max-requests 100000000;
path '/opt/phpdaemon/conf/AppResolver.php';
include conf.d/*.conf;
```

phpDaemon test

```php
<?php
// /opt/phpdaemon/PHPDaemon/Applications/ServerStatus.php
namespace PHPDaemon\Applications;

class ServerStatus extends \PHPDaemon\Core\AppInstance
{
    // Create a request object for every HTTP request routed to this application.
    public function beginRequest($req, $upstream)
    {
        return new ServerStatusRequest($this, $upstream, $req);
    }
}
```

phpDaemon test

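```php
<?php
// /opt/phpdaemon/conf/AppResolver.php
class MyAppResolver extends \PHPDaemon\Core\AppResolver
{
    // Map the request URI to the name of the application that handles it.
    public function getRequestRoute($req, $upstream)
    {
        $uri = $req->attrs->server['DOCUMENT_URI'];

        if (preg_match('~^/(WebSocketOverCOMET|Example.*)/?~', $uri, $m)) {
            return $m[1];
        }

        if (preg_match('~^/(ServerStatus)/?~', $uri)) {
            return 'ServerStatus';
        }
        // Any other URI: no route is returned.
    }
}

// This file is loaded via the `path` directive in phpd.conf
// and returns the resolver instance.
return new MyAppResolver;
```

phpDaemon benchmark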

```
ab -c 10 -n 1000000 -k http://curex.ll:3333/ServerStatus

This is ApacheBench, Version 2.3 <$Revision: 1706008 $>

Document Path:          /ServerStatus
Document Length:        21 bytes

Concurrency Level:      10
Time taken for tests:   528.547 seconds
Complete requests:      1000000
Failed requests:        0
Keep-Alive requests:    0
Total transferred:      134000000 bytes
HTML transferred:       21000000 bytes
Requests per second:    1891.98 [#/sec] (mean)
Time per request:       5.285 [ms] (mean)
Time per request:       0.529 [ms] (mean, across all concurrent requests)
Transfer rate:          247.58 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        0    0   0.0      0       8
Processing:     1    5   0.3      5      23
Waiting:        0    5   0.3      5      23
Total:          2    5   0.3      5      23

Percentage of the requests served within a certain time (ms)
  50%      5
  66%      5
  75%      5
  80%      5
  90%      5
  95%      6
  98%      6
  99%      6
 100%     23 (longest request)
```

phpDaemon benchmark 2 (low connection count)

```
wrk -t1 -c100 -d60s http://127.0.0.1:3333/ServerStatus

Running 1m test @ http://127.0.0.1:3333/ServerStatus
  1 threads and 100 connections
  Thread Stats   Avg       Stdev     Max        +/- Stdev
    Latency      56.76ms   8.86ms    165.64ms   91.54%
    Req/Sec      1.58k     465.21    1.95k      86.59%
  25979 requests in 1.00m, 4.29MB read
Requests/sec:    432.96
Transfer/sec:    73.15KB

  Thread Stats   Avg       Stdev     Max        +/- Stdev
    Latency      55.49ms   8.98ms    164.02ms   93.52%
    Req/Sec      1.63k     467.21    1.92k      87.12%
  26736 requests in 1.00m, 4.41MB read
Requests/sec:    445.14
Transfer/sec:    75.20KB

  Thread Stats   Avg       Stdev     Max        +/- Stdev
    Latency      55.45ms   8.24ms    152.86ms   94.15%
    Req/Sec      1.65k     431.40    1.97k      88.14%
  29262 requests in 1.00m, 4.83MB read
Requests/sec:    487.52
Transfer/sec:    82.36KB
```
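The ab and wrk summaries above derive their headline numbers from simple ratios: ab's "Requests per second" is completed requests divided by total time, its first "Time per request" is concurrency × total time / requests, and the "across all concurrent requests" figure is total time / requests; wrk's "Requests/sec" is requests divided by elapsed time. A small Go sketch of that arithmetic (the helper names are illustrative). Results match the reported figures only up to rounding, since the tools divide by the exact elapsed time rather than the rounded value they print.

```go
package main

import "fmt"

// abStats reproduces the ApacheBench ratios from the number of completed
// requests n, the concurrency level c and the total test time in seconds.
func abStats(n, c int, seconds float64) (rps, meanMs, acrossAllMs float64) {
	rps = float64(n) / seconds
	meanMs = float64(c) * seconds * 1000 / float64(n) // time per request seen by one client
	acrossAllMs = seconds * 1000 / float64(n)         // time per request, all clients together
	return
}

// wrkRPS is wrk's "Requests/sec": completed requests divided by elapsed seconds.
func wrkRPS(requests int, seconds float64) float64 {
	return float64(requests) / seconds
}

func main() {
	// phpDaemon ab run: -n 1000000 -c 10, 528.547 seconds.
	rps, mean, across := abStats(1000000, 10, 528.547)
	fmt.Printf("ab:  %.2f req/s, %.3f ms mean, %.3f ms across all\n", rps, mean, across)

	// First phpDaemon wrk run: 25979 requests in about 60 seconds.
	fmt.Printf("wrk: %.2f req/s\n", wrkRPS(25979, 60))
}
```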

phpDaemon benchmark 3 (low connection count)

```
wrk -t10 -c100 -d60s http://127.0.0.1:3333/ServerStatus

Running 1m test @ http://127.0.0.1:3333/ServerStatus
  10 threads and 100 connections
  Thread Stats   Avg       Stdev     Max        +/- Stdev
    Latency      56.13ms   5.84ms    146.30ms   93.80%
    Req/Sec      175.35    21.51     264.00     74.48%
  28615 requests in 1.00m, 4.72MB read
Requests/sec:    476.15
Transfer/sec:    80.44KB

  Thread Stats   Avg       Stdev     Max        +/- Stdev
    Latency      55.51ms   5.90ms    123.27ms   93.84%
    Req/Sec      177.45    19.16     231.00     76.86%
  28673 requests in 1.00m, 4.73MB read
Requests/sec:    477.16
Transfer/sec:    80.61KB

  Thread Stats   Avg       Stdev     Max        +/- Stdev
    Latency      56.10ms   5.54ms    107.28ms   91.54%
    Req/Sec      176.53    19.65     287.00     72.90%
  28555 requests in 1.00m, 4.71MB read
  Socket errors: connect 100, read 0, write 0, timeout 0
Requests/sec:    475.15
Transfer/sec:    80.28KB
```

Let's talk about NodeJS v10.9.0

TEST

WS Server

NodeJS test

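```javascript
// WebSocket server on port 1337 using the "ws" package:
// reply to every incoming message with a fixed payload.
const WebSocket = require('ws');

const wss = new WebSocket.Server({ port: 1337 });

wss.on('connection', function connection(ws) {
  ws.on('message', function incoming(message) {
    ws.send('test!');
  });
});
```

NodeJS benchmark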

```
thor --amount 5000 ws://curex.ll:1337/

Thou shall:
- Spawn 4 workers.
- Create all the concurrent/parallel connections.
- Smash 5000 connections with the mighty Mjölnir.

Online               15571 milliseconds
Time taken           15573 milliseconds
Connected            5000
Disconnected         0
Failed               0
Total transferred    6MB
Total received       683.59kB

Durations (ms):
                     min     mean    stddev  median  max
Handshaking          384     1832    2158    809     15185
Latency              0       79      64      70      374

Percentile (ms):
                     50%    66%    75%    80%    90%    95%    98%    98%    100%
Handshaking          809    1272   3105   3149   3216   7171   7200   7216   15185
Latency              70     105    125    130    169    205    227    245    374
```

NodeJS benchmark 2

```
thor --amount 20000 ws://curex.ll:1337/

Thou shall:
- Spawn 4 workers.
- Create all the concurrent/parallel connections.
- Smash 20000 connections with the mighty Mjölnir.

Online               73733 milliseconds
Time taken           73733 milliseconds
Connected            20000
Disconnected         0
Failed               0
Total transferred    23.99MB
Total received       2.67MB

Durations (ms):
                     min     mean    stddev  median  max
Handshaking          0       355     550     121     7230
Latency              0       47      73      3       381

Percentile (ms):
                     50%    66%    75%    80%    90%    95%    98%    98%    100%
Handshaking          121    428    571    730    1022   1080   1286   3098   7230
Latency              3      43     69     86     163    200    279    300    381
```

TEST

HTTP Server

NodeJS test

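```javascript
// Plain HTTP server on port 1337 that returns a fixed body for every request.
const http = require('http');

// Fixed 5-byte response body, as in the other HTTP test servers.
const data = 'test!';

const app = function (req, res) {
  res.writeHead(200, { 'Content-Type': 'text/plain' });
  res.end(data);
};

const server = http.createServer(app);
server.listen(1337);
```

NodeJS benchmark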

```
ab -c 10 -n 1000000 -k http://curex.ll:1337/

This is ApacheBench, Version 2.3 <$Revision: 1706008 $>

Concurrency Level:      10
Time taken for tests:   46.818 seconds
Complete requests:      1000000
Failed requests:        0
Keep-Alive requests:    0
Total transferred:      106000000 bytes
HTML transferred:       5000000 bytes
Requests per second:    21359.47 [#/sec] (mean)
Time per request:       0.468 [ms] (mean)
Time per request:       0.047 [ms] (mean, across all concurrent requests)
Transfer rate:          2211.04 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        0    0   0.0      0      10
Processing:     0    0   0.1      0      10
Waiting:        0    0   0.1      0      10
Total:          0    0   0.1      0      10

Percentage of the requests served within a certain time (ms)
  50%      0
  66%      0
  75%      0
  80%      0
  90%      1
  95%      1
  98%      1
  99%      1
 100%     10 (longest request)
```

NodeJS benchmark 2

```
wrk -t1 -c1000 -d60s http://127.0.0.1:1337/

Running 1m test @ http://127.0.0.1:1337/
  1 threads and 1000 connections
  Thread Stats   Avg       Stdev     Max        +/- Stdev
    Latency      29.41ms   4.03ms    274.75ms   90.07%
    Req/Sec      33.69k    2.43k     36.53k     81.67%
  2011524 requests in 1.00m, 285.83MB read
Requests/sec:    33489.17
Transfer/sec:    4.76MB

  Thread Stats   Avg       Stdev     Max        +/- Stdev
    Latency      29.25ms   1.86ms    258.60ms   84.97%
    Req/Sec      34.33k    1.70k     36.63k     77.50%
  2049553 requests in 1.00m, 291.24MB read
Requests/sec:    34130.92
Transfer/sec:    4.85MB

  Thread Stats   Avg       Stdev     Max        +/- Stdev
    Latency      29.02ms   1.58ms    61.15ms    90.84%
    Req/Sec      34.61k    1.31k     37.14k     86.83%
  2066799 requests in 1.00m, 293.69MB read
Requests/sec:    34419.75
Transfer/sec:    4.89MB
```

NodeJS benchmark 3

```
wrk -t10 -c1000 -d60s http://127.0.0.1:1337/

Running 1m test @ http://127.0.0.1:1337/
  10 threads and 1000 connections
  Thread Stats   Avg       Stdev     Max        +/- Stdev
    Latency      28.24ms   4.74ms    287.73ms   92.83%
    Req/Sec      3.49k     504.77    10.52k     82.76%
  2074503 requests in 1.00m, 294.78MB read
Requests/sec:    34521.56
Transfer/sec:    4.91MB

  Thread Stats   Avg       Stdev     Max        +/- Stdev
    Latency      28.46ms   3.81ms    290.12ms   91.90%
    Req/Sec      3.46k     561.03    7.61k      89.30%
  2069600 requests in 1.00m, 294.08MB read
Requests/sec:    34437.34
Transfer/sec:    4.89MB

  Thread Stats   Avg       Stdev     Max        +/- Stdev
    Latency      30.44ms   4.38ms    295.49ms   88.26%
    Req/Sec      3.27k     488.96    5.88k      76.06%
  1942947 requests in 1.00m, 276.09MB read
Requests/sec:    32350.29
Transfer/sec:    4.60MB
```

There is also GO 1.9.1

TEST

WS Server

GO test

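```go
package main

import (
	"log"
	"net/http"

	"github.com/gobwas/ws"
	"github.com/gobwas/ws/wsutil"
)

// Echo server on port 4375: every HTTP request is upgraded to a WebSocket
// and each client message is sent back unchanged with the same opcode.
func main() {
	log.Fatal(http.ListenAndServe(":4375", http.HandlerFunc(
		func(w http.ResponseWriter, r *http.Request) {
			conn, _, _, err := ws.UpgradeHTTP(r, w)
			if err != nil {
				// Handshake failed: there is no usable connection.
				return
			}
			go func() {
				defer conn.Close()
				for {
					msg, op, err := wsutil.ReadClientData(conn)
					if err != nil {
						// Client disconnected or sent an invalid frame.
						return
					}
					if err := wsutil.WriteServerMessage(conn, op, msg); err != nil {
						return
					}
				}
			}()
		})))
}
```

GO benchmark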

```
thor --amount 5000 ws://curex.ll:4375/

Thou shall:
- Spawn 4 workers.
- Create all the concurrent/parallel connections.
- Smash 5000 connections with the mighty Mjölnir.

Online               103095 milliseconds
Time taken           103095 milliseconds
Connected            4949
Disconnected         0
Failed               5000
Total transferred    5.93MB
Total received       5.46MB

Durations (ms):
                     min     mean    stddev  median  max
Handshaking          183     7789    13288   3281    102595
Latency              NaN     NaN     NaN     NaN     NaN

Percentile (ms):
                     50%    66%    75%    80%    90%    95%    98%    98%    100%
Handshaking          3281   7256   7536   15123  15541  15706  63661  65361  102595
Latency              NaN    NaN    NaN    NaN    NaN    NaN    NaN    NaN    NaN

Received errors:
4949x continuation frame cannot follow current opcode
51x   read ECONNRESET
```

GO benchmark 2

```
thor --amount 20000 -W 10 ws://curex.ll:4375/echo

Thou shall:
- Spawn 10 workers.
- Create all the concurrent/parallel connections.
- Smash 20000 connections with the mighty Mjölnir.

Online               59682 milliseconds
Time taken           59686 milliseconds
Connected            20000
Disconnected         0
Failed               0
Total transferred    24.43MB
Total received       2.9MB

Durations (ms):
                     min     mean    stddev  median  max
Handshaking          0       304     626     2       7653
Latency              NaN     NaN     NaN     NaN     NaN

Percentile (ms):
                     50%    66%    75%    80%    90%    95%    98%    98%    100%
Handshaking          2      9      395    672    1201   1430   1719   3443   7653
Latency              NaN    NaN    NaN    NaN    NaN    NaN    NaN    NaN    NaN
```
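The errors in the first Go run ("continuation frame cannot follow current opcode") refer to RFC 6455 framing. Each frame starts with a FIN bit and a 4-bit opcode (0x0 continuation, 0x1 text, 0x2 binary, 0x8 close, 0x9 ping, 0xA pong), followed by the payload length; a continuation frame is only valid after a data frame whose FIN bit was clear. A minimal Go sketch that encodes one unfragmented, unmasked server-to-client frame. `encodeFrame` is a hypothetical helper, not part of gobwas/ws, and it leaves out masking, which only client-to-server frames use.

```go
package main

import (
	"encoding/binary"
	"fmt"
)

// Opcodes defined by RFC 6455.
const (
	opContinuation byte = 0x0
	opText         byte = 0x1
	opBinary       byte = 0x2
	opClose        byte = 0x8
	opPing         byte = 0x9
	opPong         byte = 0xA
)

// encodeFrame builds one final (FIN=1), unmasked frame as a server sends it.
// A fragmented message would instead clear FIN on all but the last frame
// and use opContinuation for every frame after the first.
func encodeFrame(opcode byte, payload []byte) []byte {
	frame := []byte{0x80 | opcode} // FIN bit + opcode
	n := len(payload)
	switch {
	case n <= 125: // length fits in the 7-bit field
		frame = append(frame, byte(n))
	case n <= 0xFFFF: // 126 + 16-bit big-endian length
		ext := make([]byte, 2)
		binary.BigEndian.PutUint16(ext, uint16(n))
		frame = append(append(frame, 126), ext...)
	default: // 127 + 64-bit big-endian length
		ext := make([]byte, 8)
		binary.BigEndian.PutUint64(ext, uint64(n))
		frame = append(append(frame, 127), ext...)
	}
	return append(frame, payload...)
}

func main() {
	frame := encodeFrame(opText, []byte("test!"))
	fmt.Printf("% x\n", frame) // 81 05 74 65 73 74 21
}
```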

TEST

HTTP Server

GO test

```go
package main

import (
	"flag"
	"fmt"
	"log"

	"github.com/valyala/fasthttp"
)

var (
	addr     = flag.String("addr", ":1080", "TCP address to listen to")
	compress = flag.Bool("compress", false, "Enable transparent response compression")
)

func main() {
	flag.Parse()

	h := requestHandler
	if *compress {
		// Wrap the handler so responses are compressed when the client accepts it.
		h = fasthttp.CompressHandler(h)
	}

	if err := fasthttp.ListenAndServe(*addr, h); err != nil {
		log.Fatalf("Error in ListenAndServe: %s", err)
	}
}

// requestHandler writes a fixed 5-byte body for every request.
func requestHandler(ctx *fasthttp.RequestCtx) {
	fmt.Fprintf(ctx, "test!")
}
```

GO benchmark

```
ab -c 10 -n 1000000 -k http://curex.ll:1080/

This is ApacheBench, Version 2.3 <$Revision: 1706008 $>

Concurrency Level:      10
Time taken for tests:   5.291 seconds
Complete requests:      1000000
Failed requests:        0
Keep-Alive requests:    1000000
Total transferred:      163000000 bytes
HTML transferred:       5000000 bytes
Requests per second:    188986.79 [#/sec] (mean)
Time per request:       0.053 [ms] (mean)
Time per request:       0.005 [ms] (mean, across all concurrent requests)
Transfer rate:          30082.86 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        0    0   0.0      0       0
Processing:     0    0   0.0      0      15
Waiting:        0    0   0.0      0      15
Total:          0    0   0.0      0      15

Percentage of the requests served within a certain time (ms)
  50%      0
  66%      0
  75%      0
  80%      0
  90%      0
  95%      0
  98%      0
  99%      0
 100%     15 (longest request)
```

GO benchmark 2

```
wrk -t1 -c1000 -d60s http://127.0.0.1:1080/

Running 1m test @ http://127.0.0.1:1080/
  1 threads and 1000 connections
  Thread Stats   Avg       Stdev     Max        +/- Stdev
    Latency      4.43ms    2.48ms    203.58ms   91.59%
    Req/Sec      116.78k   17.73k    181.35k    74.45%
  6975630 requests in 1.00m, 0.90GB read
Requests/sec:    116085.89
Transfer/sec:    15.39MB

  Thread Stats   Avg       Stdev     Max        +/- Stdev
    Latency      4.68ms    1.53ms    75.21ms    88.31%
    Req/Sec      111.63k   9.22k     129.71k    82.71%
  6668636 requests in 1.00m, 0.86GB read
Requests/sec:    110964.66
Transfer/sec:    14.71MB

  Thread Stats   Avg       Stdev     Max        +/- Stdev
    Latency      4.06ms    0.92ms    49.96ms    82.41%
    Req/Sec      126.12k   4.39k     139.98k    80.34%
  7521656 requests in 1.00m, 0.97GB read
Requests/sec:    125357.36
Transfer/sec:    16.62MB
```

GO benchmark 3

wrk -t10 -c1000 -d60s http://127.0.0.1:1080/

Running 1m test @ http://127.0.0.1:1080/

10 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 4.95ms 3.65ms 214.84ms 86.45%

Req/Sec 19.22k 4.30k 57.91k 70.20%

11466434 requests in 1.00m, 1.48GB read

Requests/sec: 190835.48

Transfer/sec: 25.30MB

Thread Stats Avg Stdev Max +/- Stdev

Latency 5.15ms 3.04ms 206.87ms 80.61%

Req/Sec 18.95k 4.04k 44.79k 65.71%

11290473 requests in 1.00m, 1.46GB read

Requests/sec: 187902.38

Transfer/sec: 24.91MB

Thread Stats Avg Stdev Max +/- Stdev

Latency 5.41ms 3.36ms 214.86ms 81.62%

Req/Sec 17.89k 4.25k 65.17k 66.50%

10666600 requests in 1.00m, 1.38GB read

Requests/sec: 177480.72

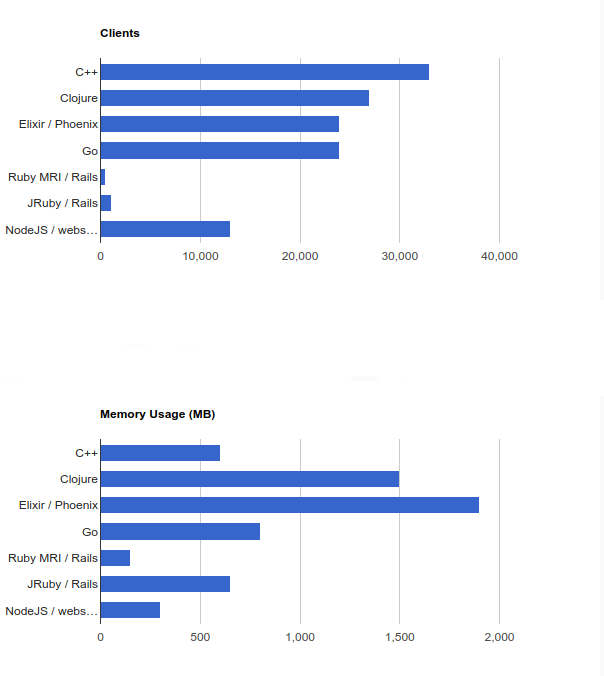

Transfer/sec: 23.53MBWebsocket Shootout: Clojure, C++, Elixir, Go, NodeJS, and Ruby

https://hashrocket.com/blog/posts/websocket-shootout

How do we scale all this?

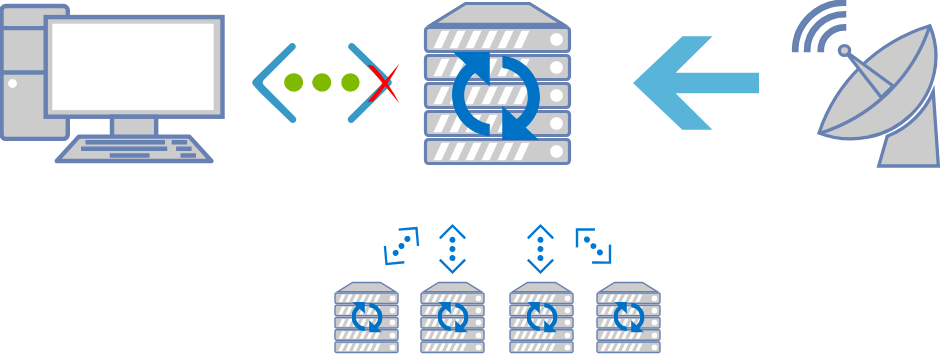

An important nuance: a script that handles socket connections differs from an ordinary PHP script, which runs from start to finish over and over again, once per page load.

A WebSocket script, by contrast, must run indefinitely. In other words, we start a PHP script containing an infinite loop in which messages are received and sent over the WebSocket protocol.

Worth noting

Please don't fork processes:

PHP was not designed for that!

Supervisord

```ini
[program:websocket]
; Process name, e.g. as shown by supervisorctl; with numprocs > 1
; it must include process_num so that every copy gets a unique name
process_name=%(program_name)s_%(process_num)02d
; How many copies of the process to run (default: 1)
numprocs=5
; Command that starts the program
command=/srv/curex.privatbank.ua/yii2/yii websocket/start
; Start the program when supervisord itself starts
autostart=true
; Restart the program whenever it exits (true = unconditionally;
; "unexpected" would restart only on unexpected exit codes)
autorestart=true
; Send the process's STDERR to supervisord on its STDOUT (equivalent of /the/program 2>&1)
redirect_stderr=true
; Seconds to wait for the process to exit (SIGCHLD) after sending stopsignal,
; after which supervisord sends SIGKILL
stopwaitsecs=60
; Signal used to stop the program
stopsignal=INT
; Path to the error log (ignored while redirect_stderr=true)
stderr_logfile=/tmp/websocket_err.log
; Path to the output log
stdout_logfile=/tmp/websocket_out.log
; Maximum size of the output log before it is rotated
stdout_logfile_maxbytes=100MB
; Number of rotated output-log backups to keep
stdout_logfile_backups=30
; Max bytes written to the capture FIFO when stdout is in capture mode
stdout_capture_maxbytes=1MB
; Number of consecutive failed start attempts before supervisord gives up
startretries=9999999
```

The result looks like this:

Client

Server

API

Выводы

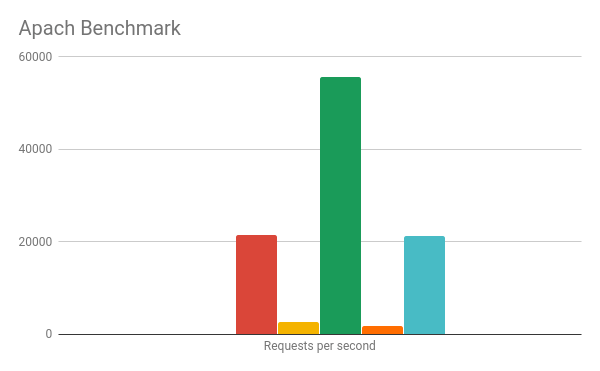

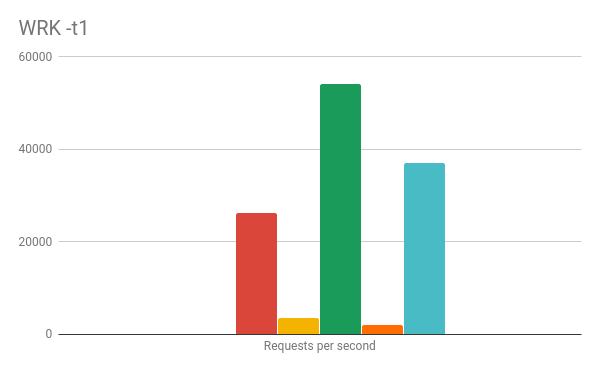

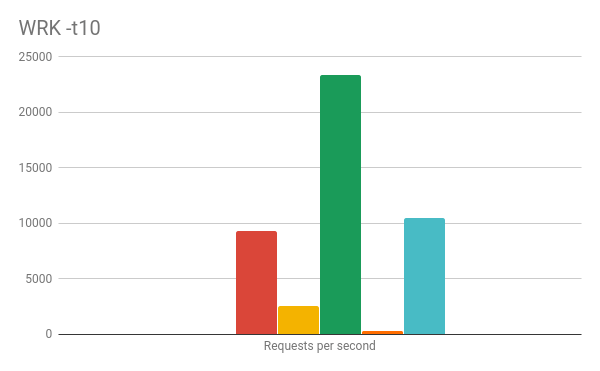

Итоговый benchmark

Итоговый benchmark

WS SERVER

Итоговый benchmark

WS Server

Итоговый benchmark

HTTP Server

Итоговый benchmark

HTTP Server

Итоговый benchmark

HTTP Server

Conclusions

- Switching from PHP to NodeJS just for WebSockets is not worth it;

- For small projects, or projects where an additional PHP extension cannot be installed, use the workerman library;

- For high-load projects, use the swoole library;

- The back end should notify clients about new messages, rather than the front end polling for them.
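The last point (push, don't poll) is the core of a WebSocket back end: the server keeps a set of subscribers and pushes each new message to all of them as soon as it arrives. A minimal in-memory Go sketch of that fan-out, with channels standing in for client connections. `Hub`, `Subscribe`, `Unsubscribe` and `Publish` are hypothetical names, not an API of workerman, swoole or any library mentioned here.

```go
package main

import (
	"fmt"
	"sync"
)

// Hub fans out published messages to every current subscriber.
type Hub struct {
	mu   sync.Mutex
	subs map[chan string]struct{}
}

func NewHub() *Hub {
	return &Hub{subs: make(map[chan string]struct{})}
}

// Subscribe registers a new subscriber; in a real server this would be
// one connected WebSocket client.
func (h *Hub) Subscribe() chan string {
	ch := make(chan string, 16) // small buffer so one slow client does not block Publish
	h.mu.Lock()
	h.subs[ch] = struct{}{}
	h.mu.Unlock()
	return ch
}

// Unsubscribe removes a subscriber and closes its channel.
func (h *Hub) Unsubscribe(ch chan string) {
	h.mu.Lock()
	delete(h.subs, ch)
	h.mu.Unlock()
	close(ch)
}

// Publish pushes msg to all subscribers and returns how many received it.
// A subscriber whose buffer is full is skipped instead of blocking everyone.
func (h *Hub) Publish(msg string) int {
	h.mu.Lock()
	defer h.mu.Unlock()
	delivered := 0
	for ch := range h.subs {
		select {
		case ch <- msg:
			delivered++
		default: // buffer full: drop the message for this subscriber
		}
	}
	return delivered
}

func main() {
	hub := NewHub()
	alice, bob := hub.Subscribe(), hub.Subscribe()

	n := hub.Publish("new message")
	fmt.Println("delivered to", n, "subscribers:", <-alice, "/", <-bob)
}
```

Compared with polling, the client makes no requests while nothing happens, and a new message reaches it as soon as `Publish` runs instead of at the next polling interval.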

References

- http://www.serverframework.com/asynchronousevents/2011/01/time-wait-and-its-design-implications-for-protocols-and-scalable-servers.html

- https://vincent.bernat.im/en/blog/2014-tcp-time-wait-state-linux

- http://fx-files.ru/archives/602

- https://habr.com/post/331462/

- https://habr.com/post/337298/

- https://ru.stackoverflow.com/questions/496002/Сопрограммы-корутины-coroutine-что-это

- https://habr.com/post/164173/

- https://www.digitalocean.com/community/tutorials/how-to-benchmark-http-latency-with-wrk-on-ubuntu-14-04

- https://hharek.ru/веб-сокет-сервер-на-phpdaemon

- http://r00ssyp.blogspot.com/2016/07/phpdaemon-centos7-php-5.html

- https://docs.google.com/spreadsheets/d/1Jb4ymhYnpwY53IXsf3n2GElUreiKQYIRjC3KYBCZPAs

Questions?

Good luck!

WebSockets 2018 episode 2 back-end php

By James Jason
