Phase-type models in life insurance

and empirical dynamic modelling

Patrick J. Laub

University of Melbourne

Outline

- Mention some past work

- Phase-type distributions in life insurance

- Describe some current work (EDM)

Phil Pollett

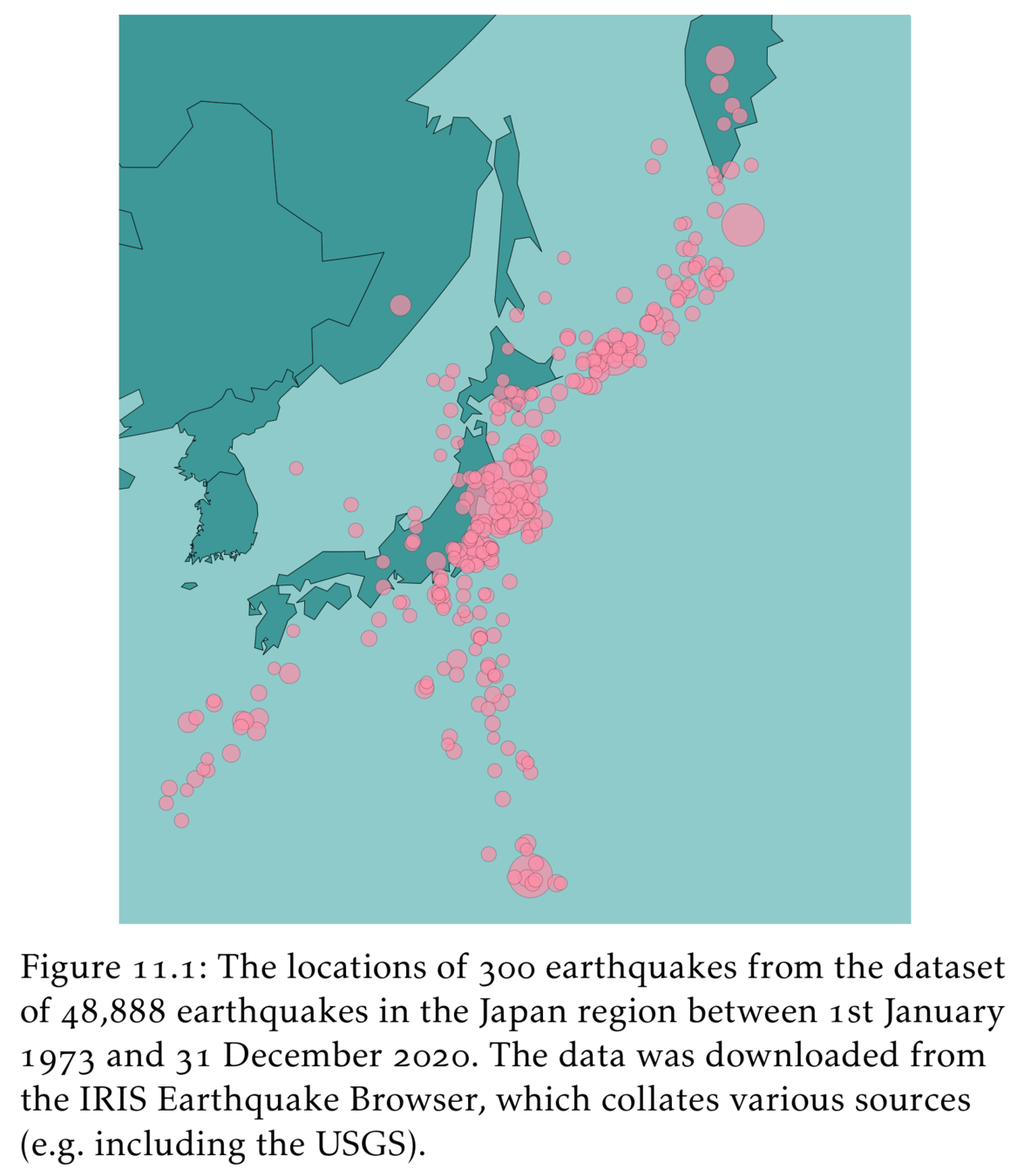

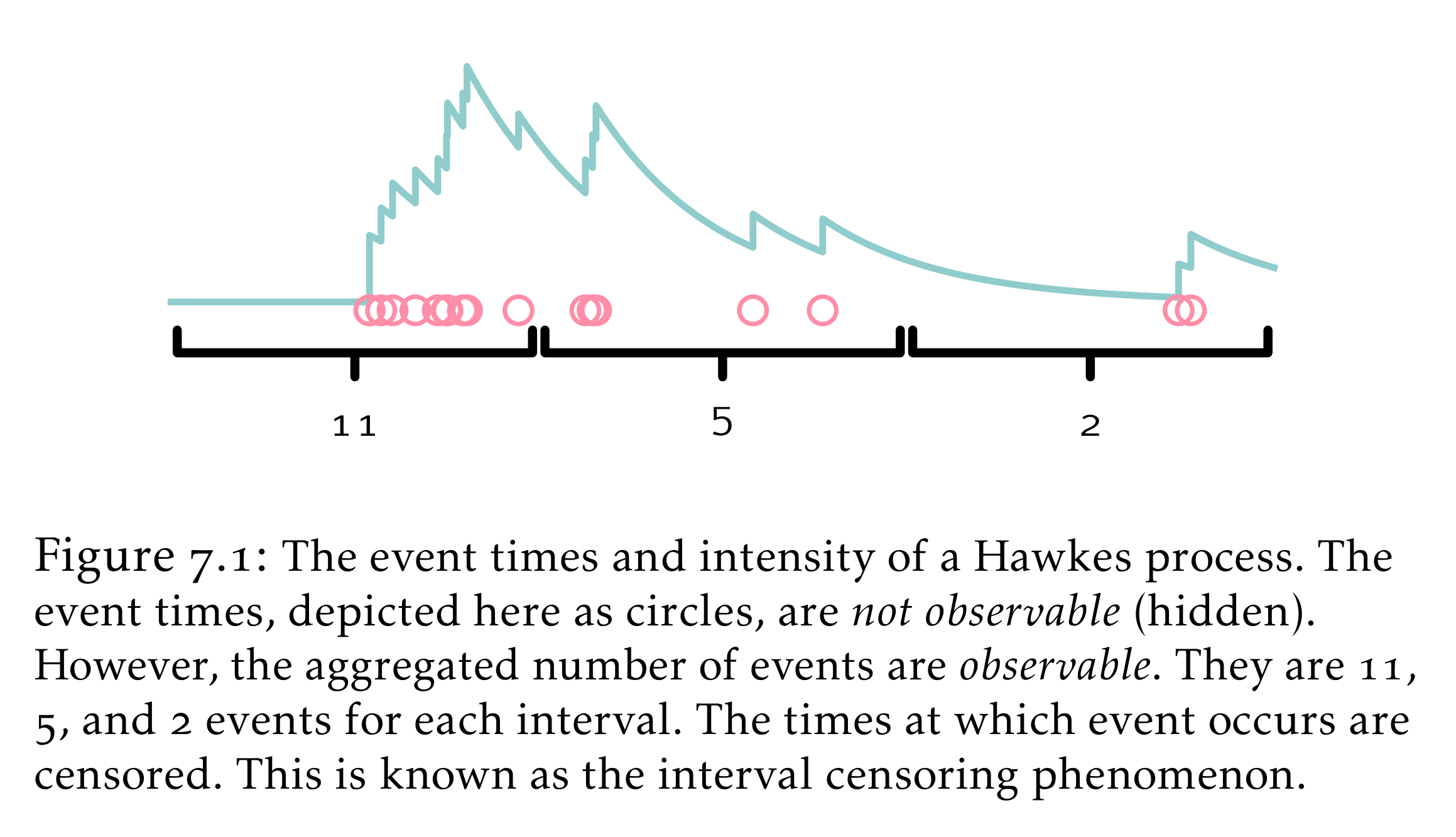

Hawkes processes

Sums of random variables

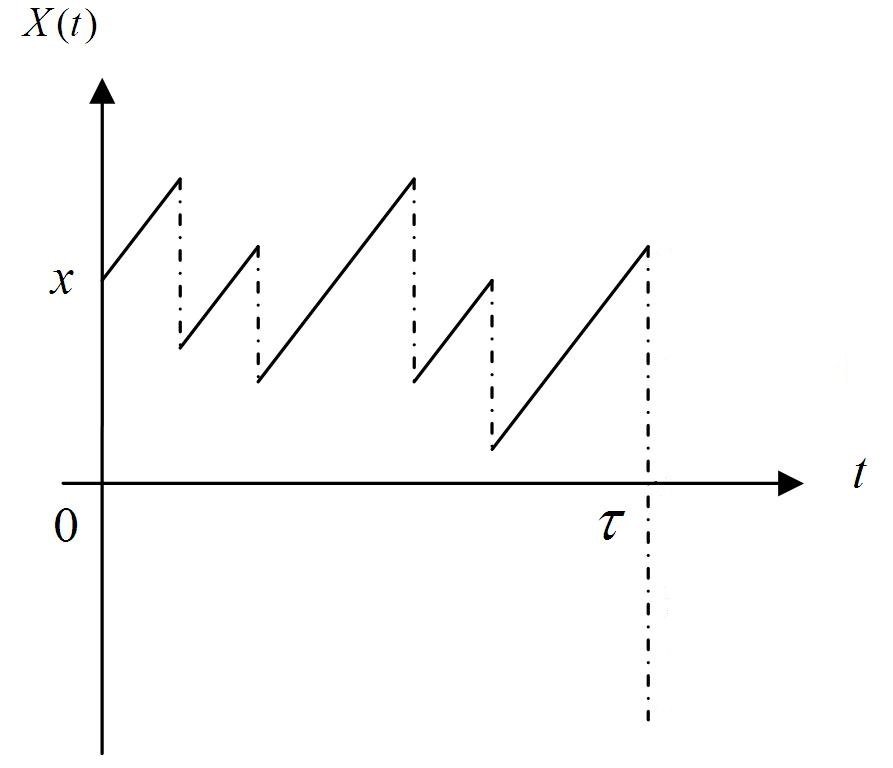

Cramér-Lundberg model

Useful for:

- Ruin probability (finite time)

- Ruin probability (infinite time)

- Stop-loss premiums

- Option pricing

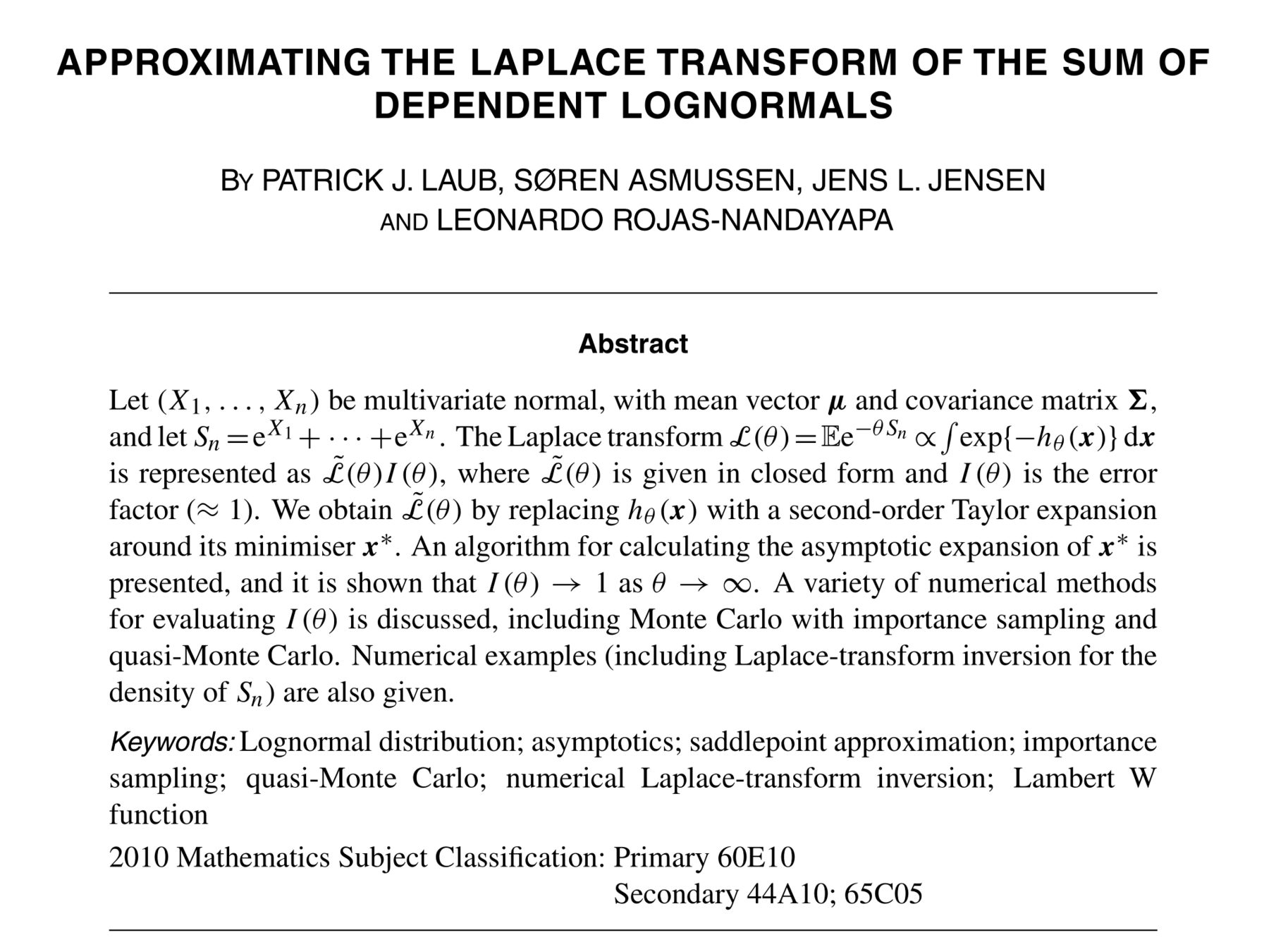

Sum of lognormals distributions

where

Advances in Applied Probability, 48(A)

"I used to be a chick[en] but am less so as I get older. After all, I have to die from something."

Savage condition

Hashorva, E. (2005), 'Asymptotics and bounds for multivariate Gaussian tails', Journal of Theoretical Probability

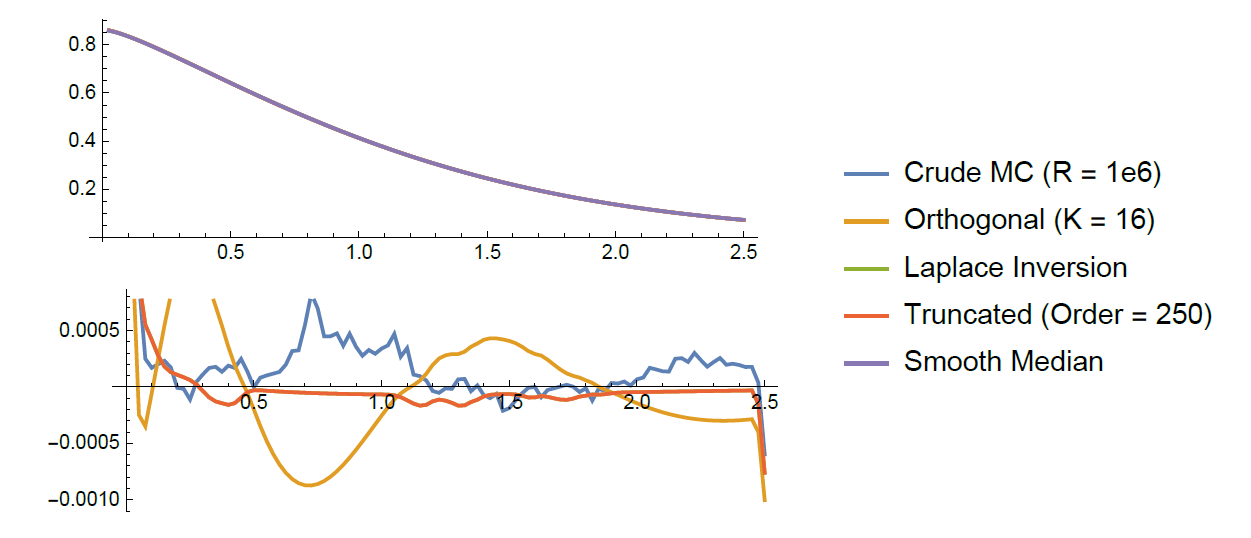

Pierre-Olivier Goffard, Patrick J. Laub (2020), Orthogonal polynomial expansions to evaluate stop-loss premiums, Journal of Computational and Applied Mathematics

Orthogonal polynomials

Approximate S using orthogonal polynomial expansion

where

Pierre-O Goffard

Stop-loss premiums

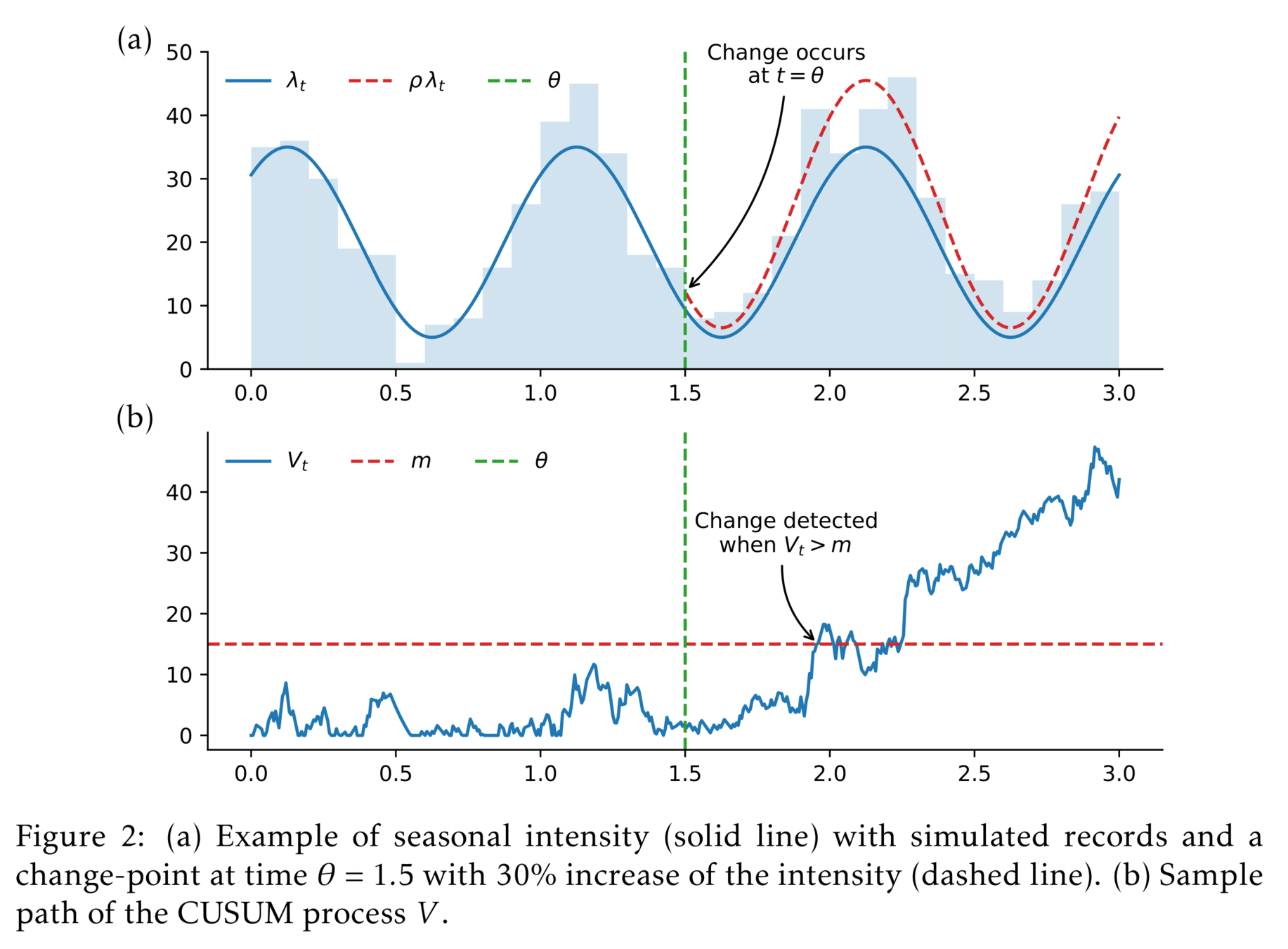

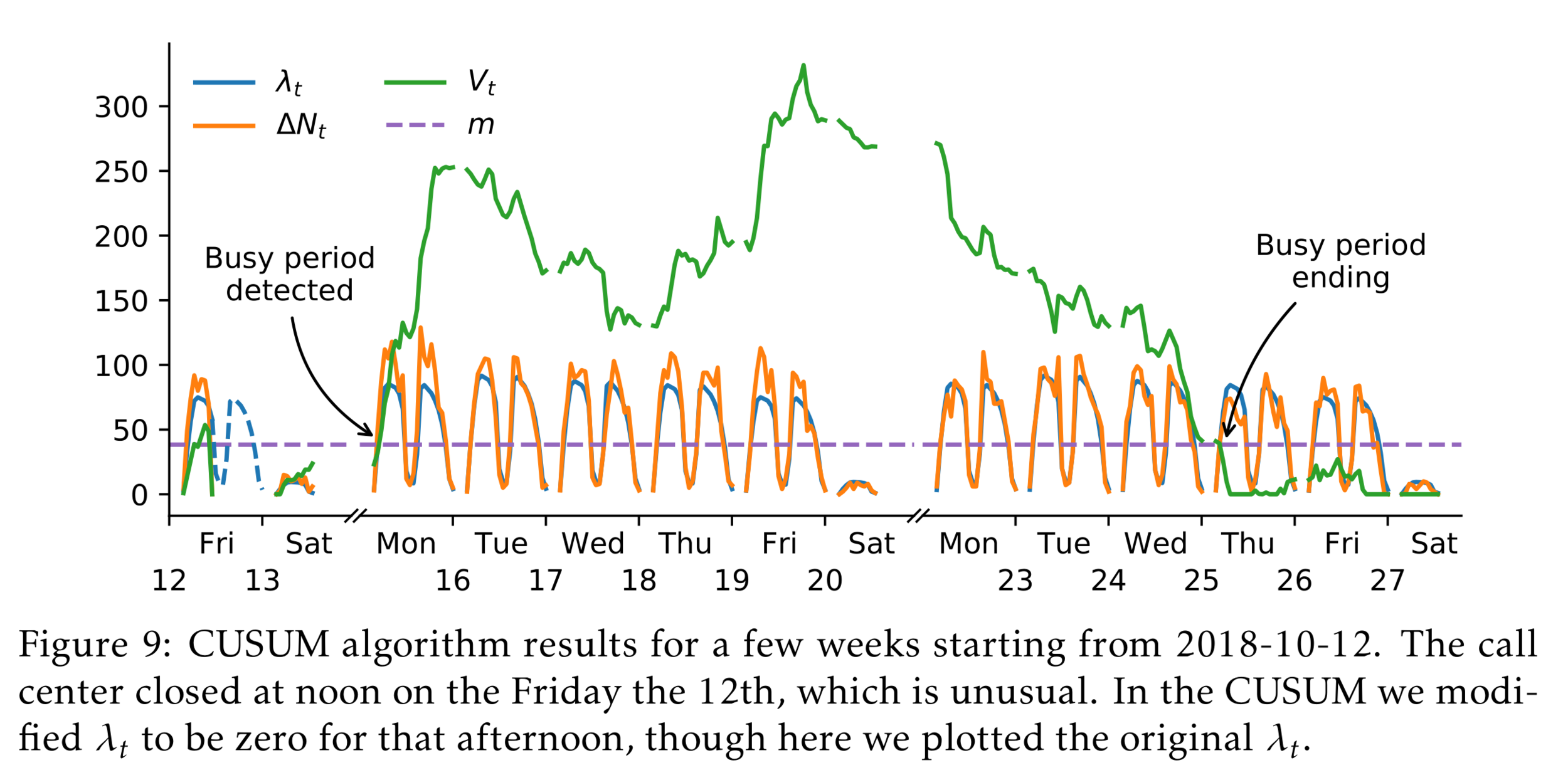

Change-point detection

Approximate Bayesian Computation

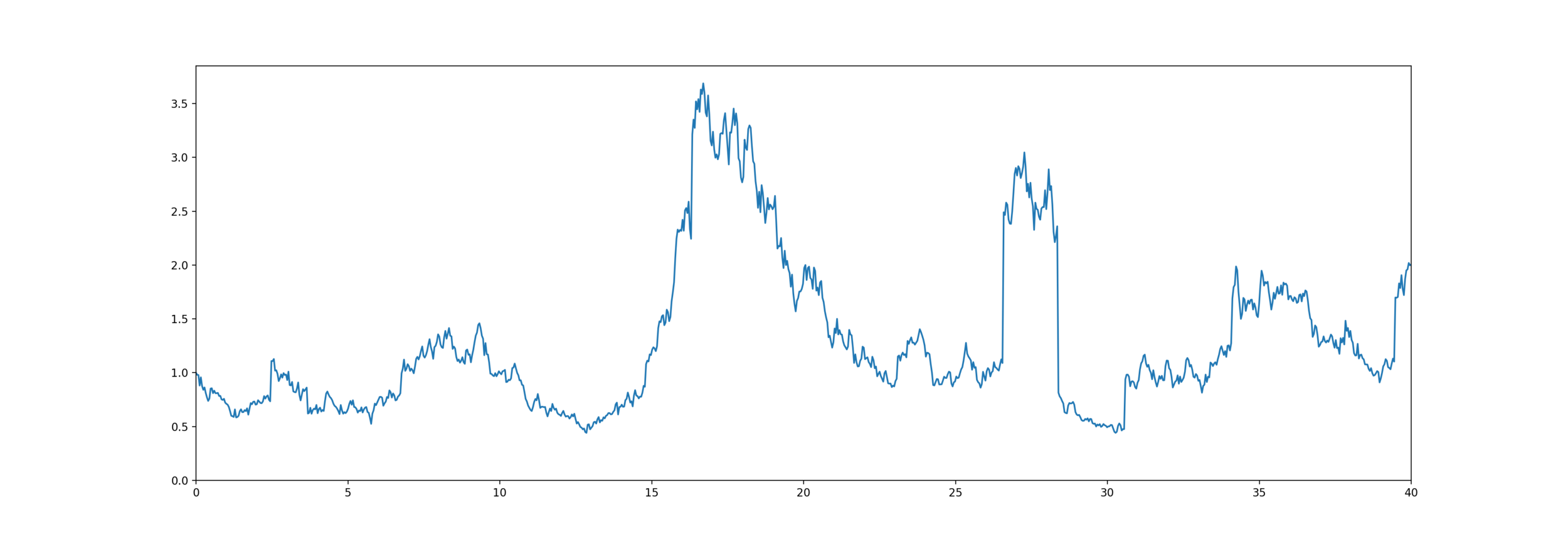

Time-varying example

- Claims form a Poisson process point with arrival rate \( \lambda(t) = a+b[1+\sin(2\pi c t)] \).

- The observations are \( X_s = \sum_{i = N_{s-1}}^{N_{s}}U_i \).

- Frequencies are \(\mathsf{CPoisson}(a=1,b=5,c=\frac{1}{50})\) and sizes are \(U_i \sim \mathsf{Lognormal}(\mu=0, \sigma=0.5)\).

- Claims form a Poisson process point with arrival rate \( \lambda(t) = a+b[1+\sin(2\pi c t)] \).

- The observations are \( X_s = \sum_{i = N_{s-1}}^{N_{s}}U_i \).

- Frequencies are \(\mathsf{CPoisson}(a=1,b=5,c=\frac{1}{50})\) and sizes are \(U_i \sim \mathsf{Lognormal}(\mu=0, \sigma=0.5)\).

- ABC posteriors based on 50 \(X\)'s and on 250 \(X\)'s with uniform priors.

Wasserstein distance?

where \(\mathcal{S}_t\) denotes the set of all the permutations of \(\{1,\ldots, t\}\).

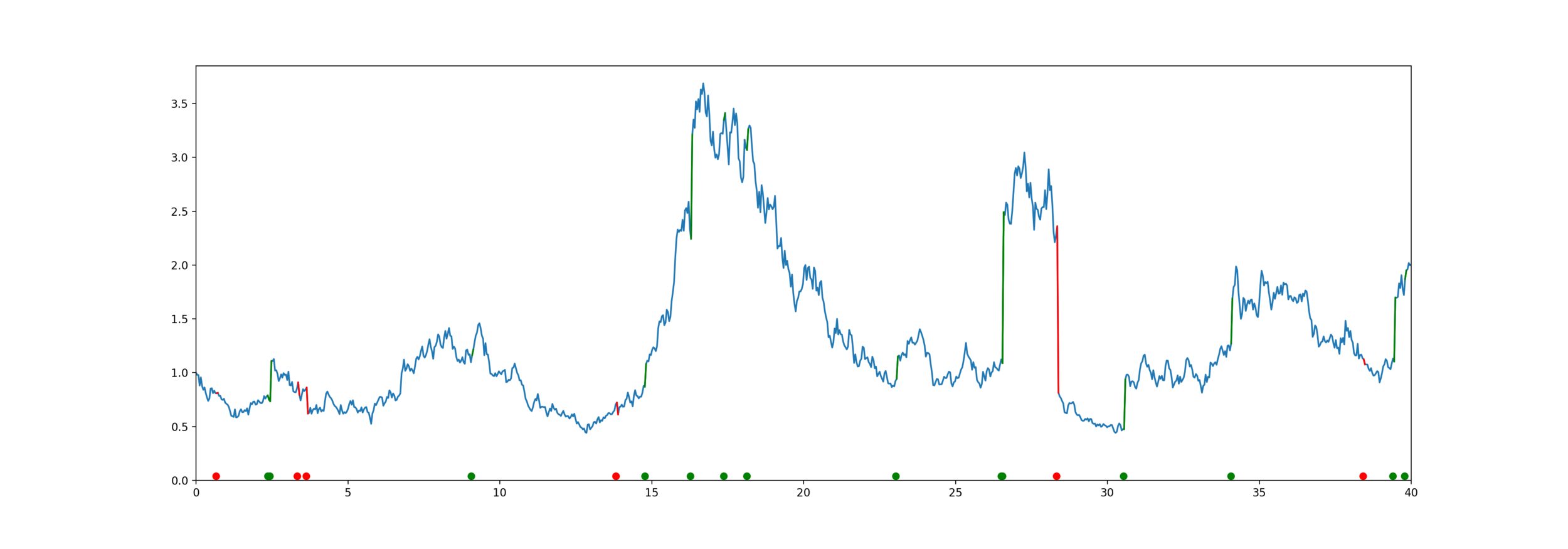

One-dimensional data:

Sorting is only \( \mathcal{O}(n \log n) \)

Compare \(\boldsymbol{x}_{\text{obs}} \sim f(\cdot, \boldsymbol{\theta}) \) to \(\boldsymbol{y} \sim f(\cdot, \boldsymbol{\theta}) \)

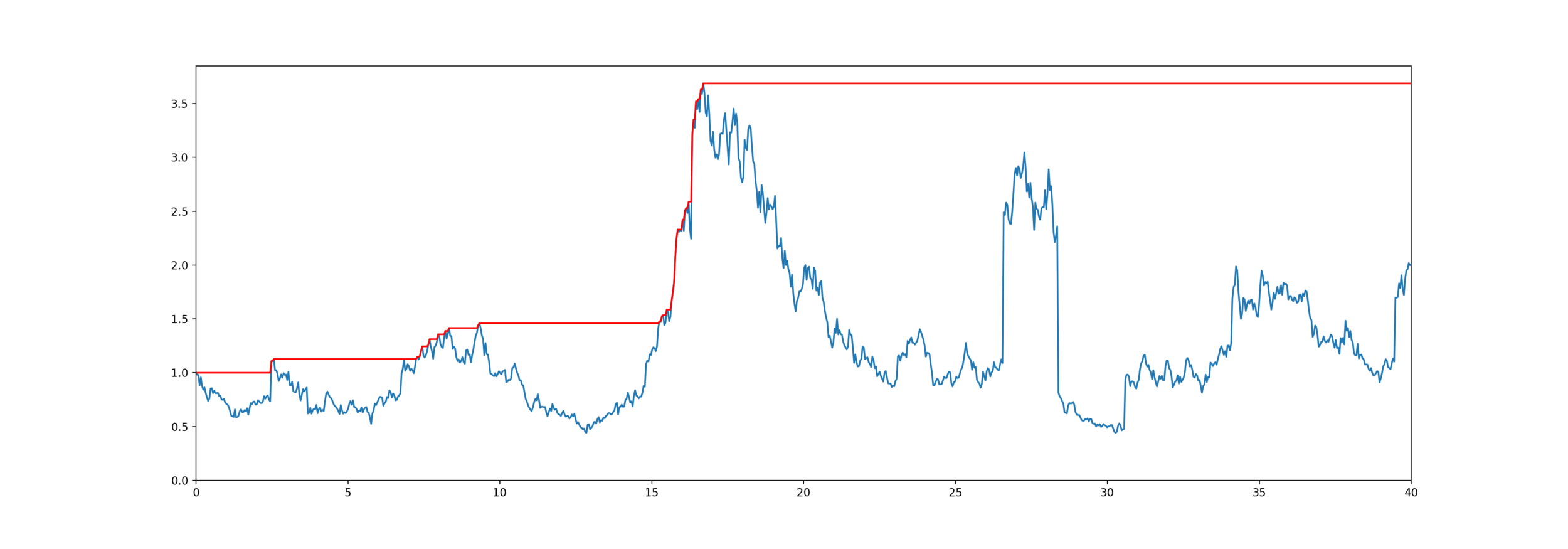

Normal Wasserstein distance ignores time

\( L^1 \) distance strictly enforces time

- Claims form a Poisson process point with arrival rate \( \lambda(t) = a+b[1+\sin(2\pi c t)] \).

- The observations are \( X_s = \sum_{i = N_{s-1}}^{N_{s}}U_i \).

- Frequencies are \(\mathsf{CPoisson}(a=1,b=5,c=\frac{1}{50})\) and sizes are \(U_i \sim \mathsf{Lognormal}(\mu=0, \sigma=0.5)\).

- ABC posteriors based on 50 \(X\)'s and on 250 \(X\)'s with uniform priors.

We can scale time to balance the two axes

Jevgenijs Ivanovs

Guaranteed Minimum Death Benefit

Equity-linked life insurance

High Water Death Benefit

Equity-linked life insurance

Model for mortality and equity

The customer lives for years,

The stock price is an exponential jump diffusion,

Exponential PH-Jump Diffusion

Wiener-Hopf Factorisation

-

is an exponential random variable

- is a Lévy process

Doesn't work for deterministic time

IME Papers by Gerber, Hans U., Elias S. W. Shiu, and Hailiang Yang:

- Valuing equity-linked death benefits and other contingent options: A discounted density approach (2012)

- Valuing equity-linked death benefits in jump diffusion models (2013)

- Geometric stopping of a random walk and its application to valuation of equity-linked death benefits (2015)

Problem: to model mortality via phase-type

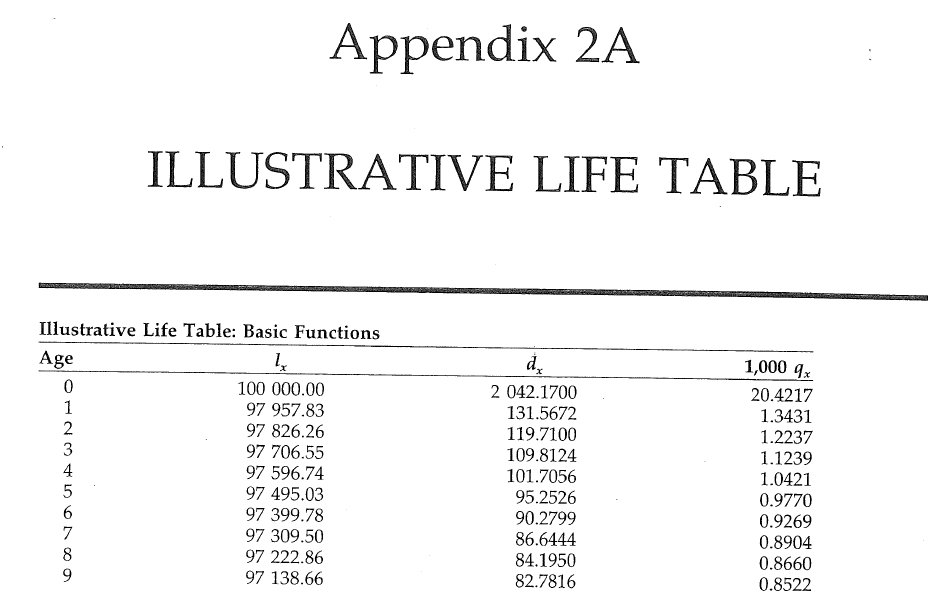

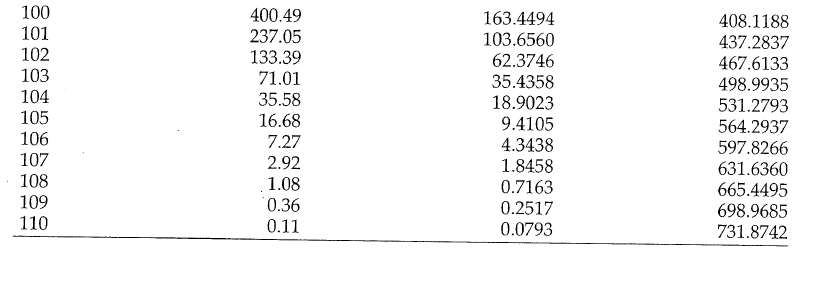

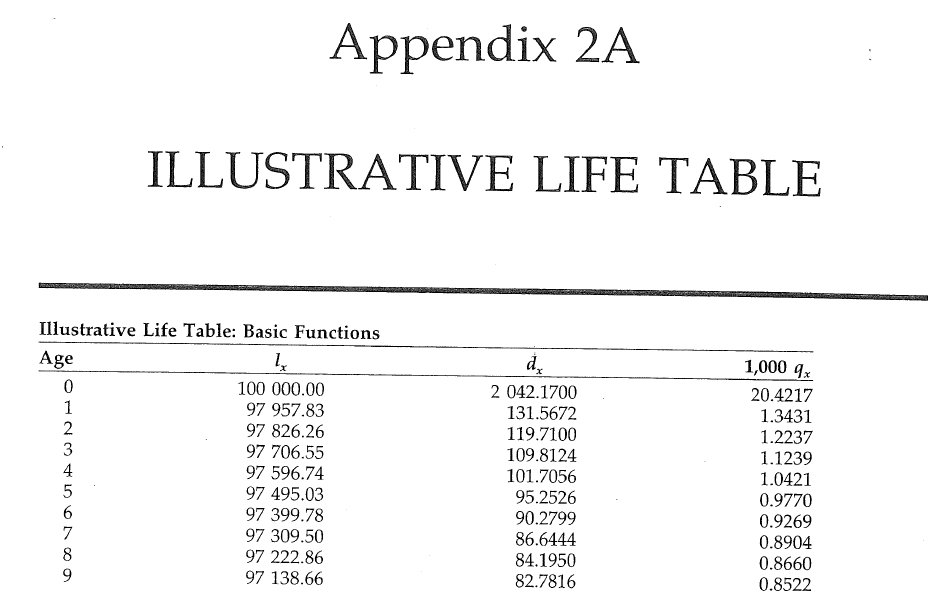

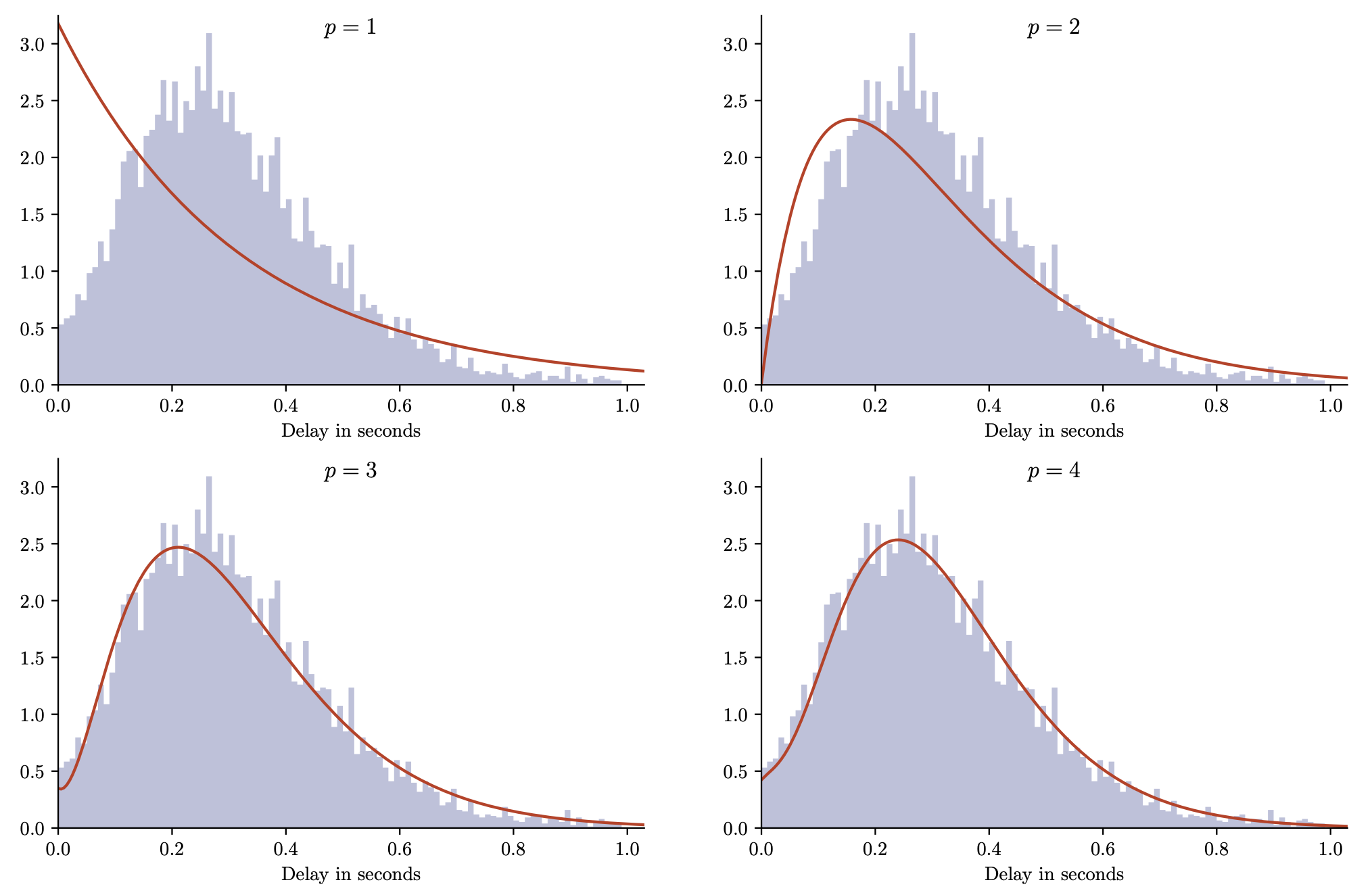

Bowers et al (1997), Actuarial Mathematics, 2nd Edition

Example Markov process

Sojourn times

Sojourn times are the random lengths of time spent in each state

Phase-type definition

Markov process State space

- Initial distribution

- Sub-transition matrix

- Exit rates

Example

Markov process State space

Phase-type generalises...

- Exponential distribution

- Sums of exponentials (Erlang distribution)

- Mixtures of exponentials (hyperexponential distribution)

Phase-type properties

Matrix exponential

Density and tail

Moments

Laplace transform

More properties

Closure under addition, minimum, maximum

... and under conditioning

When to use phase-type?

Your problem has "flowchart" structure.

"Coxian distribution"

"Calendar" Age

"Physical" age

X.S. Lin & X. Liu (2007) , M. Govorun, G. Latouche, & S. Loisel (2015).

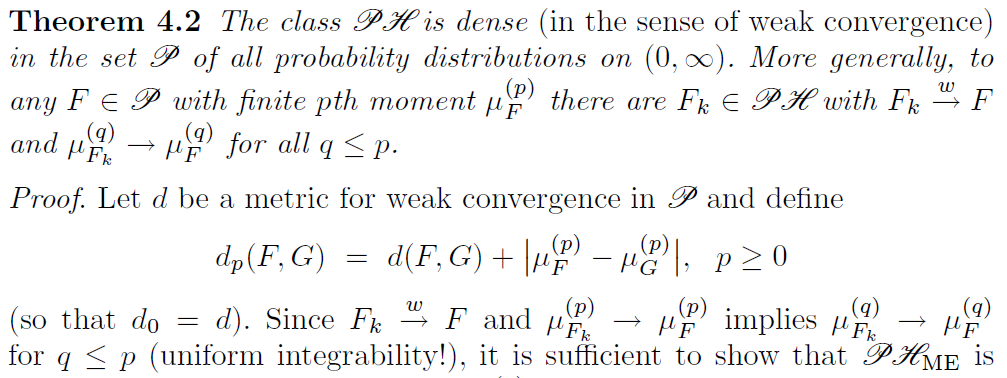

Class of phase-types is dense

S. Asmussen (2003), Applied Probability and Queues, 2nd Edition, Springer

Class of phase-types is dense

Why does that work?

Can make a phase-type look like a constant value

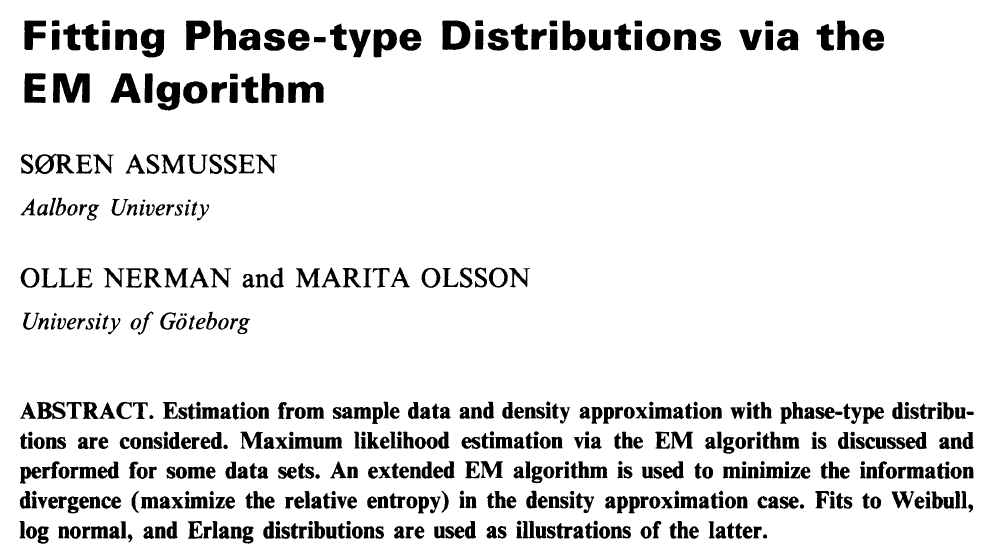

How to fit them?

Observations:

Derivatives?

Model (p.d.f.):

How to fit them?

Hidden values:

\(B_i\) number of MC's starting in state \(i\)

\(Z_i\) total time spend in state \(i\)

\(N_{ij}\) number of transitions from state \(i\) to \(j\)

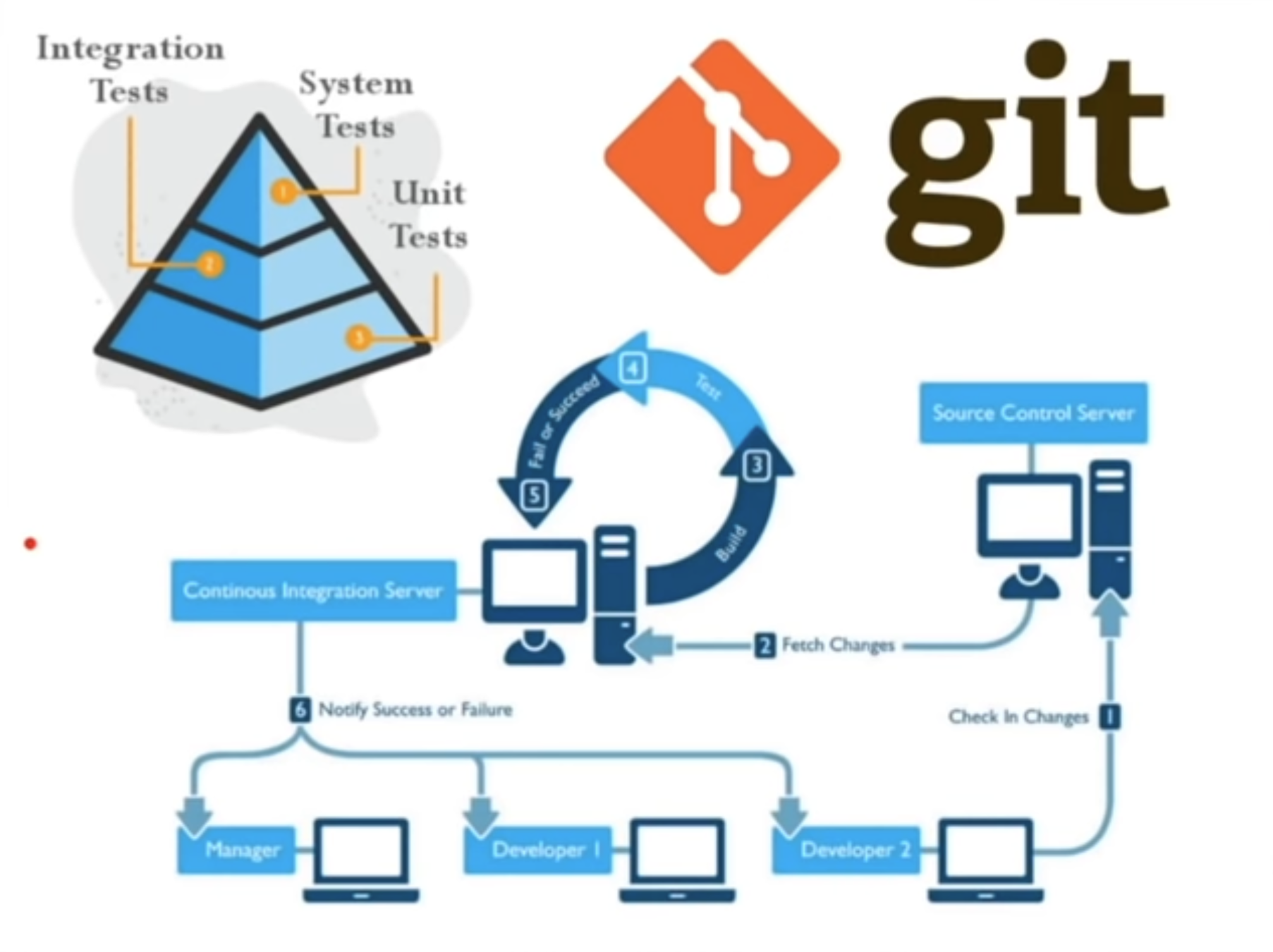

Diving into the source

- Single threaded

- Homegrown matrix handling (e.g. all dense)

- Computational "E" step

- Instead solve a large system of DEs

- Hard to read or modify (e.g. random function)

C to Julia

void rungekutta(int p, double *avector, double *gvector, double *bvector,

double **cmatrix, double dt, double h, double **T, double *t,

double **ka, double **kg, double **kb, double ***kc)

{

int i, j, k, m;

double eps, h2, sum;

i = dt/h;

h2 = dt/(i+1);

init_matrix(ka, 4, p);

init_matrix(kb, 4, p);

init_3dimmatrix(kc, 4, p, p);

if (kg != NULL)

init_matrix(kg, 4, p);

...

for (i=0; i < p; i++) {

avector[i] += (ka[0][i]+2*ka[1][i]+2*ka[2][i]+ka[3][i])/6;

bvector[i] += (kb[0][i]+2*kb[1][i]+2*kb[2][i]+kb[3][i])/6;

for (j=0; j < p; j++)

cmatrix[i][j] +=(kc[0][i][j]+2*kc[1][i][j]+2*kc[2][i][j]+kc[3][i][j])/6;

}

}

}

This function: 116 lines of C, built-in to Julia

Whole program: 1700 lines of C, 300 lines of Julia

# Run the ODE solver.

u0 = zeros(p*p)

pf = ParameterizedFunction(ode_observations!, fit)

prob = ODEProblem(pf, u0, (0.0, maximum(s.obs)))

sol = solve(prob, OwrenZen5())https://github.com/Pat-Laub/EMpht.jl

Lots of parameters to fit

- General

- Coxian distribution

In general, representation is not unique

A twist on the Coxian form

Canonical form 1

Problem: to model mortality via phase-type

Bowers et al (1997), Actuarial Mathematics, 2nd Edition

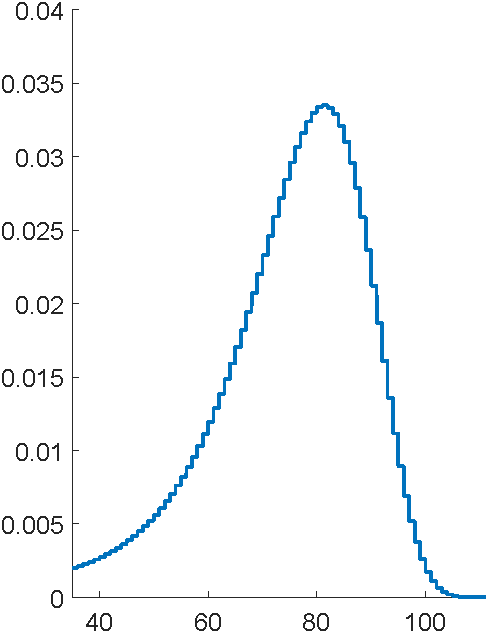

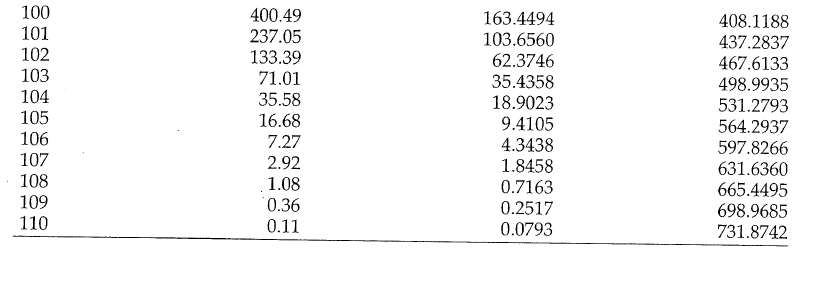

Final fit

using EMpht

lt = EMpht.parse_settings("life_table.json")[1]

phCF200 = empht(lt, p=200, ph_structure="CanonicalForm1")Another cool thing

Contract over a limited horizon

Can use Erlangization so

and phase-type closure under minimums

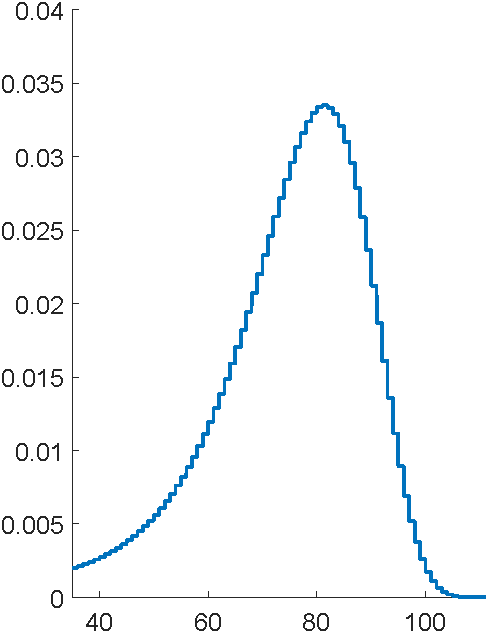

A. Vuorinen, The blockchain propagation process: a machine learning and matrix analytic approach

Phase-type for bitcoin

Heavy-tailed modeling?

Phase-types are always light-tailed

Can 'splice' together a Franken-distribution

Norwegian fire data

Take logarithms

Take logarithms and shift

logClaims = log.(claims)

logClaimsCentered = logClaims .- minimum(logClaims) .+ 1e-4

~, int, intweight = bin_observations(logClaimsCentered, 500)

sInt = EMpht.Sample(int=int, intweight=intweight)

ph = empht(sInt, p=5)

logClaims = log.(claims)

logClaimsCentered = logClaims .- minimum(logClaims) .+ 1e-4

~, int, intweight = bin_observations(logClaimsCentered, 500)

sInt = EMpht.Sample(int=int, intweight=intweight)

ph = empht(sInt, p=40)Take logarithms and shift

Albrecher and Bladt, Inhomogeneous phase-type distributions and heavy tails, Journal of Applied Probability

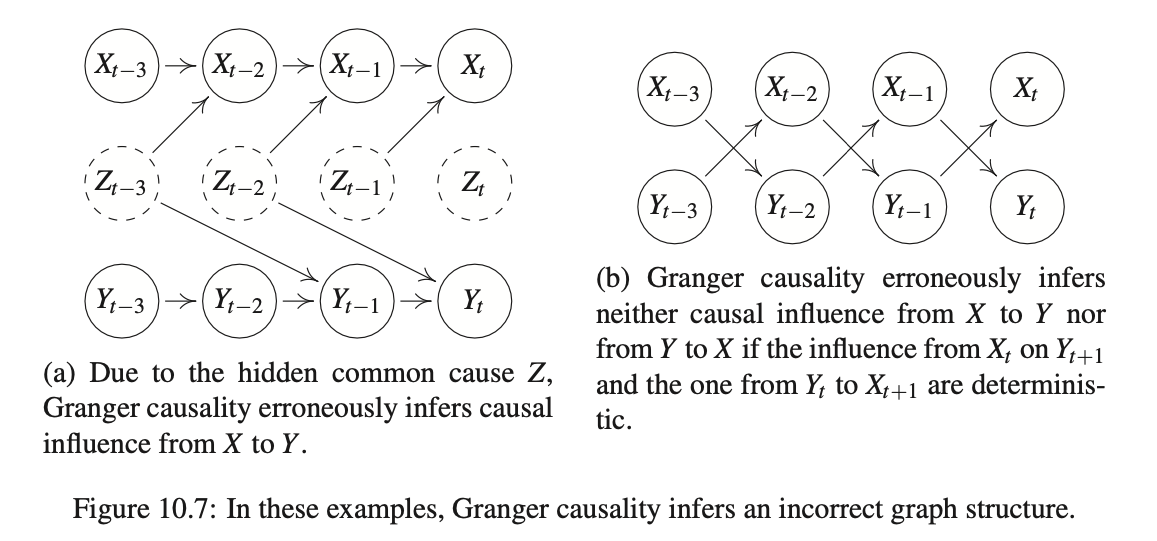

Causal analysis

Crime and temperature in Chicago

Empirical dynamic modelling

Quick Demo

Richard McElreath, Science as Amateur Software Development

Current projects

- EDM

- Missing data

- Alternative distance functions

- Hawkes process loss model with discounting

- Bayesian-model based (Rubin) causal inference for count data

Phase-type models in life insurance and empirical dynamic modelling

By plaub

Phase-type models in life insurance and empirical dynamic modelling

- 634