introduction to asynchronous programming with python and twisted

self.who()

fabio sangiovanni

@sanjioh

I work with computers!

Backend Developer and DevOps Engineer

@MailUp

My hobby is computers.

And travelling.

But, computers! <3

basic concepts

software properties

- CONCURRENCY: several tasks (units of work) can advance without waiting for each other to complete

- PARALLELISM: concurrency with tasks executed simultaneously, literally at the same physical instant

basic concepts

OPERATING systems

- PROCESS: an instance of a computer program that is being executed

- THREAD:

  - part of a process (shares code and memory)

  - the smallest sequence of instructions that can be managed independently by the OS scheduler (e.g. runnable on a CPU core)
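To make "shares code and memory" concrete, here is a minimal sketch using only the standard library: two threads of the same process append to one list object, and the main thread sees both writes once they have finished. The names `worker` and `shared` are illustrative, not part of any API.

```python
import threading

# a single object living in the process's memory
shared = []


def worker(name):
    # every thread of the process sees the very same list object
    shared.append(name)


threads = [threading.Thread(target=worker, args=(f'thread-{i}',)) for i in range(2)]
for thread in threads:
    thread.start()
for thread in threads:
    thread.join()

print(sorted(shared))  # ['thread-0', 'thread-1']
```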

execution models

- an execution model defines how work takes place

- what is an indivisible unit of work?

- what is the order in which those units of work are executed?

- fundamental stage of software design

- heavy impact on concurrency management

- we will examine the following:

- single-threaded synchronous

- multi-threaded

- single-threaded asynchronous

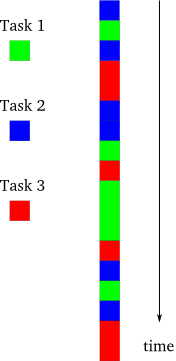

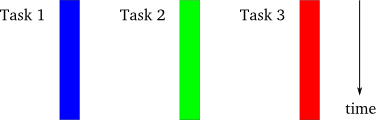

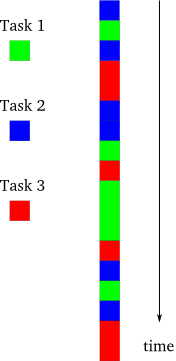

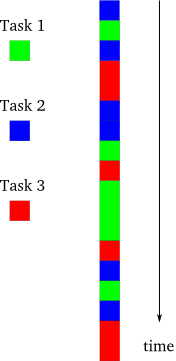

- let's now imagine our application needs to perform 3 tasks...

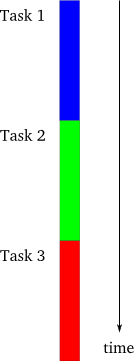

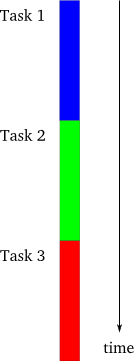

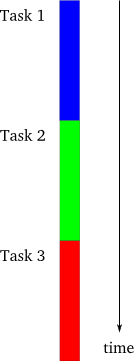

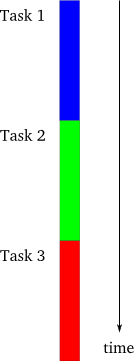

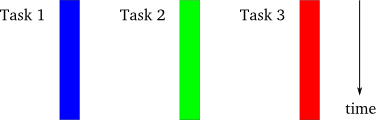

execution models

single-threaded synchronous

- one task performed at any given time

- each task starts only after the previous one has completely finished its job

- because tasks run in a fixed order, a later task can assume that the earlier ones completed without errors and that their outputs are available

- very simple, but no concurrency, no parallelism

code example

single-threaded synchronous

```python
# python3
import requests

_WEBSITES = ('https://www.google.com', 'https://www.amazon.com',
             'https://www.facebook.com')


def get_homepages():
    homepages = {}
    for website in _WEBSITES:
        # blocks until the whole response has been received
        response = requests.get(website)
        homepages[website] = response.text
    return homepages


if __name__ == '__main__':
    homepages = get_homepages()
    for website in _WEBSITES:
        print(homepages[website])
```

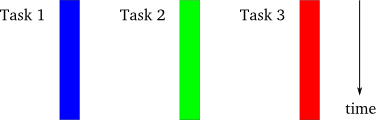

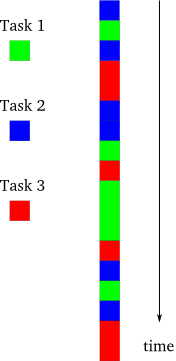

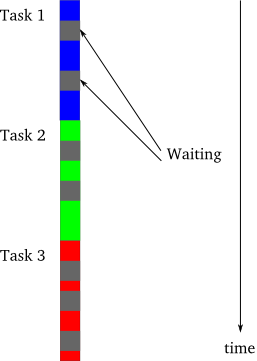

execution models

multi-threaded

- each task runs in a separate thread

- thread scheduling is managed exclusively by the OS

- tasks run in parallel on multiple CPU cores (on single-core CPUs, time slicing occurs instead)

- concurrency and parallelism

code example

multi-threaded

```python
# python3
import threading

import requests

_WEBSITES = ('https://www.google.com', 'https://www.amazon.com',
             'https://www.facebook.com')


def _fetch(website, homepages):
    response = requests.get(website)
    homepages[website] = response.text  # !!! shared state, written from several threads


def get_homepages():
    homepages = {}
    threads = [threading.Thread(target=_fetch, args=(website, homepages))
               for website in _WEBSITES]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return homepages


if __name__ == '__main__':
    homepages = get_homepages()
    for website in _WEBSITES:
        print(homepages[website])
```

but wait...

a typical scenario in a PARALLEL WORLD

- multiple threads within one process

- ...executing at the very same time

- ...on one or more CPU cores

- ...communicating by resource sharing (most notably: memory)

- ...with no control over scheduling by the developer

what could possibly go wrong?

(tl;dr: a lot)

threads are hard

here be dragons

multithreaded programming is...

- hard to reason about

- hard to actually get right

- communication and coordination between threads is an advanced programming topic

- hard to test

- riddled with perils

  - deadlock

  - starvation

  - race conditions

the same applies to multiprocessing
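A minimal sketch, using only the standard library, of the most basic coordination tool: a `threading.Lock` around a shared counter. `counter += 1` is a read-modify-write, so without the lock two threads could read the same old value and one update would be lost; whether that actually shows up depends on the interpreter version and on timing, which is exactly what makes such bugs hard to test. The `increment` helper and the numbers are illustrative.

```python
import threading

counter = 0
counter_lock = threading.Lock()


def increment(times):
    global counter
    for _ in range(times):
        # only one thread at a time may run the read-modify-write below
        with counter_lock:
            counter += 1


threads = [threading.Thread(target=increment, args=(10_000,)) for _ in range(4)]
for thread in threads:
    thread.start()
for thread in threads:
    thread.join()

print(counter)  # 40000, guaranteed only because of the lock
```

The price is that every increment now waits for the lock: correctness is bought with coordination, and getting that coordination right (without deadlocks) is the hard part.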

"who's there?"

"race condition"

"knock, knock"

and in python...

"In CPython, the global interpreter lock, or GIL, is a mutex that prevents multiple native threads from executing Python bytecodes at once."

https://wiki.python.org/moin/GlobalInterpreterLock

"The GIL is controversial because it prevents multithreaded CPython programs from taking full advantage of multiprocessor systems in certain situations."

bonus!!!

Removing Python's GIL: The Gilectomy

https://www.youtube.com/watch?v=P3AyI_u66Bw

by Larry Hastings, core CPython committer

-- photo by Kenneth Reitz

So what?

single-threaded synchronous is not concurrent :(

multithreading is OMG NOPE!

do we really need to choose between poor performance and programming pain?

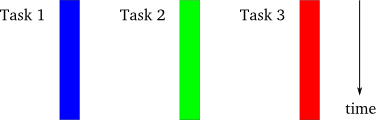

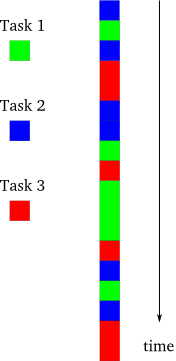

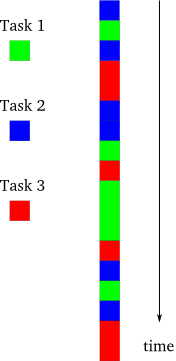

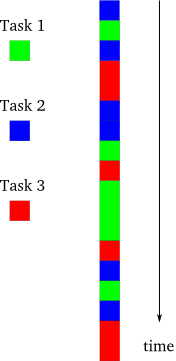

execution models

SINGLE-THREADED ASYNCHRONOUS

- one task performed at any given time

- tasks run in a single thread, but! they're interleaved with one another

- a task will continue to run until it explicitly relinquishes control to other tasks

- the developer is back in control of task scheduling

- concurrency without parallelism!
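The model can be sketched without any framework, using plain Python generators as tasks: each `yield` is the point where a task explicitly relinquishes control, and a tiny round-robin loop decides who runs next. The `task` and `run` helpers are hypothetical, not a Twisted API. Only one task ever runs at a time, yet their steps interleave.

```python
from collections import deque


def task(name, steps, log):
    for i in range(steps):
        log.append(f'{name}:{i}')
        # explicitly hand control back to the scheduler
        yield


def run(tasks):
    # round-robin scheduler: one thread, one task running at any given time
    ready = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)        # run the task until its next yield
        except StopIteration:
            continue             # task finished: drop it
        ready.append(current)    # not finished: back in line


log = []
run([task('A', 3, log), task('B', 2, log)])
print(log)  # ['A:0', 'B:0', 'A:1', 'B:1', 'A:2']
```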

but there's more...

SINGLE-THREADED ASYNCHRONOUS

- the model works best when tasks are forced to wait, or block

- typically to perform I/O

- network and disk are MUCH slower than the CPU!

- meanwhile, an asynchronous program executes some other task that can still make progress

- it's a non-blocking program

- less waiting == more performance

mind = blown

the use case

- large number of tasks

- there is always at least one that can make progress

- lots of I/O

- a synchronous program would waste time blocking

- tasks are largely independent of one another

- no need for inter-task communication

- no task waits for others to complete

the use case

every modern CLIENT-SERVER NETWORK APPLICATION

ASYNCHRONOUS NETWORKING: THE RECIPE

- non-blocking sockets

- a socket polling method

- a loop

sockets

- abstractions of network endpoints

- you write into sockets to send data

- you read from sockets to receive data

- by default sockets are in blocking mode

- operations on sockets don't return control to the program until they complete

- in non-blocking mode, operations return immediately

- socket.setblocking(False)
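A minimal sketch of what non-blocking mode means in practice, using a connected `socket.socketpair()` so that no network is needed (an assumption made to keep the example self-contained): reading from a non-blocking socket with no data available does not wait, it fails immediately, and Python reports that as a `BlockingIOError`.

```python
import socket

# a connected pair of local sockets: nothing is ever sent on it here
left, right = socket.socketpair()
right.setblocking(False)

try:
    right.recv(1024)
    outcome = 'data'
except BlockingIOError:
    # no data yet: instead of waiting, the call fails right away
    outcome = 'would block'

print(outcome)  # would block

left.close()
right.close()
```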

polling

- in non-blocking mode, operations fail if they cannot be completed immediately

- the OS provides functions that can be used to know when a socket is ready for I/O

- if none is ready, they wait (block)

- OS-dependent implementations, e.g. select(), epoll(), kqueue()

- in Python: import select
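The polling step can be tried with the standard library `select` module. A minimal sketch, again on a local `socket.socketpair()` (assumed only to avoid needing a network): after one end sends some bytes, `select.select()` reports only the other end as readable, and the following `recv()` succeeds without blocking.

```python
import select
import socket

left, right = socket.socketpair()
for sock in (left, right):
    sock.setblocking(False)

left.sendall(b'ping')  # only `right` now has something to read

# wait at most 1 second for any of the sockets to become readable
readable, writable, _ = select.select([left, right], [], [], 1.0)
print(readable == [right])  # True

data = right.recv(1024)
print(data)  # b'ping'

left.close()
right.close()
```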

a loop

well, it's a loop

nothing fancy to see here

```python
while True:
    do_stuff()
```

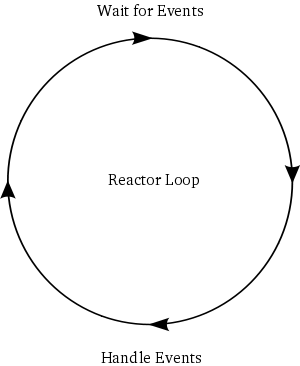

the reactor loop

- a loop

- ...that monitors a set of sockets

- in non-blocking mode

- ...and reacts when they are ready for I/O

- using an OS-provided polling function

- also called event loop, because it waits for events to happen

code example

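```python
import select

# sockets = [...]             # non-blocking sockets to monitor
# def do_reads(socks): ...    # read from the ready sockets, return the closed ones
# def do_writes(socks): ...   # write to the ready sockets, return the closed ones

while sockets:
    # block until at least one socket is ready for reading or writing
    r_sockets, w_sockets, _ = select.select(sockets, sockets, [])
    closed_r_sockets = do_reads(r_sockets)
    closed_w_sockets = do_writes(w_sockets)
    # a socket may be reported closed by both helpers: remove it only once
    for sock in set(closed_r_sockets + closed_w_sockets):
        sockets.remove(sock)
```

enter twisted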

- event-driven networking engine

- a framework to build asynchronous network applications (client and server)

- implementation of the Reactor Pattern

- + Internet protocols: SMTP, POP3, IMAP, SSHv2, DNS, etc.

- + abstractions to build custom protocols

- + utilities to make your async life easier

- written in Python

- cross-platform

enter twisted

- originally written by Glyph Lefkowitz, still project lead

- first release in 2002

- initial use case was a large multiplayer game

- open source, MIT license

- runs on CPython 2.7 and PyPy

- porting to CPython 3.3+ is in progress!

- https://github.com/twisted/twisted

enter twisted

Twisted solves C10K (10,000 concurrent connections) on a common laptop

compare this with a threaded implementation: assuming 128KB of stack per thread, 10,000 threads × 128KB ≈ 1.3GB of memory overhead

(not to mention the cost of context switching)

baby steps

```python
# python3
from twisted.internet import reactor

reactor.run()

# absolutely nothing happens (and the program never exits: stop it with Ctrl+C)
```

- Twisted’s reactor loop needs to be imported and explicitly started

- it runs in the same thread it was started in

- if it has nothing to do, it will stay idle, without consuming CPU

  - it's not a busy loop

do something!

```python
# python3
from twisted.internet import reactor


def hello():
    print('I like turtles')


reactor.callWhenRunning(hello)
print('Starting the reactor')
reactor.run()

# Starting the reactor
# I like turtles
# (the reactor keeps running afterwards: stop it with Ctrl+C)
```

callbacks

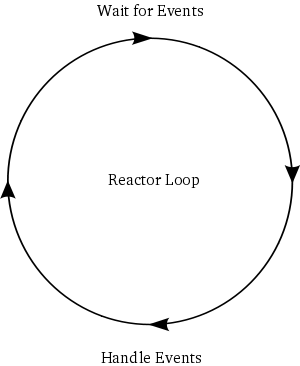

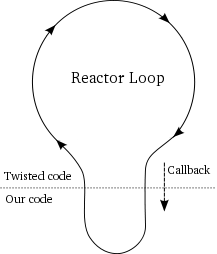

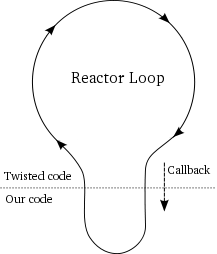

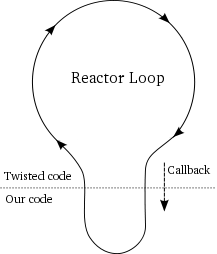

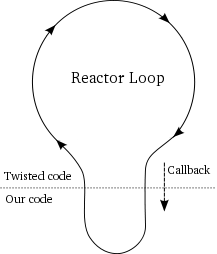

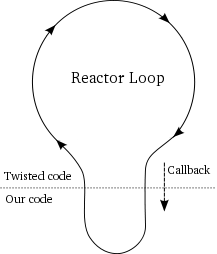

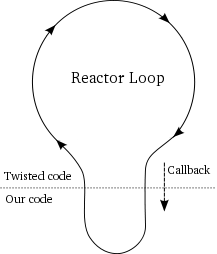

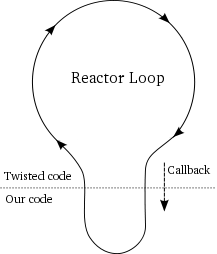

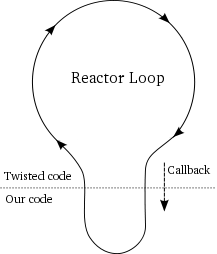

callbacks

- Twisted provides the reactor loop

- we provide the business logic to do something useful

- the reactor loop controls the thread it runs in, and will call our code

- a callback is a function that we give to Twisted and that the reactor will use to "call us back" at the right time

callbacks

- our callback code runs in the same thread as the Twisted loop

- when our callbacks are running, the Twisted loop is not running

- be careful not to block!

- and vice versa

- the reactor loop resumes when our callback returns

stopping

```python
# python3
from twisted.internet import reactor


def hello():
    print('I like turtles')


def stop():
    reactor.stop()


reactor.callWhenRunning(hello)
reactor.callWhenRunning(stop)
print('Starting the reactor')
reactor.run()
print('Reactor stopped')

# Starting the reactor
# I like turtles
# Reactor stopped
```

ok, slow down

since these programs don't use sockets at all, why don't they get blocked in the select() call?

- because select() also accepts an optional timeout value

- if no sockets* have become ready for I/O within the specified time, it returns anyway

- a timeout value of zero lets us poll a set of sockets without blocking at all

*more accurately, file descriptors
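Both timeout behaviours can be observed with the standard library alone. A minimal sketch on an idle `socket.socketpair()` (assumed only to have a descriptor that never becomes readable): with a timeout of zero `select.select()` polls once and returns immediately, with a positive timeout it gives up after roughly that long. This is, roughly, how a reactor can wake up to run timed calls even when no socket is active.

```python
import select
import socket
import time

left, right = socket.socketpair()  # nothing is ever sent to `right`

# timeout=0: poll once and return immediately
polled, _, _ = select.select([right], [], [], 0)
print(polled)  # []

# timeout=0.2: wait at most 0.2 seconds, then return anyway
start = time.monotonic()
waited_result, _, _ = select.select([right], [], [], 0.2)
waited = time.monotonic() - start
print(waited_result)  # []
print(f'waited about {waited:.1f}s')

left.close()
right.close()
```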

exceptions

```python
# python3
from twisted.internet import reactor


def error():
    raise Exception('BOOM!')


def back():
    print('Aaaand we are back!')
    reactor.stop()


reactor.callWhenRunning(error)
reactor.callWhenRunning(back)
print('Starting the reactor')
reactor.run()

# Starting the reactor
# Unhandled Error
# Traceback (most recent call last):
# ...
# builtins.Exception: BOOM!
# Aaaand we are back!
```

exceptions

- the second callback runs after the traceback

- unhandled exceptions don't stop the reactor

- they're just reported

- this improves the general robustness of a network application built with Twisted
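To see why an exception does not bring the loop down, here is a deliberately tiny, hypothetical `ToyReactor` in plain Python. It is not Twisted's implementation; it only borrows the `callWhenRunning`/`run`/`stop` names from the examples above. It runs queued callbacks one at a time in the current thread (the loop is paused while a callback runs), reports exceptions with `traceback.print_exc()` and moves on. Unlike a real reactor, it simply returns when its queue is empty instead of waiting for I/O or timers.

```python
import traceback
from collections import deque


class ToyReactor:
    """A toy loop: no sockets, no timers, just a queue of callbacks."""

    def __init__(self):
        self._calls = deque()
        self._running = False

    def callWhenRunning(self, func, *args):
        self._calls.append((func, args))

    def stop(self):
        self._running = False

    def run(self):
        self._running = True
        while self._running and self._calls:
            func, args = self._calls.popleft()
            try:
                func(*args)  # the loop resumes only when the callback returns
            except Exception:
                # report the error and keep going instead of crashing the loop
                traceback.print_exc()


reactor = ToyReactor()
events = []


def error():
    events.append('error')
    raise Exception('BOOM!')


def back():
    events.append('back')
    reactor.stop()


reactor.callWhenRunning(error)
reactor.callWhenRunning(back)
reactor.run()
print(events)  # ['error', 'back'] (after a traceback printed on stderr)
```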

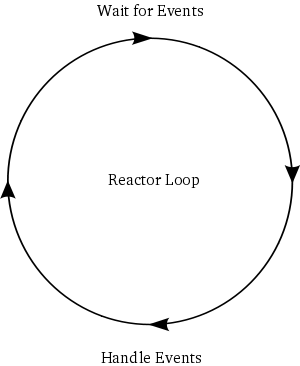

more on callbacks

- callbacks are deeply connected to asynchronous programming

- using any reactor-based system means organizing our code as a series of callback chains invoked by a reactor loop

- we typically distinguish:

  - callbacks, which handle normal results

  - errbacks, which handle errors

callbacks

WE: "Reactor, please, do_this_thing(), it's important"

R: "Sure"

WE: "Uhm, let me know when it's done. Please callback() ASAP"

R: "You bet"

WE: "Oh, and Reactor...should anything go wrong... make sure you errback() to me"

R: "Gotcha"

WE: <3

R: <3

a love story

before the storm

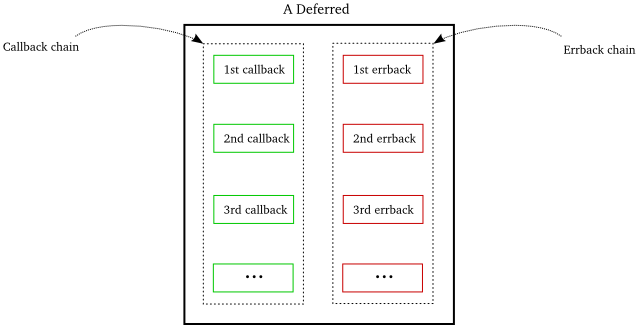

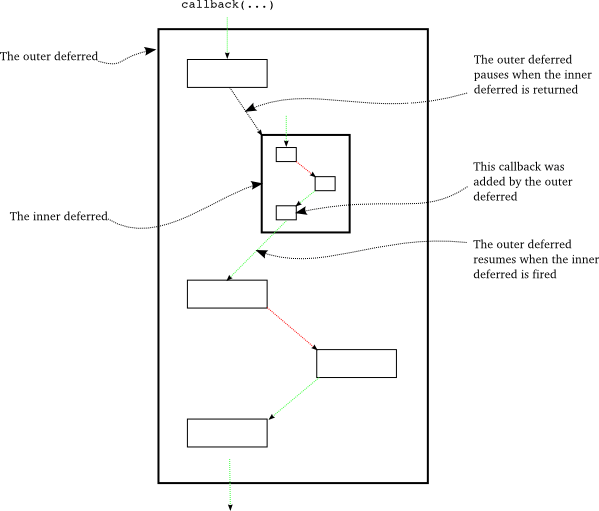

DEFERRED

- a Twisted abstraction to make programming with callbacks easier

- a deferred contains a callback chain and an errback chain (both empty after instantiation)

- we can add functions to both chains

- the deferred is fired with a result or an error (usually by code that the reactor has called back) through one of its methods:

  - deferred.callback(result)

  - deferred.errback(exception)

- firing the deferred will invoke the appropriate callbacks or errbacks in the order they were added

DEFERRED

code example

```python
# python3
from twisted.internet.defer import Deferred


def eb(error):
    print('OMG NOOO!')
    print(error)


def cb(result):
    print('WHOA, NICE!')
    print(result)


d = Deferred()
d.addCallbacks(cb, eb)
d.callback(42)  # fire!

# WHOA, NICE!
# 42
```

code example

```python
# python3
from twisted.internet.defer import Deferred


def eb(error):
    print('OMG NOOO!')
    print(error)


def cb(result):
    print('WHOA, NICE!')
    print(result)


d = Deferred()
d.addCallbacks(cb, eb)
d.errback(Exception('BOOM!'))  # fire!

# OMG NOOO!
# [Failure instance: Traceback (failure with no frames): <class 'Exception'>: BOOM!
# ]
```

deferred

- a deferred wraps an Exception into a Failure instance

  - it preserves the traceback from the point where that exception was raised

- deferreds are fully synchronous

  - no reactor involved, it's plain function calling

- you can't fire a deferred twice

  - you invoke callback() OR errback(), and just once (a second call raises AlreadyCalledError)

DEFERRED

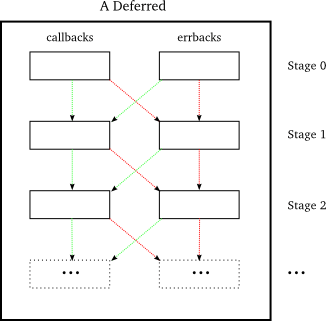

callback chains

- a deferred contains callback/errback pairs (stages), in the order they were added to the deferred

- stage 0: invoked when the deferred is fired

  - callback() invoked: stage 0 callback is called

  - errback() invoked: stage 0 errback is called

- stage raises OR returns a Failure: the following stage's errback is called with the Failure as the first argument

- stage succeeds: the following stage's callback is called with the current return value as the first argument

- exceptions that go beyond the last stage will just be reported (they won't crash the program)
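The stage rules above can be modelled in a few lines of plain Python. This is a hypothetical, simplified `ToyDeferred` (with an equally simplified `Failure`), not Twisted's implementation: it ignores stages added after firing (a real Deferred runs them immediately) and raises `RuntimeError` where Twisted raises `AlreadyCalledError`. It only shows how a Failure or a raised exception routes the chain to the next errback, while a normal return value routes it to the next callback.

```python
class Failure:
    """Hypothetical stand-in for Twisted's Failure: just wraps an exception."""

    def __init__(self, exc):
        self.value = exc


class ToyDeferred:
    """Simplified model of a Deferred's callback chain (not Twisted's code)."""

    def __init__(self):
        self._stages = []  # (callback, errback) pairs, in the order they were added
        self._called = False

    def addCallbacks(self, callback, errback):
        self._stages.append((callback, errback))
        return self

    def callback(self, result):
        self._fire(result)

    def errback(self, exc):
        self._fire(Failure(exc))

    def _fire(self, current):
        if self._called:
            # Twisted raises AlreadyCalledError here; the toy uses RuntimeError
            raise RuntimeError('already fired')
        self._called = True
        for callback, errback in self._stages:
            # a Failure goes to the errback, any other value to the callback
            handler = errback if isinstance(current, Failure) else callback
            try:
                current = handler(current)
            except Exception as exc:
                # raising inside a stage switches the chain to the errback side
                current = Failure(exc)
        if isinstance(current, Failure):
            # beyond the last stage: report it, don't crash
            print('Unhandled error in ToyDeferred:', repr(current.value))


log = []


def record(name, value=None, fail=False):
    """Build a stage handler that logs its name, then raises or returns `value`."""
    def handler(arg):
        log.append(name)
        if fail:
            raise ValueError(name)
        return value
    return handler


d = ToyDeferred()
d.addCallbacks(record('stage0_cb', fail=True), record('stage0_eb'))
d.addCallbacks(record('stage1_cb'), record('stage1_eb', value='recovered'))
d.addCallbacks(record('stage2_cb', value='done'), record('stage2_eb'))
d.callback(None)
print(log)  # ['stage0_cb', 'stage1_eb', 'stage2_cb']
```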

callback chains

yeah. seriously.

standard reaction to deferreds

code example

```python
# python3
from twisted.internet.defer import Deferred

def stage0_cb(result): print('What is love?'); raise Exception
def stage0_eb(error): print('guh')
def stage1_cb(result): print('blablabla')
def stage1_eb(error): print("Baby don't hurt me"); raise Exception
def stage2_cb(result): print('Eeeeh Macarena!')
def stage2_eb(error): print("Don't hurt me"); return 'No more'
def stage3_cb(result): print(result)
def stage3_eb(error): print("I'm blue dabadi dabada")

d = Deferred()
d.addCallbacks(stage0_cb, stage0_eb)
d.addCallbacks(stage1_cb, stage1_eb)
d.addCallbacks(stage2_cb, stage2_eb)
d.addCallbacks(stage3_cb, stage3_eb)
d.callback(None)

# What is love?
# Baby don't hurt me
# Don't hurt me
# No more
```

back to basics

```python
# python3
from twisted.internet.defer import gatherResults
from twisted.internet import reactor

import treq

_WEBSITES = ('https://www.google.com', 'https://www.amazon.com',
             'https://www.facebook.com')


def _print_all(_, homepages):
    for website in _WEBSITES:
        print(homepages[website])


def _store(html, website, homepages):
    homepages[website] = html


def get_homepages():
    homepages, responses = {}, []
    for website in _WEBSITES:
        d = treq.get(website)                # fires with the response...
        d.addCallback(treq.content)          # ...then with its body (bytes)
        d.addCallback(_store, website, homepages)
        responses.append(d)
    alldone = gatherResults(responses)       # fires when all of them have fired
    alldone.addCallback(_print_all, homepages)
    alldone.addErrback(lambda failure: print(failure))  # report any error...
    alldone.addBoth(lambda _: reactor.stop())           # ...and stop in any case


if __name__ == '__main__':
    reactor.callWhenRunning(get_homepages)
    reactor.run()
```

a handle to what will be

by returning a deferred, an API is telling us that it's asynchronous, and that the result isn't available yet

it will be, eventually, and we'll get it as soon as the deferred is fired

you made it this far!

it's a silver bullet!

No. Forget it. There's no silver bullet.

don'ts

- don't block

- e.g. time.sleep(), synchronous networking

- always rely on Twisted APIs to do time management and I/O

- use Twisted-specific third-party libraries

- don't perform CPU-bound computations

- e.g. calculations, parsing, image processing

cpu bound

"make it work, make it right, make it fast" -- Kent Beck

- measure, don't guess

- use a profiler, find hot spots, act accordingly

- write idiomatic code

- Burkhard Kloss - Performant Python https://youtu.be/2raXkX0Wi2w

- use PyPy

- if possible, use libraries implemented as C extensions

- distribute workload with queues, publishers and workers

- most of all: design for scale
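To put "measure, don't guess" into practice with the standard library alone, here is a minimal sketch using `cProfile` and `pstats`. `slow_sum` is a made-up CPU-bound function standing in for your own hot spot, and `profile` is a hypothetical helper name, not part of either module.

```python
import cProfile
import io
import pstats


def slow_sum(n):
    # a hypothetical CPU-bound hot spot
    total = 0
    for i in range(n):
        total += i * i
    return total


def profile(func, *args):
    """Run func(*args) under cProfile; return its result and a text report."""
    profiler = cProfile.Profile()
    profiler.enable()
    try:
        result = func(*args)
    finally:
        profiler.disable()
    stream = io.StringIO()
    # sort by cumulative time and keep the 5 most expensive entries
    pstats.Stats(profiler, stream=stream).sort_stats('cumulative').print_stats(5)
    return result, stream.getvalue()


result, report = profile(slow_sum, 100_000)
print(report)
```

In a Twisted application the same idea applies to a callback that keeps the reactor busy: once the report points at it, that is the code to optimize, move to PyPy or a C extension, or hand off to workers.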

enemy of the state

state shared among threads today may become state shared among machines across a network tomorrow

strive for statelessness

be an enemy of the state

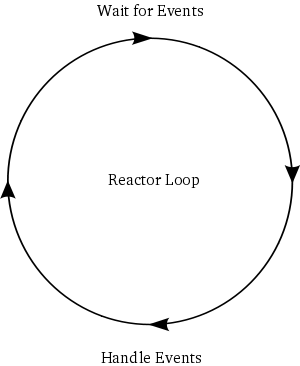

the future: asyncio

- standard library's reactor pattern implementation

- new in Python 3.4

- pluggable event loop!

- it aims to become the de facto standard

- Twisted devs are working on interoperability

- make both event loops interoperable

- address Future/Deferred compatibility

- make the HUGE Twisted protocol codebase work with asyncio (3rd parties included)

- Amber Brown - The Report Of Twisted’s Death https://youtu.be/UkkO3_GSR2g
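For comparison with the treq example, here is a minimal asyncio sketch, assuming Python 3.7+ (the `async`/`await` syntax and `asyncio.run()` are newer than the 3.4 release mentioned above). `fetch` is a hypothetical coroutine that stands in for a network request by sleeping; `asyncio.gather()` plays a role similar to `gatherResults` and returns the results in the order the coroutines were passed.

```python
import asyncio
import time


async def fetch(name, delay):
    # stand-in for real network I/O: asyncio.sleep() yields to the event loop
    await asyncio.sleep(delay)
    return name


async def main():
    start = time.monotonic()
    # the three "requests" wait concurrently, in a single thread
    results = await asyncio.gather(
        fetch('google', 0.1), fetch('amazon', 0.1), fetch('facebook', 0.1))
    return results, time.monotonic() - start


results, elapsed = asyncio.run(main())
print(results)  # ['google', 'amazon', 'facebook']
print(f'{elapsed:.2f}s')  # roughly 0.1s rather than 0.3s
```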

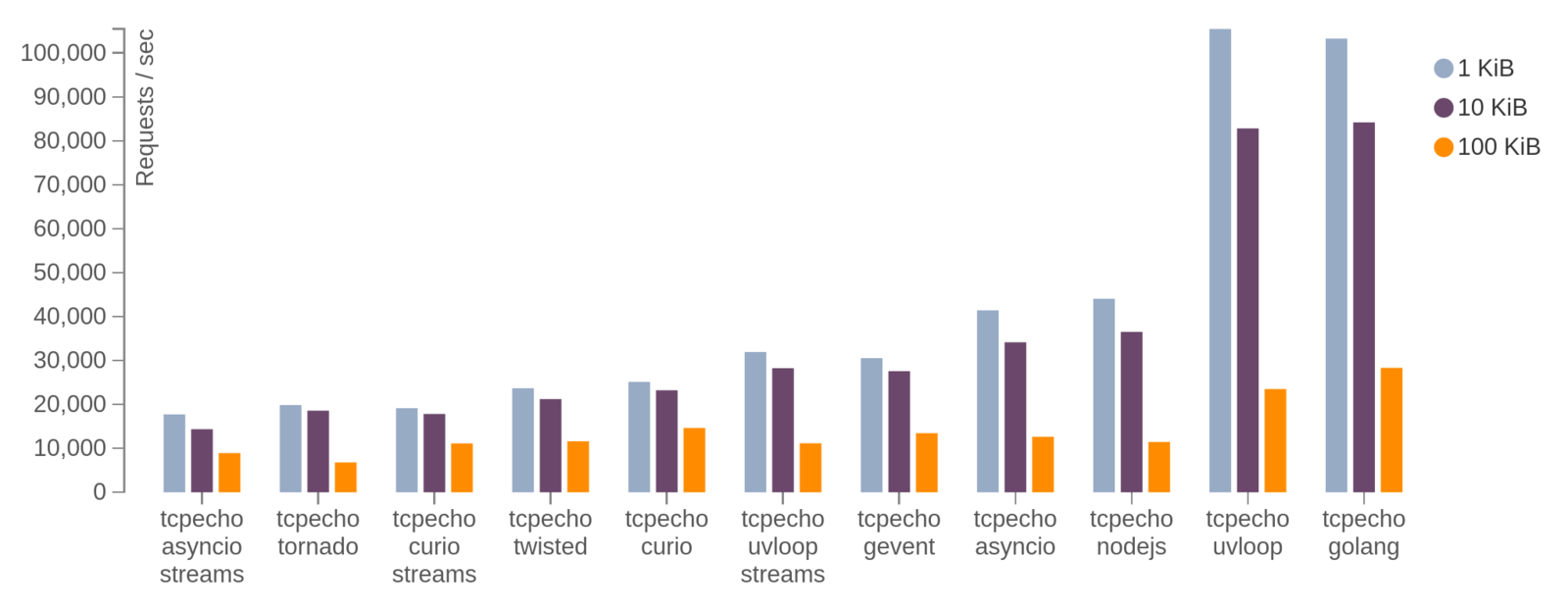

bonus!!!

uvloop

- drop-in replacement for asyncio's event loop

- by Yury Selivanov, core CPython committer

- written in Cython

- built on top of libuv

- asynchronous I/O engine that powers Node.js

bonus!!!

uvloop

https://magic.io/blog/uvloop-blazing-fast-python-networking

do want moAr!

references and credits

- Dave Peticolas - Twisted Introduction http://krondo.com/an-introduction-to-asynchronous-programming-and-twisted

- http://twistedmatrix.com

- Jessica McKellar, Abe Fettig - Twisted Network Programming Essentials

answers!

or questions.

but mostly, answers.

please.

pssssssst. thank you! <3

python-twisted

By sanjioh
