UNSUPERVISED REPRESENTATION LEARNING WITH DEEP CONVOLUTIONAL GENERATIVE ADVERSARIAL NETWORKS

Gideon Rosenthal

Shay Ben-Sasson

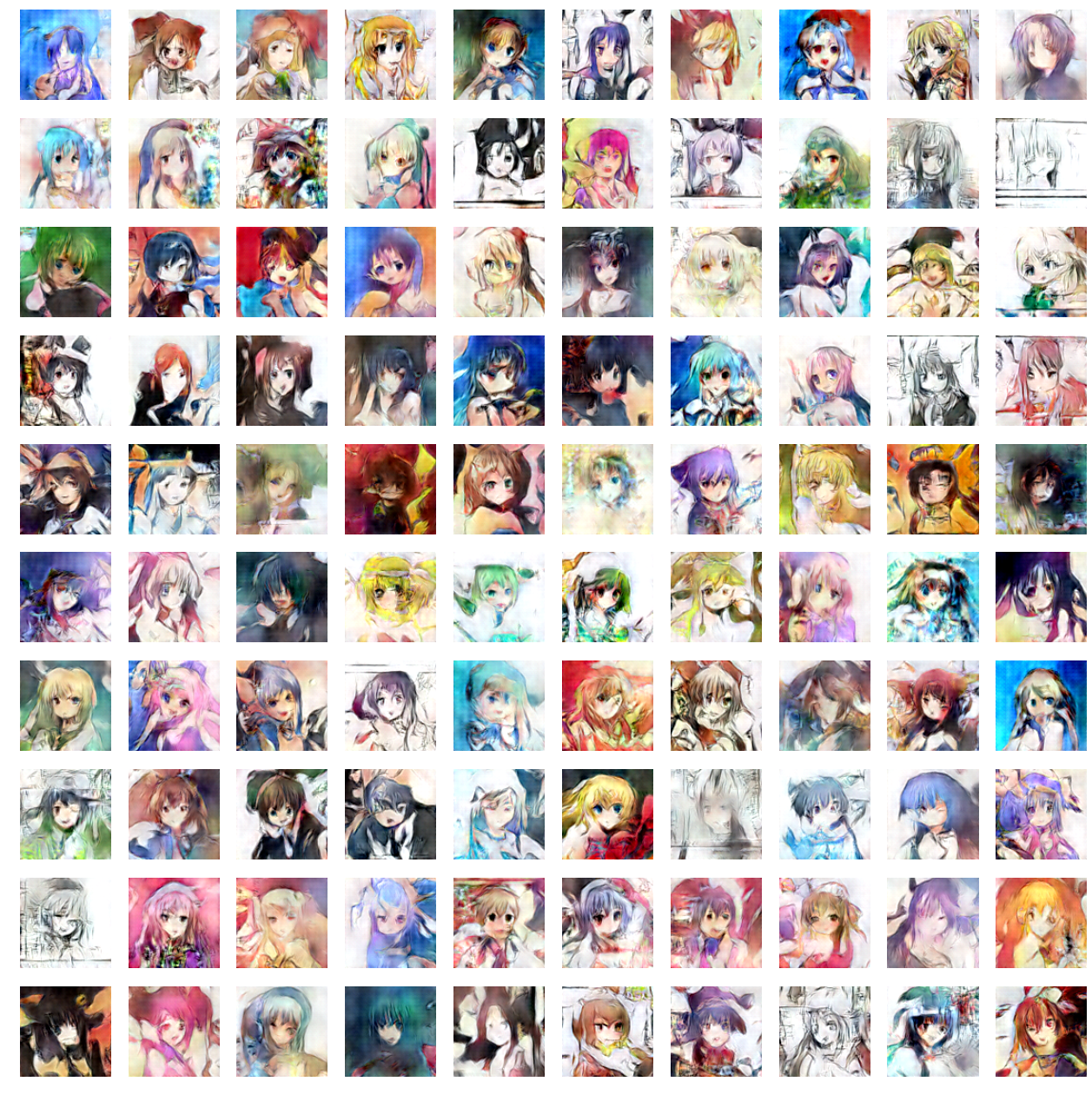

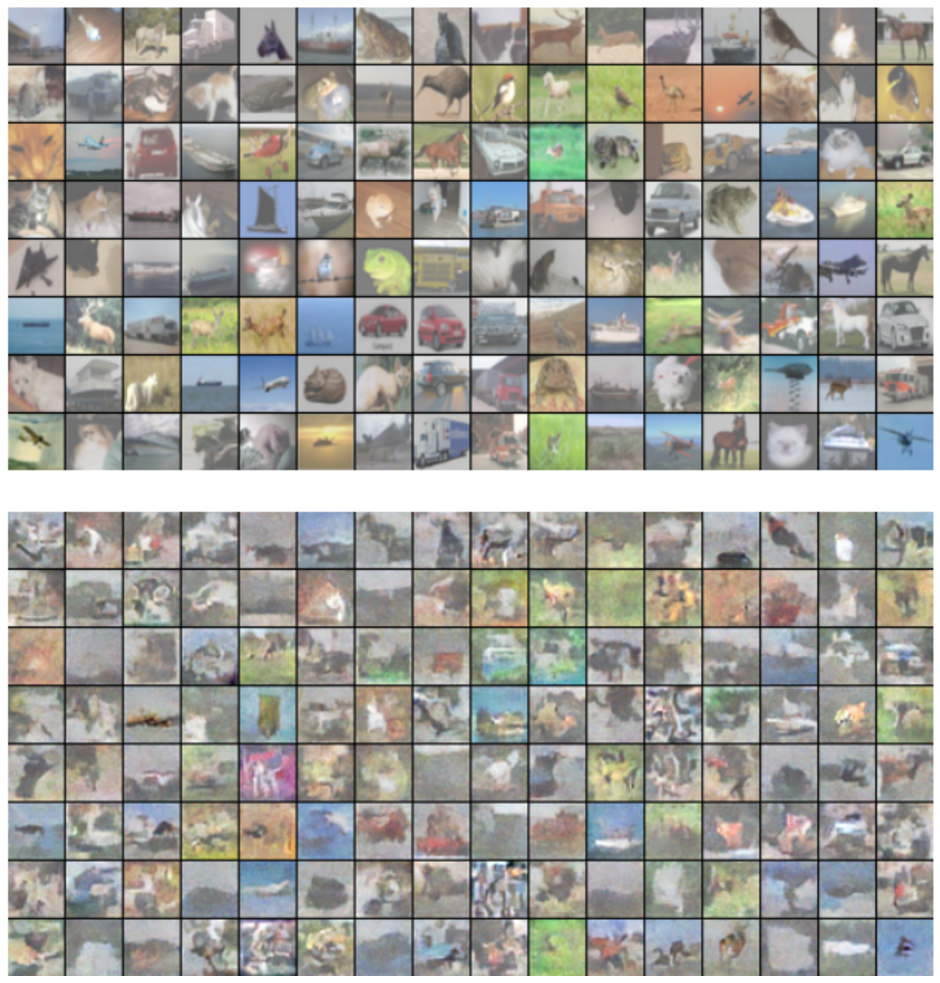

Examples of DCGAN

https://github.com/mattya/chainer-DCGAN

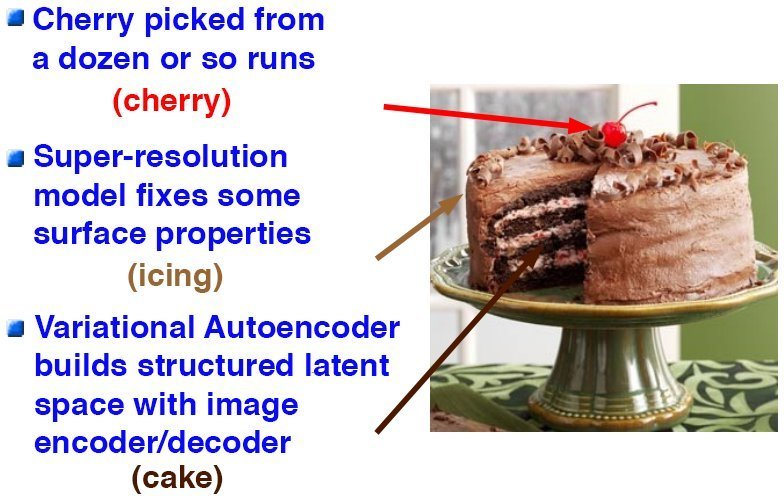

Examples of DCGAN extensions

Ledig, C., Theis, L., Huszar, F., Caballero, J., Aitken, A. P., Tejani, A., Totz, J., Wang, Z., and Shi, W. (2016).

Generative

Model the distribution of individual classes

p(x,y)

Discriminative

Learn the (hard or soft) boundary between classes

p(y|x)
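To make the distinction concrete, here is a minimal sketch using a made-up joint table over a binary feature x and a class label y (the numbers and names are illustrative assumptions, not from the talk). A generative model holds the full joint p(x,y); the conditional p(y|x) that a discriminative model learns directly can be derived from the joint, but not the other way around.

```python
# Hypothetical joint distribution p(x, y); values are illustrative only.
joint = {
    ("x0", "cat"): 0.30, ("x0", "dog"): 0.10,
    ("x1", "cat"): 0.15, ("x1", "dog"): 0.45,
}

def conditional(joint, x):
    """Derive p(y | x) from the joint p(x, y) by normalizing over y."""
    p_x = sum(p for (xi, _), p in joint.items() if xi == x)
    return {y: p / p_x for (xi, y), p in joint.items() if xi == x}

p_y_given_x0 = conditional(joint, "x0")
```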

An example of Discriminative Models

Classification:

"The process of classification discards most of the information in the input and produces a single output"

(from Goodfellow book)

An example of Generative Models

Sampling:

"The model generates new samples from the distribution p(x). This requires a good model of the entire input"

(from Goodfellow book)

What are generative networks?

- Parameterized computational procedures for generating samples

- The architecture provides the family of possible distributions to sample from

- The parameters select a distribution from within that family

(from Goodfellow book)
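The three points above can be illustrated with a deliberately tiny "network". This is a sketch under simple assumptions, not anything from the talk: the architecture is an affine map of Gaussian noise, so the family is all 1-D Gaussians, and the parameters `theta = (scale, shift)` pick one member of that family. The names `generator` and `sample` are hypothetical.

```python
import random

def generator(z, theta):
    """A toy generative 'network': an affine map of the noise z.

    The architecture fixes the family of distributions (1-D Gaussians);
    theta = (scale, shift) selects one distribution within that family.
    """
    scale, shift = theta
    return scale * z + shift

def sample(theta, n, seed=0):
    """Draw n samples by pushing Gaussian noise through the generator."""
    rng = random.Random(seed)
    return [generator(rng.gauss(0.0, 1.0), theta) for _ in range(n)]

samples = sample(theta=(2.0, 5.0), n=10000)
mean = sum(samples) / len(samples)  # should land close to the shift
```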

Generative models - Landscape

- Restricted Boltzmann Machines (Smolensky, 1986):

- Modeling high order interactions between visible and hidden (unobserved) variables

- Going Deep: DBNs (Hinton, 2006), DBMs (Salakhutdinov and Hinton, 2009)

- Problems: Intractable partition function and intractable inference

- Solution: Approximation (CD, Gibbs sampling)

- Practice: Greedy layer-wise pre-training.

Generative models - Landscape

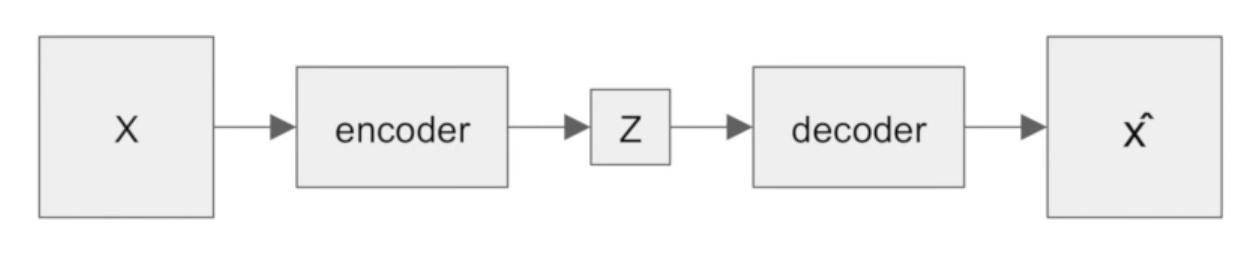

- Variational autoencoder (Kingma, 2013; Rezende, 2014):

- Another explicit method of approximating the intractable partition function.

- Variational inference is used to optimize a lower bound on the data log-likelihood; the KL-divergence term of that bound can be computed analytically.
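For reference, the variational lower bound that VAEs optimize is usually written as follows. The notation is the standard one and is not taken from the slides: $q_\phi(z \mid x)$ is the approximate posterior (encoder), $p_\theta(x \mid z)$ the decoder, and $p(z)$ the prior.

$$
\log p_\theta(x) \;\ge\; \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] \;-\; \mathrm{KL}\big(q_\phi(z \mid x)\,\|\,p(z)\big)
$$

When both $q_\phi(z \mid x)$ and $p(z)$ are Gaussian, the KL term has a closed form, which is the "analytic" part.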

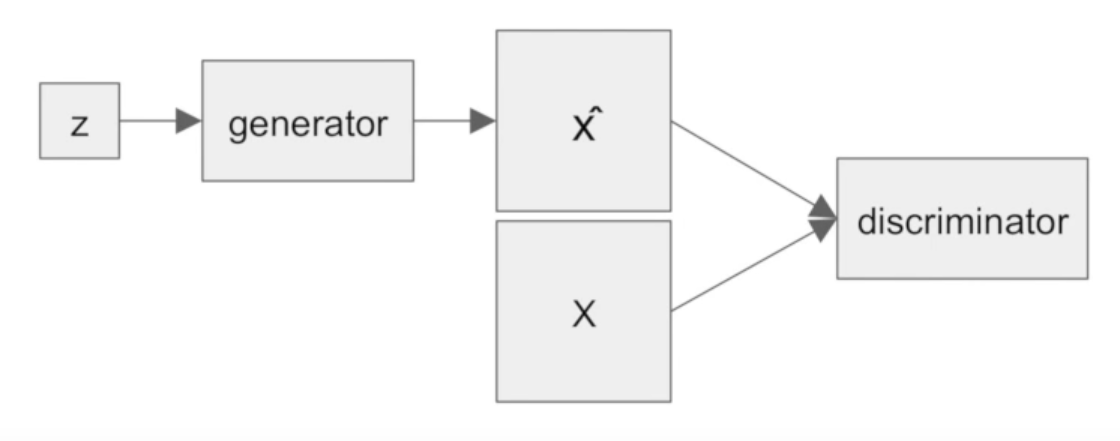

Generative Adversarial Nets

George (the generator, G)

Danielle (the discriminator, D)

x~Pdata

x'=G(z)

z~uniform(0,1)

Danielle (discriminator): maximize D1, minimize D2

George (generator): maximize D2

* D1 = D(x) and D2 = D(x') are the discriminator's outputs, each in [0,1]
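The two goals can be turned into numbers with a small worked example. This is a sketch that assumes D1 is the discriminator's score on real samples and D2 its score on generated ones; the function names and the score values are made up for illustration.

```python
import math

def discriminator_objective(d1, d2):
    """Mean of log(D1) + log(1 - D2); the discriminator wants this high."""
    return sum(math.log(a) + math.log(1.0 - b) for a, b in zip(d1, d2)) / len(d1)

def generator_objective(d2):
    """Mean of log(D2); the generator wants this high."""
    return sum(math.log(b) for b in d2) / len(d2)

# A confident discriminator: real samples score high, fakes score low.
confident = discriminator_objective([0.9, 0.8], [0.1, 0.2])
# A fully fooled discriminator outputs 0.5 everywhere.
fooled = discriminator_objective([0.5, 0.5], [0.5, 0.5])
```

When the discriminator can no longer tell the two apart, its objective settles at 2·log(0.5), roughly -1.386, which is lower than what a confident discriminator achieves.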

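    import tensorflow as tf  # TensorFlow 1.x API
    # D1, D2, batch, theta_d and theta_g are assumed to be defined earlier.

    # Discriminator: maximize log(D1) + log(1 - D2) by minimizing 1 - obj_d
    obj_d = tf.reduce_mean(tf.log(D1) + tf.log(1 - D2))
    opt_d = tf.train.GradientDescentOptimizer(0.01).minimize(
        1 - obj_d, global_step=batch, var_list=theta_d)

    # Generator: maximize log(D2) by minimizing 1 - obj_g
    obj_g = tf.reduce_mean(tf.log(D2))
    opt_g = tf.train.GradientDescentOptimizer(0.01).minimize(
        1 - obj_g, global_step=batch, var_list=theta_g)

GANs in action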

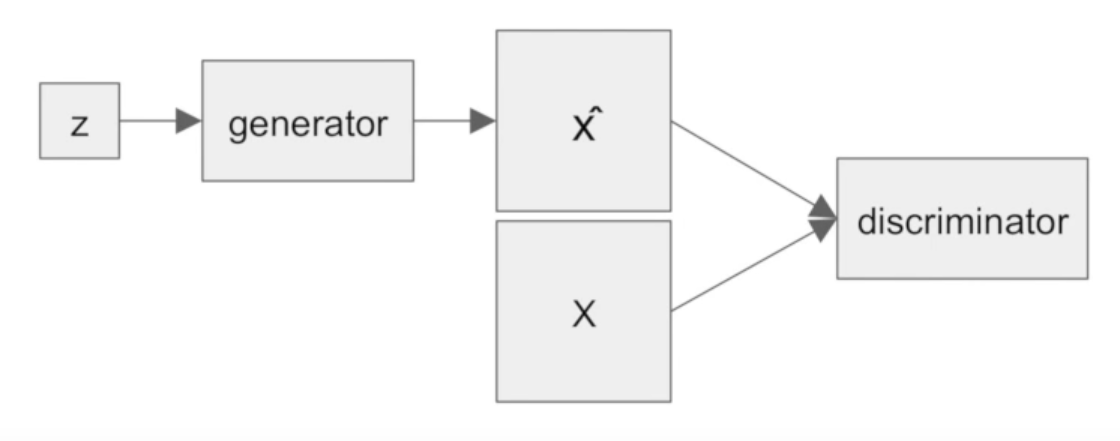

- z is the set of latent variables used by the generator to produce samples

GANs are difficult to train

- GAN images suffer from being noisy and incomprehensible

- Attempts to scale up GANs using CNNs to model images had been unsuccessful.

- GANs are unstable

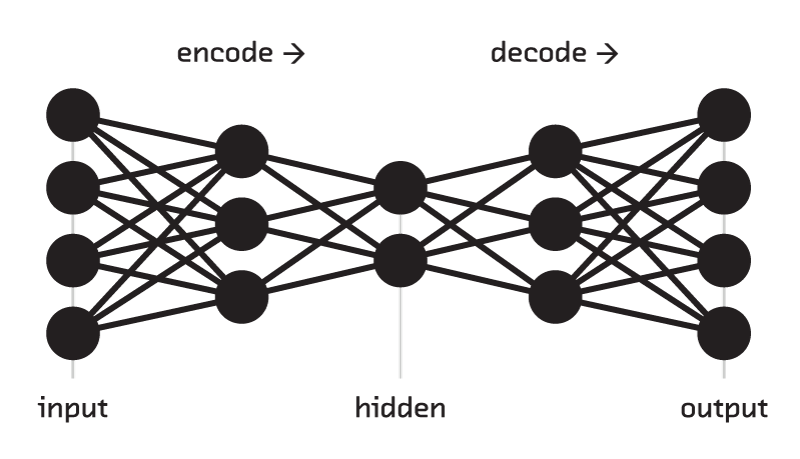

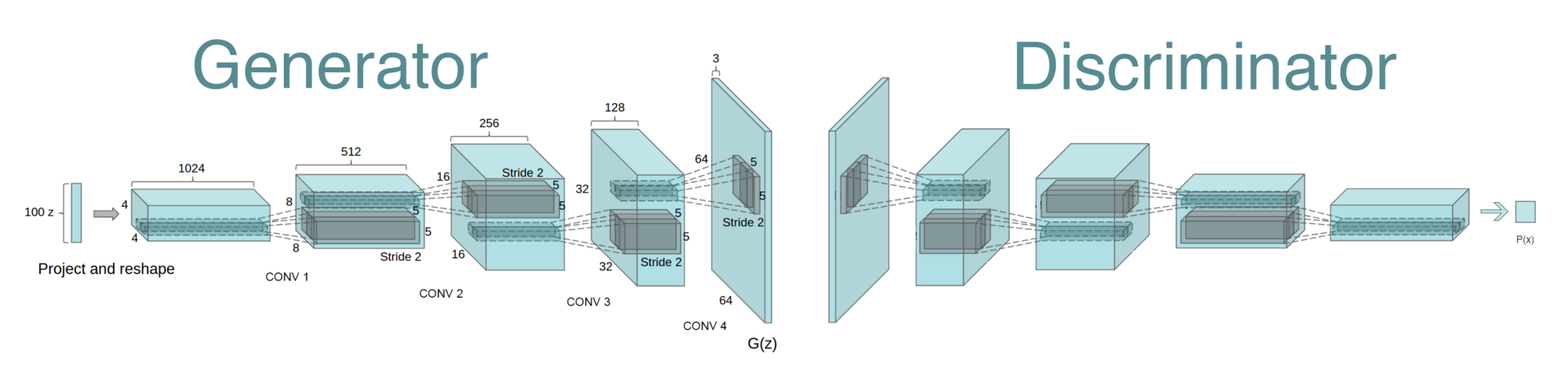

DCGAN

DCGAN - a bag of tricks

- Replace any pooling layers with strided convolutions (discriminator) and fractional-strided convolutions (generator).

- Use batchnorm in both the generator and the discriminator.

- Remove fully connected hidden layers for deeper architectures.

- Use ReLU activation in generator for all layers except for the output, which uses Tanh.

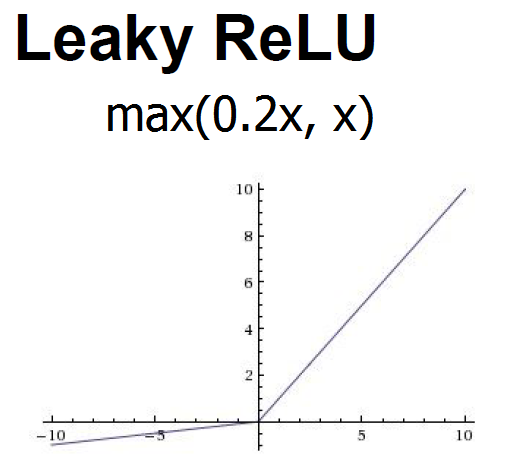

- Use LeakyReLU activation in the discriminator for all layers.

Strided convolutions (all)

Fractionally strided convolutions
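A 1-D sketch shows what the two operations do to the size of a signal: a strided convolution downsamples (the discriminator's replacement for pooling), while a fractionally strided, or transposed, convolution upsamples (used in the generator). The kernels and the helper names are illustrative assumptions; real DCGAN layers are 2-D with learned kernels.

```python
def strided_conv1d(x, kernel, stride):
    """Valid 1-D convolution (cross-correlation) that steps by `stride`."""
    k = len(kernel)
    return [
        sum(x[i + j] * kernel[j] for j in range(k))
        for i in range(0, len(x) - k + 1, stride)
    ]

def fractionally_strided_conv1d(x, kernel, stride):
    """1-D transposed convolution: each input scatters a scaled kernel."""
    k = len(kernel)
    out = [0.0] * ((len(x) - 1) * stride + k)
    for i, value in enumerate(x):
        for j in range(k):
            out[i * stride + j] += value * kernel[j]
    return out

signal = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
down = strided_conv1d(signal, kernel=[0.5, 0.5], stride=2)         # length 6 -> 3
up = fractionally_strided_conv1d(down, kernel=[1.0, 1.0], stride=2)  # length 3 -> 6
```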

All convolutional except

- layer 1->2 : matrix multiplication (fully connected)

- Z is reshaped into a 3-dimensional tensor and used as the start of the convolution stack

- For the discriminator, the last convolution layer is flattened and then fed into a single sigmoid output
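The layer stack can be sanity-checked with shape arithmetic alone. The sketch below assumes 4x4 kernels, stride 2 and padding 1, a common implementation choice that is not stated on the slides; the 4x4 starting map and 64x64 output follow the generator figure in the DCGAN paper.

```python
def conv_out(size, kernel, stride, padding):
    """Spatial size after a strided convolution."""
    return (size + 2 * padding - kernel) // stride + 1

def conv_transpose_out(size, kernel, stride, padding):
    """Spatial size after a fractionally strided (transposed) convolution."""
    return (size - 1) * stride - 2 * padding + kernel

# Assumed hyperparameters (not from the slides).
KERNEL, STRIDE, PADDING = 4, 2, 1

# Generator: z is projected and reshaped to a 4x4 map, then upsampled 4 times.
generator_sizes = [4]
for _ in range(4):
    generator_sizes.append(
        conv_transpose_out(generator_sizes[-1], KERNEL, STRIDE, PADDING))

# Discriminator: the mirror image, downsampling a 64x64 input.
discriminator_sizes = [64]
for _ in range(4):
    discriminator_sizes.append(
        conv_out(discriminator_sizes[-1], KERNEL, STRIDE, PADDING))
```

Under these assumptions each layer doubles (generator) or halves (discriminator) the spatial size, so no pooling or unpooling layer is needed.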

Batchnorm

- Deals with poor initialization and helps gradient flow in deeper models

- Prevents the generator from collapsing all samples to a single point, which is a common failure mode observed in GANs.

- Directly applying batchnorm to all layers, however, results in sample oscillation and model instability.

- This is avoided by not applying batchnorm to the generator output layer and the discriminator input layer.
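As a reminder of what batchnorm computes, here is a minimal sketch for a single feature across one minibatch. The activation values are made up, and `gamma`, `beta` and `eps` are the usual learnable scale, learnable shift and numerical-stability constant; a real layer does this per channel and tracks running statistics for inference.

```python
import math

def batchnorm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a minibatch to zero mean and unit
    variance, then apply the learnable scale (gamma) and shift (beta)."""
    mean = sum(batch) / len(batch)
    var = sum((v - mean) ** 2 for v in batch) / len(batch)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in batch]

# Badly scaled activations, e.g. from a poor initialization.
activations = [120.0, 80.0, 100.0, 140.0, 60.0]
normalized = batchnorm(activations)
```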

Remove fully connected hidden layers for deeper architectures

Use ReLU activation in generator for all layers except for the output, which uses Tanh

Use LeakyReLU activation in the discriminator for all layers
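The three activations named in these rules are simple to write down. This is a plain-Python sketch; the leak slope of 0.2 is the value reported in the DCGAN paper, not something stated on the slides.

```python
import math

def relu(x):
    """Generator hidden layers: pass positives, zero out negatives."""
    return max(0.0, x)

def leaky_relu(x, slope=0.2):
    """Discriminator layers: negatives keep a small slope instead of zero."""
    return x if x > 0 else slope * x

def tanh(x):
    """Generator output layer: squashes values into [-1, 1]."""
    return math.tanh(x)
```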

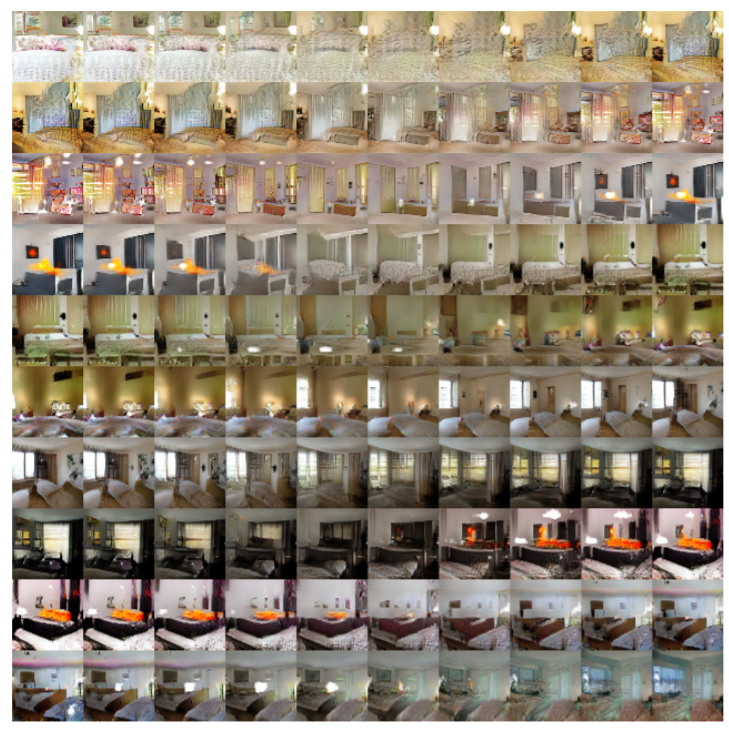

Tested datasets

- Large-scale Scene Understanding (LSUN) (Yu et al., 2015)

- Imagenet-1k (Deng et al., 2009)

- Newly assembled Faces dataset (350,000 cropped faces)

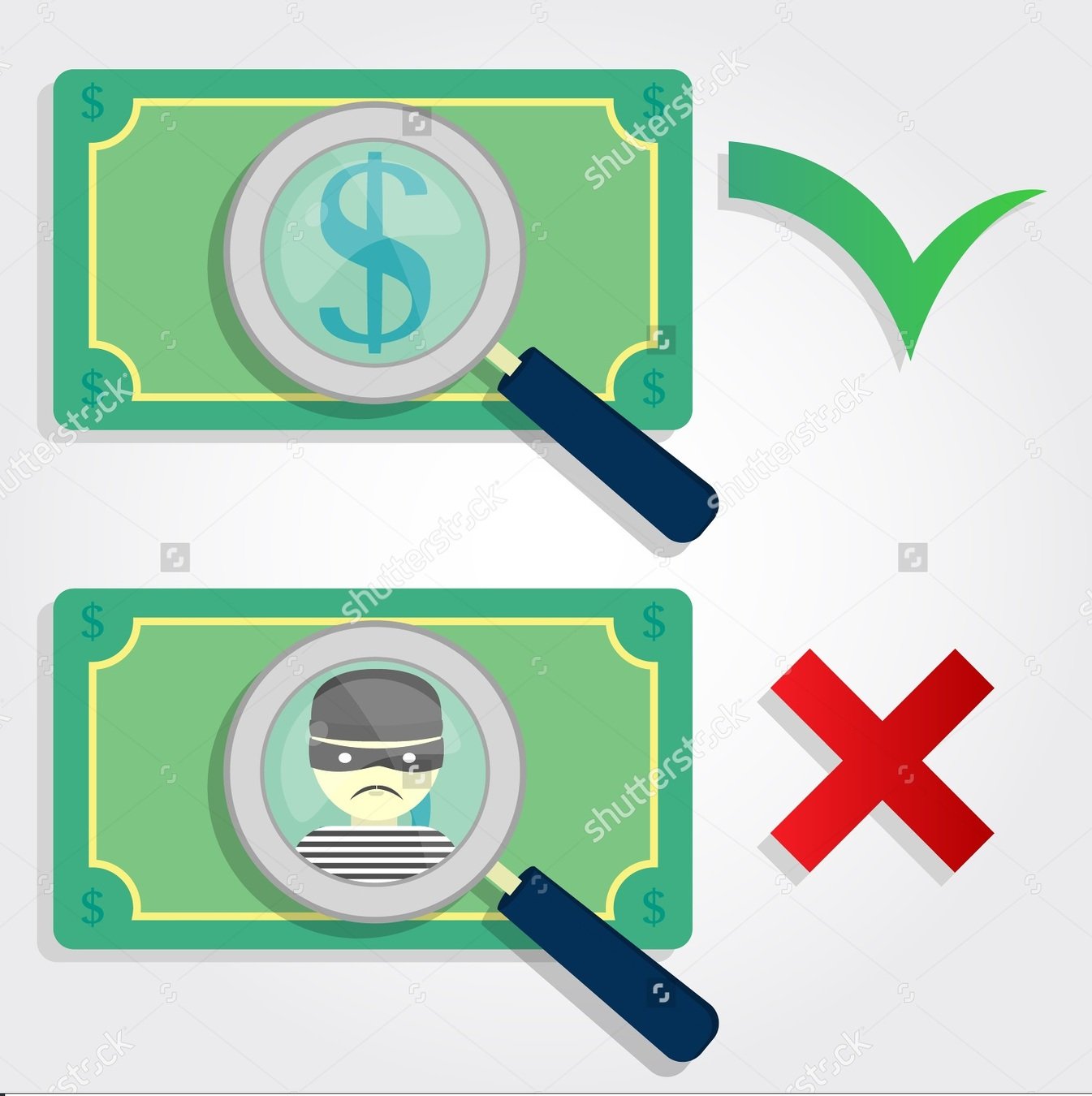

LSUN - is it overfitting?

- Generated bedrooms after one training pass through the dataset.

- Overfitting unlikely as we train with a small learning rate and minibatch SGD.

- We are aware of no prior empirical evidence demonstrating memorization with SGD and a small learning rate.

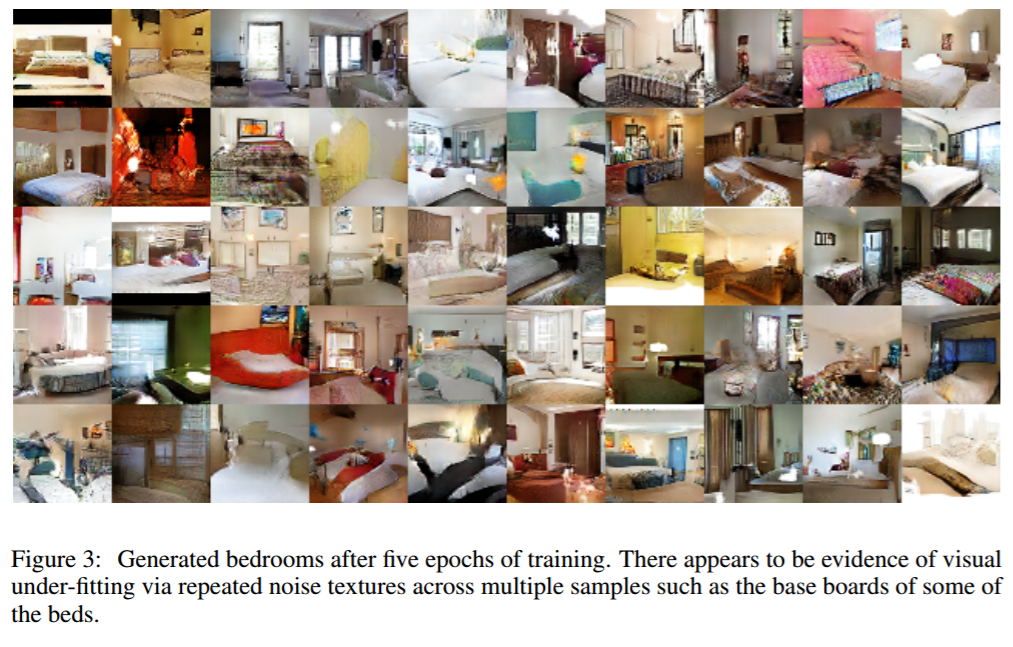

LSUN - is it overfitting?

- Generated bedrooms after five epochs of training.

- There appears to be evidence of visual under-fitting via repeated noise textures across multiple samples such as the base boards of some of the beds

Walking in the latent space

Interpolation between a series of 9 random points in Z
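A walk through 9 random points can be sketched as piecewise linear interpolation. The latent size of 100 and the 10 steps per segment are assumptions for illustration, and `lerp` and `latent_walk` are hypothetical helper names; each vector on the path would be fed to the generator to render one image.

```python
import random

def lerp(z_a, z_b, t):
    """Linear interpolation between two latent vectors, t in [0, 1]."""
    return [(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)]

def latent_walk(points, steps_per_segment):
    """Visit each point in turn, interpolating between neighbours."""
    path = []
    for z_a, z_b in zip(points, points[1:]):
        for s in range(steps_per_segment):
            path.append(lerp(z_a, z_b, s / steps_per_segment))
    path.append(points[-1])
    return path

rng = random.Random(0)
Z_DIM = 100  # assumed latent size
points = [[rng.random() for _ in range(Z_DIM)] for _ in range(9)]
path = latent_walk(points, steps_per_segment=10)
```

Smooth transitions along such a path are taken as a sign that the model has not simply memorized training images.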

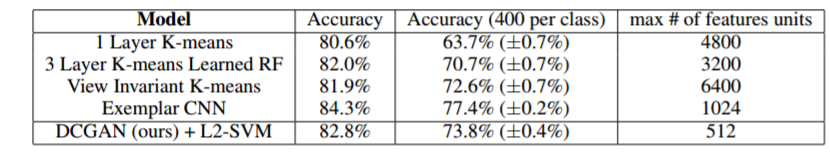

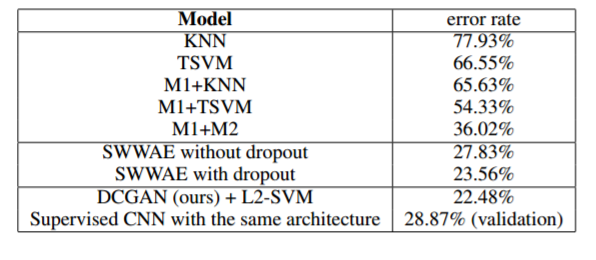

GANs as feature extractors

- Semi-Supervised learning:

- The quality of unsupervised representation learning algorithms is evaluated by applying them as feature extractors on supervised datasets

CIFAR-10

- The GAN is trained on Imagenet-1k; the discriminator's convolutional features from all layers are then used, max-pooling each layer's representation.

- These features are then flattened and concatenated to form a 28672-dimensional vector, and a regularized linear L2-SVM classifier is trained on top of them.
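The pooling and concatenation step can be sketched in plain Python. Two assumptions are baked in: each channel is max-pooled to a 4x4 grid, as described in the DCGAN paper, and the per-layer channel counts (256, 512, 1024) are a hypothetical breakdown chosen only because it reproduces the 28672 total. The SVM itself is not shown.

```python
def max_pool_to_grid(feature_map, grid=4):
    """Max-pool one HxW channel (a list of rows) down to grid*grid values."""
    h, w = len(feature_map), len(feature_map[0])
    bh, bw = h // grid, w // grid
    return [
        max(feature_map[r][c]
            for r in range(i * bh, (i + 1) * bh)
            for c in range(j * bw, (j + 1) * bw))
        for i in range(grid) for j in range(grid)
    ]

def extract_features(layers):
    """Pool every channel of every layer, then flatten and concatenate."""
    features = []
    for channels in layers:
        for feature_map in channels:
            features.extend(max_pool_to_grid(feature_map))
    return features

def dummy_layer(n_channels, size):
    """Placeholder activations standing in for real discriminator outputs."""
    return [[[0.0] * size for _ in range(size)] for _ in range(n_channels)]

# Hypothetical (channels, spatial size) per layer; not given on the slides.
layers = [dummy_layer(256, 16), dummy_layer(512, 8), dummy_layer(1024, 4)]
features = extract_features(layers)  # (256 + 512 + 1024) * 16 values
```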

SVHN DIGITS USING GANS AS A FEATURE EXTRACTOR

StreetView House Numbers dataset (SVHN)(Netzer et al., 2011)

Same feature extraction pipeline used for CIFAR-10.

A purely supervised CNN with the same architecture, trained on the same data, achieves a significantly higher validation error of 28.87%.

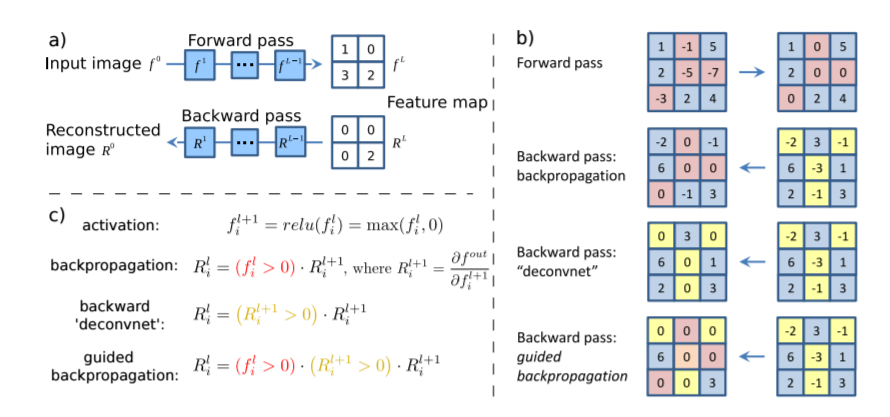

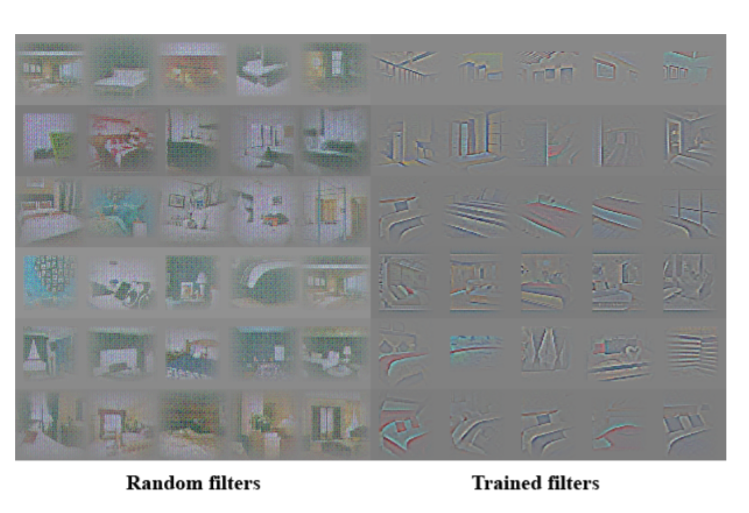

The internals of the networks

Discriminator

- An unsupervised DCGAN trained on a large image dataset can learn a hierarchy of interesting features

Guided Backprop (Springenberg et al., 2014)

Generator representation

- The generator might learn specific object representations for major scene components such as beds, windows, lamps, doors, and miscellaneous furniture.

- To explore the form that these representations take, the authors tried to remove windows from the generator completely.

Generator representation

- On 150 samples, 52 window bounding boxes were drawn manually.

- On the second highest convolution layer features, logistic regression was fit to predict whether a feature activation was on a window (or not).
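The follow-up step described in the DCGAN paper, which the slide does not spell out, was to drop every feature map whose fitted weight was positive and then regenerate the images. A minimal sketch of that dropping step, with made-up feature maps and weights and a hypothetical helper name:

```python
def drop_window_features(feature_maps, weights):
    """Zero every feature map whose logistic-regression weight is positive,
    i.e. whose activation counts as evidence for 'window'."""
    return [
        [[0.0 for _ in row] for row in fmap] if w > 0 else fmap
        for fmap, w in zip(feature_maps, weights)
    ]

# Hypothetical example: three 2x2 feature maps and their fitted weights.
feature_maps = [
    [[0.9, 0.1], [0.4, 0.2]],
    [[0.3, 0.3], [0.3, 0.3]],
    [[0.7, 0.8], [0.0, 0.5]],
]
weights = [1.7, -0.4, 0.2]
edited = drop_window_features(feature_maps, weights)
```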

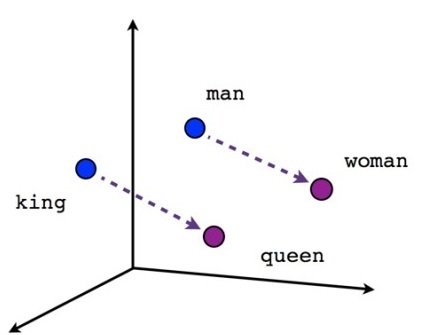

Face vectors arithmetic

- For each column, the Z vectors of samples are averaged.

- Arithmetic was then performed on the mean vectors, creating a new vector Y.

- The center sample on the right-hand side is produced by feeding Y as input to the generator.

- To demonstrate the interpolation capabilities of the generator, uniform noise sampled with scale ±0.25 was added to Y to produce the 8 other samples.
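The procedure above can be sketched as follows. The latent size of 100, the three samples per column and the random placeholder vectors are assumptions for illustration; in practice the Z vectors come from hand-picked generated samples, and Y and its neighbours are fed to the generator.

```python
import random

def mean_vector(vectors):
    """Component-wise average of a list of Z vectors."""
    n = len(vectors)
    return [sum(component) / n for component in zip(*vectors)]

def add(a, b):
    return [x + y for x, y in zip(a, b)]

def sub(a, b):
    return [x - y for x, y in zip(a, b)]

rng = random.Random(0)
Z_DIM = 100  # assumed latent size

def random_z():
    return [rng.random() for _ in range(Z_DIM)]

# Placeholder Z vectors, three per column (assumed count).
column_a = [random_z() for _ in range(3)]
column_b = [random_z() for _ in range(3)]
column_c = [random_z() for _ in range(3)]

# Arithmetic on the column means gives the new vector Y.
y = add(sub(mean_vector(column_a), mean_vector(column_b)), mean_vector(column_c))

# Eight neighbours of Y: uniform noise in [-0.25, 0.25] on every component.
neighbours = [[v + rng.uniform(-0.25, 0.25) for v in y] for _ in range(8)]
```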

Face vectors arithmetic

- A "turn" vector was created from four averaged samples of faces looking left vs. looking right.

- Adding this vector to random samples reliably transforms their pose.
