Fs2 + Effects

Streaming applications

About me

- Functional Programmer

- Scala & Haskell

- Occasional writer at

- GitHub repo:

Effects

`cats.effect.IO[A]`: a pure abstraction representing the intention to perform a side effect, where the result of that side effect may be obtained synchronously (via return) or asynchronously (via callback).

`trait Effect[F[_]] extends Async[F]`: a monad that can suspend side effects into the `F` context and that supports lazy and potentially asynchronous evaluation.
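Code can be written against such a type class instead of a concrete `IO`. It then asks only for the ability to suspend and sequence effects, which is the style of `acquireConnection[F[_] : Effect]` in the case study. Below is a minimal, dependency-free sketch of the idea. `Suspend` and `Thunk` are hypothetical stand-ins for `Effect` and `IO` and are not part of cats-effect:

```scala
import scala.collection.mutable.ListBuffer

// Hypothetical stand-in for IO: a computation that runs only when run() is called.
final case class Thunk[A](run: () => A) {
  def flatMap[B](f: A => Thunk[B]): Thunk[B] = Thunk(() => f(run()).run())
}

// Hypothetical, stripped-down cousin of Effect[F]: suspend a side effect, then sequence.
trait Suspend[F[_]] {
  def delay[A](a: => A): F[A]
  def flatMap[A, B](fa: F[A])(f: A => F[B]): F[B]
}

object Suspend {
  def apply[F[_]](implicit F: Suspend[F]): Suspend[F] = F

  implicit val thunkSuspend: Suspend[Thunk] = new Suspend[Thunk] {
    def delay[A](a: => A): Thunk[A] = Thunk(() => a)
    def flatMap[A, B](fa: Thunk[A])(f: A => Thunk[B]): Thunk[B] = fa.flatMap(f)
  }
}

object EffectDemo {
  val log = ListBuffer.empty[String]

  // Polymorphic in F: only the Suspend capability is required.
  def greet[F[_]: Suspend](name: String): F[Unit] =
    Suspend[F].flatMap(Suspend[F].delay { log += "Please enter your name:"; () }) { _ =>
      Suspend[F].delay { log += s"Hi $name!"; () }
    }

  // Building the program records nothing: the effects are only described.
  val program: Thunk[Unit] = greet[Thunk]("Alice")
}
```

Building `program` leaves `log` empty. Only calling `program.run()` appends the two lines, in order.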


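```scala
f(println("hi"), println("hi"))
```

```scala
val x = println("hi")
f(x, x)
```

These two programs are not equivalent. `println` runs as soon as it is evaluated, so the first prints "hi" twice. The second prints it once and then passes `()` to `f` twice. Wrapping each side effect in `IO` turns it into a value that only describes the effect:

```scala
import cats.effect.IO
import scala.io.StdIn

val program = for {
  _    <- IO { println("Please enter your name:") }
  name <- IO { StdIn.readLine() }
  _    <- IO { println(s"Hi $name!") }
} yield ()

// Nothing has run so far; unsafeRunSync() executes the described effects.
program.unsafeRunSync()
```

"If replacing expression x by its value produces the same behavior, then x is referentially transparent"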
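Here is a dependency-free sketch of that definition. It assumes a hypothetical `ToyIO` in place of cats-effect's `IO`, and a `putStrLn` that records lines in a buffer instead of printing them. With suspended effects, naming `putStrLn("hi")` with a `val` does not change what `f` does. With an eager effect, it does:

```scala
import scala.collection.mutable.ListBuffer

// Hypothetical toy IO (not cats.effect.IO): a description that runs only via unsafeRun().
final case class ToyIO[A](unsafeRun: () => A) {
  def flatMap[B](f: A => ToyIO[B]): ToyIO[B] = ToyIO(() => f(unsafeRun()).unsafeRun())
}

object RtDemo {
  val out = ListBuffer.empty[String]

  // Records the line instead of printing it, so the effect can be checked.
  def putStrLn(line: String): ToyIO[Unit] = ToyIO(() => { out += line; () })

  // f runs its first argument, then its second one.
  def f(a: ToyIO[Unit], b: ToyIO[Unit]): ToyIO[Unit] = a.flatMap(_ => b)

  def runAndCollect(io: ToyIO[Unit]): List[String] = {
    out.clear()
    io.unsafeRun()
    out.toList
  }

  // Suspended effects: naming the expression with a val changes nothing.
  val x = putStrLn("hi")
  val withVal: List[String] = runAndCollect(f(x, x))
  val inlined: List[String] = runAndCollect(f(putStrLn("hi"), putStrLn("hi")))

  // Eager effects: the val runs the effect once, the inlined version runs it twice.
  var eagerRuns = 0
  def sayHi(): Unit = eagerRuns += 1
  def g(a: Unit, b: Unit): Unit = ()
  val y = sayHi()
  g(y, y)
  val afterVal: Int = eagerRuns
  g(sayHi(), sayHi())
  val afterInlined: Int = eagerRuns
}
```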

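```scala
def putStrLn(line: String): IO[Unit] = IO { println(line) }

val x = putStrLn("hi")
// Both sides have the same behavior (an equivalence, not a runtime == check)
f(x, x) == f(putStrLn("hi"), putStrLn("hi"))
```

Fs2 concepts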

`Stream[F, O]`: represents a discrete stream of `O` values which may request evaluation of `F` effects.

`Pipe[F, I, O] = Stream[F, I] => Stream[F, O]`: just a streaming transformation!

`Sink[F, I] = Pipe[F, I, Unit]`: its sole purpose is to run effects.
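A `Pipe` is just a function between streams, so pipes compose like ordinary functions. The simplified sketch below drops the effect type `F` and models a stream as a `LazyList`. The `ToyStream`, `Pipe` and `Sink` aliases are hypothetical and are not fs2's definitions:

```scala
import scala.collection.mutable.ListBuffer

object PipeDemo {
  // Hypothetical simplification: drop the effect type F and model a stream as a LazyList.
  type ToyStream[O] = LazyList[O]
  type Pipe[I, O]   = ToyStream[I] => ToyStream[O]
  type Sink[I]      = Pipe[I, Unit]

  val onlyEven: Pipe[Int, Int] = _.filter(_ % 2 == 0)
  val doubled: Pipe[Int, Int]  = _.map(_ * 2)

  // Pipes are plain functions, so they compose much like a chain of `through` calls.
  val pipeline: Pipe[Int, Int] = onlyEven andThen doubled

  // A sink turns every element into (): it exists only for its side effect.
  val seen = ListBuffer.empty[Int]
  val record: Sink[Int] = _.map { i => seen += i; () }

  val results: List[Int] = pipeline(LazyList(1, 2, 3, 4)).toList
  // Forcing the sink's output stream is what actually runs its effects.
  val sinkOutput: List[Unit] = record(results.to(LazyList)).toList
}
```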

A bit of history

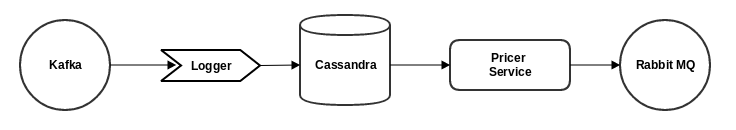

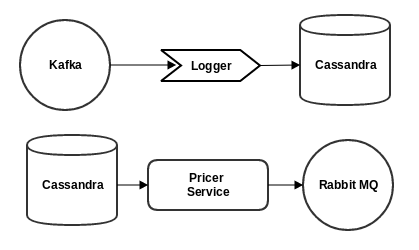

`Process[F, O]`, where `F[A] = scalaz.Task[A]`

Case Study

Order Generator

Pricer Service


The pricer flow runs two streams concurrently with `join(2)`. The first consumes orders, logs them and writes them to storage. The second reads the stored orders, prices them and publishes the results.

```scala
import cats.effect.IO
import com.gvolpe.fs2.streams.model._
import fs2.{Pipe, Sink, Stream}
import scala.concurrent.ExecutionContext

class PricerFlow() {

  def flow(consumer: Stream[IO, Order],
           logger: Sink[IO, Order],
           storage: OrderStorage,
           pricer: Pipe[IO, Order, Order],
           publisher: Sink[IO, Order])
          (implicit ec: ExecutionContext): Stream[IO, Unit] = {
    Stream(
      consumer observe logger to storage.write,
      storage.read through pricer to publisher
    ).join(2)
  }

}
```
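The sketch below is a sequential toy version of the same two flows over a `LazyList`, showing what `observe` and `to` contribute. It uses a hypothetical `Order` case class, a mutable queue in place of `OrderStorage` and a made-up pricing rule. Unlike the real flow, it runs the two flows one after the other instead of concurrently:

```scala
import scala.collection.mutable

object PricerFlowSketch {
  // Hypothetical stand-in for the case study's Order model.
  final case class Order(id: Long, total: Double)

  val logged    = mutable.ListBuffer.empty[Order] // plays the logger sink
  val storage   = mutable.Queue.empty[Order]      // plays OrderStorage
  val published = mutable.ListBuffer.empty[Order] // plays the publisher sink

  // Toy observe: hand each element to a side channel, then pass it on unchanged.
  def observe[A](in: LazyList[A])(side: A => Unit): LazyList[A] =
    in.map { a => side(a); a }

  // Hypothetical pricing rule: add a flat fee of 10.
  val pricer: Order => Order = o => o.copy(total = o.total + 10.0)

  val consumer = LazyList(Order(1L, 100.0), Order(2L, 250.0))

  // Flow 1: consumer observe logger to storage.write
  observe(consumer)(o => logged += o).foreach(o => storage.enqueue(o))

  // Flow 2: storage.read through pricer to publisher.
  // Run sequentially here; join(2) in the slides runs both flows concurrently.
  while (storage.nonEmpty) published += pricer(storage.dequeue())
}
```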

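The order generator zips the ids 1 to 9 with a tick every 2 seconds and interrupts the ticks after 11 seconds. It then turns each (id, tick) pair into an order with a random item:

```scala
import cats.effect.IO
import com.gvolpe.fs2.streams.model._
import fs2.{Pipe, Scheduler, Sink, Stream, time}
import scala.concurrent.ExecutionContext
import scala.concurrent.duration._
import scala.util.Random

class OrderGeneratorFlow()(implicit ec: ExecutionContext, R: Scheduler) {

  private def defaultOrderGen: Pipe[IO, Int, Order] = { orderIds =>
    val tickInterrupter = time.sleep[IO](11.seconds).map(_ => true)
    val orderTick = time.awakeEvery[IO](2.seconds).interruptWhen(tickInterrupter)
    (orderIds zip orderTick) flatMap { case (id, _) =>
      // Random is impure; evaluating this via Stream.eval(IO { ... }) would keep the pipe referentially transparent
      val itemId = Random.nextInt(500).toLong
      val itemPrice = Random.nextInt(10000).toDouble
      val newOrder = Order(id.toLong, List(Item(itemId, s"laptop-$id", itemPrice)))
      Stream.emit(newOrder)
    }
  }

  def flow(source: Sink[IO, Order],
           orderGen: Pipe[IO, Int, Order] = defaultOrderGen): Stream[IO, Unit] = {
    Stream.range(1, 10).covary[IO] through orderGen to source
  }

}
```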
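How many orders does the generator emit? The count below assumes that `awakeEvery` first fires one period after the stream starts, and it ignores scheduling jitter. Under those assumptions, ticks arrive at 2, 4, 6, 8 and 10 seconds, before the 11-second interrupt. So only 5 of the 9 ids become orders. A small simulation of that arithmetic, with a simulated clock in place of real time:

```scala
object OrderGenTiming {
  // Stream.range(1, 10) emits 1 to 9: the upper bound is exclusive.
  val orderIds: List[Int] = (1 until 10).toList

  // Simulated clock in seconds (assumption: no jitter, first tick after one full period).
  val tickPeriod  = 2  // awakeEvery[IO](2.seconds)
  val interruptAt = 11 // interruptWhen(time.sleep[IO](11.seconds) ...)
  val ticks: List[Int] =
    Iterator.iterate(tickPeriod)(_ + tickPeriod).takeWhile(_ < interruptAt).toList

  // zip pairs one id with one tick and stops at the shorter side.
  val emitted: List[(Int, Int)] = orderIds.zip(ticks)
}
```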

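Wiring it all together: a topic stands in for Kafka, and two bounded queues stand in for RabbitMQ and the database. The two flows are combined with `mergeHaltBoth`, which halts as soon as either side halts. Since the order generator stops after a few orders, the whole program ends with it.

```scala
import cats.effect.IO
import com.gvolpe.fs2.streams.model._
import fs2.{Stream, async}

implicit val R = fs2.Scheduler.fromFixedDaemonPool(2, "generator-scheduler")
implicit val S = scala.concurrent.ExecutionContext.Implicits.global

// logger, pricer and the broker/db classes are not shown on these slides
val pricerProgram = for {
  kafkaTopic   <- Stream.eval(async.topic[IO, Order](Order.Empty))
  rabbitQueue  <- Stream.eval(async.boundedQueue[IO, Order](100))
  dbQueue      <- Stream.eval(async.boundedQueue[IO, Order](100))
  kafkaBroker  = new OrderKafkaBroker(kafkaTopic)
  rabbitBroker = new OrderRabbitMqBroker(rabbitQueue)
  db           = new OrderDb(dbQueue)
  pricerFlow   = new PricerFlow().flow(kafkaBroker.consume, logger,
                   OrderStorage(db.read, db.persist),
                   pricer, rabbitBroker.produce)
  orderGenFlow = new OrderGeneratorFlow().flow(kafkaBroker.produce)
  program      <- pricerFlow mergeHaltBoth orderGenFlow
} yield program

pricerProgram.run.unsafeRunSync()
```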
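The program uses two kinds of in-memory channels: a topic for the Kafka side and bounded queues for RabbitMQ and the database. A topic delivers every message to every subscriber. A queue hands each element to a single consumer and has a fixed capacity. The toy model below uses hypothetical `ToyTopic` and `ToyBoundedQueue` classes, not the fs2 `async` API:

```scala
import scala.collection.mutable

object TopicVsQueue {
  // Hypothetical toy topic (not fs2's async.topic): every subscriber gets every message.
  final class ToyTopic[A] {
    private val inboxes = mutable.ListBuffer.empty[mutable.ListBuffer[A]]
    def subscribe(): mutable.ListBuffer[A] = {
      val inbox = mutable.ListBuffer.empty[A]
      inboxes += inbox
      inbox
    }
    def publish(a: A): Unit = inboxes.foreach(inbox => inbox += a)
  }

  // Hypothetical toy bounded queue (not fs2's async.boundedQueue): each element
  // is taken by exactly one consumer, and offers are rejected once it is full.
  final class ToyBoundedQueue[A](capacity: Int) {
    private val buffer = mutable.Queue.empty[A]
    def offer(a: A): Boolean =
      if (buffer.size < capacity) { buffer.enqueue(a); true } else false
    def take(): Option[A] = if (buffer.isEmpty) None else Some(buffer.dequeue())
  }

  val topic = new ToyTopic[Int]
  val subA  = topic.subscribe()
  val subB  = topic.subscribe()
  List(1, 2, 3).foreach(topic.publish)

  val queue = new ToyBoundedQueue[Int](2)
  val offers: List[Boolean] = List(1, 2, 3).map(queue.offer)
  val firstTake: Option[Int]  = queue.take()
  val secondTake: Option[Int] = queue.take()
  val thirdTake: Option[Int]  = queue.take()
}
```

This toy signals a full queue by returning `false`. A real bounded queue can instead make the producer wait until space frees up, which is how backpressure reaches the producer.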

From the Fs2 Rabbit library: the connection and the channel are acquired with `Stream.bracket`, so both are closed even when the stream fails.

```scala
import cats.effect.Effect
import com.rabbitmq.client.{Channel, Connection}
import fs2.Stream

// factory, log and asyncF are defined elsewhere in the library (not shown)
protected def acquireConnection[F[_] : Effect]: F[(Connection, Channel)] =
  Effect[F].delay {
    val conn = factory.newConnection()
    val channel = conn.createChannel()
    (conn, channel)
  }

/**
  * Creates a connection and a channel in a safe way using Stream.bracket.
  * In case of failure, the resources will be cleaned up properly.
  *
  * @return An effectful [[fs2.Stream]] of type [[Channel]]
  */
def createConnectionChannel[F[_] : Effect](): Stream[F, Channel] =
  Stream.bracket(acquireConnection[F])(
    cc => asyncF[F, Channel](cc._2),
    cc => Effect[F].delay {
      val (conn, channel) = cc
      log.info(s"Releasing connection: $conn previously acquired.")
      if (channel.isOpen) channel.close()
      if (conn.isOpen) conn.close()
    }
  )
```
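The guarantee that `Stream.bracket` provides can be illustrated without fs2: release runs after use, whether use succeeds or throws. The synchronous sketch below uses a hypothetical `bracket` helper built on `try`/`finally`. A hypothetical `Conn` resource takes the place of `(Connection, Channel)`:

```scala
import scala.collection.mutable.ListBuffer
import scala.util.Try

object BracketSketch {
  val events = ListBuffer.empty[String]

  // Simplified, synchronous acquire/use/release: release runs whether use succeeds or throws.
  // This mirrors the guarantee Stream.bracket gives for streams, not its implementation.
  def bracket[R, A](acquire: => R)(use: R => A)(release: R => Unit): A = {
    val r = acquire
    try use(r)
    finally release(r)
  }

  // Hypothetical resource standing in for the (Connection, Channel) pair.
  final case class Conn(name: String)

  def open(name: String): Conn = { events += s"acquire-$name"; Conn(name) }
  def close(c: Conn): Unit = events += s"release-${c.name}"

  val ok: Try[Int] = Try(bracket(open("c1"))(c => c.name.length)(close))
  val failed: Try[Int] =
    Try(bracket(open("c2"))(_ => throw new RuntimeException("boom"))(close))
}
```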

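JSON encoding and decoding as pipes in the Fs2 Rabbit library. Decoding keeps each message's `DeliveryTag` next to the parse result:

```scala
import cats.effect.Effect
import fs2.Pipe
import io.circe.{Decoder, Encoder, Error}
import io.circe.parser.decode
import io.circe.syntax._
import org.slf4j.LoggerFactory

// asyncF(body) is an alias for Stream.eval(F.delay(body))
// AmqpMessage, AmqpEnvelope and DeliveryTag come from the library's model (not shown)

private val log = LoggerFactory.getLogger(getClass)

def jsonEncode[F[_] : Effect, A : Encoder]: Pipe[F, AmqpMessage[A], AmqpMessage[String]] =
  streamMsg =>
    for {
      amqpMsg <- streamMsg
      json    <- asyncF[F, String](amqpMsg.payload.asJson.noSpaces)
    } yield AmqpMessage(json, amqpMsg.properties)

def jsonDecode[F[_] : Effect, A : Decoder]: Pipe[F, AmqpEnvelope, (Either[Error, A], DeliveryTag)] =
  streamMsg =>
    for {
      amqpMsg <- streamMsg
      parsed  <- asyncF[F, Either[Error, A]](decode[A](amqpMsg.payload))
      _       <- asyncF[F, Unit](log.debug(s"Parsed: $parsed"))
    } yield (parsed, amqpMsg.deliveryTag)
```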
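Keeping the `DeliveryTag` next to the parse result lets a later step decide, per message, whether to acknowledge or reject it with the broker. The dependency-free sketch below illustrates that idea. It uses hypothetical `Envelope`, `DeliveryTag` and outcome types, and a toy `Int` decoder in place of circe's `decode[A]`:

```scala
import scala.util.Try

object DecodeAndAck {
  // Hypothetical local types, loosely mirroring AmqpEnvelope and DeliveryTag.
  final case class DeliveryTag(value: Long)
  final case class Envelope(deliveryTag: DeliveryTag, payload: String)

  // Toy decoder in place of circe's decode[A]: an Int, or an error message.
  def decodeInt(s: String): Either[String, Int] =
    Try(s.trim.toInt).toOption.toRight(s"not a number: $s")

  // Same shape as jsonDecode: keep each delivery tag next to its parse result.
  def decodeAll(in: List[Envelope]): List[(Either[String, Int], DeliveryTag)] =
    in.map(env => (decodeInt(env.payload), env.deliveryTag))

  // Hypothetical downstream step: acknowledge successes, reject failures, by tag.
  sealed trait Outcome
  final case class Ack(tag: DeliveryTag) extends Outcome
  final case class Reject(tag: DeliveryTag) extends Outcome

  def outcome(result: (Either[String, Int], DeliveryTag)): Outcome = result match {
    case (Right(_), tag) => Ack(tag)
    case (Left(_), tag)  => Reject(tag)
  }

  val envelopes = List(Envelope(DeliveryTag(1L), "42"), Envelope(DeliveryTag(2L), "oops"))
  val outcomes: List[Outcome] = decodeAll(envelopes).map(outcome)
}
```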

Comparison

Monix vs Akka Stream vs Fs2

Resources

Questions?

Fs2 + Effects

By Gabriel Volpe
