The Storage Elephant in the Container Room

What you Need to Know About Containers & Persistence

@ryanwallner

ryan.wallner@clusterhq.com

IRC: wallnerryan (freenode -> #clusterhq)

"Stateless" Containers

- What is a stateless container?

- All containers have some "state": memory, sockets, etc.

- I like to think of stateless containers in the same sense that HTTP is defined as "stateless"

A stateless protocol does not require the server to retain session information or status about each communications partner across multiple requests. HTTP is stateless in this sense: once a request/response transaction ends, the server keeps no memory of the client.

Stateful Containers

- Maintains information after processes or interactions are complete.

- Can mean many things

- Databases, Message Queues, Cache, Logs ...
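A minimal shell sketch of the distinction, using hypothetical function names: the stateless handler answers from the request alone, while the stateful one depends on a counter it remembers in a file between calls.

```shell
#!/bin/sh
# Stateless handler (hypothetical): the response depends only on the
# request, so any replica on any host can answer it.
handle_stateless() {
  echo "hello, $1"
}

# Stateful handler (hypothetical): the response depends on data remembered
# from earlier requests, here a counter kept in the file passed as $1.
handle_stateful() {
  state_file=$1
  count=0
  if [ -f "$state_file" ]; then
    count=$(cat "$state_file")
  fi
  count=$((count + 1))
  echo "$count" > "$state_file"
  echo "request #$count"
}
```

If the counter file lives only in the container's own filesystem, removing or rescheduling the container resets it to zero. That file is exactly the kind of state a persistent volume has to carry.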

Containers should be portable, even when they have state.

Stateful services tend to scale vertically; stateless services scale horizontally

Ease of operational manageability

Flocker

- Distributed volume manager

- Is aware of data-volumes and what host they live on

- Can move data-volumes alongside containers as an atomic unit

- Choice of backend support - ZFS, EBS, OpenStack Cinder

- Working with industry partners - EMC, NetApp, ConvergeIO, Hedvig, VMware, etc.
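To make "aware of data-volumes and what host they live on" concrete, here is a toy shell model with hypothetical names. It is not Flocker's implementation: it only records which host owns each named dataset and updates that record in a single step when the dataset moves.

```shell
#!/bin/sh
# Toy model (hypothetical, not Flocker's implementation): a registry that
# records which host each named dataset currently lives on.
# Each dataset is a file under $REGISTRY_DIR whose content is the host name.
REGISTRY_DIR=${REGISTRY_DIR:-/tmp/dataset-registry}

dataset_create() {   # dataset_create <name> <host>
  mkdir -p "$REGISTRY_DIR"
  echo "$2" > "$REGISTRY_DIR/$1"
}

dataset_host() {     # dataset_host <name>  -> prints the owning host
  cat "$REGISTRY_DIR/$1"
}

dataset_move() {     # dataset_move <name> <new-host>
  # Write the new owner to a temp file, then rename it over the old record.
  # A rename within one filesystem is atomic, so a reader sees either the
  # old host or the new one, never a half-written record.
  tmp="$REGISTRY_DIR/.$1.tmp"
  echo "$2" > "$tmp"
  mv "$tmp" "$REGISTRY_DIR/$1"
}
```

A real volume manager also has to detach and reattach the storage itself; the sketch only shows the ownership bookkeeping.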

Flocker Details

- Control Service: REST API endpoint

- Dataset Agent: controls volume provisioning

- Container Agent: restarts containers

- CLI

- UI

- Docker Plugin
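This shell sketch shows how a client might ask the Control Service's REST API for a new dataset. It assumes Flocker's v1 dataset endpoint (`POST /v1/configuration/datasets` on port 4523) with a JSON body giving the primary node UUID, a maximum size in bytes and a name in metadata. It also assumes TLS client certificates named `cluster.crt`, `user.crt` and `user.key`. The host name, certificate names and helper functions are assumptions to check against your own cluster and the Flocker API reference.

```shell
#!/bin/sh
# Sketch: create a dataset through the Flocker Control Service REST API.
# Assumptions: API on port 4523, client certs cluster.crt / user.crt /
# user.key in $CERT_DIR, hypothetical host name below.
# Set DRY_RUN=1 to print the request instead of sending it.
CONTROL_HOST=${CONTROL_HOST:-control.example.com}
CERT_DIR=${CERT_DIR:-.}

dataset_request_body() {   # dataset_request_body <node-uuid> <size-bytes> <name>
  printf '{"primary": "%s", "maximum_size": %s, "metadata": {"name": "%s"}}\n' \
    "$1" "$2" "$3"
}

create_dataset() {         # create_dataset <node-uuid> <size-bytes> <name>
  body=$(dataset_request_body "$1" "$2" "$3")
  url="https://$CONTROL_HOST:4523/v1/configuration/datasets"
  if [ -n "$DRY_RUN" ]; then
    echo "POST $url $body"
    return 0
  fi
  curl --cacert "$CERT_DIR/cluster.crt" \
       --cert "$CERT_DIR/user.crt" --key "$CERT_DIR/user.key" \
       -X POST -H "Content-Type: application/json" \
       -d "$body" "$url"
}
```

The Dataset Agents then converge on that desired configuration, provisioning the volume on the chosen node.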

User Requests Volume for Container

- Or through integrations with Mesos, Kubernetes, CoreOS, Compose, or Swarm

docker: "--volume-driver=flocker"

flocker: "volumes-cli create"

Storage Driver

Flocker requests that storage be automatically provisioned through its configured backend.

A persistent storage volume is successfully created and ready to be given to a container application.

Persistent storage is mounted inside the container so the application can store information that will remain after the container's lifecycle ends.
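The three steps above can be sketched with the Docker CLI's Flocker volume driver (Docker 1.8+ syntax). The volume name `demo`, the `busybox` image and the `run` helper are illustrative assumptions. With `DRY_RUN=1` the script prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch of the storage-driver flow with --volume-driver=flocker.
# Volume "demo" and image "busybox" are illustrative.
# Set DRY_RUN=1 to print the commands instead of running them.
run() {
  if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi
}

persistence_demo() {
  # Docker asks the flocker driver for volume "demo"; Flocker provisions it
  # through its configured backend if it does not exist yet, and the volume
  # is mounted at /data where the container writes a file.
  run docker run --rm --volume-driver=flocker -v demo:/data busybox \
    sh -c 'echo hello > /data/greeting'
  # The first container is gone (--rm), but a new container mounting the
  # same volume still sees the file.
  run docker run --rm --volume-driver=flocker -v demo:/data busybox \
    cat /data/greeting
}
```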

What Happens When Containers Move?

The container fails, is scheduled to move, and migrates.

A new container is started on a new host, and the volume is moved to that host, so when the container starts it has the data it expected.
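A shell sketch of that move with classic Docker Swarm, which reads a `constraint:node==<name>` environment variable as a scheduling filter. The container, volume and node names are illustrative, and the `run` helper is hypothetical. The old container is removed without deleting its named volume, and its replacement asks for the same Flocker volume on the target node.

```shell
#!/bin/sh
# Sketch (classic Swarm + Flocker): reschedule a stateful container onto
# another node while keeping its named Flocker volume.
# Set DRY_RUN=1 to print the commands instead of running them.
run() {
  if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi
}

move_container() {  # move_container <name> <volume:/path> <image> <target-node>
  # Remove the old container; "docker rm" does not delete named volumes.
  run docker rm -f "$1"
  # Start a replacement pinned to the target node with the same volume name.
  # Flocker moves the dataset to that node (e.g. detach/attach on EBS).
  run docker run -d --name "$1" -e "constraint:node==$4" \
    --volume-driver=flocker -v "$2" "$3"
}
```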

Storage Implementations

- Different backends offer different service levels and can add to the value of the applications

- Flocker can and will take advantage of different aspects of storage, such as storage groups, I/O limits, and storage type (SSD, disk, flash)

- Hackday Profiles

Orchestration

- Flocker is designed to work with the other tools you are using to build and run your distributed applications. Thanks to the Flocker plugin for Docker, Flocker can be used with popular container managers and orchestration tools such as Docker Engine, Docker Swarm, and Docker Compose.

Consuming Storage Containers

Linking

docker run --link <name or id>:<alias> <image>

Expose Ports

docker run -p 3306:3306 <image>
or
docker run -P <image>

Directly Using Storage

docker run --volume-driver=flocker -v myCache:/data/nginx/cache <image>

http {
    ...
    proxy_cache_path /data/nginx/cache keys_zone=one:10m;

    server {
        proxy_cache one;

        location / {
            proxy_pass http://localhost:8000;
        }
    }
}

Intro to demo

https://github.com/wallnerryan/swarm-compose-flocker-aws-ebs

Installation

# Very easy to get started: http://doc-dev.clusterhq.com/labs/installer.html#labs-installer
uft-flocker-sample-files
uft-flocker-get-nodes --ubuntu-aws
uft-flocker-install cluster.yml && \
uft-flocker-config cluster.yml && \
uft-flocker-plugin-install cluster.yml

Getting Swarm Going

# Prep-work
NODE1=<public ip for node1>
NODE2=<public ip for node2>
NODE3=<public ip for node3>
MASTER=<public ip for master>
PNODE1=<private ip for node1>
PNODE2=<private ip for node2>
PNODE3=<private ip for node3>
KEY=/Path/to/your/aws/ec2/user.pem
chmod 0600 "$KEY"

# Create a cluster token on the master and keep it for the join commands
CLUSTERKEY=$(ssh -i "$KEY" root@$MASTER docker run --rm swarm create)

# Joining the slaves
ssh -i "$KEY" root@$NODE1 docker run -d swarm join --addr=$PNODE1:2375 token://$CLUSTERKEY
ssh -i "$KEY" root@$NODE2 docker run -d swarm join --addr=$PNODE2:2375 token://$CLUSTERKEY
ssh -i "$KEY" root@$NODE3 docker run -d swarm join --addr=$PNODE3:2375 token://$CLUSTERKEY

# Starting the Master (port 2375 in the container is published as 2357 on the host)
ssh -i "$KEY" root@$MASTER docker run -d -p 2357:2375 swarm manage token://$CLUSTERKEY
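The three join commands can also be written as one loop. This sketch assumes `KEY` and `CLUSTERKEY` are set as in the prep-work and passes each node as a hypothetical `"<public ip>,<private ip>"` pair. The `run` helper is also hypothetical: it prints the commands when `DRY_RUN=1`.

```shell
#!/bin/sh
# Sketch: join any number of nodes to the Swarm cluster in one loop.
# Each argument is "<public ip>,<private ip>"; KEY and CLUSTERKEY must be set.
# Set DRY_RUN=1 to print the commands instead of running them.
run() {
  if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi
}

join_nodes() {  # join_nodes "<pub>,<priv>" ...
  for pair in "$@"; do
    public=${pair%%,*}    # text before the first comma
    private=${pair#*,}    # text after the first comma
    run ssh -i "$KEY" "root@$public" \
      docker run -d swarm join "--addr=$private:2375" "token://$CLUSTERKEY"
  done
}
```

Usage: `join_nodes "$NODE1,$PNODE1" "$NODE2,$PNODE2" "$NODE3,$PNODE3"`.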

Running your application with Flocker

# Point docker tools at your swarm master in AWS
export DOCKER_HOST=tcp://<your_swarm_master_public_ip>:2357

# Start the app
./start_or_moveback.sh

# (Add some data)

# When ready, move your application
./move.sh
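The Compose file below pins the database container to a node with a `constraint:node==` entry, so moving the application comes down to changing that node name. This is a hypothetical sketch of one way a move step could do it; the demo repository's `move.sh` may work differently. The function name and file paths are assumptions.

```shell
#!/bin/sh
# Hypothetical sketch (the repo's move.sh may differ): point the database's
# Swarm node constraint in a Compose file at a different node.
# Assumes the constraint value is the last thing on its line.
set_db_node() {  # set_db_node <compose-file> <node-name>
  # Replace whatever follows "constraint:node==" with the new node name.
  # Write to a temp file and rename it, avoiding sed -i portability issues.
  sed "s/constraint:node==.*/constraint:node==$2/" "$1" > "$1.tmp" &&
    mv "$1.tmp" "$1"
}

# Example (with DOCKER_HOST pointing at the Swarm master):
#   set_db_node docker-compose.yml node2
#   docker-compose up -d
# Compose recreates the changed service on node2, and Flocker moves the
# "todolist" volume there.
```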

Compose

web:

image: wallnerryan/todolist

environment:

- DATABASE_IP=<Private IP Your Database Container will be scheduled to>

- DATABASE=mysql

ports:

- 8080:8080

mysql:

image: wallnerryan/mysql

volume_driver: flocker

volumes:

- 'todolist:/var/lib/mysql'

environment:

- constraint:node==<Node Name Your Database Container will be scheduled to>

ports:

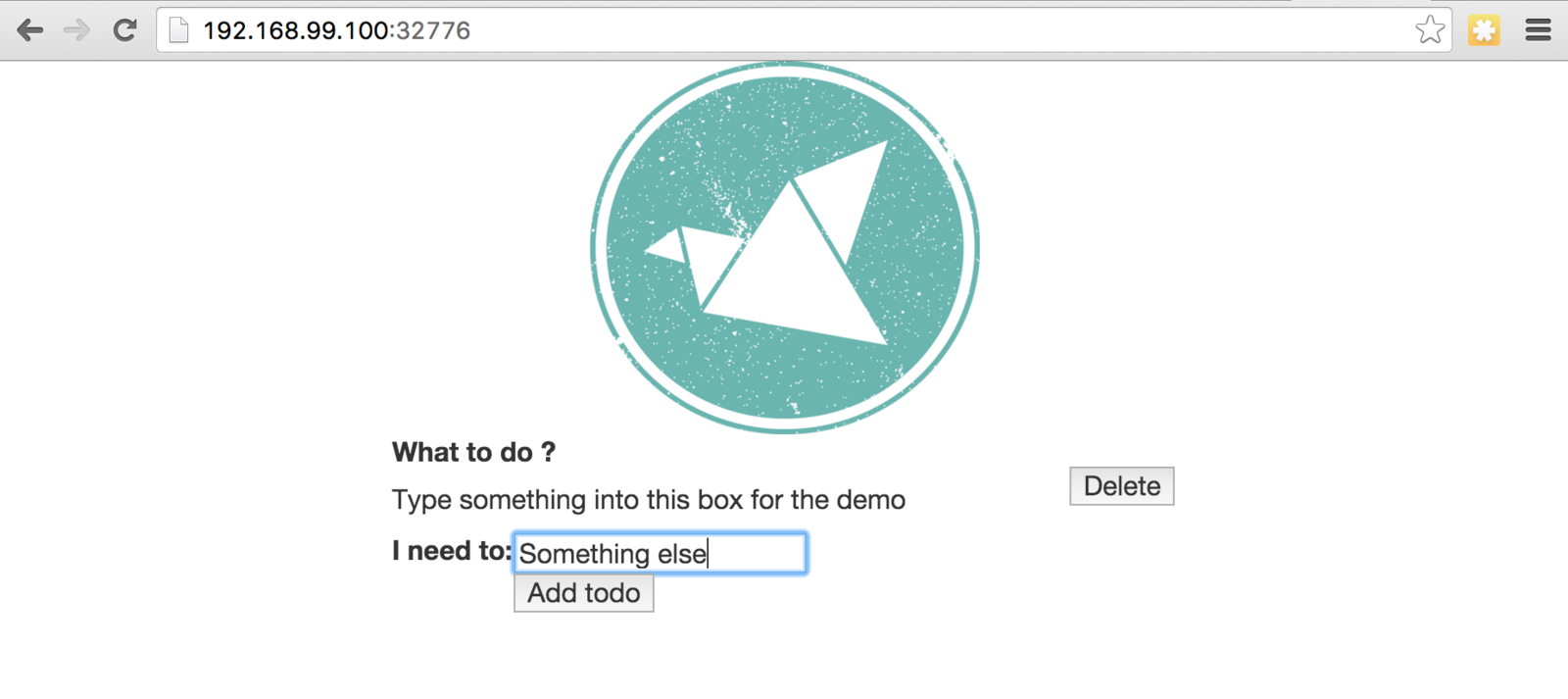

- 3306:3306Adding some data

Questions?

- @RyanWallner

- ryan.wallner@clusterhq.com

- Boston, MA

- IRC: wallnerryan (#clusterhq)

containerdaysnyc-2015

By Ryan Wallner
