AWS Kinesis

Guillaume Simard

- Coveo

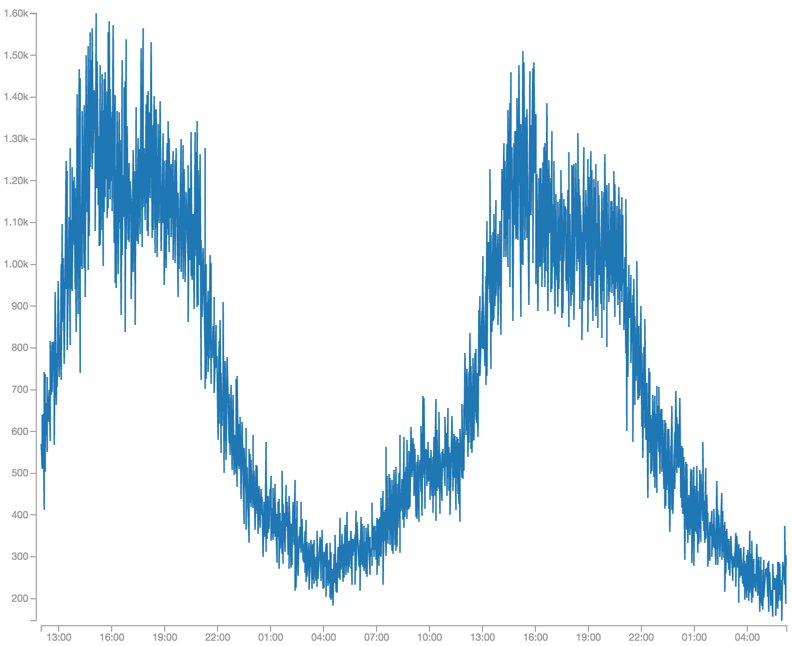

- Big data

Record application usage

122M

235M

1M

much data

such throughput

so wow

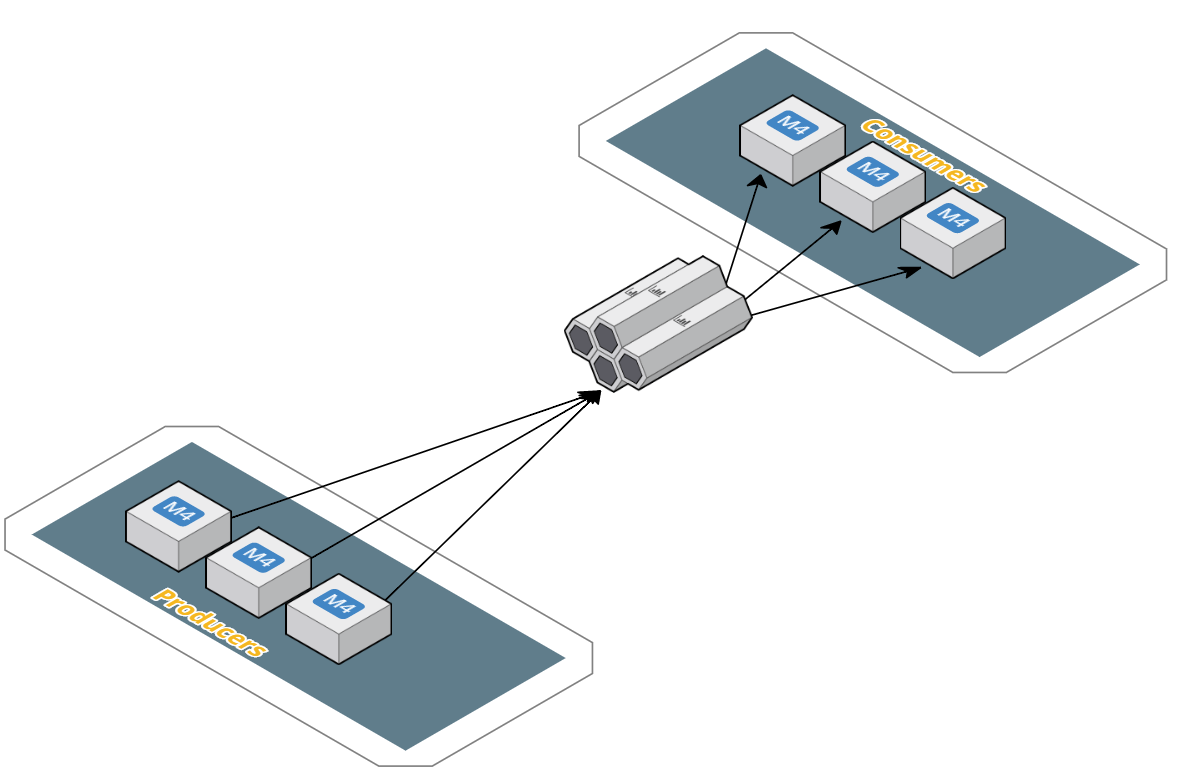

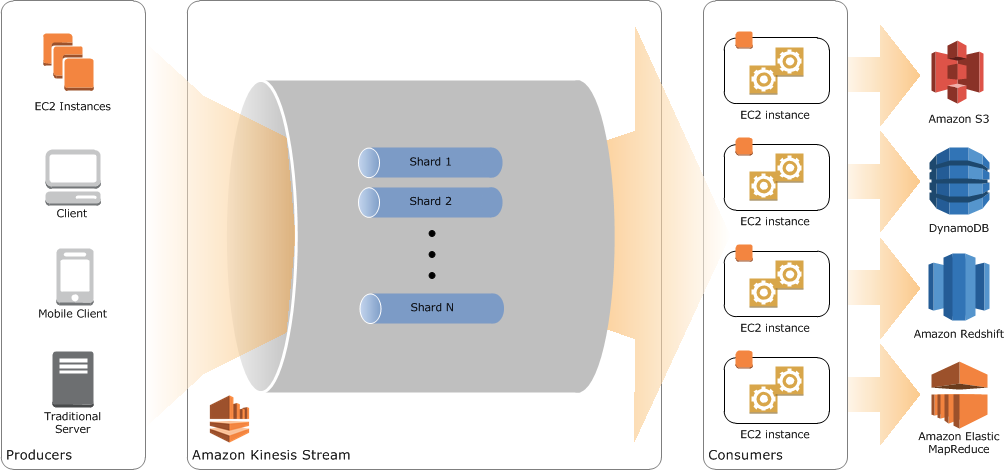

AWS Kinesis Streams

real-time streaming data

Amazon Kinesis is a platform for streaming data on AWS, offering powerful services to make it easy to load and analyze streaming data, and also providing the ability for you to build custom streaming data applications for specialized needs.

Core concepts

Record

    {
        "Records": [
            {
                "Data": "XzxkYXRhPl8w",
                "PartitionKey": "partitionKey1"
            },
            {
                "Data": "AbceddeRFfg12asd",
                "PartitionKey": "partitionKey1"
            },
            {
                "Data": "KFpcd98*7nd1",
                "PartitionKey": "partitionKey3"
            }
        ],
        "StreamName": "myStream"
    }
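The `Data` values in the request above travel base64-encoded on the wire; the SDK normally handles the encoding for you. As a small sketch, assuming the payload is UTF-8 text (the slide does not say what the bytes contain), the first value can be decoded with the Java standard library. `RecordDataDecoder` is an illustrative name, not an SDK class.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

class RecordDataDecoder {
    /** Decodes a base64 "Data" value, assuming the bytes are UTF-8 text. */
    static String decode(String base64Data) {
        byte[] raw = Base64.getDecoder().decode(base64Data);
        return new String(raw, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // First "Data" value from the sample request
        System.out.println(decode("XzxkYXRhPl8w")); // prints "_<data>_0"
    }
}
```

Real payloads are arbitrary bytes, so a consumer should only turn them into a string when the producer is known to have written text.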

Shard
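A record's partition key decides which shard receives it: Kinesis hashes the key with MD5 into a 128-bit integer and routes the record to the shard whose hash key range contains that value. The sketch below illustrates the idea under one loud assumption: the shards split the hash space evenly. Real ranges come from the stream description and can be uneven after splits or merges, and the exact boundaries may differ from this approximation. `ShardRouter` and its methods are illustrative names, not part of the AWS SDK.

```java
import java.math.BigInteger;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

class ShardRouter {
    /** MD5 of the partition key, read as an unsigned 128-bit integer. */
    static BigInteger hashKey(String partitionKey) {
        try {
            byte[] digest = MessageDigest.getInstance("MD5")
                    .digest(partitionKey.getBytes(StandardCharsets.UTF_8));
            return new BigInteger(1, digest); // signum 1 = treat bytes as unsigned
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 not available", e);
        }
    }

    /**
     * Index of the shard for a key, ASSUMING shardCount shards that divide
     * the range [0, 2^128) evenly: floor(hash * shardCount / 2^128).
     */
    static int shardIndex(String partitionKey, int shardCount) {
        return hashKey(partitionKey)
                .multiply(BigInteger.valueOf(shardCount))
                .shiftRight(128)
                .intValueExact();
    }

    public static void main(String[] args) {
        for (String key : new String[] {"partitionKey1", "partitionKey3"}) {
            System.out.println(key + " -> shard " + shardIndex(key, 4));
        }
    }
}
```

Records with the same partition key always hash to the same value, so they stay on one shard and keep their relative order.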

Shard iterator

    {
        "ShardIterator": "AAAAAAAAAAETYyAYzd665+8e0X7JTsASDM/Hr2rSwc0X2qz93iuA3udrjTH+ikQvpQk/1ZcMMLzRdAesqwBGPnsthzU0/CBlM/U8/8oEqGwX3pKw0XyeDNRAAZyXBo3MqkQtCpXhr942BRTjvWKhFz7OmCb2Ncfr8Tl2cBktooi6kJhr+djN5WYkB38Rr3akRgCl9qaU4dY="
    }

Data retention

24 to 168 hours

Producers

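    import com.amazonaws.services.kinesis.AmazonKinesisClient;
    import com.amazonaws.services.kinesis.model.PutRecordsRequest;
    import com.amazonaws.services.kinesis.model.PutRecordsRequestEntry;
    import com.amazonaws.services.kinesis.model.PutRecordsResult;
    import java.nio.ByteBuffer;
    import java.nio.charset.StandardCharsets;
    import java.util.ArrayList;
    import java.util.List;

    // credentialsProvider is an AWSCredentialsProvider created elsewhere
    AmazonKinesisClient amazonKinesisClient = new AmazonKinesisClient(credentialsProvider);
    PutRecordsRequest putRecordsRequest = new PutRecordsRequest();
    putRecordsRequest.setStreamName("DataStream");

    // Build a batch of 100 records, each with its own partition key
    List<PutRecordsRequestEntry> putRecordsRequestEntryList = new ArrayList<>();
    for (int i = 0; i < 100; i++) {
        PutRecordsRequestEntry putRecordsRequestEntry = new PutRecordsRequestEntry();
        putRecordsRequestEntry.setData(ByteBuffer.wrap(String.valueOf(i).getBytes(StandardCharsets.UTF_8)));
        putRecordsRequestEntry.setPartitionKey(String.format("partitionKey-%d", i));
        putRecordsRequestEntryList.add(putRecordsRequestEntry);
    }
    putRecordsRequest.setRecords(putRecordsRequestEntryList);

    // Send the whole batch in one call
    PutRecordsResult putRecordsResult = amazonKinesisClient.putRecords(putRecordsRequest);
    System.out.println("Put result: " + putRecordsResult);

Consumers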

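    import com.amazonaws.services.kinesis.model.GetRecordsRequest;
    import com.amazonaws.services.kinesis.model.GetRecordsResult;
    import com.amazonaws.services.kinesis.model.GetShardIteratorRequest;
    import com.amazonaws.services.kinesis.model.GetShardIteratorResult;
    import com.amazonaws.services.kinesis.model.Record;
    import java.util.List;

    // Create shard iterator (TRIM_HORIZON starts at the oldest record still retained)
    GetShardIteratorRequest getShardIteratorRequest = new GetShardIteratorRequest();
    getShardIteratorRequest.setStreamName(myStreamName);
    getShardIteratorRequest.setShardId(shard.getShardId());
    getShardIteratorRequest.setShardIteratorType("TRIM_HORIZON");
    GetShardIteratorResult getShardIteratorResult = client.getShardIterator(getShardIteratorRequest);
    String shardIterator = getShardIteratorResult.getShardIterator();

    // Get the records (at most 25 per call)
    GetRecordsRequest getRecordsRequest = new GetRecordsRequest();
    getRecordsRequest.setShardIterator(shardIterator);
    getRecordsRequest.setLimit(25);
    GetRecordsResult getRecordsResult = client.getRecords(getRecordsRequest);
    List<Record> records = getRecordsResult.getRecords();

    // Get the next shard iterator, to be passed to the next getRecords call
    shardIterator = getRecordsResult.getNextShardIterator();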
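The consumer snippet reads a single batch; in practice the next shard iterator is fed back into another read, over and over. The sketch below shows that loop without the AWS SDK: `Batch`, `RecordSource`, and `inMemory` are hypothetical stand-ins for the `GetRecords` response, the client, and a shard, invented here so the example runs on its own.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class ShardPoller {
    /** Hypothetical stand-in for one GetRecords response. */
    static final class Batch {
        final List<String> records;
        final String nextShardIterator; // null when nothing is left to read
        Batch(List<String> records, String nextShardIterator) {
            this.records = records;
            this.nextShardIterator = nextShardIterator;
        }
    }

    /** Hypothetical stand-in for the client's getRecords call. */
    interface RecordSource {
        Batch getRecords(String shardIterator, int limit);
    }

    /** Follows the iterator chain until it ends or maxCalls reads were made. */
    static List<String> drain(RecordSource source, String shardIterator, int limit, int maxCalls) {
        List<String> seen = new ArrayList<>();
        int calls = 0;
        while (shardIterator != null && calls < maxCalls) {
            Batch batch = source.getRecords(shardIterator, limit);
            seen.addAll(batch.records);
            shardIterator = batch.nextShardIterator; // always continue from the new iterator
            calls++;
        }
        return seen;
    }

    /** In-memory fake shard: the "iterator" is just an index into the list. */
    static RecordSource inMemory(List<String> data) {
        return (iterator, limit) -> {
            int from = Integer.parseInt(iterator);
            int to = Math.min(from + limit, data.size());
            String next = to < data.size() ? String.valueOf(to) : null;
            return new Batch(new ArrayList<>(data.subList(from, to)), next);
        };
    }

    public static void main(String[] args) {
        RecordSource shard = inMemory(Arrays.asList("a", "b", "c", "d", "e"));
        System.out.println(drain(shard, "0", 2, 10)); // prints "[a, b, c, d, e]"
    }
}
```

Against a real stream, an open shard keeps returning a next iterator even when a batch is empty, so a production loop would pause between calls rather than stop; the `maxCalls` bound here only keeps the sketch finite.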

KCL

    #!/usr/bin/env ruby

    require 'aws/kclrb'

    class SampleRecordProcessor < Aws::KCLrb::RecordProcessorBase
      def init_processor(shard_id)
        # initialize
      end

      def process_records(records, checkpointer)
        # process batch of records
      end

      def shutdown(checkpointer, reason)
        # cleanup
      end
    end

    if __FILE__ == $0
      # Start the main processing loop
      record_processor = SampleRecordProcessor.new
      driver = Aws::KCLrb::KCLProcess.new(record_processor)
      driver.run
    end

KCL

- Connects to the stream

- Enumerates the shards

- Coordinates shard associations with other workers (if any)

- Instantiates a record processor for every shard it manages

- Pulls data records from the stream

- Pushes the records to the corresponding record processor

- Checkpoints processed records

- Balances shard-worker associations when the worker instance count changes

- Balances shard-worker associations when shards are split or merged

Pricing

| Item | Price |
| --- | --- |
| Shard Hour (1MB/second ingress, 2MB/second egress) | $0.015 |
| PUT Payload Units, per 1,000,000 units | $0.014 |
| Extended Data Retention (Up to 7 days), per Shard Hour | $0.020 |

For $1.68 per day ($52.14 per month), a stream with 4 shards can take in up to 4MB of data per second, or about 337GB of data per day.

Extending the stream's data retention period from 24 hours to up to 7 days costs an extra $59.52 per month.

https://aws.amazon.com/kinesis/streams/pricing/
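The figures on this slide can be reproduced from the unit prices in the table. The sketch below is a back-of-the-envelope check, not an official calculator: the rate of 200 PUT payload units per second and the 31-day month are assumptions chosen because they make the numbers match, and the slide states neither. `KinesisPricing` is an illustrative name.

```java
class KinesisPricing {
    // Unit prices from the pricing table (USD)
    static final double SHARD_HOUR = 0.015;
    static final double PUT_UNITS_PER_MILLION = 0.014;
    static final double EXTENDED_RETENTION_SHARD_HOUR = 0.020;

    /** Daily cost of the shards plus the PUT payload units written in a day. */
    static double dailyCost(int shards, long putUnitsPerSecond) {
        double shardCost = shards * 24 * SHARD_HOUR;
        double putUnitsPerDay = putUnitsPerSecond * 86_400.0;
        double putCost = putUnitsPerDay / 1_000_000 * PUT_UNITS_PER_MILLION;
        return shardCost + putCost;
    }

    /** Extra cost of extended retention for every shard over the given number of days. */
    static double extendedRetentionCost(int shards, int days) {
        return shards * 24 * days * EXTENDED_RETENTION_SHARD_HOUR;
    }

    /** Converts a sustained rate in MB per second to GB per day (1 GB = 1024 MB). */
    static double gigabytesPerDay(double megabytesPerSecond) {
        return megabytesPerSecond * 86_400 / 1024;
    }

    public static void main(String[] args) {
        // ASSUMPTIONS: 200 PUT payload units per second, 31-day month
        double daily = dailyCost(4, 200);
        System.out.printf("per day:   $%.2f%n", daily);
        System.out.printf("per month: $%.2f%n", daily * 31);
        System.out.printf("retention: $%.2f%n", extendedRetentionCost(4, 31));
        System.out.printf("volume:    %.1f GB/day%n", gigabytesPerDay(4));
    }
}
```

Under those assumptions the shards account for $1.44 of the daily cost and the PUT payload units for roughly $0.24, so the shard count dominates the bill.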

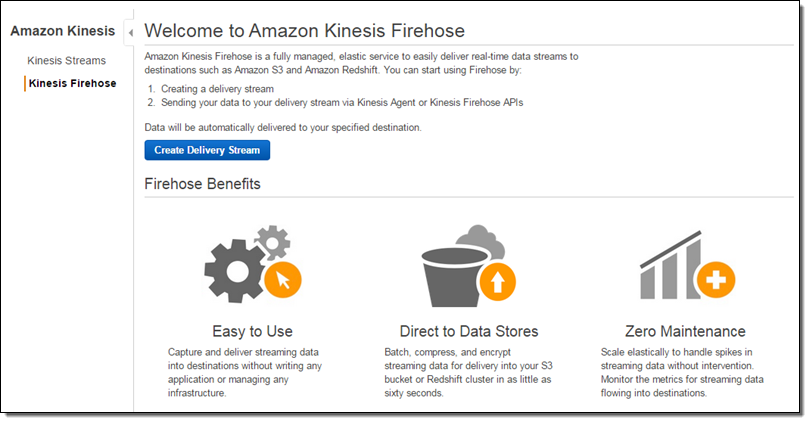

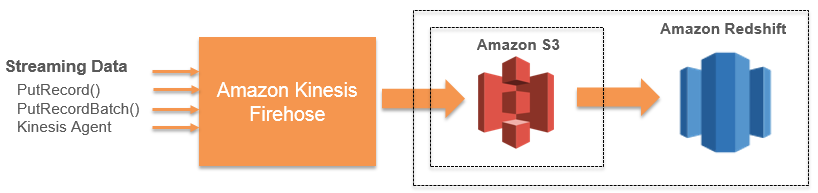

Kinesis Firehose

Easy integration with AWS

- S3

- Redshift

- DynamoDB

- API Gateway

Fully managed

TL;DR

Kinesis is pretty cool

careers.coveo.com

Questions?
