OTF

Scenario 1

progress data management

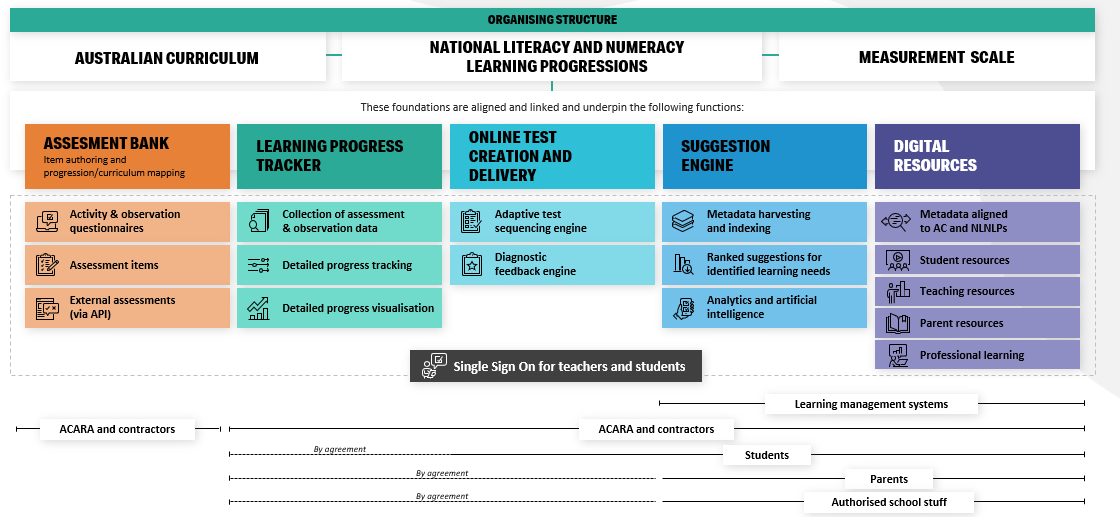

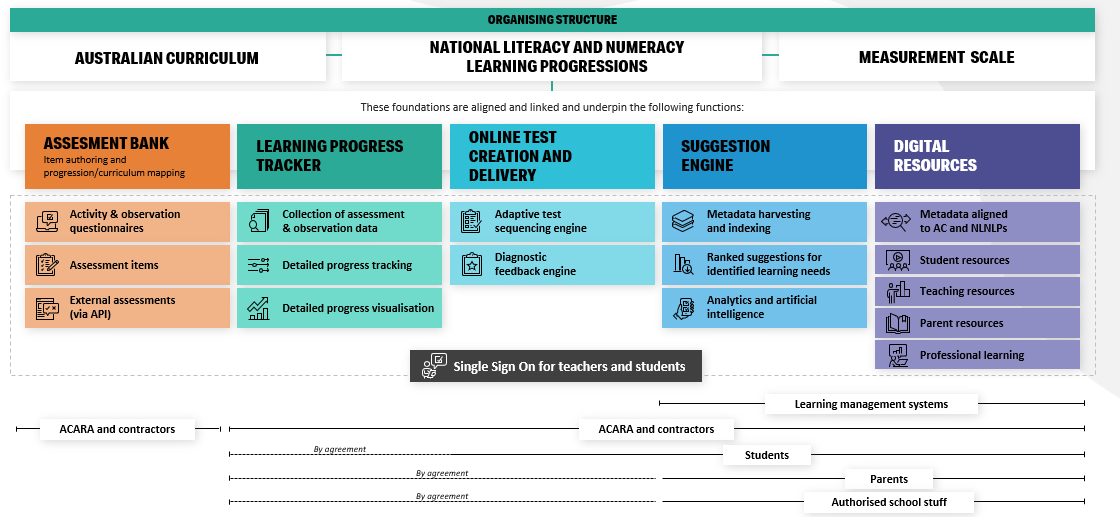

Online Formative Assessment

Capabilities


- Version 3 National Learning Progressions (all areas)
  - feedback
  - signposts / AC linking
  - embedding
- Formative assessment data (input)
  - transformation
  - alignment
  - scaling
- Progress data (input/output)
  - Standardised, aligned records
- Next Steps support (output)
  - targeted resources (student, teacher, group)
  - professional development resources
- External systems integration (output)
  - Progress data & next step resources made available to existing teaching and learning tools

the scenario challenge

- multiple assessments from different sources
- multiple input types: measurement, summary, observation...
- highly variable data encoding

the aim: collections of student & cohort data that keep their intrinsic variation, but which are consistent and comparable, and keep all provenance and decision-making attributes

data-flow

- pre-map/classify: identify data source, pre-specify capabilities/constraints. One record per event.
- align: align record data content to NLPs.
- level: determine 'scaled' result for record.
- match (1) / match (2): link record to establishment data / assessment schedule.
- store: store consolidated, comparable, comprehensive record.

building blocks

(diagram: pre-map/classify → align → level → match (1) → match (2) → store)

key:

- standalone application
- un-orchestrated flow
- persistent stream
- service
- orchestrated flow
- 'enhanced' process

topology

(diagram: pre-map/classify → align → level → match (1) → match (2) → store)

key:

- binary app(s)
- application action
- nats-streaming topic
- web-services
- benthos workflow

stages require n3 (nias3) contexts

topology

all stages monitored

topology

(diagram: pre-map/classify → store)

data arriving at pre-map/classify is structured in multiple formats; it needs to be structured in a common format at this stage.

brief interlude on OTF-MESSAGE

(diagram: pre-map/classify → store)

- inbound: 'random' data
- outbound: otf-message

otf-message

- vendor system id
- student id
- level_method
- level
- nlp_alignment
- align_method
- align_input
- level_input

the core triple

we need:

- timestamps
- provenance & decision-making data
- original data for audit & re-analysis

otf-message

- vendor system id
- student id
- level_method
- level
- nlp_alignment
- align_method
- align_input
- level_input
- timestamps

the initial read should set only the minimum data, based on config; all other manipulations should be part of the workflow, externalised & configurable.


we will also need to append:

- establishment data (from matching):
  - school
  - teaching-group
  - staff
  - timetable etc.
  - obs. session id
- reference data:
  - scales used
  - rules used
  - service versions
  - aggregate members

so that in future all steps can be audited, and all decisions and assumptions can be changed if the data is reprocessed.
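A minimal sketch of the otf-message envelope as a Python dataclass, assuming snake_case field names derived from the slide labels (for example `vendor_system_id`). The `stamp`, `append_context` and `to_json` helpers are hypothetical; they only illustrate keeping the original data untouched while timestamps, establishment data and reference data accumulate for later audit or reprocessing.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, Optional


@dataclass
class OtfMessage:
    # Core fields from the otf-message slides (snake_case names are assumptions).
    vendor_system_id: str
    student_id: str
    level_method: str
    align_method: str
    align_input: Any = None
    level_input: Any = None
    nlp_alignment: Optional[Dict[str, str]] = None
    level: Optional[str] = None
    # The untouched third-party record, kept for audit & re-analysis.
    original_data: Dict[str, Any] = field(default_factory=dict)
    # Stage name -> ISO-8601 UTC time at which that stage processed the message.
    timestamps: Dict[str, str] = field(default_factory=dict)
    # Appended later: school, teaching-group, staff, timetable, obs. session id...
    establishment_data: Dict[str, Any] = field(default_factory=dict)
    # Appended later: scales used, rules used, service versions, aggregate members...
    reference_data: Dict[str, Any] = field(default_factory=dict)

    def stamp(self, stage: str) -> None:
        """Record when a pipeline stage processed this message."""
        self.timestamps[stage] = datetime.now(timezone.utc).isoformat()

    def append_context(self, establishment: Dict[str, Any],
                       reference: Dict[str, Any], stage: str = "match") -> None:
        """Attach matching/reference data; original_data is never modified."""
        self.establishment_data.update(establishment)
        self.reference_data.update(reference)
        self.stamp(stage)

    def to_json(self) -> str:
        """Serialise the whole envelope, e.g. for publishing to a stream."""
        return json.dumps(asdict(self))
```

Keeping `original_data` separate from the derived fields is what allows a record to be re-run later with different rules or scales.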


data ingest

pre-map / classify

- read 3rd-party data

- transform to json

- add identifiers

- add business flags

- encode in standard record format

- publish to ingest topic

2 levels of capability:

- configured (starting point)
- intelligent (future)

configured pre-map

Reads data from a known location; the location and configuration determine the data type.

e.g. with the app ./datareader:

VENDOR_ID="MathsPathway" \
FORMAT="csv" \
LEVEL_METHOD="prescribed" \
ALIGN_METHOD="map" \
NATS_URL="localhost:4222" \
PUBLISH_TOPIC="otf.ingest" \
READER_FOLDER="/me/data/MathsPathway" \
./datareader

we pass all the necessary decision-making params in the environment and start the relatively simple reader, which ingests records & sends the results to a stream.
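One way the reader could load and check those environment parameters is sketched below in Python. The variable names come from the command above; treating every one as mandatory, and rejecting methods outside the align_methods and level_methods listed later in this deck, are assumptions about how the "pre-specify capabilities/constraints" step might be enforced.

```python
import os
from dataclasses import dataclass
from typing import Mapping

# Environment variables used in the ./datareader example, in field order.
REQUIRED = ("VENDOR_ID", "FORMAT", "LEVEL_METHOD", "ALIGN_METHOD",
            "NATS_URL", "PUBLISH_TOPIC", "READER_FOLDER")
# Accepted values (assumed to be exactly the methods named in the deck).
ALIGN_METHODS = {"map", "prescribed", "infer"}
LEVEL_METHODS = {"prescribed", "scaled", "rules"}


@dataclass(frozen=True)
class ReaderConfig:
    vendor_id: str
    data_format: str
    level_method: str
    align_method: str
    nats_url: str
    publish_topic: str
    reader_folder: str


def load_config(env: Mapping[str, str] = os.environ) -> ReaderConfig:
    """Build a ReaderConfig from the environment, failing fast on bad input."""
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise ValueError("missing settings: " + ", ".join(missing))
    cfg = ReaderConfig(*(env[name] for name in REQUIRED))
    if cfg.align_method not in ALIGN_METHODS:
        raise ValueError(f"unknown ALIGN_METHOD: {cfg.align_method}")
    if cfg.level_method not in LEVEL_METHODS:
        raise ValueError(f"unknown LEVEL_METHOD: {cfg.level_method}")
    return cfg
```

Failing at start-up, rather than mid-stream, keeps misconfigured readers from publishing records that downstream services cannot align or level.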

intelligent classifier

reads data from any location, converts it to json and passes it to a classifier (à la n3), which determines the source and applies rules to reformat the output.

Flexible, elegant, and overkill for now.
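If the intelligent route were ever taken, the "determines source" step could start as simply as matching a record's field names against known vendor signatures. This Python sketch assumes hypothetical signatures (the deck does not describe any vendor's actual format) and leaves the reformatting rules out.

```python
from typing import Dict, Mapping, Optional, Set

# Hypothetical field-name signatures per vendor; real formats are not specified.
SIGNATURES: Dict[str, Set[str]] = {
    "MathsPathway": {"student_id", "nlp_id", "status"},
    "BrightPath": {"student_id", "scale", "score"},
}


def classify_source(record: Mapping[str, object],
                    signatures: Dict[str, Set[str]] = SIGNATURES) -> Optional[str]:
    """Return the vendor whose signature fields are all present in the record.

    When several signatures match, the most specific (largest) one wins;
    None means the source is unknown and the record needs manual handling.
    """
    keys = set(record)
    matches = [vendor for vendor, sig in signatures.items() if sig <= keys]
    if not matches:
        return None
    return max(matches, key=lambda vendor: len(signatures[vendor]))
```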

./datareader needs to:

- read csv and convert to json
- wrap the original message inside an otf-message structure
- set other key otf-message fields
- publish the resulting message to the nats stream
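The four ./datareader steps can be sketched in Python as below. The envelope field names, the `student_id` column default, and the `publish` callable (standing in for whatever NATS Streaming client the real reader uses, which is not shown) are all assumptions.

```python
import csv
import io
import json
from datetime import datetime, timezone
from typing import Callable, Dict, Iterator


def read_csv(text: str) -> Iterator[Dict[str, str]]:
    """Turn each CSV row into a JSON-compatible dict: one record per event."""
    yield from csv.DictReader(io.StringIO(text))


def wrap(row: Dict[str, str], vendor_id: str, level_method: str,
         align_method: str, student_field: str = "student_id") -> dict:
    """Wrap the original row in an otf-message and set the key fields."""
    return {
        "vendor_system_id": vendor_id,
        "student_id": row.get(student_field),
        "level_method": level_method,
        "align_method": align_method,
        "timestamps": {"ingest": datetime.now(timezone.utc).isoformat()},
        "original_data": row,  # untouched, for audit & re-analysis
    }


def run(text: str, publish: Callable[[str, bytes], None],
        topic: str = "otf.ingest", **settings: str) -> int:
    """Read, wrap and publish every row; returns the number of messages sent."""
    sent = 0
    for row in read_csv(text):
        publish(topic, json.dumps(wrap(row, **settings)).encode("utf-8"))
        sent += 1
    return sent
```

In a real reader, `text` would come from files under READER_FOLDER and `publish` from the connection configured by NATS_URL.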


align & level

(diagram: align → level → store)

- align: determines where the record places the student on the NLPs
- level: assigns a position on a common scale (probably mastery-based)

align needs to happen before level because, in the case of aggregated or inferred levelling, the alignment is an input parameter.

the workflow pulls messages from the stream populated by the ingest readers.

the align service

takes a contextual parameter from the original record; this parameter will be vendor-specific and related to the align_method appropriate for that vendor.

/otf-services/align
{
  "vendor": "MathsPathway",
  "method": "map",
  "value": "16272cd5-8ce2-4c76-8dad-4fa4ee5628b1"
}

/otf-services/align
{
  "vendor": "BrightPath",
  "method": "infer",
  "value": "May vary sentence structure for effect."
}

align_methods:

- map: vendor or system map between known identifiers
- prescribed: uses known NLP identifier
- infer: text statement/descriptor

returns full { G: E: S: D: I: } locator block

the align service Facade

- service request → align
- align uses the appropriate alignment method to find the NLP descriptor (e.g. CrT9):
  - map: map/lookup service
  - infer: text classifier service
- align then uses the NLP graph (nias3 context) to find the full location
- service response: General Capability, Element, Sub-Element, Development Level, Indicator
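A minimal Python sketch of the facade's dispatch on align_method, assuming in-memory stand-ins for the map/lookup service, the text classifier service and the NLP graph. All locator values are placeholders, and pairing the example UUID and indicator text from the requests above with CrT9 is an assumption made only so those requests resolve.

```python
from typing import Dict, Optional, Tuple

# Hypothetical stand-ins; none of these values are real NLP data.
VENDOR_MAP: Dict[Tuple[str, str], str] = {
    ("MathsPathway", "16272cd5-8ce2-4c76-8dad-4fa4ee5628b1"): "CrT9",
}
TEXT_INDEX: Dict[str, str] = {
    "may vary sentence structure for effect.": "CrT9",
}
NLP_GRAPH: Dict[str, Dict[str, str]] = {
    "CrT9": {"G": "<general capability>", "E": "<element>",
             "S": "<sub-element>", "D": "<development level>",
             "I": "<indicator>"},
}


def classify_text(text: str) -> Optional[str]:
    """Placeholder for the text classifier service (exact-match lookup only)."""
    return TEXT_INDEX.get(text.strip().lower())


def align(request: Dict[str, str]) -> Dict[str, str]:
    """Find the NLP descriptor for a request, then its full G/E/S/D/I locator."""
    method, value = request["method"], request["value"]
    if method == "prescribed":      # value is already an NLP identifier
        descriptor: Optional[str] = value
    elif method == "map":           # vendor identifier -> NLP identifier
        descriptor = VENDOR_MAP.get((request["vendor"], value))
    elif method == "infer":         # free-text statement/descriptor
        descriptor = classify_text(value)
    else:
        raise ValueError(f"unknown align_method: {method}")
    if descriptor is None or descriptor not in NLP_GRAPH:
        raise LookupError(f"no NLP location found for {value!r}")
    return dict(NLP_GRAPH[descriptor])
```

Whatever the method, every request ends in the same graph lookup, so the response always has the same locator shape.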

the level service

takes a contextual parameter from the original record; this is not necessarily vendor-specific and is determined by the level_method indicator.

/otf-services/level
{
  "vendor": "MathsPathway",
  "method": "prescribed",
  "value": "mastery"
}

/otf-services/level
{
  "vendor": "BrightPath",
  "method": "scaled",
  "value": {
    "scaleid": "Narrative",
    "type": "NAPLAN",
    "score": 645
  }
}

/otf-services/level
{
  "vendor": "SPA",
  "method": "rules",
  "value": {
    "perIndicator": 3,
    "indicatorPcnt": 40
  }
}

level_methods:

- prescribed: issued by 3rd party
- scaled: uses known mappable scale such as NAPLAN
- rules: e.g. no. of observations of an indicator and % coverage of indicators in a development level

returns scale value: e.g. 'mastery'
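A sketch of the level service's dispatch for the prescribed and scaled methods, in Python. The cut-point table that equates a known scale with the NLP scale is hypothetical: the deck gives no equating data, and the 600 threshold and "not yet" label are invented for illustration. Rules-based requests are returned as errors here because they need the aggregation described on the level facade slide.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical equating table: (scale type, scale id) -> bands, highest first.
CUT_POINTS: Dict[Tuple[str, str], List[Tuple[float, str]]] = {
    ("NAPLAN", "Narrative"): [(600.0, "mastery"), (0.0, "not yet")],
}


def level(request: Dict[str, Any]) -> Tuple[str, Any]:
    """Return ("level", value) or ("error", reason)."""
    method, value = request["method"], request["value"]
    if method == "prescribed":       # issued by the 3rd party: no action needed
        return ("level", value)
    if method == "scaled":           # equate a known scale to the NLP scale
        bands = CUT_POINTS.get((value["type"], value["scaleid"]))
        if bands is None:
            return ("error", f"unknown scale {value['type']}/{value['scaleid']}")
        for threshold, label in bands:
            if value["score"] >= threshold:
                return ("level", label)
        return ("error", "score below lowest band")
    return ("error", f"level_method not handled here: {method}")
```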

the Level service Facade

- service request → level
- prescribed: requests need no action, as they explicitly specify the level.
- scaled: equate between known scales & the NLP scale, using the NLP graph (nias3 context).
- rules: derive the level from e.g. observations: (n) observations per indicator × %age of indicators per development level. Aggregates return a value only when the rule threshold is reached.
- service response: level value | accepted | error
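The rules method can be sketched as a small aggregator: it counts observations per student and indicator, and returns a development level only once enough of that level's indicators have reached the per-indicator count, answering "accepted" until then. This Python version assumes the set of indicators in each development level is supplied up front (in the deck's design it would presumably come from the NLP graph) and reuses the perIndicator: 3 / indicatorPcnt: 40 values from the SPA request.

```python
from typing import Dict, Optional, Set, Tuple


class RulesLeveller:
    """Rule: a development level is reached when at least `indicator_pcnt`
    percent of its indicators have `per_indicator` or more observations."""

    def __init__(self, indicators_by_level: Dict[str, Set[str]],
                 per_indicator: int, indicator_pcnt: float) -> None:
        self.indicators_by_level = indicators_by_level
        self.per_indicator = per_indicator
        self.indicator_pcnt = indicator_pcnt
        self.counts: Dict[Tuple[str, str], int] = {}  # (student, indicator) -> n

    def observe(self, student: str, dev_level: str,
                indicator: str) -> Tuple[str, Optional[str]]:
        key = (student, indicator)
        self.counts[key] = self.counts.get(key, 0) + 1
        indicators = self.indicators_by_level[dev_level]
        met = sum(1 for i in indicators
                  if self.counts.get((student, i), 0) >= self.per_indicator)
        if 100.0 * met / len(indicators) >= self.indicator_pcnt:
            return ("level", dev_level)   # threshold reached
        return ("accepted", None)         # stored, no level yet
```

With five indicators in a level, two of them need three observations each before the level is returned.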


align & level

at the end of this workflow, all successful records are passed on to the next persistent stream, all unsuccessful records should have been passed to a DLQ (dead-letter queue), and all traffic is monitored and traced.
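The routing rule at the end of the workflow (successes onward, failures to the DLQ) is sketched below as plain Python rather than as a benthos configuration. The stage functions and the `emit` and `dead_letter` callables are hypothetical stand-ins for the align/level services and the two streams. Failed messages are dead-lettered in their original form, with the failing stage named, so they can be reprocessed.

```python
from typing import Callable, Iterable, List

Message = dict
Stage = Callable[[Message], Message]


def run_workflow(messages: Iterable[Message], stages: List[Stage],
                 emit: Callable[[Message], None],
                 dead_letter: Callable[[Message, str], None]) -> None:
    """Apply the stages in order to each message.

    Messages that pass every stage go to `emit` (the next persistent stream);
    any exception sends the unmodified input to `dead_letter` (the DLQ).
    """
    for original in messages:
        current = original
        stage_name = "<none>"
        try:
            for stage in stages:
                stage_name = getattr(stage, "__name__", repr(stage))
                current = stage(current)
        except Exception as exc:  # any stage failure is dead-lettered
            dead_letter(original, f"{stage_name}: {exc}")
        else:
            emit(current)
```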


matching


- match (1): links student NLP results to establishment data: school, teaching-group, timetable etc.
- match (2): links to LPOFA managed sessions and the AC (Australian Curriculum)

the workflow pulls messages from the stream populated by the align/level services.

match service (1)

takes a contextual identifier from the original record (typically the student id) and locates all establishment data related to that student.

/otf-services/match
{
  "student_id": "16272cd5-8ce2-4c76-8dad-4fa4ee5628b1"
}

/otf-services/match
{
  "student_id": "John Doe"
}

uses a nias3 traversal query; returns:

- student local identifier(s)
- school identifier(s)
- teaching-group details
- timetable details
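To show the shape of the traversal, here is a breadth-first walk over a small in-memory graph in Python, standing in for the nias3 traversal query (whose actual query syntax is not shown here). The node types and identifiers are hypothetical; the result groups everything reachable from the student by type, which is roughly what an establishment_data block needs.

```python
from collections import deque
from typing import Dict, List, Set, Tuple

Node = Tuple[str, str]  # (node type, identifier)

# Hypothetical establishment graph standing in for nias3 data.
GRAPH: Dict[Node, List[Node]] = {
    ("student", "s1"): [("school", "sch1"), ("teaching_group", "tg7")],
    ("teaching_group", "tg7"): [("staff", "t3"), ("timetable", "tt-mon-p2")],
}


def establishment_data(graph: Dict[Node, List[Node]], student_id: str,
                       max_depth: int = 3) -> Dict[str, Set[str]]:
    """Collect every node reachable from the student, grouped by node type."""
    start: Node = ("student", student_id)
    found: Dict[str, Set[str]] = {}
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth >= max_depth:
            continue
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                found.setdefault(nxt[0], set()).add(nxt[1])
                queue.append((nxt, depth + 1))
    return found
```

The depth limit keeps a densely linked graph from pulling in unrelated students via shared schools or groups.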

match service (2)

takes a contextual identifier from the original record, such as an indicator or level, and locates all reference data related to that indicator.

uses a nias3 traversal query; returns:

- Australian Curriculum links
- LPOFA session links
- etc.

/otf-services/match
{
  "descriptor_value": "CrT9"
}

/otf-services/match
{
  "descriptor_value": "May vary sentence structure for effect."
}
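A sketch of the direct-versus-inferred decision for match service (2), in Python. The reference table holds placeholders rather than real Australian Curriculum or LPOFA links, and `infer` is a hypothetical stand-in for the text classifier service. Returning the match type alongside the data is an assumption, in keeping with the deck's aim of auditable decisions.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical reference data keyed by NLP descriptor (placeholder values).
REFERENCE: Dict[str, Dict[str, List[str]]] = {
    "CrT9": {"australian_curriculum": ["<AC link>"],
             "lpofa_sessions": ["<LPOFA session link>"]},
}


def match_reference(descriptor_value: str,
                    infer: Callable[[str], Optional[str]]) -> dict:
    """Attach reference data by direct reference if possible, else by inference.

    The match type is returned too, so the decision can be audited later.
    """
    if descriptor_value in REFERENCE:
        descriptor: Optional[str] = descriptor_value
        how = "direct"
    else:
        descriptor = infer(descriptor_value)
        how = "inferred"
    if descriptor is None or descriptor not in REFERENCE:
        return {"descriptor": None, "match_type": "none", "reference_data": {}}
    return {"descriptor": descriptor, "match_type": how,
            "reference_data": REFERENCE[descriptor]}
```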

the match service Facade

- service request: student identifier, GESDI identifier, question/indicator text
- est. data: uses the student id to locate school, staff, teaching-groups, timetable etc. (nias3 hits data)
- ref. data: uses direct references (lookup service) or inferred references (text classifier service) to attach contextual reference data such as curriculum, from the nias3 reference data context (Australian Curriculum, LPOFA sessions etc.)
- service response: establishment_data block, reference_data block


storage

STORE is a proxy service in Alpha, as progress-tracker will not be delivered in this phase.

Store will be a nias3 context for saving & retrieving student progress records, with standard n3 GraphQL exposure of those records.

the workflow pulls messages from the stream populated by the align/level services, or by the match services if available.

what gets stored...

- otf-message
  - vendor system id
  - student id
  - level_method
  - level
  - nlp_alignment
  - align_method
  - align_input
  - level_input
  - timestamps
- otf-result
- otf-metadata
- original_data block
- establishment_data block
- reference_data block


monitoring & tracing


- OpenTracing in workflows (benthos)

- Prometheus monitoring for nats streaming and benthos

- monitoring endpoints enabled in nats

- visualised in Grafana

OTF

Scenario 1

progress data management

fin

...for now...

OTF-PDM

By matt_farmer
