Caching half a billion user transactions

Priyatam Mudivarti

Principal Engineer, Architect

Level Money Platform, Capital One

Level App + platform

- 1 million downloads
- iOS, Android, Web (on-boarding only)
- 8GB of daily cache
- A lot of data
- 32k Clojure LOC, ~1k Cljs LOC
- 3.5k Python LOC for tests
- ~400 million user transactions

A user transaction is a single amount (credit or debit) from your bank, credit card, etc.

Programs exhibit spatial and temporal locality. Application caches focus on temporal locality: if you access data x at time t, you are likely to access x again at a nearby time t ± delta.

[Figure: a cache at Level, accessed at times t1 through tn]

- Naming
- Eviction
- Invalidation

INVALIDATION

There must be a reliable way to be notified that the information held by a cache needs to be discarded.

Who do I tell?

The same user requests the same information multiple times, or multiple users request the same information.

EVICTION

How long do I exist?

Most algorithms you have heard of (LRU, LFU, FIFO, ...) are about eviction.

Decouple them

EVICTION & INVALIDATION

Why are they coupled?

Eviction may not exist without invalidation.*

Invalidation carries the ability to evict: it exists as a function of eviction.

Memcache knows how to evict stale objects. Let it do its job.

The question is not at what time a function is called, but when invalidation requests arrive relative to that time.

*Unless the cache runs out of memory.

Naming: immutable cache keys

{cache-name}-{uid}-{cache-version}-{logical-timestamp}

Auto-invalidation comes for free: whenever we bump the cache-version or the logical timestamp, the key changes, so entries under the old key are simply never read again.
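A minimal sketch of that key scheme, assuming the four parts are joined with hyphens; `cache-key` is a hypothetical helper, not the platform's own `core/stringify-key`. It shows why bumping either counter acts as an invalidation: the new key simply has no entry yet.

```clojure
(require '[clojure.string :as str])

;; Hypothetical helper for {cache-name}-{uid}-{cache-version}-{logical-timestamp}
(defn cache-key [cache-name uid cache-version logical-timestamp]
  (str/join "-" [cache-name uid cache-version logical-timestamp]))

(def before (cache-key "suggested-new-funds" 42 7 1))

;; Bumping the version (new compute semantics) or the logical timestamp
;; (new data) yields a different key; nothing has to be deleted.
(def after-version-bump (cache-key "suggested-new-funds" 42 8 1))
(def after-ts-bump      (cache-key "suggested-new-funds" 42 7 2))
```

The old entries stay in Memcache until its own eviction (LRU or TTL) reclaims them.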

Chapters

- Naming
- Eviction
- Invalidation
- local.cache
- remote.cache
- generational.cache
- Level

Application cache

Level's application cache is a distributed generational cache, built on Memcached and core.cache. Each cache is defined by:

- Name: a description of what we’re caching
- Dependencies: other caches it relies on (hierarchical)
- Compute: a function invoked to fill the cache
- Serialize/deserialize fns: convert cached values to and from bytes for storage
- Version: increments when the “compute” semantics change, to prevent reading data computed by the old function

Level cache

(def level-cache
  {:name "suggested-new-funds"
   :version 7
   :dependencies #{:suggested-budget-update}
   :compute (fn [uid now computed]
              (bf/make
               bf/funds-holder
               {:funds (filter (complement :fund-id)
                               (:funds (:suggested-budget-update computed)))}))
   :serialize bf/pb->bytebuffer
   :deserialize #(bf/decode bf/funds-holder %)})
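To see how the fields fit together, here is a minimal sketch with a hypothetical `toy-cache` that uses the same keys but swaps Protocol Buffers for EDN strings (`pr-str` and `clojure.edn/read-string`). `fill` is likewise a hypothetical driver, not the platform's `recalculate!`: it runs `:compute` with already-computed dependency values and round-trips the result the way a remote cache would.

```clojure
(require '[clojure.edn :as edn])

;; Hypothetical cache definition with the same keys as level-cache,
;; using EDN strings instead of Protocol Buffers for (de)serialization.
(def toy-cache
  {:name "suggested-new-funds"
   :version 7
   :dependencies #{:suggested-budget-update}
   :compute (fn [uid now computed]
              ;; keep only the suggested funds that have no :fund-id yet
              {:funds (vec (remove :fund-id
                                   (:funds (:suggested-budget-update computed))))})
   :serialize pr-str
   :deserialize edn/read-string})

(defn fill
  "Runs :compute with the dependency values, then round-trips the result
  through :serialize and :deserialize."
  [cache uid now computed]
  (let [value ((:compute cache) uid now computed)]
    ((:deserialize cache) ((:serialize cache) value))))

(def result
  (fill toy-cache 42 0
        {:suggested-budget-update {:funds [{:fund-id 1 :name "rent"}
                                           {:name "vacation"}]}}))
```

Because compute and (de)serialization are separate functions, either can change independently; only a change in compute semantics needs a `:version` bump.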

Our application cache

- Users' transactions
- Funds
- Predicted transactions
- Balance projection (derived from predicted transactions)
- Suggested funds & buckets (pattern detection)
- Buckets, including matched transactions
- Budgets, synthetic blobs like Funds & Spendable

[Figure: cache dependency graph with nodes texans, base funds, monthly texans projection, all funds, suggested budget update, insights, texans projection, transactions projection, balance projection, suggested new funds, budget snapshot, suggested funds]

Level cache: dependencies

(def cache-order [:base-funds
                  :raw-insight-rows
                  :stripped-texans
                  :multi-month-texans-projection
                  :texans-projection
                  :transactions-projection
                  :full-funds
                  :balance-projection
                  :suggested-budget-update
                  :suggested-funds
                  :suggested-new-funds
                  :insights
                  :cards
                  :budget-snapshot])

The dependency graph is a DAG, flattened into an ordered list.

No branches.

;; the tail of cache-order, starting at :full-funds
(def cache-order [:full-funds
                  :balance-projection
                  :suggested-budget-update
                  :suggested-funds
                  :suggested-new-funds
                  :insights
                  :cards
                  :budget-snapshot])

Invalidate full funds?

Invalidation is simple: invalidate it and the rest of the list.
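Because `cache-order` lists every cache after the caches it depends on, anything that can depend on a given cache comes after it. A minimal sketch of "invalidate the rest" under that assumption; `caches-to-invalidate` is a hypothetical name. It is deliberately conservative: it also invalidates later caches that do not actually depend on the one being invalidated.

```clojure
(def cache-order [:base-funds :raw-insight-rows :stripped-texans
                  :multi-month-texans-projection :texans-projection
                  :transactions-projection :full-funds :balance-projection
                  :suggested-budget-update :suggested-funds :suggested-new-funds
                  :insights :cards :budget-snapshot])

(defn caches-to-invalidate
  "Hypothetical helper: the named cache plus every cache after it in order."
  [order cache-name]
  (drop-while #(not= % cache-name) order))
```

For `:full-funds` this yields exactly the tail listed above, from `:full-funds` through `:budget-snapshot`.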

Hierarchical cache.

Caches can have as many dependencies as you want.

For example, to predict a user's future transactions we pass in their current transactions: the future-transactions cache depends on the current-transactions cache, and conceptually queries it.

Dependency loops are a compile-time error.

On dependencies

(def cache-offsets
  ;; position of each cache in cache-order; a dependency must come earlier
  (zipmap cache-order (range)))

(defn validate
  "Validates cache config and dependencies."
  [cachez]
  (when-not (= (set cache-order) (set (keys cachez)))
    (throw (Exception. (str "Inconsistent cache config: " cache-order " and " (keys cachez)))))
  (doseq [cache-name cache-order]
    (doseq [dependency (:dependencies (cachez cache-name))]
      (when-not (cachez dependency)
        (throw (Exception. (str "Inconsistent cache config: Unrecognized dependency "
                                dependency " for " cache-name))))
      ;; every dependency must be computed before its dependent cache,
      ;; which also rules out dependency loops
      (when-not (> (cache-offsets cache-name) (cache-offsets dependency))
        (throw (Exception. (str "Dependency " dependency
                                " is calculated after dependent cache " cache-name)))))))
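`validate` checks a hand-written order. As an alternative, sketched here rather than taken from the platform, the order could be derived from the `:dependencies` sets with a depth-first topological sort that rejects loops as soon as the config is loaded. `topo-order` and the sample graphs in the tests are hypothetical.

```clojure
(defn topo-order
  "Orders caches so each comes after all of its dependencies (depth-first
  search). Throws ex-info on a dependency loop.
  `deps` maps cache-name -> set of dependency names."
  [deps]
  (letfn [(visit [[done :as acc] node path]
            (cond
              ;; node is already on the current DFS path: we found a loop
              (contains? path node)
              (throw (ex-info (str "Dependency loop at " node) {:node node}))

              ;; already placed in the order
              (contains? done node)
              acc

              :else
              (let [[done' order'] (reduce (fn [acc' dep] (visit acc' dep (conj path node)))
                                           acc
                                           (sort (get deps node)))]
                [(conj done' node) (conj order' node)])))]
    ;; sorting keeps the output deterministic; acc is [done-set order-vector]
    (second (reduce (fn [acc node] (visit acc node #{}))
                    [#{} []]
                    (sort (keys deps))))))
```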

Level cache

[Figure: Level cache architecture: API, Queues and cache logic on the platform, alongside DynamoDB]

- Query returns nil? Recompute.
- Incrementing the logical timestamp means there won't be anything in Memcache under the new key, so we get a cache miss.
- Queues ensure the cache is "filled"; invalidate every 24 hrs.
- Atomically query the logical timestamps.
- Logical timestamps start at 1 and are atomically incremented to invalidate the cache.
- An immutable key, tied to how values in the cache are produced: {cache-name}-{uid}-{cache-version}-{logical-timestamp}. This prevents race conditions, maybe.
- A queue-backed service gets a message whenever we invalidate the cache, and refills the cache.
- Each key is also associated with a “generation” (“user-logical-timestamps”). To invalidate, we simply increment the cache generation. Anyone still using an old generation still has access to it.
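The generation scheme can be sketched with two atoms standing in for the logical-timestamp store and Memcache (both hypothetical stand-ins, as are the function names). Invalidation only increments a counter; the old entry stays readable under its old key until eviction removes it.

```clojure
;; Hypothetical in-memory stand-ins: `logical-timestamps` for the per-user
;; generations, `store` for Memcache.
(def logical-timestamps (atom {}))
(def store (atom {}))

(defn generation [cache-name uid]
  ;; logical timestamps start at 1
  (get @logical-timestamps [cache-name uid] 1))

(defn invalidate!
  "Atomically bumps the generation; nothing is deleted from the store."
  [cache-name uid]
  (swap! logical-timestamps update [cache-name uid] (fnil inc 1)))

(defn gen-key [cache-name uid version]
  [cache-name uid version (generation cache-name uid)])

(defn lookup-or-compute!
  "Reads the current generation's entry, computing and storing it on a miss.
  The check-then-store is not atomic; good enough for a single-threaded sketch."
  [cache-name uid version compute]
  (let [k (gen-key cache-name uid version)]
    (if-let [v (get @store k)]
      v
      (let [v (compute)]
        (swap! store assoc k v)
        v))))
```

After `(invalidate! "funds" 42)`, the next lookup misses and recomputes under generation 2, while a reader still holding the generation-1 key keeps getting the old value.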

RACE CONDITIONS

Remote cache (Memcache)

[Figure: remote cache architecture: API, Queues, cache logic and cache component on the platform, delegating to Memcache, alongside DynamoDB]

- Memcache's LRU eviction strategy removes invalid data, or the 24-hour TTL kicks in.
- Serialization: Gzip + Protocol Buffers

When is it full?

Often caches don't know how often they’re full. Here the queue-backed service refills the cache after every invalidation, and new transactions coming in (including pending ones) cause the transactions cache to be invalidated and then queried, so it ends up full.

When should I add?

- Add a cache if the computation is slow and called from the API.
- Don't add one if it’s only called occasionally from the backend, as we’d waste CPU cycles keeping the cache filled.

Memcache: problems

- Serialization and deserialization are slow.
- Gzipping a 1MB payload takes 100ms.
- Nippy is faster, but still ...
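For a sense of what the Gzip half of that cost involves, here is a round trip using only the JDK's `java.util.zip` streams. The Protocol Buffers encoding and the platform's own helpers are left out, and `gzip`/`gunzip` are hypothetical names.

```clojure
(require '[clojure.java.io :as io])
(import '(java.io ByteArrayInputStream ByteArrayOutputStream)
        '(java.util.zip GZIPInputStream GZIPOutputStream))

(defn gzip
  "Compresses a byte array with GZIP."
  [^bytes data]
  (let [out (ByteArrayOutputStream.)]
    ;; closing the GZIP stream writes its trailer, so read `out` only afterwards
    (with-open [gz (GZIPOutputStream. out)]
      (.write gz data))
    (.toByteArray out)))

(defn gunzip
  "Decompresses a GZIP byte array."
  [^bytes data]
  (with-open [in (GZIPInputStream. (ByteArrayInputStream. data))
              out (ByteArrayOutputStream.)]
    (io/copy in out)
    (.toByteArray out)))
```

Wrapping a call in `time` with a payload of your own size is a quick way to see how this cost behaves on your hardware.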

core.cache

- CacheProtocol
- Nice implementations of common (and uncommon) eviction strategies: FIFO, TTL, LRU, LIRS
- Many implementations by third-party libraries, including the Spycache Clojure lib
- Composable
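To make the eviction vocabulary concrete, here is a minimal LRU cache as an immutable Clojure map, using the same lookup/hit/miss names as `CacheProtocol`. This is a hand-rolled illustration with hypothetical function names, not core.cache's implementation; in practice core.cache's `lru-cache-factory` provides one.

```clojure
;; Hand-rolled LRU cache as a plain map. Assumes limit >= 1.
(defn lru-cache [limit]
  {:limit limit :tick 0 :values {} :used {}})

(defn- touch
  "Records k as the most recently used key."
  [cache k]
  (let [tick (inc (:tick cache))]
    (-> cache
        (assoc :tick tick)
        (assoc-in [:used k] tick))))

(defn lru-lookup [cache k]
  (get-in cache [:values k]))

(defn lru-hit [cache k]
  (touch cache k))

(defn lru-miss
  "Stores k -> v, first evicting the least recently used key if the cache is full."
  [cache k v]
  (let [full? (and (not (contains? (:values cache) k))
                   (>= (count (:values cache)) (:limit cache)))
        cache (if full?
                (let [victim (key (apply min-key val (:used cache)))]
                  (-> cache
                      (update :values dissoc victim)
                      (update :used dissoc victim)))
                cache)]
    (-> cache
        (assoc-in [:values k] v)
        (touch k))))
```

With a limit of 2, storing `:a` and `:b`, hitting `:a`, then storing `:c` evicts `:b`, the least recently used key.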

(defprotocol CacheProtocol
  "This is the protocol describing the basic cache capability."
  (lookup [cache e]
          [cache e not-found]
    "Retrieve the value associated with `e` if it exists, else `nil` in
    the 2-arg case. Retrieve the value associated with `e` if it exists,
    else `not-found` in the 3-arg case.")
  (has? [cache e]
    "Checks if the cache contains a value associated with `e`")
  (hit [cache e]
    "Is meant to be called if the cache is determined to contain a value
    associated with `e`")
  (miss [cache e ret]
    "Is meant to be called if the cache is determined to **not** contain a
    value associated with `e`")
  (evict [cache e]
    "Removes an entry from the cache")
  (seed [cache base]
    "Is used to signal that the cache should be created with a seed.
    The contract is that said cache should return an instance of its
    own type."))

Local cache (core.cache)

[Figure: local cache architecture: API, Queues, cache logic, cache component and core.cache, alongside DynamoDB and Memcache]

Write-through/read-through

Delays

Memoize done right

http://kotka.de/blog/2010/03/memoize_done_right.html

Clojure does not provide ready-made solutions, but it provides a lot of tools. The art is to combine them correctly. And when you are done, you end up with something small and elegant.

— Meikel Brandmeyer

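// clojure.lang.Delay (excerpt)
public Delay(IFn fn) {
    this.fn = fn;
    this.val = null;
    this.exception = null;
}

// synchronized: concurrent derefs wait for the first one to finish
synchronized public Object deref() {
    if (fn != null) {
        try {
            val = fn.invoke();
        } catch (Throwable t) {
            exception = t;   // remembered and rethrown on every deref
        }
        fn = null;           // the body never runs again
    }
    if (exception != null)
        throw Util.sneakyThrow(exception);
    return val;
}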

Delay => Future => Promise

A delay is guaranteed to run its body only once; it caches the result and returns it for every later deref.
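A quick check of that guarantee: the body below increments a counter, and five derefs leave it at 1. `calls` and `expensive` are illustrative names.

```clojure
(def calls (atom 0))

(def expensive
  (delay
    (swap! calls inc)          ; counts how many times the body actually runs
    (reduce + (range 1000))))

;; Deref five times: only the first deref evaluates the body.
(def results (doall (repeatedly 5 #(deref expensive))))
```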

(ns cache.core)

;; `caches`, `recalculate!`, and the `core` / `cache-component` aliases are
;; defined elsewhere in the codebase (their requires are not shown).
(defn query-cache
  "Top level query api for application access."
  [uid timestamp tz cache-name params]
  (if-let [cache (caches cache-name)]
    ;; get the logical timestamp, recursively compute deps and pass them on
    (let [logical-ts (core/get-user-logical-timestamps cache-name uid)
          base-key [cache-name uid (:version cache) params logical-ts]
          ;; query a dependency with its default params
          query-dag (fn [dep-name]
                      (let [default-params (:default-params (caches dep-name))]
                        [dep-name (query-cache uid
                                               timestamp
                                               tz
                                               dep-name
                                               (default-params uid timestamp))]))
          ;; delay recalculate!, which calls the compute function of the given cache
          delayed-val! (delay
                         (let [deps (into {} (map query-dag (:dependencies cache)))]
                           (recalculate! cache-name uid params timestamp deps)))
          ;; the atom's :result holds a delayed value ... derefed below
          gencache! (swap! cache-component/instance
                           cache-component/query-through base-key delayed-val!
                           (:serialize cache)
                           (:deserialize cache))]
      ;; return the value swapped into the atom
      @(:result gencache!))
    (throw (Exception. (str "Querying unknown cache: " cache-name " for " uid)))))

(ns cache.component
  (:require [clojure.core.cache :as core-cache]))
;; `core` (stringify-key, item->value, value->item) is the project's own
;; helper namespace; its require is not shown.

;; holds {:memcache ... :localcache ...}; initialized elsewhere
(def instance (atom nil))

(defn query-through
  [{:keys [memcache localcache] :as gencache} base-key value-delay serialize-fn deserialize-fn]
  (let [key (apply core/stringify-key base-key)]
    ;; first, look in the local cache; on a cache hit, put it in :result
    (if-let [local-delay (core-cache/lookup localcache key)]
      (merge gencache
             {:localcache (core-cache/hit localcache key)
              :result local-delay})
      ;; then, look in memcache and return its delayed value;
      ;; on a memcache miss, deref the value delay (the recalculation) and store it
      (let [memcache-delay (delay
                             (if-let [memcache-value (try (core/item->value
                                                           (core-cache/lookup memcache key)
                                                           deserialize-fn)
                                                         (catch Exception e nil))]
                               memcache-value
                               (do
                                 (core-cache/miss memcache key
                                                  (core/value->item @value-delay serialize-fn))
                                 @value-delay)))]
        ;; finally, return the memcache delay:
        ;; :result => memcache-delay => value-delay (recalculation is delayed)
        (merge gencache
               {:localcache (core-cache/miss localcache key memcache-delay)
                :result memcache-delay})))))
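A self-contained sketch of the same read-through chain, with atoms standing in for core.cache and Memcache (hypothetical stand-ins, not the cache component's API). It keeps the key idea: every layer is a delay, and the atom's update function only installs a delay, so a retried `swap!` never triggers a computation.

```clojure
;; Hypothetical stand-ins: `local-cache` is the per-node cache (holding delays),
;; `remote-cache` plays the role of Memcache (holding plain values).
(def local-cache (atom {}))
(def remote-cache (atom {}))
(def computations (atom 0))

(defn query-through!
  "Local cache -> remote cache -> compute. Nothing is evaluated until the
  final deref, and compute runs only on a miss in both caches."
  [k compute]
  (let [value-delay  (delay (swap! computations inc) (compute))
        remote-delay (delay
                       (if-let [v (get @remote-cache k)]
                         v
                         (let [v @value-delay]
                           (swap! remote-cache assoc k v)
                           v)))
        ;; swap! may retry, so the update fn is pure: it installs this call's
        ;; delay only if no other caller has installed one for k
        installed (swap! local-cache #(if (contains? % k) % (assoc % k remote-delay)))]
    @(get installed k)))
```

Clearing `local-cache` simulates a fresh node: the next query is served from the remote stand-in without recomputing.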

A generational cache, with local and remote providers

[Figure: generational cache architecture: API, Queues, cache logic, cache component and core.cache, backed by DynamoDB and Memcache]

Things to remember

- One physical cache supports many logical caches.
- The queue shouldn’t back up: compute faster or provision more machines.
- Most caches don’t actually need to invalidate every day.
- Cache misses can be slow; profiling can help.

Performance & Metrics

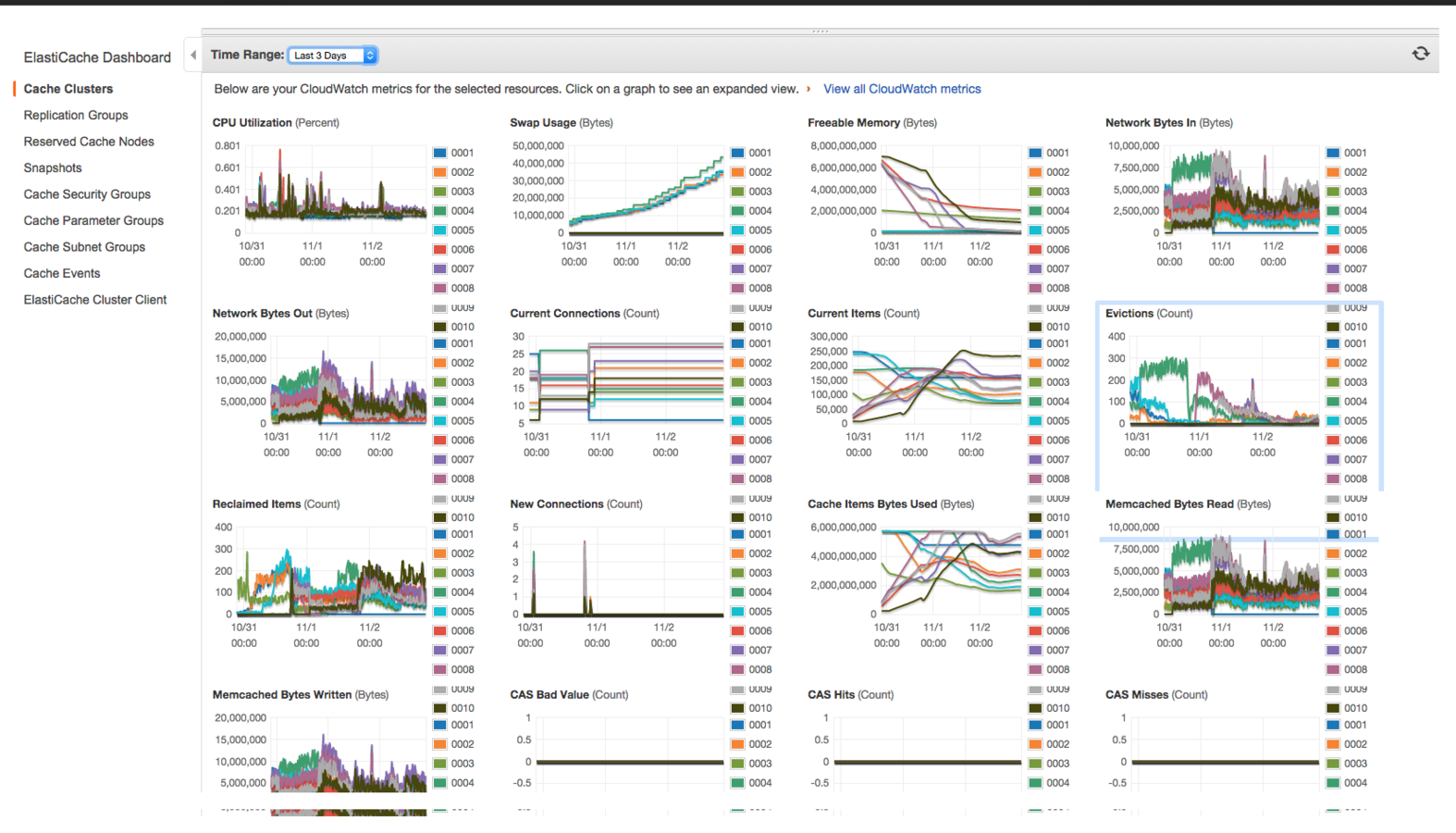

[Figure: network latencies on PROD (median times) between the load balancer, nodes with local caches, memcache and dynamo; labels: ~1-2 ms, ~100x, ~3000x]

Memcache uses consistent hashing, however ...

Watch your dashboards.

Metrics

- Simple wrappers in Clojure
- cache-component wraps metrics for each miss, hit, and query
- Timers can be logged via cache-component
- p99
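A minimal sketch of such wrappers, assuming an atom-backed registry instead of a real metrics library; `record!`, `timed`, `instrumented-lookup` and `p99` are hypothetical names. `p99` uses the nearest-rank definition of a percentile.

```clojure
;; Hypothetical metrics registry: hit/miss counters plus raw query timings.
(def metrics (atom {:hit 0 :miss 0 :query-ns []}))

(defn record! [event]
  (swap! metrics update event inc))

(defn timed
  "Calls f, appends its elapsed time in nanoseconds to :query-ns,
  and returns f's result."
  [f]
  (let [start (System/nanoTime)
        result (f)]
    (swap! metrics update :query-ns conj (- (System/nanoTime) start))
    result))

(defn instrumented-lookup
  "Looks k up in the atom `store`, counting hits and misses and timing the query."
  [store k compute]
  (timed
   (fn []
     (if (contains? @store k)
       (do (record! :hit) (get @store k))
       (let [v (compute)]
         (record! :miss)
         (swap! store assoc k v)
         v)))))

(defn p99
  "Nearest-rank 99th percentile of a collection of samples."
  [samples]
  (when (seq samples)
    (let [sorted (vec (sort samples))
          n (count sorted)
          rank (quot (+ (* 99 n) 99) 100)]   ; ceil(0.99 * n) in integer math
      (nth sorted (dec rank)))))
```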

Clojure performance

- Profile compute functions
- Understand that Clojure seqs are slow
- Try to sort first
- Make good use of rseq in filters
- When in doubt, read the Clojure source

;; Before: recent-txn? is checked against every card
(defn recent-bucketed? [tz new-card cards texan]
  (first-filter #(and (recent-txn? tz % texan)
                      (= :bucketed-transactions (:card-type %))
                      (= (:bucket-ids (:bucketed-transactions %))
                         (:bucket-ids (:bucketed-transactions new-card)))
                      (every? (comp #{:legacy-insight :debit-insight :credit-insight}
                                    :bucket-type)
                              (:buckets (meta new-card))))
                cards))

;; After: only the recent tail of cards is examined
(defn- recent-cards
  "Optimized version of recent cards. Reverses the cards internally, and works
  iff cards are ordered from oldest to newest."
  [utz texan cards]
  ;; rseq is constant-time but needs a vector (or sorted collection)
  (take-while (partial recent-card? utz texan) (rseq cards)))

(defn recent-bucketed? [utz new-card cards texan]
  (first-filter #(and (= :bucketed-transactions (:card-type %))
                      (= (:bucket-ids (:bucketed-transactions %))
                         (:bucket-ids (:bucketed-transactions new-card)))
                      (every? (comp #{:legacy-insight :debit-insight :credit-insight}
                                    :bucket-type)
                              (:buckets (meta new-card))))
                (recent-cards utz texan cards)))

;; create-cards is a reducer over all cards (of transactions)
;; create-cards => bundle-cards => recent-bucketed?
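A small illustration of the "sort first, then rseq" advice, with hypothetical cards carrying only a `:ts` field: when the vector is ordered oldest to newest, walking it backwards with `take-while` stops at the first old card instead of testing every card. All names here are illustrative.

```clojure
;; Hypothetical cards: a vector ordered oldest -> newest by :ts.
(def cards (vec (for [ts (range 1 100001)] {:ts ts})))

(defn recent? [now card]
  (> (:ts card) (- now 10)))

;; Tests all 100,000 cards.
(defn recent-cards-filter [now cards]
  (filter #(recent? now %) cards))

;; Walks backwards from the newest card and stops at the first old one.
;; Correct only because `cards` is sorted; rseq needs a vector.
(defn recent-cards-rseq [now cards]
  (take-while #(recent? now %) (rseq cards)))
```

Both return the same ten cards (in opposite order); the `rseq` version examines eleven cards rather than the whole vector.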

Protocol

It's just a single function.

(defprotocol GenCache
  ;; protocol methods take the implementing object as their first argument
  (query [this uid timestamp timezone cache-name]
    "For a given user id, at a given instant, query a cache-name"))

Hello, again

- 3 files
- ~600 lines
- 1 protocol
- Decoupled compute, serialize, deserialize
- Component with local and remote providers
- Generational cache

Adding a new cache entry takes a few hours ... less than a day.

The future

- Expand the DAG with the Component pattern: each cache is a component
- Compose caches
- Optimize load balancer: load cache
- Async computation?

Big thanks to ...

- Dave Fayram: Director, Chief Architect
- Gregor Stocks: Backend Lead
- Gregory Sizemore: Senior Performance Engineer
- Jeff Shecter: Data Science Lead
- Jonathan Polin: Software Engineer
- Noodles: GitHub PR Reviewer*
- Priyatam Mudivarti: Principal Engineer, Architect

Thanks!

- Level Money Platform

References

- http://kotka.de/blog/2010/03/memoize_done_right.html

- https://github.com/clojure/core.cache

- https://www.adayinthelifeof.nl/2011/02/06/memcache-internals/

- https://www.mikeperham.com/2009/01/14/consistent-hashing-in-memcache-client/

- https://github.com/memcached/memcached/blob/master/doc/protocol.txt

https://slides.com/priyatam/caching-half-a-billion-user-txns

Caching half a billion user transactions

By Priyatam Mudivarti


While many robust cache libraries exist, understanding cache invalidation and eviction in a distributed platform requires a deeper understanding of your data access patterns. At Level Money we built our own caching solution, backed by a Memcached farm. In this talk I will share our story: how we name and version our caches, grow dependency graphs, find expensive computations, avoid race conditions, and define compute functions to serialize financial data using a simple protocol.
