Deep Generative AI

florpi

HerWILL 2/8/24

https://florpi.github.io/

IAIFI Fellow

Carol Cuesta-Lazaro

A 2D animation of a folk music band composed of anthropomorphic autumn leaves, each playing traditional bluegrass instruments, amidst a rustic forest setting dappled with the soft light of a harvest moon

BEFORE

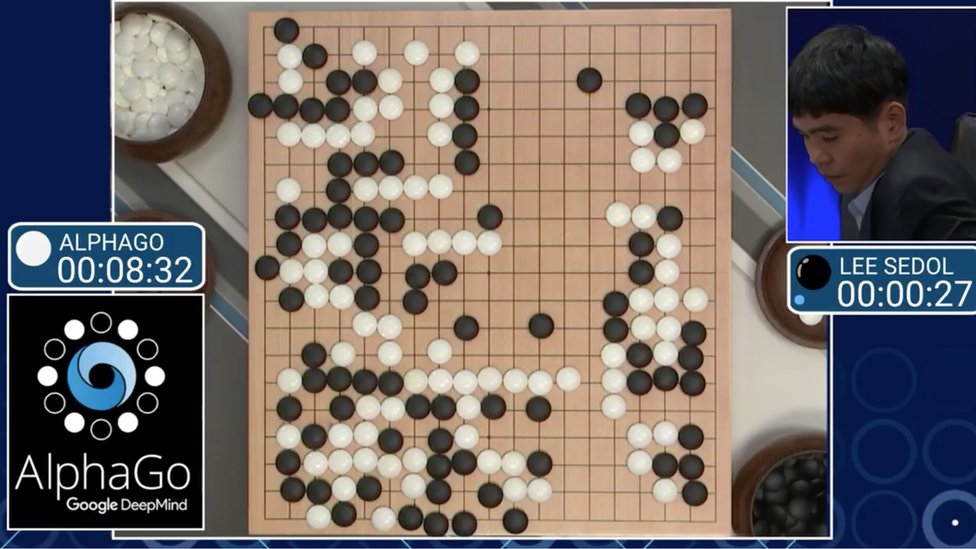

Artificial General Intelligence?

AFTER

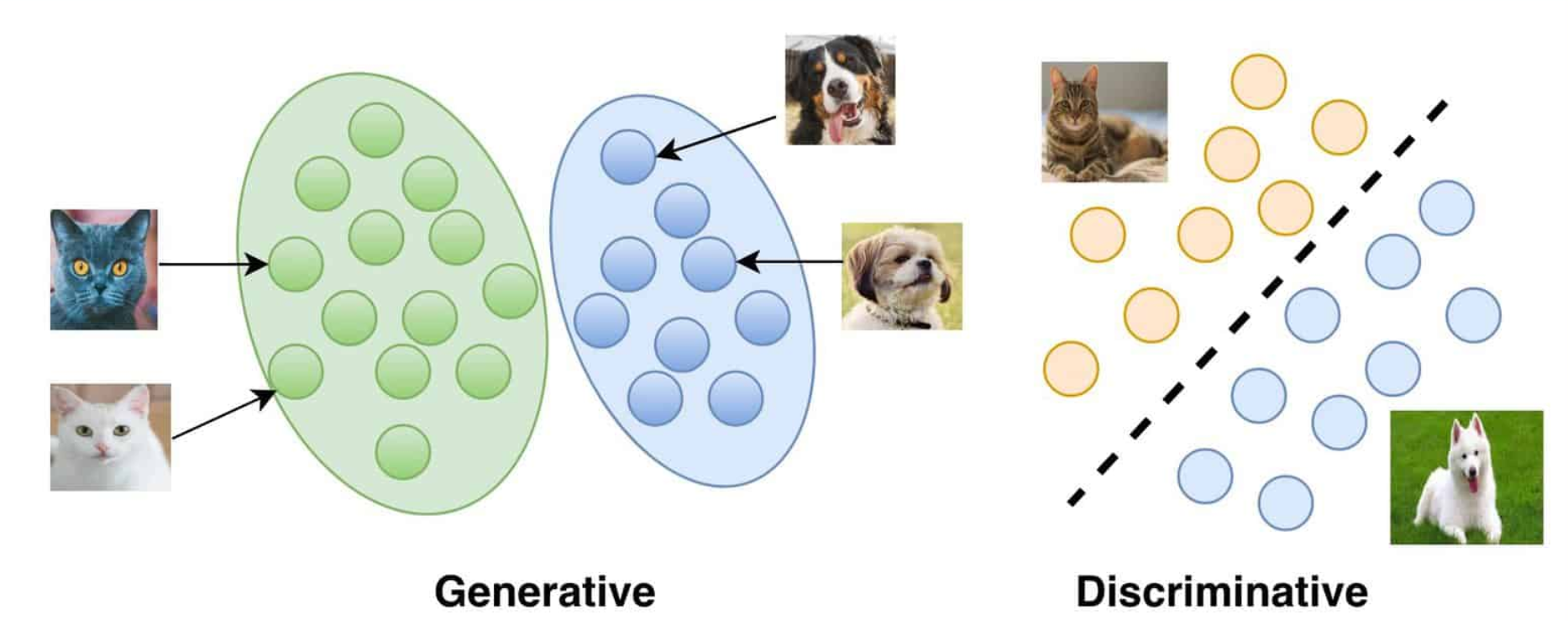

https://vitalflux.com/generative-vs-discriminative-models-examples/

Generation vs Discrimination

Maximize the likelihood of the training samples

Model

Training Samples

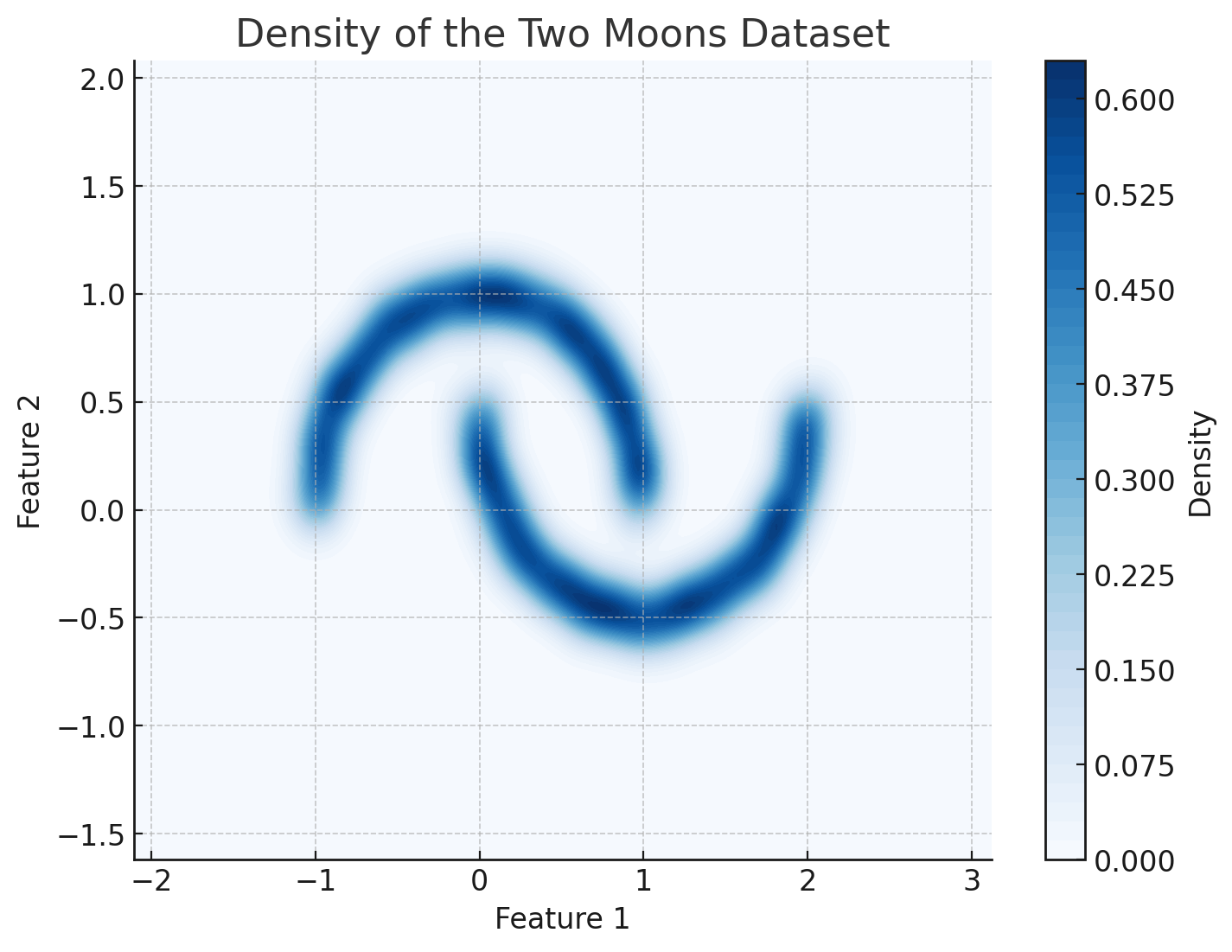
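As a minimal sketch of what "maximize the likelihood of the training samples" means, the snippet below fits a one-dimensional Gaussian to toy data. The data, the Gaussian model family, and the function name `gaussian_log_likelihood` are assumptions made for illustration and do not come from the slides.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical training samples: a Gaussian with mean 2.0 and std 0.5.
samples = rng.normal(loc=2.0, scale=0.5, size=10_000)

def gaussian_log_likelihood(x, mu, sigma):
    """Average log-likelihood of the samples x under N(mu, sigma^2)."""
    return np.mean(-0.5 * np.log(2 * np.pi * sigma**2)
                   - 0.5 * ((x - mu) / sigma) ** 2)

# For a Gaussian model the maximum-likelihood parameters have a closed form:
# the sample mean and the (biased) sample standard deviation.
mu_hat = samples.mean()
sigma_hat = samples.std()

# Any other choice of parameters gives a lower likelihood on the same data.
best = gaussian_log_likelihood(samples, mu_hat, sigma_hat)
worse = gaussian_log_likelihood(samples, mu_hat + 0.3, sigma_hat)
```

Deep generative models replace the two-parameter Gaussian with a neural network and the closed-form solution with gradient-based optimization, but the objective has the same shape.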

Generative Models 101

The curse of dimensionality

Trained Model

Generate Novel Samples

Evaluate probabilities

Anomaly detection, model comparison...
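The two uses of a trained model listed above can be sketched with a toy density model. This is an illustration under stated assumptions: the "trained model" is a fitted 1D Gaussian, and the 1% threshold and the helper names `log_prob` and `is_anomaly` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
train = rng.normal(0.0, 1.0, size=5_000)   # hypothetical training data
mu, sigma = train.mean(), train.std()      # stand-in for a trained model

def log_prob(x):
    """Log-density of x under the fitted Gaussian."""
    return -0.5 * np.log(2 * np.pi * sigma**2) - 0.5 * ((x - mu) / sigma) ** 2

# Use 1: generate novel samples from the model.
novel = rng.normal(mu, sigma, size=5)

# Use 2: evaluate probabilities, here to flag anomalies.
# Assumed rule: anything less likely than 99% of the training data is anomalous.
threshold = np.quantile(log_prob(train), 0.01)

def is_anomaly(x):
    return log_prob(x) < threshold
```

With this rule, a point far in the tail such as `is_anomaly(6.0)` is flagged, while a typical point such as `is_anomaly(0.0)` is not.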

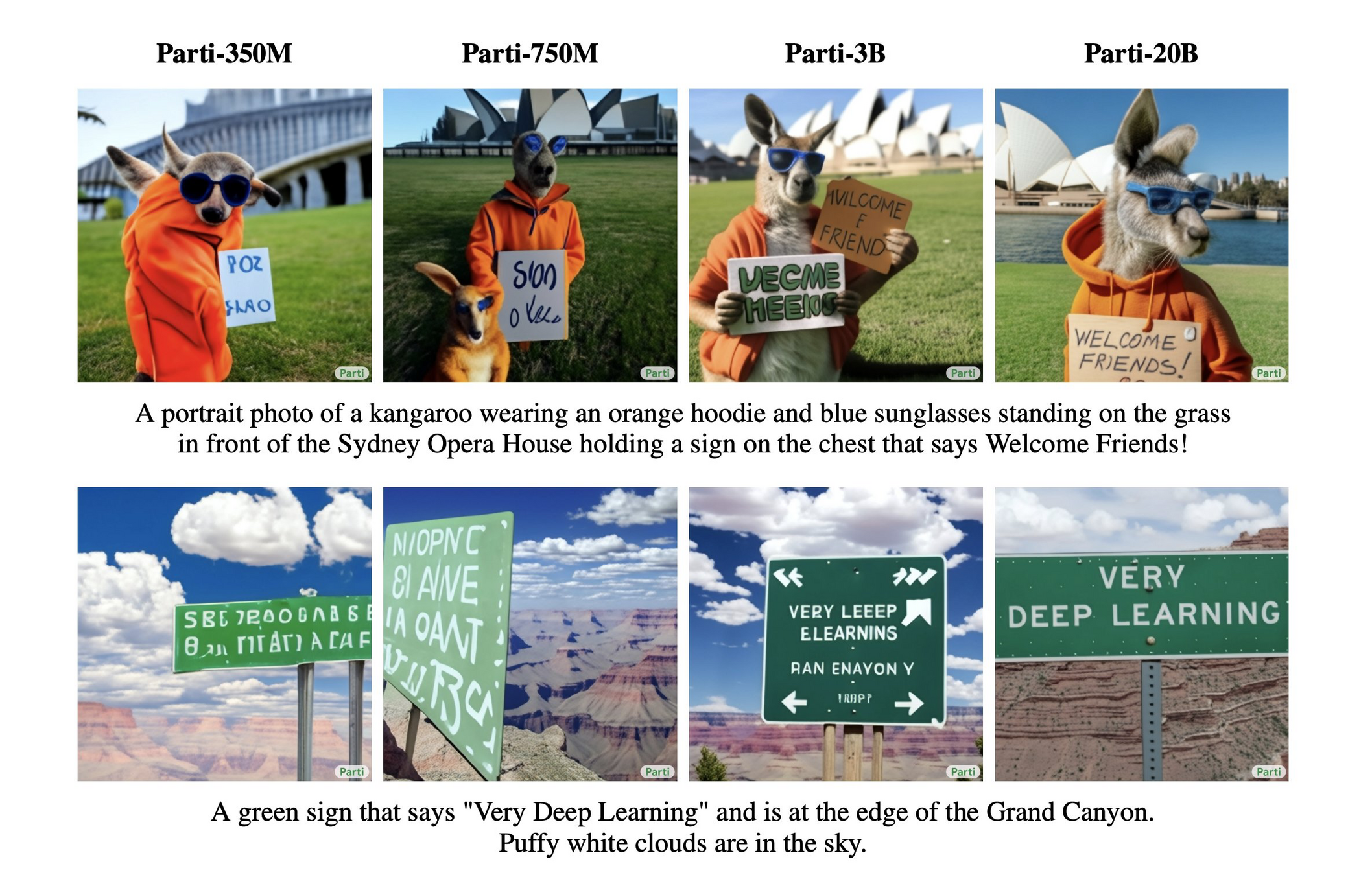

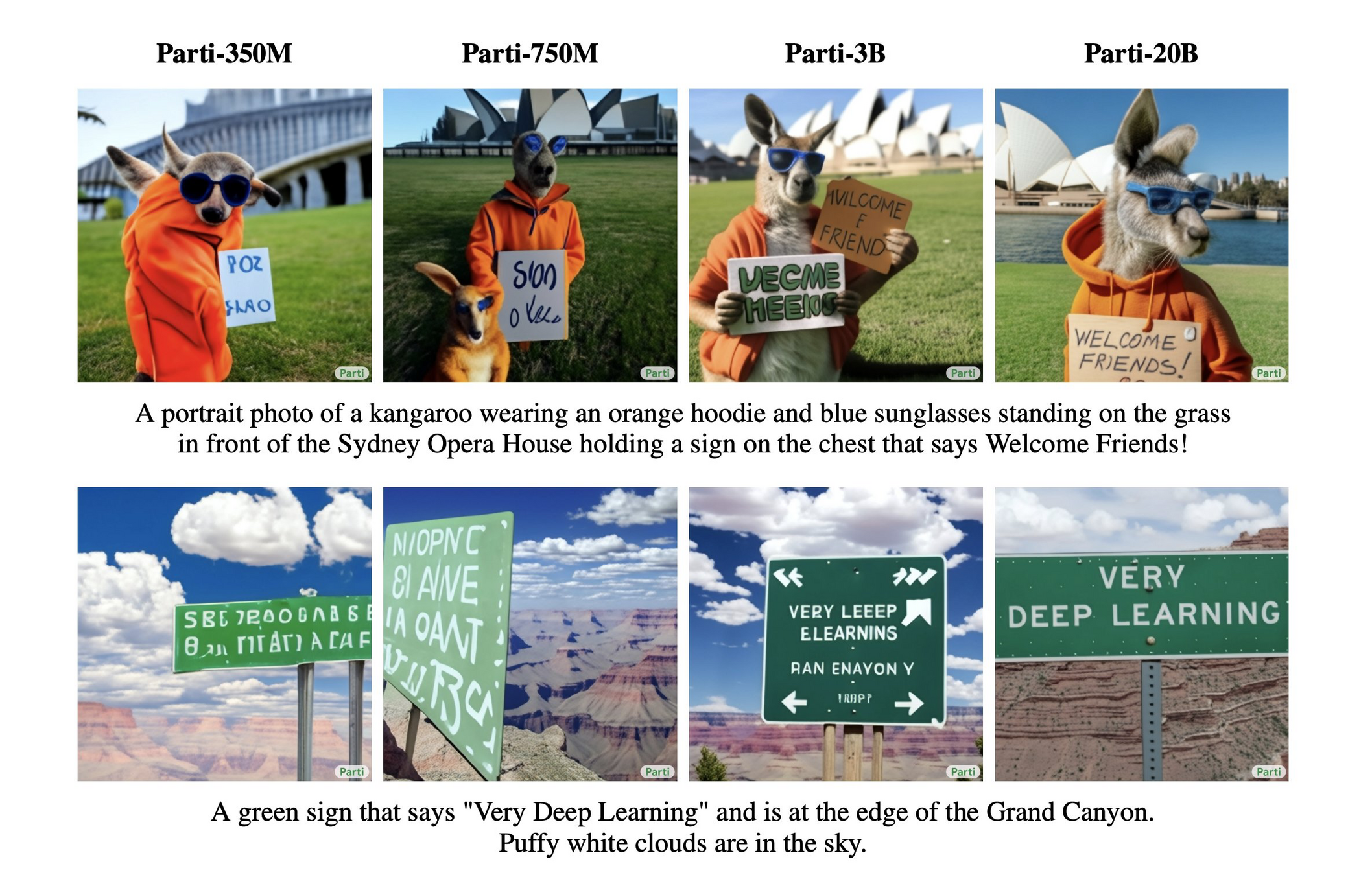

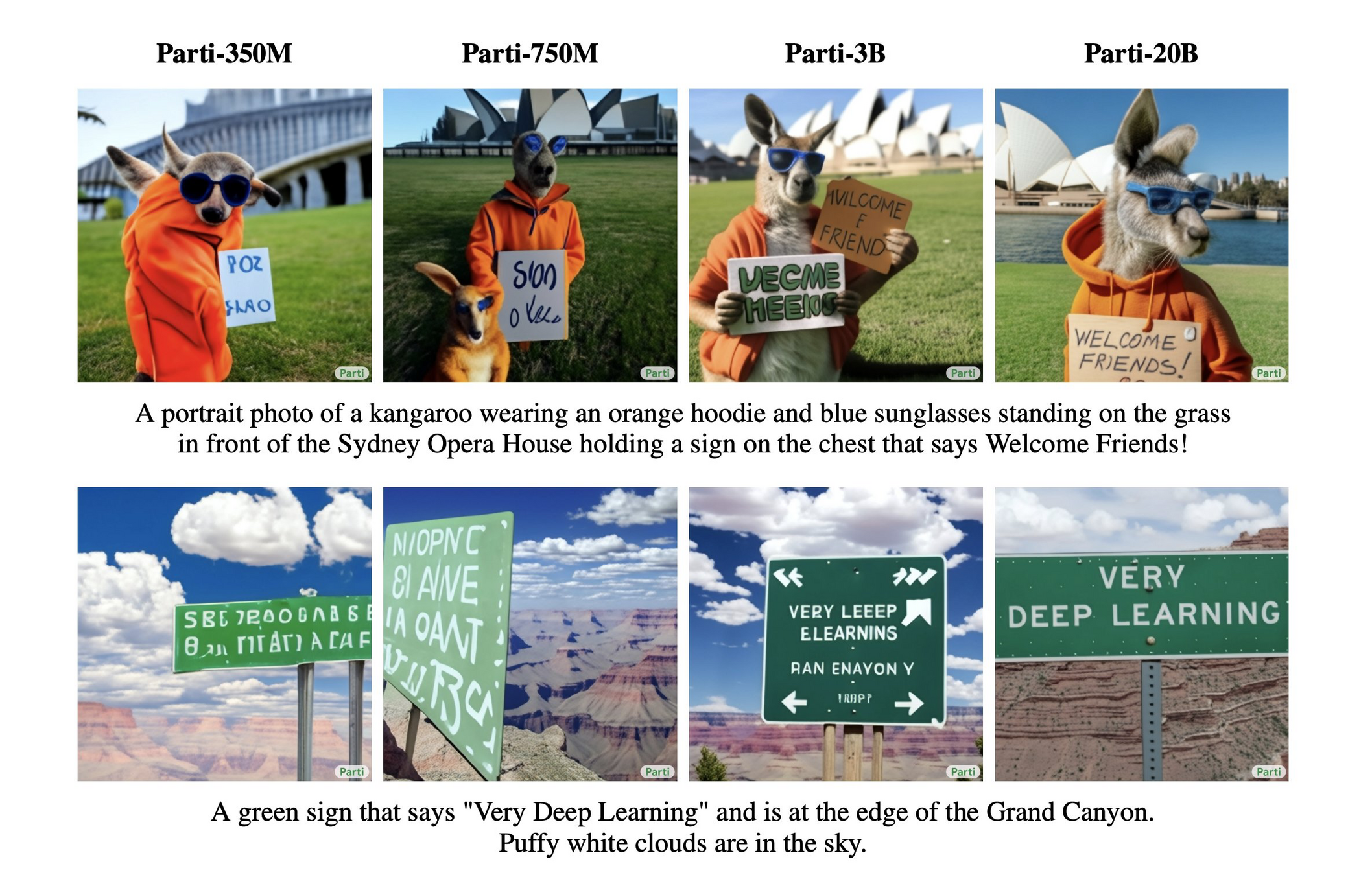

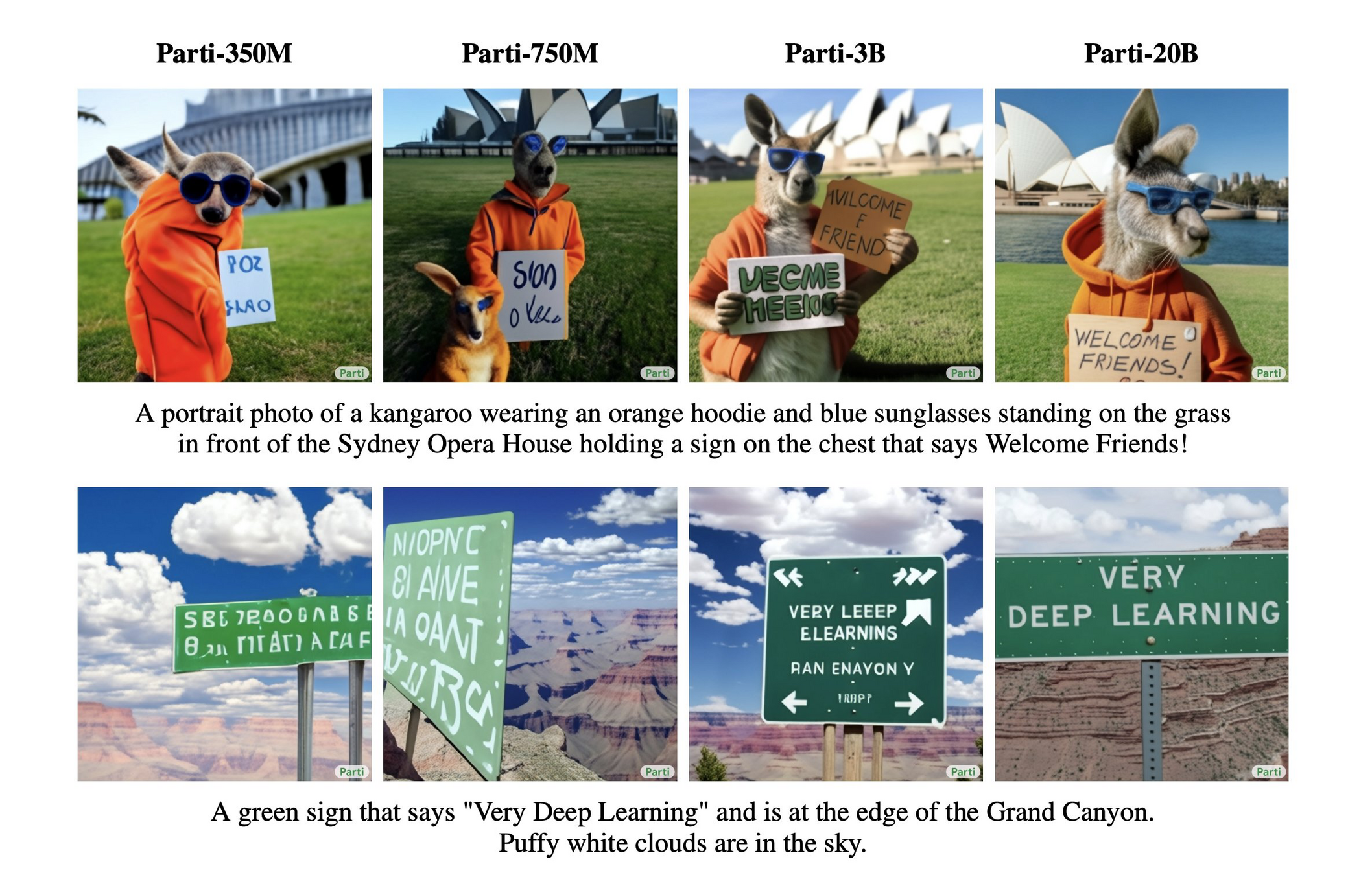

https://parti.research.google

A portrait photo of a kangaroo wearing an orange hoodie and blue sunglasses standing on the grass in front of the Sydney Opera House holding a sign on the chest that says Welcome Friends!

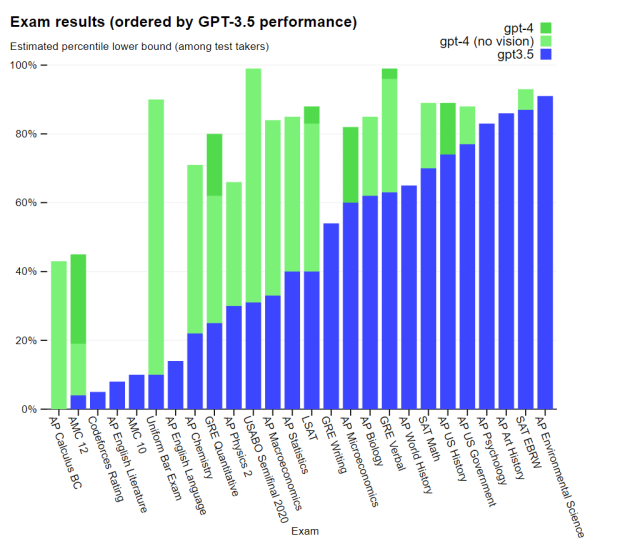

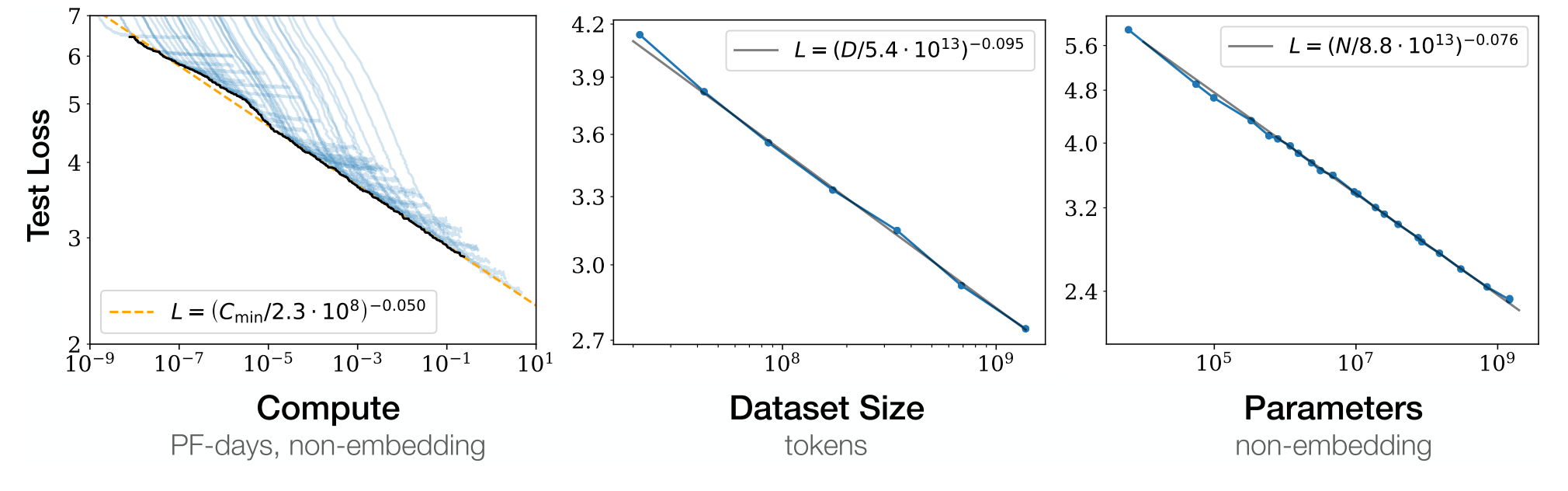

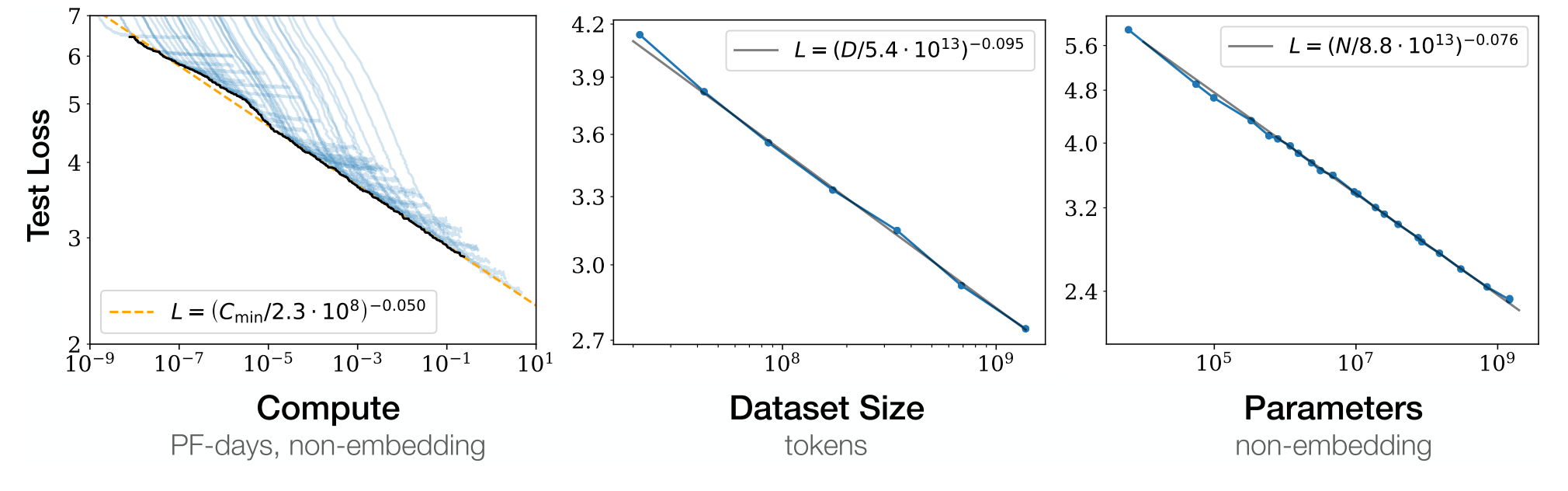

Scaling laws and emergent abilities

"Scaling Laws for Neural Language Models" Kaplan et al.
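For reference, the headline result of that paper can be written as a power law. The form below is the model-size law, shown without fitted values; $N$ is the number of non-embedding parameters, and $N_c$ and $\alpha_N$ are constants fitted to experiments.

```latex
L(N) \approx \left( \frac{N_c}{N} \right)^{\alpha_N}
```

Analogous power laws are reported for dataset size and training compute.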

Explicit Density

Implicit Density

Tractable Density

Approximate Density

Normalizing flows

Variational Autoencoders

Diffusion models

Generative Adversarial Networks

The zoo of generative models

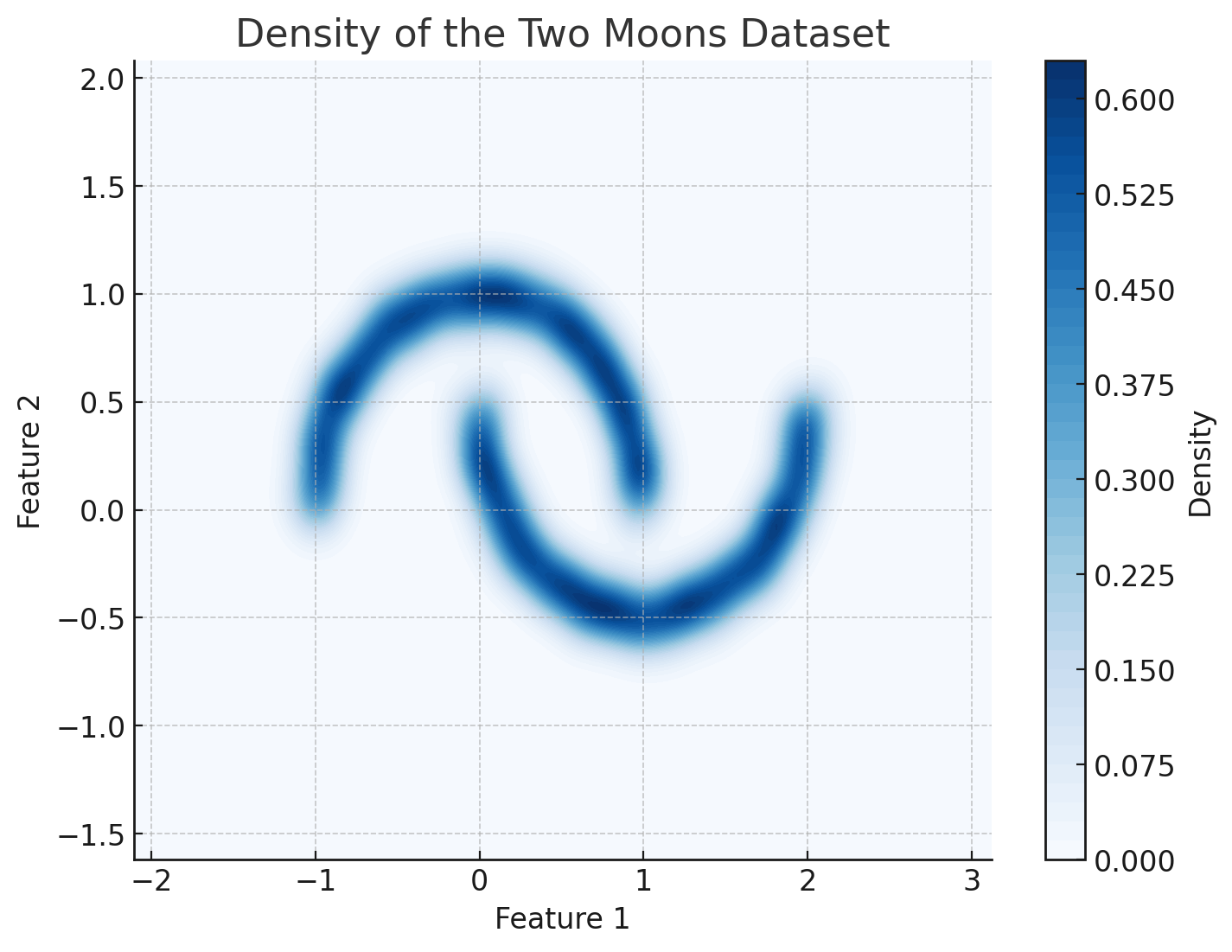

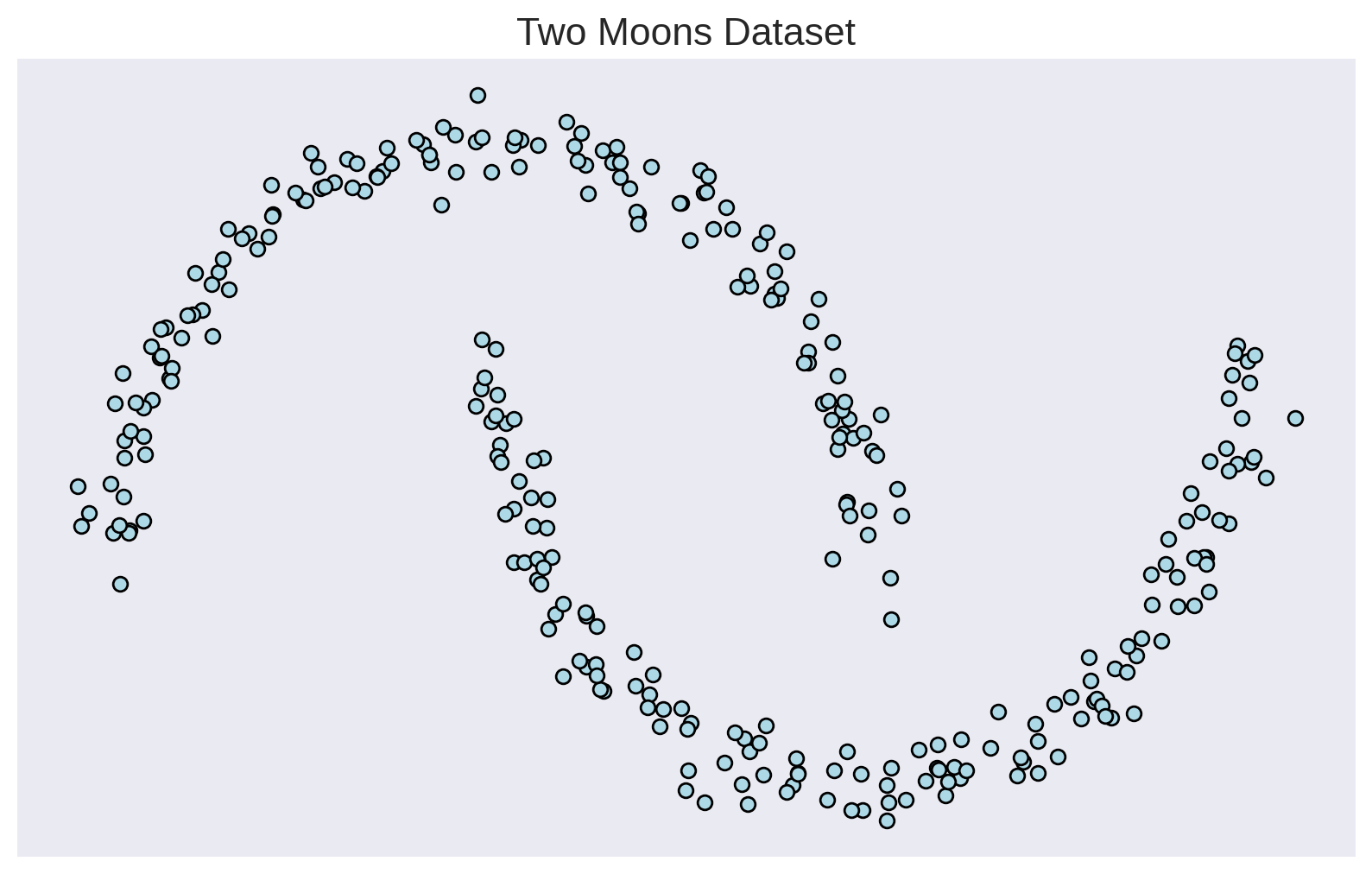

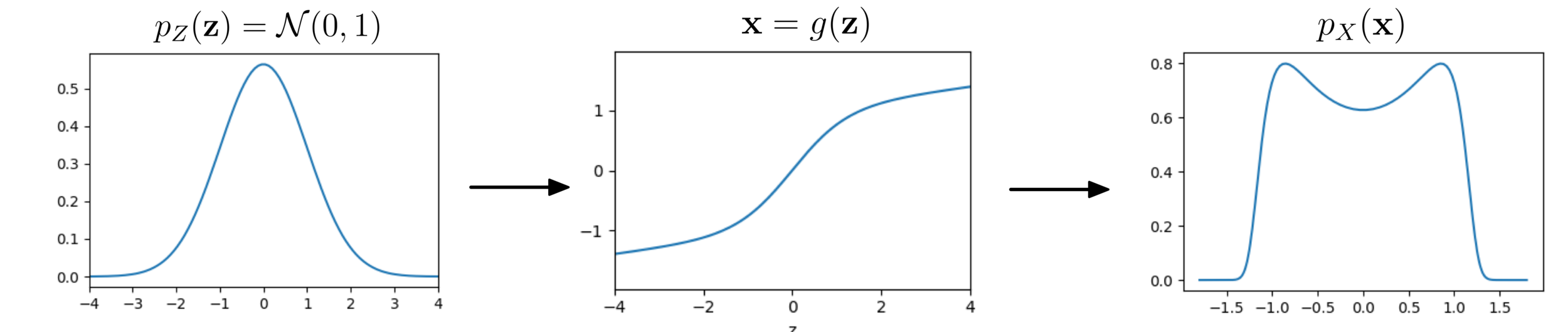

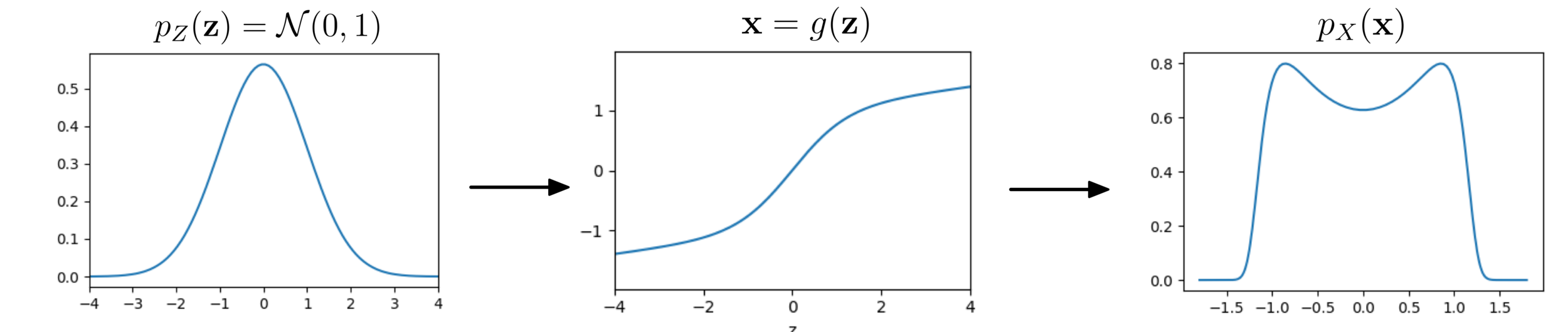

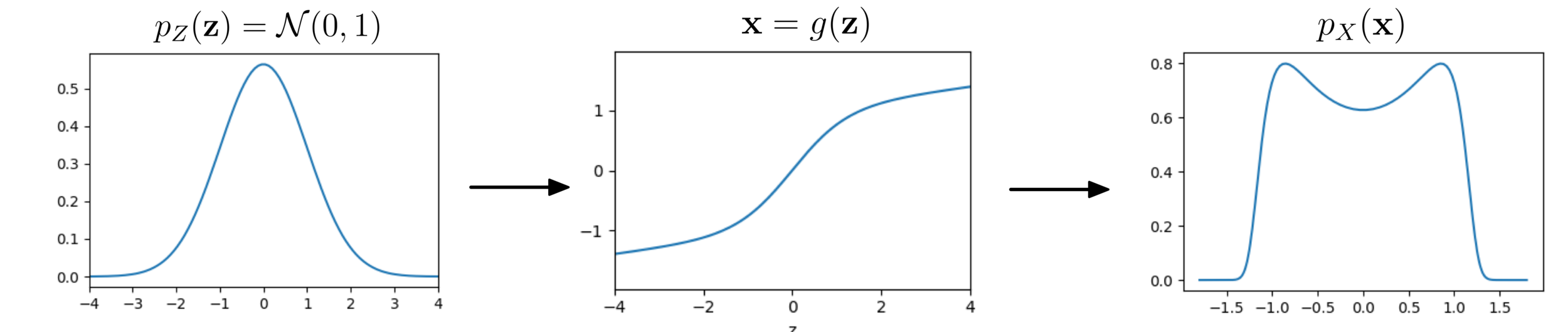

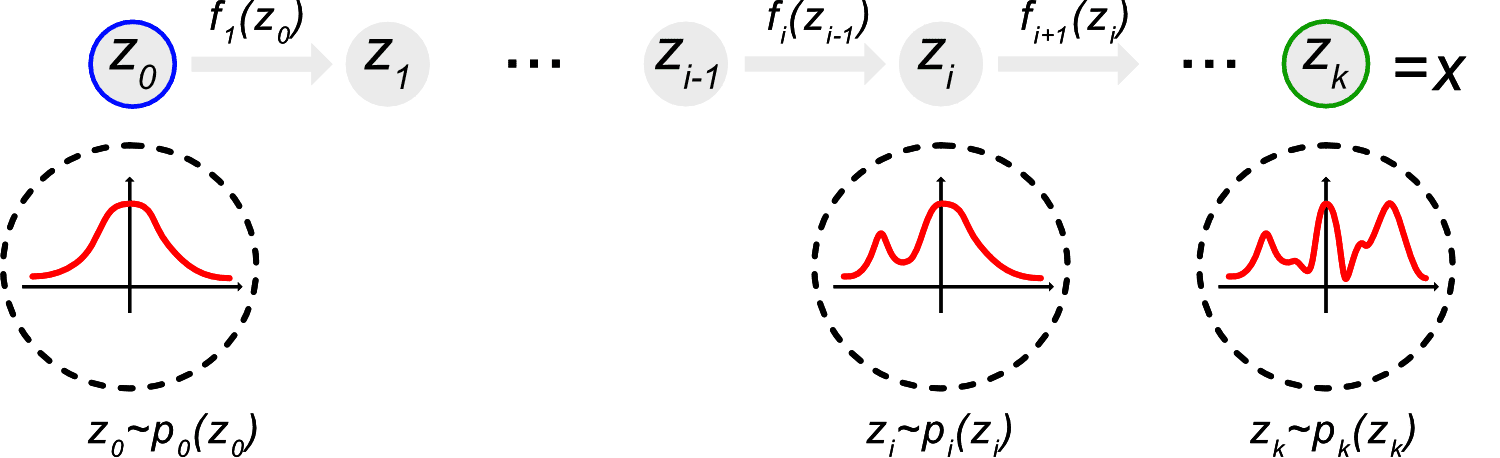

Base distribution

Target distribution

Invertible transformation

Normalizing flows

(Image Credit: Phillip Lippe)

z: Latent variables
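The link between base distribution, invertible transformation, and target distribution is the change-of-variables formula: log p_x(x) = log p_z(f^{-1}(x)) + log |det J_{f^{-1}}(x)|. A minimal sketch with the simplest invertible map, a 1D affine transformation, is below. The standard-normal base and the function names are assumptions; real flows stack many learned transformations.

```python
import numpy as np

def forward(z, shift, log_scale):
    """Invertible map from latent z to data x: x = z * exp(log_scale) + shift."""
    return z * np.exp(log_scale) + shift

def inverse(x, shift, log_scale):
    """Exact inverse of `forward`."""
    return (x - shift) * np.exp(-log_scale)

def log_prob(x, shift, log_scale):
    """Change of variables: log p_x(x) = log p_z(f^{-1}(x)) + log |d f^{-1} / dx|."""
    z = inverse(x, shift, log_scale)
    base_log_prob = -0.5 * (z**2 + np.log(2 * np.pi))   # standard normal base
    log_det_inverse = -log_scale                        # derivative of inverse is exp(-log_scale)
    return base_log_prob + log_det_inverse

shift, log_scale = 1.0, np.log(2.0)
rng = np.random.default_rng(0)
z = rng.standard_normal(4)            # sample the base distribution
x = forward(z, shift, log_scale)      # push samples to the target distribution
```

With these parameters the flow represents a Gaussian with mean 1 and standard deviation 2, so `log_prob` can be checked against the analytic density.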

Invertible functions aren't that common!

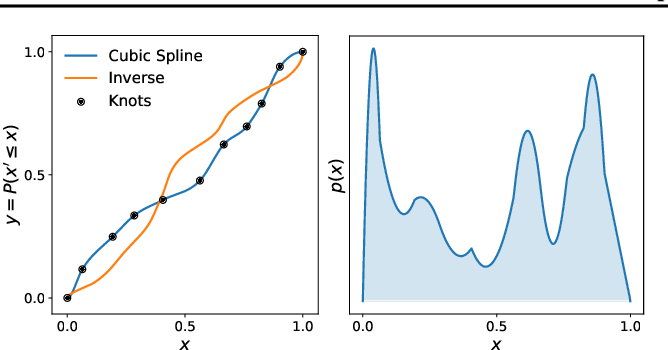

Splines

arXiv:1911.01429

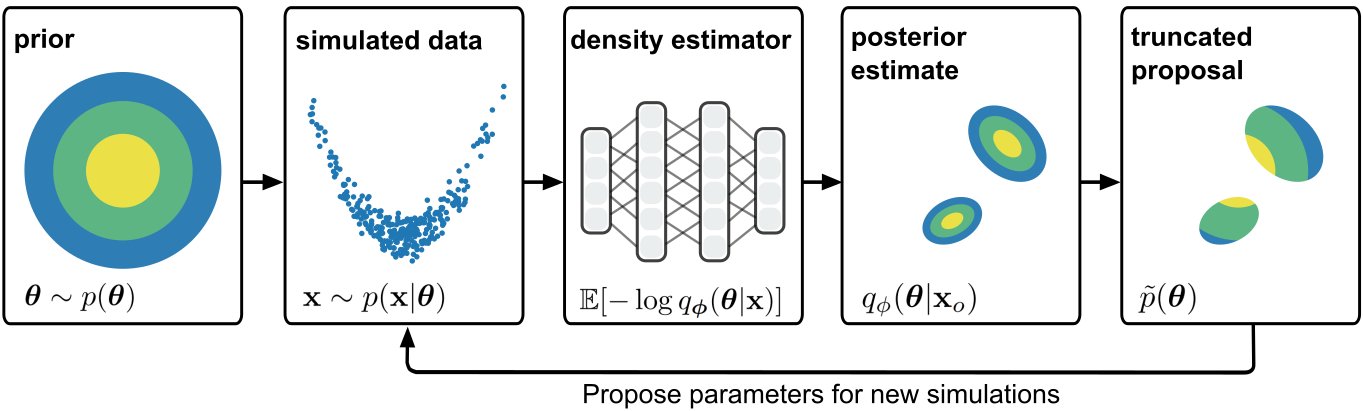

Simulation-based inference

But ODE solutions are always invertible!

Issues with NFs: lack of flexibility

- Invertible functions

- Tractable Jacobians

Chen et al. (2018), Grathwohl et al. (2018)
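The point that ODE solutions are invertible can be checked numerically: integrate forward in time to transform a sample, then integrate the same ODE backward in time to recover it. The sketch below assumes a smooth (Lipschitz) velocity field and uses a hand-written one in place of a neural network; `velocity` and `integrate` are hypothetical names, and a fixed-step RK4 solver stands in for whatever solver a real continuous flow would use.

```python
import numpy as np

def velocity(x, t):
    """Hypothetical smooth velocity field; a continuous flow would learn this."""
    return np.tanh(x) + 0.5 * np.sin(t)

def integrate(x, t0, t1, steps=200):
    """Fixed-step RK4 integration of dx/dt = velocity(x, t) from t0 to t1."""
    h = (t1 - t0) / steps      # negative when integrating backward in time
    t = t0
    for _ in range(steps):
        k1 = velocity(x, t)
        k2 = velocity(x + 0.5 * h * k1, t + 0.5 * h)
        k3 = velocity(x + 0.5 * h * k2, t + 0.5 * h)
        k4 = velocity(x + h * k3, t + h)
        x = x + (h / 6.0) * (k1 + 2 * k2 + 2 * k3 + k4)
        t += h
    return x

z = np.array([-1.5, 0.0, 0.7])
x = integrate(z, 0.0, 1.0)        # base -> target
z_back = integrate(x, 1.0, 0.0)   # target -> base: same ODE, reversed time
```

No architectural constraint on `velocity` was needed to get the inverse, which is the flexibility gain over hand-designed invertible layers. The recovery is exact only up to solver error.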

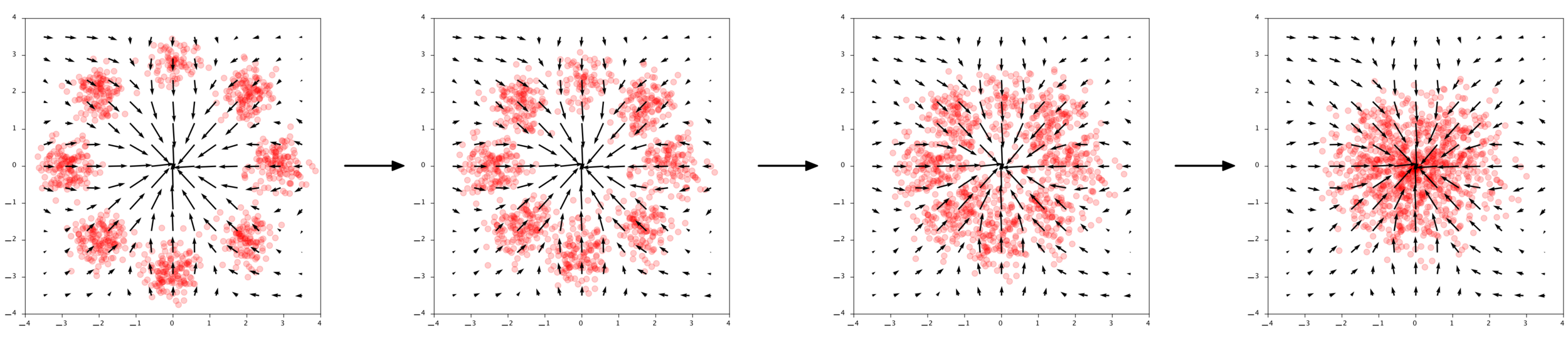

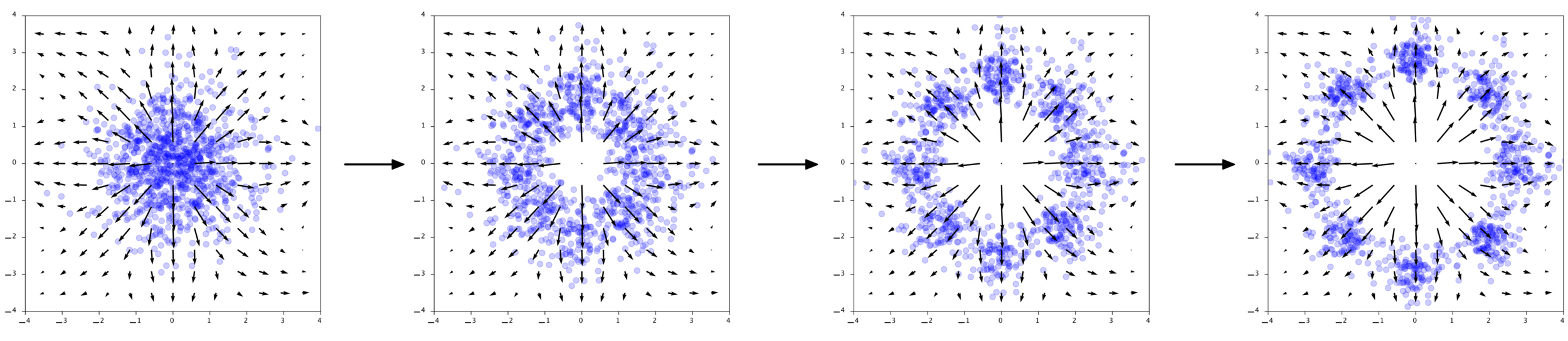

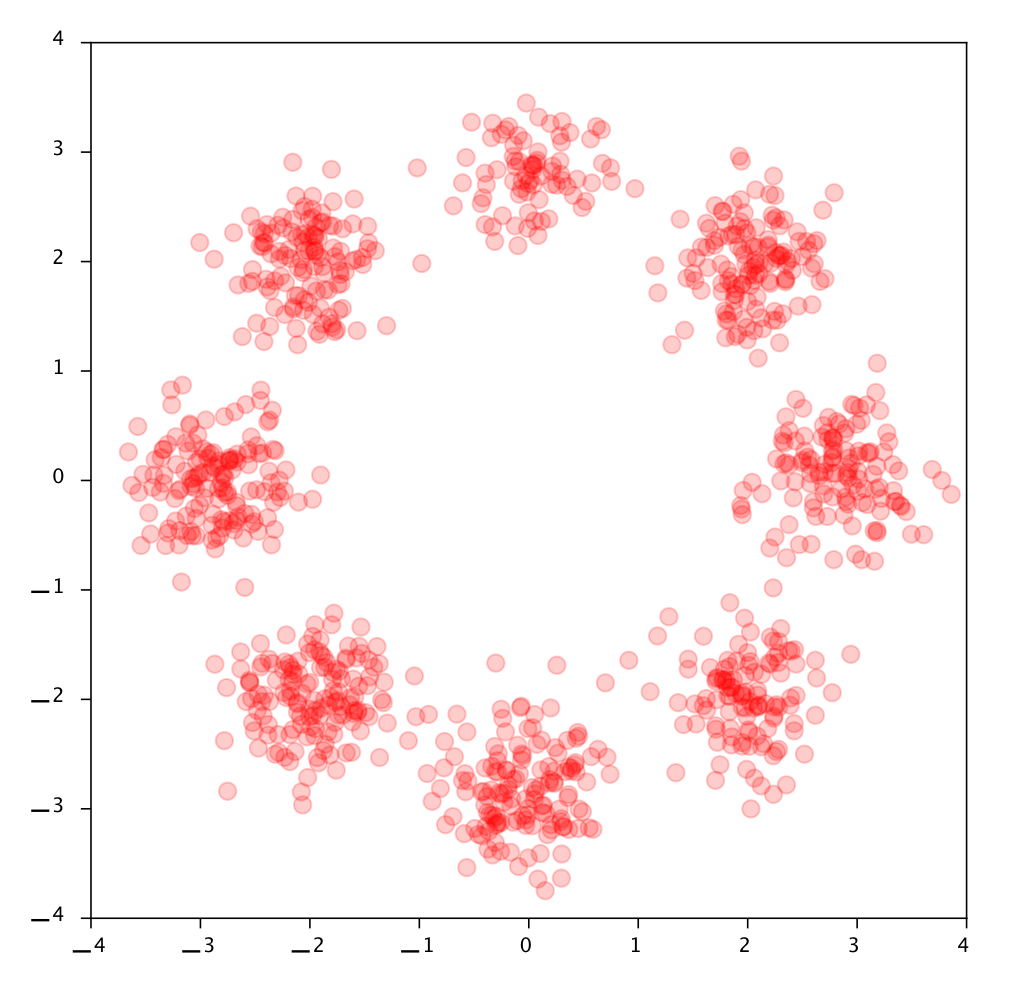

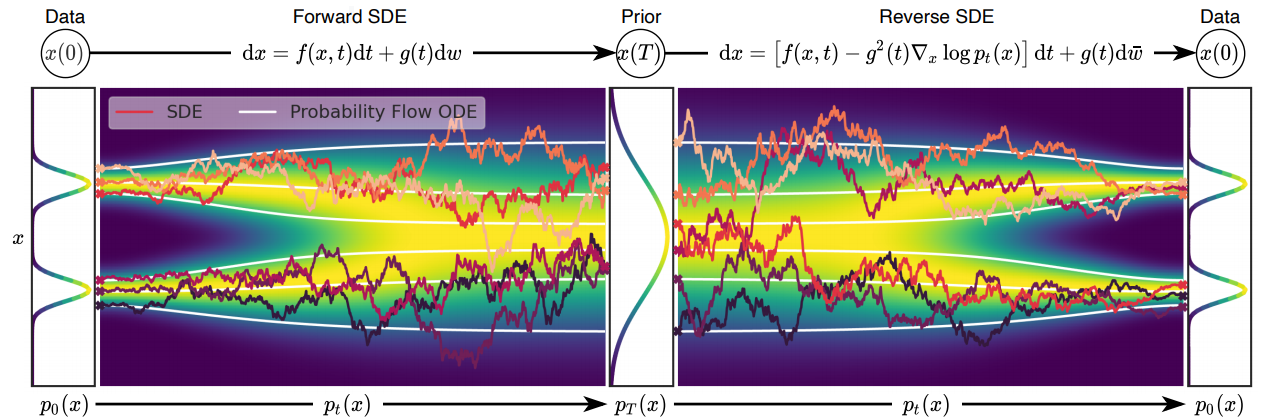

Reverse diffusion: Denoise previous step

Forward diffusion: Add Gaussian noise (fixed)

Diffusion generative models

Score
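A minimal sketch of the fixed forward process and of the score it defines is below. It assumes a variance-preserving process with a linear noise schedule, which the slides do not specify; the schedule values and the names `forward_diffuse` and `conditional_score` are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
num_steps = 1000
betas = np.linspace(1e-4, 0.02, num_steps)   # assumed linear noise schedule
alpha_bar = np.cumprod(1.0 - betas)          # fraction of signal kept after t steps

def forward_diffuse(x0, t):
    """Sample x_t given x_0 in one shot; returns the noisy sample and the noise."""
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return x_t, eps

def conditional_score(x_t, x0, t):
    """Score of q(x_t | x_0): gradient of its log-density with respect to x_t."""
    return -(x_t - np.sqrt(alpha_bar[t]) * x0) / (1.0 - alpha_bar[t])

x0 = rng.normal(3.0, 0.1, size=20_000)       # hypothetical narrow data distribution
x_T, _ = forward_diffuse(x0, num_steps - 1)  # after all steps: close to N(0, 1)
x_mid, eps_mid = forward_diffuse(x0, 500)
```

The conditional score equals the added noise rescaled by `-1 / sqrt(1 - alpha_bar[t])`, which is why training a network to predict the noise amounts to learning the score that the reverse process needs for denoising.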

"Equivariant Diffusion for Molecule Generation in 3D" Hoogeboom et al.

Speeding up drug discovery

A person half Yoda half Gandalf

Desired molecule properties

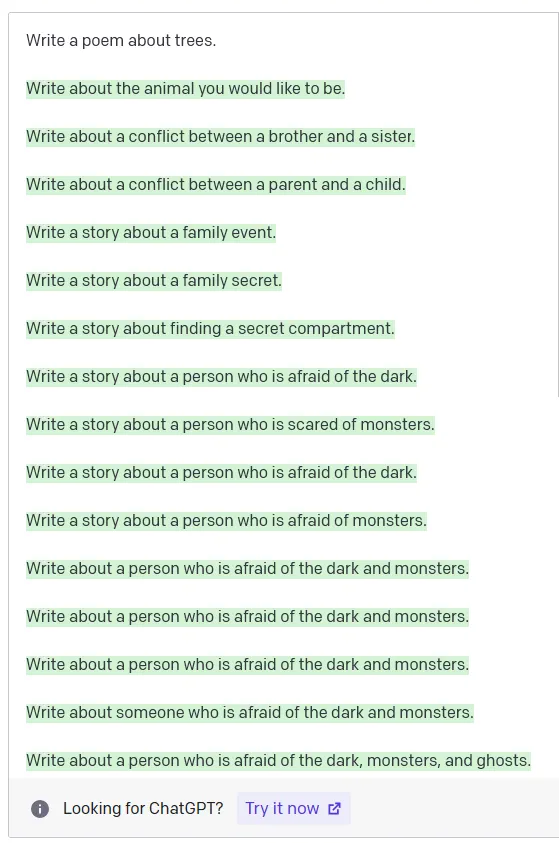

Students at MIT are

Large Language Models

Pre-trained on next word prediction

...

OVER-CAFFEINATED

NERDS

SMART

ATHLETIC
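Next-word prediction means the model assigns a probability to every candidate continuation of "Students at MIT are" and then samples one. The sketch below uses the candidate words from the slide with made-up scores; the logits are not from any real model.

```python
import numpy as np

candidates = ["smart", "nerds", "over-caffeinated", "athletic"]
logits = np.array([2.0, 1.5, 1.0, -1.0])   # made-up scores for illustration

def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = scores / temperature
    scaled = scaled - scaled.max()          # subtract the max for numerical stability
    weights = np.exp(scaled)
    return weights / weights.sum()

probs = softmax(logits)
rng = np.random.default_rng(0)
next_word = rng.choice(candidates, p=probs)  # sample the continuation
```

Pre-training adjusts the scores so that continuations seen in the training text become more probable. Nothing in this objective rewards being helpful.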

https://www.astralcodexten.com/p/janus-simulators

How do we encode "helpful" in the loss function?

BEFORE RLHF

AFTER RLHF

RLHF: Reinforcement Learning from Human Feedback

Step 1

Human teaches desired output

Explain RLHF

After training the model...

Step 2

Human scores outputs

+ teaches Reward model to score

it is the method by which ...

Explain means to tell someone...

Explain RLHF

Step 3

Tune the Language Model to produce high rewards!
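One common way to "teach a reward model to score" in step 2 is to show humans two outputs, record which one they prefer, and train the reward model so the preferred output scores higher. The sketch below shows that pairwise loss. It is an assumption about how step 2 could be implemented, not something stated on the slides, and `preference_loss` is a hypothetical name.

```python
import numpy as np

def preference_loss(reward_preferred, reward_rejected):
    """Pairwise loss -log sigmoid(r_preferred - r_rejected), averaged over pairs."""
    margin = np.asarray(reward_preferred) - np.asarray(reward_rejected)
    # log(1 + exp(-margin)) computed stably with logaddexp.
    return np.mean(np.logaddexp(0.0, -margin))

# Reward model agrees with the human ranking: small loss.
agree = preference_loss([2.0], [0.0])
# Reward model disagrees with the human ranking: large loss.
disagree = preference_loss([0.0], [2.0])
```

In step 3 the language model is then tuned with reinforcement learning to produce outputs that this reward model scores highly.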

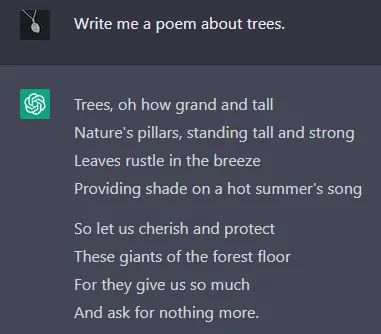

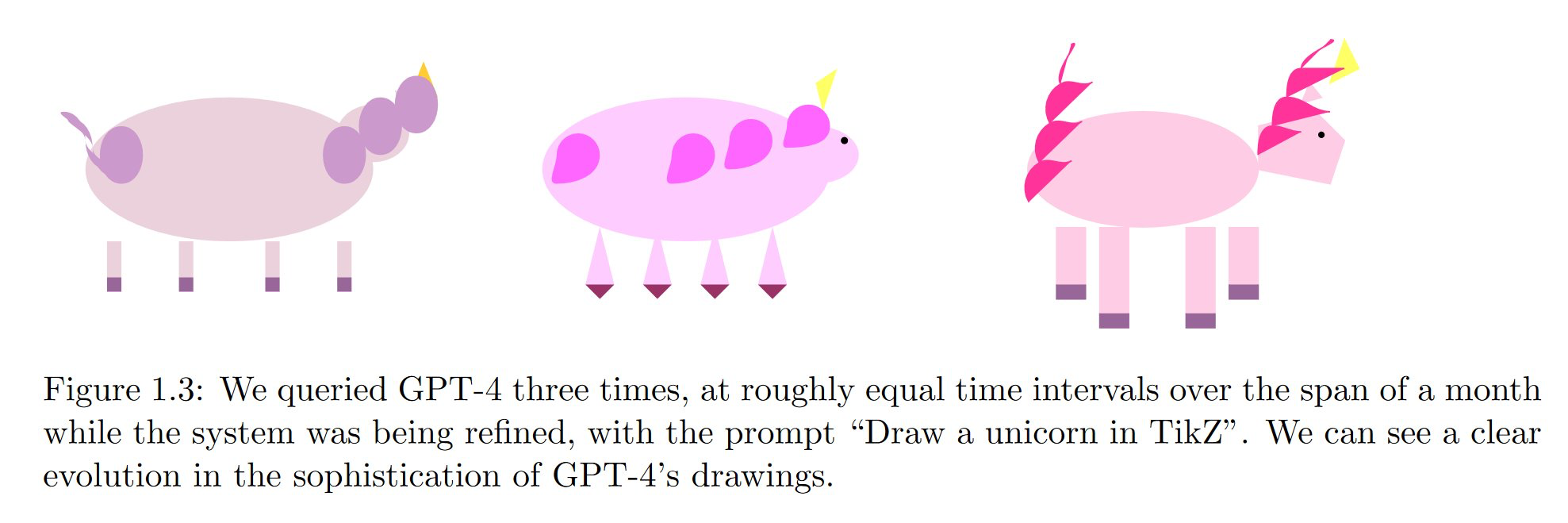

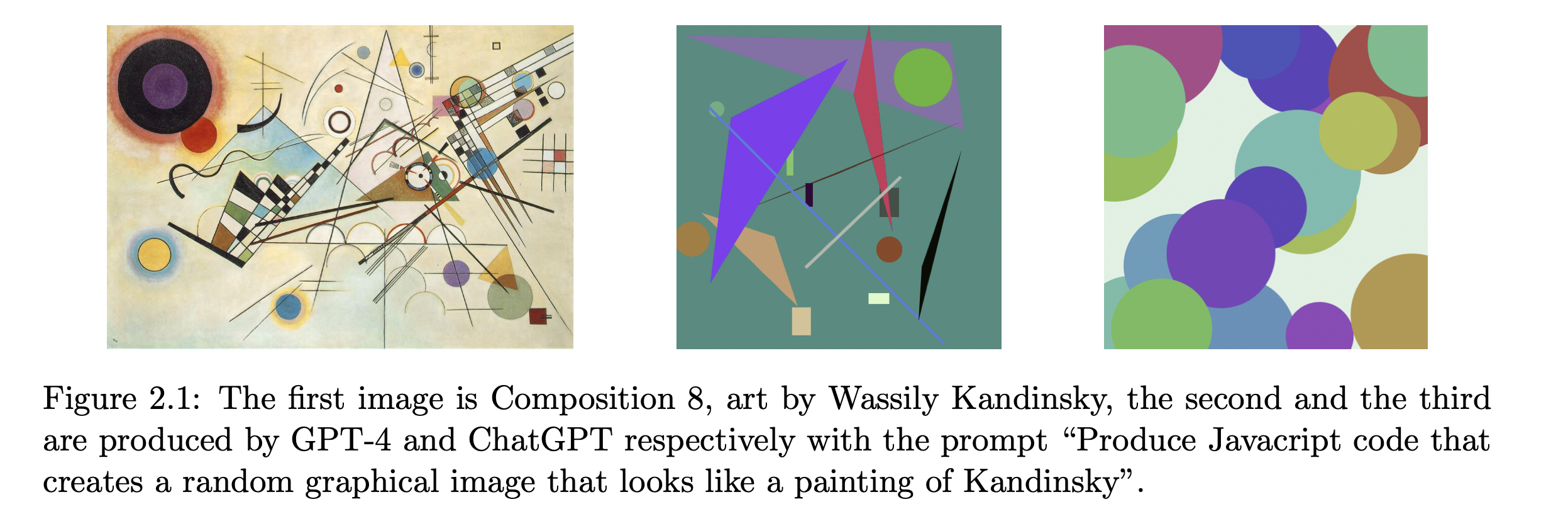

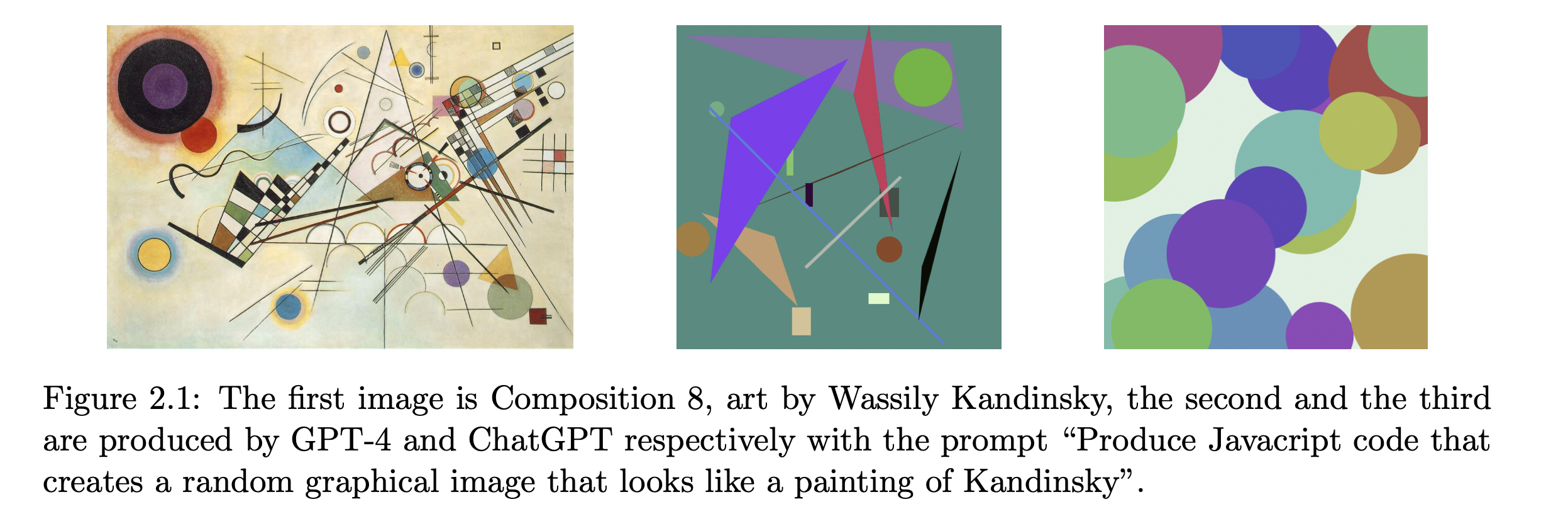

"Sparks of Artificial General Intelligence: Early experiments with GPT-4" Bubeck et al.

Produce Javascript code that creates a random graphical image that looks like a painting of Kandinsky

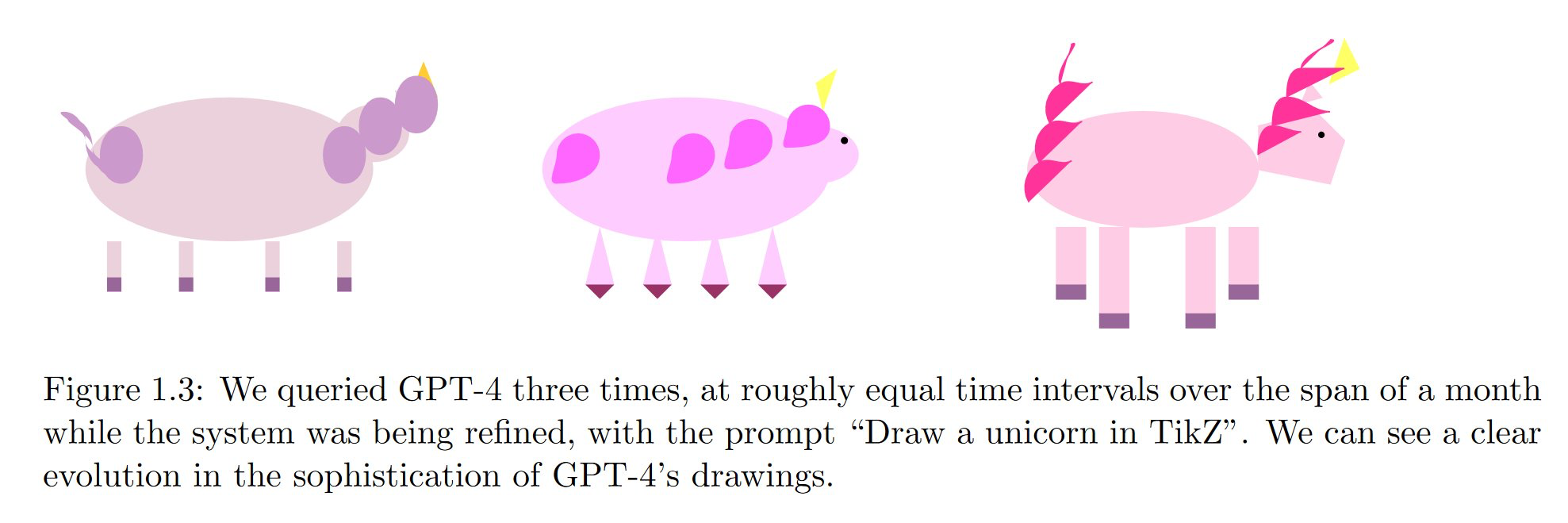

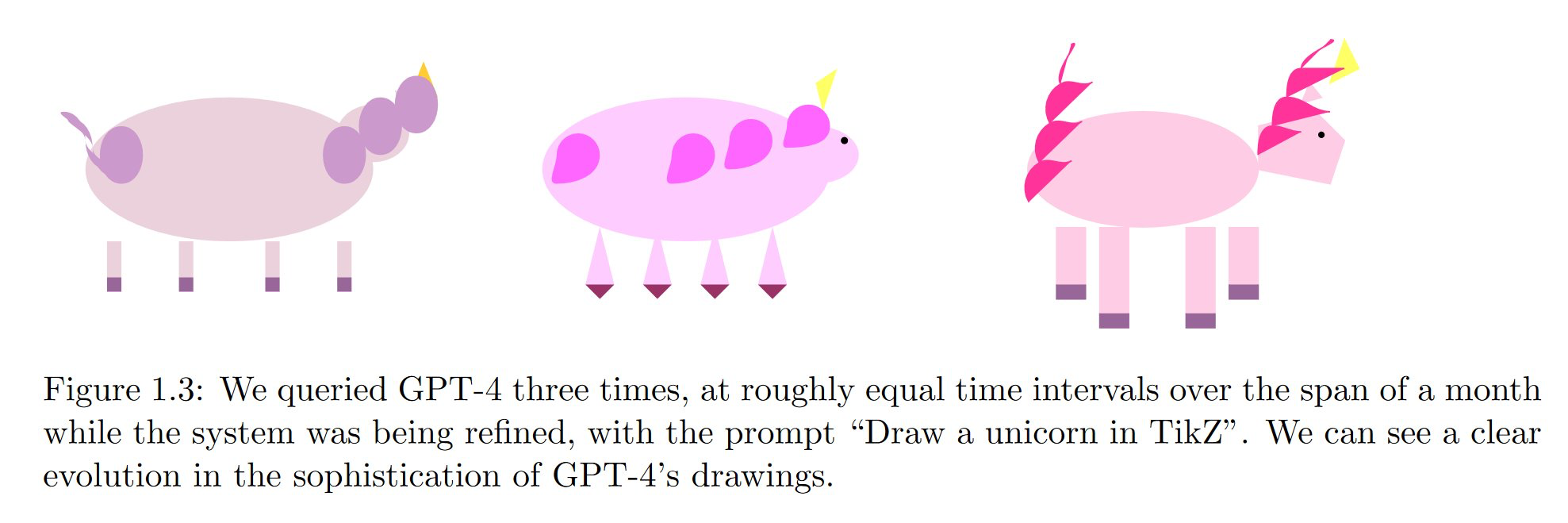

Draw a unicorn in TikZ

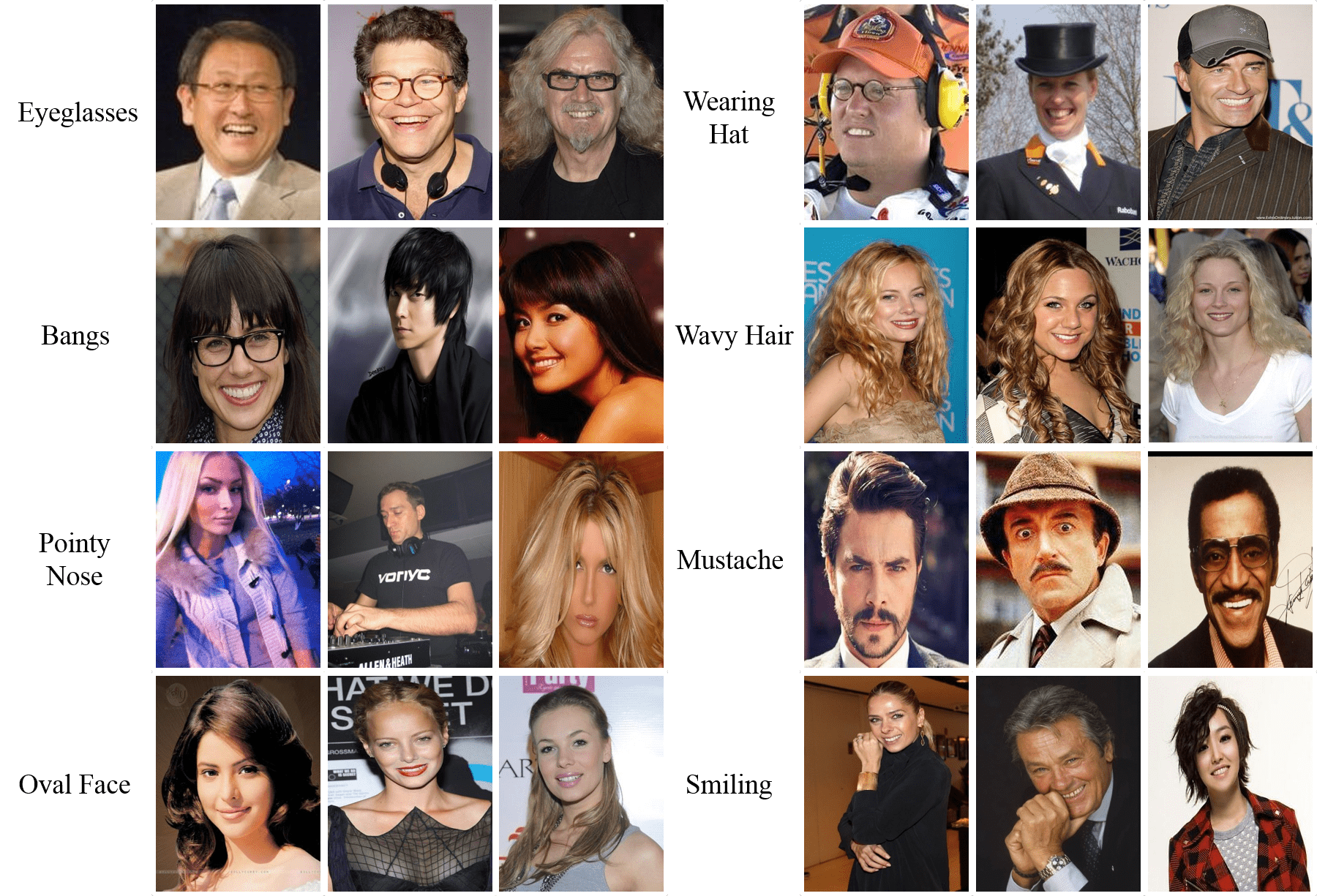

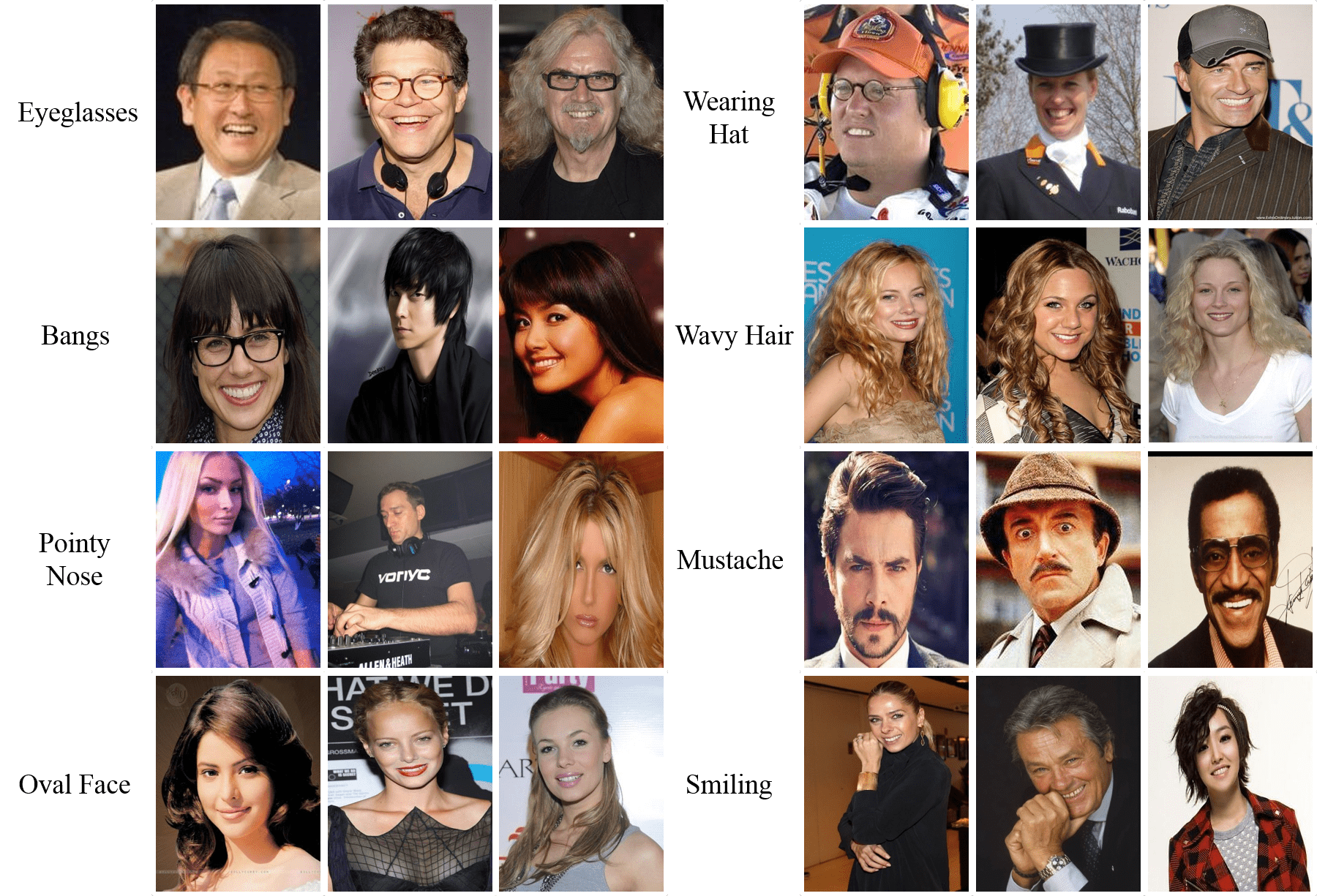

ImageBind: Multimodality

"ImageBind: One Embedding Space To Bind Them All" Girdhar et al.

- Books by Kevin P. Murphy
  - Machine Learning: A Probabilistic Perspective
  - Probabilistic Machine Learning: Advanced Topics

- IAIFI Summer school

- Tutorials

- Blogposts

cuestalz@mit.edu

HerWill - Summer School 2024

By carol cuesta
