Building Large Multimodal Foundation Models for Survey Astronomy

AAS 247 - Special Session: Advancing AI Infrastructure for Large Astronomy Datasets

François Lanusse

CNRS Researcher @ AIM, CEA Paris-Saclay

Polymathic AI

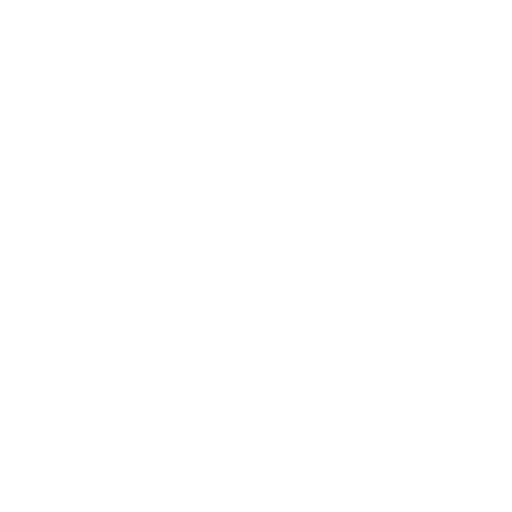

The Rise of The Foundation Model Paradigm

- Foundation Model approach

- Pretrain models on pretext tasks, without supervision, on very large scale datasets.

- Adapt pretrained models to downstream tasks.

Rethinking the way we use Deep Learning

Conventional scientific workflow with deep learning

- Build a large training set of realistic data

- Design a neural network architecture for your data

- Deal with data preprocessing/normalization issues

- Train your network on some GPUs for a day or so

- Apply your network to your problem

- Throw the network away...

=> Because it's completely specific to your data, and to the one task it's trained for.

Conventional researchers @ CMU

Circa 2016

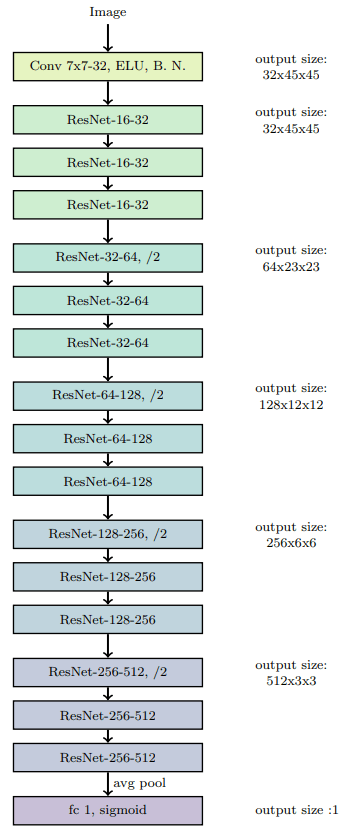

CMU DeepLens (Lanusse et al. 2017)

Rethinking the way we use Deep Learning

Foundation Model-based Scientific Workflow

- Build a small training set of realistic data

- Design a neural network architecture for your data (already taken care of)

- Deal with data preprocessing/normalization issues (already taken care of)

- Adapt a model in a matter of minutes

- Apply your model to your problem

- Throw the network away... (no longer necessary)

=> Greatly reduces time to science

What This New Paradigm Could Mean for Us

- Never have to retrain my own neural networks from scratch

- Existing pre-trained models would already be near optimal, no matter the task at hand

- Practical large scale Deep Learning even in very few example regime

-

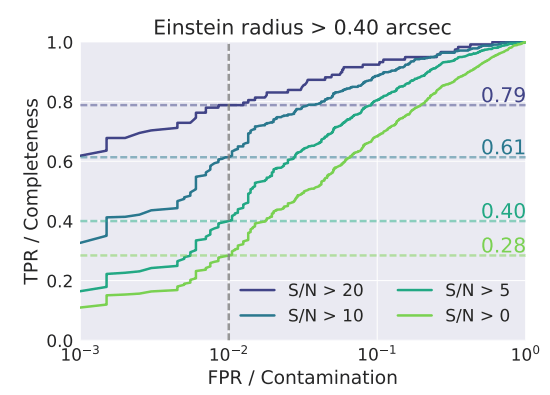

Searching for very rare objects in large surveys like Euclid or LSST becomes possible

-

Searching for very rare objects in large surveys like Euclid or LSST becomes possible

- If the information is embedded in a space where it becomes linearly accessible, very simple analysis tools are enough for downstream analysis

- In the future, survey pipelines may add vector embeddings of detected objects to catalogs; these would be enough for most tasks, without the need to go back to pixels

=> Alright, let's make it happen!
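To make the "linearly accessible" point concrete, here is a minimal sketch of a few-shot search for rare objects using only a linear rule on precomputed embeddings. The embedding values, object names, and the nearest-centroid choice are all assumptions made for illustration; they do not come from any released model.

```python
def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]

def fit_linear_probe(pos, neg):
    """Nearest-centroid rule written as a linear classifier: w.x + b > 0."""
    mu_p, mu_n = centroid(pos), centroid(neg)
    w = [p - q for p, q in zip(mu_p, mu_n)]
    # The decision boundary is the hyperplane halfway between the two centroids.
    b = -0.5 * sum(wi * (p + q) for wi, p, q in zip(w, mu_p, mu_n))
    return w, b

def score(w, b, x):
    """Signed distance-like score; positive means 'looks like the rare class'."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

# Toy 3-d "embeddings" (made-up values): a handful of labelled examples per class.
rare = [[0.9, 0.1, 0.0], [1.0, 0.2, 0.1], [0.8, 0.0, 0.1]]
common = [[0.1, 0.9, 0.2], [0.0, 1.0, 0.1], [0.2, 0.8, 0.0]]
w, b = fit_linear_probe(rare, common)

# Hypothetical catalog of objects that already carry an embedding column.
catalog = {"obj_a": [0.95, 0.05, 0.05], "obj_b": [0.05, 0.95, 0.1]}
candidates = [name for name, emb in catalog.items() if score(w, b, emb) > 0]
```

With three labelled examples per class, the search reduces to one dot product per catalog entry, which is the kind of workload that stays cheap at survey scale.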

Data Challenge

The Challenges of Scientific Data

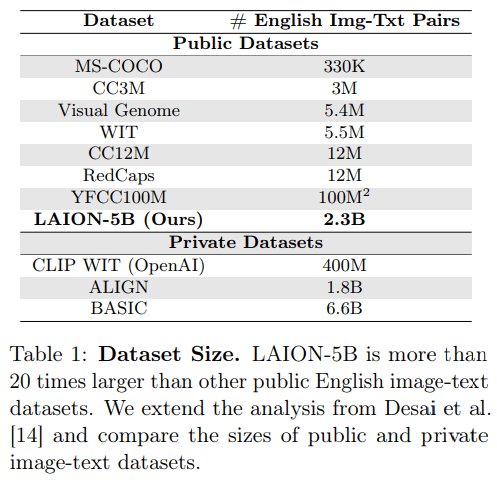

- Success of recent foundation models is driven by large corpora of uniform data (e.g. LAION-5B).

- Scientific data comes with many additional challenges:

- Metadata matters

- Wide variety of measurements/observations

- Accessing and formatting data requires very specific expertise

Credit: Melchior et al. 2021

Credit: DESI collaboration/DESI Legacy Imaging Surveys/LBNL/DOE & KPNO/CTIO/NOIRLab/NSF/AURA/unWISE

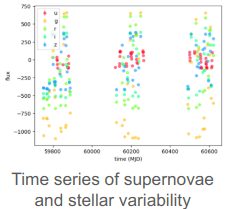

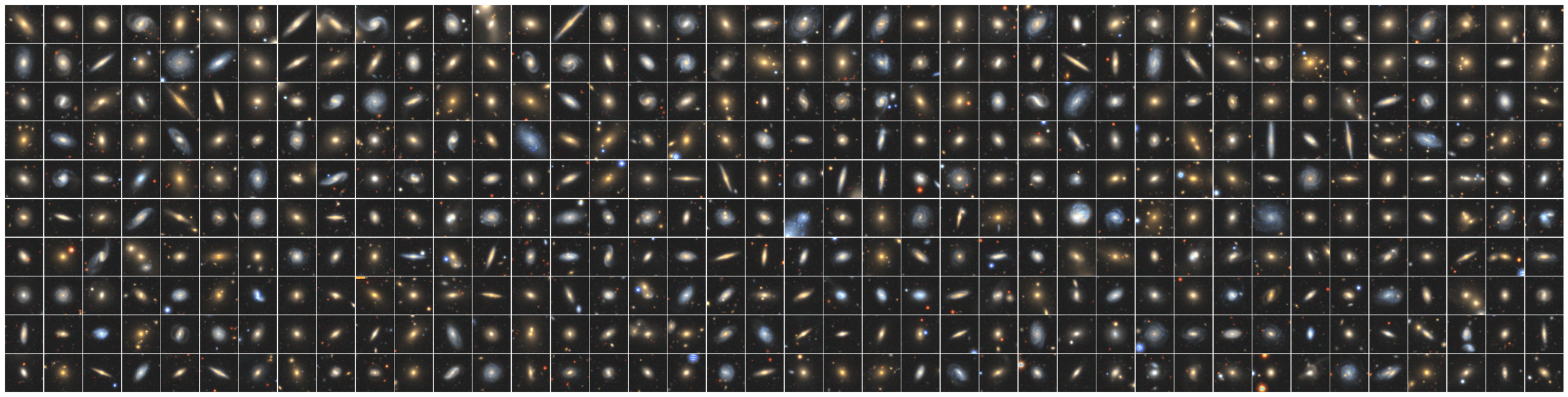

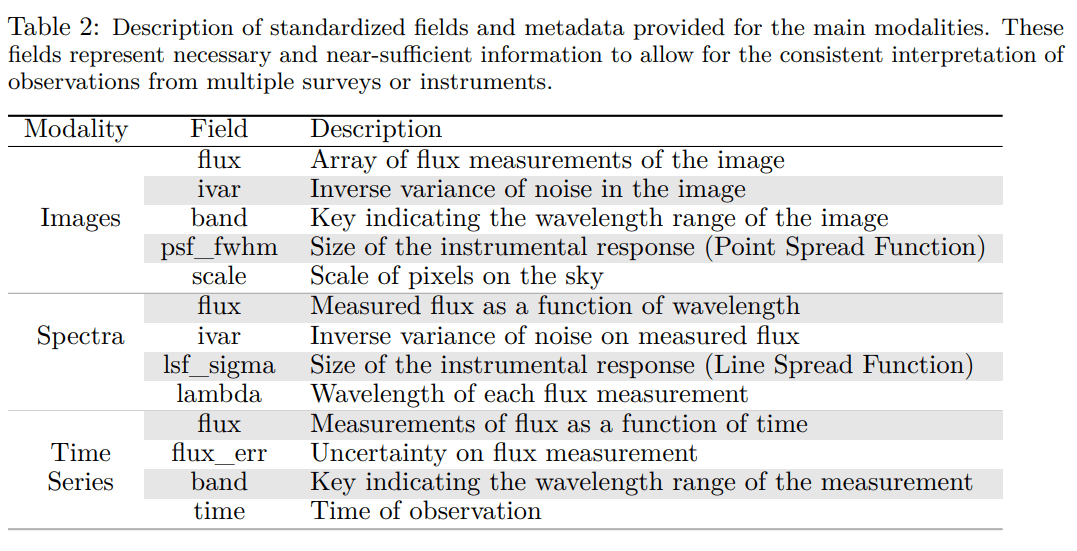

The Multimodal Universe

Enabling Large-Scale Machine Learning with 100TBs of Astronomical Scientific Data

Collaborative project with about 30 contributors

Presented at the NeurIPS 2024 Datasets and Benchmarks Track

The MultiModal Universe Project

- Goal: Assemble the first large-scale multimodal dataset for machine learning in astrophysics.

- Strategy:

- Engage with a broad community of AI+Astro experts.

- Target large astronomical surveys, varied types of instruments, many different astrophysics sub-fields.

- Adopt standardized conventions for storing and accessing data and metadata through mainstream tools (e.g. Hugging Face Datasets).

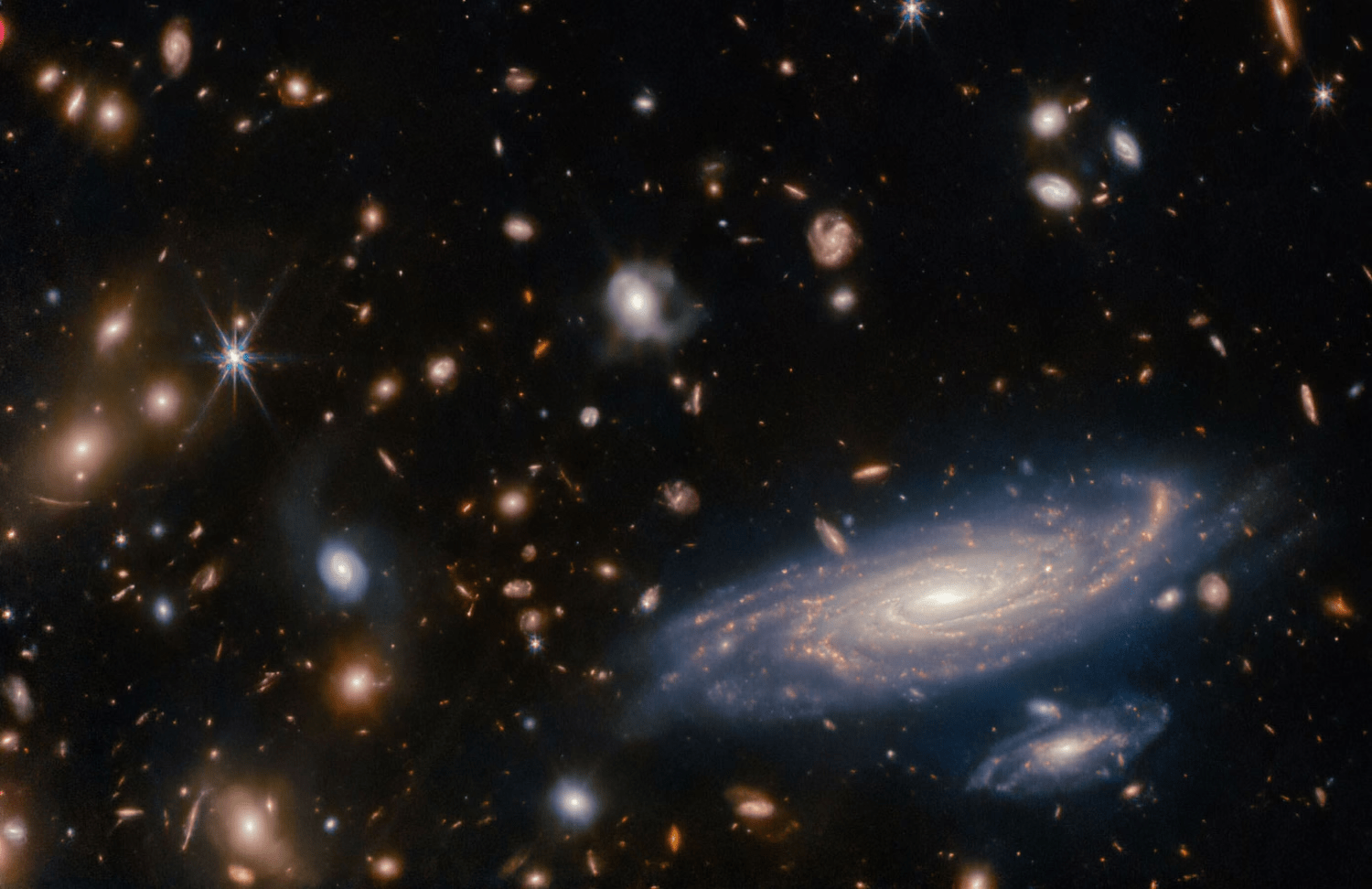

Ground-based imaging from Legacy Survey

Space-based imaging from JWST
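As a rough illustration of what "standardized conventions for storing and accessing data and metadata" can look like, the sketch below adapts rows from two differently formatted surveys into one shared record layout. The `Sample` schema, the field names, and the input rows are hypothetical and are not the project's actual format.

```python
from dataclasses import dataclass, field

@dataclass
class Sample:
    """One observation in a shared layout (hypothetical schema, for illustration)."""
    object_id: str
    ra: float        # right ascension, degrees
    dec: float       # declination, degrees
    modality: str    # e.g. "image" or "spectrum"
    data: dict = field(default_factory=dict)  # modality-specific payload and metadata

def from_imaging_row(row):
    """Adapt a made-up imaging-survey row to the shared layout."""
    return Sample(str(row["id"]), row["ra_deg"], row["dec_deg"], "image",
                  {"bands": row["bands"], "pixel_scale_arcsec": row["scale"]})

def from_spectro_row(row):
    """Adapt a made-up spectroscopic-survey row to the shared layout."""
    return Sample(str(row["targetid"]), row["RA"], row["DEC"], "spectrum",
                  {"wavelength_unit": "angstrom", "n_pixels": row["npix"]})

# Toy input rows with survey-specific column names (values are invented).
samples = [
    from_imaging_row({"id": 42, "ra_deg": 150.1, "dec_deg": 2.2,
                      "bands": "griz", "scale": 0.25}),
    from_spectro_row({"targetid": 7, "RA": 150.1, "DEC": 2.2, "npix": 4000}),
]

# Downstream code can now rely on the same fields regardless of the source survey.
positions = [(s.ra, s.dec) for s in samples]
```

The design point is that survey-specific knowledge lives in the small adapter functions, so downstream training code never has to know which survey a record came from.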

MultiModal Universe Infrastructure

Architecture Challenge

The Universal Neural Architecture Challenge

Most General: single model capable of processing all types of data

Most Specific: independent models for all types of data

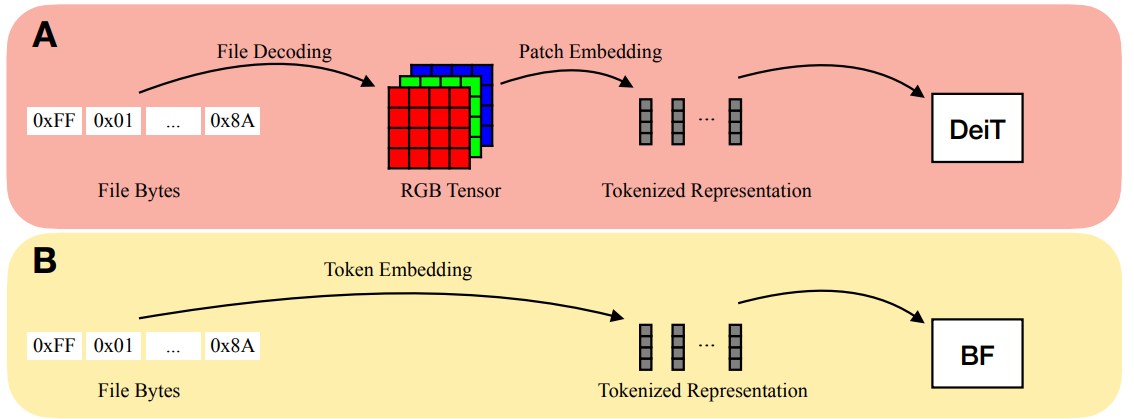

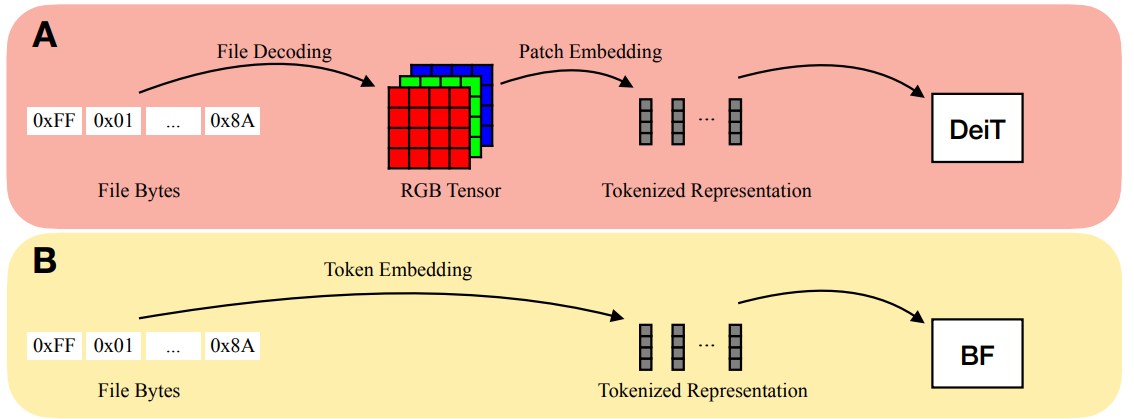

Bytes Are All You Need (Horton et al. 2023)

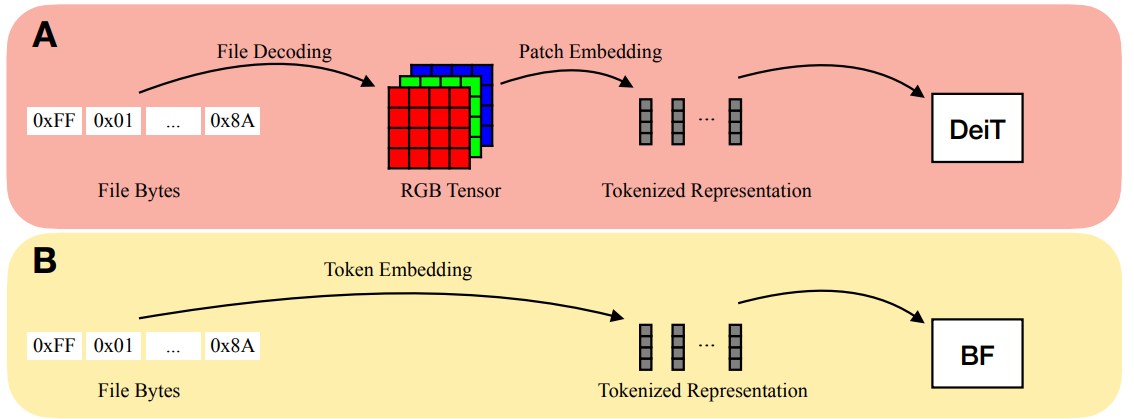

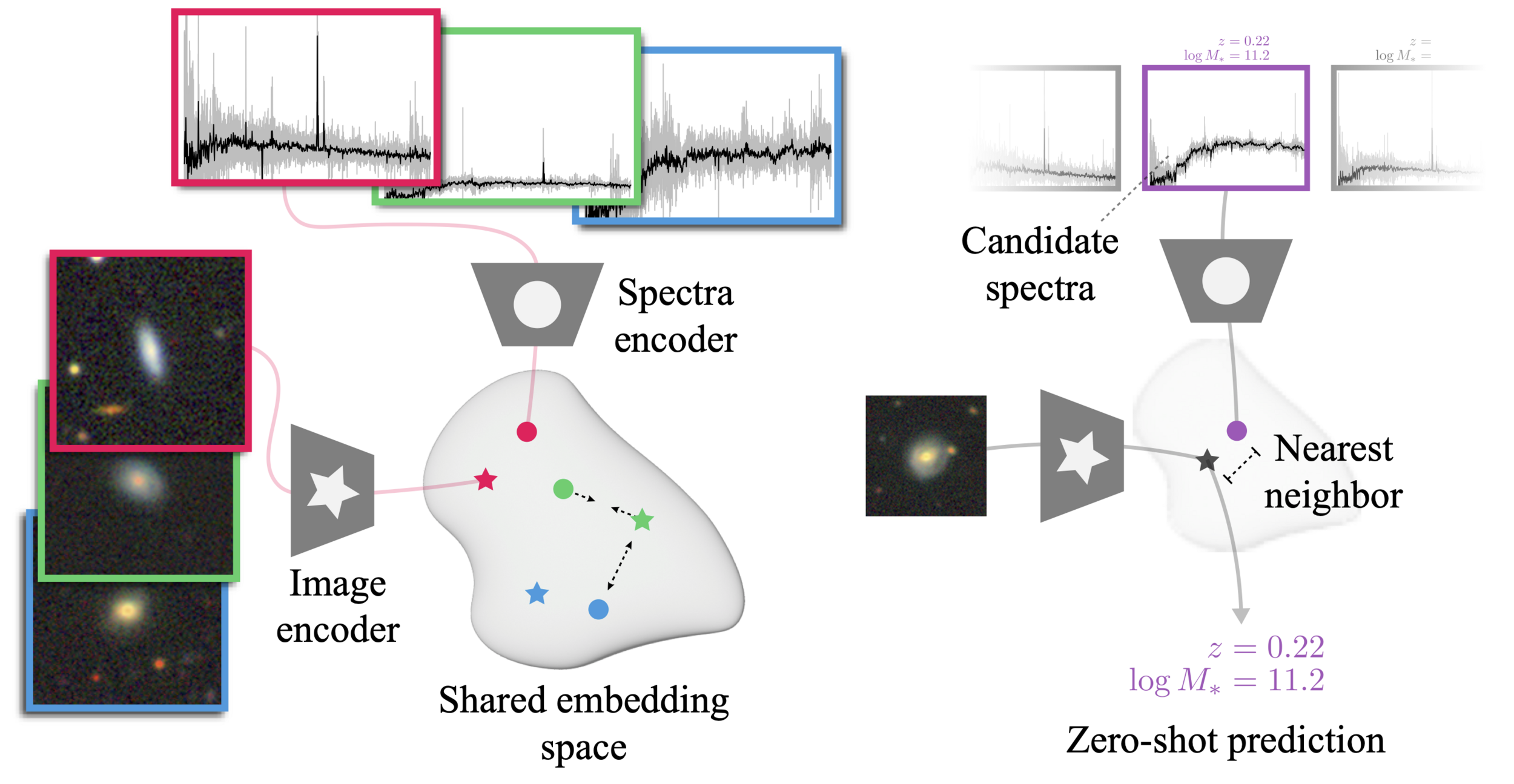

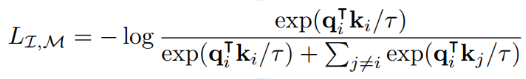

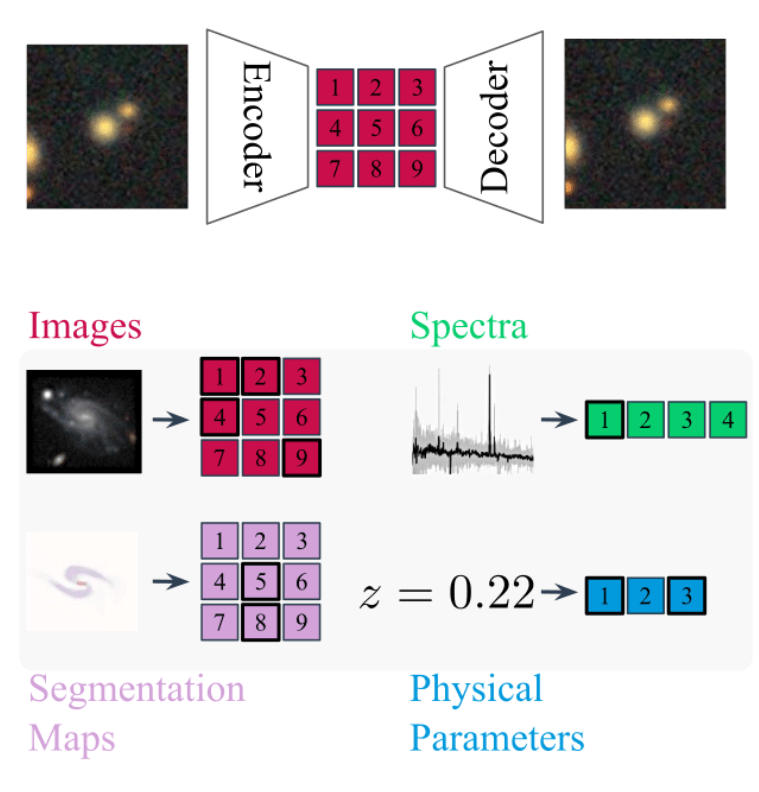

AstroCLIP (Parker et al. 2024)

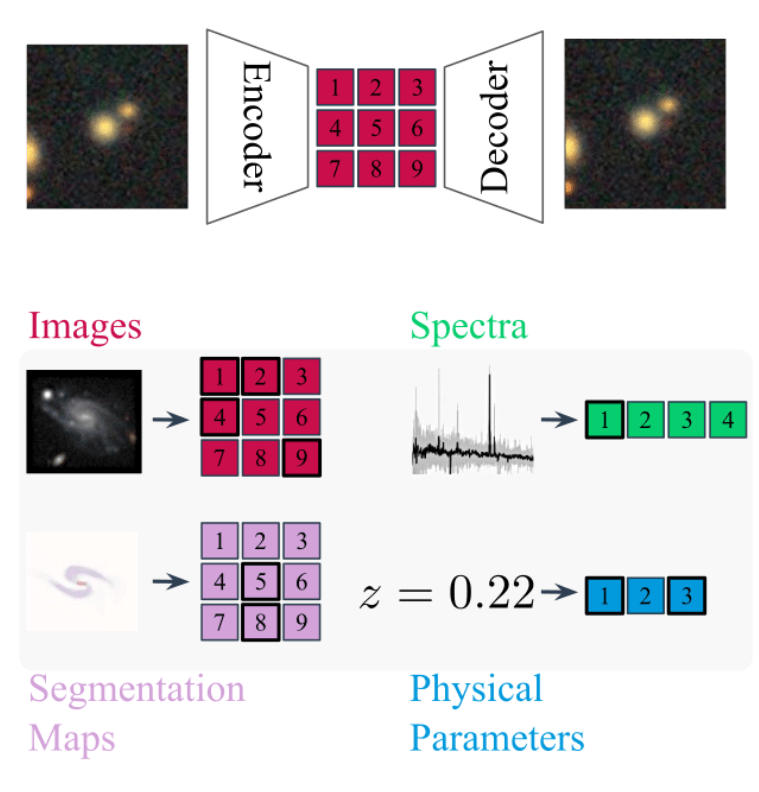

Early Fusion Multimodal Models

AION-1

Omnimodal Foundation Model for Astronomical Surveys

Accepted at NeurIPS 2025, spotlight presentation at NeurIPS 2025 AI4Science Workshop

Project led by: François Lanusse, Liam Parker, Jeff Shen, Tom Hehir, Ollie Liu, Lucas Meyer, Sebastian Wagner-Carena, Helen Qu, Micah Bowles

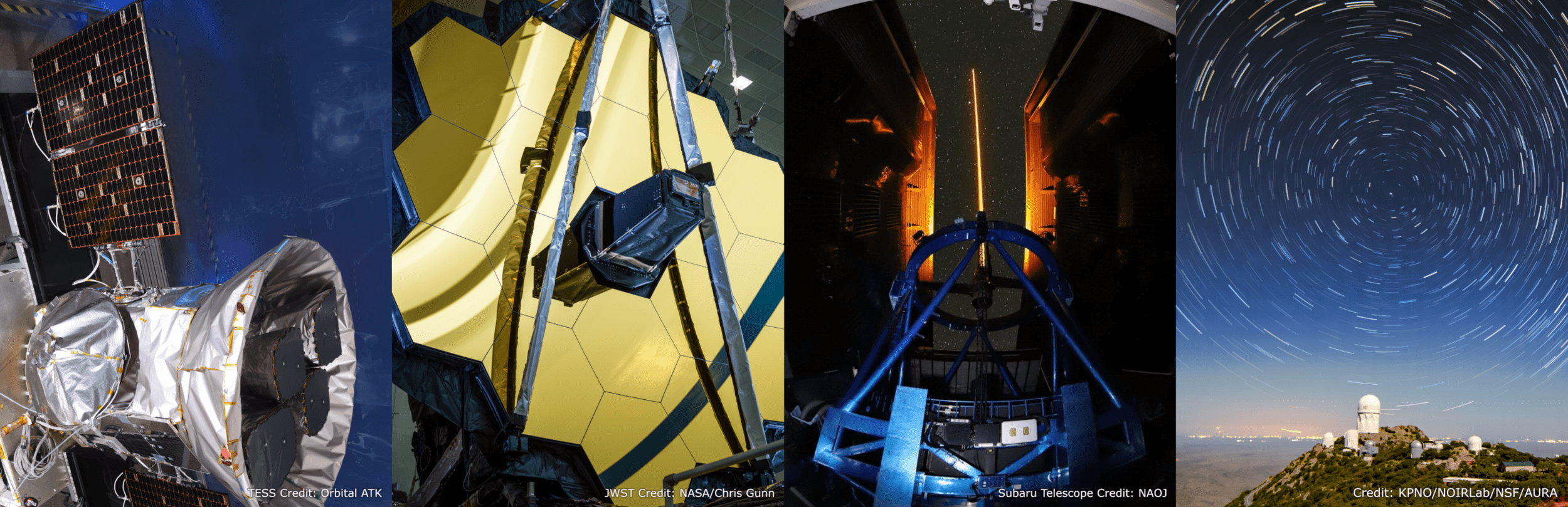

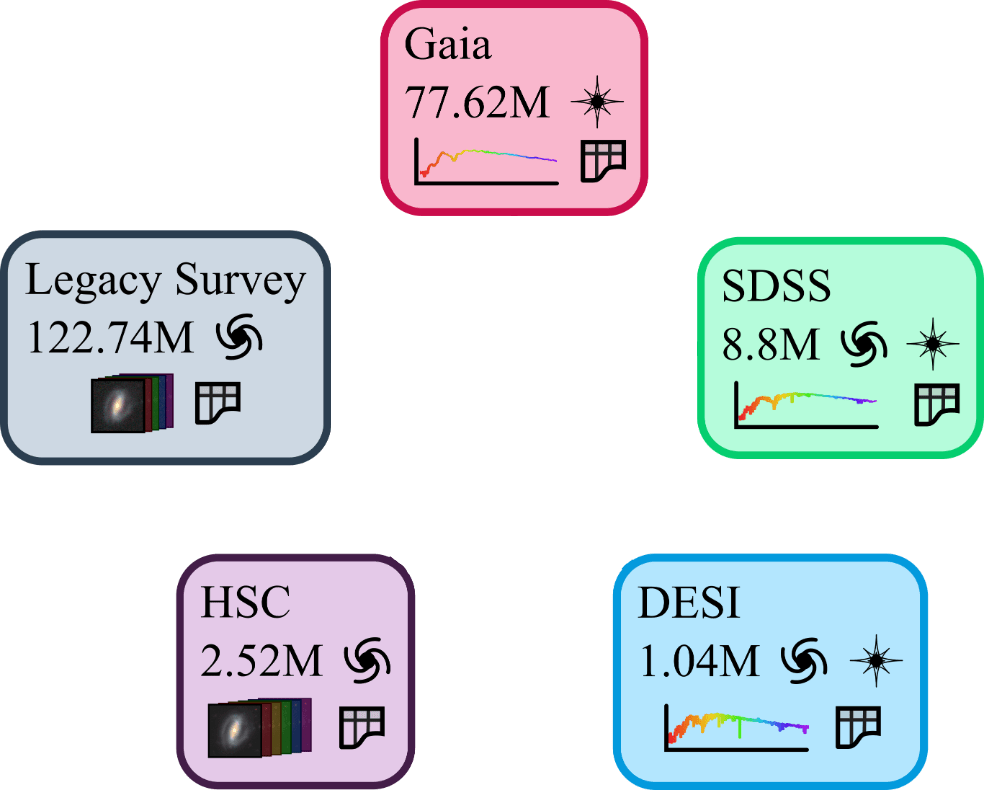

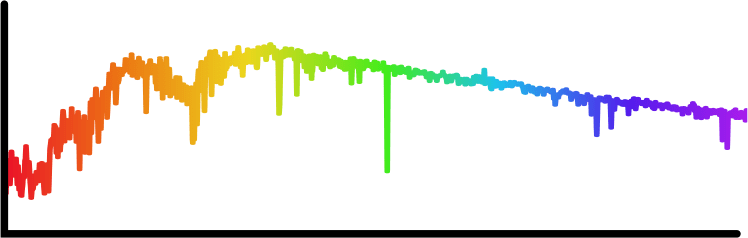

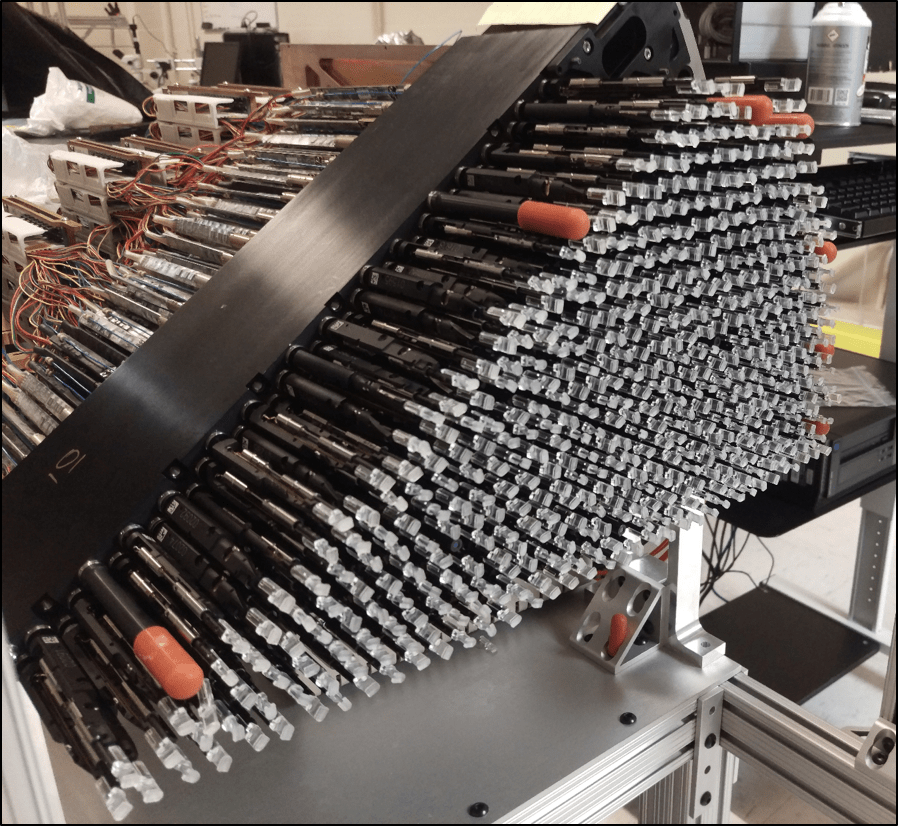

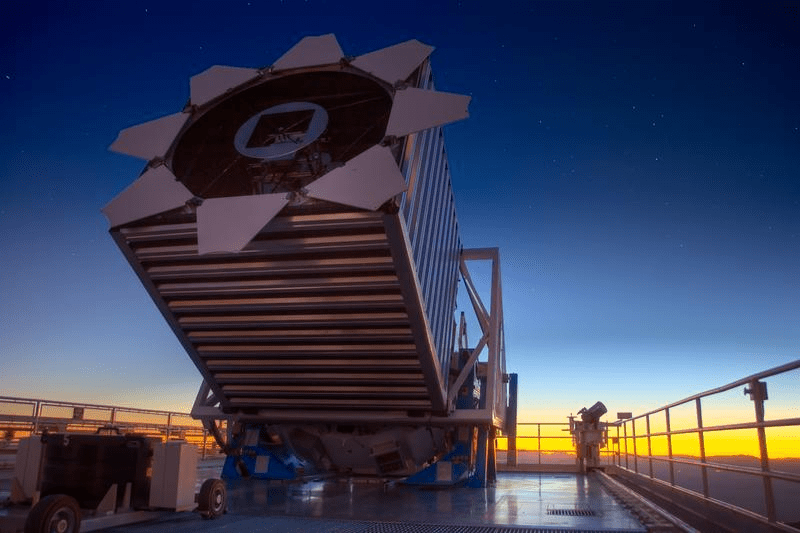

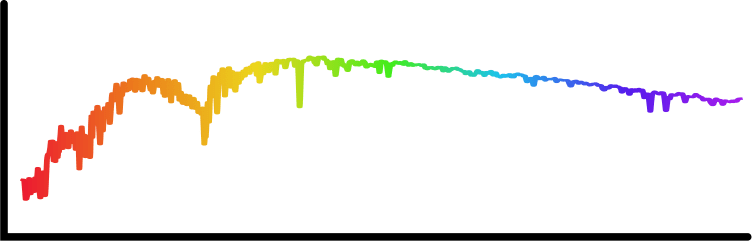

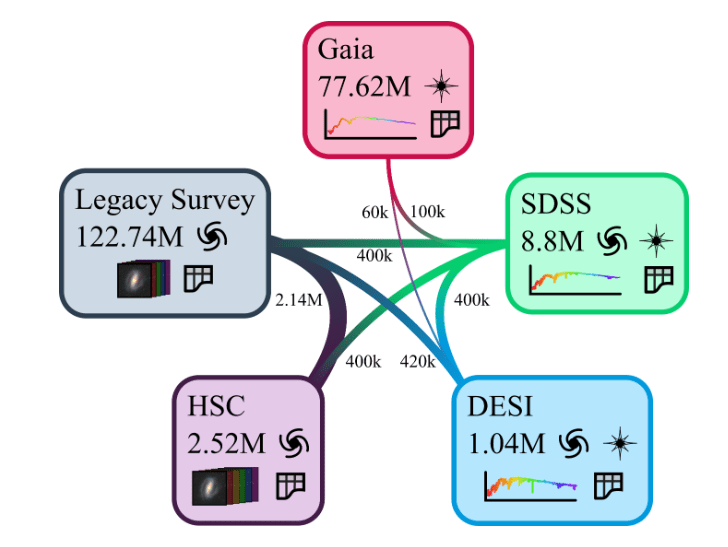

The AION-1 Data Pile

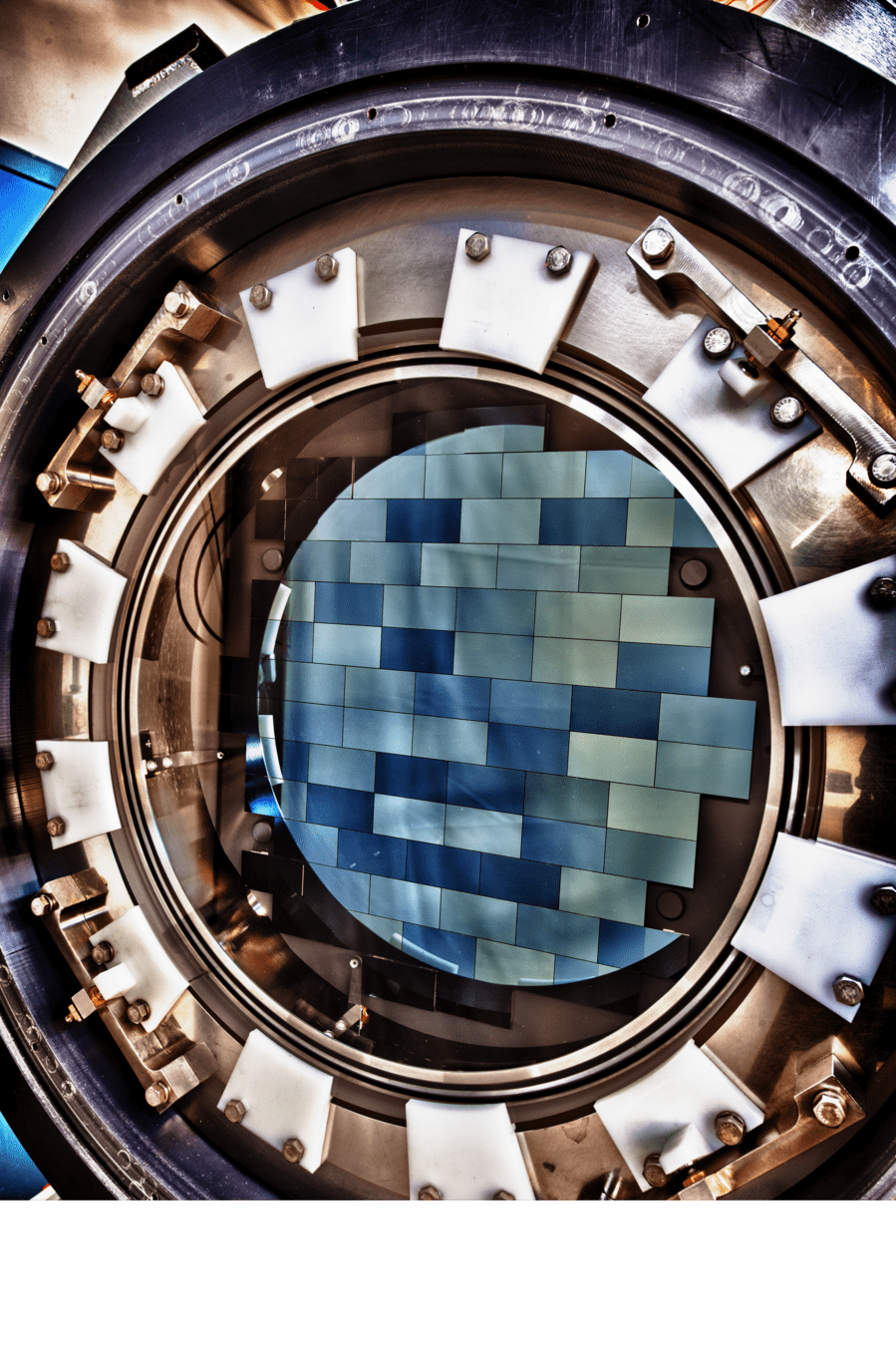

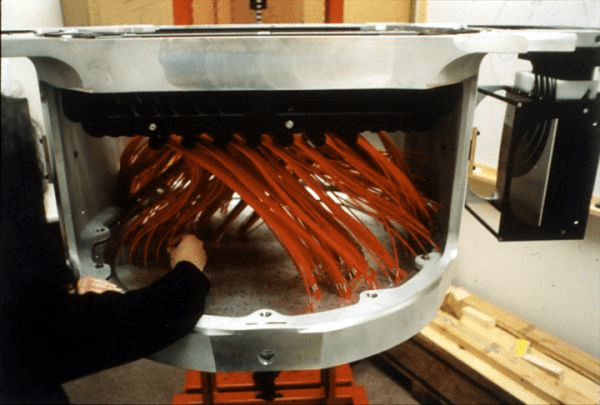

(Blanco Telescope and Dark Energy Camera. Credit: Reidar Hahn/Fermi National Accelerator Laboratory)

(Subaru Telescope and Hyper Suprime Cam. Credit: NAOJ)

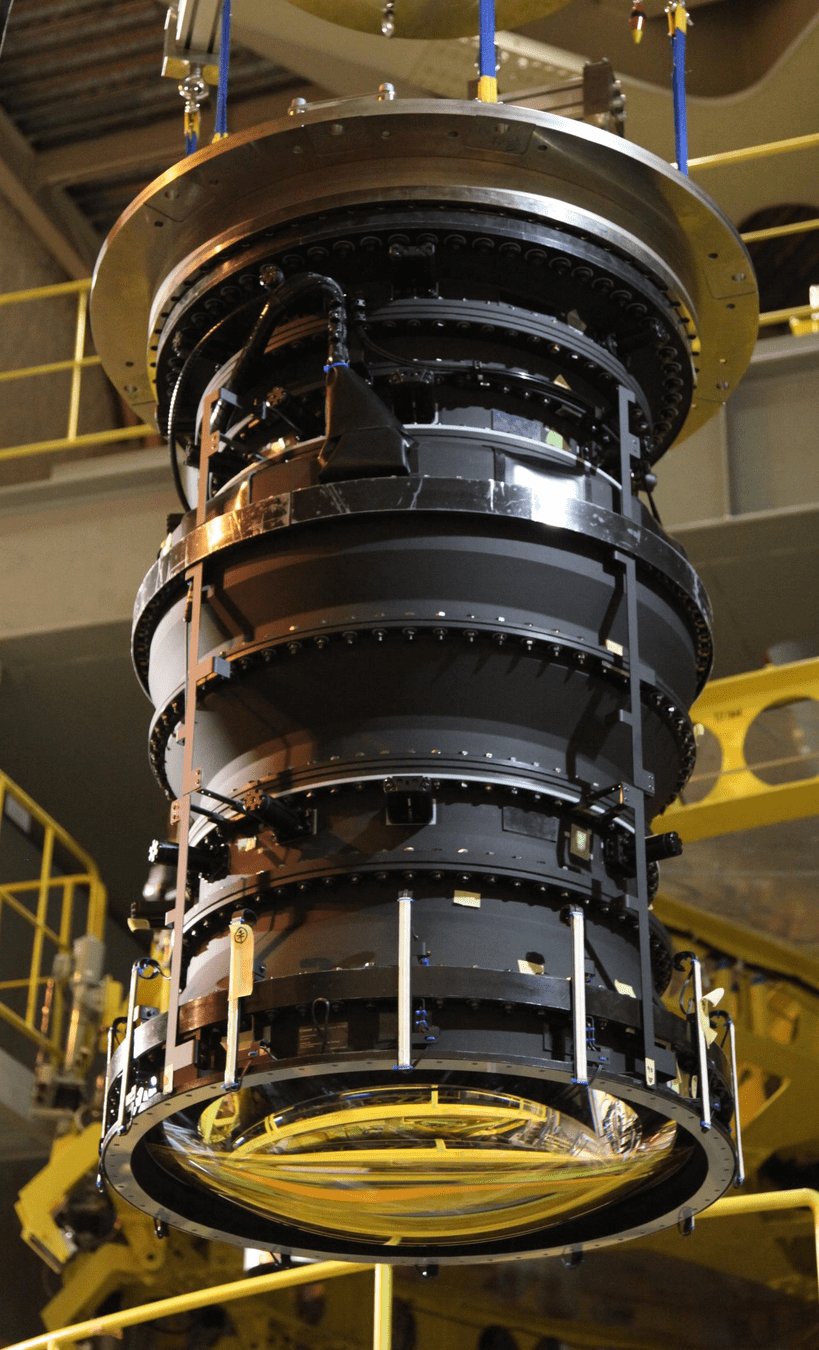

(Dark Energy Spectroscopic Instrument)

(Sloan Digital Sky Survey. Credit: SDSS)

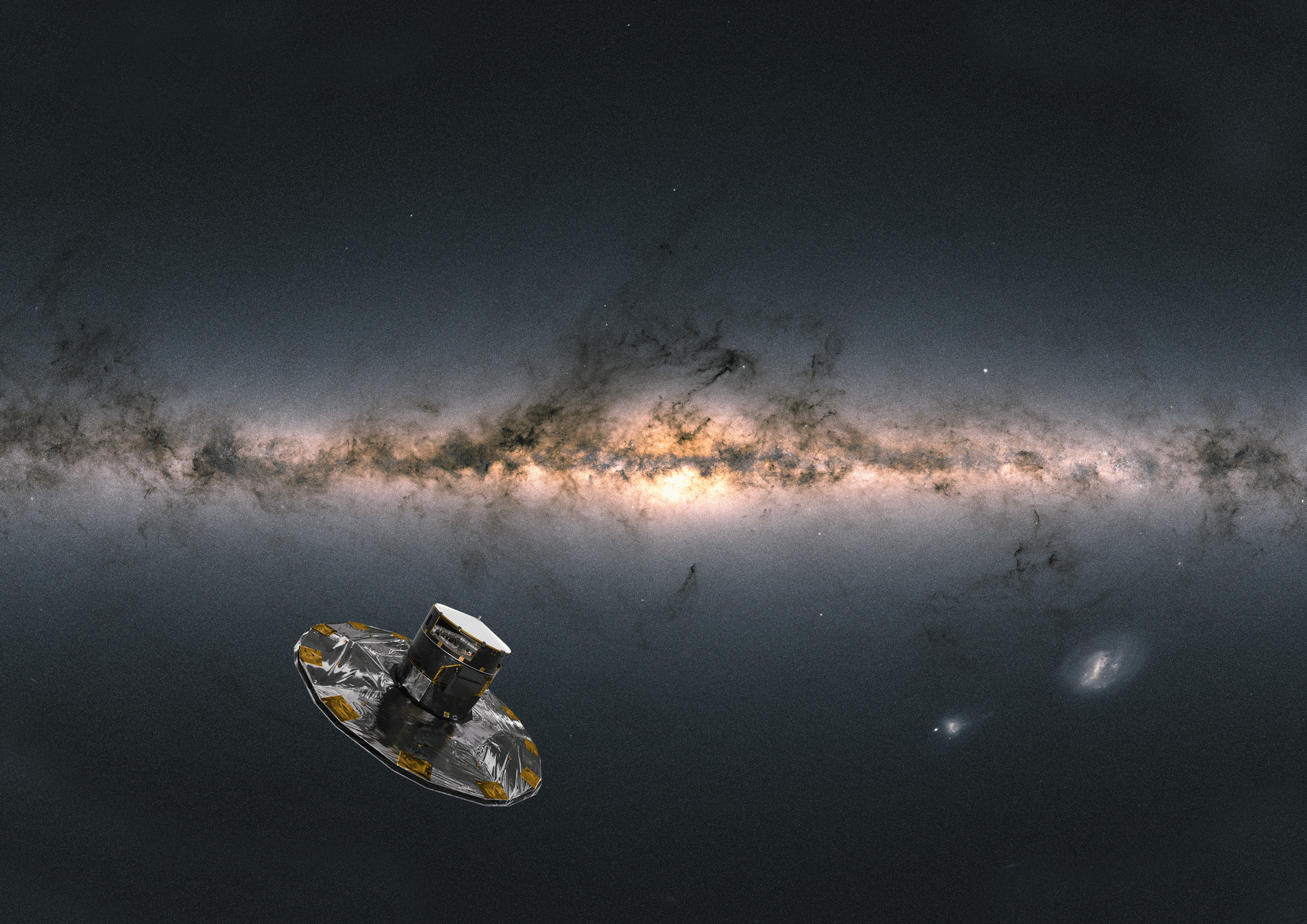

(Gaia Satellite. Credit: ESA/ATG)

Cuts: extended, full color griz, z < 21

Cuts: extended, full color grizy, z < 21

Cuts: parallax / parallax_error > 10

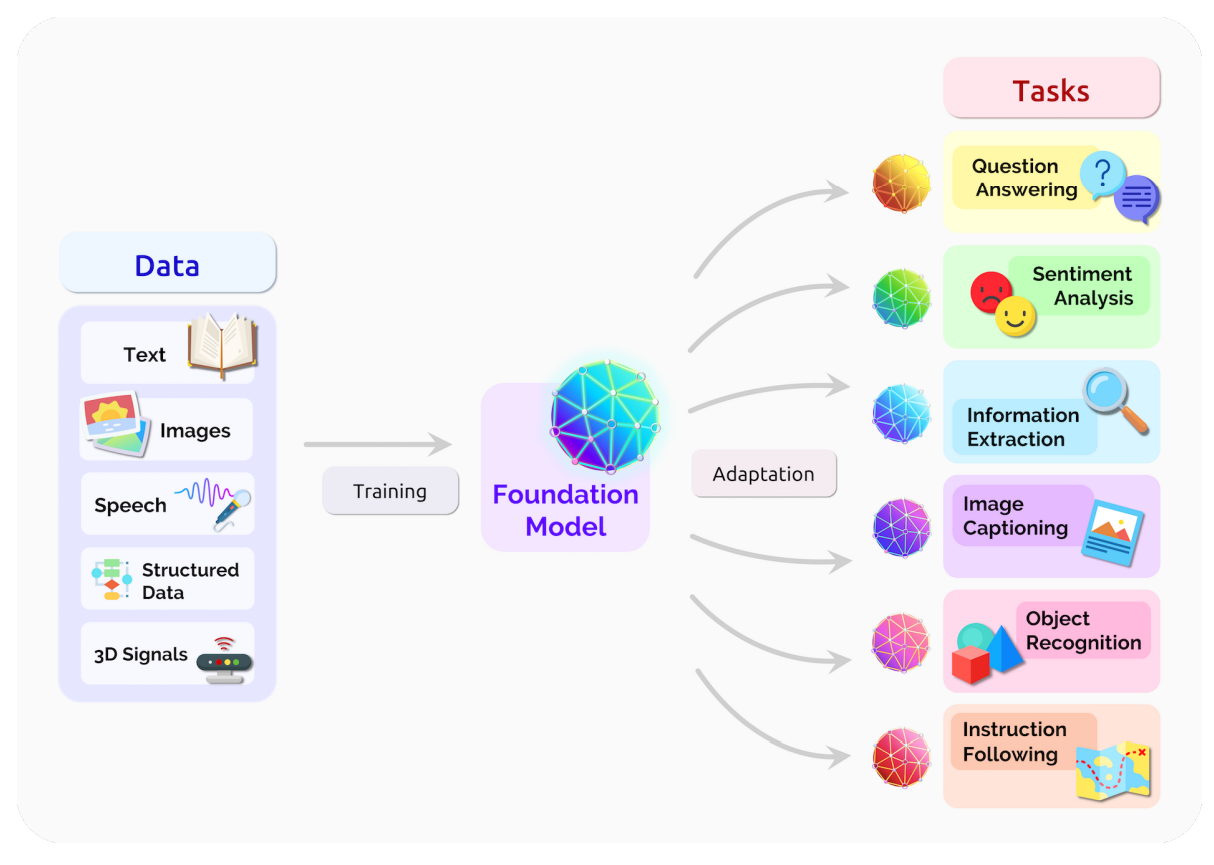

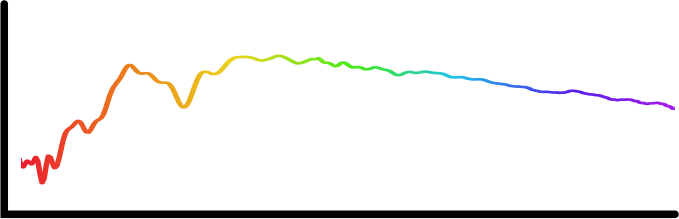

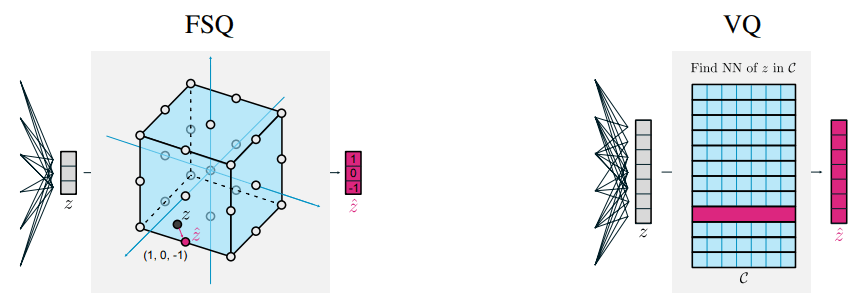

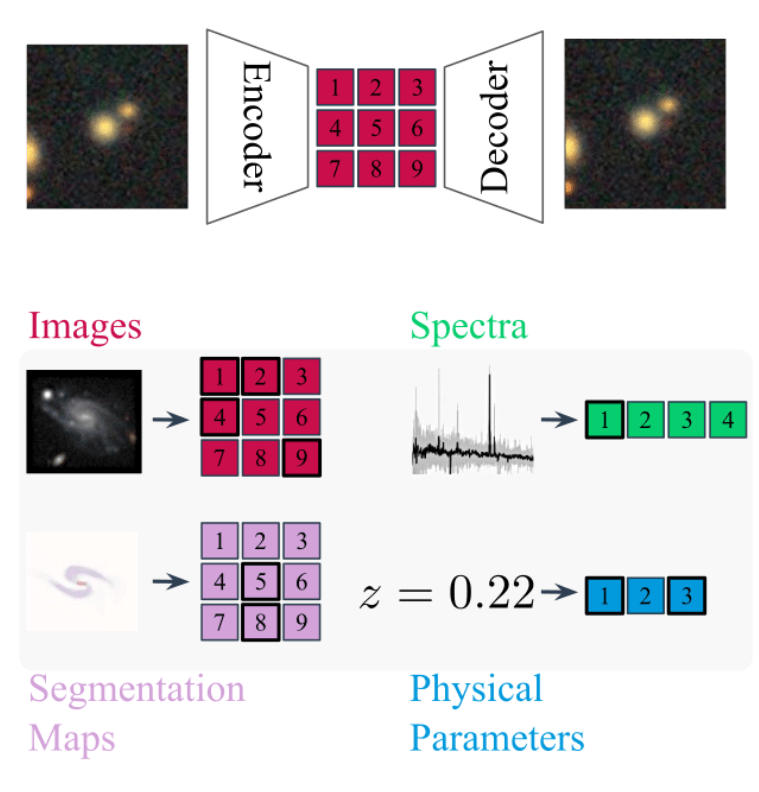

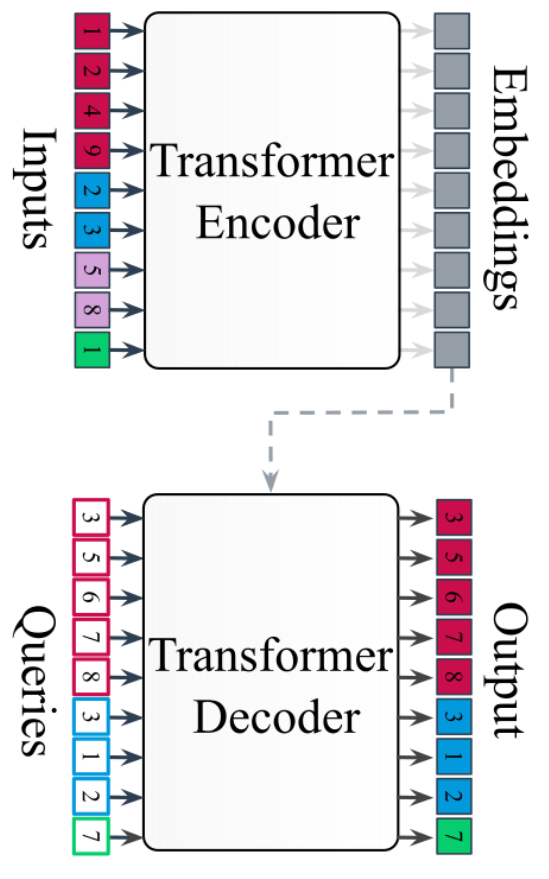

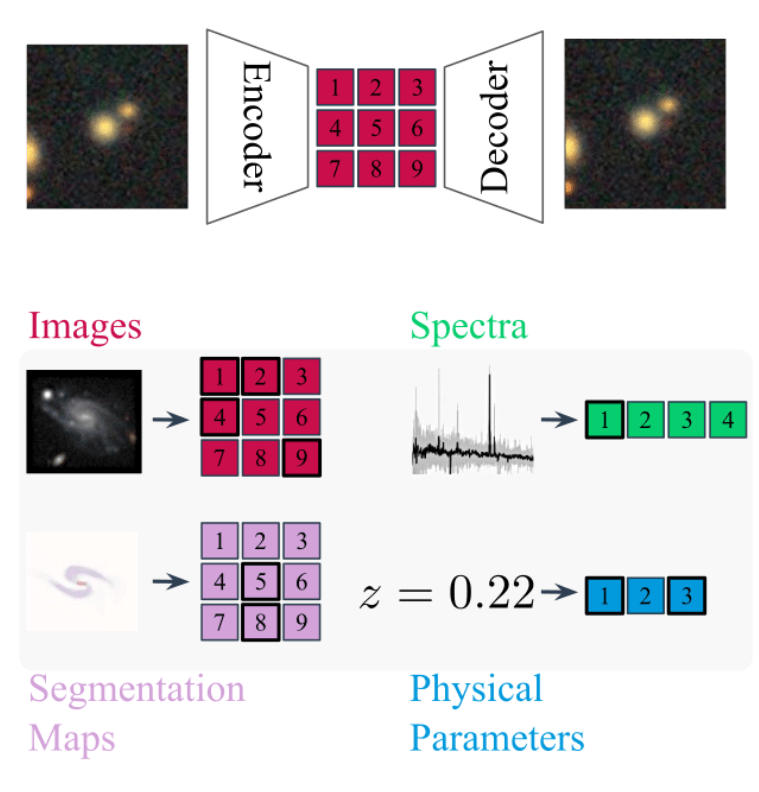

Standardizing all modalities through tokenization

- For each modality class (e.g. image, spectrum) we build dedicated metadata-aware tokenizers

- For AION-1, we integrate 39 different modalities (different instruments, different measurements, etc.)
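To illustrate what turning a measurement into tokens means, here is a toy scalar tokenizer based on uniform binning. It is only a sketch of the general idea: the value range, the bin count, and the binning scheme are assumptions chosen for the example and are not the tokenizers used for AION-1.

```python
def make_scalar_tokenizer(lo, hi, n_bins):
    """Return encode/decode functions mapping a float in [lo, hi] to an integer token."""
    width = (hi - lo) / n_bins

    def encode(x):
        x = min(max(x, lo), hi)                      # clip out-of-range values
        return min(int((x - lo) / width), n_bins - 1)

    def decode(token):
        return lo + (token + 0.5) * width            # centre of the bin

    return encode, decode

# Hypothetical example: a magnitude-like quantity between 15 and 25, 1000 bins.
encode, decode = make_scalar_tokenizer(lo=15.0, hi=25.0, n_bins=1000)
token = encode(21.337)       # integer id that a transformer can consume
recovered = decode(token)    # approximate value, accurate to half a bin width
```

Once every modality is reduced to integer tokens like this, a single sequence model can process all of them, with the quantization error set by the bin width.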

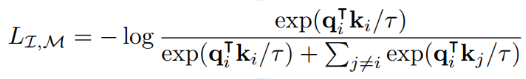

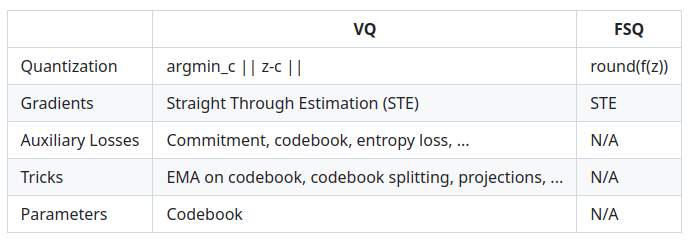

Any-to-Any Modeling with Generative Masked Modeling

- Training is done by pairing observations of the same objects from different instruments.

- Each input token is tagged with a modality embedding that specifies provenance metadata.

- Model is trained by cross-modal generative masked modeling (Mizrahi et al. 2023)

=> Learns the joint and all conditional distributions of provided modalities:

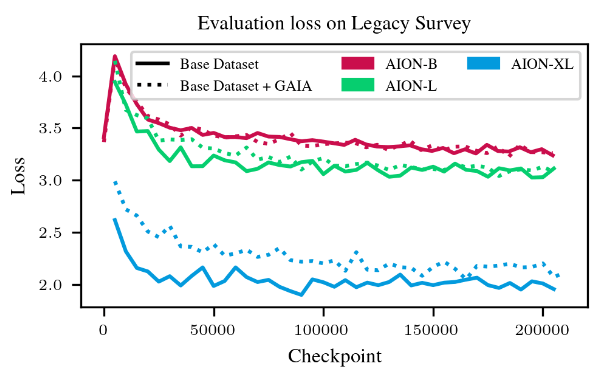

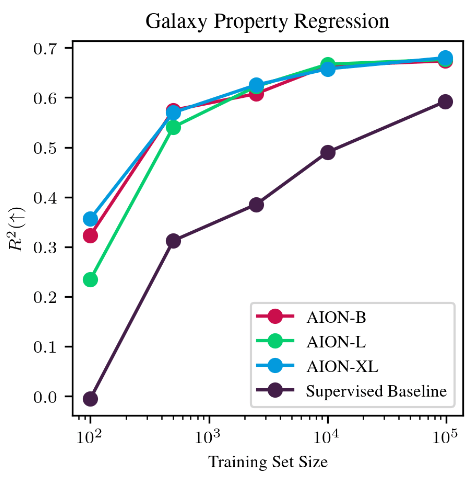

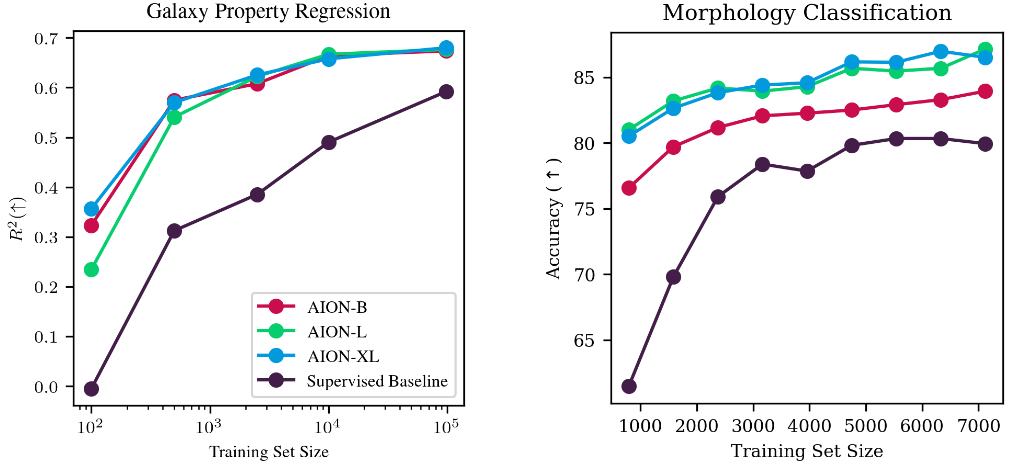
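The masking step behind cross-modal masked modeling can be sketched as follows: tokens from paired observations are concatenated, a random subset stays visible, and the rest become prediction targets. The token values, the modality tags, and the fixed number of visible tokens are made up for illustration; the actual training recipe is not reproduced here.

```python
import random

def mask_tokens(tokens, n_visible, rng):
    """Randomly split a multimodal token list into visible inputs and masked targets."""
    idx = list(range(len(tokens)))
    rng.shuffle(idx)
    visible = sorted(idx[:n_visible])
    masked = sorted(idx[n_visible:])
    return [tokens[i] for i in visible], [tokens[i] for i in masked]

# Each token carries (modality tag, position within that modality, token id).
image_tokens = [("image", i, t) for i, t in enumerate([12, 7, 99, 3])]
spectrum_tokens = [("spectrum", i, t) for i, t in enumerate([5, 41, 8])]
sequence = image_tokens + spectrum_tokens   # same object, two instruments

rng = random.Random(0)                       # fixed seed so the split is reproducible
inputs, targets = mask_tokens(sequence, n_visible=3, rng=rng)
# A model would then be trained to predict `targets` given `inputs`.
```

Because the visible subset is drawn at random across modalities, different training steps ask the model to predict spectrum tokens from image tokens, image tokens from spectrum tokens, or a mixture, which is how a single objective can cover many conditional distributions.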

AION-1 family of models

- Models trained as part of the 2024 Jean Zay Grand Challenge, following an extension to a new partition of 1400 H100s

- AION-1 Base: 300M parameters (64 H100s, 1.5 days)

- AION-1 Large: 800M parameters (100 H100s, 2.5 days)

- AION-1 XLarge: 3B parameters (288 H100s, 3.5 days)
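For a sense of scale, the quoted GPU counts and durations can be converted to GPU-hours. This is back-of-the-envelope arithmetic that assumes every GPU was busy for the full quoted wall-clock time, which the slide does not state.

```python
# (number of H100s, training time in days), as quoted above
runs = {
    "AION-1 Base": (64, 1.5),
    "AION-1 Large": (100, 2.5),
    "AION-1 XLarge": (288, 3.5),
}

# GPU-hours = GPUs x days x 24, under the continuous-use assumption
gpu_hours = {name: gpus * days * 24 for name, (gpus, days) in runs.items()}
total = sum(gpu_hours.values())
```

Under that assumption the three runs come to 2,304, 6,000, and 24,192 GPU-hours respectively, about 32,500 in total.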

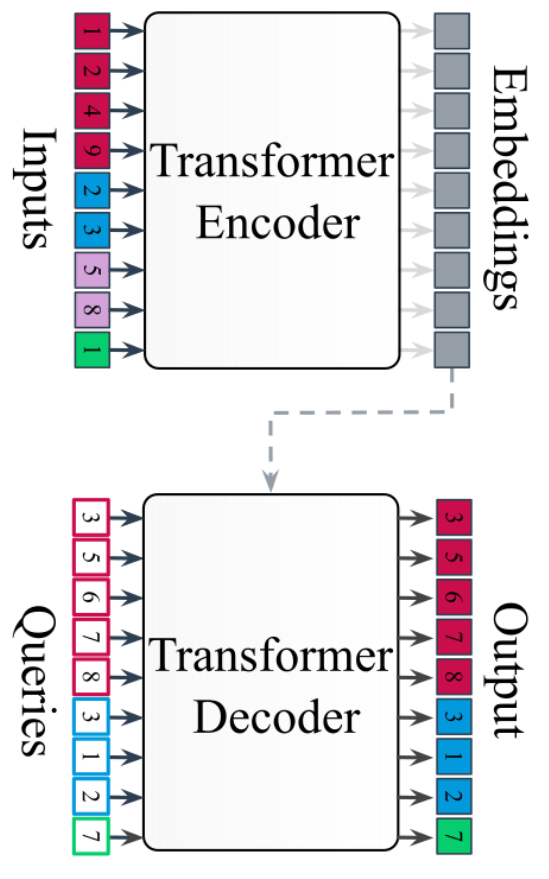

Adaptation of AION-1 embeddings

Adaptation at low cost with simple strategies:

- Mean pooling + linear probing

- Attentive pooling

- Can be used trivially on any input data

- Flexible to varying number/types of inputs

=> Allows for trivial data fusion

    # Illustrative pseudocode: tokenize inputs, adapt the pretrained model, predict
    x_train = Tokenize(hsc_images, modality='HSC')
    model = FineTunedModel(base='Aion-B',
                           adaptation='AttentivePooling')
    model.fit(x_train, y_train)
    y_test = model.predict(x_test)
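The two adaptation strategies named above can be sketched in plain Python. This is a simplified illustration, not the project's implementation: the token embeddings are toy values, the attentive pooling uses a single query vector with no learned key or value projections, and the probe weights are hypothetical.

```python
import math

def mean_pool(tokens):
    """Average a list of token embeddings into one vector."""
    n = len(tokens)
    return [sum(t[i] for t in tokens) / n for i in range(len(tokens[0]))]

def attentive_pool(tokens, query):
    """Softmax-weighted average; `query` would be learned during adaptation."""
    logits = [sum(q * x for q, x in zip(query, t)) for t in tokens]
    m = max(logits)                                   # subtract max for numerical stability
    weights = [math.exp(l - m) for l in logits]
    z = sum(weights)
    dim = len(tokens[0])
    return [sum(w * t[i] for w, t in zip(weights, tokens)) / z for i in range(dim)]

def linear_probe(embedding, w, b):
    """Linear read-out on top of a pooled embedding."""
    return sum(wi * xi for wi, xi in zip(w, embedding)) + b

# Toy token embeddings from two modalities of the same object.
image_tokens = [[1.0, 0.0], [0.0, 1.0]]
flux_tokens = [[1.0, 1.0]]

# Data fusion: pool over the union of tokens, whatever modalities are available.
fused = mean_pool(image_tokens + flux_tokens)
prediction = linear_probe(fused, w=[1.5, 1.5], b=0.0)
```

Because both pooling functions accept any number of tokens, the same adapted head works whether one modality or several are provided, which is what makes the fusion "trivial".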

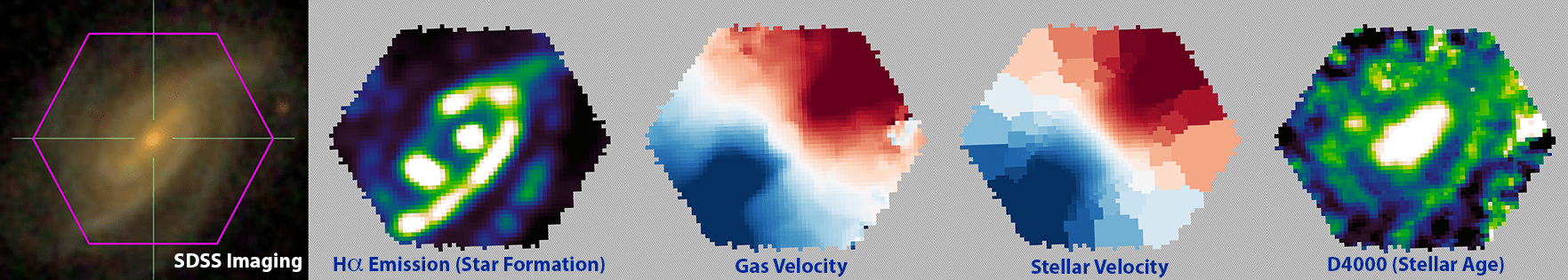

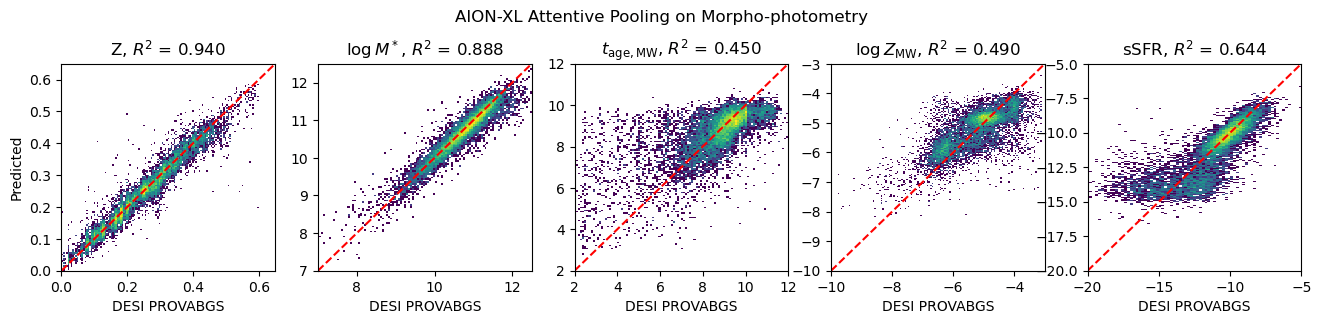

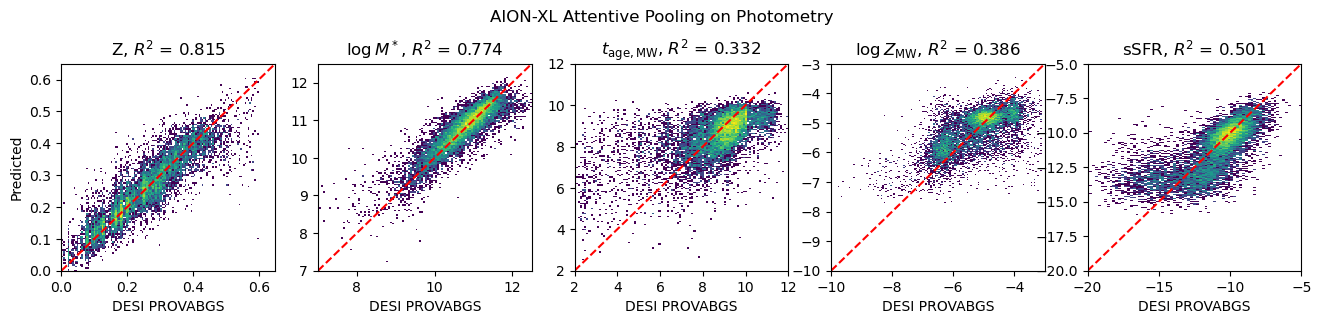

Physical parameter estimation and data fusion for galaxies

Inputs: measured fluxes

Inputs: measured fluxes + image

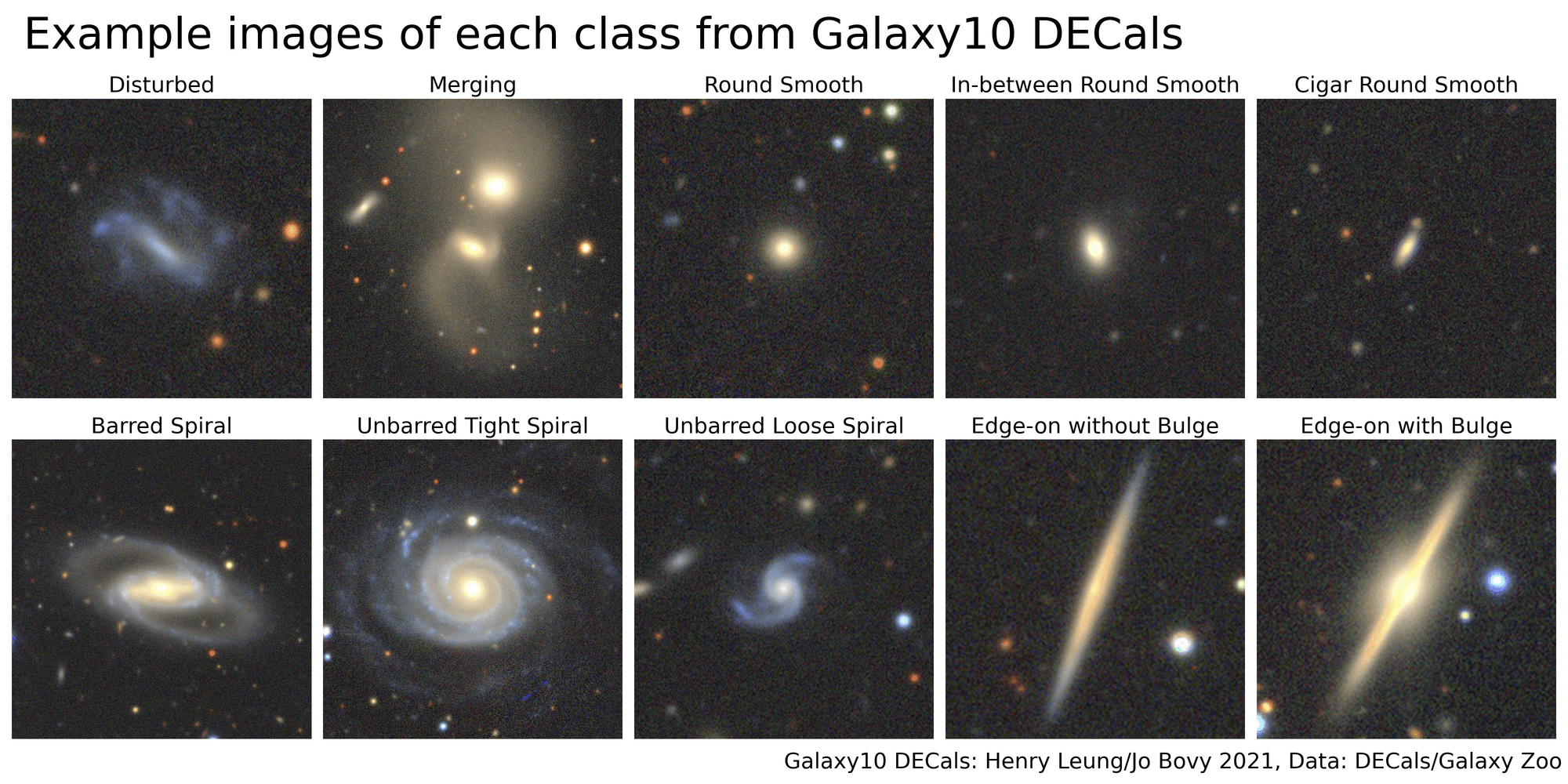

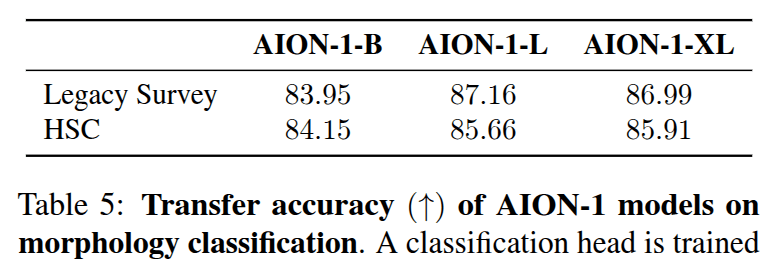

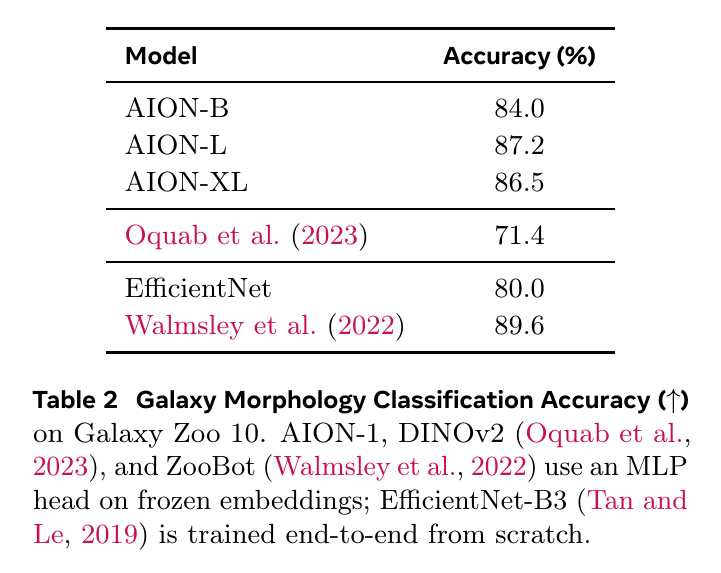

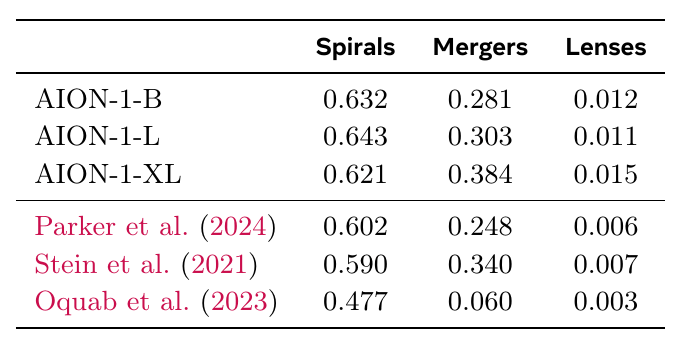

Morphology classification by Linear Probing

[Table: linear probing results by training set ("Trained on") and evaluation set ("Eval on"), including a DINOv2 comparison]

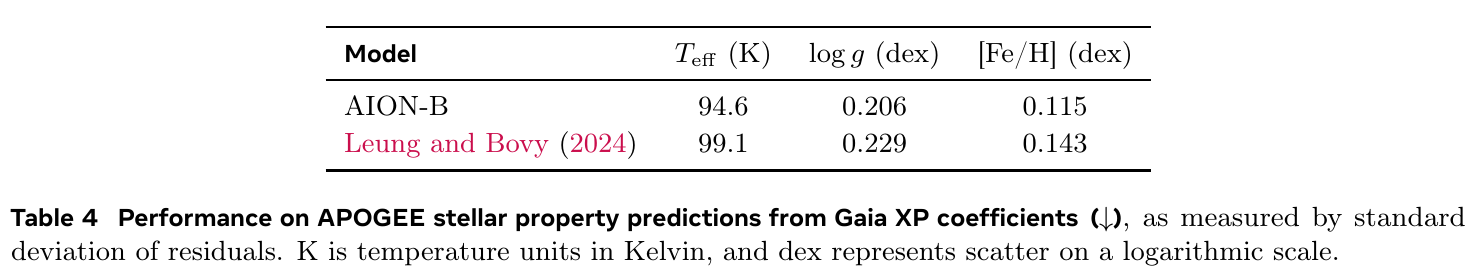

Physical parameter estimation for stars

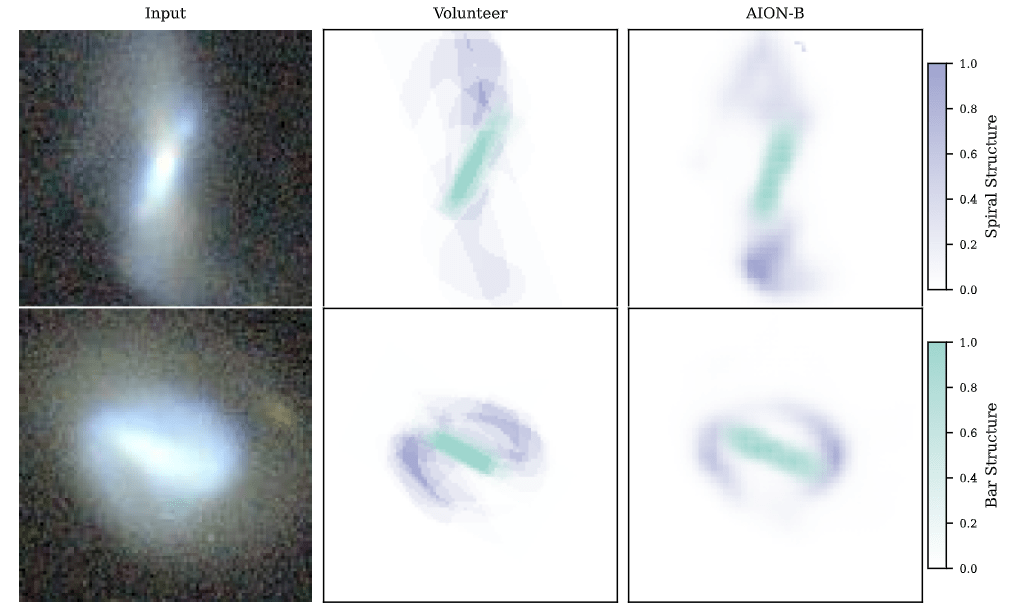

Semantic segmentation

Segmenting central bar and spiral arms in galaxy images based on Galaxy Zoo 3D

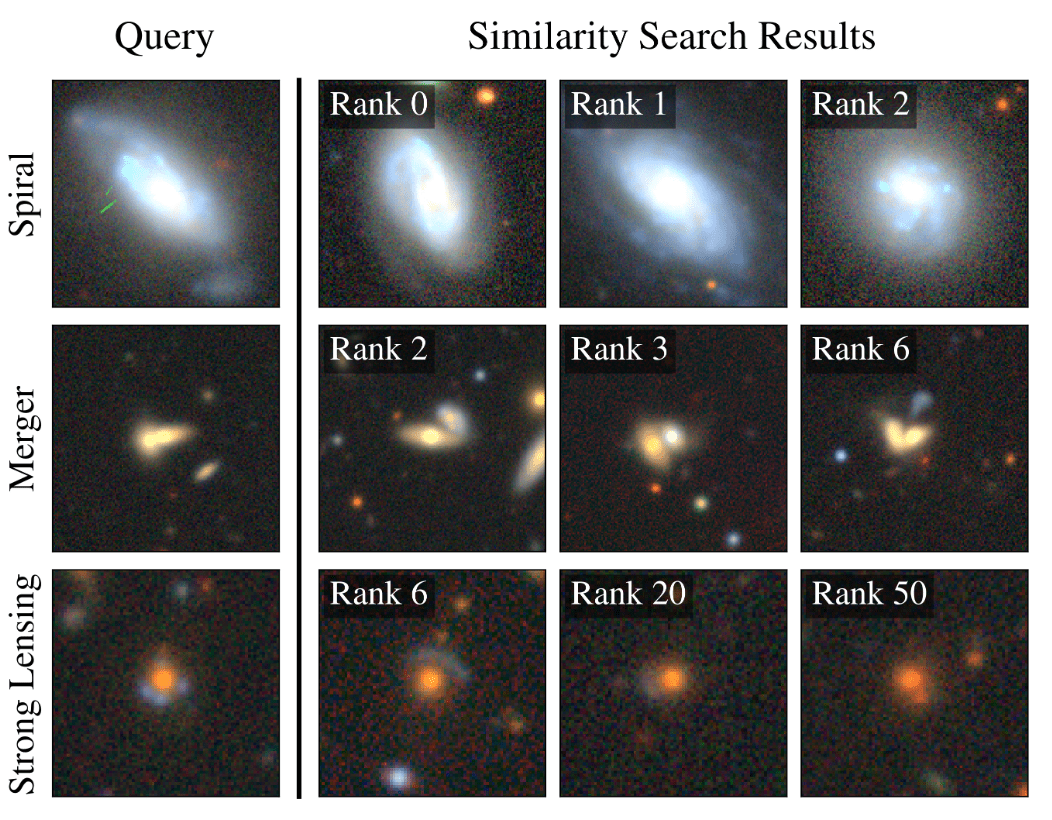

Example-based retrieval

nDCG@10 score
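For readers unfamiliar with the metric, nDCG@10 can be computed as in the sketch below. It uses the linear-gain form of DCG and normalizes by the best possible ordering of the retrieved list itself; other conventions exist (for example exponential gain, or an ideal ranking built from the whole corpus), and the slide does not say which one was used.

```python
import math

def dcg_at_k(relevances, k):
    """Discounted cumulative gain of the first k results (linear gain)."""
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances[:k]))

def ndcg_at_k(relevances, k=10):
    """DCG normalized by the DCG of the ideal (sorted) ordering; 0.0 if nothing is relevant."""
    ideal = dcg_at_k(sorted(relevances, reverse=True), k)
    return dcg_at_k(relevances, k) / ideal if ideal > 0 else 0.0

# Relevance of each retrieved object, in ranked order (1 = same class as the query).
example = ndcg_at_k([1, 0, 1])
```

A score of 1.0 means all relevant objects are ranked ahead of irrelevant ones; relevant objects that appear lower in the list are discounted logarithmically.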

Building the Future of AI Infrastructure for Astronomy

- Rethinking Data Infrastructure: standardizing access to data (not just catalogs) across surveys

- Multimodal training requires cross-matching data (not just catalogs) across surveys

- Requires O(1000) postage stamps per second between 2 surveys

- With MMU and the training of AION-1, this cross-matching is done offline: slow, not very flexible

- Production of static curated datasets is challenging

- Requires a local copy of all datasets (postage stamp APIs of most surveys are not fast enough to support generating millions of stamps), non-trivial storage, and non-trivial compute.

- Developing AI Standards and Tools to Facilitate Downstream Science

- Embeddings could be included in data releases, as an extension of current catalogs

- Standard API access to inference endpoints could allow for easy use and adaptation of Foundation Models

- Tracking Progress with Robust and Thorough Benchmarking is essential

- Foundation Models aim to serve a wide variety of science cases; it will take a community effort to build such benchmarks.
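The cross-matching requirement in the data infrastructure points above can be made concrete with a small positional match between two catalogs, followed by a throughput estimate. The coordinates, the 1 arcsecond radius, and the count of 10 million pairs are illustrative assumptions, and the brute-force search stands in for the spatial indexing a real pipeline would use.

```python
import math

def angular_sep_arcsec(ra1, dec1, ra2, dec2):
    """Great-circle separation via the haversine formula; inputs in degrees."""
    r1, d1, r2, d2 = map(math.radians, (ra1, dec1, ra2, dec2))
    a = (math.sin((d2 - d1) / 2) ** 2
         + math.cos(d1) * math.cos(d2) * math.sin((r2 - r1) / 2) ** 2)
    return math.degrees(2 * math.asin(math.sqrt(a))) * 3600

def cross_match(cat_a, cat_b, radius_arcsec=1.0):
    """Brute-force nearest-neighbour match between two (id, ra, dec) catalogs."""
    pairs = []
    for id_a, ra_a, dec_a in cat_a:
        best = min(cat_b, key=lambda row: angular_sep_arcsec(ra_a, dec_a, row[1], row[2]))
        if angular_sep_arcsec(ra_a, dec_a, best[1], best[2]) <= radius_arcsec:
            pairs.append((id_a, best[0]))
    return pairs

# Toy catalogs: (object id, RA in degrees, Dec in degrees).
survey_a = [("a1", 150.0000, 2.0000), ("a2", 151.0000, 2.5000)]
survey_b = [("b1", 150.0001, 2.0001), ("b2", 10.0, -5.0)]
matches = cross_match(survey_a, survey_b)

# At the quoted order of magnitude of 1000 stamps per second,
# a hypothetical 10 million matched pairs would take this many hours to serve:
hours_for_10m_pairs = 10_000_000 / 1000 / 3600
```

Matching catalog rows is the cheap part; the bottleneck described above is retrieving the pixel data for each matched pair at a sustained rate, which is why the estimate is expressed in stamps per second.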

Follow us online!

Thank you for listening!

Talk at the AAS 247 special session on Advancing AI Infrastructure for Large Astronomy Datasets.
