Abdullah Fathi

Problem we are facing

Manual deployment of containers is hard to maintain, error-prone and annoying (even beyond security and configuration concerns).

- Containers might crash or go down and need to be replaced: container health checks + automatic re-deployment

- We might need more container instances upon traffic spikes: autoscaling

- Incoming traffic should be distributed equally: load balancer

Kubernetes to The Rescue

An open-source system for orchestrating container deployments

- Automatic Deployment

- Scaling & Load Balancing

- Management

What is Kubernetes?

- Kubernetes is a container orchestration platform that automates the deployment, management and scaling of application systems

- Kubernetes provides high availability (HA) for container environments and supports self-healing and auto-scaling features

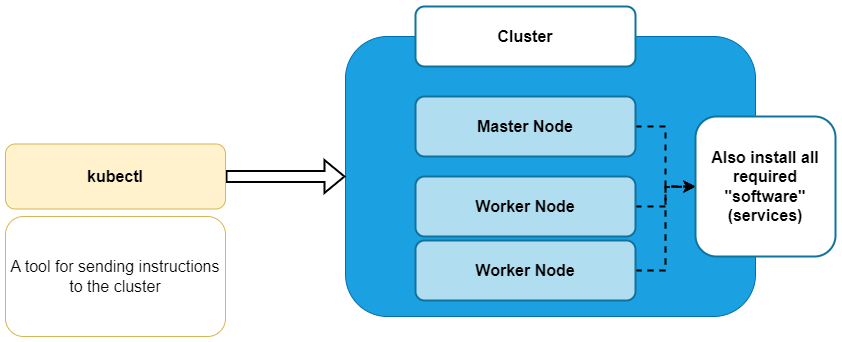

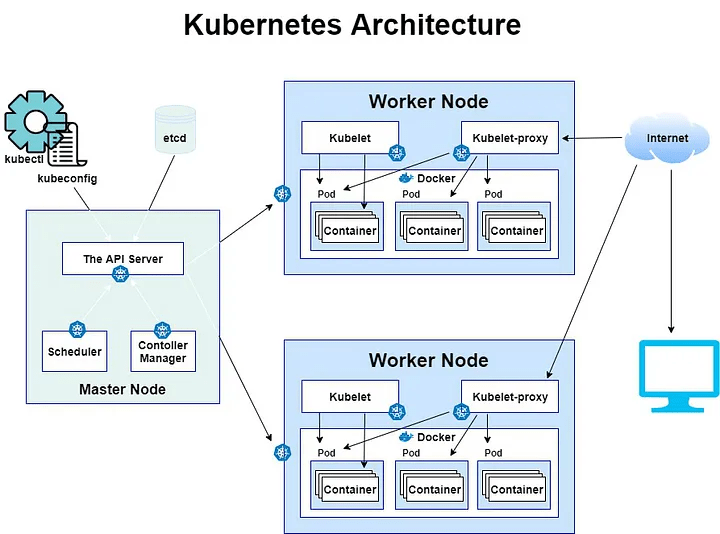

Kubernetes Architecture

- Various Components which help the Worker Nodes

- The Master Node controls your deployment

- Worker Nodes run the containers of your application

- "Nodes" are your machines / virtual instances

- Multiple Pods can be created and removed to scale your app

Master Node

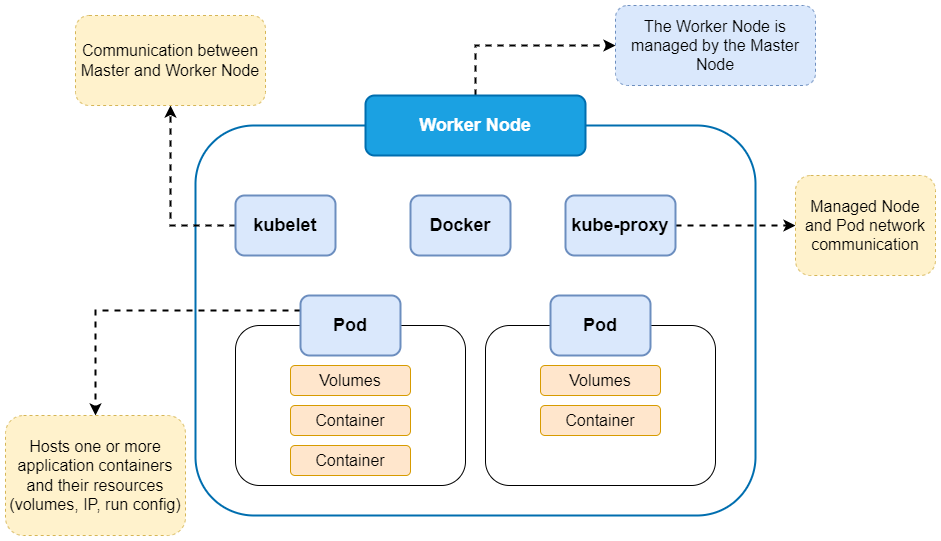

Worker Node

- Responsible for the overall management of Kubernetes clusters.

- Consist of three components that take care of communication, scheduling, and controllers:

- The API Server

- Scheduler

- Controller Manage

Master Node

Scheduler

The scheduler watches for newly created Pods that do not have a Node assigned yet and assigns each Pod to run on a specific Node.

API Server

The API server lets you interact with the Kubernetes API. It is the front end of the Kubernetes control plane.

Controller Manager

The controller manager runs controllers. These are background threads that run tasks in a cluster.

etcd

- This is a distributed key-value store.

- Kubernetes uses etcd as its database and stores all cluster data here.

- The stored information includes job scheduling info, Pod details and state information, among others.

Kubelet

- The kubelet runs on each worker node and handles its communication with the master node.

- It is the agent that communicates with the API server to see if Pods have been assigned to the Node.

Kube-proxy

- This is the network proxy and load balancer for Services on a single worker node.

- It handles the network routing for TCP and UDP packets and performs connection forwarding.


What is Rancher?

Rancher is a platform for managing Kubernetes clusters through a web interface

Install kubectl

Connect to remote k8s cluster

Windows

- Add the folder that contains kubectl.exe to the PATH environment variable

- Import the kubeconfig file of the cluster

- Set the default kubeconfig: $env:KUBECONFIG = "D:\FOTIA\training\kubernetes\microk8s-config.yaml"

- Verify the connection: kubectl cluster-info
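For orientation, the kubeconfig file referenced in these steps generally has the shape sketched below. This is only an illustration of the file format: the cluster name, user name, server address and credentials are all placeholders, not values from the training environment.

```yaml
# Sketch of a kubeconfig file; every name and address below is a placeholder.
apiVersion: v1
kind: Config
clusters:
  - name: training-cluster                  # hypothetical cluster name
    cluster:
      server: https://203.0.113.10:16443    # placeholder API server address
      certificate-authority-data: <base64-encoded CA certificate>
users:
  - name: training-admin                    # hypothetical user name
    user:
      token: <access token>
contexts:
  - name: training-context                  # ties a cluster to a user
    context:
      cluster: training-cluster
      user: training-admin
current-context: training-context           # context kubectl uses by default
```

kubectl reads the file named by KUBECONFIG and talks to the cluster selected by current-context.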

Kubernetes Objects

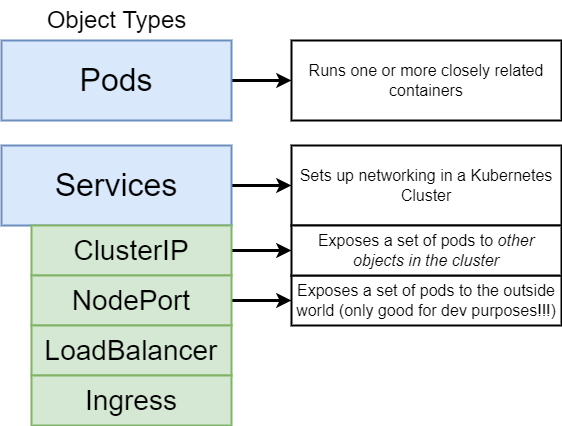

1. Pod

- Smallest unit of k8s

- Contains and runs one or multiple containers (usually 1 container per Pod)

- Pods contain shared resources (e.g. volumes) for all Pod containers

- Each Pod gets its own cluster-internal IP by default

- New IP address on re-creation: Pods are ephemeral (k8s will start, stop and replace them as needed)
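As a minimal sketch of what such a Pod looks like when written down, the manifest below defines a Pod with a single container. The Pod name, label and image are illustrative assumptions. In practice Pods are rarely created directly; they are usually created through a Deployment.

```yaml
# Minimal single-container Pod; name, label and image are illustrative.
apiVersion: v1
kind: Pod
metadata:
  name: example-pod
  labels:
    app: example
spec:
  containers:
    - name: web
      image: nginx:alpine
      ports:
        - containerPort: 80   # port the container listens on
```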

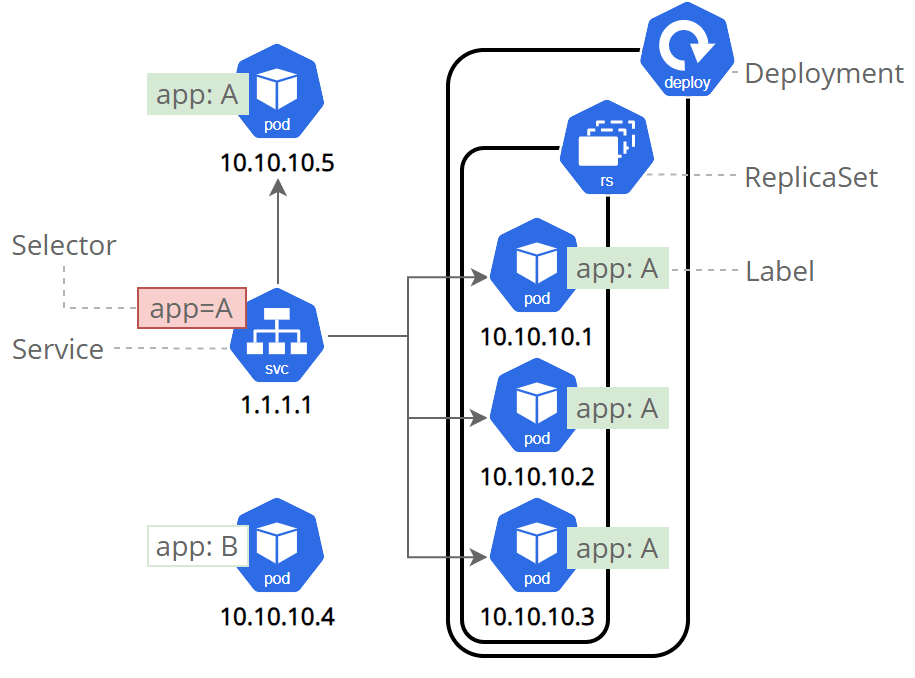

2. Deployment

- Controls (multiple) Pods

- Blueprint for Pods

- Abstraction of Pods

- Databases should not be replicated via a Deployment, because they are stateful (see StatefulSet)

- Deployments can be paused, deleted and rolled back

- Deployments can be scaled dynamically (and automatically)
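The way a Deployment replaces Pods during an update can be tuned through its update strategy. The sketch below is an assumption-laden illustration (the names, image and replica count are made up) of a Deployment that starts each new Pod before removing an old one.

```yaml
# Illustrative Deployment with an explicit rolling update strategy.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: example-deployment    # hypothetical name
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1             # at most one extra Pod during the update
      maxUnavailable: 0       # never drop below the desired replica count
  selector:
    matchLabels:
      app: example
  template:
    metadata:
      labels:
        app: example
    spec:
      containers:
        - name: web
          image: nginx:alpine
```

With these settings an update proceeds one Pod at a time, and a rollback restores the previous Pod template in the same way.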

3. Service

- Permanent IP address and also a load balancer

- Lifecycle of Pod and Service is not connected: even if a Pod dies, the Service and its IP address will stay

- Types of Service:

  - External Service: accessible from public requests

  - Internal Service: not exposed to public requests

4. Ingress

- Forwards requests to the Service

5. ConfigMap

- External configuration of the application

- Don't put credentials into a ConfigMap

6. Secret

- Used to store secret data

- Stored in base64 encoded format (an encoding, not encryption)

- Reference the Secret in the Deployment/Pod
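The split between external configuration and secret data can be sketched as follows. All names, keys and values are hypothetical: a ConfigMap holds a non-sensitive setting, a Secret holds a base64 encoded password, and a Pod reads both as environment variables.

```yaml
# Illustrative only: names, keys and values are made up.
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
data:
  DB_HOST: db-service             # non-sensitive configuration
---
apiVersion: v1
kind: Secret
metadata:
  name: app-secret
type: Opaque
data:
  DB_PASSWORD: cGFzc3dvcmQ=       # base64 of "password"
---
apiVersion: v1
kind: Pod
metadata:
  name: app-pod
spec:
  containers:
    - name: app
      image: nginx:alpine
      env:
        - name: DB_HOST
          valueFrom:
            configMapKeyRef:      # read the value from the ConfigMap
              name: app-config
              key: DB_HOST
        - name: DB_PASSWORD
          valueFrom:
            secretKeyRef:         # read the value from the Secret
              name: app-secret
              key: DB_PASSWORD
```

The same env entries can be placed in the Pod template of a Deployment.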

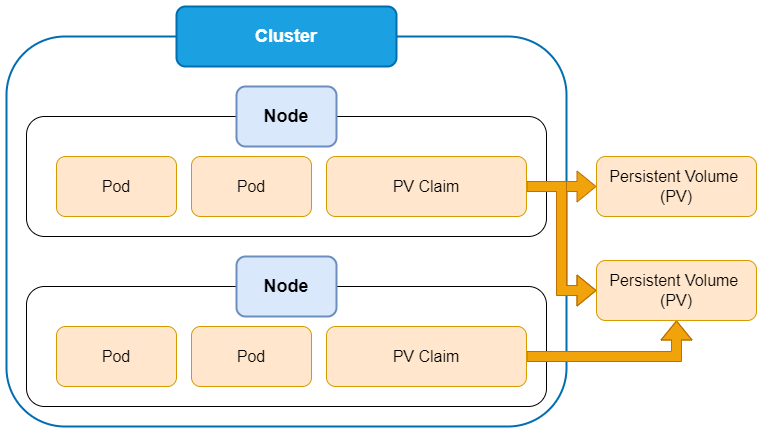

7. Volume

- Data persistence

- Storage on the local machine (on the same server node where the Pod is running)

- Remote storage outside of the k8s cluster

8. StatefulSet

- For stateful apps or databases

- Avoids data inconsistencies: manages which Pod is writing to or reading from the storage
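A StatefulSet gives every replica a stable identity and its own storage claim. The following is a rough sketch, not a production setup: the names, the redis image and the storage size are assumptions, and the headless Service named in serviceName is assumed to exist already.

```yaml
# Illustrative StatefulSet; every replica gets its own PersistentVolumeClaim.
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: example-db               # hypothetical name
spec:
  serviceName: example-db        # headless Service, assumed to exist
  replicas: 2
  selector:
    matchLabels:
      app: example-db
  template:
    metadata:
      labels:
        app: example-db
    spec:
      containers:
        - name: db
          image: redis:7
          volumeMounts:
            - name: data
              mountPath: /data
  volumeClaimTemplates:          # one claim per Pod: data-example-db-0, data-example-db-1
    - metadata:
        name: data
      spec:
        accessModes: ["ReadWriteOnce"]
        resources:
          requests:
            storage: 1Gi
```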

Imperative VS Declarative

There are two ways to configure resources in Kubernetes

Imperative

The imperative approach involves directly running kubectl commands to create, update, or delete resources

    # Create Deployment
    kubectl create deployment first-app --image=<image from registry>
    kubectl get deployments
    kubectl get pods

    # Create a Service by exposing the Deployment
    kubectl expose deployment first-app --type=LoadBalancer --port=8080
    kubectl get svc

    # Scale up
    kubectl scale deployments/first-app --replicas=3

    # Rolling update (kube-sample-app is the container name inside the Pod)
    kubectl set image deployment/first-app kube-sample-app=<new image tag>

    # Delete Deployment
    kubectl delete deployment first-app

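Declarative

The declarative approach describes the desired state of a resource in a configuration file, which is then applied with kubectl apply -f <file>

Kubernetes Configuration File

Deployment

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels: ...
    spec:
      replicas: 2
      selector: ...
      template: ...

Service

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-service
      labels: ...
    spec:
      selector: ...
      ports: ...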

Each configuration file has 3 parts

1) Metadata

- Found under "metadata": the name and labels of the component

2) Specification

- Found under "spec"

- Attributes of "spec" are specific to the kind

3) Status (automatically generated by k8s)

- k8s updates the status continuously

- k8s compares the desired state with the actual state and works to make them equal

- etcd holds the current status of any k8s component
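To make the third part concrete, the fragment below sketches what the status section of a Deployment can look like when the object is read back from the cluster (for example with kubectl get deployment nginx-deployment -o yaml). The values are illustrative and assume both replicas are up. This section is never written by hand.

```yaml
# Fragment of a Deployment as returned by the cluster; values are illustrative.
status:
  observedGeneration: 1
  replicas: 2             # actual number of Pods
  updatedReplicas: 2      # Pods running the latest template
  readyReplicas: 2
  availableReplicas: 2
  conditions:
    - type: Available
      status: "True"
      reason: MinimumReplicasAvailable
```

When the numbers in status differ from spec.replicas, Kubernetes creates or removes Pods until they match.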

Template

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels: ...
    spec:
      replicas: 2
      selector: ...
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
            - name: nginx
              image: nginx:latest
              ports:
                - containerPort: 8080

- Has its own "metadata" and "spec" section

- Applies to the Pod

- Blueprint for a Pod

Connecting components

(Labels & Selectors & Ports)

Deployment

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
            - name: nginx
              image: nginx:latest
              ports:
                - containerPort: 8080

Service

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-service
      labels: ...
    spec:
      selector:
        app: nginx
      ports: ...

- Metadata contains the labels

- Specification contains the selector

Labels & Selectors

- A label is any key-value pair attached to a component

Connecting Deployment to Pods

    labels:
      app: nginx

- Pods get the label through the template blueprint

- This label is matched by the selector

Connecting Services to Deployments

Service

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-service
      labels: ...
    spec:
      selector:
        app: nginx
      ports: ...

- The connection is made through the selector, which matches the labels (app: nginx) of the Pods created by the Deployment

Ports in Service and Pod

Service

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-service
      labels: ...
    spec:
      selector:
        app: nginx
      ports:
        - protocol: TCP
          port: 80
          targetPort: 8080

Deployment (Pod template)

    containers:
      - name: nginx
        image: nginx:latest
        ports:
          - containerPort: 8080

Other Service -> Nginx Service (port: 80) -> Pod (targetPort: 8080)

- port: Port on which the Service itself is reachable by other Services

- targetPort: Port to which the Service forwards requests (the containerPort of the Deployment)

- containerPort: Port on which the Pod is listening

External Service

Kubernetes: External Service

- Set the type to NodePort to make the Service an external service

- nodePort: must be between 30000 and 32767

- IP address and port are not opened

YAML File: External Service

    apiVersion: v1
    kind: Service
    metadata:
      name: system-a-external-service
    spec:
      selector:
        app: system-a
      type: NodePort
      ports:
        - protocol: TCP
          port: 8080
          targetPort: 8080
          nodePort: 30001

Assign external IP address to service
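One common way to get an external IP address assigned to a Service is the LoadBalancer type, sketched below. This is an assumption about the intended approach, not something the slides spell out: the Service name is hypothetical, and an address is only assigned when the cluster has a cloud provider integration or another load balancer implementation.

```yaml
# Illustrative LoadBalancer Service; the external IP is assigned by the
# cluster's load balancer integration, if one is available.
apiVersion: v1
kind: Service
metadata:
  name: system-a-lb-service   # hypothetical name
spec:
  type: LoadBalancer
  selector:
    app: system-a
  ports:
    - protocol: TCP
      port: 8080
      targetPort: 8080
```

The assigned address then shows up in the EXTERNAL-IP column of kubectl get svc.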

Kubernetes Volume

Kubernetes can mount volumes into containers

A broad variety of volume types/drivers is supported

- "Local" Volumes (i.e. on Nodes)

- Cloud-Provider specific Volumes

Volume lifetime depends on the Pod lifetime

- Volumes survive Container restarts (and removal)

- Volumes are removed when Pods are destroyed
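The Pod-bound lifetime described above can be illustrated with an emptyDir volume, which exists only as long as its Pod. In this sketch (the names, image and commands are assumptions) two containers share the volume. The data survives a restart of either container but is deleted together with the Pod.

```yaml
# Illustrative Pod with an emptyDir volume shared by two containers.
apiVersion: v1
kind: Pod
metadata:
  name: emptydir-demo
spec:
  volumes:
    - name: scratch
      emptyDir: {}            # created with the Pod, removed with the Pod
  containers:
    - name: writer
      image: busybox:1.36
      command: ["sh", "-c", "while true; do date >> /data/log.txt; sleep 5; done"]
      volumeMounts:
        - name: scratch
          mountPath: /data
    - name: reader
      image: busybox:1.36
      command: ["sh", "-c", "touch /data/log.txt; tail -f /data/log.txt"]
      volumeMounts:
        - name: scratch
          mountPath: /data
```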

Kubernetes Volumes

- Supports many different Drivers and Types

- Volumes are not necessarily persistent

- Volumes survive Container restarts and removals

Docker Volumes

- Basically no Driver / Type Support

- Volumes persist until manually cleared

- Volumes survive Container restarts and removals

Network File System (NFS)

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-2g-pv
    spec:
      storageClassName: nfs
      accessModes:
        - ReadWriteMany
      capacity:
        storage: 2Gi
      nfs:
        path: /tmp/nfs/2g-pv
        server: 10.23.22.201
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nfs-pvc
    spec:
      storageClassName: nfs
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 2Gi
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: test-pod
    spec:
      volumes:
        - name: nfs-pvc-vol
          persistentVolumeClaim:
            claimName: nfs-pvc
      containers:
        - name: nfs-pvc-test
          image: nginx:alpine
          ports:
            - containerPort: 80
          volumeMounts:
            - name: nfs-pvc-vol
              mountPath: /tmp

Ingress

Kubernetes: Ingress

YAML File: Ingress

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: system-a-ingress
    spec:
      rules:
        - host: system-a.fotia.com.my
          http:
            paths:
              # networking.k8s.io/v1 requires pathType and the service.name / service.port form
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: system-a-internal-service
                    port:
                      number: 8080

- kind: Ingress

- Routing rules: forward requests to the internal Service

- paths: the URL path

Ingress and Internal Service Configuration

The Ingress backend refers to the internal Service by name and port:

    apiVersion: v1
    kind: Service
    metadata:
      name: system-a-internal-service
    spec:
      selector:
        app: system-a
      ports:
        - protocol: TCP
          port: 8080
          targetPort: 8080

- No nodePort in an internal Service

- Instead of LoadBalancer, the default type is used: ClusterIP

Configure Ingress in Kubernetes Cluster

- An Ingress resource does nothing on its own: we need an Ingress Controller as the implementation of Ingress

- Ingress Controller: evaluates and processes Ingress rules


What is an Ingress Controller?

- Evaluates all the rules

- Manages redirections

- Entrypoint to the cluster

- Many third-party implementations, e.g. the K8s Nginx Ingress Controller
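When more than one controller may be installed, an Ingress can name the controller class that should process it. The sketch below assumes an IngressClass called nginx exists in the cluster, which is typical for the Nginx Ingress Controller but depends on how it was installed.

```yaml
# Illustrative Ingress that selects a controller through ingressClassName.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: system-a-ingress
spec:
  ingressClassName: nginx     # assumes an IngressClass named "nginx"
  rules:
    - host: system-a.fotia.com.my
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: system-a-internal-service
                port:
                  number: 8080
```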

Ingress Controller behind Proxy/LB

- A separate server acts as the proxy / load balancer in front of the cluster:

  - Public IP address and open ports

  - Entrypoint to the cluster

- No server in the Kubernetes cluster is accessible from outside, which is good security practice

Multiple paths for same host

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: system-a-ingress
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
    spec:
      rules:
        - host: system-a.fotia.com.my
          http:
            paths:
              - path: /dashboard
                pathType: Prefix
                backend:
                  service:
                    name: dashboard-service
                    port:
                      number: 8080
              - path: /cart
                pathType: Prefix
                backend:
                  service:
                    name: cart-service
                    port:
                      number: 3000

Multiple sub-domains or domains

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: system-a-ingress
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
    spec:
      rules:
        - host: dashboard.system-a.com.my
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: dashboard-service
                    port:
                      number: 8080
        - host: cart.system-a.com.my
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: cart-service
                    port:
                      number: 3000

Configure TLS Certificate - https

Ingress

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: tls-ingress
    spec:
      tls:
        - hosts:
            - system-a.fotia.com.my
          secretName: system-a-secret-tls
      rules:
        - host: system-a.fotia.com.my
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: system-a-internal-service
                    port:
                      number: 8080

Secret

    apiVersion: v1
    kind: Secret
    metadata:
      name: system-a-secret-tls
      namespace: default
    data:
      tls.crt: base64 encoded cert
      tls.key: base64 encoded key
    type: kubernetes.io/tls

- Data keys need to be "tls.crt" and "tls.key"

- Values are the file contents, not file paths/locations

- The Secret must be in the same namespace as the Ingress

Auto-Scaling

HorizontalPodAutoscaler

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: php-apache
    spec:
      selector:
        matchLabels:
          run: php-apache
      replicas: 1
      template:
        metadata:
          labels:
            run: php-apache
        spec:
          containers:
            - name: php-apache
              image: registry.k8s.io/hpa-example
              ports:
                - containerPort: 80
              resources:
                limits:
                  memory: 500Mi
                  cpu: 100m
                requests:
                  memory: 250Mi
                  cpu: 80m
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: php-apache-service
      labels:
        run: php-apache
    spec:
      ports:
        - port: 80
          nodePort: 30002
      selector:
        run: php-apache
      type: NodePort
    ---
    apiVersion: autoscaling/v1
    kind: HorizontalPodAutoscaler
    metadata:
      name: php-apache
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: php-apache
      minReplicas: 2
      maxReplicas: 10
      targetCPUUtilizationPercentage: 50

Demo App

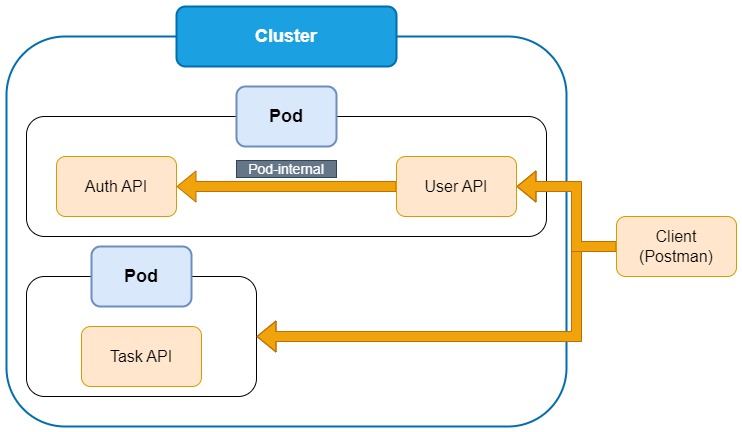

Pod Internal Communication

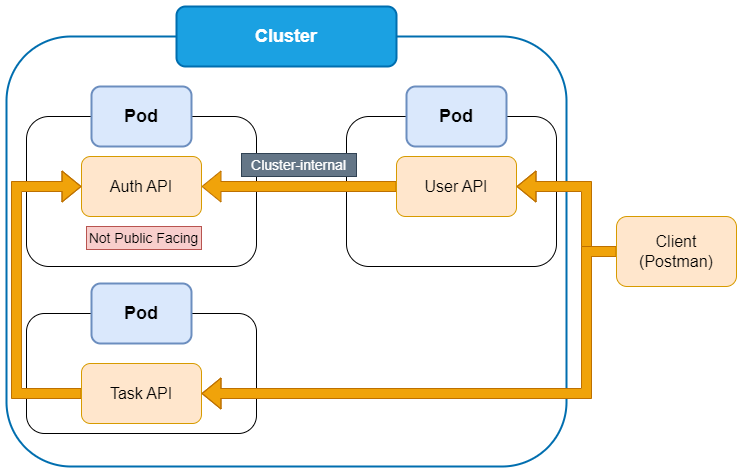

Pod to Pod Communication

There are no secrets to success. It is the result of preparation, hard work, and learning from failure. - Colin Powell

THANK YOU

Kubernetes Training (JPK)

By Abdullah Fathi
