Deep Learning

- Crash Course -

UNIFEI - August, 2018

Hello!

Prof. Luiz Eduardo

Hanneli Tavante

<3

Canada

Prof. Maurilio

<3

Deutschland

Questions

- What is this course?

- A: An attempt to bring interesting subjects to the courses

- Why are the slides in English?

- A: I am reusing some material that I prepared before

- What should I expect?

- A: This is the first time we are giving this course. We look forward to hearing your feedback

Rules

- Don't skip classes

- The final assignment is optional

- We will have AN OPTIONAL LECTURE on Friday

- Feel free to ask questions

- Don't feel discouraged by the mathematics

- Contact us for study group options

[GIF time]

Agenda

- Why is deep learning so popular now?

- Introduction to Machine Learning

- Introduction to Neural Networks

- Deep Neural Networks

- Training and measuring the performance of the N.N.

- Regularization

- Creating Deep Learning Projects

- CNNs and RNNs

- (OPTIONAL): Tensorflow intro

Note: this list can change

What is the difference between Machine Learning (ML) and Deep Learning (DL)? And AI?

[Diagram: nested sets, AI ⊃ ML ⊃ Representation Learning ⊃ DL]

Why is it so popular?

- Big companies are using it

- Business opportunities

- It is easy to understand the main idea

- More accessible outside academia

- Lots of commercial applications

- Large amount of data

- GPUs and fast computers

WHERE ARE THE NEURAL NETWORKS?

Agenda

- Why is deep learning so popular now?

- Introduction to Machine Learning (we are still here)

- Introduction to Neural Networks

- Deep Neural Networks

- Training and measuring the performance of the N.N.

- Regularization

- Creating Deep Learning Projects

- CNNs and RNNs

- (OPTIONAL): Tensorflow intro

Example: predict if a given image is the picture of a dog

Our Data and Notation

Let us use the Logistic Regression example

Single training example: $(x, y)$, where x is a vector of features and y is a number

We will have multiple training examples: $(x^{(1)}, y^{(1)}), (x^{(2)}, y^{(2)}), \dots, (x^{(m)}, y^{(m)})$

m training examples in a set

Warning: each training example pairs a vector with a number

| x | y |
|---|---|
| First x vector | First y (number) |
| Second x vector | Second y (number) |
| ... | ... |

Pro tip: stack all the x vectors into one matrix whose dimensions are n (features per example) and m (training examples), and all the y values into one vector

Data:

| [salary, location, tech...] | Probability y=1 |

|---|---|

| [RGB values] | 0.8353213 |

| ... | ... |

In logistic regression, we want to know the probability of y=1 given a vector of x

(note: we could have more columns for extra features)
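A minimal NumPy sketch of this notation. The toy values are hypothetical, and the layout (one column per training example, so X has shape n by m) is an assumption; the slides only name the dimensions m and n.

```python
import numpy as np

# Hypothetical toy data: m = 4 training examples, each x has n = 3 features.
examples = [
    (np.array([0.2, 0.7, 0.1]), 1),
    (np.array([0.9, 0.1, 0.4]), 0),
    (np.array([0.5, 0.5, 0.5]), 1),
    (np.array([0.3, 0.8, 0.6]), 0),
]

# Assumed layout: each training example becomes one column.
X = np.column_stack([x for x, _ in examples])  # shape (n, m)
Y = np.array([[y for _, y in examples]])       # shape (1, m)

n, m = X.shape
print(n, m)     # 3 4
print(Y.shape)  # (1, 4)
```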

Logistic regression

Recap: for logistic regression, what is the equation format?

Tip: Sigmoid, transpose, wx+b

$z = w^T x + b$

$\hat{y} = \sigma(z)$
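The recap hint (sigmoid, transpose, wx+b) can be sketched in NumPy as follows. The function names and the toy values are illustrative assumptions, not part of the slides.

```python
import numpy as np

def sigmoid(z):
    """Squash any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def predict_proba(w, b, x):
    """Estimated probability that y = 1 for a column vector x."""
    z = w.T @ x + b  # linear part: w transposed times x, plus b
    return sigmoid(z)

# Hypothetical example with n = 3 features.
w = np.zeros((3, 1))
b = 0.0
x = np.array([[0.2], [0.7], [0.1]])

y_hat = predict_proba(w, b, x)
print(y_hat)  # [[0.5]] because z = 0 when w and b are zero
```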

Logistic regression

Recap: given a training set of m examples, you want $\hat{y}^{(i)} \approx y^{(i)}$, where $\hat{y}$ is the predicted probability that y = 1

What do we do now?

For each training example, compute a loss function:

- If y = 1, you want $\hat{y}$ large

- If y = 0, you want $\hat{y}$ small

Cost function: applies the loss function to the entire training set. It measures how well your parameters are doing on the training set

We want to find w and b that minimise J(w, b)
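A sketch of a loss and cost that behave as described above. The slides do not show the formula in this text, so the cross-entropy loss used here is an assumption (it is the usual choice for logistic regression), and the function names are illustrative.

```python
import numpy as np

def loss(y_hat, y):
    """Cross-entropy loss for one example (assumed form).

    Small when y = 1 and y_hat is large, or when y = 0 and y_hat is small.
    """
    return -(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat))

def cost(Y_hat, Y):
    """J(w, b): average of the loss over the m training examples."""
    m = Y.shape[1]
    return float(np.sum(loss(Y_hat, Y)) / m)

# For y = 1, a confident correct prediction is penalized far less.
print(loss(0.9, 1) < loss(0.1, 1))  # True

# Hypothetical predictions for m = 2 examples.
Y_hat = np.array([[0.5, 0.5]])
Y = np.array([[1, 0]])
J = cost(Y_hat, Y)  # equals log(2), since both predictions are 0.5
```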

Gradient Descent: it points downhill

It's time for the partial derivatives

We are looking at the previous step to calculate the derivative

source: https://www.wikihow.com/Take-Derivatives

Whiteboard time - computation graphs and derivatives

Let's put these concepts together

[Diagram: computation graph with inputs x, w and b]

Forward pass

What is our goal?

Adjust the values of w and b in order to minimize the loss function

How do we compute the derivative?

The equations: (whiteboard)

Backward step

Good news: this looks like a Neural Network!
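The forward pass, backward step and parameter update can be put together in a short NumPy sketch. The equations themselves were done on the whiteboard, so the gradients below assume the cross-entropy loss with a sigmoid output; the learning rate, iteration count and toy data are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_logistic_regression(X, Y, learning_rate=0.5, iterations=5000):
    """Gradient descent on w and b. X has shape (n, m), Y has shape (1, m)."""
    n, m = X.shape
    w = np.zeros((n, 1))
    b = 0.0
    for _ in range(iterations):
        # Forward pass
        Z = w.T @ X + b               # shape (1, m)
        A = sigmoid(Z)                # predictions
        # Backward step (assumes cross-entropy loss)
        dZ = A - Y                    # shape (1, m)
        dw = (X @ dZ.T) / m           # shape (n, 1)
        db = float(np.sum(dZ)) / m
        # Move w and b downhill
        w -= learning_rate * dw
        b -= learning_rate * db
    return w, b

# Hypothetical toy set: one feature, four examples, separable around x = 1.5.
X = np.array([[0.0, 1.0, 2.0, 3.0]])
Y = np.array([[0, 0, 1, 1]])

w, b = train_logistic_regression(X, Y)
predictions = (sigmoid(w.T @ X + b) > 0.5).astype(int)
print(predictions)  # [[0 0 1 1]]
```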

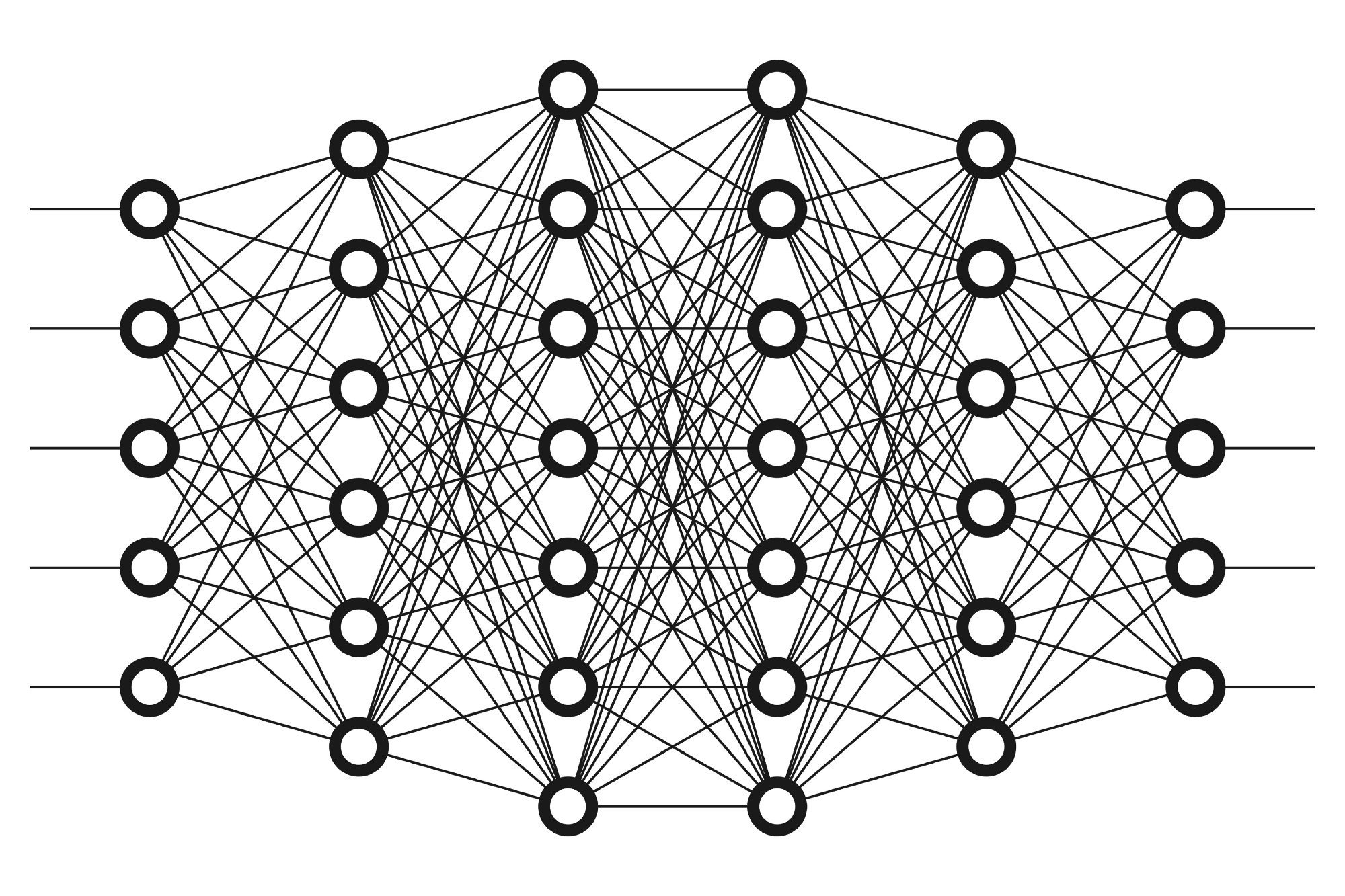

Basic structure of a Neural Network

[Diagram: network with a Hidden Layer]

More details

[Diagram: network with two Hidden Layers]

In each hidden unit of each layer, a computation happens. For example: $z = w^T x + b$, followed by $a = \sigma(z)$

Naming convention

Key question: Every time we see a, what do we need to compute?

To make it clear

Questions

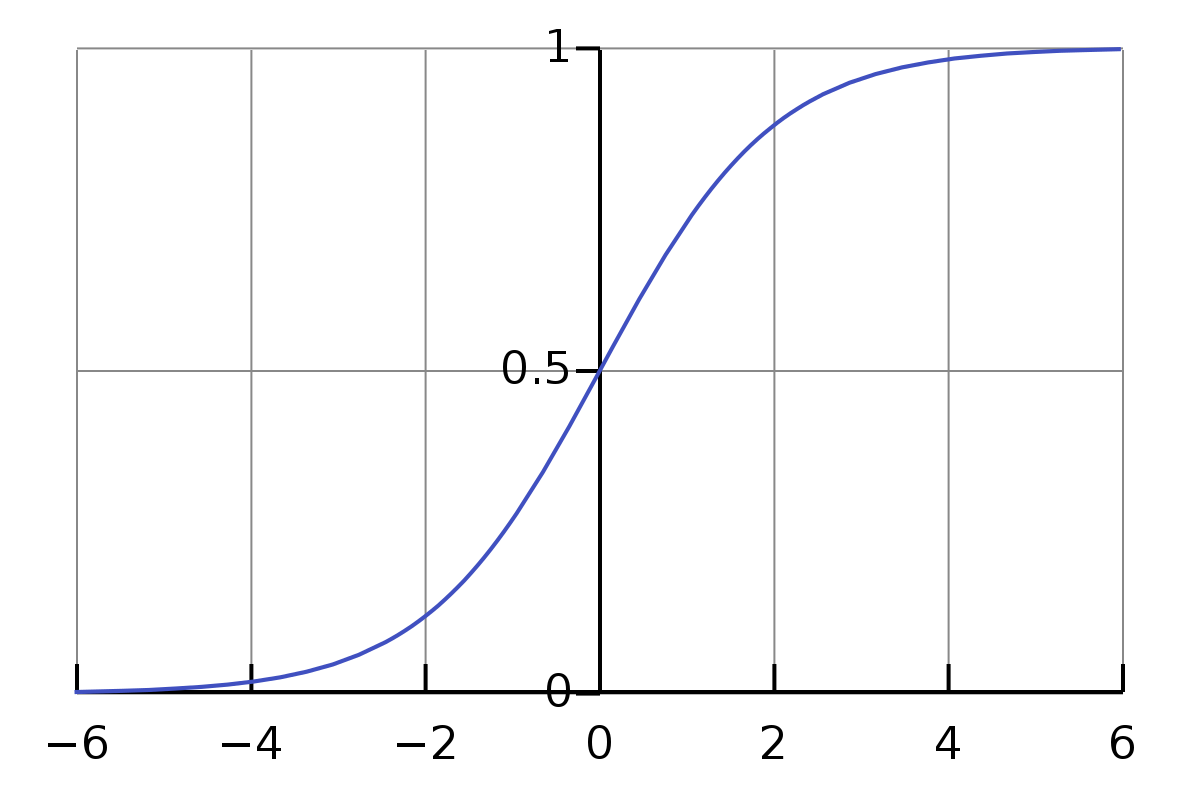

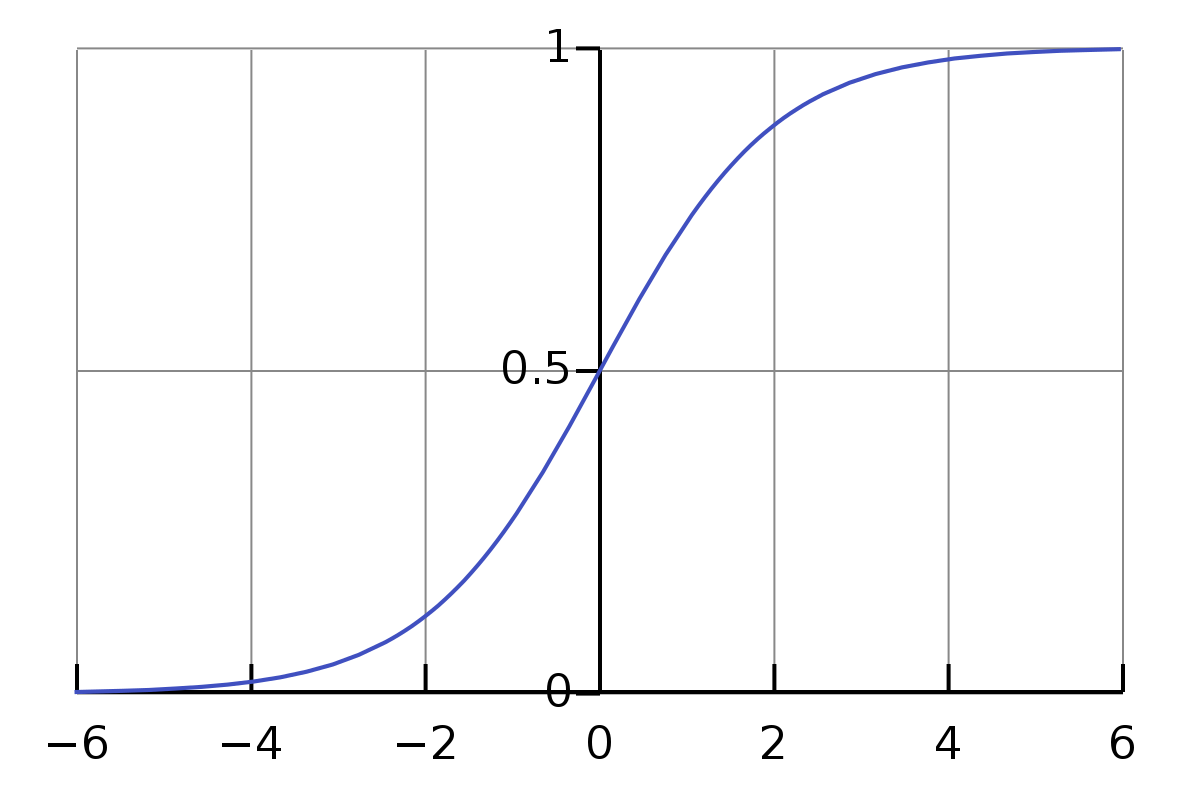

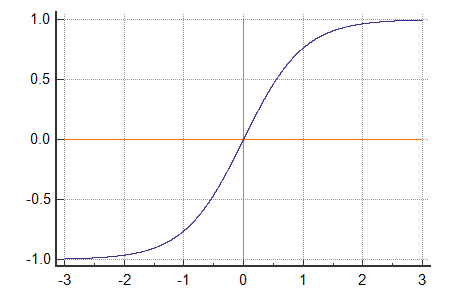

1. What was the formula for the sigmoid function?

2. Why do we need the sigmoid function? What does it look like?

Questions

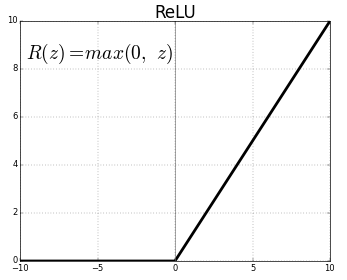

3. Can we use any other type of function?

The sigmoid function is what we call an Activation Function

Activation Functions

Rectified Linear Unit (ReLU)

Note: The activation function can vary across the layers

Each of these activation functions has a derivative

[Question: Why do we need to know the derivatives? :) ]
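A minimal sketch of the two activation functions named in these slides, together with their derivatives, which the backward step uses. The function names are illustrative, and the ReLU derivative at exactly zero is set to 0 by convention.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sigmoid_derivative(z):
    a = sigmoid(z)
    return a * (1.0 - a)

def relu(z):
    """Rectified Linear Unit: keeps positive values, zeroes the rest."""
    return np.maximum(0, z)

def relu_derivative(z):
    # 1 where z > 0, otherwise 0 (the value at z == 0 is a convention).
    return (z > 0).astype(float)

z = np.array([-2.0, 0.0, 3.0])
print(relu(z))             # [0. 0. 3.]
print(relu_derivative(z))  # [0. 0. 1.]
print(sigmoid(0.0))        # 0.5
```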

Note

GIF Time! Too many things going on

Which parameters do we have so far?

Our Parameters

W: This is a matrix!

Is b a vector or a matrix?

A: It is a vector

Now we need to calculate the derivatives - we must go backwards

Note: from now on we shall use the vectorized formulas

Summary: Vectorized forward propagation

[Diagram: network with inputs X, W and b]

Summary: Vectorized backward propagation

[Diagram: network with inputs x, w and b]

Last tip: randomly initialize the values of W and b.
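A sketch of vectorized forward and backward propagation for a network with one hidden layer. The slide equations are not in this text, so the architecture (ReLU hidden layer, sigmoid output, cross-entropy loss), the parameter names and the 0.01 scale are assumptions. W is initialized randomly; b starts at zero here, which is a common choice because random W alone already makes the hidden units differ.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_params(n_x, n_h, seed=0):
    """Small random W, zero b, for n_x inputs and n_h hidden units."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.standard_normal((n_h, n_x)) * 0.01,
        "b1": np.zeros((n_h, 1)),
        "W2": rng.standard_normal((1, n_h)) * 0.01,
        "b2": np.zeros((1, 1)),
    }

def forward(params, X):
    Z1 = params["W1"] @ X + params["b1"]
    A1 = np.maximum(0, Z1)                 # ReLU hidden layer
    Z2 = params["W2"] @ A1 + params["b2"]
    A2 = sigmoid(Z2)                       # sigmoid output
    return {"Z1": Z1, "A1": A1, "Z2": Z2, "A2": A2}

def backward(params, cache, X, Y):
    m = X.shape[1]
    dZ2 = cache["A2"] - Y                  # assumes cross-entropy loss
    dW2 = dZ2 @ cache["A1"].T / m
    db2 = np.sum(dZ2, axis=1, keepdims=True) / m
    dZ1 = (params["W2"].T @ dZ2) * (cache["Z1"] > 0)
    dW1 = dZ1 @ X.T / m
    db1 = np.sum(dZ1, axis=1, keepdims=True) / m
    return {"dW1": dW1, "db1": db1, "dW2": dW2, "db2": db2}

# Hypothetical data: n = 3 features, m = 5 examples, 4 hidden units.
X = np.random.default_rng(1).standard_normal((3, 5))
Y = np.array([[1, 0, 1, 0, 1]])
params = init_params(n_x=3, n_h=4)
cache = forward(params, X)
grads = backward(params, cache, X, Y)
```

Each gradient has the same shape as the parameter it updates, which is a quick sanity check when writing these formulas by hand.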

Will the gradient always work?

What's the best activation function?

How many hidden layers should I have?

Source: https://medium.freecodecamp.org/want-to-know-how-deep-learning-works-heres-a-quick-guide-for-everyone-1aedeca88076

6 Layers; L=6

$n^{[l]}$ = number of units in a layer l

What should we compute in each of these layers l?

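1. Forward step: Z and A

General idea

Input: $A^{[l-1]}$

Output: $A^{[l]}$

$Z^{[l]}$ works like a cache

2. Backpropagation: derivatives

General idea

Input: $dA^{[l]}$

Output: $dA^{[l-1]}$, $dW^{[l]}$, $db^{[l]}$

Basic building block

[Diagram: in a layer l, a Forward block and a Backprop block]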
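The building block for a single layer l can be sketched as a pair of functions. This is one possible reading of the slide, assuming a ReLU activation; the function names and the contents of the cache are illustrative choices.

```python
import numpy as np

def layer_forward(A_prev, W, b):
    """Forward step for one layer: compute Z and A, and cache what backprop needs."""
    Z = W @ A_prev + b
    A = np.maximum(0, Z)      # ReLU, assumed for this sketch
    cache = (A_prev, W, Z)    # Z (plus A_prev and W) is kept for the backward step
    return A, cache

def layer_backward(dA, cache):
    """Backward step for one layer: from dA, compute dA_prev, dW and db."""
    A_prev, W, Z = cache
    m = A_prev.shape[1]
    dZ = dA * (Z > 0)         # derivative of ReLU applied to the cached Z
    dW = dZ @ A_prev.T / m
    db = np.sum(dZ, axis=1, keepdims=True) / m
    dA_prev = W.T @ dZ
    return dA_prev, dW, db

# Hypothetical layer with 3 inputs and 4 units, applied to m = 5 examples.
rng = np.random.default_rng(0)
A0 = rng.standard_normal((3, 5))
W1 = rng.standard_normal((4, 3))
b1 = np.zeros((4, 1))

A1, cache1 = layer_forward(A0, W1, b1)
dA0, dW1, db1 = layer_backward(np.ones_like(A1), cache1)
```

A deep network chains `layer_forward` from the first layer to the last, then chains `layer_backward` in the opposite order, reusing each layer's cache.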

My predictions are awful. HELP

When should I stop training?

Select a fraction of your data set to check which model performs best.

Once you find the best model, make a final test (unbiased estimate)

Data

Training set

C.V. = Cross Validation set (select the best model)

T = Test set (unbiased estimate)
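One way to carve the data into these three sets, as a sketch. The 60/20/20 proportions, the function name and the column-per-example layout are assumptions; the slides do not state the fractions.

```python
import numpy as np

def split_dataset(X, Y, cv_fraction=0.2, test_fraction=0.2, seed=0):
    """Shuffle the m examples (columns) and split into train, C.V. and test sets."""
    m = X.shape[1]
    order = np.random.default_rng(seed).permutation(m)
    n_test = int(m * test_fraction)
    n_cv = int(m * cv_fraction)
    test_idx = order[:n_test]
    cv_idx = order[n_test:n_test + n_cv]
    train_idx = order[n_test + n_cv:]
    return (
        (X[:, train_idx], Y[:, train_idx]),
        (X[:, cv_idx], Y[:, cv_idx]),
        (X[:, test_idx], Y[:, test_idx]),
    )

# Hypothetical data: n = 3 features, m = 10 examples.
X = np.arange(30, dtype=float).reshape(3, 10)
Y = np.array([[0, 1, 0, 1, 0, 1, 0, 1, 0, 1]])

(train_X, train_Y), (cv_X, cv_Y), (test_X, test_Y) = split_dataset(X, Y)
print(train_X.shape, cv_X.shape, test_X.shape)  # (3, 6) (3, 2) (3, 2)
```

The test set is only used once, after the best model has been picked with the C.V. set, so that its error stays an unbiased estimate.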

Problem

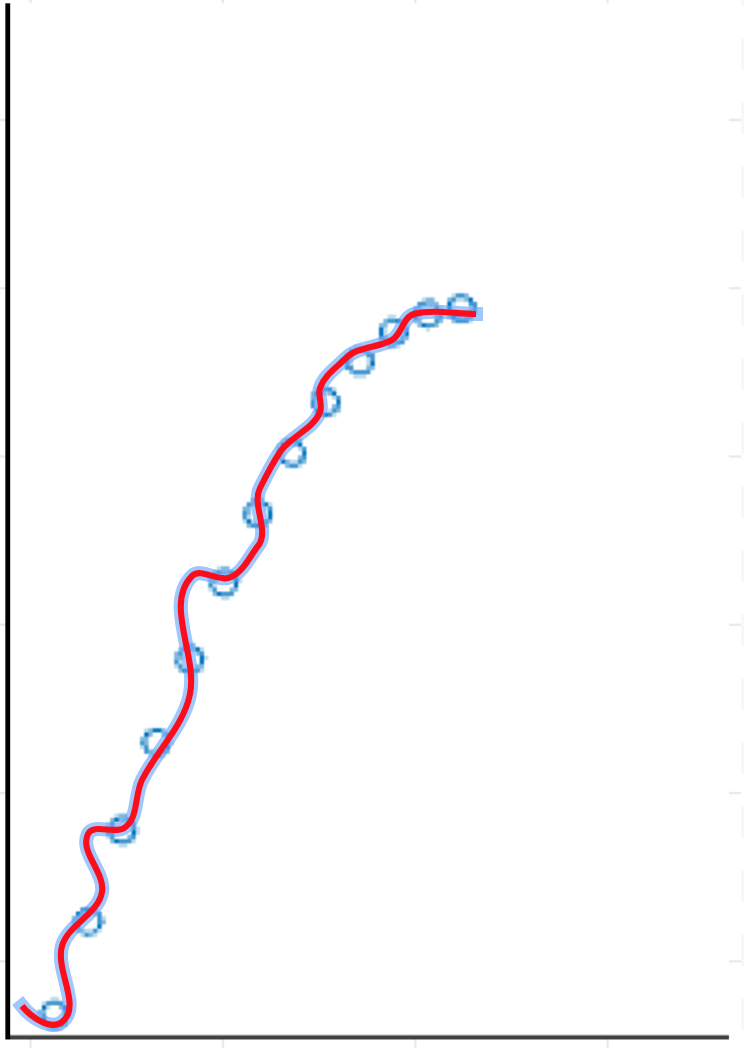

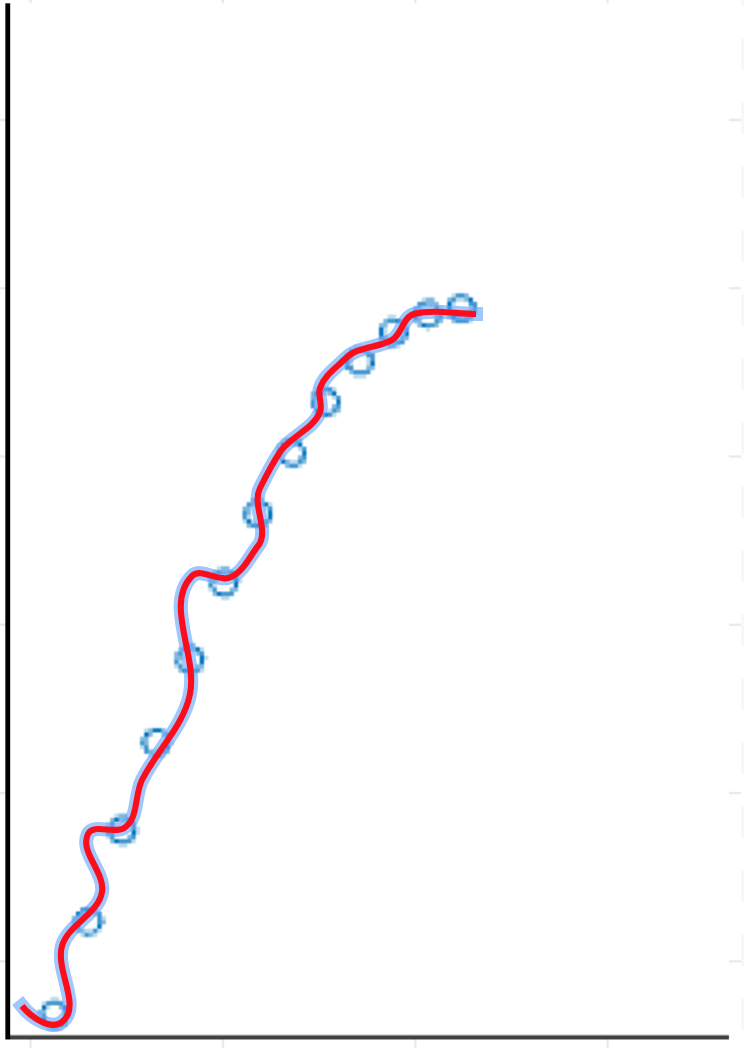

Does it look good?

Underfitting

The predicted output is not even fitting the training data!

Problem

Does it look good?

Overfitting

The model fits everything

[Over, Under]fitting are problems related to the capacity

Capacity: Ability to fit a wide variety of functions

Overfitting: High variance

Underfitting: High bias

How can we properly identify bias and variance problems?

A: check the error rates of the training and C.V. sets

| Training set error | C.V. error | Diagnosis |
|---|---|---|
| 1% | 11% | High variance problem |
| 15% | 16% | High bias problem (not even fitting the training set properly!) |
| 15% | 36% | High bias problem AND high variance problem |
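The three cases above can be turned into a small check. The thresholds are hypothetical (what counts as an acceptable error depends on the problem), and the function name is illustrative.

```python
def diagnose(train_error, cv_error, acceptable_error=0.05, gap_tolerance=0.05):
    """Compare training and C.V. error rates (as fractions, e.g. 0.15 for 15%)."""
    problems = []
    if train_error > acceptable_error:
        # Not even fitting the training set properly.
        problems.append("high bias")
    if cv_error - train_error > gap_tolerance:
        # Much worse on unseen data than on the training data.
        problems.append("high variance")
    return problems or ["looks fine"]

print(diagnose(0.01, 0.11))  # ['high variance']
print(diagnose(0.15, 0.16))  # ['high bias']
print(diagnose(0.15, 0.36))  # ['high bias', 'high variance']
```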

How can we control the capacity?

- Are you fitting the data of the training set?

  - No: High bias. Try a bigger network (more hidden layers and units), train more, or use a different N.N. architecture

  - Yes: Is your C.V. error low?

    - No: High variance. Try more data, regularization, or a different N.N. architecture

    - Yes: DONE!

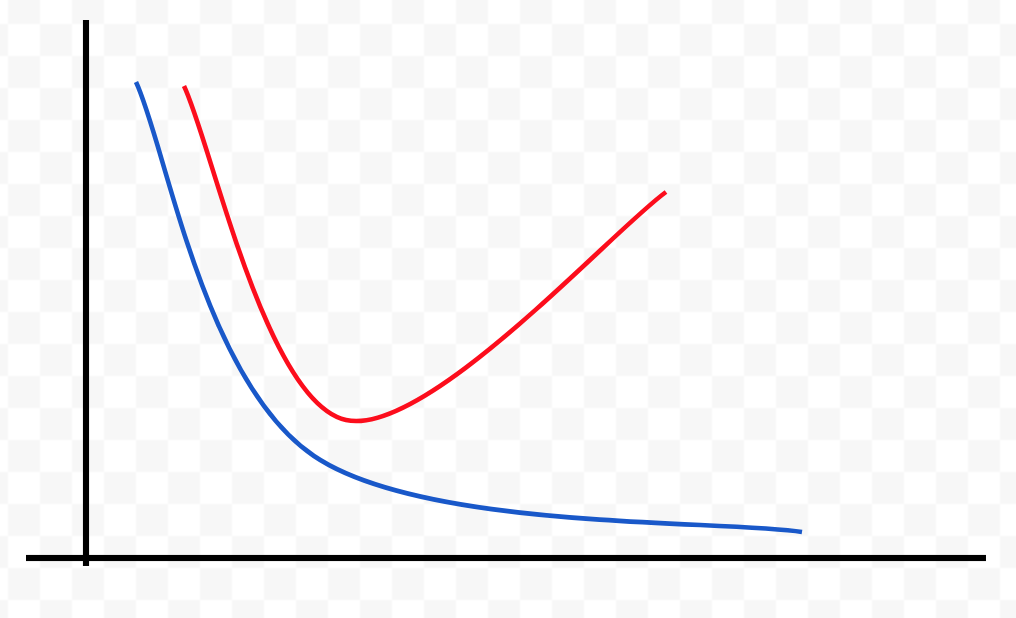

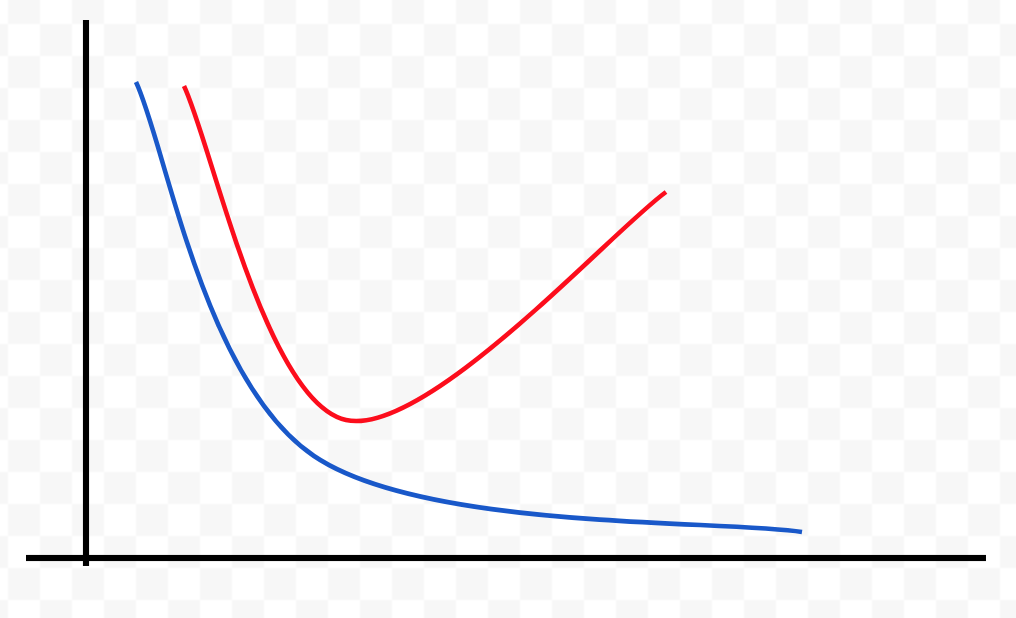

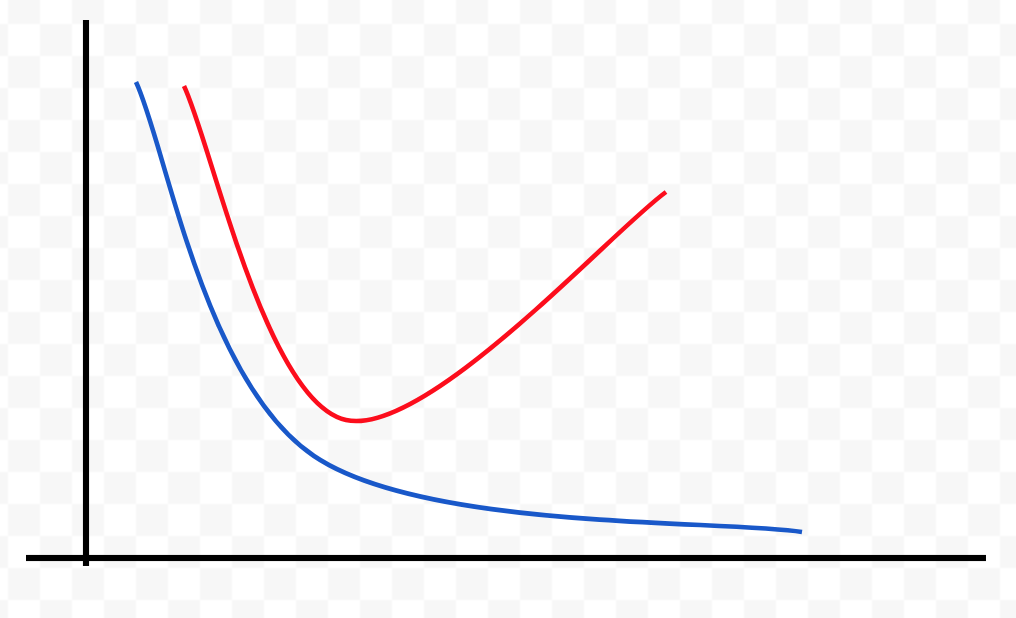

[Plot: Error vs. Capacity, with curves for J(θ) train error and J(θ) cv/test error]

Bias problem (underfitting)

- J(θ) train error is high

- J(θ) train ≈ J(θ) cv

Variance problem (overfitting)

- J(θ) train error is low

- J(θ) cv >> J(θ) train

Stop here!
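A sketch of how a stopping point could be picked, assuming the "Stop here!" marker sits where the held-out error is lowest (the plot itself is not reproduced in this text). The capacities and error values below are made up purely for illustration.

```python
import numpy as np

def best_capacity(capacities, cv_errors):
    """Return the capacity whose C.V. error is the lowest."""
    return capacities[int(np.argmin(cv_errors))]

# Hypothetical numbers: training error keeps falling as capacity grows,
# while the C.V. error falls and then rises again (overfitting).
hidden_units = [1, 2, 4, 8, 16, 32]
train_errors = [0.30, 0.22, 0.15, 0.09, 0.04, 0.01]
cv_errors = [0.32, 0.25, 0.19, 0.16, 0.21, 0.30]

print(best_capacity(hidden_units, cv_errors))  # 8
```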

Let's talk about overfitting

Why does it happen?

You end up having a very complex N.N., with the elements of the matrix W being too large.

w: HIIIIIIIIIIIIIIIIII

w: HELOOO

w: WOAHHH

w: !!111!!!

W's with a strong presence in every layer can cause overfitting. How can we solve that?

SHHHHHHHH

Who is

Regularization parameter

It penalizes the W matrix for being too large.

In terms of mathematics, how does it happen?

Norm

L2-Norm (Euclidean): $\|w\|_2 = \sqrt{\sum_i w_i^2}$

L1-Norm: $\|w\|_1 = \sum_i |w_i|$

Frobenius Norm: $\|W\|_F = \sqrt{\sum_i \sum_j W_{ij}^2}$

Final combo (L2-norm)
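A sketch of how the norm enters the cost. The slide formula is not in this text, so the form used here, the base cost plus $\frac{\lambda}{2m}$ times the sum of the squared Frobenius norms of every W, is an assumption (it is the usual L2 regularization term). The names `lam` and `l2_regularized_cost` are illustrative.

```python
import numpy as np

def l2_regularized_cost(base_cost, weights, lam, m):
    """Add an L2 penalty that grows when the entries of the W matrices grow.

    weights: list of W matrices, one per layer
    lam: regularization parameter (lambda)
    m: number of training examples
    """
    penalty = sum(np.sum(W ** 2) for W in weights)  # squared Frobenius norms
    return base_cost + (lam / (2 * m)) * penalty

# Norms of a small vector, computed with NumPy.
w = np.array([3.0, -4.0])
print(np.linalg.norm(w))     # 5.0 (L2-norm)
print(np.linalg.norm(w, 1))  # 7.0 (L1-norm)

# Hypothetical single-layer example: the squared Frobenius norm of W is 30.
W = np.array([[1.0, 2.0], [3.0, 4.0]])
J_reg = l2_regularized_cost(base_cost=0.5, weights=[W], lam=0.1, m=10)
```

A larger `lam` pushes the optimizer toward smaller weights, which is how the regularization parameter penalizes the W matrix for being too large.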

Are there other ways to perform regularization, without calculating a norm?

More slides here (Part II):

https://slides.com/hannelitavante-hannelita/deep-learning-unifei-ii#/

Deep Learning UNIFEI

By Hanneli Tavante (hannelita)
