The Fast Death of NLP Chatbots

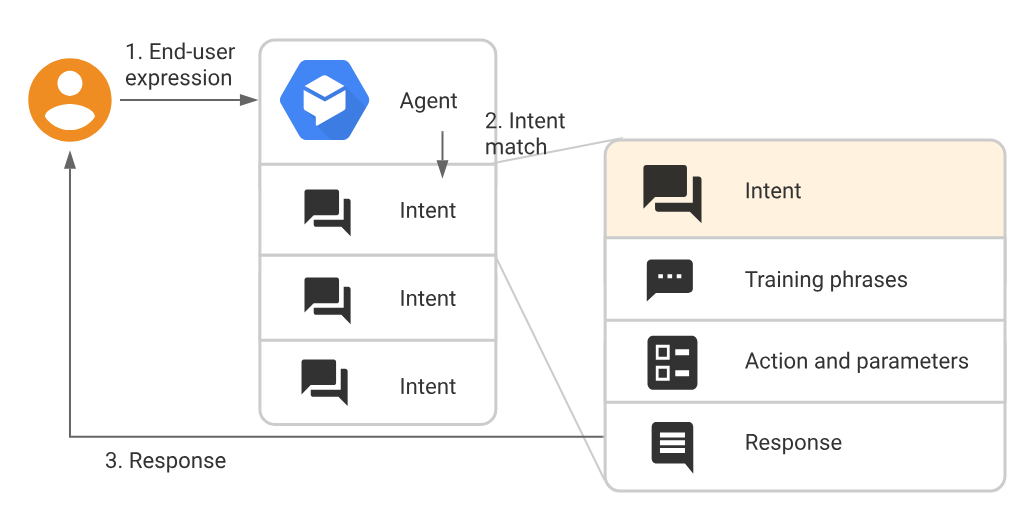

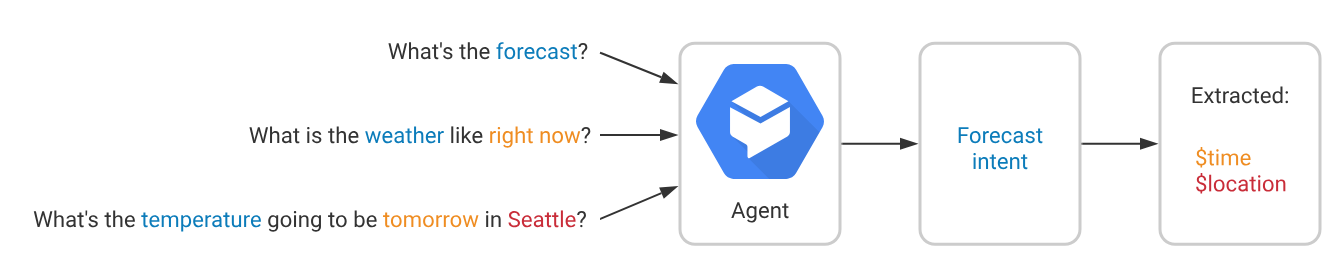

Classic NLP Chatbots

- Google DialogFlow

- Rasa

- AWS Lex

- Azure Bot Service

- Watson

Training

Usability

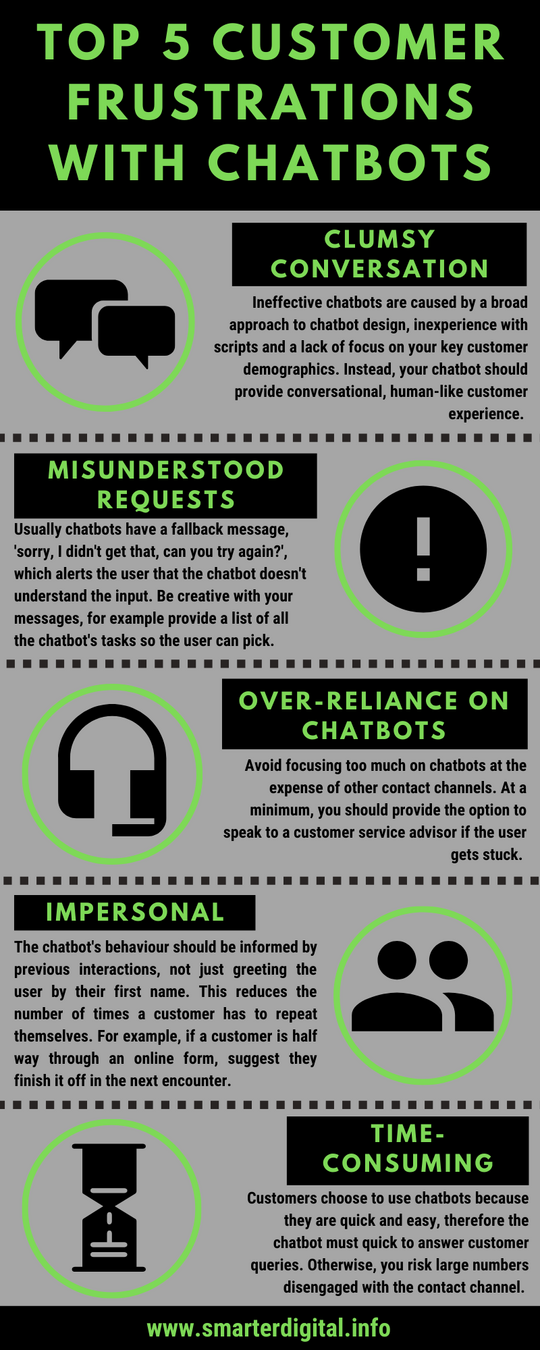

Usability for chatbots is hard.

They look like they'd understand you, but they don't.

You use them to be faster, but you aren't.

You're guessing what they will understand.

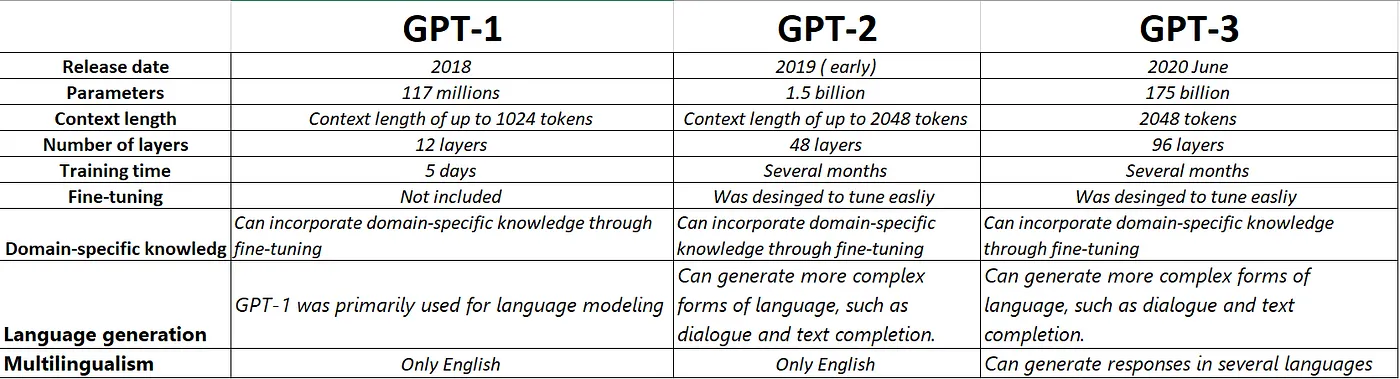

Large Language Models

- Foundation Model: Language and World Knowledge

- > 100,000,000 parameters: emergent capabilities

- zero-shot in-context learning ("prompting")

- Chatbot batteries included

- intent recognition without training

- entity recognition without training or definition

- flow definition

- Inversion of control

- the model does the work instead of you
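The "batteries included" idea can be sketched roughly as follows: instead of training an intent classifier, the bot sends a zero-shot prompt and parses a JSON answer. Everything named here is an assumption made for illustration: the intent names, the prompt wording, and `fake_llm`, which is a canned stand-in for a real model call.

```python
import json

# Hypothetical intent names, chosen only for this sketch.
INTENTS = ["order_status", "cancel_order", "smalltalk"]


def build_prompt(user_message: str, intents: list[str]) -> str:
    """Build a zero-shot prompt that asks for an intent and entities as JSON."""
    return (
        "You are the NLU component of a chatbot.\n"
        f"Allowed intents: {', '.join(intents)}.\n"
        "Extract any entities you find as key/value pairs.\n"
        'Answer with JSON only: {"intent": "...", "entities": {...}}\n\n'
        f"User: {user_message}"
    )


def parse_reply(reply: str, intents: list[str]) -> dict:
    """Parse the model reply; fall back to 'unknown' on malformed output."""
    fallback = {"intent": "unknown", "entities": {}}
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return fallback
    if not isinstance(data, dict) or data.get("intent") not in intents:
        return fallback
    entities = data.get("entities")
    return {
        "intent": data["intent"],
        "entities": entities if isinstance(entities, dict) else {},
    }


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM call; always returns the same canned reply."""
    return '{"intent": "order_status", "entities": {"order_id": "4711"}}'


prompt = build_prompt("Where is my order 4711?", INTENTS)
result = parse_reply(fake_llm(prompt), INTENTS)
print(result)
```

Swapping `fake_llm` for a real model client is the only change needed to try this; the fallback in `parse_reply` matters because model output is not guaranteed to be valid JSON.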

Unstructured Knowledge

- Vector Database: semantic lookups

- save knowledge and retrieve it when needed

- share knowledge and reuse it using LLMs

- Chatbots are just one client
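The save-and-retrieve pattern behind a vector database might look like the sketch below. It is a toy, not a real product: `embed` uses plain word counts, which only matches shared words, whereas an actual setup would use a learned embedding model to get semantic matches. `TinyVectorStore` and the sample texts are made up for illustration.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words counts (assumption: stands in for a real model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class TinyVectorStore:
    """In-memory store: save text with its vector, retrieve by similarity."""

    def __init__(self) -> None:
        self.items: list[tuple[Counter, str]] = []

    def save(self, text: str) -> None:
        self.items.append((embed(text), text))

    def retrieve(self, query: str, k: int = 1) -> list[str]:
        query_vec = embed(query)
        ranked = sorted(
            self.items,
            key=lambda item: cosine(query_vec, item[0]),
            reverse=True,
        )
        return [text for _, text in ranked[:k]]


store = TinyVectorStore()
store.save("Refunds are processed within 14 days.")
store.save("Our support hotline is open on weekdays.")
store.save("Shipping to Austria takes three days.")

top = store.retrieve("how long do refunds take", k=1)
print(top)
```

The retrieved text would then be pasted into the LLM prompt as context. Because the store knows nothing about chat, any other client can reuse the same knowledge.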

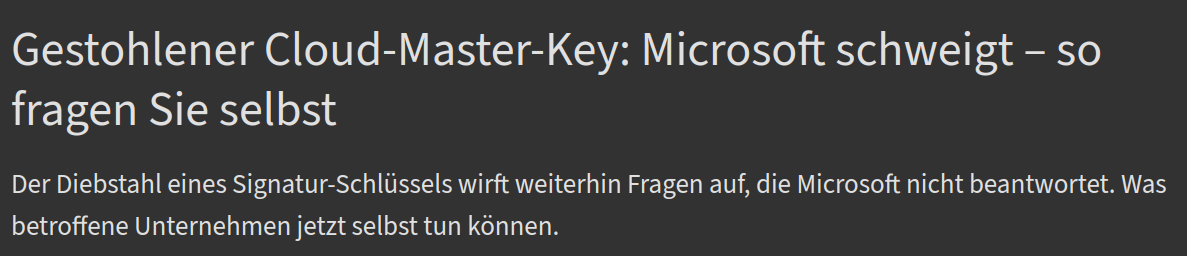

- "Open" is there because it sounds good

- Actually a Microsoft product now

Open Source LLMs

- Rasa because it's trustworthy

- I like Rasa and had fun using it

- Llama, Vicuna, WizardLM, GPT4All: Research only

- Falcon, Mosaic, Dolly: weak at reasoning, weak at German

- Finally: Llama 2

- kinda Apache 2 for everyone but FAANG

- 70B at GPT-3.5 level

- Runtime platforms exist

- vLLM, TGI, SkyPilot

- Fine-tuning is easy but mostly not needed
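Talking to a self-hosted model can be as small as one HTTP request. The sketch below assumes the runtime exposes an OpenAI-compatible chat completions endpoint, as vLLM's API server does. The base URL, the model name, and the sampling values are assumptions to adjust for your own deployment.

```python
import json
import urllib.request

# Assumptions: a local server on port 8000 and this model name loaded in it.
BASE_URL = "http://localhost:8000/v1"
MODEL = "meta-llama/Llama-2-70b-chat-hf"


def build_chat_request(
    user_message: str,
    system_prompt: str = "You are a helpful assistant.",
) -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    payload = {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
        "temperature": 0.2,
        "max_tokens": 256,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(user_message: str) -> str:
    """Send the request and return the first answer (needs a running server)."""
    with urllib.request.urlopen(build_chat_request(user_message), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


request = build_chat_request("What are your opening hours?")
print(request.full_url)
```

Only `ask` touches the network, so the request can be inspected without a server running.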

How to deal with it

- LLM-based chatbots are effortless and fast to implement

- Some integrations are missing

- voice exists, but it's not easy

- flow design is hard but

What to do next?

- If you already have an NLP bot

- wait :-) - and do some experiments

- If you don't: experiment or start

- QA & Knowledge retrieval is cheap and easy

- Agents are more work, but very efficient

- standards aren't there yet, but the business value is.

deck

By Johann-Peter Hartmann
