Dagger.jl for Task Parallel HPC

By Julian Samaroo

What is Dagger?

- Pure-Julia task parallelism library

- Unified task API supports multiple high-level APIs (arrays, tables, graphs, streaming, data dependencies)

- Supports multi-threading, multi-node, multi-GPU out of the box

- Machine learning-based scheduler optimizes computational DAGs based on learned task behavior

- Advanced data dependency system automatically parallelizes operations on overlapping data regions (like OpenMP's "task depend" on steroids)
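
As a minimal sketch of the task API, assuming Dagger.jl is installed: `Dagger.@spawn` launches a task and immediately returns a handle, and passing a handle as an argument to another task adds an edge to the DAG. The helpers `add` and `double` are made up for illustration and are not part of Dagger.

```julia
using Dagger

# Hypothetical helper functions, only for illustration
add(x, y) = x + y
double(x) = 2x

# Each @spawn returns a task handle right away; the work runs asynchronously
a = Dagger.@spawn add(1, 2)
b = Dagger.@spawn double(5)

# Task handles passed as arguments become dependencies:
# `c` runs only after `a` and `b` have finished
c = Dagger.@spawn add(a, b)

# fetch blocks until the result is available
result = fetch(c)
println(result)
```

Where each task runs (thread, process, or GPU) is left to the scheduler; the code above only describes the dependencies.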

Why Dagger?

- Highly scalable for multi-CPU, multi-GPU, and multi-node

- Powerful alternative to libraries like ScaLAPACK, Elemental, PLASMA

- Custom APIs are easy to build on task API

- No need for inconsistent vendor-specific compilers

- Can (eventually) do away with MPI

Highly Scalable

Dagger's Superpower: ??????

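    # Cholesky (serial, tiled): M is a grid of mt × nt tiles, factorized in place
    using LinearAlgebra: BLAS, LAPACK

    function cholesky!(M, mt, nt)
        for k in range(1, mt)
            # Factorize the diagonal tile
            LAPACK.potrf!('L', M[k, k])
            for m in range(k+1, mt)
                # Triangular solve for each tile below the diagonal
                BLAS.trsm!('R', 'L', 'T', 'N', 1.0,
                           M[k, k], M[m, k])
            end
            for n in range(k+1, nt)
                # Update the trailing diagonal tile
                BLAS.syrk!('L', 'N', -1.0,
                           M[n, k], 1.0,
                           M[n, n])
                for m in range(n+1, mt)
                    # Update the trailing off-diagonal tiles
                    BLAS.gemm!('N', 'T', -1.0,
                               M[m, k], M[n, k],
                               1.0, M[m, n])
                end
            end
        end
    end

Dagger's Superpower: Datadeps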

    # Cholesky written with Dagger Datadeps
    using Dagger
    using LinearAlgebra: BLAS, LAPACK

    # Same loop nest as the serial version: each call becomes a task, and
    # Read/ReadWrite declare how that task accesses each tile
    Dagger.spawn_datadeps() do
        for k in range(1, mt)
            Dagger.@spawn LAPACK.potrf!('L', ReadWrite(M[k, k]))
            for m in range(k+1, mt)
                Dagger.@spawn BLAS.trsm!('R', 'L', 'T', 'N', 1.0,
                                         Read(M[k, k]), ReadWrite(M[m, k]))
            end
            for n in range(k+1, nt)
                Dagger.@spawn BLAS.syrk!('L', 'N', -1.0,
                                         Read(M[n, k]), 1.0,
                                         ReadWrite(M[n, n]))
                for m in range(n+1, mt)
                    Dagger.@spawn BLAS.gemm!('N', 'T', -1.0,
                                             Read(M[m, k]), Read(M[n, k]),
                                             1.0, ReadWrite(M[m, n]))
                end
            end
        end
    end

What is Datadeps?

- API for automatic parallelism of serial code

- Data aliasing/locality aware (CPU, GPU, node, etc.)

- Handles data transfers automatically and efficiently

- Supports data larger than RAM/VRAM ("out-of-core")

- A single algorithm scales from one thread up to multi-GPU and multi-node without being rewritten

- (Coming Soon) Auto MPI

- (Coming Soon) Auto multi-precision

- (Coming Soon) Optimal scheduler
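
A minimal sketch of the Datadeps idea on plain vectors, assuming Dagger.jl is installed. It uses the `In`, `Out`, and `InOut` argument wrappers as spelled in Dagger's Datadeps documentation. The Cholesky slide writes `Read`/`ReadWrite` instead, so check which spelling your Dagger version accepts. The helper `add!` is hypothetical.

```julia
using Dagger
using Dagger: In, Out, InOut  # access-mode wrappers (assumed spelling, see lead-in)

A = rand(1000)
B = rand(1000)
C = zeros(1000)
B0 = copy(B)  # keep the original B so the result can be checked afterwards

# Hypothetical helper: adds Y into X in place
add!(X, Y) = (X .+= Y; nothing)

Dagger.spawn_datadeps() do
    # This task reads A and modifies B
    Dagger.@spawn add!(InOut(B), In(A))
    # This task reads B, so it is ordered after the task above
    Dagger.@spawn copyto!(Out(C), In(B))
end
# The region returns once the tasks spawned inside it have completed

println(isapprox(B, B0 .+ A))
println(C == B)
```

The code reads like a serial program. The ordering between the two tasks comes only from the declared accesses to `B`.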

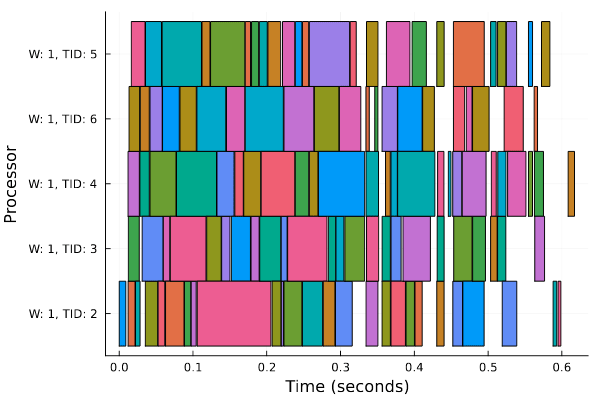

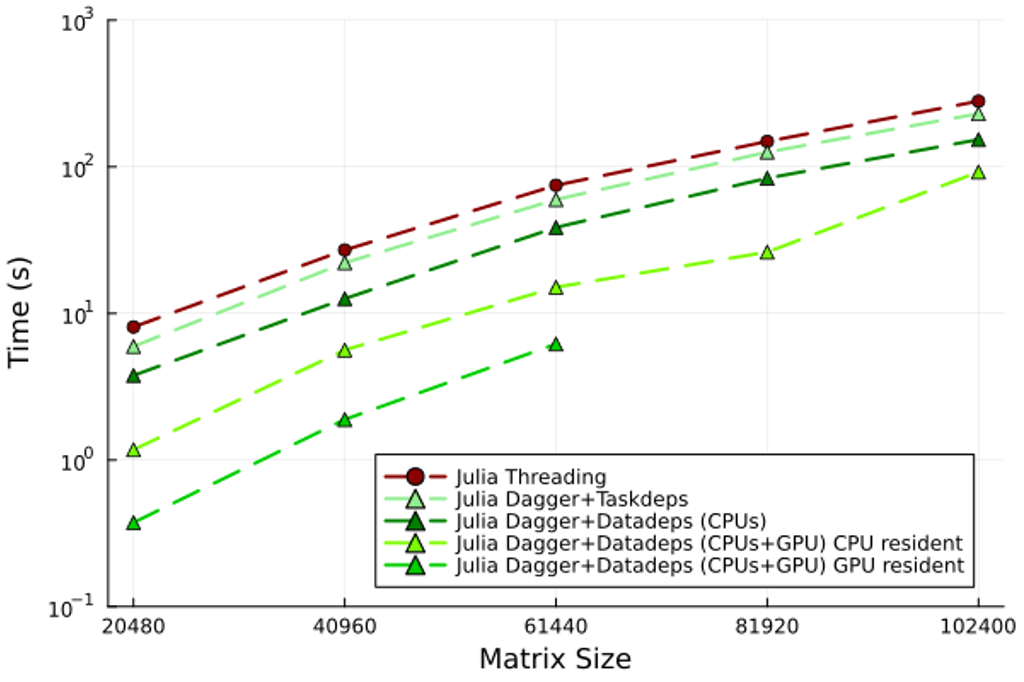
