Intro to Artificial Intelligence

October 2016, Stavros Vassos Helvia.io

(a very basic)

Intro to

(some part of)

Artificial Intelligence

Artificial Intelligence through some applications

Self-driving cars

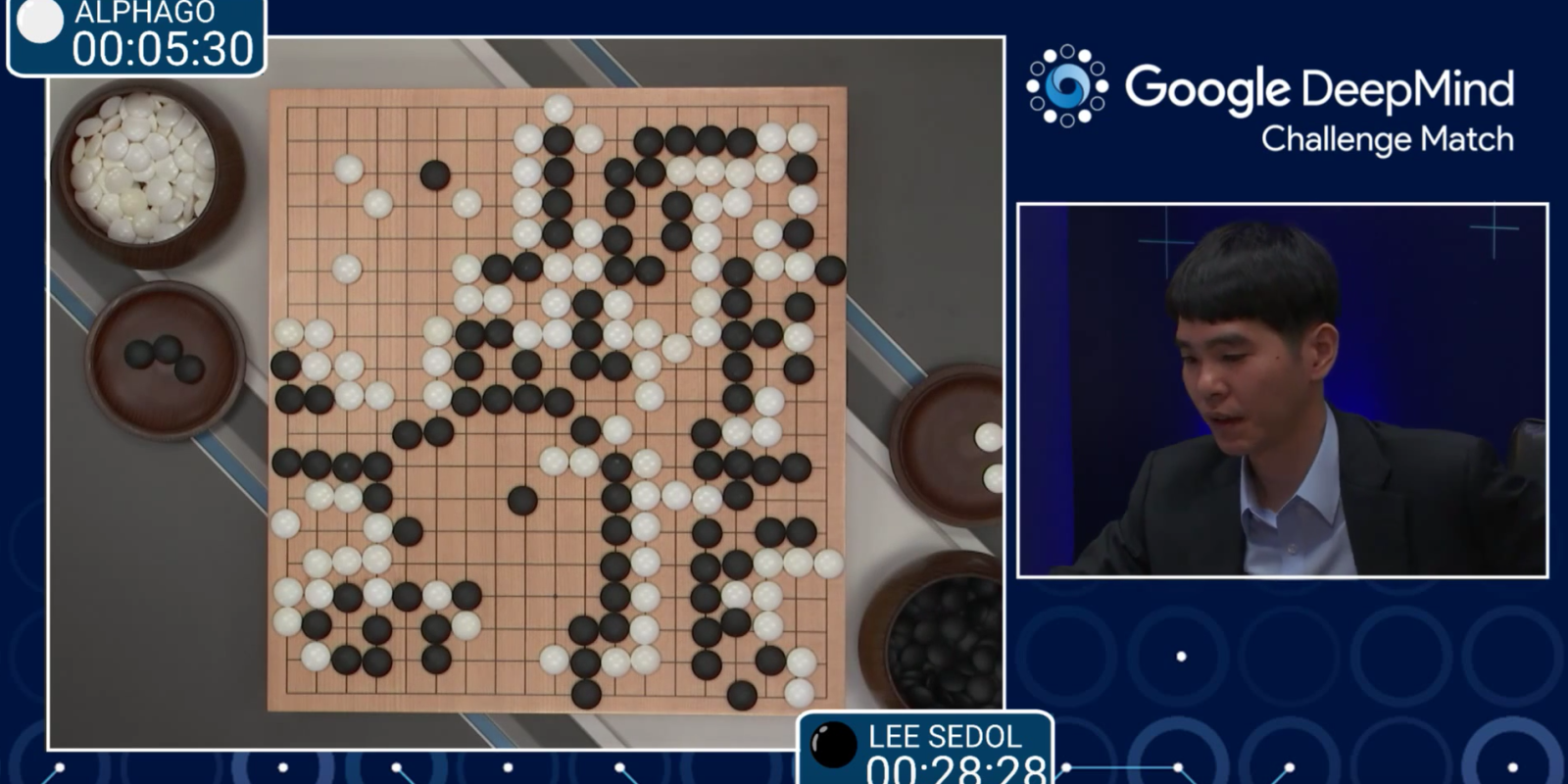

Games: Go

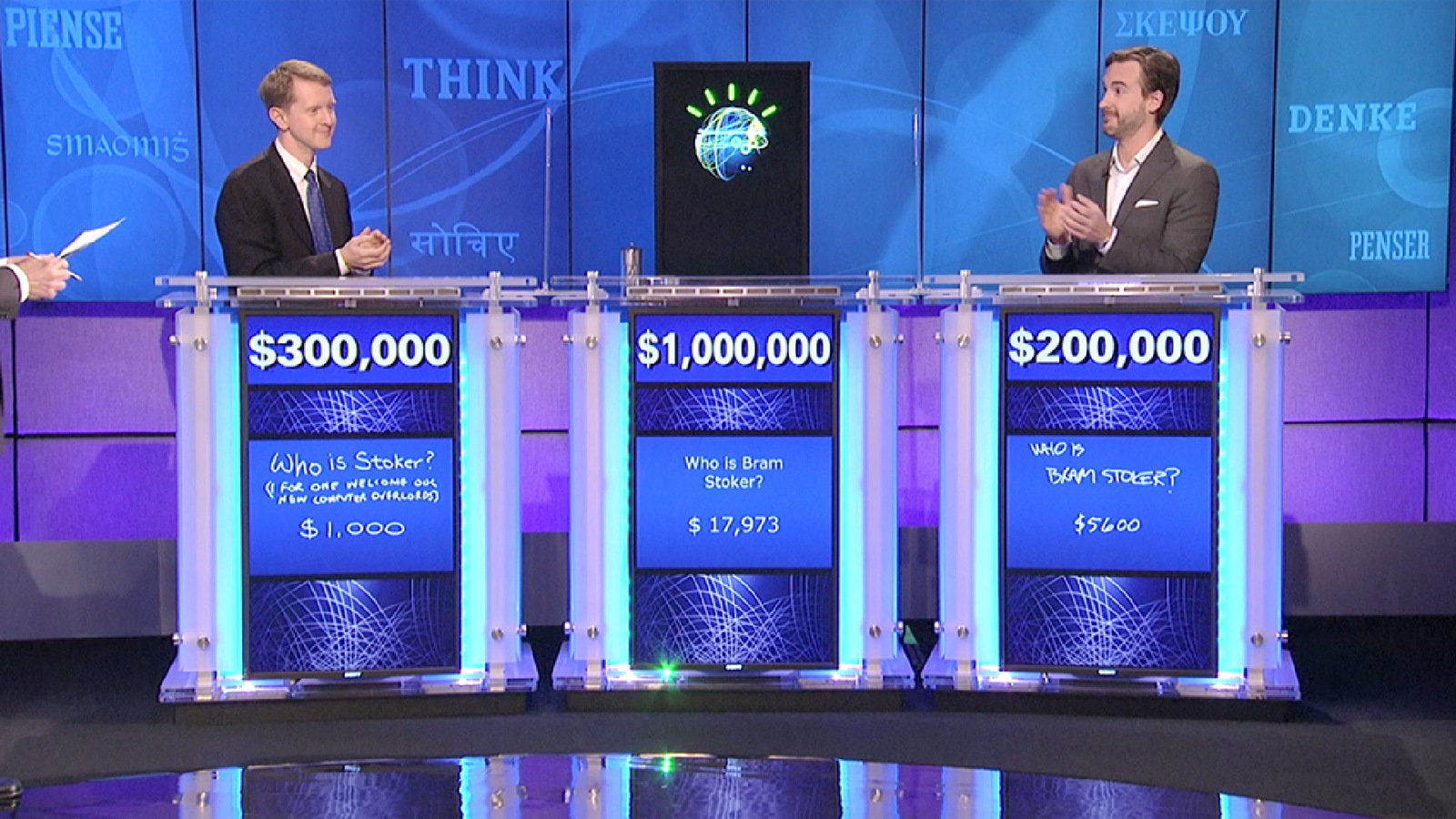

Games: Jeopardy

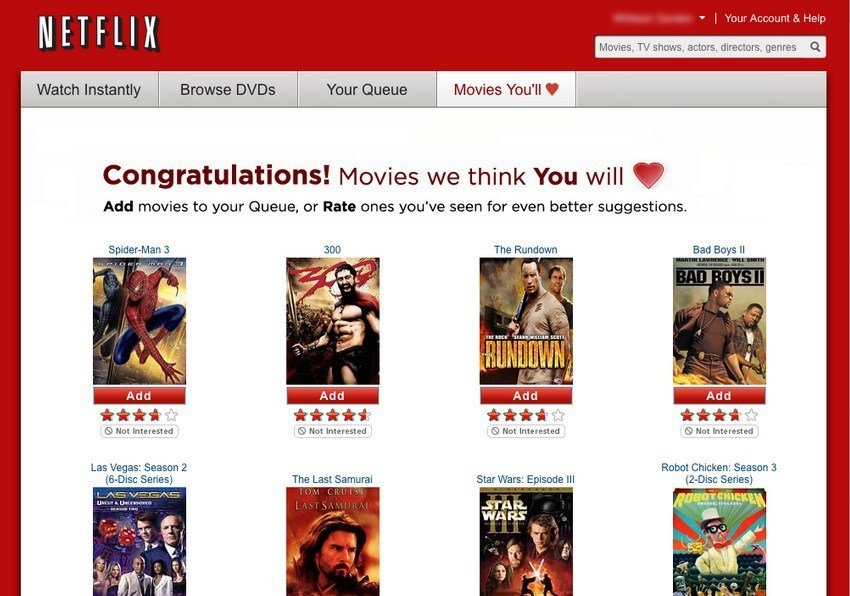

Movie recommendations

Predictive text

Artificial Intelligence through some applications

- In some cases it is clear to us that this is AI, because we associate them with "thinking" abilities.

- Other cases are less exciting, and we tend not to acknowledge the AI behind them.

- E.g., spam filtering uses, in principle, AI techniques similar to those behind some of the previous examples.

Artificial Intelligence through some applications

- Typically the exciting cases require a complex mix of methods from many subfields of AI and Data Science.

- In any case, many exciting results have been driven mostly by the recent advances in Machine Learning.

- So let's go through some basic concepts and terminology!

Overview

- Machine Learning

- Classification

- Deep Learning

- Live experiment!

- More

Machine Learning

Machine Learning (ML)

- Arthur Samuel way back in 1959: “[Machine Learning is the] field of study that gives computers the ability to learn without being explicitly programmed.”

Programming vs ML

- What does it mean to program something explicitly?

- ML systems are programmed of course, but the way to achieve their functionality is not given by the programmer.

- For instance, an ML system detects cats in images; the programmer has not given "cat detection" rules.

- In contrast, the program that handles an ATM transaction has very explicit and fixed rules.
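To make "explicit and fixed rules" concrete, here is a minimal sketch of ATM-style logic. The function name `withdraw`, the `daily_limit` parameter, and its value are made up for illustration; the only point is that every decision is spelled out by the programmer.

```python
def withdraw(balance, amount, daily_limit=500):
    """Hypothetical ATM rule set: every branch is written by hand."""
    if amount <= 0:
        return balance, "declined: invalid amount"
    if amount > daily_limit:
        return balance, "declined: over daily limit"
    if amount > balance:
        return balance, "declined: insufficient funds"
    return balance - amount, "ok"


print(withdraw(300, 100))
print(withdraw(300, 400))
```

Nothing here is learned from data; changing the behavior means editing the rules by hand.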

Learning by example

- OK, so what is learning and how does it work?

- The programmer needs to give something to the ML system so that it can program itself.

- And this is data, in particular lots of data!

- For instance, in an ML system that detects cats in images, the programmer has not given "cat detection" rules.

- But the programmer gives a huge number of cat images so that the system can learn on its own!

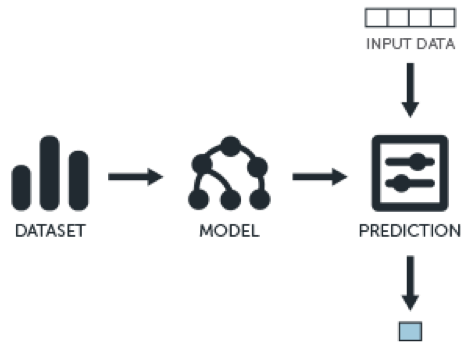

Machine Learning (ML)

- We prepare a big dataset of instances of the problem we want to solve, e.g., lots of cat images.

- The ML system uses the dataset to train itself and create a model of the problem we want to solve.

- Then the model can be used to predict the answer to new problem instances, e.g., is this new image a cat?

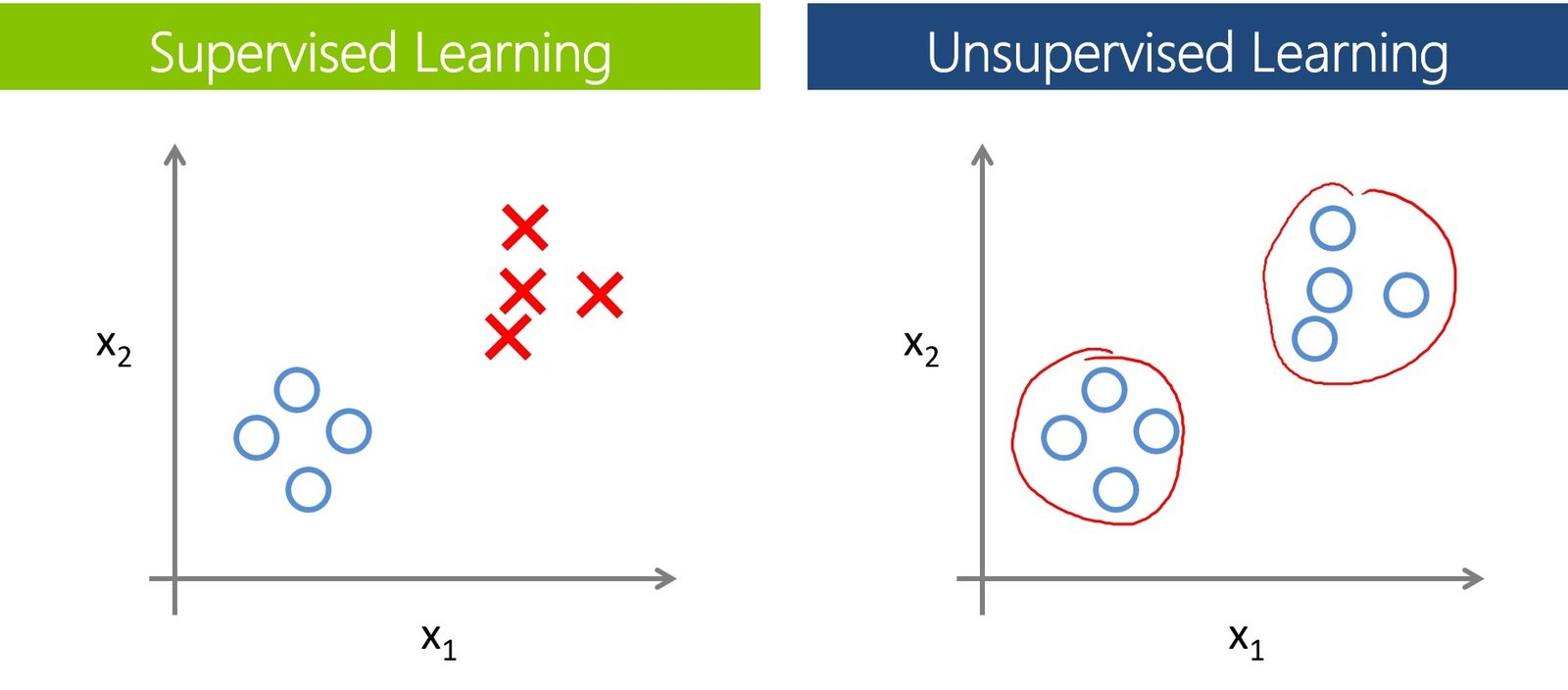
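The dataset, train, model, predict cycle can be sketched in a few lines. This is only an illustration under loud assumptions: each image is reduced to a single made-up number (a hypothetical "whisker score"), and the learning method is a nearest-centroid rule chosen for brevity, not the method a real cat detector would use.

```python
from statistics import mean


def train(dataset):
    """Build a model: the average feature value seen for each label."""
    by_label = {}
    for value, label in dataset:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(model, value):
    """Answer with the label whose average is closest to the new value."""
    return min(model, key=lambda label: abs(model[label] - value))


# Made-up dataset: (whisker score, label) pairs.
dataset = [(0.9, "cat"), (0.8, "cat"), (0.7, "cat"),
           (0.2, "not cat"), (0.1, "not cat"), (0.3, "not cat")]

model = train(dataset)        # training phase
print(predict(model, 0.75))   # prediction for a new instance
```

The programmer wrote `train` and `predict`, but the numbers inside `model` come from the data.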

Un/Supervised ML

- There are two big categories depending on the dataset we provide (and different results we can get).

- Supervised learning: we go over the dataset and mark the right answers ourselves.

- Unsupervised learning: we give the dataset without hints and let the system figure out patterns.

ML input/output?

- How do we actually give something as input to an ML system, e.g., a picture?

- What is the output we receive?

- Let's see a specific class of problems and an example to make things more concrete.

Classification

Ice-cream example

- Here's a simple (silly but useful) scenario.

- We have data about how people eat their ice cream.

- In particular we know per person:

- how much time it took them to eat it;

- how much noise they were making while eating it;

- whether they are kids or not.

- We want to predict whether a new person is a kid based on their ice-cream eating behavior.

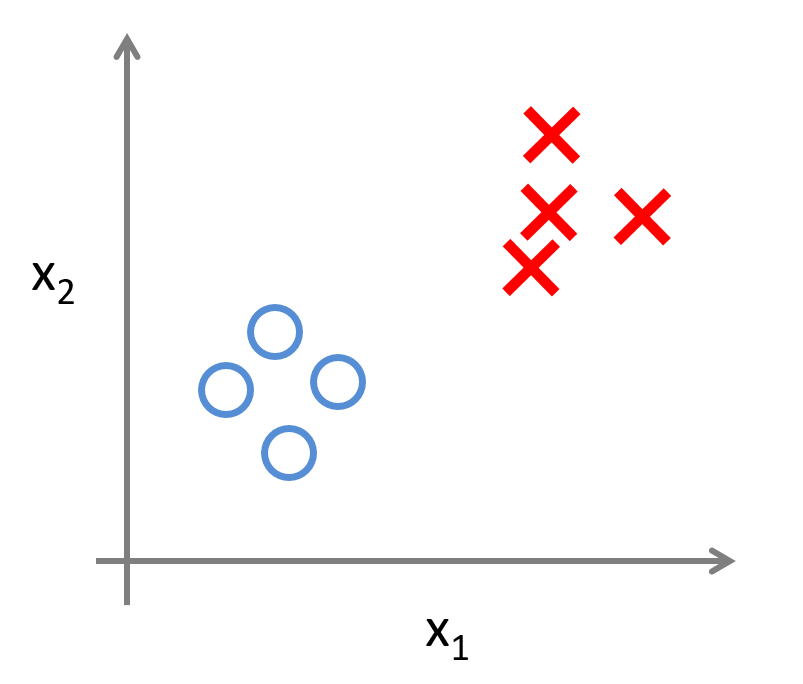

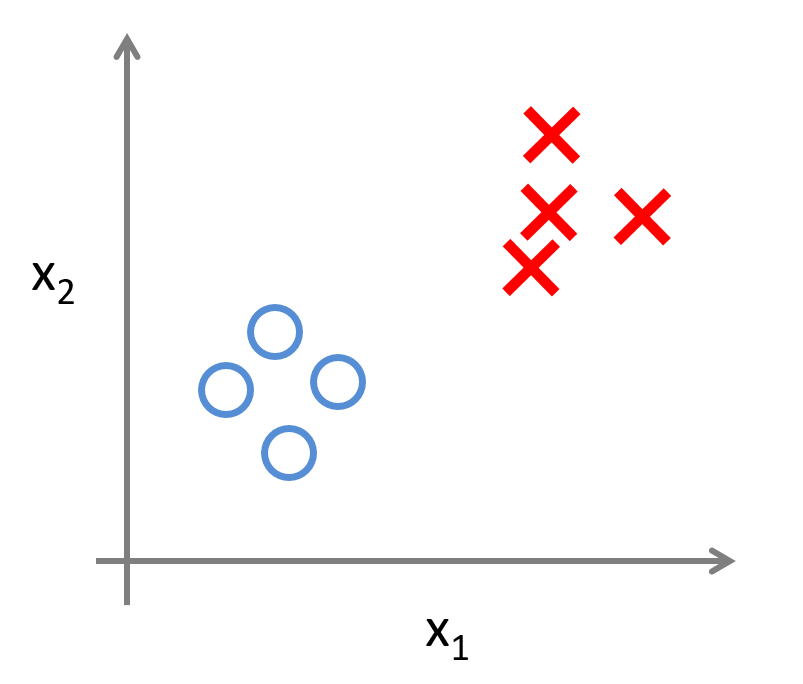

Dataset

- Let's make a training dataset for supervised ML:

- x1: time to eat the ice cream;

- x2: noise while eating the ice cream;

- we put an O if they are kids and X otherwise.

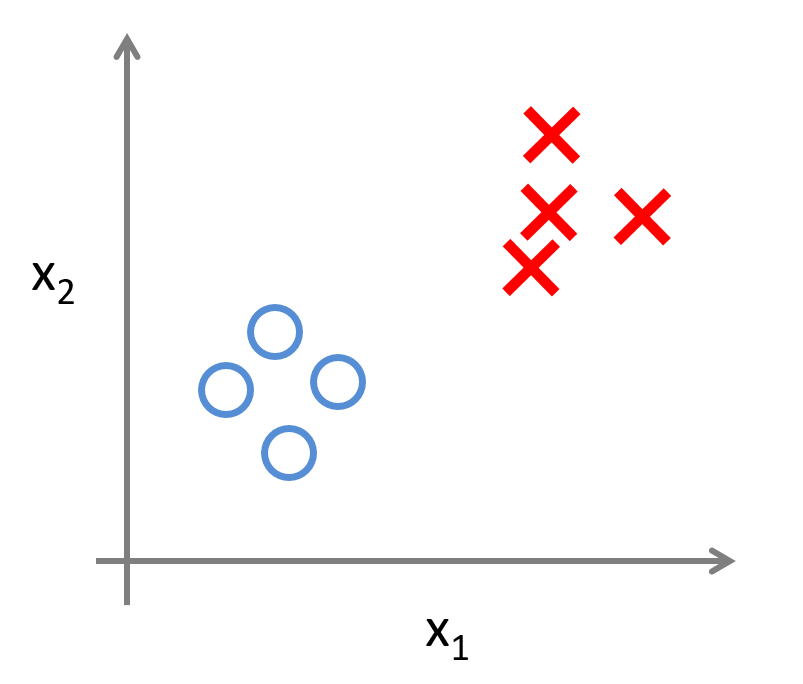
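In code, such a labelled dataset could be represented as below. This is a sketch only: the `Eater` type, the units, and every number are invented for illustration and do not come from the slides.

```python
from typing import NamedTuple


class Eater(NamedTuple):
    x1: float     # time to eat the ice cream (minutes, assumed unit)
    x2: float     # noise while eating (0-10 scale, assumed unit)
    is_kid: bool  # label: True is an "O" (kid), False is an "X"


# Made-up observations, only to show the shape of a labelled dataset.
training_data = [
    Eater(2.0, 8.0, True),
    Eater(3.0, 9.0, True),
    Eater(1.0, 7.0, True),
    Eater(8.0, 2.0, False),
    Eater(9.0, 3.0, False),
    Eater(7.0, 1.0, False),
]

for eater in training_data:
    mark = "O" if eater.is_kid else "X"
    print(f"x1={eater.x1}, x2={eater.x2}, label={mark}")
```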

Model

- Let's assume we can separate the two classes of ice cream eaters using a simple linear function (model):

$$
f(x_1,x_2) =
\begin{cases}
1, & \text{if}\ w_1 x_1 + w_2 x_2 + b > 0 \\
0, & \text{otherwise}
\end{cases}
$$

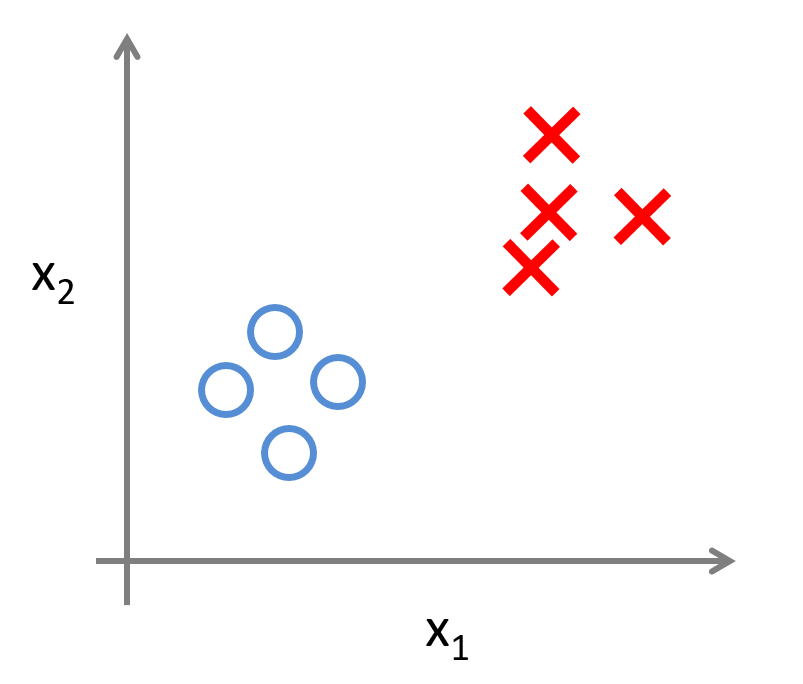
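The linear model translates almost directly into code. In this sketch the parameter values `w1 = -1`, `w2 = 1`, `b = 0` are hand-picked assumptions, not learned values; they simply mean "more noise than time suggests a kid".

```python
def f(x1, x2, w1, w2, b):
    """Linear classifier: 1 if w1*x1 + w2*x2 + b > 0, otherwise 0."""
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0


# Hand-picked (not learned) parameters, for illustration only.
w1, w2, b = -1.0, 1.0, 0.0

print(f(2, 8, w1, w2, b))  # short time, lots of noise
print(f(8, 2, w1, w2, b))  # long time, little noise
```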

Training

- In the training phase, the ML system looks at the labelled data and learns the parameters of the function (w1, w2, b) so that f=1 means the person is a kid.

$$
f(x_1,x_2) =
\begin{cases}
1, & \text{if}\ w_1 x_1 + w_2 x_2 + b > 0 \\
0, & \text{otherwise}
\end{cases}
$$

Training

- There is no single solution. We find one numerically: we start with a random setting of the parameters and keep updating it until the function fits the data.

$$
f(x_1,x_2) =
\begin{cases}
1, & \text{if}\ w_1 x_1 + w_2 x_2 + b > 0 \\
0, & \text{otherwise}
\end{cases}
$$

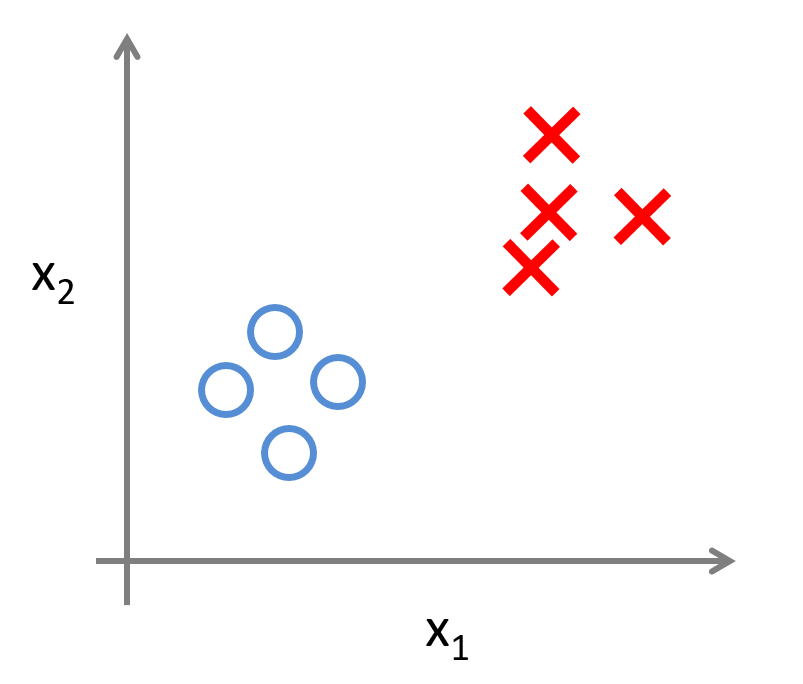
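The "start random, update until it fits" idea can be sketched as a small training loop. Assumptions to note: the data points are made up, the learning rate, epoch limit, and seed are arbitrary choices, and the update used is the classic perceptron rule, which is one simple way to do this rather than the only one.

```python
import random


def f(x1, x2, w1, w2, b):
    """Linear classifier: 1 if w1*x1 + w2*x2 + b > 0, otherwise 0."""
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0


def train(data, learning_rate=0.1, max_epochs=100, seed=0):
    """Start from random parameters and nudge them after every mistake."""
    rng = random.Random(seed)
    w1, w2, b = (rng.uniform(-1, 1) for _ in range(3))
    for _ in range(max_epochs):
        mistakes = 0
        for x1, x2, label in data:
            error = label - f(x1, x2, w1, w2, b)  # -1, 0 or +1
            if error != 0:
                w1 += learning_rate * error * x1
                w2 += learning_rate * error * x2
                b += learning_rate * error
                mistakes += 1
        if mistakes == 0:  # the function now fits all the training data
            break
    return w1, w2, b


# Made-up (time, noise, is_kid) examples that a straight line can separate.
data = [(2, 8, 1), (3, 9, 1), (1, 7, 1), (8, 2, 0), (9, 3, 0), (7, 1, 0)]

w1, w2, b = train(data)
print(all(f(x1, x2, w1, w2, b) == label for x1, x2, label in data))
```

A different `seed` generally ends at different parameter values that fit the same data, which is what "no single solution" means in practice.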

Classification

- Now when we have information about a new person in terms of time and noise, the ML system can predict whether they are a kid.

$$
f(x_1,x_2) =
\begin{cases}
1, & \text{if}\ w_1 x_1 + w_2 x_2 + b > 0 \\
0, & \text{otherwise}
\end{cases}
$$

Classification

- We want an ML system to learn to identify kids based on their ice cream eating behavior (time, noise).

- We prepare a dataset with positive and negative examples (the Xs and the Os) and train an ML system to create a model for kid-ness.

- Then the model can be used to predict kid-ness.

Perceptron

- This simple method is a linear classifier called a perceptron.

- The perceptron is trained with an error-driven update rule; in multi-layer networks the same idea is applied layer by layer and is known as backpropagation.

- It essentially goes like this: we try out the labelled data, check the error, and then go backwards, tweaking the parameters to make the error smaller.

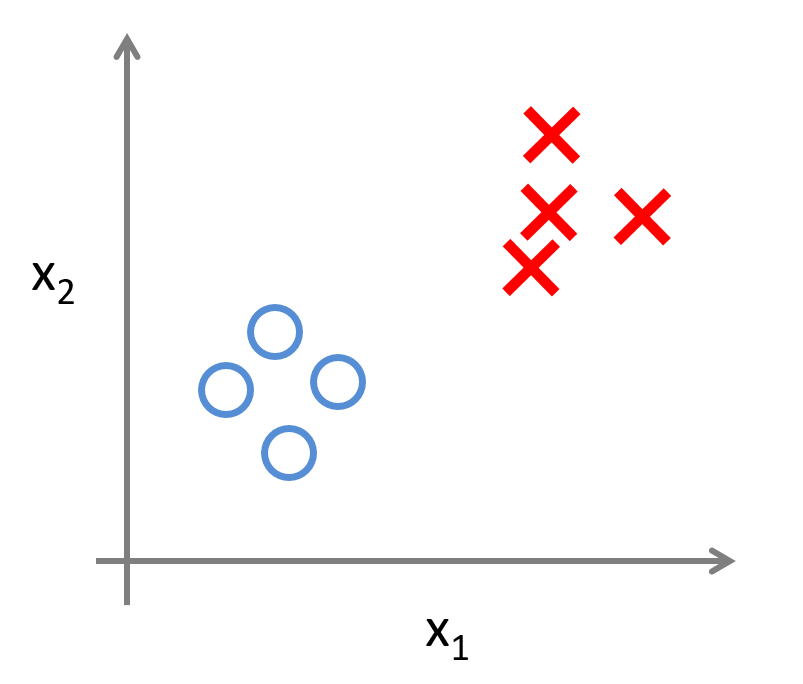
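For a single perceptron, the "check the error and tweak the parameters" step is commonly written as the update below. The symbols are assumptions introduced here, not notation from the slides: $y$ is the correct label (1 for a kid, 0 otherwise) and $\eta > 0$ is a small step size, usually called the learning rate.

```latex
$$
w_i \leftarrow w_i + \eta\,\bigl(y - f(x_1,x_2)\bigr)\,x_i \quad (i = 1, 2),
\qquad
b \leftarrow b + \eta\,\bigl(y - f(x_1,x_2)\bigr)
$$
```

When the prediction is already correct, $y - f(x_1,x_2) = 0$ and nothing changes; otherwise the parameters move in the direction that reduces the error on that example.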

That was pretty easy

- Why did we have to do this with ML?

- Well, it's easy because we have just two inputs and the data happens to be very well separated.

That was pretty easy

- What if we have hundreds of inputs, e.g., a user's rankings for all the movies they have seen?

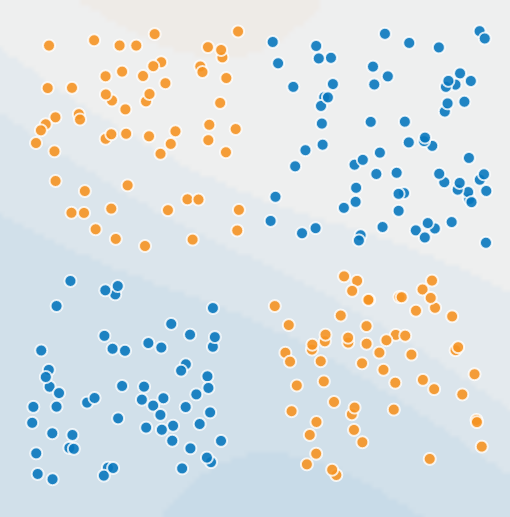

- What if the data looked like this?

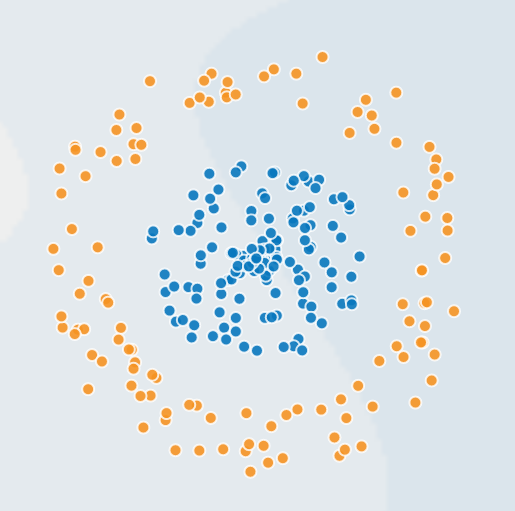

Deep Learning

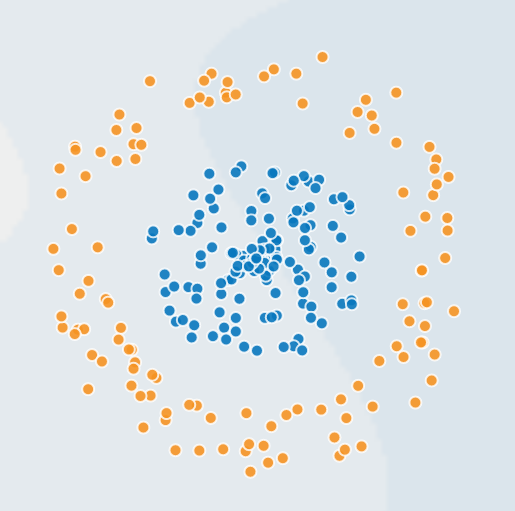

Ice-cream example

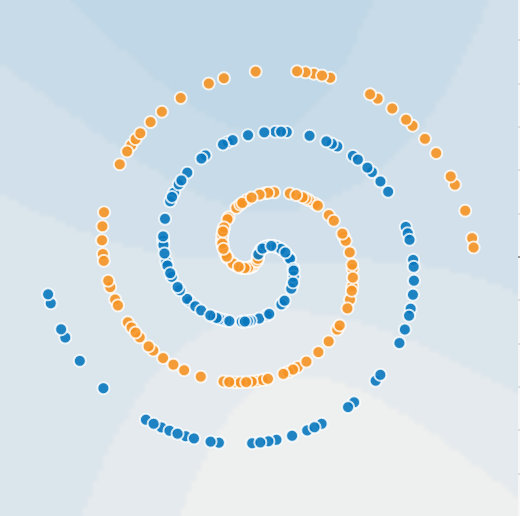

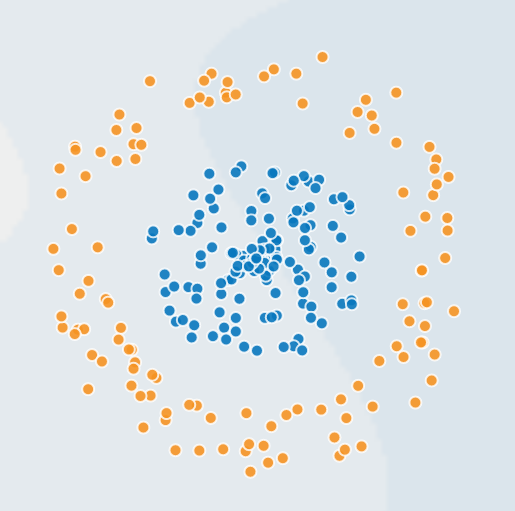

- Consider a trickier dataset like this:

- We cannot separate the two classes using a linear function like before.

- We could try different functions, e.g., a circle.
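A circle-shaped model is just another function with its own parameters. In this sketch the names `c1`, `c2` (center) and `r` (radius) are assumptions introduced for illustration, and the values are hand-picked rather than learned.

```python
def inside_circle(x1, x2, c1, c2, r):
    """Return 1 if the point (x1, x2) lies strictly inside the circle, else 0."""
    return 1 if (x1 - c1) ** 2 + (x2 - c2) ** 2 < r ** 2 else 0


# Hand-picked circle: center (5, 5), radius 2.
print(inside_circle(6, 6, 5, 5, 2))  # close to the center
print(inside_circle(9, 9, 5, 5, 2))  # far from the center
```

The catch is that we had to guess the shape ourselves, which is exactly what a more flexible model should avoid.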

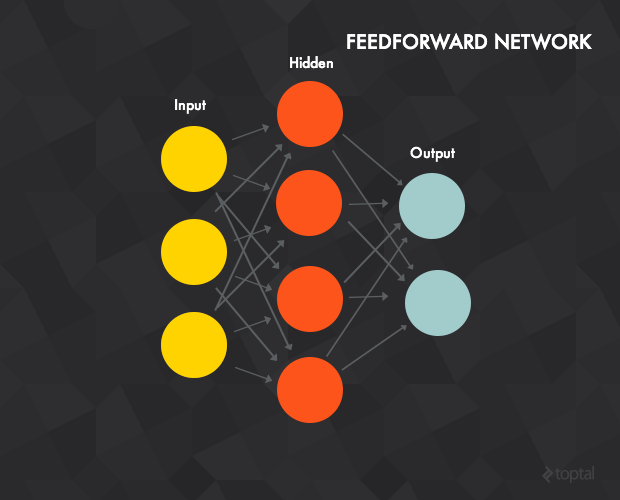

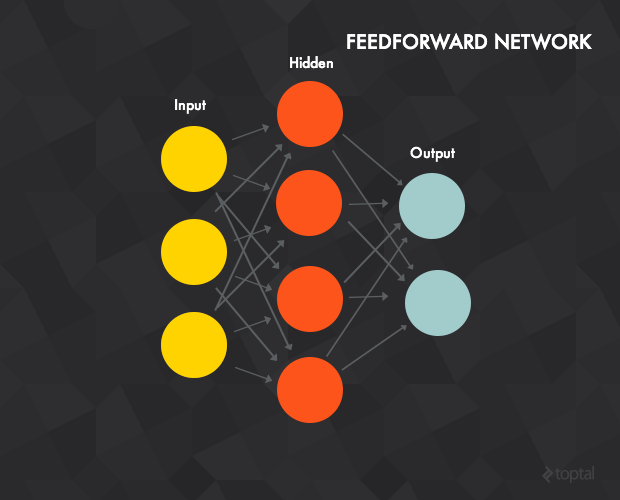

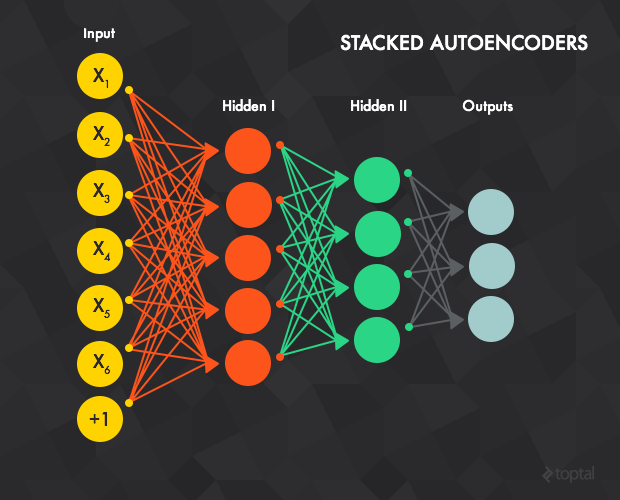

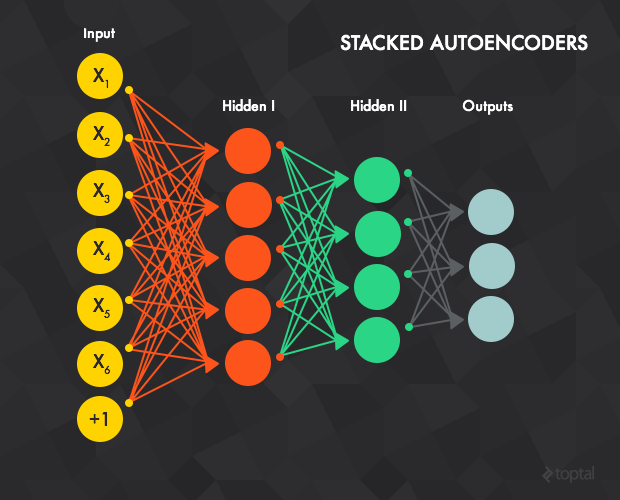

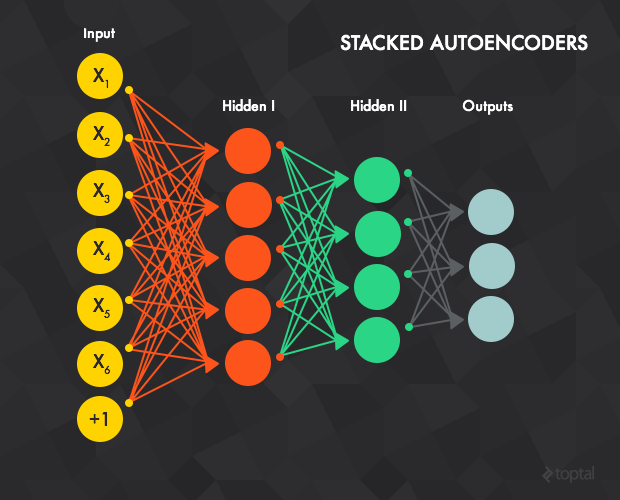

Neural Networks

- But the point is that we want the ML system to be flexible enough to "devise" its own function (i.e., the model) in order to classify the data correctly.

- Neural Networks are a way to achieve this.

- Instead of writing down a bigger function, we can link together many simple functions.

- This is inspired by the neurons that are interconnected and trigger each other.

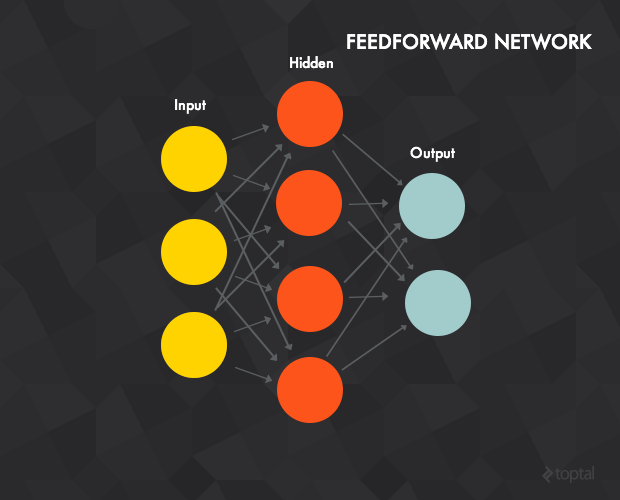

Neural Networks

- Let's link together many classifiers like the linear one and form a Neural Network!

Input-Hidden-Output

- Input nodes on the left (one per input).

- Linear classifiers in the middle (as many as we want).

- Output nodes on the right (one per output).
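The input, hidden, output layout can be sketched as follows. The weights are hand-picked assumptions (nothing is trained here), chosen so that two linear classifiers in the middle plus one output node reproduce an "exactly one input is on" pattern, which no single linear classifier can represent.

```python
def linear_classifier(inputs, weights, bias):
    """One node: 1 if the weighted sum plus bias is positive, else 0."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0


def network(inputs, hidden, output):
    """Feed the inputs to every hidden node, then combine them in the output node."""
    hidden_values = [linear_classifier(inputs, w, b) for w, b in hidden]
    return linear_classifier(hidden_values, *output)


# Hand-picked parameters: (weights, bias) per node.
hidden = [([1, 1], -0.5),    # fires if at least one input is on
          ([-1, -1], 1.5)]   # fires unless both inputs are on
output = ([1, 1], -1.5)      # fires only if both hidden nodes fire

for inputs in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(inputs, network(inputs, hidden, output))
```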

Ice-cream example

- In the example before: 2 input nodes (time, noise), 1 hidden node (the linear classifier), 1 output (kid-ness).

- The hidden node learned to identify kids.

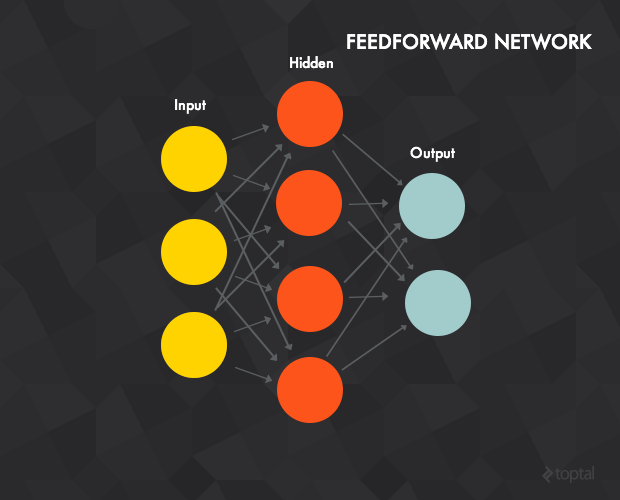

Many inputs

- When there are many inputs (e.g., hundreds of them), each hidden node will learn a different aspect of the problem we try to solve.

High-level classifiers

- The output nodes will combine the information they get from the classifiers (hidden nodes) and work as more high-level classifiers to generate the answer.

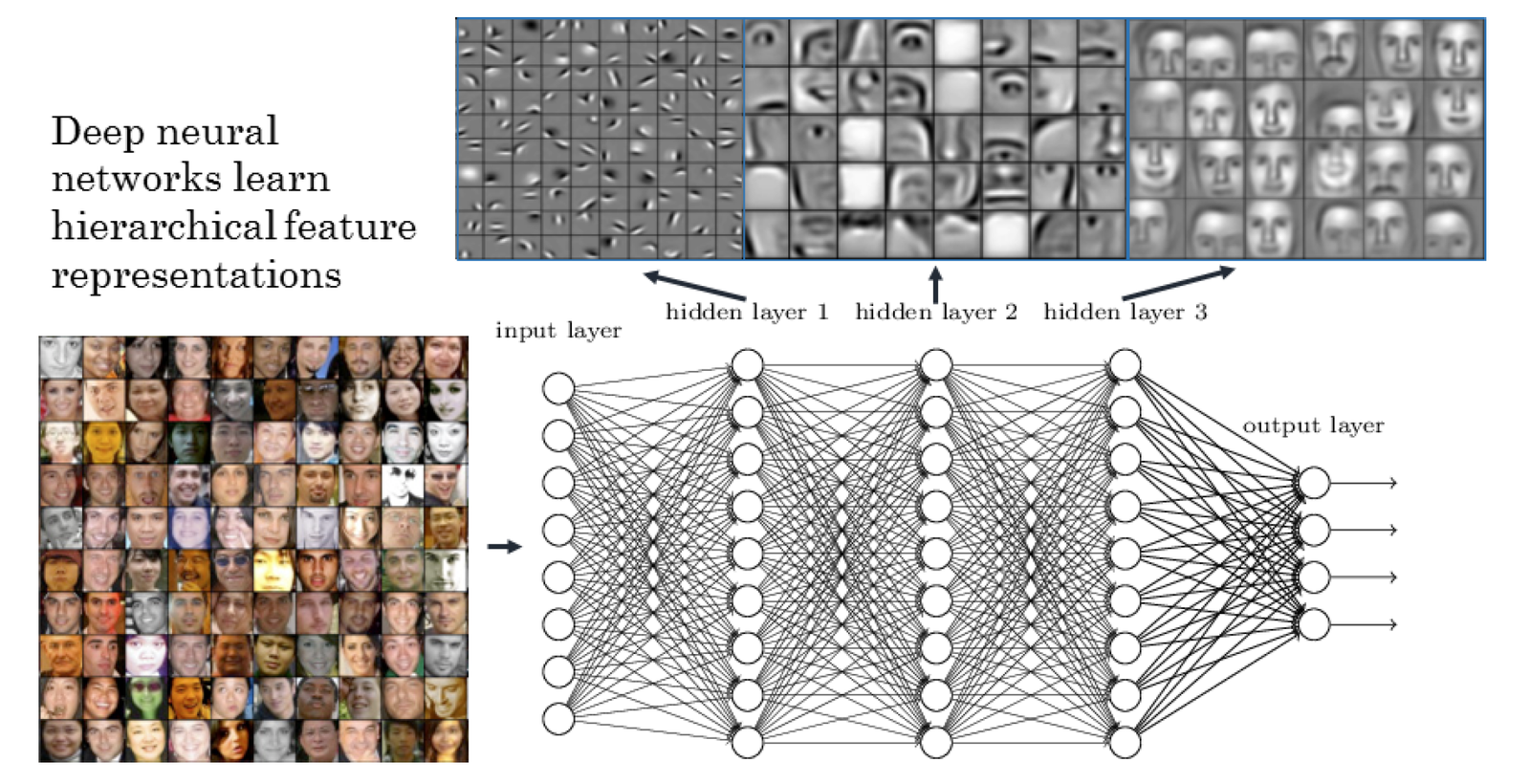

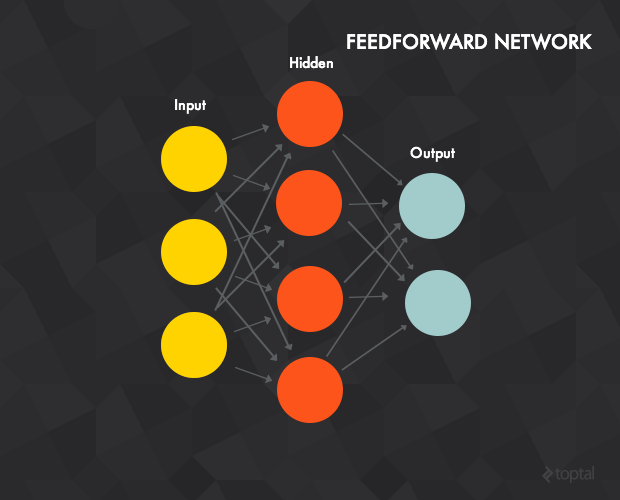

Going deeper

- We can do this for many hidden layers.

- Each layer treats the output of the previous layer as its own input and learns from that.
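Stacking layers amounts to feeding each layer's outputs into the next one. A minimal sketch, with hand-picked (untrained) weights and hypothetical helper names `layer` and `deep_network`:

```python
def linear_classifier(inputs, weights, bias):
    """One node: 1 if the weighted sum plus bias is positive, else 0."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0


def layer(inputs, nodes):
    """Apply every node of a layer to the same inputs."""
    return [linear_classifier(inputs, w, b) for w, b in nodes]


def deep_network(inputs, layers):
    """The output of each layer becomes the input of the next one."""
    values = list(inputs)
    for nodes in layers:
        values = layer(values, nodes)
    return values


# Three layers of (weights, bias) nodes; all values are illustrative only.
layers = [
    [([1, 1], -0.5), ([-1, -1], 1.5)],  # first hidden layer: 2 nodes
    [([1, 1], -1.5)],                   # second hidden layer: 1 node
    [([1], -0.5)],                      # output layer: passes the value on
]

print(deep_network((1, 0), layers))
print(deep_network((1, 1), layers))
```

Seen from outside, `deep_network` is a single function from inputs to outputs, however many layers sit inside it.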

Deep Learning

- This is a Neural Network that is Deep.

- And we use the Machine Learning paradigm.

- This is where the name Deep Learning comes from.

Deep Learning

- The main idea is the same as in the case of f(x1,x2).

- Essentially, the whole Deep NN is just one complicated function that can fit itself to the data.

Deep Learning

- Let's focus on the case of two inputs (x1, x2) and let's play with a visual tool to experiment with layers and nodes.

Live Experiment

NNs with Tensorflow

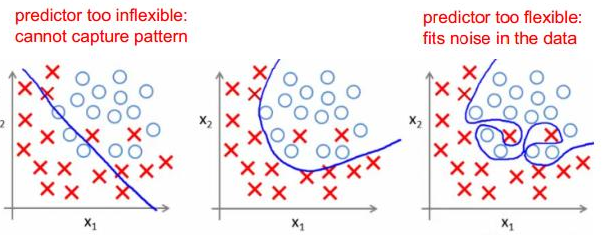

Overfitting

- You need to find the right balance: the function should be flexible enough to fit the training dataset, but not so flexible that it memorizes it and fails on new data.
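A tiny, made-up experiment shows the trade-off. Everything here is an assumption for illustration: the one-dimensional data, the single mislabelled training point at `x = 4`, the "memorizer" (which copies the label of the closest training point), and the hand-picked threshold of `5.5`.

```python
def accuracy(model, data):
    """Fraction of examples for which the model gives the right label."""
    return sum(model(x) == label for x, label in data) / len(data)


# Made-up data; the training point (4, 1) is deliberately mislabelled noise.
train_set = [(1, 0), (2, 0), (3, 0), (4, 1), (5, 0),
             (6, 1), (7, 1), (8, 1), (9, 1)]
test_set = [(2.4, 0), (3.8, 0), (4.3, 0), (6.6, 1)]


def memorizer(x):
    """Very flexible model: copy the label of the closest training point."""
    _, label = min(train_set, key=lambda pair: abs(pair[0] - x))
    return label


def threshold(x):
    """Very simple model: a single hand-picked cut-off."""
    return 1 if x > 5.5 else 0


print("memorizer:", accuracy(memorizer, train_set), accuracy(memorizer, test_set))
print("threshold:", accuracy(threshold, train_set), accuracy(threshold, test_set))
```

The memorizer is perfect on the training set because it also fits the noisy point, and that is what hurts it on new data; the simpler model misses the noisy point but generalizes better.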

More

- Vision

- Games

- Text

Convolutional NNs

- Vision: exploit "local" relation of features in images.
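The "local" idea can be sketched as a small window (a kernel) that slides over the image and looks at a few neighboring pixels at a time. This is a plain-Python illustration with a made-up image and a hand-picked kernel; like most deep learning libraries, it slides the kernel without flipping it.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(kernel[i][j] * image[r + i][c + j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


# Made-up 2x4 image: dark on the left (0), bright on the right (1).
image = [[0, 0, 1, 1],
         [0, 0, 1, 1]]

# Hand-picked kernel that responds where brightness increases left to right.
kernel = [[-1, 1]]

print(convolve2d(image, kernel))  # → [[0, 1, 0], [0, 1, 0]]
```

The output is non-zero only where the dark-to-bright edge is; in a convolutional network the kernel values are learned instead of hand-picked.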

Reinforcement Learning

- Games: exploit the "rules" to learn by playing thousands of games alone or against itself as an opponent.

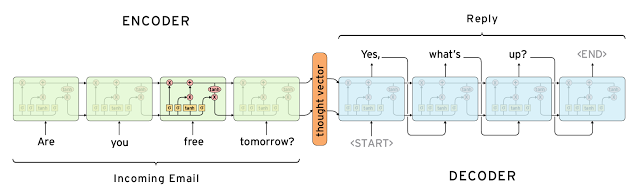

Recurrent NNs

- Text: exploit "temporal" relation between words.

- Generative models for many scenarios.

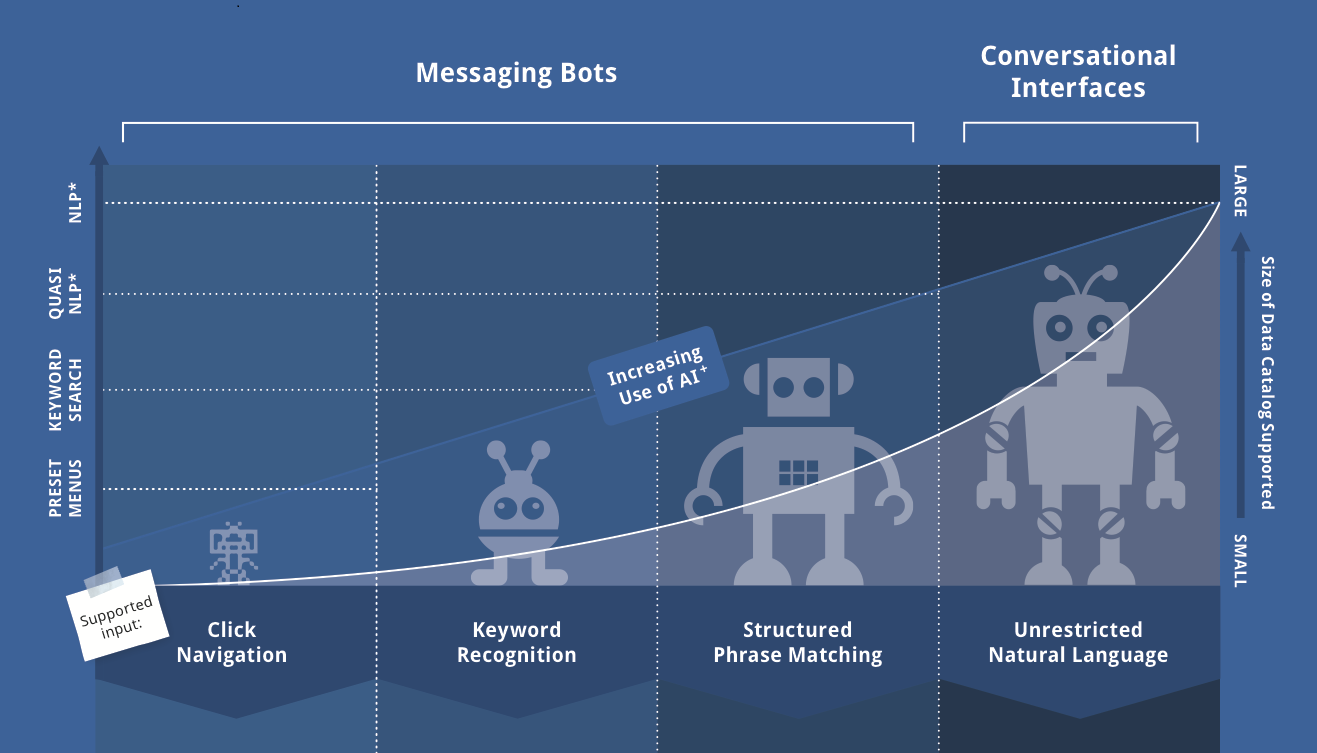
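The "temporal" idea behind recurrent networks is that the network keeps a state which is updated as each element of the sequence arrives, so the order of the elements matters. A minimal sketch with a single recurrent unit; the weights are hand-picked assumptions and the numbers stand in for words.

```python
import math


def run_sequence(sequence, w_x=1.0, w_h=0.5, b=0.0):
    """Process the sequence one element at a time, carrying a hidden state."""
    h = 0.0
    for x in sequence:
        # The new state depends on the current input AND the previous state.
        h = math.tanh(w_x * x + w_h * h + b)
    return h


# Same elements, different order: the final state differs.
print(run_sequence([1, 0]))
print(run_sequence([0, 1]))
```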

NLP + AI = Chatbots!

- Messaging apps as a uniform and familiar UI.

- Natural language as a new UI element.

AI is not only ML

- Knowledge representation

- Action languages

- Automated logical reasoning

- Verification

- ...

Takeaways

Applications?

- The range can be confusing.

- From analyzing item transactions, as we mentioned in the beginning...

- ...to AI that we fear may one day rule the world!

Applications?

- ML research and tools progress rapidly.

- Computing resources progress rapidly.

Applications?

- On the one hand, you can use "black box" methods for implementing "users who liked X also liked Y".

- On the other hand, you can expect that it is feasible to use lots of data to do problem solving of the form:

- "evaluate this situation based on inputs";

- "decide the best action based on inputs";

- "classify data into categories";

- combinations of all of the above.
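As a concrete picture of the "users who liked X also liked Y" case mentioned above, here is a minimal counting sketch. The user names, titles, and the `also_liked` helper are made up, and simple co-occurrence counting is only one basic way to approach it, not what any particular product uses.

```python
from collections import Counter


def also_liked(likes, item):
    """Count what else was liked by the users who liked the given item."""
    counts = Counter()
    for liked_items in likes.values():
        if item in liked_items:
            counts.update(liked_items - {item})
    return counts.most_common()


# Made-up data: user -> set of liked movies.
likes = {
    "ann": {"Alien", "Blade Runner"},
    "bob": {"Alien", "Blade Runner", "Casablanca"},
    "cy": {"Alien", "Blade Runner"},
    "dee": {"Casablanca"},
}

print(also_liked(likes, "Alien"))  # → [('Blade Runner', 3), ('Casablanca', 1)]
```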

It starts here

- There is a vast (vast) amount of information online.

- Here are some introductory tutorials on ML and DL.

- Machine Learning theory: An introductory primer.

- An introduction to Deep Learning from Perceptrons to Deep Networks.

- Click on the slide images to go to relevant posts!

- Deep Learning Book

- Tensor programming tutorials with Python

- There is a big need for more practitioners to apply these methods in many domains.

Questions?

Some info: https://about.me/stavrosv

Email: stavros@helvia.io

Twitter: @stavros.vassos

Copy of Intro to AΙ

By Khanh Duc Nguyen
