Slides based on the book:

\(F_0 = 0, F_1 = 1\)

\(F_{n+2} = F_{n+1} + F_n\) for \(n = 0,1,2,\ldots\)

\(F_0, F_1, F_2, \ldots \)

#1. Fibonacci Numbers, Quickly

The Fibonacci numbers were first described in Indian mathematics, as early as 200 BC in work by Pingala on enumerating possible patterns of Sanskrit poetry formed from syllables of two lengths.

Hemachandra (c. 1150) is credited with knowledge of the sequence as well, writing that "the sum of the last and the one before the last is the number ... of the next mātrā-vṛtta."

\(\underbrace{\left(\begin{array}{cc}\star & \star \\\star & \star\end{array}\right)}_{M}\left(\begin{array}{c}F_{n+1} \\F_n\end{array}\right) = \left(\begin{array}{c}F_{n+2} \\F_{n+1}\end{array}\right)\)

#1. Fibonacci Numbers, Quickly

\(\underbrace{\left(\begin{array}{cc}{\color{IndianRed}\star} & {\color{IndianRed}\star} \\\star & \star\end{array}\right)}_{M}\left(\begin{array}{c}F_{n+1} \\F_n\end{array}\right) = \left(\begin{array}{c}F_{n+2} \\F_{n+1}\end{array}\right)\)

#1. Fibonacci Numbers, Quickly

\(\underbrace{\left(\begin{array}{cc}{\color{IndianRed}1} & {\color{IndianRed}1} \\\star & \star\end{array}\right)}_{M}\left(\begin{array}{c}F_{n+1} \\F_n\end{array}\right) = \left(\begin{array}{c}F_{n+2} \\F_{n+1}\end{array}\right)\)

#1. Fibonacci Numbers, Quickly

\(\underbrace{\left(\begin{array}{cc}{\color{IndianRed}1} & {\color{IndianRed}1} \\{\color{DodgerBlue}\star} & {\color{DodgerBlue}\star}\end{array}\right)}_{M}\left(\begin{array}{c}F_{n+1} \\F_n\end{array}\right) = \left(\begin{array}{c}F_{n+2} \\F_{n+1}\end{array}\right)\)

#1. Fibonacci Numbers, Quickly

\(\underbrace{\left(\begin{array}{cc}{\color{IndianRed}1} & {\color{IndianRed}1} \\{\color{DodgerBlue}1} & {\color{DodgerBlue}0}\end{array}\right)}_{M}\left(\begin{array}{c}F_{n+1} \\F_n\end{array}\right) = \left(\begin{array}{c}F_{n+2} \\F_{n+1}\end{array}\right)\)

#1. Fibonacci Numbers, Quickly

\(\underbrace{\left(\begin{array}{cc}{\color{IndianRed}1} & {\color{IndianRed}1} \\{\color{DodgerBlue}1} & {\color{DodgerBlue}0}\end{array}\right)}_{M}\left(\begin{array}{c}F_{n+1} \\F_n\end{array}\right) = \left(\begin{array}{c}F_{n+2} \\F_{n+1}\end{array}\right)\)

\(M\left(\begin{array}{c}F_{n+1} \\F_n\end{array}\right)=\left(\begin{array}{c}F_{n+2} \\F_{n+1}\end{array}\right)\)

#1. Fibonacci Numbers, Quickly

\(\underbrace{\left(\begin{array}{cc}{\color{IndianRed}1} & {\color{IndianRed}1} \\{\color{DodgerBlue}1} & {\color{DodgerBlue}0}\end{array}\right)}_{M}\left(\begin{array}{c}F_{n+1} \\F_n\end{array}\right) = \left(\begin{array}{c}F_{n+2} \\F_{n+1}\end{array}\right)\)

\(M\left(\begin{array}{c}1 \\0\end{array}\right)=\left(\begin{array}{c}F_{2} \\F_{1}\end{array}\right)\)

\(M\left(\begin{array}{c}F_{n+1} \\F_n\end{array}\right)=\left(\begin{array}{c}F_{n+2} \\F_{n+1}\end{array}\right)\)

#1. Fibonacci Numbers, Quickly

\(\underbrace{\left(\begin{array}{cc}{\color{IndianRed}1} & {\color{IndianRed}1} \\{\color{DodgerBlue}1} & {\color{DodgerBlue}0}\end{array}\right)}_{M}\left(\begin{array}{c}F_{n+1} \\F_n\end{array}\right) = \left(\begin{array}{c}F_{n+2} \\F_{n+1}\end{array}\right)\)

\(M\left(\begin{array}{c}1 \\0\end{array}\right)=\left(\begin{array}{c}F_{2} \\F_{1}\end{array}\right)\)

#1. Fibonacci Numbers, Quickly

\(\underbrace{\left(\begin{array}{cc}{\color{IndianRed}1} & {\color{IndianRed}1} \\{\color{DodgerBlue}1} & {\color{DodgerBlue}0}\end{array}\right)}_{M}\left(\begin{array}{c}F_{n+1} \\F_n\end{array}\right) = \left(\begin{array}{c}F_{n+2} \\F_{n+1}\end{array}\right)\)

\(M\left(\begin{array}{c}1 \\0\end{array}\right)=\left(\begin{array}{c}F_{2} \\F_{1}\end{array}\right)\)

\(M\left(\begin{array}{c}F_2 \\F_1\end{array}\right)=\left(\begin{array}{c}F_{3} \\F_{2}\end{array}\right)\)

#1. Fibonacci Numbers, Quickly

\(\underbrace{\left(\begin{array}{cc}{\color{IndianRed}1} & {\color{IndianRed}1} \\{\color{DodgerBlue}1} & {\color{DodgerBlue}0}\end{array}\right)}_{M}\left(\begin{array}{c}F_{n+1} \\F_n\end{array}\right) = \left(\begin{array}{c}F_{n+2} \\F_{n+1}\end{array}\right)\)

\(M\left(\begin{array}{c}1 \\0\end{array}\right)=\left(\begin{array}{c}F_{2} \\F_{1}\end{array}\right)\)

\(M{\color{Orange}\left(\begin{array}{c}F_2 \\F_1\end{array}\right)}=\left(\begin{array}{c}F_{3} \\F_{2}\end{array}\right)\)

#1. Fibonacci Numbers, Quickly

\(\underbrace{\left(\begin{array}{cc}{\color{IndianRed}1} & {\color{IndianRed}1} \\{\color{DodgerBlue}1} & {\color{DodgerBlue}0}\end{array}\right)}_{M}\left(\begin{array}{c}F_{n+1} \\F_n\end{array}\right) = \left(\begin{array}{c}F_{n+2} \\F_{n+1}\end{array}\right)\)

\(M\left(\begin{array}{c}1 \\0\end{array}\right)=\left(\begin{array}{c}F_{2} \\F_{1}\end{array}\right)\)

\(M \cdot {\color{Orange}M \left(\begin{array}{c}1 \\0\end{array}\right)}=\left(\begin{array}{c}F_{3} \\F_{2}\end{array}\right)\)

#1. Fibonacci Numbers, Quickly

\(\underbrace{\left(\begin{array}{cc}{\color{IndianRed}1} & {\color{IndianRed}1} \\{\color{DodgerBlue}1} & {\color{DodgerBlue}0}\end{array}\right)}_{M}\left(\begin{array}{c}F_{n+1} \\F_n\end{array}\right) = \left(\begin{array}{c}F_{n+2} \\F_{n+1}\end{array}\right)\)

\(M\left(\begin{array}{c}1 \\0\end{array}\right)=\left(\begin{array}{c}F_{2} \\F_{1}\end{array}\right)\)

\(M^2\left(\begin{array}{c}1 \\0\end{array}\right)=\left(\begin{array}{c}F_{3} \\F_{2}\end{array}\right)\)

#1. Fibonacci Numbers, Quickly

\(\underbrace{\left(\begin{array}{cc}{\color{IndianRed}1} & {\color{IndianRed}1} \\{\color{DodgerBlue}1} & {\color{DodgerBlue}0}\end{array}\right)}_{M}\left(\begin{array}{c}F_{n+1} \\F_n\end{array}\right) = \left(\begin{array}{c}F_{n+2} \\F_{n+1}\end{array}\right)\)

\(M\left(\begin{array}{c}1 \\0\end{array}\right)=\left(\begin{array}{c}F_{2} \\F_{1}\end{array}\right)\)

\(M^n\left(\begin{array}{c}1 \\0\end{array}\right)=\left(\begin{array}{c}F_{n+1} \\F_{n}\end{array}\right)\)

#1. Fibonacci Numbers, Quickly

\(M^n\left(\begin{array}{c}1 \\0\end{array}\right)=\left(\begin{array}{c}F_{n+1} \\F_{n}\end{array}\right)\)

#1. Fibonacci Numbers, Quickly

\(\underbrace{M \longrightarrow M^{2} \longrightarrow M^{4} \longrightarrow M^{8} \longrightarrow M^{16} \longrightarrow M^{32} \longrightarrow \cdots}_{\text{repeated squaring}}\)

\(\mathcal{O}(\log_2 n)\) multiplications of \(2 \times 2\) matrices.

\(M^n = M^{(2^{k_1} + 2^{k_2} + \cdots + 2^{k_\ell})} = M^{2^{k_1}} M^{2^{k_2}} \cdots M^{2^{k_\ell}} \)

\(\mathcal{O}(\log_2 n)\) multiplications of \(2 \times 2\) matrices.
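A minimal sketch of the repeated-squaring idea, assuming plain Python integers (which are already multiple precision). The helper names `mat_mul`, `mat_pow` and `fib` are illustrative and do not come from the slides.

```python
def mat_mul(a, b):
    """Multiply two 2x2 integer matrices given as nested tuples."""
    return (
        (a[0][0] * b[0][0] + a[0][1] * b[1][0], a[0][0] * b[0][1] + a[0][1] * b[1][1]),
        (a[1][0] * b[0][0] + a[1][1] * b[1][0], a[1][0] * b[0][1] + a[1][1] * b[1][1]),
    )


def mat_pow(m, n):
    """Compute m**n by repeated squaring, following the binary digits of n."""
    result = ((1, 0), (0, 1))  # identity matrix
    power = m                  # holds m^(2^k) after k squarings
    while n > 0:
        if n & 1:              # 2^k occurs in the binary expansion of n
            result = mat_mul(result, power)
        power = mat_mul(power, power)
        n >>= 1
    return result


def fib(n):
    """Return F_n as the second coordinate of M^n applied to (1, 0)."""
    m_n = mat_pow(((1, 1), (1, 0)), n)
    return m_n[1][0]


print([fib(k) for k in range(10)])
```

The loop performs at most about \(2\log_2 n\) matrix multiplications: one squaring per binary digit of \(n\), plus one extra product for each digit equal to 1.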

#1. Fibonacci Numbers, Quickly

If we want to compute the Fibonacci numbers by this method, we have to be careful, since the \(F_n\) grow very fast.

#1. Fibonacci Numbers, Quickly

As we will see later, the number of decimal digits of \(F_n\) is of order \(n\).

Thus we must use multiple precision arithmetic, and so the arithmetic operations will be relatively slow.

#2. Fibonacci Numbers, The Formula

Consider the vector space of all infinite sequences:

\((u_0, u_1, u_2, \ldots )\)

...with coordinate-wise addition and multiplication by real numbers.

In this space we define a subspace \(\mathcal{W}\) of all sequences satisfying the equation:

\(u_{n+2} = u_{n+1}+u_n\) for all \(n = 0, 1, ...\)

Easy to verify: \(\mathcal{W}\) is a subspace.

Claim: \(\dim(\mathcal{W}) = 2\).

Observe that \((0,1,1,2,3,\ldots)\) and \((1,0,1,1,2,\ldots)\) form a basis.

Goal. Find a closed-form formula for \(F_n\).

Idea: write \(F = (0,1,1,2,3,5,8,13,21,\ldots) \in \mathcal{W}\) as a linear combination of two sequences in \(\mathcal{W}\) of the form \((\ldots,\alpha^n,\ldots)\) and \((\ldots,\beta^n,\ldots)\).

#2. Fibonacci Numbers, The Formula

\((\tau^0,\tau^1,\tau^2,\tau^3,\cdots) \in \mathcal{W} \)

\(\tau^k = \tau^{k-1}+\tau^{k-2}\)

\(\tau^2 = \tau+1\)

\(\tau_{1,2}=(1 \pm \sqrt{5}) / 2\)

\(\alpha=(1 + \sqrt{5}) / 2\)

\(\beta=(1 - \sqrt{5}) / 2\)

#2. Fibonacci Numbers, The Formula

\(F = c \cdot (\alpha^0,\alpha^1,\alpha^2,\alpha^3,\cdots) + d \cdot (\beta^0,\beta^1,\beta^2,\beta^3,\cdots)\)

\(0 = c + d\)

\(1 = c\alpha + d\beta\)

\(d = 1/(\beta -\alpha)\)

\(c = 1/(\alpha -\beta)\)

#2. Fibonacci Numbers, The Formula

\(F_n = c \cdot \alpha^n + d \cdot \beta^n\)

\(d = 1/(\beta -\alpha)\)

\(c = 1/(\alpha -\beta)\)

\(\alpha=(1 + \sqrt{5}) / 2\)

\(\beta=(1 - \sqrt{5}) / 2\)

\(F_n = \frac{1}{\sqrt{5}} \cdot \left[ \left( \frac{1+\sqrt{5}}{2} \right)^n - \left( \frac{1-\sqrt{5}}{2} \right)^n \right] \)
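As a quick sanity check, the closed form can be compared with the recurrence numerically. This is only a sketch in floating point: the names `fib_closed_form` and `fib_recurrence` are illustrative, and the rounding step is there to absorb floating-point error, so the comparison is only trustworthy for moderate \(n\).

```python
import math

SQRT5 = math.sqrt(5)
ALPHA = (1 + SQRT5) / 2
BETA = (1 - SQRT5) / 2


def fib_closed_form(n):
    """Evaluate (alpha^n - beta^n) / sqrt(5) in floating point, then round."""
    return round((ALPHA ** n - BETA ** n) / SQRT5)


def fib_recurrence(n):
    """Compute F_n directly from F_0 = 0, F_1 = 1, F_{n+2} = F_{n+1} + F_n."""
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a


for n in range(0, 31, 5):
    print(n, fib_closed_form(n), fib_recurrence(n))
```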

#2. Fibonacci Numbers, The Formula

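Exercise 1. Show that \(F_n\) is the integer nearest to \(\frac{1}{\sqrt{5}} \left( \frac{1+\sqrt{5}}{2} \right)^n\), that is:

\(F_n = \left\lfloor \frac{1}{\sqrt{5}} \cdot \left( \frac{1+\sqrt{5}}{2} \right)^n + \frac{1}{2} \right\rfloor \)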

Exercise 2. Use this method to work out a closed form for:

\(y_{n+2}=2 y_{n+1}-y_n\)

(Source: generatingfunctionology, Wilf;

h/t Matthew Drescher and John Azariah for a fun Twitter discussion on this.)

#3. The Clubs of OddTown

There are \(n\) citizens living in Oddtown.

Their main occupation was forming various clubs,

which at some point started threatening the very survival of the city.

⚠️ There could be as many as \(2^n\) distinct clubs!

(Well, \(2^n - 1\) if you would prefer to exclude the empty club.)

In order to limit the number of clubs, the city council decreed the following innocent-looking rules:

(1) Each club has to have an odd number of members.

(2) Every two clubs must have an even number of members in common.

#3. The Clubs of OddTown

There are \(n\) citizens living in Oddtown.

(1) Each club has to have an odd number of members.

(2) Every two clubs must have an even number of members in common.

Under these rules, it is impossible to form more than \(n\) clubs.

Example: \(\{\{1\},\{2\}, \ldots, \{n\}\}\).

(The singleton clubs.)

Example: \(\{\{1,2,3\},\{1,2,4\}, \ldots, \{1,2,n\}\}\).

(Where 1 and 2 are popular.)
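The two example families can be checked mechanically against rules (1) and (2). The sketch below assumes \(n = 8\) purely for illustration; `is_valid_oddtown` is a hypothetical helper name.

```python
from itertools import combinations


def is_valid_oddtown(clubs):
    """Check rule (1), odd sizes, and rule (2), even pairwise intersections."""
    odd_sizes = all(len(c) % 2 == 1 for c in clubs)
    even_overlaps = all(len(a & b) % 2 == 0 for a, b in combinations(clubs, 2))
    return odd_sizes and even_overlaps


n = 8  # illustrative choice, not from the slides
singletons = [frozenset({i}) for i in range(1, n + 1)]
popular = [frozenset({1, 2, k}) for k in range(3, n + 1)]

print(is_valid_oddtown(singletons), len(singletons))
print(is_valid_oddtown(popular), len(popular))
```

The singleton family reaches the bound of \(n\) clubs, while the second family has only \(n - 2\) clubs.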

#3. The Clubs of OddTown

Idea: subsets of \([n]\) \(\longrightarrow\) vectors in \(n\)-dimensional space

sets that satisfy (1) & (2) \(\longrightarrow\) linearly independent vectors

#3. The Clubs of OddTown

\((1,0,0,0,0,1,1,1,1,0) \in \mathbb{F}^n_2\)

Another example:

\(\{{\color{IndianRed}1},{\color{DodgerBlue}3},{\color{SeaGreen}5},{\color{Tomato}6},{\color{Purple}7}\} \subseteq [10] \longrightarrow ({\color{IndianRed}1},0,{\color{DodgerBlue}1},0,{\color{SeaGreen}1},{\color{Tomato}1},{\color{Purple}1},0,0,0)\)

#3. The Clubs of OddTown

There are \(n\) citizens living in Oddtown.

(1) Each club has to have an odd number of members.

(2) Every two clubs must have an even number of members in common.

Under these rules, it is impossible to form more than \(n\) clubs.

Claim. If \(\{S_1, \ldots, S_t\}\) forms a valid set of clubs over \([n]\),

then \(\{v_1, \ldots, v_t\}\) is a linearly independent collection of vectors in \(\mathbb{F}_2^n\), where \(v_i := f(S_i)\) is the characteristic vector of \(S_i\).

\(\alpha_1 v_1 + \cdots + \alpha_i v_i + \cdots + \alpha_j v_j + \cdots + \alpha_t v_t = 0\)

#3. The Clubs of OddTown

There are \(n\) citizens living in Oddtown.

(1) Each club has to have an odd number of members.

(2) Every two clubs must have an even number of members in common.

Under these rules, it is impossible to form more than \(n\) clubs.

Claim. If \(\{S_1, \ldots, S_t\}\) forms a valid set of clubs over \([n]\),

then \(\{v_1, \ldots, v_t\}\) is a linearly independent collection of vectors in \(\mathbb{F}_2^n\), where \(v_i := f(S_i)\).

\(\alpha_1 (v_1 \cdot v_i) + \cdots + \alpha_i ({\color{IndianRed}v_i \cdot v_i}) + \cdots + \alpha_j (v_j \cdot v_i) + \cdots + \alpha_t (v_t \cdot v_i) = 0\)

#3. The Clubs of OddTown

There are \(n\) citizens living in Oddtown.

(1) Each club has to have an odd number of members.

(2) Every two clubs must have an even number of members in common.

Under these rules, it is impossible to form more than \(n\) clubs.

\(\{{\color{IndianRed}1},{\color{DodgerBlue}3},{\color{SeaGreen}5},{\color{Tomato}6},{\color{Purple}7}\} \subseteq [10] \longrightarrow ({\color{IndianRed}1},0,{\color{DodgerBlue}1},0,{\color{SeaGreen}1},{\color{Tomato}1},{\color{Purple}1},0,0,0)\)

\( ({\color{IndianRed}1},0,{\color{DodgerBlue}1},0,{\color{SeaGreen}1},{\color{Tomato}1},{\color{Purple}1},0,0,0) \cdot ({\color{IndianRed}1},0,{\color{DodgerBlue}1},0,{\color{SeaGreen}1},{\color{Tomato}1},{\color{Purple}1},0,0,0) = 5 \equiv 1 \pmod 2\)

#3. The Clubs of OddTown

There are \(n\) citizens living in Oddtown.

(1) Each club has to have an odd number of members.

(2) Every two clubs must have an even number of members in common.

Under these rules, it is impossible to form more than \(n\) clubs.

Claim. If \(\{S_1, \ldots, S_t\}\) forms a valid set of clubs over \([n]\),

then \(\{v_1, \ldots, v_t\}\) is a linearly independent collection of vectors in \(\mathbb{F}_2^n\), where \(v_i := f(S_i)\).

\(\alpha_1 (v_1 \cdot v_i) + \cdots + \alpha_i ({\color{IndianRed}v_i \cdot v_i}) + \cdots + \alpha_j ({\color{DodgerBlue}v_j \cdot v_i}) + \cdots + \alpha_t (v_t \cdot v_i) = 0\)

\({\color{IndianRed}|S_i| \mod 2}\)

#3. The Clubs of OddTown

There are \(n\) citizens living in Oddtown.

(1) Each club has to have an odd number of members.

(2) Every two clubs must have an even number of members in common.

Under these rules, it is impossible to form more than \(n\) clubs.

\(\{{\color{IndianRed}1},{\color{DodgerBlue}3},{\color{Tomato}5},{\color{Tomato}6},{\color{Tomato}7}\} \subseteq [10] \longrightarrow ({\color{IndianRed}1},0,{\color{DodgerBlue}1},0,{\color{Tomato}1},{\color{Tomato}1},{\color{Tomato}1},0,0,0)\)

\(\{{\color{IndianRed}1},{\color{DodgerBlue}3},{\color{SeaGreen}4},{\color{SeaGreen}8},{\color{SeaGreen}9}\} \subseteq [10] \longrightarrow ({\color{IndianRed}1},0,{\color{DodgerBlue}1},{\color{SeaGreen}1},0,0,0,{\color{SeaGreen}1},{\color{SeaGreen}1},0)\)

\(({\color{IndianRed}1},0,{\color{DodgerBlue}1},0,0,0,0,0,0,0)\)

#3. The Clubs of OddTown

There are \(n\) citizens living in Oddtown.

(1) Each club has to have an odd number of members.

(2) Every two clubs must have an even number of members in common.

Under these rules, it is impossible to form more than \(n\) clubs.

Claim. If \(\{S_1, \ldots, S_t\}\) forms a valid set of clubs over \([n]\),

then \(\{v_1, \ldots, v_t\}\) is a linearly independent collection of vectors in \(\mathbb{F}_2^n\), where \(v_i := f(S_i)\).

\(\alpha_1 (v_1 \cdot v_i) + \cdots + \alpha_i ({\color{IndianRed}v_i \cdot v_i}) + \cdots + \alpha_j ({\color{DodgerBlue}v_j \cdot v_i}) + \cdots + \alpha_t (v_t \cdot v_i) = 0\)

\({\color{IndianRed}|S_i| \mod 2}\)

\({\color{DodgerBlue}|S_i \cap S_j| \mod 2}\)

#3. The Clubs of OddTown

There are \(n\) citizens living in Oddtown.

(1) Each club has to have an odd number of members.

(2) Every two clubs must have an even number of members in common.

Under these rules, it is impossible to form more than \(n\) clubs.

Claim. If \(\{S_1, \ldots, S_t\}\) forms a valid set of clubs over \([n]\),

then \(\{v_1, \ldots, v_t\}\) is a linearly independent collection of vectors in \(\mathbb{F}_2^n\), where \(v_i := f(S_i)\).

\(\alpha_1 (v_1 \cdot v_i) + \cdots + \alpha_i ({\color{IndianRed}v_i \cdot v_i}) + \cdots + \alpha_j ({\color{DodgerBlue}v_j \cdot v_i}) + \cdots + \alpha_t (v_t \cdot v_i) = 0\)

\({\color{IndianRed}v_i \cdot v_i = 1}\) by rule (1)

\({\color{DodgerBlue}v_j \cdot v_i = 0}\) for \(j \neq i\) by rule (2)

#3. The Clubs of OddTown

There are \(n\) citizens living in Oddtown.

(1) Each club has to have an odd number of members.

(2) Every two clubs must have an even number of members in common.

Under these rules, it is impossible to form more than \(n\) clubs.

Claim. If \(\{S_1, \ldots, S_t\}\) forms a valid set of clubs over \([n]\),

then \(\{v_1, \ldots, v_t\}\) is a linearly independent collection of vectors in \(\mathbb{F}_2^n\), where \(v_i := f(S_i)\).

\({\color{Silver}\alpha_1 (v_1 \cdot v_i) + \cdots +} \alpha_i ({\color{IndianRed}v_i \cdot v_i}){\color{Silver} + \cdots + \alpha_j (v_j \cdot v_i) + \cdots + \alpha_t (v_t \cdot v_i)} = 0\)

\(\implies \alpha_i = 0\), \(\forall i \in [t]\)
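The step above relies on the dot products \(v_j \cdot v_i\) over \(\mathbb{F}_2\) forming the identity matrix. A small sketch that computes this table for one valid family follows; the family and the helper names `char_vector` and `dot_mod2` are chosen here for illustration only.

```python
def char_vector(club, n):
    """Characteristic vector of a club over the citizens 1..n."""
    return [1 if i in club else 0 for i in range(1, n + 1)]


def dot_mod2(u, v):
    """Dot product over F_2, which equals |S ∩ T| mod 2 for characteristic vectors."""
    return sum(a * b for a, b in zip(u, v)) % 2


n = 6  # illustrative choice
clubs = [{1, 2, 3}, {1, 2, 4}, {1, 2, 5}, {1, 2, 6}]
vectors = [char_vector(c, n) for c in clubs]

# Table of all dot products v_i . v_j over F_2.
gram = [[dot_mod2(u, v) for v in vectors] for u in vectors]
for row in gram:
    print(row)
```

Because the table is the identity matrix, taking the dot product of any dependency \(\alpha_1 v_1 + \cdots + \alpha_t v_t = 0\) with \(v_i\) leaves only \(\alpha_i\).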

#3. The Clubs of OddTown

What about Eventown?

#3. The Clubs of OddTown

There are \(n\) citizens living in Eventown.

(1) Each club has to have an even number of members.

(2) Every two clubs must have an even number of members in common.

Under these rules, it is impossible to form more than \(2^{\lfloor \frac{n}{2} \rfloor}\) clubs.

Claim. If \(\{S_1, \ldots, S_t\}\) is a maximal and valid set of clubs over \([n]\),

then \(\{v_1, \ldots, v_t\}\) is a totally isotropic subspace of dimension at most \(\lfloor \frac{n}{2} \rfloor\).

Note that \(v_i \cdot v_j = 0\) for all \(1 \leqslant i,j \leqslant t\)

Let \(X := \{v_1, \ldots, v_t\}\).

In other words, \(X \perp X\), implying that \(X \subseteq X^{\perp}\).

If \(v\) is in span\((X)\), then \(v \perp X\) and \(v \cdot v = 0\), so \(v\) is itself the vector of a valid club: therefore \(X\) is closed under addition

(since \(\{S_1, \ldots, S_t\}\) is maximal).

Therefore, \(X\) is a subspace.

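Since \(X \subseteq X^{\perp}\), we have \(\dim(X) \leqslant \dim(X^\perp)\), and \(\dim(X) + \dim(X^\perp) = n \implies \dim(X) \leqslant \lfloor \frac{n}{2} \rfloor\). A subspace of \(\mathbb{F}_2^n\) of dimension \(k\) has \(2^k\) elements, so \(t \leqslant 2^{\lfloor \frac{n}{2} \rfloor}\).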
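The bound is attained. One standard construction, which is not taken from the slides, pairs up the citizens and lets a club be any union of pairs (the empty club included). A minimal sketch, with `eventown_pairs` as a hypothetical helper name:

```python
from itertools import combinations


def eventown_pairs(n):
    """All unions of the pairs {1,2}, {3,4}, ...; a leftover citizen joins no club."""
    pairs = [frozenset({2 * k + 1, 2 * k + 2}) for k in range(n // 2)]
    clubs = []
    for r in range(len(pairs) + 1):
        for chosen in combinations(pairs, r):
            clubs.append(frozenset().union(*chosen))
    return clubs


n = 7  # illustrative choice
clubs = eventown_pairs(n)
sizes_even = all(len(c) % 2 == 0 for c in clubs)
overlaps_even = all(len(a & b) % 2 == 0 for a, b in combinations(clubs, 2))
print(len(clubs), 2 ** (n // 2), sizes_even, overlaps_even)
```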

#4. Same-Size Intersections

Generalized Fisher inequality.

If \(C_1, C_2, \ldots, C_m\) are distinct and nonempty subsets of an \(n\)-element set such that all the intersections \(C_i \cap C_j\), \(i \neq j\), have the same size (say \(t\)), then \(m \leqslant n\).

#4. Same-Size Intersections

Given: \(C_1, C_2, \ldots, C_m \in 2^{[n]}\)

where \(|C_i \cap C_j| = t\) for all \(1\leqslant i < j \leqslant m\)

To Prove: \(m \leqslant n\).

Case 1. \(|C_i| = t\) for some \(i \in [m]\).

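Every other set contains \(C_i\), and the other sets are pairwise disjoint outside \(C_i\), so there are at most \(n-t\) other sets.

Total # of sets \(\leqslant n-t + 1 \leqslant n\) (note that \(t \geqslant 1\), since \(C_i\) is nonempty).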

#4. Same-Size Intersections

Given: \(C_1, C_2, \ldots, C_m \in 2^{[n]}\)

where \(|C_i \cap C_j| = t\) for all \(1\leqslant i < j \leqslant m\)

To Prove: \(m \leqslant n\).

Case 2. \(|C_i| > t\) for all \(i \in [m]\).

Let \(A\) be the \(m \times n\) matrix with entries:

\(a_{i j}= \begin{cases}1 & \text { if } j \in C_i, \text { and } \\ 0 & \text { otherwise }\end{cases}\)

#4. Same-Size Intersections

Given: \(C_1, C_2, \ldots, C_m \in 2^{[n]}\)

where \(|C_i \cap C_j| = t\) for all \(1\leqslant i < j \leqslant m\)

To Prove: \(m \leqslant n\).

Case 2. \(|C_i| > t\) for all \(i \in [m]\).

\(\{{\color{IndianRed}1,2,5}\},\{{\color{DodgerBlue}2,3}\},\{{\color{DarkSeaGreen}3,4,5}\}\)

\(\left(\begin{array}{ccccc}{\color{IndianRed}1} & {\color{IndianRed}1} & 0 & 0 & {\color{IndianRed}1} \\0 & {\color{DodgerBlue}1} & {\color{DodgerBlue}1} & 0 & 0 \\ 0 & 0 & {\color{DarkSeaGreen}1} & {\color{DarkSeaGreen}1} & {\color{DarkSeaGreen}1} \end{array}\right)\)

#4. Same-Size Intersections

Given: \(C_1, C_2, \ldots, C_m \in 2^{[n]}\)

where \(|C_i \cap C_j| = t\) for all \(1\leqslant i < j \leqslant m\)

To Prove: \(m \leqslant n\).

Case 2. \(|C_i| > t\) for all \(i \in [m]\).

\(B := AA^T\)

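(Figure: the entry of \(B\) in row \(i\) and column \(j\) is the dot product of row \(i\) of \(A\), the characteristic vector of \(C_i\), with row \(j\) of \(A\), the characteristic vector of \(C_j\).)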

#4. Same-Size Intersections

(Figure: for \(i \neq j\), the entry of \(B\) in row \(i\) and column \(j\) is \(|C_i \cap C_j| = t\); the diagonal entry in row \(i\) is \(d_i := |C_i|\).)

#4. Same-Size Intersections

Given: \(C_1, C_2, \ldots, C_m \in 2^{[n]}\)

where \(|C_i \cap C_j| = t\) for all \(1\leqslant i < j \leqslant m\)

To Prove: \(m \leqslant n\).

Case 2. \(|C_i| > t\) for all \(i \in [m]\).

\(B := AA^T\)

\(B=\left(\begin{array}{ccccc}d_1 & t & t & \ldots & t \\t & d_2 & t & \ldots & t \\\vdots & \vdots & \vdots & \vdots & \vdots \\t & t & t & \ldots & d_m\end{array}\right)\)

Recall that \(A\) is an \(m \times n\) matrix, so: rank\((A) \leqslant n\)

#4. Same-Size Intersections

Given: \(C_1, C_2, \ldots, C_m \in 2^{[n]}\)

where \(|C_i \cap C_j| = t\) for all \(1\leqslant i < j \leqslant m\)

To Prove: \(m \leqslant n\).

Case 2. \(|C_i| > t\) for all \(i \in [m]\).

\(B := AA^T\)

\(B=\left(\begin{array}{ccccc}d_1 & t & t & \ldots & t \\t & d_2 & t & \ldots & t \\\vdots & \vdots & \vdots & \vdots & \vdots \\t & t & t & \ldots & d_m\end{array}\right)\)

\(m = \) rank\((B) \leqslant \) rank\((A) \leqslant n\)
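For a concrete instance, take the sets \(\{1,2,5\}, \{2,3\}, \{3,4,5\}\) with \(t = 1\). The sketch below builds \(A\) and \(B = AA^T\) and computes ranks exactly over the rationals; the helper names are illustrative and the Gaussian elimination is a plain textbook version, not an optimized routine.

```python
from fractions import Fraction


def incidence_matrix(sets, n):
    """Row i is the characteristic vector of sets[i] over the ground set 1..n."""
    return [[1 if j in s else 0 for j in range(1, n + 1)] for s in sets]


def gram_matrix(a):
    """Return B = A A^T, whose (i, j) entry is the dot product of rows i and j."""
    return [[sum(x * y for x, y in zip(r1, r2)) for r2 in a] for r1 in a]


def rank(matrix):
    """Rank over the rationals via Gaussian elimination with exact fractions."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    r = 0
    for col in range(len(rows[0])):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][col] != 0:
                factor = rows[i][col] / rows[r][col]
                rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r


sets = [{1, 2, 5}, {2, 3}, {3, 4, 5}]
A = incidence_matrix(sets, 5)
B = gram_matrix(A)
for row in B:
    print(row)
print(rank(B), rank(A))
```

Here \(B\) has the set sizes on the diagonal and \(t = 1\) elsewhere, and both ranks equal \(m = 3 \leqslant n = 5\).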

#4. Same-Size Intersections

\(B=\left(\begin{array}{ccccc}d_1 & t & t & \ldots & t \\t & d_2 & t & \ldots & t \\\vdots & \vdots & \vdots & \vdots & \vdots \\t & t & t & \ldots & d_m\end{array}\right)\)

Suffices to show: \(\mathbf{x}^T B \mathbf{x}>0 \text { for all nonzero } \mathbf{x} \in \mathbb{R}^m \text {. }\)

because once we have the above,

if \(B\mathbf{x} = \mathbf{0}\), then \(\mathbf{x}^TB\mathbf{x} = \mathbf{x}^T\mathbf{0} = 0\), hence \(\mathbf{x} = \mathbf{0}\). So \(B\) is nonsingular, i.e. rank\((B) = m\).

#4. Same-Size Intersections

\(B=\left(\begin{array}{ccccc}d_1 & t & t & \ldots & t \\t & d_2 & t & \ldots & t \\\vdots & \vdots & \vdots & \vdots & \vdots \\t & t & t & \ldots & d_m\end{array}\right)\)

Suffices to show: \(\mathbf{x}^T B \mathbf{x}>0 \text { for all nonzero } \mathbf{x} \in \mathbb{R}^m \text {. }\)

We can write \(B=t J_m+D\), where \(J_m\) is the \(m \times m\) all 1's matrix and

\(D\) is the diagonal matrix with \(d_1-t, d_2-t, \ldots, d_m-t\) on the diagonal.

#4. Same-Size Intersections

\(B=\left(\begin{array}{ccccc}d_1 & t & t & \ldots & t \\t & d_2 & t & \ldots & t \\\vdots & \vdots & \vdots & \vdots & \vdots \\t & t & t & \ldots & d_m\end{array}\right)\)

\(\left(\begin{array}{cccc}d_1 & t & t & t \\t & d_2 & t & t \\t & t & d_3 & t \\t & t & t & d_4\end{array}\right) = \left(\begin{array}{cccc}0 & t & t & t \\t & 0 & t & t \\t & t & 0 & t \\t & t & t & 0 \end{array}\right) + \left(\begin{array}{cccc}d_1 & 0 & 0 & 0 \\0 & d_2 & 0 & 0 \\0 & 0 & d_3 & 0 \\0 & 0 & 0 & d_4\end{array}\right)\)

#4. Same-Size Intersections

\(B=\left(\begin{array}{ccccc}d_1 & t & t & \ldots & t \\t & d_2 & t & \ldots & t \\\vdots & \vdots & \vdots & \vdots & \vdots \\t & t & t & \ldots & d_m\end{array}\right)\)

\(\left(\begin{array}{cccc}d_1 & t & t & t \\t & d_2 & t & t \\t & t & d_3 & t \\t & t & t & d_4\end{array}\right) = \left(\begin{array}{cccc}t & t & t & t \\t & t & t & t \\t & t & t & t \\t & t & t & t \end{array}\right) + \left(\begin{array}{cccc}(d_1-t) & 0 & 0 & 0 \\0 & (d_2-t) & 0 & 0 \\0 & 0 & (d_3-t) & 0 \\0 & 0 & 0 & (d_4-t)\end{array}\right)\)

\(\mathbf{x}^T B \mathbf{x}=\mathbf{x}^T\left(t J_m+D\right) \mathbf{x}=t \mathbf{x}^T J_m \mathbf{x}+\mathbf{x}^T D \mathbf{x}\)

\({\color{IndianRed}\mathbf{x}^T J_m \mathbf{x} = \sum_{i, j=1}^m x_i x_j=\left(\sum_{i=1}^m x_i\right)^2 \geqslant 0}\)

\({\color{Olive}\mathbf{x}^T D \mathbf{x}=\sum_{i=1}^m\left(d_i-t\right) x_i^2>0}\), since \(d_i > t\) for all \(i\) and \(\mathbf{x} \neq \mathbf{0}\).

#4. Same-Size Intersections

\(B=\left(\begin{array}{ccccc}d_1 & t & t & \ldots & t \\t & d_2 & t & \ldots & t \\\vdots & \vdots & \vdots & \vdots & \vdots \\t & t & t & \ldots & d_m\end{array}\right)\)

Suffices to show: \(\mathbf{x}^T B \mathbf{x}>0 \text { for all nonzero } \mathbf{x} \in \mathbb{R}^m \text {. }\)

We can write \(B=t J_m+D\), where \(J_m\) is the all 1's matrix and

\(D\) is the diagonal matrix with \(d_1-t, d_2-t, \ldots, d_m-t\) on the diagonal.

\(\mathbf{x}^T B \mathbf{x}=\mathbf{x}^T\left(t J_m+D\right) \mathbf{x}=t \mathbf{x}^T J_m \mathbf{x}+\mathbf{x}^T D \mathbf{x}\)

\(> 0\)
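The split of the quadratic form into a square term and a diagonal term can be checked on a small example. The sketch below is illustrative only: it assumes that \(d_i\) is the size of the \(i\)-th set and \(t\) the common intersection size, and the family `sets`, the helpers `quad_form` and `split_form`, and the vector `x` are made up for the check.

```python
from itertools import combinations

# Hypothetical family: every pair of distinct sets meets in exactly t = 1 element.
sets = [{0, 1, 2}, {0, 3, 4}, {0, 5, 6}, {1, 3, 5}]
t = 1
assert all(len(a & b) == t for a, b in combinations(sets, 2))

m = len(sets)
# B[i][j] = size of the intersection of set i and set j,
# so the diagonal holds d_i and every off-diagonal entry is t.
B = [[len(sets[i] & sets[j]) for j in range(m)] for i in range(m)]
d = [len(s) for s in sets]

def quad_form(matrix, x):
    """Return x^T * matrix * x for a square list-of-lists matrix."""
    size = len(x)
    return sum(x[i] * matrix[i][j] * x[j] for i in range(size) for j in range(size))

def split_form(x):
    """Return t * (sum of x_i)^2 + sum of (d_i - t) * x_i^2."""
    return t * sum(x) ** 2 + sum((d[i] - t) * x[i] ** 2 for i in range(m))

x = [2, -1, 0, 3]          # arbitrary nonzero test vector
lhs = quad_form(B, x)      # direct evaluation of x^T B x
rhs = split_form(x)        # evaluation through the decomposition B = t J_m + D
print(lhs, rhs)
```

Both values agree, and the result is positive because every \(d_i - t\) is positive in this family.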

#4. Same-Size Intersections

Let \(L\) be a set of \(s\) nonnegative integers and

\(\mathscr{F}\) a family of subsets of an \(n\)-element set \(X\).

Suppose that for any two distinct members \(A, B \in \mathscr{F}\) we have \(|A \cap B| \in L\).

Assuming in addition that \(\mathscr{F}\) is uniform,

i.e. each member of \(\mathscr{F}\) has the same cardinality,

a celebrated theorem of D. K. Ray-Chaudhuri and R. M. Wilson asserts that:

\(|\mathscr{F}| \leqq\left(\begin{array}{l}n \\ s\end{array}\right)\).

#4. Same-Size Intersections

Let \(L\) be a set of \(s\) nonnegative integers and

\(\mathscr{F}\) a family of subsets of an \(n\)-element set \(X\).

Suppose that for any two distinct members \(A, B \in \mathscr{F}\) we have \(|A \cap B| \in L\).

P. Frankl and R. M. Wilson proved that

without the uniformity assumption, we have:

\(|\mathscr{F}| \leqq\left(\begin{array}{l}n \\s\end{array}\right)+\left(\begin{array}{c}n \\s-1\end{array}\right)+\ldots+\left(\begin{array}{l}n \\0\end{array}\right)\)
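A small family shows that the non-uniform bound can be attained. The sketch below is only an illustration: the choice \(n = 5\), \(s = 2\) and the helper names `frankl_wilson_bound` and `intersection_sizes` are assumptions. It takes all subsets with at most \(s\) elements, whose pairwise intersections have sizes \(0, \ldots, s-1\).

```python
from itertools import combinations
from math import comb

def frankl_wilson_bound(n, s):
    """Return C(n, s) + C(n, s-1) + ... + C(n, 0)."""
    return sum(comb(n, i) for i in range(s + 1))

def intersection_sizes(family):
    """Return the set of intersection sizes over all pairs of distinct members."""
    return {len(a & b) for a, b in combinations(family, 2)}

n, s = 5, 2
# Hypothetical example family: all subsets of {0, ..., n-1} with at most s elements.
family = [frozenset(c) for k in range(s + 1) for c in combinations(range(n), k)]

L = intersection_sizes(family)
bound = frankl_wilson_bound(n, s)
print(sorted(L), len(family), bound)
```

Here \(L\) has exactly \(s\) elements and the family size equals the bound, so no larger family with these intersection sizes exists for this \(n\) and \(s\).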

#5. Error Correcting Codes

1 0 1 1 0 0 1 0 0 1 1 0 1 1 1 0

1 0 1 1 0 0 1 0 0 1 1 0 1 1 0 1

Noisy Channel

#5. Error Correcting Codes

Goal: Detect and correct as many errors as possible.

1 0 1 1 0 0 1 0 0 1 1 0 1 1 1 0

1 0 1 1 0 0 1 0 0 1 1 0 1 1 1 0

1 0 1 1 0 0 1 0 0 1 1 0 1 1 1 0

Tools: redundancy/additional storage.

Hope: minimize tool usage: it is expensive!

A baby step: correct any one bit flip.

0.33... = message | 0.66... = redundancy
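Sending the message three times and taking a majority vote is one way to read this slide. The sketch below assumes that reading. The function names and the flipped position are hypothetical.

```python
def encode_repetition(bits, copies=3):
    """Send the whole message `copies` times in a row."""
    return bits * copies

def decode_repetition(received, copies=3):
    """Recover each message bit by majority vote across the copies."""
    k = len(received) // copies
    decoded = []
    for i in range(k):
        votes = sum(received[i + c * k] for c in range(copies))
        decoded.append(1 if 2 * votes > copies else 0)
    return decoded

message = [1, 0, 1, 1, 0, 0, 1, 0, 0, 1, 1, 0, 1, 1, 1, 0]
sent = encode_repetition(message)
received = sent.copy()
received[20] ^= 1                    # the channel flips one bit (position chosen arbitrarily)
recovered = decode_repetition(received)
rate = len(message) / len(sent)      # fraction of transmitted bits that carry the message
print(recovered == message, rate)
```

Any single flip is outvoted by the two intact copies, but only a third of the transmitted bits carry the message.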

#5. Error Correcting Codes

\(\mathbb{F}^k_2\)

\(\mathbb{F}^n_2\)

#5. Error Correcting Codes

Goal: Detect and correct as many errors as possible.

1 0 1 1 0 0 1 0 0 1 0 0 1 1 1 0

Tools: redundancy/additional storage.

Hope: minimize tool usage: it is expensive!

A baby step: correct any one bit flip.

1 1 0 1

0 0 0 1

1

0.64 = message | 0.36 = redundancy
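One reading of the 0.64 figure is a 4 × 4 grid of 16 message bits with one parity bit per row, one per column, and one corner bit, 25 bits in total. The sketch below assumes that layout, which the slide does not spell out. It only locates a flip among the message bits, and all names are hypothetical.

```python
def encode_grid(bits, side=4):
    """Split the message into rows and compute row, column, and corner parity bits."""
    rows = [bits[r * side:(r + 1) * side] for r in range(side)]
    row_par = [sum(row) % 2 for row in rows]
    col_par = [sum(rows[r][c] for r in range(side)) % 2 for c in range(side)]
    corner = sum(row_par) % 2
    return rows, row_par, col_par, corner

def locate_flip(rows, row_par, col_par):
    """Return (row, column) of a single flipped message bit, or None if no unique spot is found."""
    side = len(rows)
    bad_rows = [r for r in range(side) if sum(rows[r]) % 2 != row_par[r]]
    bad_cols = [c for c in range(side)
                if sum(rows[r][c] for r in range(side)) % 2 != col_par[c]]
    if len(bad_rows) == 1 and len(bad_cols) == 1:
        return bad_rows[0], bad_cols[0]
    return None

message = [1, 0, 1, 1, 0, 0, 1, 0, 0, 1, 0, 0, 1, 1, 1, 0]
rows, row_par, col_par, corner = encode_grid(message)
rows[2][1] ^= 1                                  # flip one message bit in transit
flip = locate_flip(rows, row_par, col_par)       # the failing row and column cross at the error
rate = len(message) / (len(message) + len(row_par) + len(col_par) + 1)
print(flip, rate)
```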

#5. Error Correcting Codes

Goal: Detect and correct as many errors as possible.

Tools: redundancy/additional storage.

Hope: minimize tool usage: it is expensive!

A baby step: correct any one bit flip.

X A B 1 C 0 1 1 D 0 0 1 0 0 1 1

1 0 1 1 0 0 1 0 0 1 1

#5. Error Correcting Codes

Goal: Detect and correct as many errors as possible.

Tools: redundancy/additional storage.

Hope: minimize tool usage: it is expensive!

A baby step: correct any one bit flip.

X 0 B 1 C 0 1 1 D 0 0 1 0 0 1 1

1 0 1 1 0 0 1 0 0 1 1

#5. Error Correcting Codes

Goal: Detect and correct as many errors as possible.

Tools: redundancy/additional storage.

Hope: minimize tool usage: it is expensive!

A baby step: correct any one bit flip.

X 0 0 1 C 0 1 1 D 0 0 1 0 0 1 1

1 0 1 1 0 0 1 0 0 1 1

#5. Error Correcting Codes

Goal: Detect and correct as many errors as possible.

Tools: redundancy/additional storage.

Hope: minimize tool usage: it is expensive!

A baby step: correct any one bit flip.

X 0 0 1 0 0 1 1 D 0 0 1 0 0 1 1

1 0 1 1 0 0 1 0 0 1 1

#5. Error Correcting Codes

Goal: Detect and correct as many errors as possible.

Tools: redundancy/additional storage.

Hope: minimize tool usage: it is expensive!

A baby step: correct any one bit flip.

X 0 0 1

0 0 1 1

D 0 0 1

0 0 1 1

1 0 1 1 0 0 1 0 0 1 1

#5. Error Correcting Codes

Goal: Detect and correct as many errors as possible.

Tools: redundancy/additional storage.

Hope: minimize tool usage: it is expensive!

A baby step: correct any one bit flip.

X 0 0 1

0 0 1 1

1 0 0 1

0 0 1 1

1 0 1 1 0 0 1 0 0 1 1

#5. Error Correcting Codes

Goal: Detect and correct as many errors as possible.

Tools: redundancy/additional storage.

Hope: minimize tool usage: it is expensive!

A baby step: correct any one bit flip.

X 0 0 1 0 0 1 1 1 0 0 1 0 0 1 1

1 0 1 1 0 0 1 0 0 1 1

#5. Error Correcting Codes

Goal: Detect and correct as many errors as possible.

1 0 1 1 0 0 1 0 0 1 1

Tools: redundancy/additional storage.

Hope: minimize tool usage: it is expensive!

A baby step: correct any one bit flip.

X 0 0 1 0 0 1 1 1 0 0 1 0 0 1 1
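The filling-in of A, B, C and D on the preceding slides can be sketched in code. This is a minimal sketch, assuming the 11 message bits sit at the positions that are not powers of two and each parity bit at position 1, 2, 4, 8 covers the positions whose binary label contains it. The function name is hypothetical, and position 0 (the X bit) is left unset.

```python
def hamming_encode(message):
    """Place 11 message bits at positions 3, 5, 6, 7, 9, ..., 15 and
    set the parity bits at positions 1, 2, 4, 8. Position 0 (X) stays None."""
    assert len(message) == 11
    word = [None] + [0] * 15
    data_positions = [p for p in range(1, 16) if p & (p - 1)]  # skip powers of two
    for p, bit in zip(data_positions, message):
        word[p] = bit
    for k in (1, 2, 4, 8):
        # Parity bit k makes the XOR over all positions containing k equal to 0.
        word[k] = sum(word[p] for p in range(1, 16) if p & k and p != k) % 2
    return word

word = hamming_encode([1, 0, 1, 1, 0, 0, 1, 0, 0, 1, 1])
print(word[1:])
```

For the message on the slide this reproduces the bits shown after the X.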

#5. Error Correcting Codes

Goal: Detect and correct as many errors as possible.

Tools: redundancy/additional storage.

Hope: minimize tool usage: it is expensive!

A baby step: correct any one bit flip.

X 1 1 1 0 1 0 0 1 1 1 1 1 1 1 0

#5. Error Correcting Codes

Goal: Detect and correct as many errors as possible.

Tools: redundancy/additional storage.

Hope: minimize tool usage: it is expensive!

A baby step: correct any one bit flip.

X 1 1 1 0 1 0 0 1 1 1 1 1 1 1 0

1 1 0 0 1 0 1 1 1 1 0

#5. Error Correcting Codes

Goal: Detect and correct as many errors as possible.

Tools: redundancy/additional storage.

Hope: minimize tool usage: it is expensive!

A baby step: correct any one bit flip.

X 1 1 1 0 1 0 0 1 1 1 1 1 1 1 0

1 1 0 0 1 0 1 1 1 1 0

Exercise: Use the X-bit to detect if there is more than one error.
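Locating the flipped bit in the received word can be sketched as follows. The sketch uses an equivalent shortcut that the slides do not state explicitly: XOR-ing the positions that hold a 1 yields the four parity results at once, read as a binary number. The function names are hypothetical, and the X bit is ignored so the exercise stays open.

```python
from functools import reduce

def syndrome(word):
    """XOR together the positions 1..15 that hold a 1; 0 means every parity check passes."""
    return reduce(lambda acc, p: acc ^ p, (p for p in range(1, 16) if word[p]), 0)

def correct_and_extract(word):
    """Fix at most one flipped bit among positions 1..15 and return (error_position, message)."""
    fixed = list(word)
    pos = syndrome(fixed)
    if pos:
        fixed[pos] ^= 1
    message = [fixed[p] for p in range(1, 16) if p & (p - 1)]  # non-powers of two
    return pos, message

# Received word from the slide; position 0 (X) is left as None and not used here.
received = [None, 1, 1, 1, 0, 1, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0]
pos, message = correct_and_extract(received)
print(pos, message)
```

The syndrome points at position 10, and flipping that bit back gives the 11-bit message shown on the slide.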

#5. Error Correcting Codes

0000 0001 0010 0011 0100 0101 0110 0111 1000 1001 1010 1011 1100 1101 1110 1111

0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15

#5. Error Correcting Codes

\(M_{16 \times 11} \cdot [1,1,0,0,1,0,1,1,1,1,0]^T = w\)

\(\begin{bmatrix} \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \end{bmatrix}\)

#5. Error Correcting Codes

\(M_{16 \times 11} \cdot [{\color{IndianRed}1},{\color{IndianRed}1},0,{\color{IndianRed}0},{\color{IndianRed}1},0,{\color{IndianRed}1},1,{\color{IndianRed}1},1,{\color{IndianRed}0}]^T = w\)

\(\begin{bmatrix} \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \end{bmatrix}\)

#5. Error Correcting Codes

\(M_{16 \times 11} \cdot [{\color{IndianRed}1},{\color{IndianRed}1},0,{\color{IndianRed}0},{\color{IndianRed}1},0,{\color{IndianRed}1},1,{\color{IndianRed}1},1,{\color{IndianRed}0}]^T = w\)

\(\begin{bmatrix} \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ 1 & 1 & 0 & 1 & 1 & 0 & 1 & 0 & 1 & 0 & 1\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \end{bmatrix}\)

#5. Error Correcting Codes

\(M_{16 \times 11} \cdot [{\color{IndianRed}1},1,{\color{IndianRed}0},{\color{IndianRed}0},1,{\color{IndianRed}0},{\color{IndianRed}1},1,1,{\color{IndianRed}1},{\color{IndianRed}0}]^T = w\)

\(\begin{bmatrix} \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ 1 & 1 & 0 & 1 & 1 & 0 & 1 & 0 & 1 & 0 & 1\\\star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star \\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \end{bmatrix}\)

#5. Error Correcting Codes

\(M_{16 \times 11} \cdot [{\color{IndianRed}1},1,{\color{IndianRed}0},{\color{IndianRed}0},1,{\color{IndianRed}0},{\color{IndianRed}1},1,1,{\color{IndianRed}1},{\color{IndianRed}0}]^T = w\)

\(\begin{bmatrix} \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ 1 & 1 & 0 & 1 & 1 & 0 & 1 & 0 & 1 & 0 & 1\\ 1 & 0 & 1 & 1 & 0 & 1 & 1 & 0 & 0 & 1 & 1 \\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \end{bmatrix}\)

#5. Error Correcting Codes

\(M_{16 \times 11} \cdot [1,1,0,0,1,0,1,1,1,1,0]^T = w\)

\(\begin{bmatrix} \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ 1 & 1 & 0 & 1 & 1 & 0 & 1 & 0 & 1 & 0 & 1\\ 1 & 0 & 1 & 1 & 0 & 1 & 1 & 0 & 0 & 1 & 1 \\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \end{bmatrix}\)

#5. Error Correcting Codes

\(M_{16 \times 11} \cdot [1,1,0,0,1,0,1,1,1,1,0]^T = w\)

\(\begin{bmatrix} \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ 1 & 1 & 0 & 1 & 1 & 0 & 1 & 0 & 1 & 0 & 1\\ 1 & 0 & 1 & 1 & 0 & 1 & 1 & 0 & 0 & 1 & 1 \\ {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \end{bmatrix}\)

#5. Error Correcting Codes

\(M_{16 \times 11} \cdot [1,{\color{IndianRed}1},{\color{IndianRed}0},{\color{IndianRed}0},1,0,1,{\color{IndianRed}1},{\color{IndianRed}1},{\color{IndianRed}1},{\color{IndianRed}0}]^T = w\)

\(\begin{bmatrix} \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ 1 & 1 & 0 & 1 & 1 & 0 & 1 & 0 & 1 & 0 & 1\\ 1 & 0 & 1 & 1 & 0 & 1 & 1 & 0 & 0 & 1 & 1 \\ {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \end{bmatrix}\)

#5. Error Correcting Codes

\(M_{16 \times 11} \cdot [1,{\color{IndianRed}1},{\color{IndianRed}0},{\color{IndianRed}0},1,0,1,{\color{IndianRed}1},{\color{IndianRed}1},{\color{IndianRed}1},{\color{IndianRed}0}]^T = w\)

\(\begin{bmatrix} \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ 1 & 1 & 0 & 1 & 1 & 0 & 1 & 0 & 1 & 0 & 1\\ 1 & 0 & 1 & 1 & 0 & 1 & 1 & 0 & 0 & 1 & 1 \\ {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 1 & 1 & 1 & 0 & 0 & 0 & 1 & 1 & 1 & 1\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \end{bmatrix}\)

#5. Error Correcting Codes

\(M_{16 \times 11} \cdot [1,1,0,0,1,0,1,1,1,1,0]^T = w\)

\(\begin{bmatrix} \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ 1 & 1 & 0 & 1 & 1 & 0 & 1 & 0 & 1 & 0 & 1\\ 1 & 0 & 1 & 1 & 0 & 1 & 1 & 0 & 0 & 1 & 1 \\ {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 1 & 1 & 1 & 0 & 0 & 0 & 1 & 1 & 1 & 1\\ 0 & {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \end{bmatrix}\)

#5. Error Correcting Codes

\(M_{16 \times 11} \cdot [1,1,0,0,{\color{IndianRed}1},{\color{IndianRed}0},{\color{IndianRed}1},{\color{IndianRed}1},{\color{IndianRed}1},{\color{IndianRed}1},{\color{IndianRed}0}]^T = w\)

\(\begin{bmatrix} \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ 1 & 1 & 0 & 1 & 1 & 0 & 1 & 0 & 1 & 0 & 1\\ 1 & 0 & 1 & 1 & 0 & 1 & 1 & 0 & 0 & 1 & 1 \\ {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 1 & 1 & 1 & 0 & 0 & 0 & 1 & 1 & 1 & 1\\ 0 & {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \end{bmatrix}\)

#5. Error Correcting Codes

\(M_{16 \times 11} \cdot [1,1,0,0,{\color{IndianRed}1},{\color{IndianRed}0},{\color{IndianRed}1},{\color{IndianRed}1},{\color{IndianRed}1},{\color{IndianRed}1},{\color{IndianRed}0}]^T = w\)

\(\begin{bmatrix} \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ 1 & 1 & 0 & 1 & 1 & 0 & 1 & 0 & 1 & 0 & 1\\ 1 & 0 & 1 & 1 & 0 & 1 & 1 & 0 & 0 & 1 & 1 \\ {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 1 & 1 & 1 & 0 & 0 & 0 & 1 & 1 & 1 & 1\\ 0 & {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 & 1 & 1 & 1\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ \end{bmatrix}\)

#5. Error Correcting Codes

\(M_{16 \times 11} \cdot [1,1,0,0,1,0,1,1,1,1,0]^T = w\)

\(\begin{bmatrix} \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\ 1 & 1 & 0 & 1 & 1 & 0 & 1 & 0 & 1 & 0 & 1\\ 1 & 0 & 1 & 1 & 0 & 1 & 1 & 0 & 0 & 1 & 1 \\ {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 1 & 1 & 1 & 0 & 0 & 0 & 1 & 1 & 1 & 1\\ 0 & {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ \ 0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 & 1 & 1 & 1\\0 & 0 & 0 & 0 & {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 & {\color{DodgerBlue}1} & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & {\color{DodgerBlue}1} & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & {\color{DodgerBlue}1} & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & {\color{DodgerBlue}1} & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & {\color{DodgerBlue}1} \\ \end{bmatrix}\)

#5. Error Correcting Codes

\(M_{16 \times 11} \cdot [1,1,0,0,1,0,1,1,1,1,0]^T = w\)

\(\begin{bmatrix} \star & \star & \star & \star & \star & \star & \star & \star & \star & \star & \star\\{\color{Blue}1} & {\color{Blue}1} & 0 & {\color{Blue}1} & {\color{Blue}1} & 0 & {\color{Blue}1} & 0 & {\color{Blue}1} & 0 & {\color{Blue}1}\\ {\color{Orange}1} & 0 & {\color{Orange}1} & {\color{Orange}1} & 0 & {\color{Orange}1} & {\color{Orange}1} & 0 & 0 & {\color{Orange}1} & {\color{Orange}1} \\ {\color{Thistle}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & {\color{SeaGreen}1} & {\color{SeaGreen}1} & {\color{SeaGreen}1} & 0 & 0 & 0 & {\color{SeaGreen}1} & {\color{SeaGreen}1} & {\color{SeaGreen}1} & {\color{SeaGreen}1}\\ 0 & {\color{Thistle}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & {\color{Thistle}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & {\color{Thistle}1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ \ 0 & 0 & 0 & 0 & {\color{IndianRed}1} & {\color{IndianRed}1} & {\color{IndianRed}1} & {\color{IndianRed}1} & {\color{IndianRed}1} & {\color{IndianRed}1} & {\color{IndianRed}1}\\0 & 0 & 0 & 0 & {\color{Thistle}1} & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & {\color{Thistle}1} & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 & {\color{Thistle}1} & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & {\color{Thistle}1} & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & {\color{Thistle}1} & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & {\color{Thistle}1} & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & {\color{Thistle}1} \\ \end{bmatrix}\)
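The filled-in rows follow a pattern that can be generated rather than typed. The sketch below is an illustration under that assumption: it builds the rows for positions 1 to 15 and multiplies by the message over \(\mathbb{F}_2\). The first row (the X bit) is left out because the slides leave it unspecified, and the helper names are hypothetical.

```python
def build_rows():
    """Rows of M for positions 1..15: parity rows at 1, 2, 4, 8 and unit rows elsewhere."""
    data_positions = [p for p in range(1, 16) if p & (p - 1)]
    rows = {}
    for p in range(1, 16):
        if p & (p - 1):
            # Data position: copy exactly one message bit.
            rows[p] = [1 if q == p else 0 for q in data_positions]
        else:
            # Parity position p: add the message bits whose position contains p.
            rows[p] = [1 if q & p else 0 for q in data_positions]
    return rows

def multiply_mod2(rows, message):
    """Return the product of each row with the message, reduced mod 2."""
    return {p: sum(a * b for a, b in zip(row, message)) % 2 for p, row in rows.items()}

rows = build_rows()
message = [1, 1, 0, 0, 1, 0, 1, 1, 1, 1, 0]
w = multiply_mod2(rows, message)
print([w[p] for p in range(1, 16)])
```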

#5. Error Correcting Codes

\(x_{1} \oplus x_{3} \oplus x_{5} \oplus x_{7} \oplus x_{9} \oplus x_{11} \oplus x_{13} \oplus x_{15} = 0\)

\(x_{2} \oplus x_{3} \oplus x_{6} \oplus x_{7} \oplus x_{10} \oplus x_{11} \oplus x_{14} \oplus x_{15} = 0\)

\(x_{4} \oplus x_{5} \oplus x_{6} \oplus x_{7} \oplus x_{12} \oplus x_{13} \oplus x_{14} \oplus x_{15} = 0\)

\(x_{8} \oplus x_{9} \oplus x_{10} \oplus x_{11} \oplus x_{12} \oplus x_{13} \oplus x_{14} \oplus x_{15} = 0\)
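The index sets in these four equations are the positions whose binary label contains 1, 2, 4 or 8. A minimal sketch, with hypothetical helper names and an arbitrary value 0 in the unused position 0 of the sample word:

```python
def parity_group(k):
    """Positions 1..15 whose binary label has the bit of value k set."""
    return [p for p in range(1, 16) if p & k]

groups = {k: parity_group(k) for k in (1, 2, 4, 8)}

def checks_pass(word):
    """True when each of the four XOR equations evaluates to 0 (word[0] is ignored)."""
    return all(sum(word[p] for p in groups[k]) % 2 == 0 for k in groups)

valid = [0, 0, 0, 1, 0, 0, 1, 1, 1, 0, 0, 1, 0, 0, 1, 1]
corrupted = valid.copy()
corrupted[6] ^= 1                      # flip one bit; position chosen arbitrarily
print(groups[1], checks_pass(valid), checks_pass(corrupted))
```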

#5. Error Correcting Codes

\(\underbrace{\begin{bmatrix} 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 \\ 0 & 0 & 1 & 1 & 0 & 0 & 1 & 1 & 0 & 0 & 1 & 1 & 0 & 0 & 1 & 1 \\ 0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 & 0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 & 1 & 1 & 1 & 1\end{bmatrix}}_{\text{Parity Check Matrix: } P}\)

What is the null space of P?

The set of all valid code words.

#5. Error Correcting Codes

\(\underbrace{\begin{bmatrix} 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 \\ 0 & 0 & 1 & 1 & 0 & 0 & 1 & 1 & 0 & 0 & 1 & 1 & 0 & 0 & 1 & 1 \\ 0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 & 0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 & 1 & 1 & 1 & 1\end{bmatrix}}_{\text{Parity Check Matrix: } P}\)

\(Pw = P(v + e)\)

\(P(v + e) = Pv + Pe\)

\(Pv + Pe = 0 + Pe = Pe\)
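Reading \(v\) as the code word that was sent, \(e\) as the error vector and \(w = v + e\) as the received word, the identity says that the check depends only on the error. The sketch below tests this for one code word and a single error. The sample vectors, the value 0 in position 0, and the helper `times_P` are assumptions made for illustration.

```python
# Column j of P is the 4-bit binary label of j, least significant bit in the first row.
P = [[(j >> r) & 1 for j in range(16)] for r in range(4)]

def times_P(vec):
    """Return P * vec over F_2 as a list of four bits."""
    return [sum(a * b for a, b in zip(row, vec)) % 2 for row in P]

v = [0, 0, 0, 1, 0, 0, 1, 1, 1, 0, 0, 1, 0, 0, 1, 1]   # a code word, so P v = 0
e = [0] * 16
e[2] = 1                                               # single error at position 2
w = [(a + b) % 2 for a, b in zip(v, e)]                # received word w = v + e

print(times_P(v), times_P(e), times_P(w))
```

With a single error at position \(j\), the product \(Pe\) is column \(j\) of \(P\), which is the binary label of \(j\).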

#5. Error Correcting Codes

\(\underbrace{\begin{bmatrix} 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 \\ 0 & 0 & 1 & 1 & 0 & 0 & 1 & 1 & 0 & 0 & 1 & 1 & 0 & 0 & 1 & 1 \\ 0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 & 0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 & 1 & 1 & 1 & 1\end{bmatrix}}_{\text{Parity Check Matrix: } P}\)

\(\begin{bmatrix} 0 \\ 0 \\ {\color{IndianRed}1} \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \end{bmatrix}\)

#5. Error Correcting Codes

\(\underbrace{\begin{bmatrix} 0 & 1 & {\color{IndianRed}0} & 1 & 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 \\ 0 & 0 & {\color{IndianRed}1} & 1 & 0 & 0 & 1 & 1 & 0 & 0 & 1 & 1 & 0 & 0 & 1 & 1 \\ 0 & 0 & {\color{IndianRed}0} & 0 & 1 & 1 & 1 & 1 & 0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 \\ 0 & 0 & {\color{IndianRed}0} & 0 & 0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 & 1 & 1 & 1 & 1\end{bmatrix}}_{\text{Parity Check Matrix: } P}\)

\(\begin{bmatrix} 0 \\ 0 \\ {\color{IndianRed}1} \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \end{bmatrix}\)

#5. Error Correcting Codes

\(\underbrace{\begin{bmatrix} 0 & 1 & {\color{IndianRed}0} & 1 & 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 & 0 & 1 \\ 0 & 0 & {\color{IndianRed}1} & 1 & 0 & 0 & 1 & 1 & 0 & 0 & 1 & 1 & 0 & 0 & 1 & 1 \\ 0 & 0 & {\color{IndianRed}0} & 0 & 1 & 1 & 1 & 1 & 0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 \\ 0 & 0 & {\color{IndianRed}0} & 0 & 0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 & 1 & 1 & 1 & 1\end{bmatrix}}_{\text{Parity Check Matrix: } P}\)

\(\begin{bmatrix} 0 \\ 0 \\ {\color{IndianRed}1} \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \end{bmatrix}\)

\(= \begin{bmatrix} 0 \\ 1 \\ 0 \\ 0 \end{bmatrix}\)
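The syndrome computation on these slides can be checked numerically. The sketch below rebuilds \(P\) from the rule that column \(j\) holds the binary digits of \(j\), least significant bit in the top row, which matches the matrix shown. The helper names `syndrome` and `error_position` are made up for this illustration and do not come from the slides.

```python
# Column j of P holds the binary digits of j, least significant bit in row 0.
P = [[(j >> r) & 1 for j in range(16)] for r in range(4)]

def syndrome(w):
    """Return P*w over GF(2) for a 16-bit word w (list of 0/1)."""
    return [sum(P[r][j] * w[j] for j in range(16)) % 2 for r in range(4)]

def error_position(w):
    """Read the syndrome as a binary number (row 0 is the low bit)."""
    return sum(bit << r for r, bit in enumerate(syndrome(w)))

# A valid code word: bits 1, 2, 3 are set, and 1 XOR 2 XOR 3 == 0.
v = [0] * 16
for j in (1, 2, 3):
    v[j] = 1

# Flip bit 2 (the single error e from the slide) to get w = v + e.
w = v[:]
w[2] ^= 1

print(syndrome(v))        # [0, 0, 0, 0]
print(syndrome(w))        # [0, 1, 0, 0], i.e. column 2 of P
print(error_position(w))  # 2
```

Column 0 of \(P\) is all zeros, so a flip of bit 0 is invisible to these four checks; every other single flip is located by its syndrome.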

#5. Error Correcting Codes

The (4 → 7) Hamming Code

Positions: 001 010 011 100 101 110 111

Parity bits \(x, y, z\); message bits \(a, b, c, d\) (addition mod 2):

\(x = a + b + d\)

\(y = a + c + d\)

\(z = b + c + d\)
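A minimal sketch of the three parity equations in action. The placement of the bits is an assumption, not something the slide text fixes: here positions 1 to 7 hold \(x, y, a, z, b, c, d\), which is the layout under which each parity bit checks exactly the positions whose binary label contains its own. The function names `encode` and `correct` are illustrative.

```python
def encode(a, b, c, d):
    """Add parity bits x, y, z to the message bits a, b, c, d (all 0 or 1)."""
    x = (a + b + d) % 2
    y = (a + c + d) % 2
    z = (b + c + d) % 2
    # Assumed layout: positions 1..7 hold x, y, a, z, b, c, d.
    return [x, y, a, z, b, c, d]

def correct(word):
    """Fix at most one flipped bit; return (fixed word, error position or 0)."""
    pos = 0
    for i, bit in enumerate(word, start=1):
        if bit:
            pos ^= i  # XOR of the positions that hold a 1
    fixed = list(word)
    if pos:
        fixed[pos - 1] ^= 1
    return fixed, pos

sent = encode(1, 0, 1, 1)
received = list(sent)
received[4] ^= 1          # corrupt position 5
print(sent)               # [0, 1, 1, 0, 0, 1, 1]
print(correct(received))  # ([0, 1, 1, 0, 0, 1, 1], 5)
```

For a valid code word the XOR of the occupied positions is 0, so a single flip at position \(i\) makes that XOR equal to \(i\).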

#5. Error Correcting Codes

I have a function \(f\) that takes a 16-bit string as input & produces a number between 0 and 15 as output.

You give me a string \(s \in \{0,1\}^{16}\) and a number \(n \in \{0,...,15\}\).

It will turn out that \(f(s) = n\).

Well, this is a bit too much :)

#5. Error Correcting Codes

I have a function \(f\) that takes a 16-bit string as input & produces a number between 0 and 15 as output.

You give me a string \(s \in \{0,1\}^{16}\) and a number \(n \in \{0,...,15\}\).

I will flip one bit in \(s\) to get \(t\).

It will turn out that \(f(t) = n\).
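One function that makes the trick work, sketched here as an assumption since the slide text does not spell out \(f\): let \(f\) be the XOR of the indices of the 1-bits, which is the syndrome of the 16-column parity check matrix read as a number. Flipping bit \(i\) changes \(f\) by XOR with \(i\), so flipping bit \(f(s) \oplus n\) lands on \(n\). The name `bit_to_flip` is illustrative.

```python
def f(bits):
    """XOR together the indices (0..15) of the bits that are 1."""
    value = 0
    for i, b in enumerate(bits):
        if b:
            value ^= i
    return value

def bit_to_flip(s, n):
    """Index whose flip turns f(s) into n."""
    return f(s) ^ n

s = [1, 0, 1, 1, 0, 0, 0, 1, 0, 1, 0, 0, 1, 0, 0, 0]
n = 13
i = bit_to_flip(s, n)
t = s[:]
t[i] ^= 1
print(f(s), i, f(t))  # 3 14 13
```

If \(f(s)\) already equals \(n\), the index to flip is 0, and bit 0 never affects \(f\).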

#6. Odd Distances

There are no four points in the plane such that

the distance between each pair is an odd integer.

#6. Odd Distances

Are there four points in the plane such that

the distance between each pair is an even integer?

#6. Odd Distances

How many points can we have on a plane

so that their pairwise distances are integers?

#6. Odd Distances

There are no four points in the plane such that

the distance between each pair is an odd integer.

Let us suppose for contradiction that

there exist 4 points with all the distances odd.

We can assume that one of them is \(\mathbf{0}\),

and we call the three remaining ones \(\mathbf{a}, \mathbf{b}, \mathbf{c}\).

Then \(\|\mathbf{a}\|,\|\mathbf{b}\|,\|\mathbf{c}\|,\|\mathbf{a}-\mathbf{b}\|\), \(\|\mathbf{b}-\mathbf{c}\|\), and \(\|\mathbf{c}-\mathbf{a}\|\) are odd integers.

And also \(\|\mathbf{a}\|^2,\|\mathbf{b}\|^2,\|\mathbf{c}\|^2,\|\mathbf{a}-\mathbf{b}\|^2\), \(\|\mathbf{b}-\mathbf{c}\|^2\), and \(\|\mathbf{c}-\mathbf{a}\|^2\) are \(\equiv 1 \bmod 8\).

#6. Odd Distances

There are no four points in the plane such that

the distance between each pair is an odd integer.

\(m\) odd \(\implies m^2 \equiv 1\) mod \(8\).

\(m^2 = (2k + 1)^2 = 4k^2 + 4k + 1 = 4(k^2 + k) + 1 = 4k(k+1) + 1\)

#6. Odd Distances

There are no four points in the plane such that

the distance between each pair is an odd integer.

\(m\) odd \(\implies m^2 \equiv 1\) mod \(8\).

\(m^2 = (2k + 1)^2 = 4k^2 + 4k + 1 = 4(k^2 + k) + 1 = 4{\color{DodgerBlue}k}(k+1) + 1\)

#6. Odd Distances

There are no four points in the plane such that

the distance between each pair is an odd integer.

\(m\) odd \(\implies m^2 \equiv 1\) mod \(8\).

\(m^2 = (2k + 1)^2 = 4k^2 + 4k + 1 = 4(k^2 + k) + 1 = 4k({\color{DodgerBlue}k+1}) + 1\)

One of \(k\) and \(k+1\) is even, so \(4k(k+1)\) is divisible by \(8\).

#6. Odd Distances

There are no four points in the plane such that

the distance between each pair is an odd integer.

\(2\langle\mathbf{a}, \mathbf{b}\rangle = \|\mathbf{a}\|^2+\|\mathbf{b}\|^2-\|\mathbf{a}-\mathbf{b}\|^2 \equiv 1(\bmod 8)\)

\(\|\mathbf{a}\|,\|\mathbf{b}\|,\|\mathbf{c}\|,\|\mathbf{a}-\mathbf{b}\|\), \(\|\mathbf{b}-\mathbf{c}\|\), and \(\|\mathbf{c}-\mathbf{a}\|\) are odd integers.

\(\|\mathbf{a}\|^2,\|\mathbf{b}\|^2,\|\mathbf{c}\|^2,\|\mathbf{a}-\mathbf{b}\|^2\), \(\|\mathbf{b}-\mathbf{c}\|^2\), and \(\|\mathbf{c}-\mathbf{a}\|^2\) are \(\equiv 1 \bmod 8\).

\(2\langle\mathbf{a}, \mathbf{c}\rangle = \|\mathbf{a}\|^2+\|\mathbf{c}\|^2-\|\mathbf{a}-\mathbf{c}\|^2 \equiv 1(\bmod 8)\)

\(2\langle\mathbf{b}, \mathbf{c}\rangle = \|\mathbf{b}\|^2+\|\mathbf{c}\|^2-\|\mathbf{b}-\mathbf{c}\|^2 \equiv 1(\bmod 8)\)

#6. Odd Distances

There are no four points in the plane such that

the distance between each pair is an odd integer.

\(2\langle\mathbf{a}, \mathbf{b}\rangle = \|\mathbf{a}\|^2+\|\mathbf{b}\|^2-\|\mathbf{a}-\mathbf{b}\|^2 \equiv 1(\bmod 8)\)

\(2\langle\mathbf{a}, \mathbf{c}\rangle = \|\mathbf{a}\|^2+\|\mathbf{c}\|^2-\|\mathbf{a}-\mathbf{c}\|^2 \equiv 1(\bmod 8)\)

\(2\langle\mathbf{b}, \mathbf{c}\rangle = \|\mathbf{b}\|^2+\|\mathbf{c}\|^2-\|\mathbf{b}-\mathbf{c}\|^2 \equiv 1(\bmod 8)\)

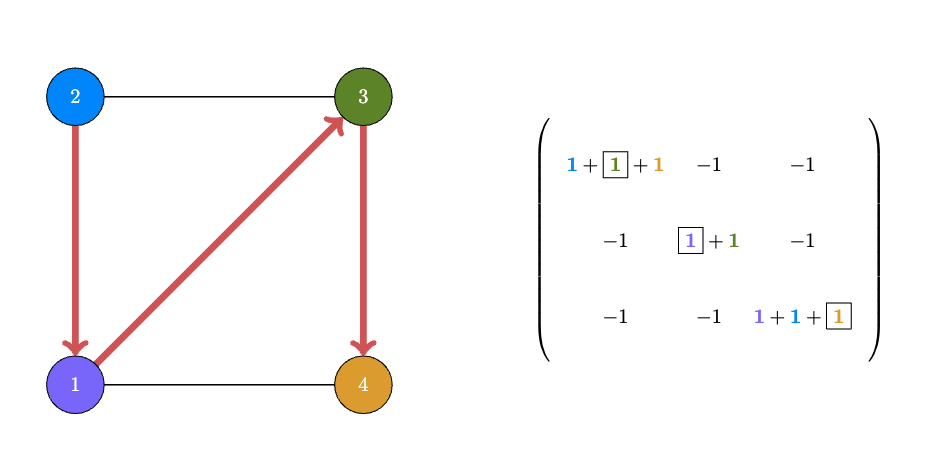

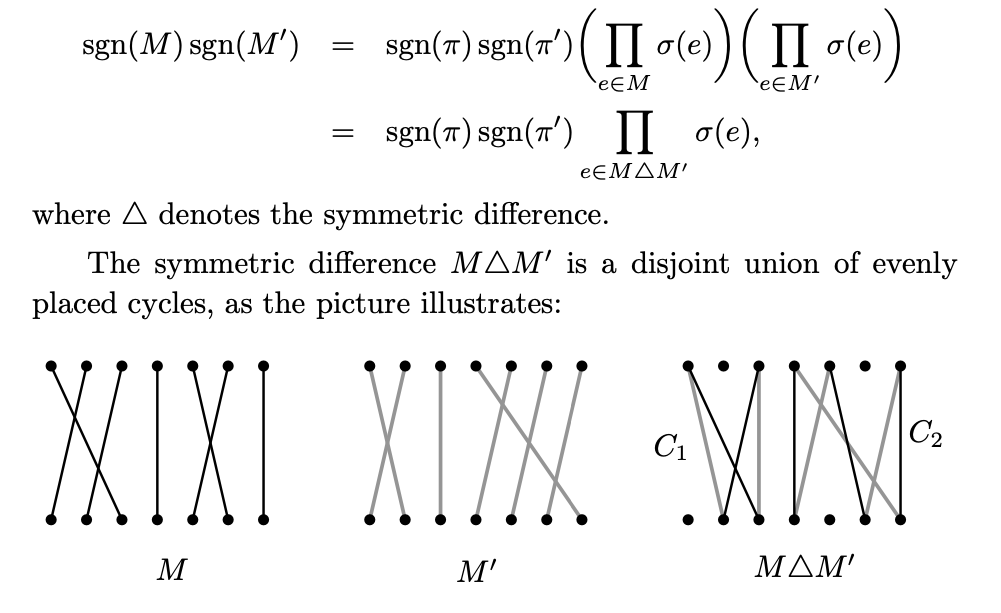

\(2 \cdot \underbrace{\left(\begin{array}{ccc}\langle\mathbf{a}, \mathbf{a}\rangle & \langle\mathbf{a}, \mathbf{b}\rangle & \langle\mathbf{a}, \mathbf{c}\rangle \\\langle\mathbf{b}, \mathbf{a}\rangle & \langle\mathbf{b}, \mathbf{b}\rangle & \langle\mathbf{b}, \mathbf{c}\rangle \\\langle\mathbf{c}, \mathbf{a}\rangle & \langle\mathbf{c}, \mathbf{b}\rangle & \langle\mathbf{c}, \mathbf{c}\rangle\end{array}\right)}_{\text{rank} = 3} \equiv \left(\begin{array}{lll} 2 & 1 & 1 \\ 1 & 2 & 1 \\1 & 1 & 2 \end{array}\right) \bmod 8\)

#6. Odd Distances

There are no four points in the plane such that

the distance between each pair is an odd integer.

\(2 \cdot \underbrace{\left(\begin{array}{ccc}\langle\mathbf{a}, \mathbf{a}\rangle & \langle\mathbf{a}, \mathbf{b}\rangle & \langle\mathbf{a}, \mathbf{c}\rangle \\\langle\mathbf{b}, \mathbf{a}\rangle & \langle\mathbf{b}, \mathbf{b}\rangle & \langle\mathbf{b}, \mathbf{c}\rangle \\\langle\mathbf{c}, \mathbf{a}\rangle & \langle\mathbf{c}, \mathbf{b}\rangle & \langle\mathbf{c}, \mathbf{c}\rangle\end{array}\right)}_{\text{rank} = 3} \equiv \underbrace{\left(\begin{array}{lll} 2 & 1 & 1 \\ 1 & 2 & 1 \\1 & 1 & 2 \end{array}\right)}_{\text{det} = 4} \bmod 8\)

\(B = \overbrace{\left(\begin{array}{cc} a_1 & a_2 \\ b_1 & b_2 \\ c_1 & c_2 \end{array}\right) \cdot \left(\begin{array}{ccc} a_1 & b_1 & c_1 \\ a_2 & b_2 & c_2 \end{array}\right)}^{\text{rank} \leq 2}\), where \(B\) is the matrix of inner products above.

\(\det(2B) \equiv 4 \bmod 8 \implies \det(2B) \neq 0 \implies \det(B) \neq 0\), so \(B\) would have rank 3, a contradiction.

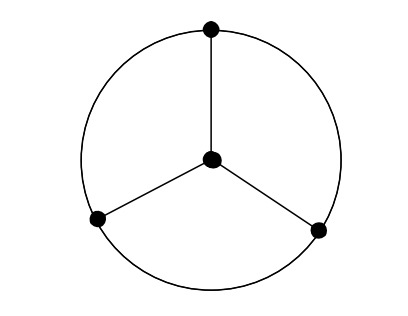
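The two facts that collide in this argument can be checked with exact integer arithmetic. This is only a sanity check, not part of the proof: `det3` and `gram` are illustrative helpers, and the three sample vectors are arbitrary, not taken from the slides.

```python
def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def gram(vectors):
    """Matrix of all pairwise inner products of the given vectors."""
    return [[sum(p * q for p, q in zip(u, v)) for v in vectors] for u in vectors]

# The residue matrix from the slide.
R = [[2, 1, 1], [1, 2, 1], [1, 1, 2]]
print(det3(R))  # 4

# Arbitrary sample vectors in the plane: their Gram matrix is singular.
B = gram([(3, 0), (1, 4), (-2, 5)])
print(det3(B))  # 0
```

Any three vectors in the plane give a Gram matrix of rank at most 2, hence determinant 0, while odd distances would force a determinant congruent to 4 mod 8 for \(2B\).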

#7. Are These Distances Euclidean?

Can we find three points \(p, q, r\) in the plane whose mutual Euclidean distances are all one?

*picture not to scale 😀

#7. Are These Distances Euclidean?

Can we find \(\mathbf{p}, \mathbf{q}, \mathbf{r}\) with

\(\|\mathbf{p}-\mathbf{q}\|=\|\mathbf{q}-\mathbf{r}\|=1 \text { and }\|\mathbf{p}-\mathbf{r}\|=3\)?

\(\underbrace{\|\mathbf{p}-\mathbf{r}\| \leqslant{\color{DodgerBlue}\|\mathbf{p}-\mathbf{q}\|}+{\color{SeaGreen}\|\mathbf{q}-\mathbf{r}\|}}_{\text{{\color{IndianRed}Triangle Inequality}}}\)

#7. Are These Distances Euclidean?

It turns out that the triangle inequality is the only obstacle for three points.

Whenever nonnegative real numbers \(x, y, z\) satisfy \(x \leqslant y+z, y \leqslant x+z\), and \(z \leqslant x+y\),

then there are \(\mathbf{p}, \mathbf{q}, \mathbf{r} \in \mathbb{R}^2\) such that:

\(\|\mathbf{p}-\mathbf{q}\|=x,\|\mathbf{q}-\mathbf{r}\|=y\), and \(\|\mathbf{p}-\mathbf{r}\|=z\).

These are well known conditions for the existence of a triangle with given side lengths.
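A sketch of one way to build such a triangle explicitly, under the assumption that we place \(\mathbf{p}\) at the origin and \(\mathbf{q}\) on the positive horizontal axis; the name `place_triangle` and the coordinates \(u, v\) of \(\mathbf{r}\) are choices made for this example.

```python
import math

def place_triangle(x, y, z):
    """Return p, q, r in the plane with |p-q| = x, |q-r| = y, |p-r| = z."""
    if x > y + z or y > x + z or z > x + y:
        raise ValueError("triangle inequality violated")
    p, q = (0.0, 0.0), (float(x), 0.0)
    if x == 0:
        # p and q coincide; the inequalities then force y == z.
        return p, q, (float(z), 0.0)
    # Solve u^2 + v^2 = z^2 and (u - x)^2 + v^2 = y^2 for r = (u, v).
    u = (x * x + z * z - y * y) / (2 * x)
    v = math.sqrt(max(z * z - u * u, 0.0))  # max() guards against rounding
    return p, q, (u, v)

p, q, r = place_triangle(3, 4, 5)
print(r)  # (3.0, 4.0)
print(math.dist(p, q), math.dist(q, r), math.dist(p, r))  # 3.0 4.0 5.0
```

Calling `place_triangle(1, 1, 3)` raises `ValueError`, matching the earlier example where the triangle inequality fails.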

#7. Are These Distances Euclidean?

Whenever nonnegative real numbers \(x, y, z\) satisfy \(x \leqslant y+z, y \leqslant x+z\), and \(z \leqslant x+y\),

then there are \(\mathbf{p}, \mathbf{q}, \mathbf{r} \in \mathbb{R}^2\) such that:

\({\color{DodgerBlue}\|\mathbf{p}-\mathbf{q}\|=x},{\color{SeaGreen}\|\mathbf{q}-\mathbf{r}\|=y}\), and \({\color{Orange}\|\mathbf{p}-\mathbf{r}\|=z}\).

#7. Are These Distances Euclidean?

What about four points in \(\mathbb{R}^3\)?

What about four points in \(\mathbb{R}^2\)?

#7. Are These Distances Euclidean?

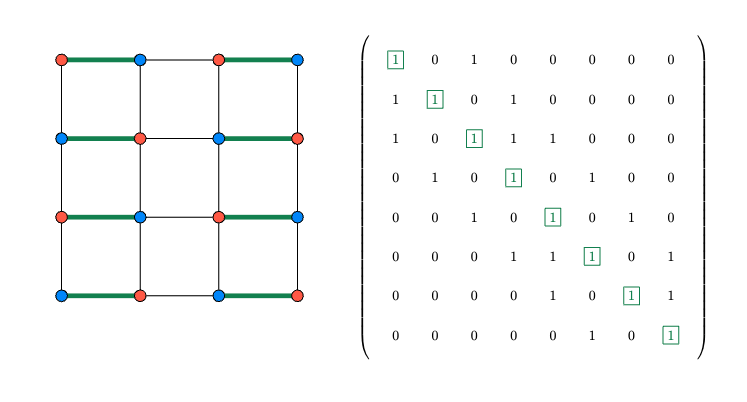

Theorem. Let \(m_{i j}, i, j=0,1, \ldots, n\), be nonnegative real numbers with \(m_{i j}=m_{j i}\) for all \(i, j\) and \(m_{i i}=0\) for all \(i\).

Then points \(\mathbf{p}_0, \mathbf{p}_1, \ldots, \mathbf{p}_n \in \mathbb{R}^n\) with \(\left\|\mathbf{p}_i-\mathbf{p}_j\right\|=m_{i j}\) for all \(i, j\) exist if and only if the \(n \times n\) matrix \(G\) with

\(g_{i j}=\frac{1}{2}\left(m_{0 i}^2+m_{0 j}^2-m_{i j}^2\right), \quad i, j=1, \ldots, n,\)

is positive semidefinite.

#7. Are These Distances Euclidean?

Fact. A real symmetric \(n \times n\) matrix \(A\) is positive semidefinite

if and only if

there exists an \(n \times n\) real matrix \(X\) such that \(A=X^T X\).

#7. Are These Distances Euclidean?

\(\begin{bmatrix}\frac{1}{2}(m_{0 1}^2+m_{0 1}^2-m_{1 1}^2) & \frac{1}{2}(m_{0 1}^2+m_{0 2}^2-m_{1 2}^2) & \frac{1}{2}(m_{0 1}^2+m_{0 3}^2-m_{1 3}^2) \\ & & \\ \frac{1}{2}(m_{0 2}^2+m_{0 1}^2-m_{2 1}^2) & \frac{1}{2}(m_{0 2}^2+m_{0 2}^2-m_{2 2}^2) & \frac{1}{2}(m_{0 2}^2+m_{0 3}^2-m_{2 3}^2) \\ & & \\ \frac{1}{2}(m_{0 3}^2+m_{0 1}^2-m_{3 1}^2) & \frac{1}{2}(m_{0 3}^2+m_{0 2}^2-m_{3 2}^2) & \frac{1}{2}(m_{0 3}^2+m_{0 3}^2-m_{3 3}^2) \end{bmatrix}\)
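The test in the theorem can be tried on small inputs. The sketch below builds \(G\) from a distance table `m` (indexed \(0, \ldots, n\)) and checks positive semidefiniteness with a swapped-in criterion that is not the one from the slides: a real symmetric matrix is positive semidefinite exactly when all of its principal minors are nonnegative. This is only practical for small \(n\); the function names and the tolerance `tol` are assumptions made for the example.

```python
from itertools import combinations

def gram_from_distances(m):
    """Build the n x n matrix G with g_ij = (m_0i^2 + m_0j^2 - m_ij^2) / 2."""
    n = len(m) - 1
    return [[(m[0][i] ** 2 + m[0][j] ** 2 - m[i][j] ** 2) / 2
             for j in range(1, n + 1)] for i in range(1, n + 1)]

def det(a):
    """Determinant by cofactor expansion (fine for small matrices)."""
    if not a:
        return 1.0
    return sum((-1) ** j * a[0][j] * det([row[:j] + row[j + 1:] for row in a[1:]])
               for j in range(len(a)))

def is_psd(g, tol=1e-9):
    """Check that every principal minor of the symmetric matrix g is >= 0."""
    n = len(g)
    for k in range(1, n + 1):
        for idx in combinations(range(n), k):
            minor = [[g[i][j] for j in idx] for i in idx]
            if det(minor) < -tol:
                return False
    return True

equilateral = [[0, 1, 1], [1, 0, 1], [1, 1, 0]]
too_long = [[0, 1, 3], [1, 0, 1], [3, 1, 0]]  # distances 1, 1, 3
print(is_psd(gram_from_distances(equilateral)))  # True
print(is_psd(gram_from_distances(too_long)))     # False
```

The second table is the 1, 1, 3 example from earlier: it fails the triangle inequality, and correspondingly \(G\) has a negative determinant.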

#7. Are These Distances Euclidean?

\(2\langle\mathbf{x}, \mathbf{y}\rangle = \|\mathbf{x}\|^2+\|\mathbf{y}\|^2-\|\mathbf{x}-\mathbf{y}\|^2\)

\( \|\mathbf{x}\|^2+\|\mathbf{y}\|^2-\|\mathbf{x}-\mathbf{y}\|^2 = \)

\( (x_1^2 + x_2^2 + x_3^2) + (y_1^2 + y_2^2 + y_3^2) \)

\( - ((x_1 - y_1)^2 + (x_2-y_2)^2 + (x_3-y_3)^2)\)

\( = 2 (x_1 \cdot y_1 + x_2 \cdot y_2 + x_3 \cdot y_3) = 2 \langle \mathbf{x}, \mathbf{y} \rangle\)

#7. Are These Distances Euclidean?

\(\mathbf{x}_i:=\mathbf{p}_i-\mathbf{p}_0, i=1,2, \ldots, n\)

\(\langle \mathbf{x}_i, \mathbf{x}_j \rangle = \frac{1}{2} \left(\|\mathbf{x}_i\|^2+\|\mathbf{x}_j\|^2-\|\mathbf{x}_i-\mathbf{x}_j\|^2\right)\)