Introduction to Docker Swarm

A clustering daemon for Docker

What is Docker Swarm?

- A clustering utility for Docker, by Docker

- Announced at DockerCon EU 2014 as a POC

- Written by Victor Vieux and Andrea Luzzardi

- Written in Go 1.2

- Approaching a beta release

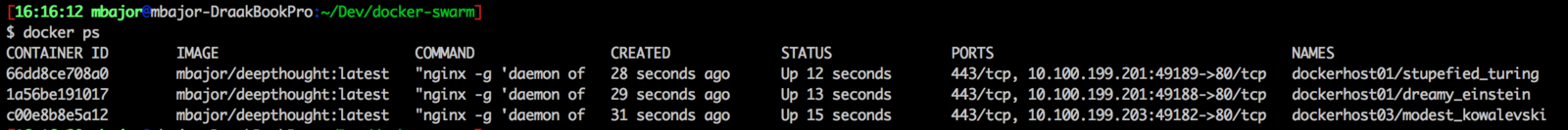

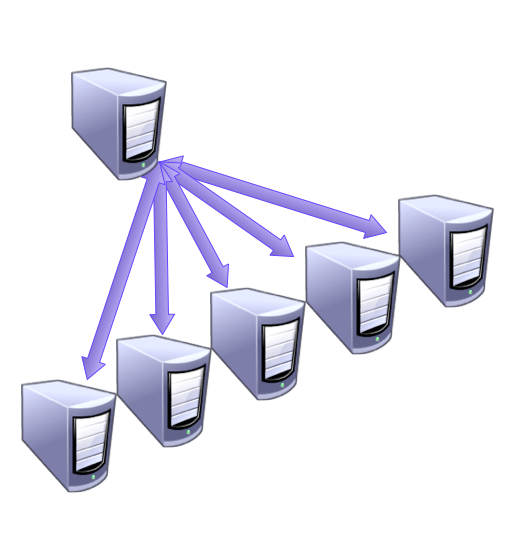

How does it work?

- Runs as a daemon listening on a network port

- Acts as a proxy to the Docker hosts behind it

- Uses a discovery protocol to build the cluster

- Healthchecking keeps things working

- Schedules containers amongst the backends

  - Filtering

    - Where a container can and can't run

    - Tags

  - Scheduling

    - Where to place a container amongst the available nodes

- Provides an interface similar to a single Docker host

Doesn't Proxy Network Ports

Docker Swarm does not handle the proxying of network traffic; only the Docker commands themselves. This means that if Swarm goes down, all of the running containers are unaffected though you can't change their state.

Rebuilds its state db

When Swarm is started or restarted, it rebuilds the database of Docker hosts automatically. This means that as long as your discovery protocol is available, Swarm should be able to figure out what the cluster looks like.
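
The rebuild described above can be sketched in a few lines. This is only an illustration, not Swarm's actual Go code: it assumes the discovery backend can be modeled as a function returning `ip:port` strings and the health check as a callable. `rebuild_cluster_state`, `fake_discovery`, and `fake_health` are hypothetical names, and the addresses are sample data.

```python
from typing import Callable, Dict, Iterable


def rebuild_cluster_state(
    discover: Callable[[], Iterable[str]],
    is_healthy: Callable[[str], bool],
) -> Dict[str, bool]:
    """Rebuild the node table from scratch: address -> healthy flag.

    Nothing is carried over from a previous run; the discovery backend
    is the only source of truth for cluster membership.
    """
    state = {}
    for addr in discover():
        state[addr] = is_healthy(addr)
    return state


def fake_discovery():
    # Stand-in for a discovery backend (hypothetical sample data).
    return ["10.100.199.201:2376", "10.100.199.202:2376"]


def fake_health(addr):
    # Stand-in health probe: pretend the second host is down.
    return not addr.startswith("10.100.199.202")


state = rebuild_cluster_state(fake_discovery, fake_health)
print(state)
```

Because the table is derived entirely from discovery plus health checks, restarting the process in this model loses nothing that cannot be recomputed.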

How is it different than a single Docker host?

Multiple hosts; One endpoint

- Made up of multiple discrete Docker hosts behind a single Docker-like interface

- Each Docker host is assigned a set of tags for scheduling

- Ability to partition a cluster

Supports only a subset of commands

- docker run

- docker ps

- docker logs

- docker attach

- docker cp

- docker login

- docker search

Basically: enough to get by

Only a proxy...

Docker Swarm does not actually keep the containers running. It only schedules the create commands amongst the available nodes.

Docker needs to be started with tags for filtering

To help identify the capabilities of a given Docker host, start its daemon with a set of tags that can be used for filtering (determining which Docker hosts are eligible to run a container).

vagrant@dockerhost01:~$ cat /etc/default/docker

# Managed by Ansible

# Docker Upstart and SysVinit configuration file

# Use DOCKER_OPTS to modify the daemon startup options.

# The unix socket (-H unix://...) is the local endpoint used by Registrator

DOCKER_OPTS="--dns=8.8.8.8 --dns=8.8.4.4 \

--label status=master \

--label disk=ssd \

--label zone=internal \

--tlsverify \

--tlscacert=/etc/pki/tls/ca.pem \

--tlscert=/etc/pki/tls/dockerhost01-cert.pem \

--tlskey=/etc/pki/tls/dockerhost01-key.pem \

-H unix:///var/run/docker.sock \

-H tcp://0.0.0.0:2376"
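
To make the tag idea concrete, here is a small sketch that pulls the `--label key=value` pairs out of an option string like the one above. It is an illustration only: `parse_labels` is a hypothetical helper, not part of Docker or Swarm, and the option string is shortened from the example.

```python
import shlex


def parse_labels(docker_opts: str) -> dict:
    """Collect --label key=value pairs from a daemon option string."""
    tokens = shlex.split(docker_opts)
    labels = {}
    i = 0
    while i < len(tokens):
        tok = tokens[i]
        if tok == "--label" and i + 1 < len(tokens):
            # Form: --label key=value (two tokens)
            key, _, value = tokens[i + 1].partition("=")
            labels[key] = value
            i += 2
        elif tok.startswith("--label="):
            # Form: --label=key=value (one token)
            key, _, value = tok[len("--label="):].partition("=")
            labels[key] = value
            i += 1
        else:
            i += 1
    return labels


opts = (
    "--dns=8.8.8.8 --label status=master --label disk=ssd "
    "--label zone=internal -H tcp://0.0.0.0:2376"
)
labels = parse_labels(opts)
print(labels)
```

The resulting dictionary is the kind of per-host metadata a constraint filter can match against.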

Interacting with Swarm

Almost the same as with a single host...

- Image Management must be done outside of Swarm

- Most tools still work

- Ansible (TLS Broken)

- Fig

- Docker CLI

# Start an Nginx node on the edge

$ docker run -d -p 80:80 \

--name edge_webserver \

-e constraint:zone==external \

-e constraint:disk==ssd \

-t nginx:latest

# Start an app container on the same Docker host

$ docker run -d -p 5000 \

--name app_1 \

-e affinity:container==edge_webserver \

-t app:latest

Filtering

- Constraint Filters

  - Based on tags

- Affinity Filters

  - Container affinity

  - Image affinity

- Port Filters

  - One static mapping per host

- Health Filters

  - Docker healthy?
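
As a rough sketch of how constraint and container-affinity filters narrow the candidate hosts, the code below parses expressions in the `constraint:zone==external` form used in the `docker run` examples. It models the idea only and is not Swarm's implementation; `Node`, `parse_env`, and `filter_nodes` are hypothetical names, and the host data (including the `disk=hdd` label) is made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    labels: dict
    containers: set = field(default_factory=set)


def parse_env(expr: str):
    """Split 'constraint:zone==external' into ('constraint', 'zone', 'external')."""
    kind, _, rest = expr.partition(":")
    key, _, value = rest.partition("==")
    return kind, key, value


def filter_nodes(nodes, env_exprs):
    """Apply each expression in turn; only nodes passing all of them remain."""
    candidates = list(nodes)
    for expr in env_exprs:
        kind, key, value = parse_env(expr)
        if kind == "constraint":
            # Keep nodes whose daemon label matches the requested value.
            candidates = [n for n in candidates if n.labels.get(key) == value]
        elif kind == "affinity" and key == "container":
            # Keep nodes already running the named container.
            candidates = [n for n in candidates if value in n.containers]
    return candidates


nodes = [
    Node("dockerhost01", {"zone": "internal", "disk": "ssd"}),
    Node("dockerhost02", {"zone": "external", "disk": "ssd"}, {"edge_webserver"}),
    Node("dockerhost03", {"zone": "external", "disk": "hdd"}),
]

edge = filter_nodes(nodes, ["constraint:zone==external", "constraint:disk==ssd"])
near = filter_nodes(nodes, ["affinity:container==edge_webserver"])
print([n.name for n in edge], [n.name for n in near])
```

Filters only decide where a container may run; choosing one host among the survivors is the scheduling strategy's job.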

Scheduling

- Binpacking

  - Fill a Docker host and move to the next

  - (Requires setting static resources on containers)

- Random

  - Pick a Docker host at random

- Balanced

  - In the works

- Pluggable!
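
The difference between the binpacking and random strategies can be sketched as follows. This is a simplified model, assuming memory is the only static resource and that each host is described by a plain dictionary; `binpack` and `pick_random` are hypothetical names rather than Swarm's own functions.

```python
import random


def binpack(nodes, mem_needed):
    """Pick the fullest node that still has room for the container."""
    fits = [n for n in nodes if n["total_mem"] - n["used_mem"] >= mem_needed]
    if not fits:
        return None
    # Highest utilisation first, so one host fills up before the next is used.
    return max(fits, key=lambda n: n["used_mem"] / n["total_mem"])


def pick_random(nodes, rng=random):
    """Pick any node, ignoring how loaded it is."""
    return rng.choice(nodes) if nodes else None


nodes = [
    {"name": "dockerhost01", "total_mem": 4, "used_mem": 3},
    {"name": "dockerhost02", "total_mem": 4, "used_mem": 1},
]

small = binpack(nodes, 1)   # fits on the fuller host
large = binpack(nodes, 2)   # only the emptier host has room
print(small["name"], large["name"])
```

This also shows why binpacking needs static resources on containers: without a declared `mem_needed`, there is nothing to compare against a host's remaining capacity.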

..but now with:

Docker Swarm Requirements

Not Many!

- Could be run on an existing Docker host

- File or Node discovery requires no additional services

- Healthchecks still work with static discovery

- Need to build the Go binary right now

  - Go development environment

  - Place to publish the binary (I use S3)

Configuration Modes

Discovery

- Node - Options passed on the CLI

- File - Static file (/etc/swarm/cluster.conf)

- Consul - Uses Consul's key/value store

- etcd - Uses etcd's key/value store

- ZooKeeper - Uses ZooKeeper as a key/value store

- Token (hosted) - Hosted service by Docker
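
A discovery setting such as `consul://dockerswarm01/swarm` (the value used later in this deck) can be read as backend, host, and key prefix. The sketch below splits it with Python's standard `urllib.parse`. `parse_discovery` is a hypothetical helper for illustration, and it does not show how Swarm itself parses the option.

```python
from urllib.parse import urlparse


def parse_discovery(uri: str) -> dict:
    """Split a discovery URI into backend, host, and key prefix."""
    parsed = urlparse(uri)
    return {
        "backend": parsed.scheme,
        "host": parsed.netloc,
        "prefix": parsed.path.strip("/"),
    }


info = parse_discovery("consul://dockerswarm01/swarm")
print(info)
```

Here the backend is `consul`, the host is `dockerswarm01`, and `swarm` is the key prefix under which cluster members are stored.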

Scheduling Strategy

- Binpacking - Fill a Docker host and move to the next

- Random - Pick a Docker host at random

- Balanced - Still under development

- Mesos - Still under development

- Ideally fully pluggable

Scheduling Filters

- Constraints - Docker daemon tags

- Affinity - On the same host as...

  - A container

  - An image

- Port - Don't duplicate statically mapped ports

- Health - Only schedule containers on healthy Docker hosts
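
The port and health filters are simple enough to sketch directly. The code below is an illustrative model, assuming each host is a dictionary with a set of already-bound host ports and a health flag; `port_filter` and `health_filter` are hypothetical names and the host data is invented.

```python
def port_filter(nodes, host_port):
    """Drop nodes where the requested static host port is already bound."""
    return [n for n in nodes if host_port not in n["used_ports"]]


def health_filter(nodes):
    """Keep only nodes whose Docker daemon is reporting healthy."""
    return [n for n in nodes if n["healthy"]]


nodes = [
    {"name": "dockerhost01", "used_ports": {80}, "healthy": True},
    {"name": "dockerhost02", "used_ports": set(), "healthy": True},
    {"name": "dockerhost03", "used_ports": set(), "healthy": False},
]

# A container asking for "-p 80:80" can only land where port 80 is free
# and the host is healthy.
eligible = health_filter(port_filter(nodes, 80))
print([n["name"] for n in eligible])
```

A dynamically mapped port (for example `-p 5000` with no host port) would not need the port filter at all, since no fixed host port is claimed.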

TLS enabled/disabled

- TLS Supported

  - Client <-> Swarm

  - Swarm <-> Docker

- Verify or no-verify

  - If verify, all certs need to be from the same CA

  - The Swarm cert must be configured for both client and server duty

- Ansible TLS for Docker broken
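
The verify versus no-verify choice can be illustrated from a client's point of view with Python's standard `ssl` module. This is a generic TLS sketch and not how the Docker CLI or Swarm configure TLS internally. `make_client_context` is a hypothetical helper, and the file paths in the comment are the ones used elsewhere in this deck.

```python
import ssl


def make_client_context(verify, ca=None, cert=None, key=None):
    """Build a client-side TLS context in verify or no-verify mode."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    if verify:
        if ca is None:
            raise ValueError("verify mode needs the CA certificate")
        # Trust only the given CA; the server cert must chain to it.
        ctx.load_verify_locations(cafile=ca)
    else:
        # Encrypt the connection but skip certificate checks.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    if cert and key:
        # Present a client certificate to the server.
        ctx.load_cert_chain(certfile=cert, keyfile=key)
    return ctx


# No-verify mode needs no files, so it can be built anywhere.
ctx = make_client_context(verify=False)
print(ctx.verify_mode)

# Verify mode would look like this on a host that has the files:
# make_client_context(True, "/etc/pki/tls/ca.pem",
#                     "/etc/pki/tls/swarm-cert.pem", "/etc/pki/tls/swarm-key.pem")
```

Swarm sits in the middle of two TLS hops, acting as a server towards clients and as a client towards the Docker hosts, which is why its certificate has to be usable for both roles.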

Routing / Service Discovery

(Extra Credit)

- Almost required due to complex scheduling

- Consul Template or confd

- Registrator

- HAProxy or Nginx

  - Static hosts with static IPs

  - Preferably not containerized

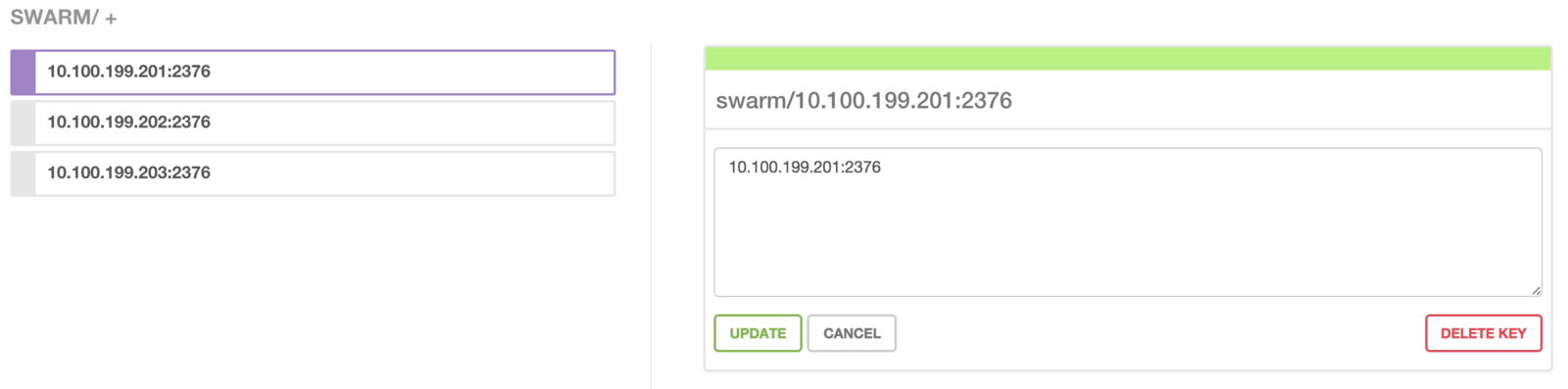

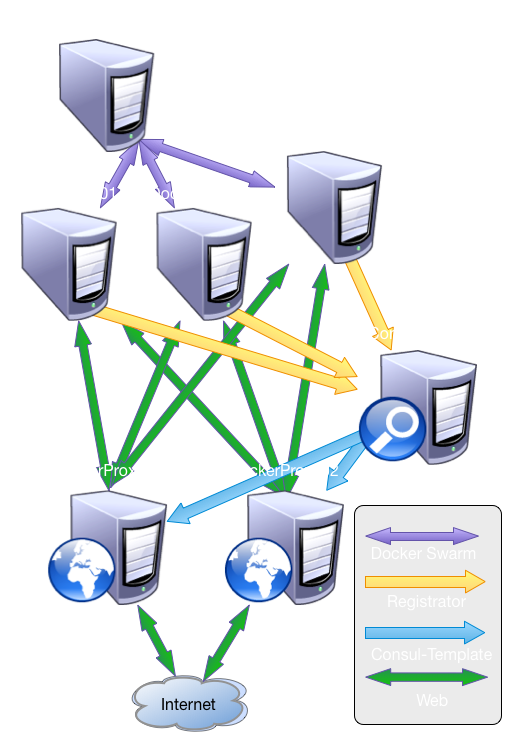

Example Swarm SOA

Hypervisor Layer

- Docker hosts running Ubuntu

- Docker daemon

  - Listening on the network and a local socket

  - Configured with tags

- Swarm daemon

  - Pointed at Consul k/v

  - /swarm/<dockerip>:<port>

- Registrator daemon

  - Pointed at Consul k/v

  - /services/<name>-<port>/dockerhost:container:port

- Monitoring tools (DataDog)

# dockerhost[01:03]

# Docker

/usr/bin/docker -d \

--dns=8.8.8.8 \

--dns=8.8.4.4 \

--label status=master \

--label disk=ssd \

--label zone=internal \

--tlsverify \

--tlscacert=/etc/pki/tls/ca.pem \

--tlscert=/etc/pki/tls/dockerhost01-cert.pem \

--tlskey=/etc/pki/tls/dockerhost01-key.pem \

-H unix:///var/run/docker.sock \

-H tcp://0.0.0.0:2376

# Swarm

/usr/local/bin/swarm join \

--addr=10.100.199.201:2376 \

--discovery consul://dockerswarm01/swarm

# Registrator

/usr/local/bin/registrator \

-ip=10.100.199.201 \

consul://dockerswarm01/services

Cluster Layer

- Running Swarm

- Connects the Docker hosts

- Provides a single interface

- Must be able to connect to all Docker hosts

- Handles only distribution of Docker commands; not proxying of network traffic

- Running containers are not affected by a Swarm outage

# dockerswarm01

# Swarm daemon

/usr/local/bin/swarm manage \

--tlsverify \

--tlscacert=/etc/pki/tls/ca.pem \

--tlscert=/etc/pki/tls/swarm-cert.pem \

--tlskey=/etc/pki/tls/swarm-key.pem \

-H tcp://0.0.0.0:2376 \

--strategy random \

--discovery consul://dockerswarm01/swarm

Service Discovery Layer

- Provided by Consul

- Key / Value store

  - `/swarm` for Swarm

    - Used by the Swarm master to maintain the cluster

  - `/services` for Registrator

    - Used by the routing layer to route traffic to services
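
The two key layouts can be sketched as simple string builders, following the patterns `/swarm/<dockerip>:<port>` and `/services/<name>-<port>/dockerhost:container:port` given in this deck. `swarm_key` and `service_key` are hypothetical helpers, and the sample values are for illustration only.

```python
def swarm_key(docker_ip: str, port: int) -> str:
    """Key under which a Docker host registers itself for Swarm."""
    return f"/swarm/{docker_ip}:{port}"


def service_key(name: str, port: int, dockerhost: str, container: str) -> str:
    """Key under which Registrator records one container's service port."""
    return f"/services/{name}-{port}/{dockerhost}:{container}:{port}"


print(swarm_key("10.100.199.201", 2376))
print(service_key("app", 5000, "dockerhost01", "app_1"))
```

Keeping the two prefixes separate lets the Swarm master and the routing layer each watch only the subtree they care about.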

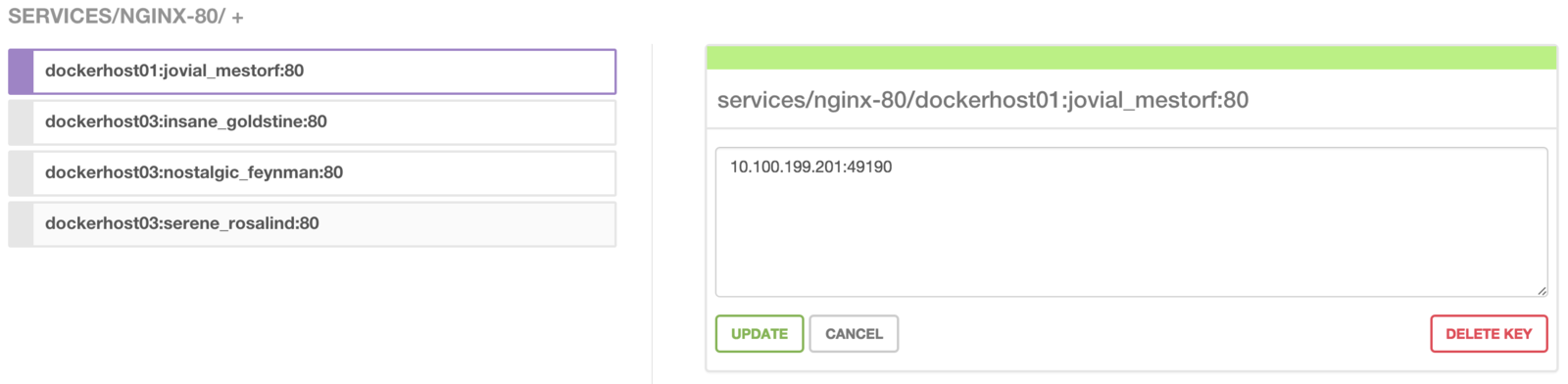

Data under /swarm in Consul

Data under /services in Consul

The Swarm Cluster

Registrator's Services

Routing Layer

- Hosts running:

  - Consul-template: to poll Consul's k/v store and generate configs based off of what is in /services

  - Nginx/HAProxy: to route traffic to the proper Docker host and port for the application.
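
The config-generation step can be approximated in a few lines. The sketch below stands in for what a template tool does: it takes a mapping of service names to `ip:port` backends (assumed to have been read from `/services`) and renders Nginx `upstream` blocks. `render_upstreams` is a hypothetical function, the backend addresses are invented, and real Consul Template files use their own template syntax, which is not shown here.

```python
def render_upstreams(services: dict) -> str:
    """Render {service_name: ["ip:port", ...]} as Nginx upstream blocks."""
    lines = []
    for name in sorted(services):
        lines.append(f"upstream {name} {{")
        for backend in sorted(services[name]):
            lines.append(f"    server {backend};")
        lines.append("}")
    return "\n".join(lines)


services = {
    "app-5000": ["10.100.199.202:49154", "10.100.199.201:49153"],
}
config = render_upstreams(services)
print(config)
```

Sorting the names and backends keeps the rendered file stable between polls, so the proxy only needs a reload when the set of backends actually changes.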

Thank you!

The Complete Intro

Copy of Introduction to Docker Swarm

By social4hyq

An introduction to Docker Swarm for the Docker Denver meetup on 01/26/15
