Speech Project

Week 10 Report

b02901085 徐瑞陽

b02901054 方為

Task

- Task 5 from SemEval 2016

- SemEval (Semantic Evaluation):

- an ongoing series of evaluations of computational semantic analysis systems

Task 5:

Aspect Based Sentiment Analysis (ABSA)

- Rise of e-commerce leads to growth of review sites for a variety of services and products

- ABSA: mining opinions from text about specific entities and their aspects

ABSA

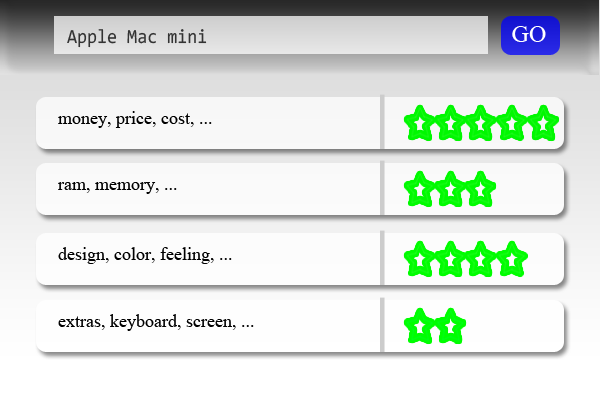

- Given a target of interest (e.g., Apple Mac mini), summarize the content of reviews in an aspect-sentiment table

ABSA

- Aspect: entity type (E) and an attribute type (A) pair, denoted as {E#A}

- "E" can be:

- the reviewed entity itself (ex. laptop)

- a part or component (ex. battery, customer service)

- other relevant entities (ex. manufacturer of laptop)

- "A": a particular attribute (ex. durability, portability)

- "E" and "A" do not necessarily occur in the review

- ex: “They sent it back with a huge crack in it and it still didn't work; and that was the fourth time I’ve sent it to them to get fixed”

-----> {customer_support#quality}

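The {E#A} notation above maps naturally onto a small record type. The following is a minimal sketch only; the `Aspect` class and its `parse` helper are hypothetical names introduced for illustration, and normalising labels to upper case is an assumption, not something the task requires.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Aspect:
    """An aspect category: an entity type (E) paired with an attribute type (A)."""
    entity: str
    attribute: str

    @classmethod
    def parse(cls, label: str) -> "Aspect":
        # Accept both "E#A" and "{E#A}"; upper-casing is an assumption of this sketch.
        entity, attribute = label.strip("{} ").split("#")
        return cls(entity.upper(), attribute.upper())

    def __str__(self) -> str:
        return f"{self.entity}#{self.attribute}"


# The label from the example above; neither word appears in the review text itself.
aspect = Aspect.parse("{customer_support#quality}")
print(aspect.entity, aspect.attribute)
```

Keeping E and A as separate fields makes it easy to group opinions by entity or by attribute later on.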

Domains and Languages

- Domains & Languages:

- Restaurants: English, Dutch, French, Russian, Spanish, Turkish

- Hotels: English, Arabic

- Consumer Electronics:

- Laptops: English

- Mobile Phones: Chinese, Dutch

- Digital Cameras: Chinese

- Telecommunications: Turkish

Subtask 1: Sentence-level ABSA

Given a review text about a target entity (laptop, restaurant, etc.), identify the following information:

- Slot 1: Aspect Category

- ex. ''It is extremely portable and easily connects to WIFI at the library and elsewhere''

----->{LAPTOP#PORTABILITY}, {LAPTOP#CONNECTIVITY}


- Slot 2: Opinion Target Expression (OTE)

- an expression used in the given text to refer to the reviewed E#A

- ex. ''The fajitas were delicious, but expensive''

----->{FOOD#QUALITY, “fajitas”}, {FOOD#PRICES, “fajitas”}

- Slot 3: Sentiment Polarity

- label: (positive, negative, or neutral)
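The three slots can be pictured as one record per opinion. This is a rough sketch, not the official SemEval data format: the `Opinion` class and the `target_span` helper are hypothetical, and the polarities assigned to the fajitas example are our own reading of the sentence.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

POLARITIES = {"positive", "negative", "neutral"}


@dataclass(frozen=True)
class Opinion:
    category: str           # Slot 1: aspect category, e.g. "FOOD#QUALITY"
    target: Optional[str]   # Slot 2: OTE, or None when no explicit target is mentioned
    polarity: str           # Slot 3: one of POLARITIES

    def __post_init__(self):
        if self.polarity not in POLARITIES:
            raise ValueError(f"unknown polarity: {self.polarity!r}")


def target_span(sentence: str, opinion: Opinion) -> Optional[Tuple[int, int]]:
    """Return (start, end) character offsets of the OTE in the sentence, or None."""
    if opinion.target is None:
        return None
    start = sentence.find(opinion.target)
    return None if start < 0 else (start, start + len(opinion.target))


sentence = "The fajitas were delicious, but expensive"
opinions = [
    Opinion("FOOD#QUALITY", "fajitas", "positive"),  # polarity assumed from "delicious"
    Opinion("FOOD#PRICES", "fajitas", "negative"),   # polarity assumed from "expensive"
]
print([target_span(sentence, o) for o in opinions])
```

Note that Slots 1 and 3 are labels chosen from a fixed set, while Slot 2 is a span of the input text.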

Subtask 2: Text-level ABSA

Given a set of customer reviews about a target entity (ex. a restaurant), identify a set of {aspect category, polarity} tuples that summarize the opinions expressed in each review.
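One simple way to get from sentence-level output to a text-level summary is to vote per aspect category. The sketch below assumes a plain majority rule and a "conflict" label for ties; both are assumptions of this example rather than a description of our system, and `summarize_review` is a hypothetical helper.

```python
from collections import Counter, defaultdict


def summarize_review(sentence_opinions):
    """Collapse (category, polarity) pairs from all sentences of one review
    into a single polarity per category."""
    votes = defaultdict(Counter)
    for category, polarity in sentence_opinions:
        votes[category][polarity] += 1

    summary = {}
    for category, counts in votes.items():
        ranked = counts.most_common(2)
        # A tie between the two most frequent polarities is marked "conflict"
        # (assumed tie-breaking rule for this sketch).
        if len(ranked) == 2 and ranked[0][1] == ranked[1][1]:
            summary[category] = "conflict"
        else:
            summary[category] = ranked[0][0]
    return summary


# Made-up sentence-level predictions for one restaurant review.
review = [
    ("FOOD#QUALITY", "positive"),
    ("FOOD#QUALITY", "positive"),
    ("FOOD#QUALITY", "negative"),
    ("SERVICE#GENERAL", "negative"),
    ("AMBIENCE#GENERAL", "positive"),
    ("AMBIENCE#GENERAL", "negative"),
]
print(summarize_review(review))
```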

Subtask 3: Out-of-domain ABSA

Test the system on a previously unseen domain for which no training data is made available. In SemEval 2015 this domain was hotel reviews: the gold annotations for Slots 1 and 2 were provided, and the teams had to return the sentiment polarity values (Slot 3).

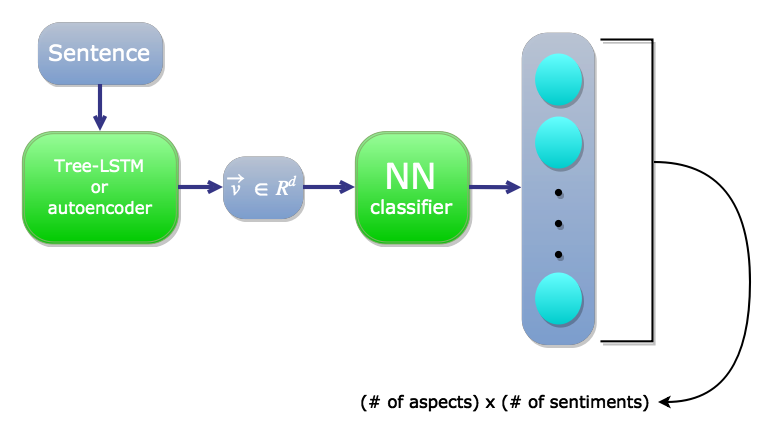

Our Framework

Framework 1

Encode by Tree-LSTM or autoencoder

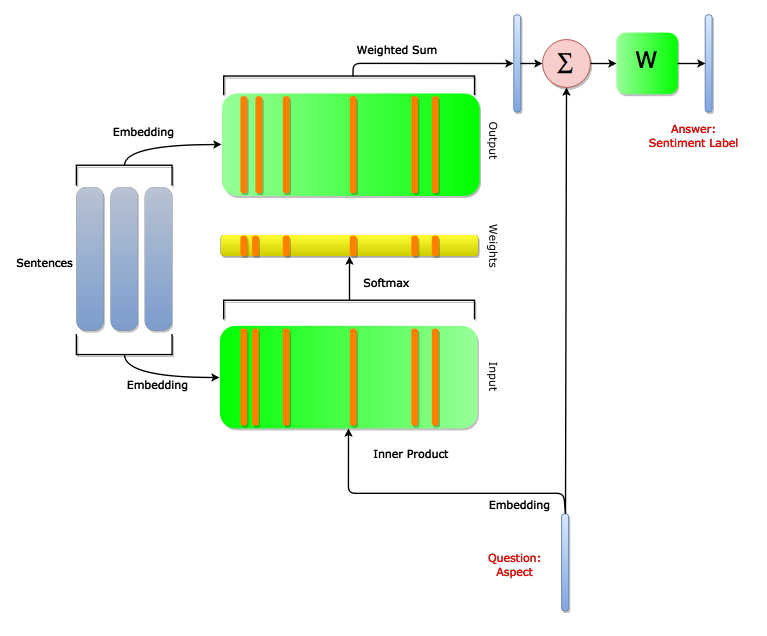

Framework 2

End-to-end MemNN

Paper Study

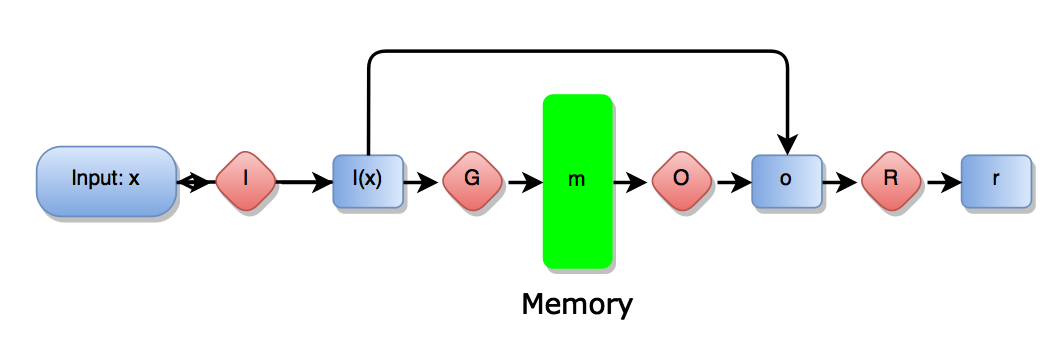

Memory Networks

Memory Networks

- I component:

- Standard preprocessing (ex. parsing)

- Could also encode into feature representation

- G component:

- Simple implementation: store I(x) in a slot in the memory

- For huge memory: slot choosing function H

- O and R components:

- O: Reading from memory and performing inference

- R: Produce final response given O

- ex. R could be implemented by an RNN

- When I, G, O and R are implemented as neural networks => MemNN
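To make the division of labour between the four components concrete, here is a toy, non-neural sketch. Everything in it is an assumption for illustration: `ToyMemoryNetwork` is a hypothetical class, word overlap stands in for a learned scoring function, and R simply returns the last word of the retrieved memory. The sentences are taken from the simulated-world example later in these slides.

```python
import re


class ToyMemoryNetwork:
    def __init__(self):
        self.memory = []  # each slot holds (raw text, feature representation)

    def I(self, text):
        # Input map: a lower-cased bag of words stands in for a learned encoding.
        return set(re.findall(r"[a-z]+", text.lower()))

    def G(self, text):
        # Generalization: simplest choice, store I(x) in the next free slot.
        self.memory.append((text, self.I(text)))

    def O(self, question):
        # Output: pick the memory with the highest word overlap with the question;
        # ties go to the most recent slot (assumed scoring rule).
        q = self.I(question)
        best = max(range(len(self.memory)),
                   key=lambda i: (len(q & self.memory[i][1]), i))
        return self.memory[best][0]

    def R(self, supporting_text):
        # Response: return the last word of the supporting memory.
        return re.findall(r"[a-z]+", supporting_text.lower())[-1]

    def answer(self, question):
        return self.R(self.O(question))


net = ToyMemoryNetwork()
for fact in ["Joe went to the kitchen.", "Fred went to the kitchen.",
             "Joe picked up the milk.", "Joe travelled to the office.",
             "Joe left the milk.", "Joe went to the bathroom."]:
    net.G(fact)
print(net.answer("Where is Joe?"))
```

A single lookup like this cannot answer "Where is the milk now?", because that question needs two supporting facts chained together; handling such cases is what the learned O component is for.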

Memory Networks

- MemNN experiments

- Large-scale QA

- statements (subject, relation, object): ex. (sheep, be-afraid-of, wolf)

- question: ex. "What is sheep afraid of?"

- Good performance

- Implemented hashing techniques for significant speedups

- Simulated World QA

- Joe went to the kitchen. Fred went to the kitchen. Joe picked up the milk. Joe travelled to the office. Joe left the milk. Joe went to the bathroom.

- Where is the milk now? A: office

- Where is Joe? A: bathroom

- Where was Joe before the office? A: kitchen

- Performs better than RNNs and LSTMs on "before" questions and "actor+object" questions

- It can keep information in memory for a longer period of time


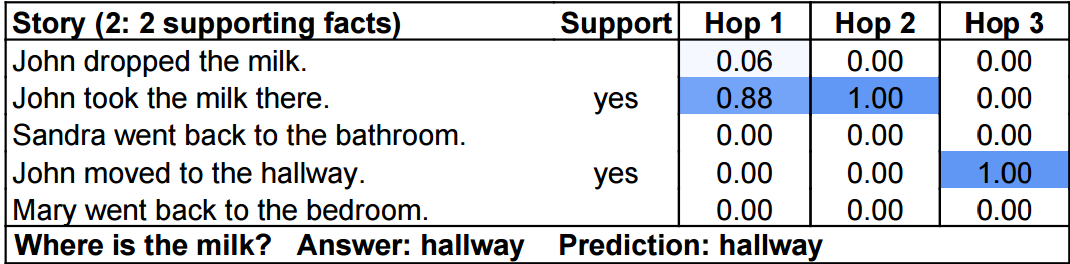

End-to-End Memory Networks

- When training the two scoring functions of the previous model (one scores the query against memory, the other scores the related memory against the response), we need to know the mapping between each input sentence and its best response

=> Hard in the real world

- This model takes all the facts and the query at once and produces a single answer. It models the inference process as an RNN in which each step (a memory hop) combines the internal state with the output of the previous hop
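One hop of this model can be written out as follows. The notation is borrowed from the End-to-End Memory Networks paper rather than from these slides, so the symbol names should be read as assumptions: $u^k$ is the internal state at hop $k$ (with $u^1$ the embedded query), $m_i$ and $c_i$ are the input and output embeddings of fact $i$, $K$ is the number of hops, and $W$ is the final answer matrix.

```latex
\begin{aligned}
p_i^k &= \mathrm{softmax}_i\big((u^k)^\top m_i\big) \\
o^k &= \sum_i p_i^k \, c_i \\
u^{k+1} &= u^k + o^k \\
\hat{a} &= \mathrm{softmax}\big(W\,(o^K + u^K)\big)
\end{aligned}
```

Every step is differentiable, which is why only the final answer is needed as supervision.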

End-to-End Memory Networks

- Learning the RNN's parameters plays the role of finding the scoring functions, but the model does not take an argmax directly: it applies a softmax and uses the resulting probabilities to update the hidden state for the next hop

- Training backpropagates through all memory hops (inference steps) automatically, however many there are

- If well trained, the output of each hop shows one inference step
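The soft attention hops described above can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not our implementation: `memory_hops` is a hypothetical function, and the 2-dimensional embeddings are hand-picked, untrained numbers chosen so that the attention visibly moves between hops.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def memory_hops(query, in_memory, out_memory, hops=2):
    """Run `hops` soft-attention reads over memory.

    Returns the final internal state and the attention weights of every hop."""
    u = list(query)
    attention_trace = []
    for _ in range(hops):
        # Softmax over query-memory scores replaces a hard argmax.
        p = softmax([dot(u, m) for m in in_memory])
        # Read-out: attention-weighted sum of the output embeddings.
        o = [sum(p_i * c[d] for p_i, c in zip(p, out_memory))
             for d in range(len(u))]
        # Next hop's state combines the current state with the read-out.
        u = [u_d + o_d for u_d, o_d in zip(u, o)]
        attention_trace.append(p)
    return u, attention_trace


# Hand-picked toy embeddings for three facts (assumed values, not learned).
in_memory = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
out_memory = [[0.0, 4.0], [1.0, 0.0], [0.0, 0.0]]
state, trace = memory_hops([2.0, 0.0], in_memory, out_memory, hops=2)
for k, p in enumerate(trace, start=1):
    print(f"hop {k}: most attended fact = {p.index(max(p))}")
```

Inspecting `attention_trace` is what lets each hop be read as one inference step: here the first hop attends mostly to fact 0, and the state it produces shifts the second hop towards fact 1.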

Problem we encountered

We have no idea how to achieve Opinion Target Expression (OTE) extraction.

It's not a classification problem...

SpeechProject-week10

By sunprinces
