automating knowledge?

prediction, agency, and research

POLS Democracy and Technology

Simon Elichko - Social Sciences & Data Librarian

AI tools

- How have you used generative AI tools? (e.g. ChatGPT, Claude, Gemini)

- What have you heard about these tools?

- How do you feel about them? About the discourse related to them?


LLMs are not search engines looking up facts; they are

pattern-spotting

engines that guess the next best option in a sequence.

Interactive work published in Financial Times, September 2023.

Created by FT Visual Storytelling Team and Madhumita Murgia.

Let's try it: bit.ly/gpt-from-scratch
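To make the "guess the next best option in a sequence" idea concrete, here is a minimal sketch in Python. It is a toy, not how ChatGPT or similar tools are built: real LLMs use neural networks trained on enormous token datasets, while this example only counts which word follows which in a tiny made-up corpus. The function names (`train_bigram_model`, `predict_next`) and the sample text are illustrative assumptions, not part of the FT piece.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def predict_next(follow_counts, word):
    """Return the most frequently observed next word, or None if the word was never seen."""
    candidates = follow_counts.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical training text, invented for this illustration.
corpus = "the cat sat on the mat . the cat chased the mouse . the cat slept ."
model = train_bigram_model(corpus)

print(predict_next(model, "the"))        # "cat" follows "the" most often in this corpus
print(predict_next(model, "democracy"))  # None: the word never appears in the training text
```

Note that the model has no notion of whether a prediction is true. It only reproduces patterns in whatever text it was given, and it has nothing to offer for words it never saw.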

Some considerations

about AI + research

Prediction

Predictive data science is obscure for most people. How does a streaming service guess what to play next? Why does the search engine know where I vote? ...

A deeply opaque computational process anticipates and then shapes routine interpersonal, civic, community, financial, professional, and cultural experiences.

While many of us might blame the algorithm, only those with specialized knowledge know exactly what happens behind the scenes.

Washington, Anne L. Ethical Data Science: Prediction in the Public Interest. New York, NY: Oxford University Press, 2023.

Data & bias

- Using extremely large datasets like Common Crawl to train AI systems doesn't necessarily provide a meaningfully diverse range of viewpoints and ideas. (Consider who is more/less likely to participate online.)

- Bias present in the training data influences output

Environmental and financial cost

...proposed AI solutions can also exploit our cognitive limitations, making us vulnerable to illusions of understanding in which we believe we understand more about the world than we actually do

...vulnerable to an illusion of objectivity, in which they falsely believe that AI tools do not have a standpoint...whereas AI tools actually embed the standpoints of their training data and their developers.

- Output from predictive models can reflect the biases and patterns of the data the system was trained on

- So-called "hallucinations" (statements that seem correct, but aren't) and "hallu-citations" (citations to non-existent research)

- Differentiating between accurate and erroneous output sometimes requires domain-relevant expertise and careful attention

- Particular challenges for research in the humanities and humanistic social sciences (like political theory), even with AI tools that provide citations to peer-reviewed research:

- Most draw citations from the science-heavy Semantic Scholar database

- Emphasize journal articles and ignore books (important in humanities research)

- Goals for the task vs. strengths and weaknesses of the tools

- General summary versus nuanced, contextually relevant analysis

- Consider the purpose - product? process?

Generative AI and research:

some key considerations

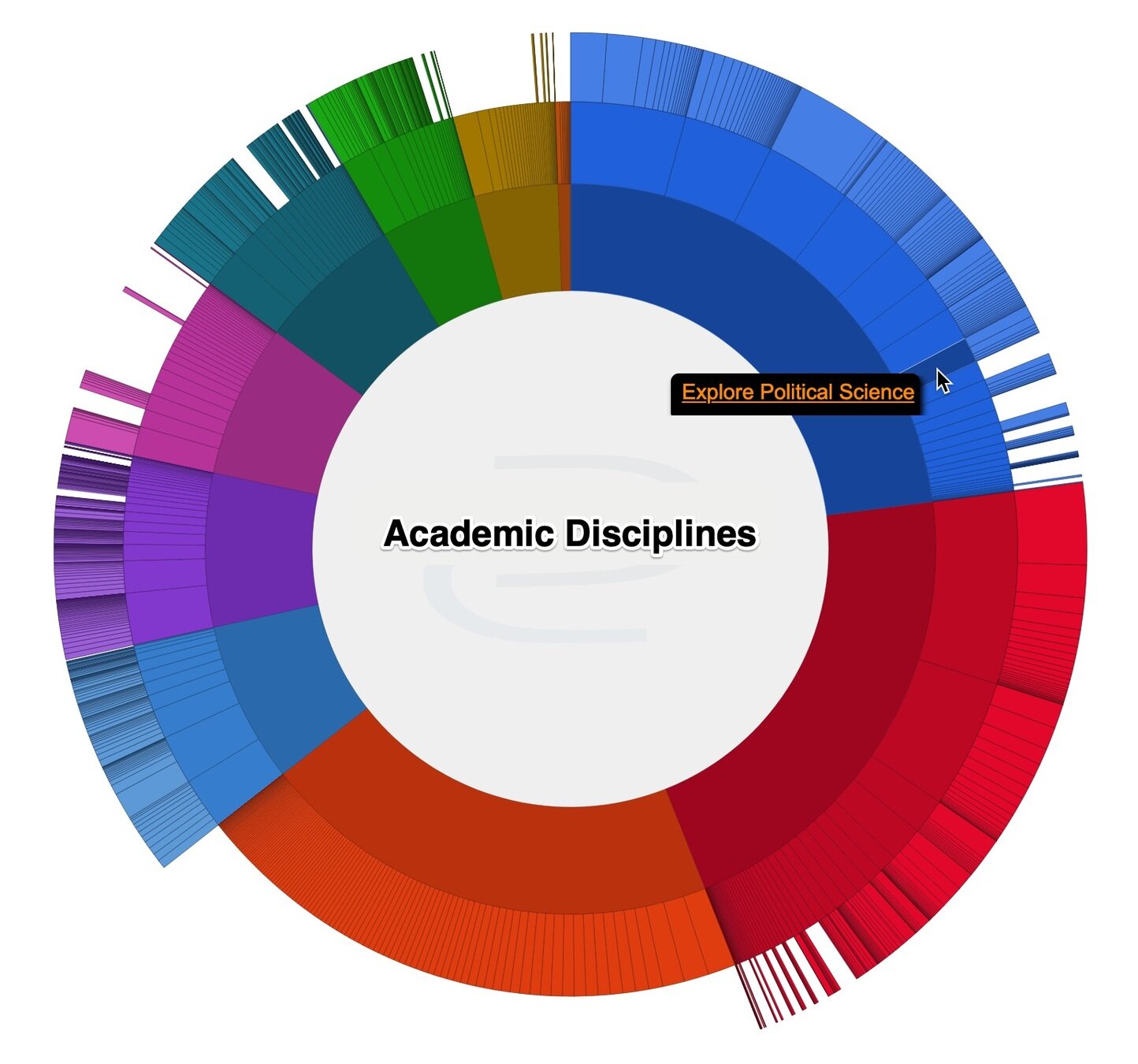

Let's try comparing two data sources. Search for utilitarianism in:

- Semantic Scholar

- OpenAlex

Develop your research skills

& get support with your projects

Meet with Simon

Schedule at bit.ly/selichk1

Email them at selichk1@swarthmore.edu

(including if you need to meet at a different time)

Use the chat in Tripod to get help from librarians and Research & Information Associates (RIAs).

You can also email librarian@swarthmore.edu

POLS 033

By Swarthmore Reference
