t-tests and ANOVA as regressions

PSY 716

"Your model is a special case of mine" is the greatest insult in all of mathematics.

-unknown

Case 1: Independent-samples t-tests, ANOVAs, and ANCOVAs

$$ Y_i = \beta_0 + \beta_1 X_i + \epsilon_i $$

where:

- \(Y_i\) is the dependent variable

- \(X_i\) is the independent variable (group membership or continuous covariate)

- \(\beta_0\) is the intercept

- \(\beta_1\) is the regression coefficient

- \(\epsilon_i\) is the error term
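To make the equivalence concrete, here is a minimal sketch in plain Python (standard library only). It codes group membership as \(X_i = 0\) or \(1\) and checks that the regression slope's t statistic matches the classic equal-variance independent-samples t. The data and the helper names `pooled_t` and `simple_ols` are made up for illustration.

```python
import math
from statistics import mean, variance

# Hypothetical scores for two independent groups (illustrative data only).
group_a = [4.1, 5.0, 3.8, 4.6, 5.2]
group_b = [5.9, 6.3, 5.1, 6.8, 6.0, 5.5]

def pooled_t(a, b):
    """Equal-variance independent-samples t statistic (mean of b minus mean of a)."""
    n1, n2 = len(a), len(b)
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    return (mean(b) - mean(a)) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))

def simple_ols(x, y):
    """Least-squares fit of y = b0 + b1 * x; returns (b0, b1, t statistic for b1)."""
    n = len(x)
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    b0 = y_bar - b1 * x_bar
    sse = sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y))
    se_b1 = math.sqrt(sse / (n - 2) / sxx)
    return b0, b1, b1 / se_b1

# Dummy-code group membership: 0 = group A (reference), 1 = group B.
x = [0.0] * len(group_a) + [1.0] * len(group_b)
y = group_a + group_b

b0, b1, t_regression = simple_ols(x, y)
t_classic = pooled_t(group_a, group_b)

print("intercept (mean of group A):", b0)
print("slope (mean difference):", b1)
print("t from regression:", t_regression)
print("t from pooled t-test:", t_classic)
```

With this coding, \(\beta_0\) is the reference group's mean and \(\beta_1\) is the difference between the two group means, so the two t statistics agree up to floating-point error.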

Case 1: Independent-samples t-tests, ANOVAs, and ANCOVAs

where:

- \(Y_i\) is the dependent variable

- \(X_{i1}\) is an independent variable

- \(X_{i2} ... X_{i5}\) are dummy codes for another independent variable

- \(\beta_0\) is the intercept

- \(\beta_1\) is the regression coefficient

- \(\epsilon_i\) is the error term

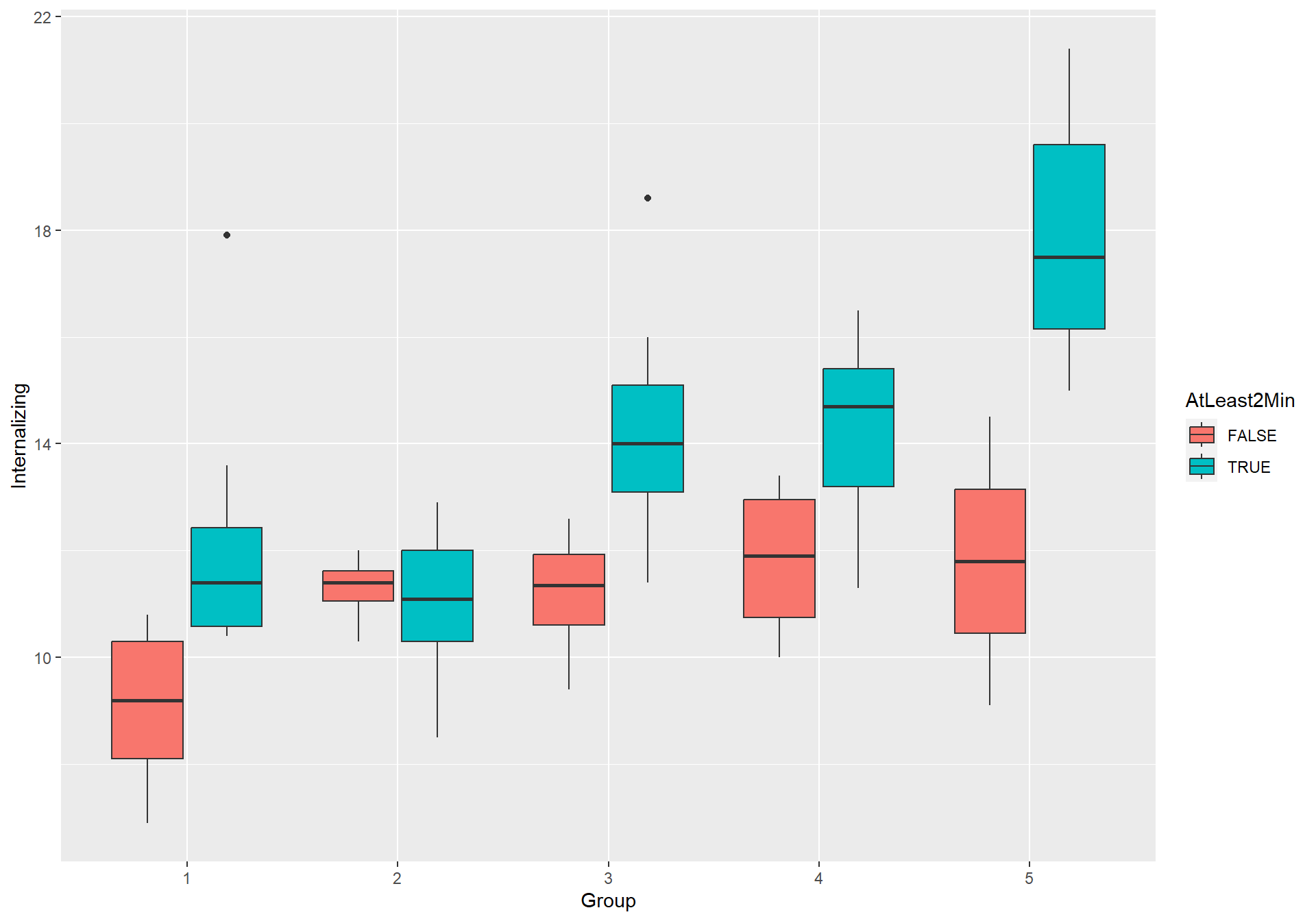

$$ Y_i = \beta_0 + \beta_1 x_{i1} + $$

$$ \beta_2 x_{i2} + \beta_3 x_{i3} + \beta_4 x_{i4} + \beta_5 x_{i5} + $$

$$ \beta_6 x_{i1}x_{i2} + \beta_7 x_{i1}x_{i3} + \beta_8 x_{i1}x_{i4} + \beta_9 x_{i1}x_{i5} + \epsilon_i $$
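As a rough sketch of what the ten-term model above looks like for a single observation, the plain-Python snippet below builds one row of the design matrix. It assumes the four dummy codes come from a five-level factor whose level 0 is the reference group. The function names `design_row` and `predict`, and the coefficient values, are hypothetical.

```python
def design_row(x1, level, n_levels=5):
    """Build [1, x1, d2..d5, x1*d2..x1*d5] for one observation.

    `level` is an integer from 0 to n_levels - 1; level 0 is the
    reference group and gets all-zero dummy codes (an assumption here).
    """
    dummies = [1.0 if level == k else 0.0 for k in range(1, n_levels)]
    interactions = [x1 * d for d in dummies]
    return [1.0, x1] + dummies + interactions

def predict(row, betas):
    """Linear predictor: sum of beta_j times the j-th design column."""
    return sum(b * v for b, v in zip(betas, row))

# Hypothetical coefficients beta_0 .. beta_9 (illustrative values only).
betas = [10.0, 0.5, 1.0, 2.0, 3.0, 4.0, 0.1, 0.2, 0.3, 0.4]

reference_row = design_row(2.0, level=0)
level2_row = design_row(2.0, level=2)

print(reference_row, predict(reference_row, betas))
print(level2_row, predict(level2_row, betas))
```

For an observation at level 2, only \(X_{i3}\) and \(X_{i1}X_{i3}\) are switched on, so its intercept is \(\beta_0 + \beta_3\) and its slope on \(X_{i1}\) is \(\beta_1 + \beta_7\). Each dummy shifts the intercept and each interaction term shifts the slope relative to the reference group.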

Why consider these as linear models?

- Unified framework for understanding the techniques

- There's something kind of cool about this, right?

- Much greater flexibility

- Continuous predictors of all types

- Different types of relationships among variables

- particularly in the case of MANOVA

- Ability to handle unbalanced designs and missing data

- Consider repeated-measures ANOVA -- what if someone misses one condition?

Why not consider these as linear models?

Traditional (non-regression) implementations of these techniques often include built-in adjustments for violated assumptions

- Example: Welch's t-test

- Adjusts for unequal variances (heteroscedasticity) between groups

- The ordinary linear regression formulation assumes equal error variance and does not inherently account for this

- Other examples include corrections for sphericity in repeated-measures ANOVA

Reason 1: Special Adjustments for Violations of Assumptions

Why not consider these as linear models?

$$ t = \frac{\bar{X}_1 - \bar{X}_2}{\sqrt{\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}}} $$

where:

- \(\bar{X}_1\) and \(\bar{X}_2\) are the sample means

- \(s_1^2\) and \(s_2^2\) are the sample variances

- \(n_1\) and \(n_2\) are the sample sizes of each group
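The statistic above can be sketched in a few lines of plain Python. The degrees of freedom use the Welch-Satterthwaite approximation, which the slide does not show and is included here as a standard companion to the formula. The function name `welch_t` and the data are made up for illustration.

```python
import math
from statistics import mean, variance

def welch_t(a, b):
    """Welch's t statistic and its Welch-Satterthwaite degrees of freedom."""
    n1, n2 = len(a), len(b)
    v1 = variance(a) / n1  # s_1^2 / n_1
    v2 = variance(b) / n2  # s_2^2 / n_2
    t = (mean(a) - mean(b)) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df

# Hypothetical groups with very different spreads and sizes.
tight = [5.0, 5.2, 4.9, 5.1, 5.0, 4.8, 5.3, 5.1]
spread = [3.0, 8.5, 6.1, 9.7]

t_w, df_w = welch_t(tight, spread)
print("Welch t:", t_w)
print("Welch df:", df_w, "versus pooled df:", len(tight) + len(spread) - 2)
```

The approximate degrees of freedom always fall between \(\min(n_1, n_2) - 1\) and \(n_1 + n_2 - 2\). The more unequal the variances, the further they drop below the pooled value, which is the adjustment a plain dummy-coded regression does not make.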

Reason 1: Special Adjustments for Violations of Assumptions

Why not consider these as linear models?

- Traditional techniques like ANOVA and MANOVA guard against multiple comparisons by starting from a single omnibus test

- Example: ANOVA F-test compares all groups simultaneously

- Linear regression formulation requires multiple dummy codes

- Each dummy code represents a separate comparison

- This increases the risk of Type I error (false positives) if not adjusted

- Of course, you could adjust for it! But corrections like Bonferroni or Tukey's HSD are more intuitive in traditional techniques
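A back-of-the-envelope sketch of why unadjusted dummy-code tests inflate Type I error: if \(k\) tests were independent, the chance of at least one false positive would be \(1 - (1 - \alpha)^k\). Dummy codes that share a reference group are not independent, so treat this as an approximation. The function names are hypothetical.

```python
def familywise_error(alpha, k):
    """Chance of at least one false positive across k independent tests."""
    return 1 - (1 - alpha) ** k

def bonferroni_alpha(alpha, k):
    """Per-test alpha under a Bonferroni correction."""
    return alpha / k

alpha = 0.05
for k in (1, 2, 4, 9):
    adjusted = bonferroni_alpha(alpha, k)
    print(
        k,
        "tests: unadjusted familywise rate =", round(familywise_error(alpha, k), 4),
        "| Bonferroni per-test alpha =", round(adjusted, 4),
        "| adjusted familywise rate =", round(familywise_error(adjusted, k), 4),
    )
```

With four dummy codes each tested at .05, the familywise rate under independence is already about .19. Testing each at .05 / 4 brings it back under .05.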

Reason 2: Intuitive Adjustment for Multiple Comparisons

Why not consider these as linear models?

- There are some quantities that ANOVA-family analyses will give you automatically. Why struggle to get a linear regression to give you those?

- Omnibus effects in ANOVA

- Group means and differences between them

- Sums of squares and resultant effect sizes (e.g., partial \(\eta^2\))

- Question: what else?
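For reference, the quantities listed above are not hard to compute by hand in the one-way case. The sketch below uses plain Python and hypothetical data to get the sums of squares, the omnibus F, and \(\eta^2\), which equals partial \(\eta^2\) when there is only one factor. The function name `one_way_anova` and the group labels are made up.

```python
from statistics import mean

# Hypothetical scores for three groups (illustrative data only).
groups = {
    "control": [4.0, 5.0, 6.0],
    "low": [6.0, 7.0, 8.0],
    "high": [9.0, 10.0, 11.0],
}

def one_way_anova(groups):
    """Sums of squares, omnibus F, and eta squared for a one-way design."""
    all_scores = [y for scores in groups.values() for y in scores]
    grand_mean = mean(all_scores)
    ss_between = sum(
        len(scores) * (mean(scores) - grand_mean) ** 2 for scores in groups.values()
    )
    ss_within = sum(
        (y - mean(scores)) ** 2 for scores in groups.values() for y in scores
    )
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_sq = ss_between / (ss_between + ss_within)
    return {
        "ss_between": ss_between,
        "ss_within": ss_within,
        "df": (df_between, df_within),
        "F": f_stat,
        "eta_sq": eta_sq,
    }

result = one_way_anova(groups)
print(result)
```

In a dummy-coded regression on the same data, `ss_between` is the regression sum of squares, `ss_within` is the residual sum of squares, and `eta_sq` is the model \(R^2\). The numbers are all there, but an ANOVA routine reports them under these names without extra work.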

Reason 3: Sometimes it just doesn't make sense for the research question you are answering.

Why not consider these as linear models?

- Huge number of researcher degrees of freedom entailed in the regression-based approach.

- What should your reference group be?

- What do you do if only one of your dummy codes is significant?

- What do you do if you're basically agnostic to the nature of multivariate differences between groups?

- Maybe we should do MANOVA before multigroup SEM
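The reference-group question can be illustrated with a small sketch. In a one-way dummy-coded regression, the least-squares intercept is the reference group's mean and each dummy coefficient is that group's mean minus the reference mean. The plain-Python snippet below, with hypothetical data and function names, shows how the coefficients change with the reference group while the fitted group means do not.

```python
from statistics import mean

# Hypothetical scores for three groups (illustrative data only).
groups = {
    "control": [4.0, 5.0, 6.0],
    "low": [6.0, 7.0, 8.0],
    "high": [9.0, 10.0, 11.0],
}

def dummy_coefficients(groups, reference):
    """Least-squares coefficients of a one-way dummy-coded regression."""
    ref_mean = mean(groups[reference])
    coefs = {"intercept": ref_mean}
    for name, scores in groups.items():
        if name != reference:
            coefs[name] = mean(scores) - ref_mean
    return coefs

def fitted_mean(coefs, group):
    """Model-implied mean for a group (the reference has no dummy term)."""
    return coefs["intercept"] + coefs.get(group, 0.0)

coefs_vs_control = dummy_coefficients(groups, reference="control")
coefs_vs_low = dummy_coefficients(groups, reference="low")

print("reference = control:", coefs_vs_control)
print("reference = low:", coefs_vs_low)
for name in groups:
    print(name, fitted_mean(coefs_vs_control, name), fitted_mean(coefs_vs_low, name))
```

The model fit is identical either way, but which individual coefficients look "significant" depends on which comparisons the chosen reference group happens to expose.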

Reason 3: Sometimes it just doesn't make sense for the research question you are answering.

Recommendations

- Running a regression and then an ANOVA on top of it is sometimes a good strategy

- ...particularly in the case in which the model is more naturally parameterized as a regression, such as a model with continuous covariates

- Be conservative

- ...which often means sticking to methods that naturally adjust for multiple comparisons.

- (i.e., ANOVAs rather than a dummy-coded regression)

- Most of all, be wary of results that hold up under one strategy but not the other.

By Veronica Cole
