🤖

💭

👨🏻‍💻

Vibe Coding

AI Augmented Development

How did we get here?

Context is 👑

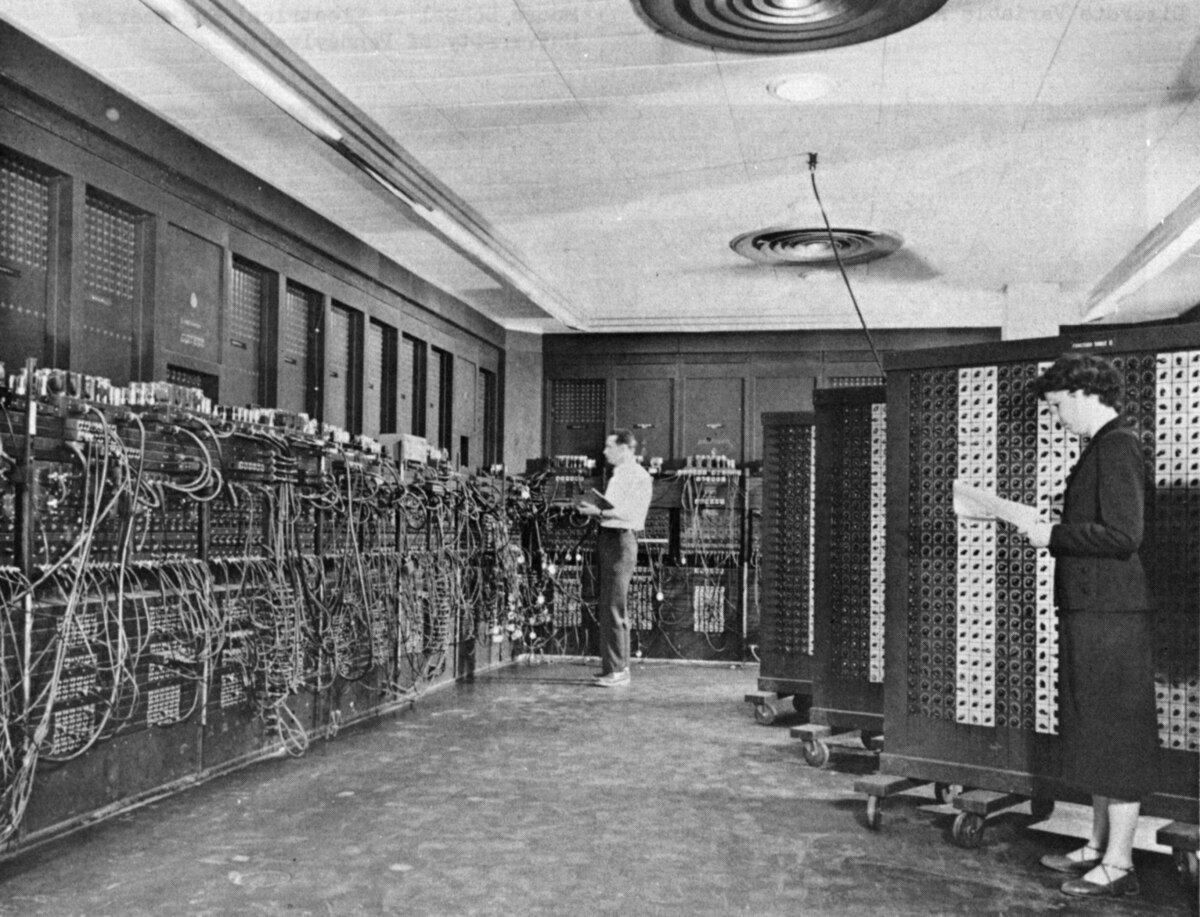

AI had been around for a while...

AI has existed in universities and big tech organizations for many years.

A friend of mine studied neural networks at the Technion about 20 years ago…

Deep Blue beat Garry Kasparov in a chess match in 1997

IBM Watson, Siri & Alexa are old news...

Interactive timeline from 2015 to 2025

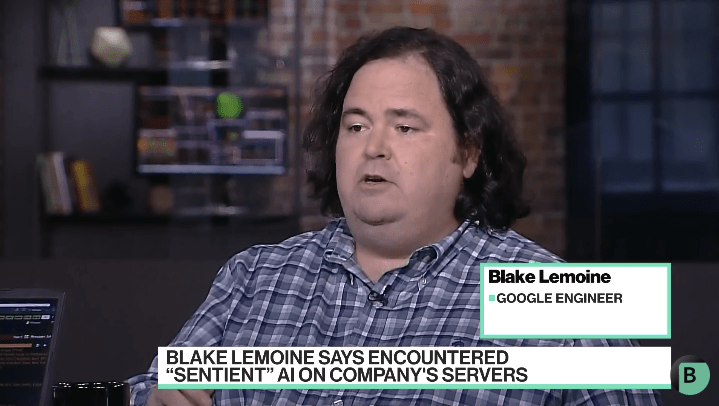

A famous interview

It was a futuristic, geeky gimmick.

It did not significantly impact our lives.

The machine is sentient!

In 2022, Google fired Blake Lemoine, one of its AI engineers, for violating its employee confidentiality and data security policies after he publicly claimed that its LaMDA artificial intelligence was sentient.

Later that year, we had the GPT moment…

GPT moment!

In late November 2022, OpenAI made ChatGPT available to the public for free!

It reached 1 million users within just 5 days of its launch!

100 million monthly users in 2 months!

476 million monthly users in December 2024

1 billion monthly users by October 2025

But ChatGPT was just the front end!

While ChatGPT was offered for free to the general public, OpenAI also offered its AI models via a paid API platform.

The web exploded with AI services as a result.

Multi-Domain Use cases of AI models

Generative AI

Text & Language Generation

- Conversational AI (Chatbots, Assistants)

- Content creation (emails, stories, summaries)

- Code generation

- Translation & grammar correction

Image Generation

- Creating & editing images with Midjourney, DALL·E, GPT...

Video Generation

- Video from text or images, Deepfakes & Avatars, Auto-edits, Animation

Audio Generation

- Text-to-speech (TTS), AI voice cloning, Music generation

Perception AI (Understanding the real world)

- Vision (object recognition, tracking), speech recognition, sensor fusion (e.g., for robotics and drones)

Predictive / Analytical AI

- Fraud detection, forecasting, diagnostics, recommendations, etc.

Tech Giants had to join in

Google was forced to change its strategy and integrate AI into its search results to stay relevant.

Google also made its own models available via an API, alongside public tools such as Gemini and NotebookLM.

Microsoft, Amazon, Meta, Apple, and X have integrated AI models into their services, and several of them also offer these models as cloud services.

New players like Anthropic and Mistral have emerged.

The Open-Source Ecosystem

Open source has grown significantly as well.

Hugging Face hosts over 1M models, many of them smaller, customized models that are free to download or to use via its API.

Tools like Ollama and LM Studio make it easy to run models on your own infrastructure (on-prem), while OpenRouter offers a single API for accessing many hosted models.

Running models locally requires strong infrastructure.
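Ollama, for example, serves local models over an HTTP API (by default at `http://localhost:11434`). A minimal standard-library sketch, assuming Ollama is installed and running and that a model named `llama3.2` has been pulled (the model name is only an example):

```python
import json
import urllib.request

# Default address of a local Ollama server (assumes the default port 11434).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local model and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # With "stream": False, Ollama replies with a single JSON object whose
    # "response" field holds the full generated text.
    return body["response"]
```

Once the server is up, `generate("llama3.2", "Explain what a context window is in one sentence.")` returns the model's answer as a string. Setting `"stream": False` trades token-by-token output for one simpler JSON response; no data leaves the machine.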

Why can't I use GPT or Claude for everything?

Do you just want to use the model or include it in your product?

Is the LLM intended for general use?

Or will it need to be custom-tailored for a specific use case?

Can you use hosted LLMs over the network?

Or is "on-prem" a requirement?

Some leading questions to help you choose a model that fits your needs

Does the model need reasoning or other specific capabilities?

Is the budget a consideration?

You may want to optimize for a faster customized model

Why do we need so many models?

LLM categories

General-Purpose / Fast Models

Reasoning Models

Deep Search / Agentic Models

LLMs differ in architecture, training methods, and intended purposes.

They vary in speed, reasoning, and deep search capabilities.

1. General-Purpose / Fast Models

Speed: High speed and low latency are primary goals, achieved through smaller model sizes or specific optimizations.

Reasoning: Basic; they may struggle with complex, multi-step logical problems without specific prompting (such as Chain-of-Thought).

Deep Search: Generally do not have built-in deep search capabilities and rely solely on their internal training data.

These models are optimized for quick response times and cost-efficiency, making them suitable for everyday tasks like simple question-answering and content generation.

2. Reasoning Models

Speed: Slower than general-purpose models, as they often generate intermediate "thought" or "thinking" steps (Chain-of-Thought) to break down problems.

Reasoning: Highly capable in complex areas like mathematical proofs, scientific reasoning, logic puzzles, and debugging code, which they handle by systematically working through the problem.

Deep Search: Reasoning capabilities can be combined with search tools, but the core focus is on the logical processing of information, not information retrieval itself.

These models are engineered to excel at complex, multi-step reasoning tasks, often using sophisticated training techniques and architectural designs.

3. Deep Search / Agentic Models

Speed: Slower, as they operate with a higher degree of autonomy, performing multiple steps over an extended period (minutes rather than seconds) to complete a comprehensive research task.

Reasoning: Utilize strong reasoning capabilities to comprehend user intentions, dynamically plan multi-turn retrieval, and synthesize information from various sources.

Deep Search: Involves extensive use of tools, like web browsing, data analysis, and code execution, to perform deep information mining and produce comprehensive reports.

These are advanced systems, often referred to as "search agents," that are designed for autonomous and in-depth information seeking and synthesis across multiple sources, typically the web.
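Under the hood, an agentic system runs a loop: the model plans a step, calls a tool, observes the result, and repeats until it can answer. A toy sketch of that loop, in which `stub_planner` is a hypothetical stand-in for the LLM and `search` looks up a small hard-coded dictionary (`FAKE_INDEX`, also hypothetical) instead of the web:

```python
from __future__ import annotations

from typing import Callable

# Hypothetical knowledge source standing in for real web search results.
FAKE_INDEX = {
    "chatgpt launch": "ChatGPT was released to the public in November 2022.",
}


def search(query: str) -> str:
    """Hypothetical search tool: look the query up in FAKE_INDEX."""
    return FAKE_INDEX.get(query.lower(), "no results")


# The tools the agent may call, by name.
TOOLS: dict[str, Callable[[str], str]] = {"search": search}


def stub_planner(goal: str, observations: list[str]) -> tuple[str, str]:
    """Hypothetical stand-in for the LLM's planning step.

    Returns (action, argument): it searches once for the goal, then
    finishes with the latest observation as its answer.
    """
    if not observations:
        return "search", goal
    return "finish", observations[-1]


def run_agent(goal: str, max_steps: int = 5) -> str:
    """Plan, act with a tool, observe, and repeat until done or out of steps."""
    observations: list[str] = []
    for _ in range(max_steps):
        action, argument = stub_planner(goal, observations)
        if action == "finish":
            return argument
        observations.append(TOOLS[action](argument))  # act, then observe
    return "stopped: step limit reached"
```

In a real agent the planner is the model itself, choosing among tools such as web browsing or code execution over many more steps, and the step limit guards against loops that never converge.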

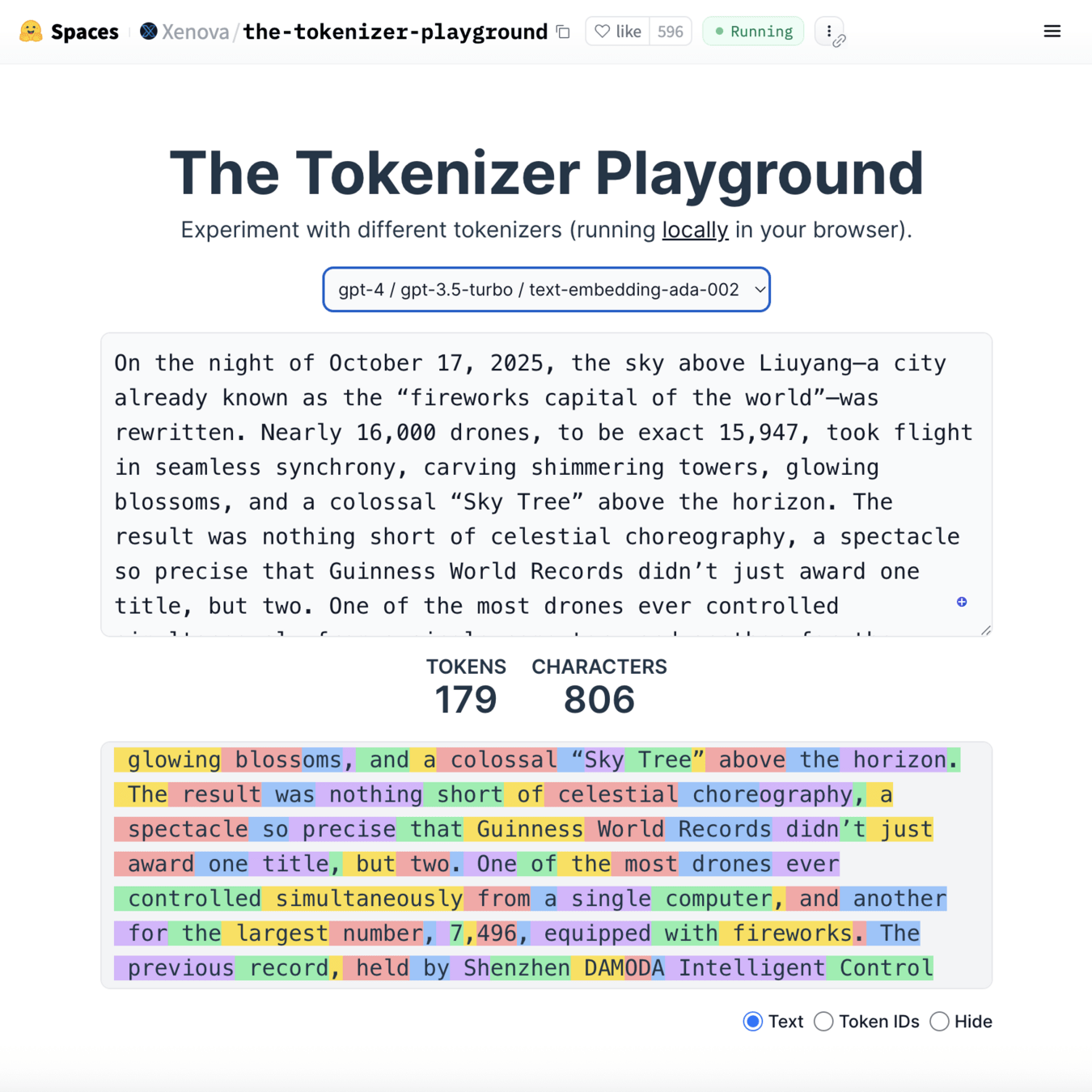

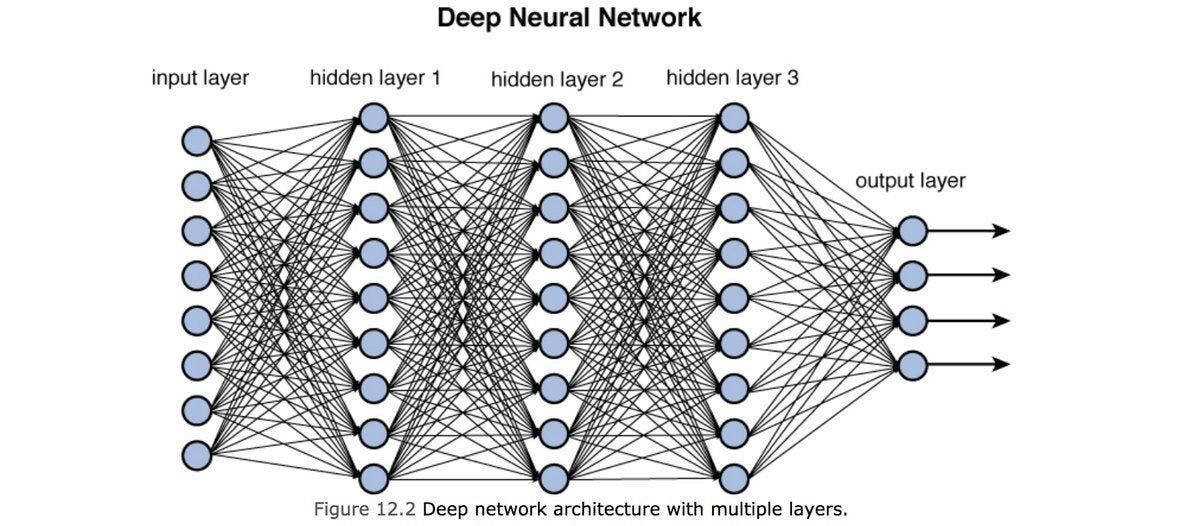

How LLMs process text

They operate on statistical probabilities.

While they may appear to understand, this is merely pattern matching, not human-like cognition.

LLMs do not "understand" text like humans do

Tokenization: converts text into tokens, which can be words, parts of words, or characters.

Embeddings: numerical representations that capture the meaning and context of these tokens.

Pattern recognition: the model's ability to find statistical relationships and structures within this numerical data.

Prediction: how the model determines the most statistically probable next token (a word or part of a word) to generate coherent and relevant text.
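To make these steps concrete, here is a deliberately tiny Python toy. It is not how an LLM is built: it uses a word-level tokenizer and bigram counts in place of learned subword tokens, embeddings, and a neural network. It does, however, show the same pipeline of turning text into token ids, extracting statistical patterns, and predicting a probable next token.

```python
from __future__ import annotations

import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Toy tokenizer: lowercase words and punctuation marks become tokens.
    Real LLMs use learned subword tokenizers (e.g. BPE) instead."""
    return re.findall(r"[a-z']+|[.,!?]", text.lower())


def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Assign each distinct token an integer id, the form a model consumes."""
    return {tok: i for i, tok in enumerate(sorted(set(tokens)))}


def count_bigrams(tokens: list[str]) -> dict[str, Counter]:
    """'Pattern recognition' in miniature: count which token follows which."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows


def next_token_probs(follows: dict[str, Counter], token: str) -> dict[str, float]:
    """Turn the follow-up counts for `token` into probabilities."""
    counts = follows.get(token, Counter())
    total = sum(counts.values())
    return {tok: n / total for tok, n in counts.items()} if total else {}


def predict_next(follows: dict[str, Counter], token: str) -> str | None:
    """Greedy decoding: return the most probable next token, or None if unseen."""
    probs = next_token_probs(follows, token)
    return max(probs, key=probs.get) if probs else None


corpus = "the cat sat on the mat. the cat ate. the dog sat."
tokens = tokenize(corpus)             # ['the', 'cat', 'sat', ...]
vocab = build_vocab(tokens)           # token -> integer id
ids = [vocab[tok] for tok in tokens]  # the text as a sequence of ids
follows = count_bigrams(tokens)
```

Here `predict_next(follows, "the")` returns `"cat"`, because "cat" follows "the" in 2 of its 4 occurrences. An LLM makes the same kind of prediction, but over tens of thousands of subword tokens, with probabilities computed by a neural network from the whole preceding context, and it often samples from the distribution instead of always taking the top token.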

Known issues when working with LLMs

Training Data Limitations (a.k.a. data cutoff)

Hallucination and Accuracy

Context Window Constraints

Reasoning Limitations

Inconsistency

Domain-Specific Limitations
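Context window constraints are one reason long inputs are often split into pieces before they are sent to a model. A minimal sketch of overlapping chunking, assuming that whitespace-separated words are a good-enough stand-in for tokens (real tokenizers usually produce more tokens than words, so leave headroom):

```python
def chunk_words(text: str, max_tokens: int, overlap: int = 0) -> list[str]:
    """Split text into chunks of at most `max_tokens` words.

    Words approximate tokens here. Consecutive chunks share `overlap`
    words so that an idea cut at a chunk boundary still appears whole
    in at least one chunk.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be in [0, max_tokens)")
    words = text.split()
    step = max_tokens - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_tokens]))
        if start + max_tokens >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks
```

For example, `chunk_words("one two three four five six seven", max_tokens=3, overlap=1)` returns `["one two three", "three four five", "five six seven"]`. Each chunk can then be summarized or analyzed separately and the results combined.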

Some of the solutions

Web search tools, fact-checking tools

System prompts and prompt engineering

Condense information before analysis

Break complex problems into smaller, sequential steps

Implement Retrieval Augmented Generation (RAG) systems

Implement human-in-the-loop workflows for critical decisions

Fine-tune models on domain-specific data when possible
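Retrieval Augmented Generation (RAG) fetches relevant text and adds it to the prompt, so the model answers from supplied facts rather than from its training data alone. The sketch below is a simplification: production RAG systems typically rank documents by embedding similarity in a vector store, whereas this version swaps in plain word overlap so it runs with the standard library. Sending the finished prompt to a model is left out.

```python
import re


def words(text: str) -> set[str]:
    """Lowercase word set, used here as a crude stand-in for embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents sharing the most words with the question."""
    q = words(question)
    ranked = sorted(documents, key=lambda doc: len(q & words(doc)), reverse=True)
    # Drop documents with no overlap at all; they add noise, not context.
    return [doc for doc in ranked[:k] if q & words(doc)]


def build_prompt(question: str, documents: list[str], k: int = 2) -> str:
    """Augment the question with retrieved context before it reaches the model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
```

Given a small knowledge base such as a refund policy, an office-hours note, and a support page, `build_prompt("How many days do I have for returns?", docs)` puts only the refund policy into the context. The instruction to admit missing answers is a common prompt-engineering guard against hallucination.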

Vibe Coding

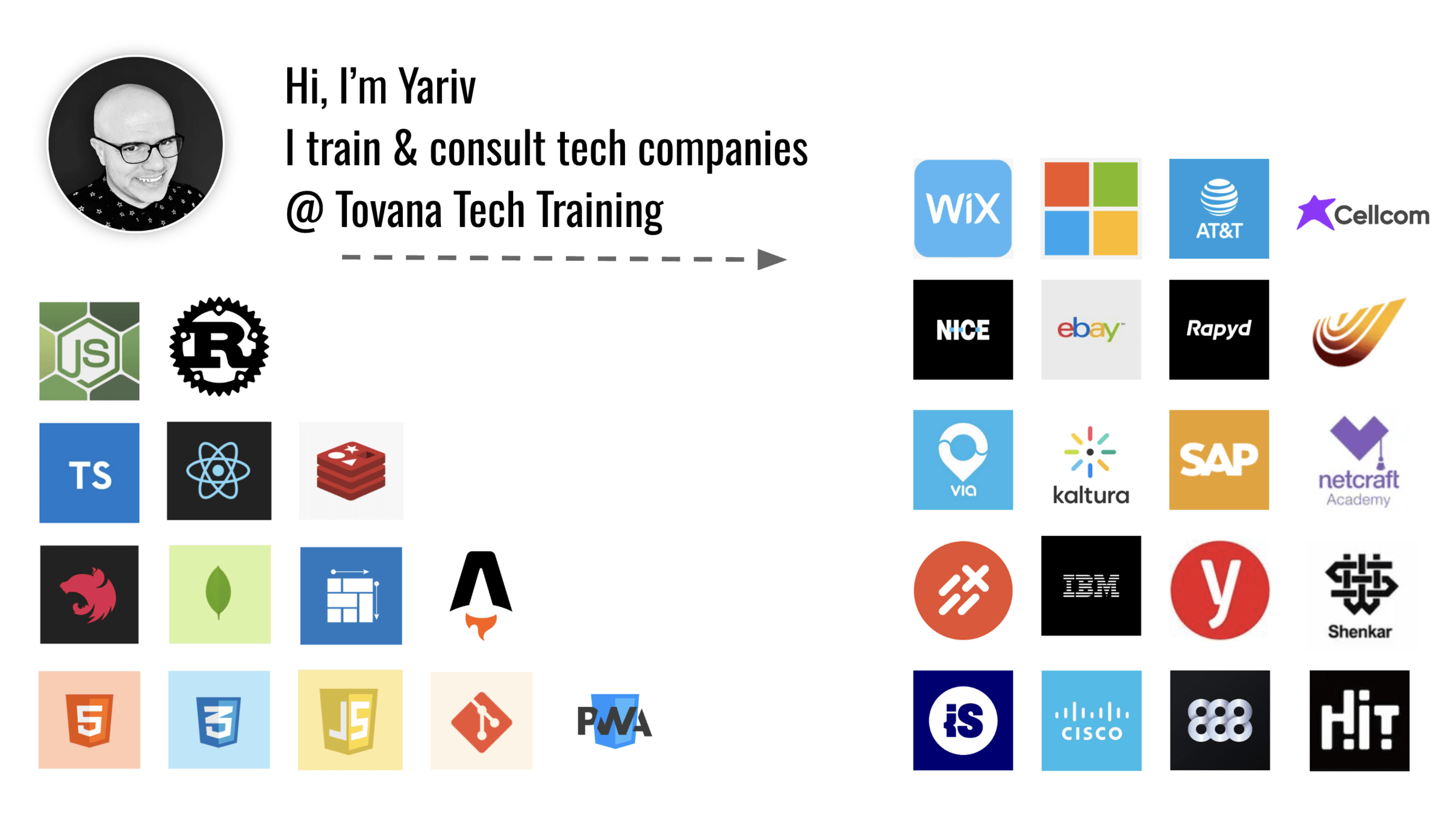

By Yariv Gilad
