Inspecting Vision Models

Ahcène Boubekki

UCPH/Pioneer

Samuel G Matthiesen

Sebastian Mair

DTU

Linköping

Motivation


Objective: Learning Causal Relationships

How do we do that for complex data?

Rios, F. L., Markham, A., & Solus, L. (2024). Scalable Structure Learning for Sparse Context-Specific Causal Systems. arXiv preprint arXiv:2402.07762.

What are the features?

Certainly not the pixels...

Motivation

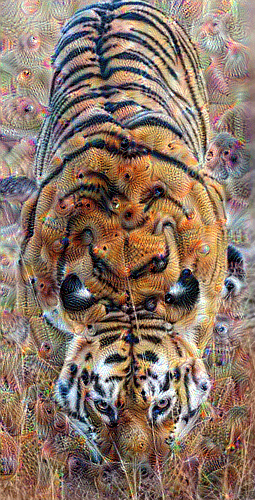

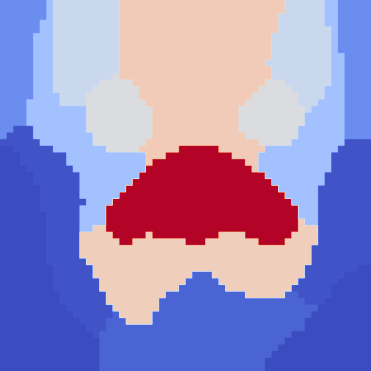

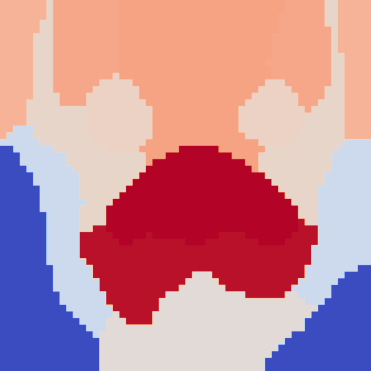

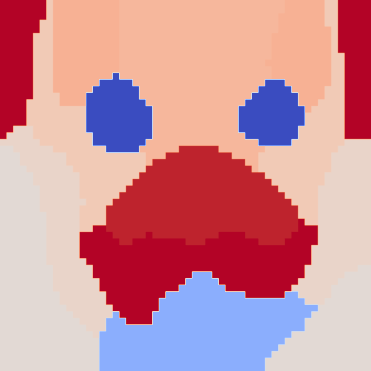

What did you see?

Where did you look?

Where were the eyes?

How many eyes were there?

How would you decompose this image?

Motivation

What are the features?

Certainly not the pixels...

Semantics?

Concepts?

How to see what a model sees?

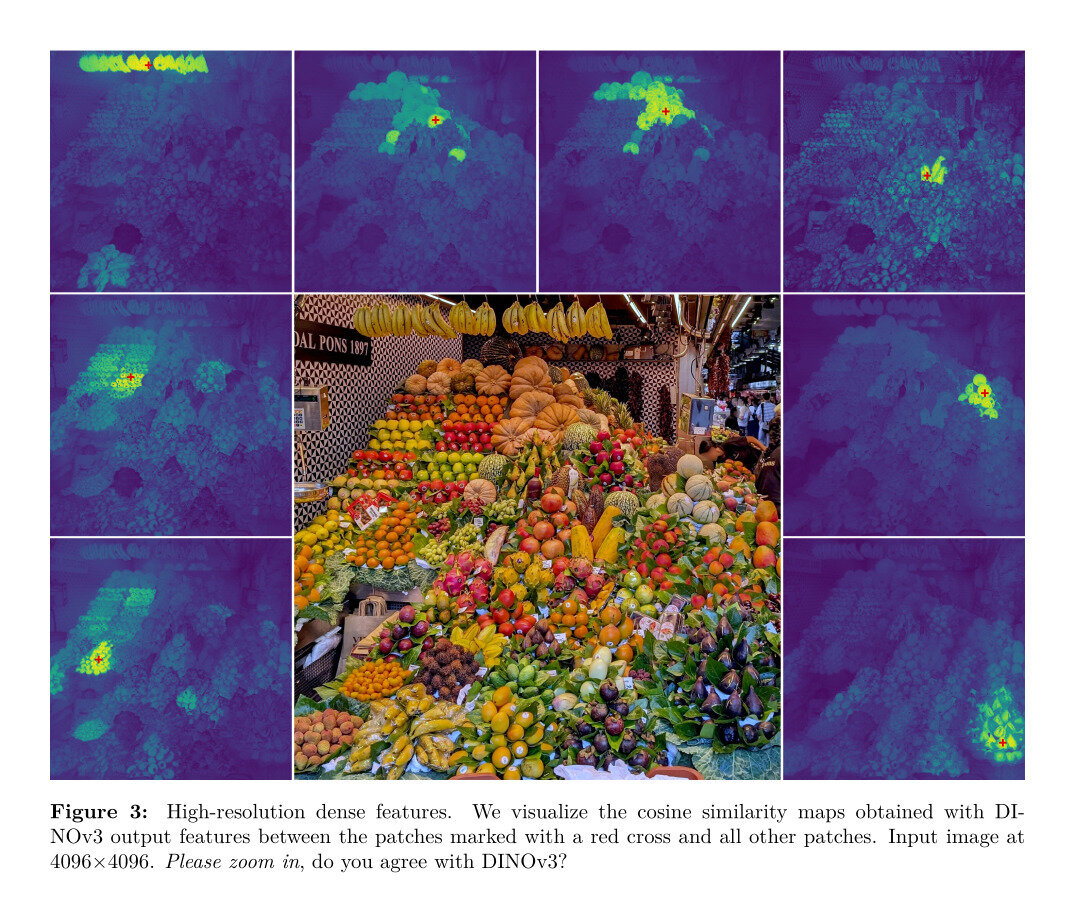

How to see what a model sees?

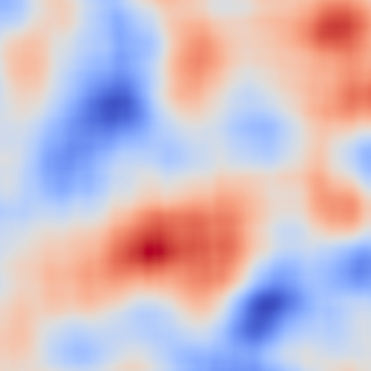

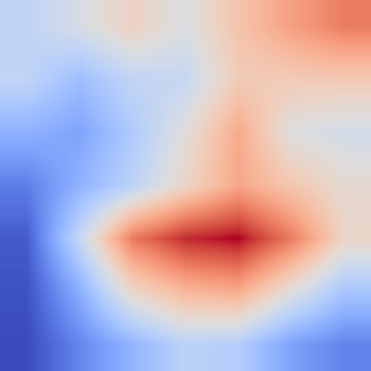

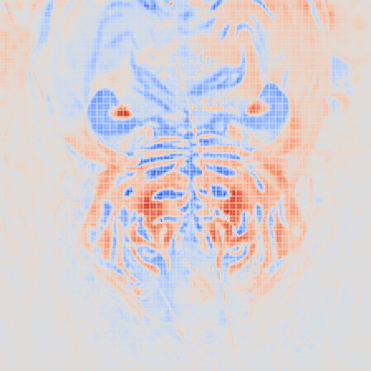

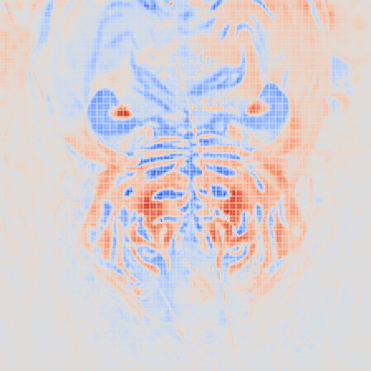

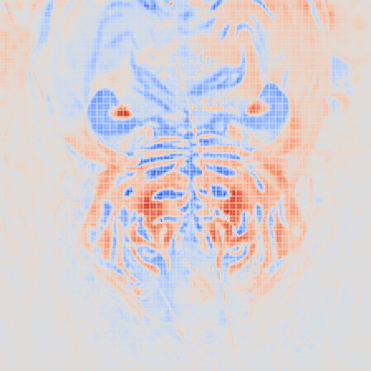

RISE, XRAI, GradCAM, LRP, IG

Dingo or Lion

These do not directly answer the question: what does the model see?

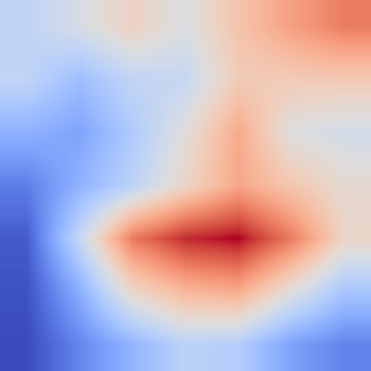

- Deep Dream? What makes it more a tiger than a tiger? Too slow, impossible to train, not really useful.

- Saliency Maps? What is important for the prediction? Inconsistent, difficult to read, objective unclear.

- Prototypes/Concepts? How is the neighborhood in the embedding? Inspection of the embedding, "biased" justification.

- Counterfactual? What should I change to change class? Tricky to compute, but nice!

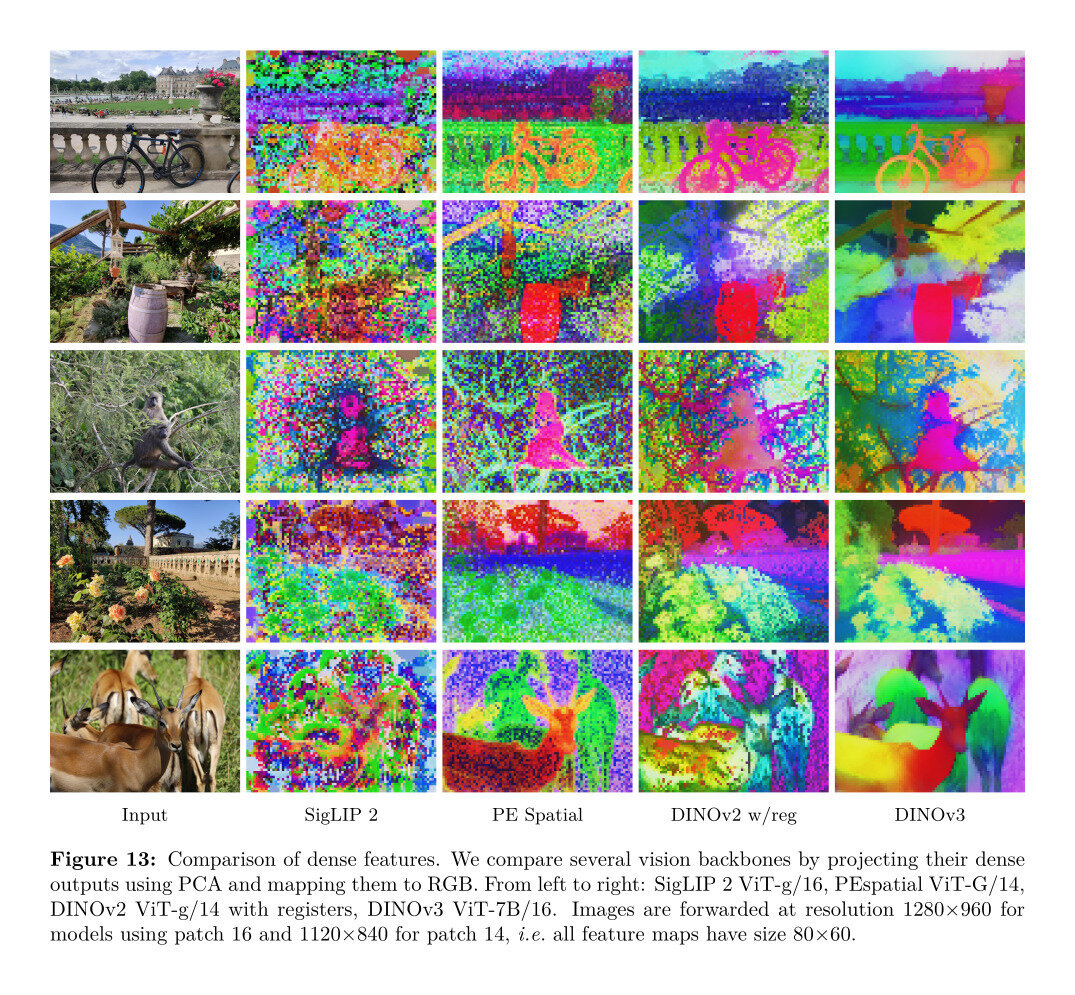

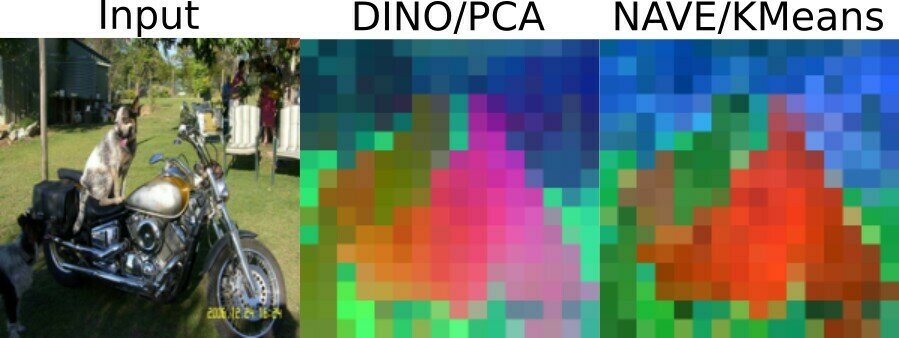

How to see what a model sees?

Standard Image Classifier

Encoder: convolutions, pooling, non-linearity, skip-connections, attention, etc.

Classifier: a single linear layer, possibly followed by a softmax
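To make the encoder/classifier split concrete, here is a minimal sketch of the classifier half only: one linear layer applied to an encoder embedding, optionally followed by a softmax. The function names (`linear_head`, `softmax`) and all numbers are illustrative assumptions, not taken from any model in the talk.

```python
import math

def linear_head(embedding, weights, bias):
    """Single linear layer: one logit per class (weights is classes x dims)."""
    return [sum(w * x for w, x in zip(row, embedding)) + b
            for row, b in zip(weights, bias)]

def softmax(logits):
    """Numerically stable softmax; optional, since it does not change the argmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Toy example: a 3-dimensional embedding and 2 classes (made-up numbers).
embedding = [1.0, 0.0, 2.0]
weights = [[0.5, 0.1, 0.0],   # class 0
           [0.0, 0.3, 1.0]]   # class 1
bias = [0.0, -0.5]

logits = linear_head(embedding, weights, bias)
probs = softmax(logits)
predicted = max(range(len(logits)), key=logits.__getitem__)
```

Because the head is linear, everything the model "sees" has to be encoded in the embedding already, which is why the rest of the talk looks at the encoder output.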

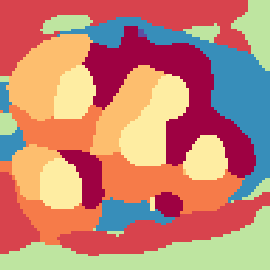

clustering

k=10

k=5
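The slides do not spell out the clustering procedure. Assuming it is k-means over the per-location feature vectors produced by the encoder, a minimal sketch could look like the following. The helper names (`kmeans`, `segment`), the farthest-point initialisation, and the toy feature map are assumptions made for illustration.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, iters=20):
    """Plain Lloyd's k-means with a deterministic farthest-point initialisation."""
    centers = [points[0]]
    while len(centers) < k:
        centers.append(max(points, key=lambda p: min(sq_dist(p, c) for c in centers)))
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: nearest center for each feature vector.
        labels = [min(range(k), key=lambda j: sq_dist(p, centers[j])) for p in points]
        # Update step: mean of the members (keep the old center if a cluster is empty).
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centers[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centers

def segment(feature_map, k):
    """Cluster an H x W grid of feature vectors and return an H x W label map."""
    w = len(feature_map[0])
    flat = [vec for row in feature_map for vec in row]
    labels, _ = kmeans(flat, k)
    return [labels[r * w:(r + 1) * w] for r in range(len(feature_map))]

# Toy 2 x 3 "feature map" with 2-dimensional features (made-up values).
feature_map = [
    [[0.0, 0.1], [0.1, 0.0], [5.0, 5.1]],
    [[0.0, 0.0], [5.1, 5.0], [5.0, 5.0]],
]
label_map = segment(feature_map, k=2)
```

Upsampling `label_map` to the input resolution and colouring each label gives a segmentation-like picture; changing `k` (10 versus 5 on the slide) changes how finely the image is decomposed.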

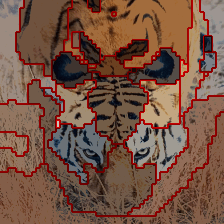

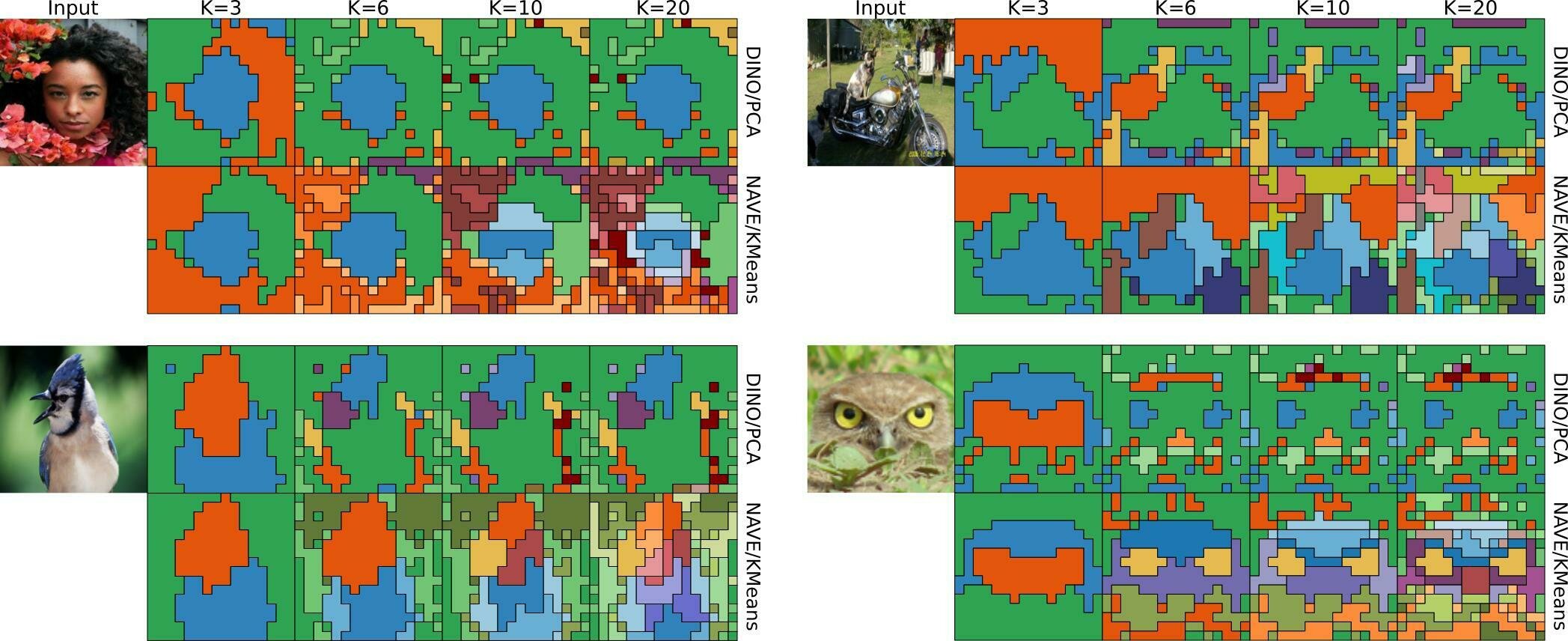

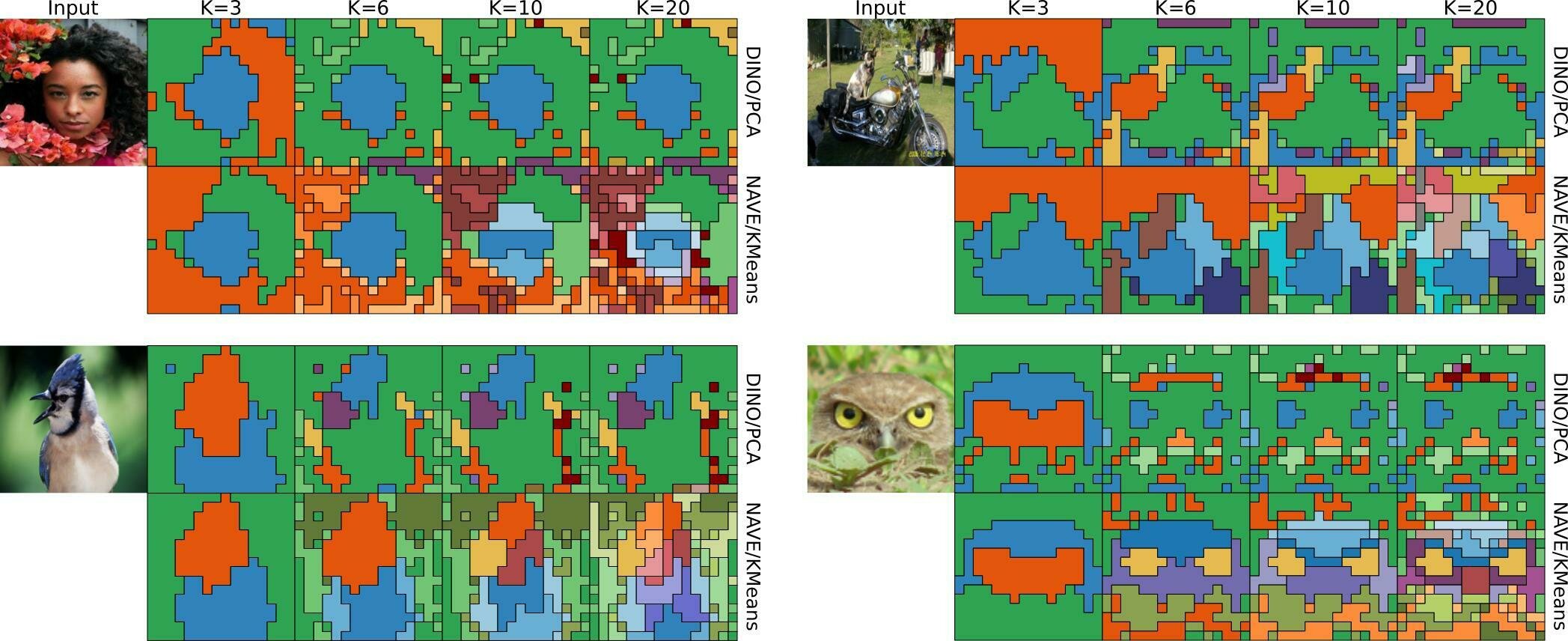

How to see what a model sees?

Seems like déjà vu?

One object at a time

Limited to 3 directions

For K=3, PCA and k-means are similar

K-means provides some hierarchy!

What can we "explain"?


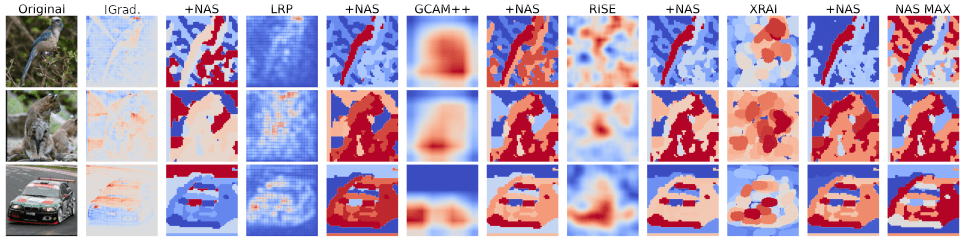

Explain explanations

IG

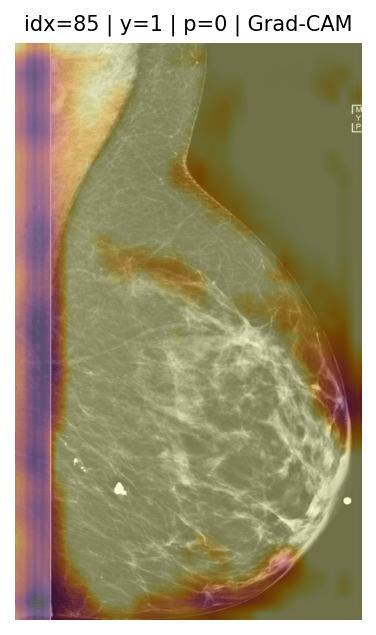

LRP

GradCAM

RISE

XRAI

color gradient ~ rank
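One reading of "color gradient ~ rank" is that the colour encodes the rank of each pixel's attribution rather than its raw value, which puts maps from different methods on a common scale. A minimal sketch under that assumption (the function name `rank_normalise` and the toy values are illustrative):

```python
def rank_normalise(saliency):
    """Replace each attribution score by its rank, scaled to [0, 1].

    Ties are broken by position, since Python's sort is stable.
    """
    flat = [v for row in saliency for v in row]
    order = sorted(range(len(flat)), key=flat.__getitem__)
    ranks = [0.0] * len(flat)
    denom = max(len(flat) - 1, 1)
    for rank, idx in enumerate(order):
        ranks[idx] = rank / denom
    w = len(saliency[0])
    return [ranks[r * w:(r + 1) * w] for r in range(len(saliency))]

# One extreme value dominates the raw scale but not the ranks.
saliency = [[0.01, 0.02],
            [100.0, 0.03]]
ranked = rank_normalise(saliency)
```

With raw values, the single score of 100 would wash out the other three pixels in a colour map; after ranking they are evenly spread over the gradient.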

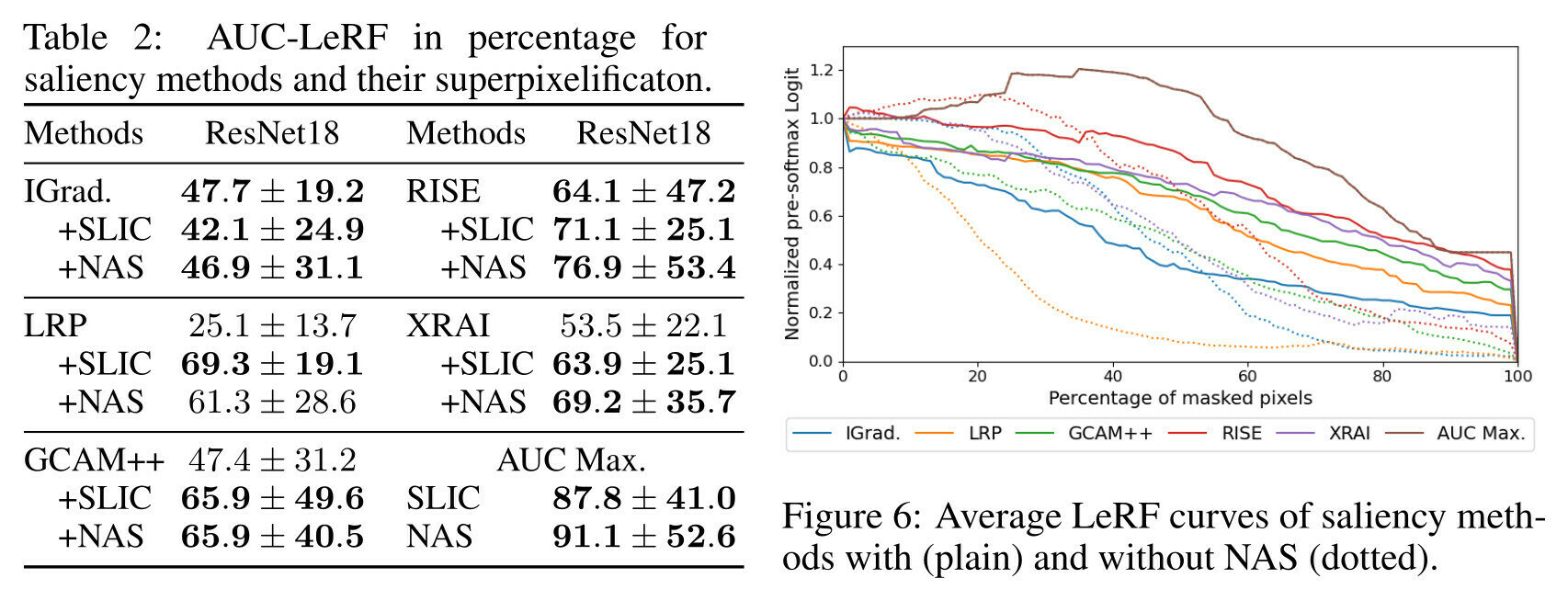

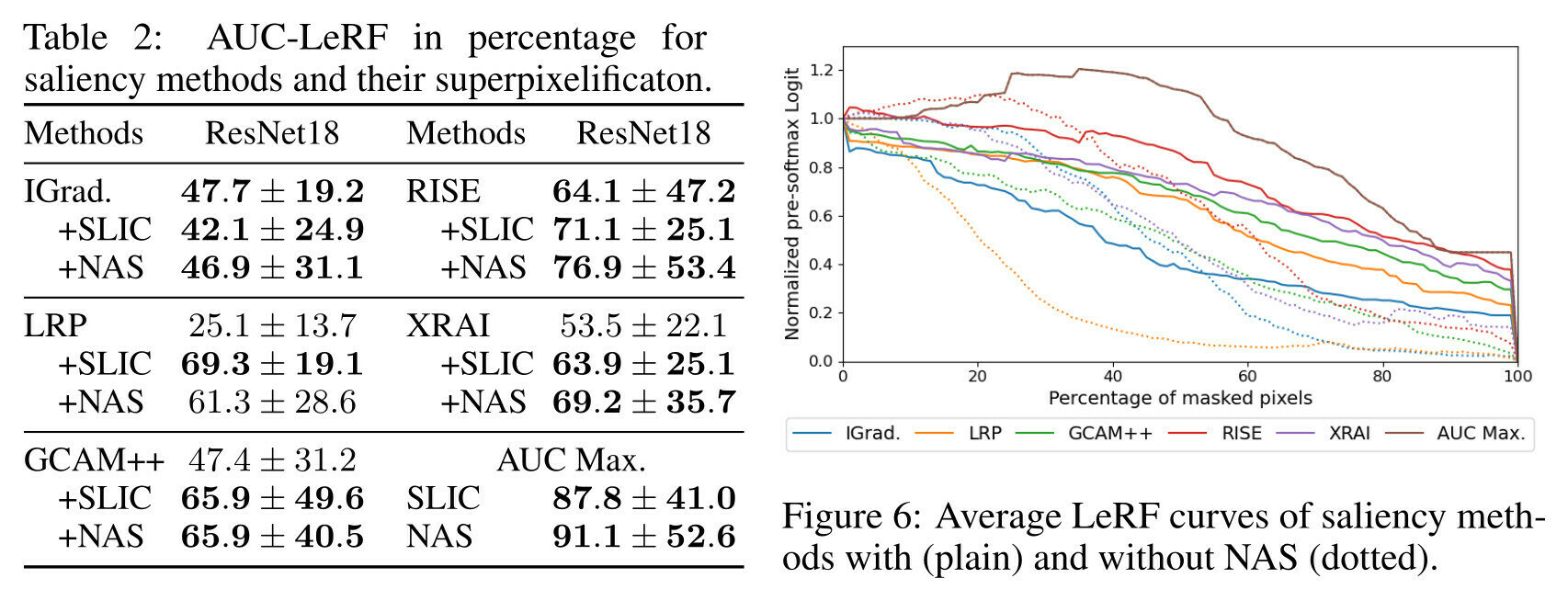

What can we explain?

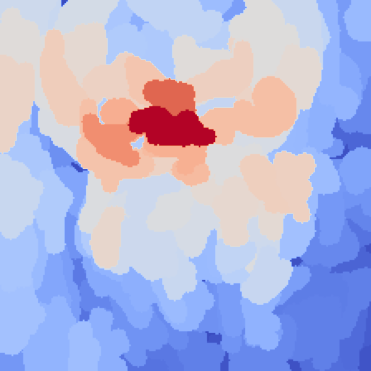

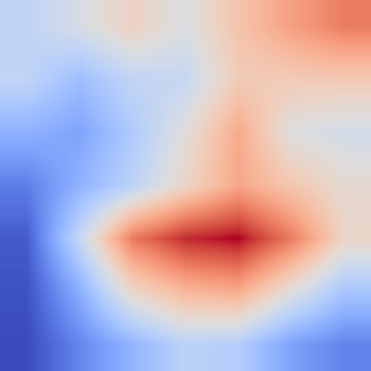

Maximize the AUC-LeRF

Superpixelification improves the AUC

SLIC and NAVE return the same performance?

AUC-LeRF is the problem!

The negative image of the bird maximizes the AUC

AUC MAX
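For readers unfamiliar with the metric, the following sketch assumes the usual reading of LeRF as "least relevant first": pixels are removed in order of increasing attribution, the model score is recorded after each removal, and the area under that curve is reported. The toy model, the zero baseline, and the function names are assumptions for illustration only.

```python
def lerf_curve(image, attribution, model, baseline=0.0):
    """Model score after removing 0, 1, ..., n pixels, least relevant first."""
    order = sorted(range(len(image)), key=attribution.__getitem__)
    current = list(image)
    scores = [model(current)]
    for idx in order:
        current[idx] = baseline      # "remove" the pixel
        scores.append(model(current))
    return scores

def auc(scores):
    """Trapezoidal area under the curve, with the x-axis rescaled to [0, 1]."""
    n = len(scores) - 1
    return sum((a + b) / 2 for a, b in zip(scores, scores[1:])) / n

# Hypothetical "model": the score only depends on pixels 0 and 1.
def toy_model(pixels):
    return 0.5 * pixels[0] + 0.5 * pixels[1]

image = [1.0, 1.0, 1.0, 1.0]
good_attr = [0.9, 0.8, 0.1, 0.0]   # ranks the useful pixels as most relevant
bad_attr = [0.0, 0.1, 0.8, 0.9]    # ranks the useful pixels as least relevant

auc_good = auc(lerf_curve(image, good_attr, toy_model))
auc_bad = auc(lerf_curve(image, bad_attr, toy_model))
```

The metric rewards any ordering that keeps the score high for as long as possible, whether or not that ordering is a sensible explanation, which is one way to read the slide's point that a map with no semantic meaning can still maximize it.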

What can we explain?

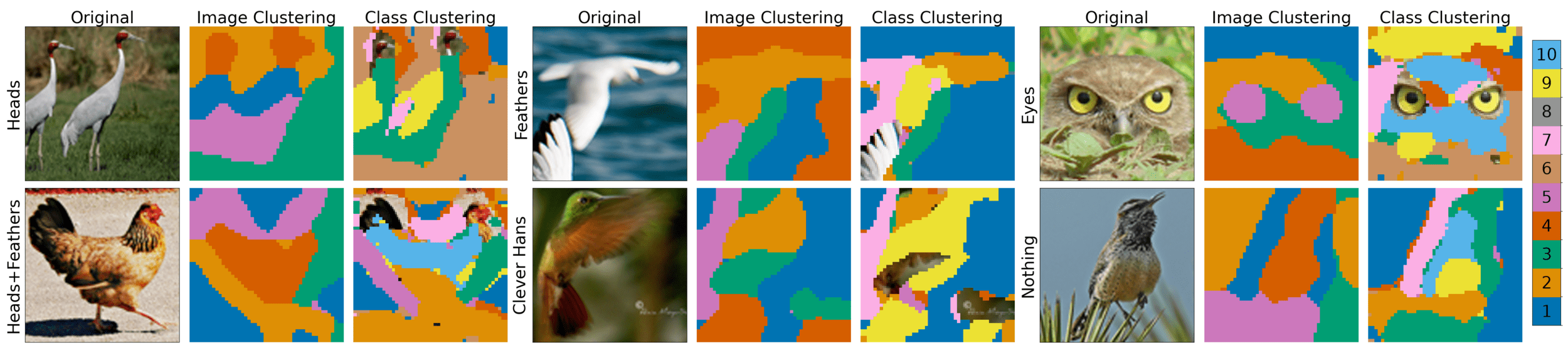

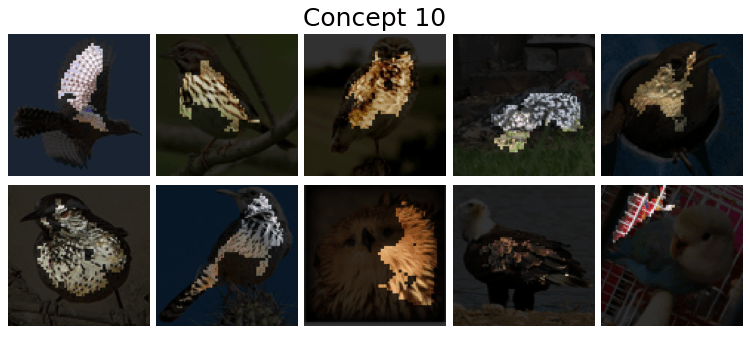

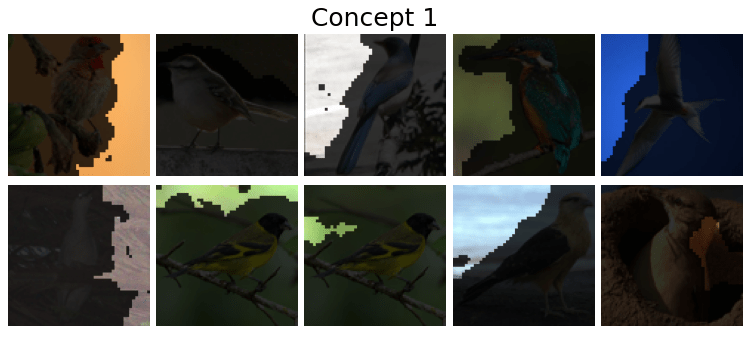

Connect Explanations and Semantics

Replace class-wise explanations

Concept Extraction

What can we explain?

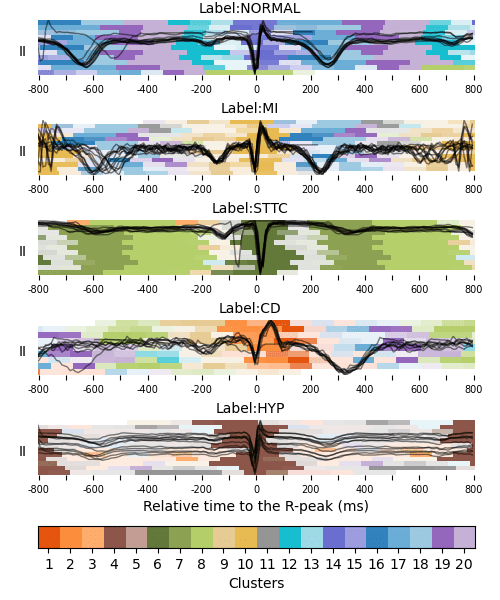

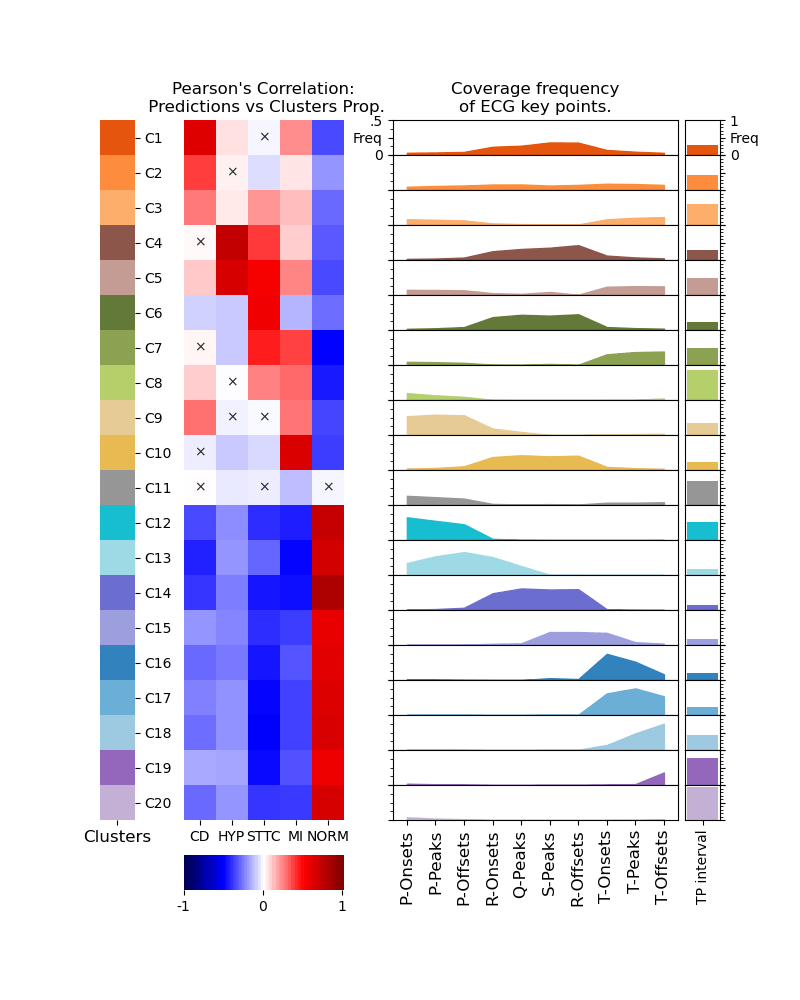

ECG Explanation

What can we explain?

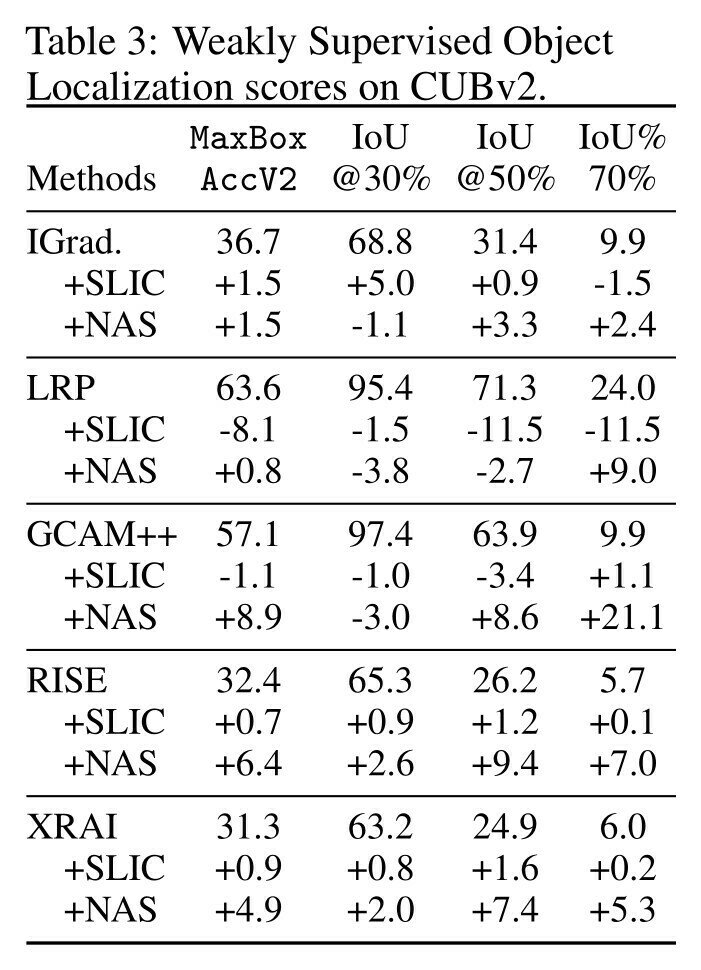

Object Localization

NAS always improves MaxBox, but not every thresholded IoU

It always improves the most difficult one, IoU@70%
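As a reminder of what a thresholded IoU measures, here is a minimal sketch: a predicted box counts as a hit when its intersection-over-union with the ground-truth box reaches the threshold. The box format `(x_min, y_min, x_max, y_max)`, the function names, and the toy boxes are assumptions; the exact MaxBox protocol used in the talk is not reproduced here.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def localization_accuracy(predicted, ground_truth, threshold):
    """Fraction of images whose predicted box reaches the IoU threshold."""
    hits = sum(box_iou(p, g) >= threshold for p, g in zip(predicted, ground_truth))
    return hits / len(predicted)

# Two toy images: the first box overlaps well, the second barely.
predicted = [(0, 0, 10, 10), (0, 0, 10, 10)]
ground_truth = [(0, 0, 10, 6), (5, 5, 15, 15)]

acc_50 = localization_accuracy(predicted, ground_truth, 0.5)
acc_70 = localization_accuracy(predicted, ground_truth, 0.7)
```

The first box has an IoU of 0.6, so it counts at the 50% threshold but not at 70%, which illustrates why IoU@70% is the hardest setting.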


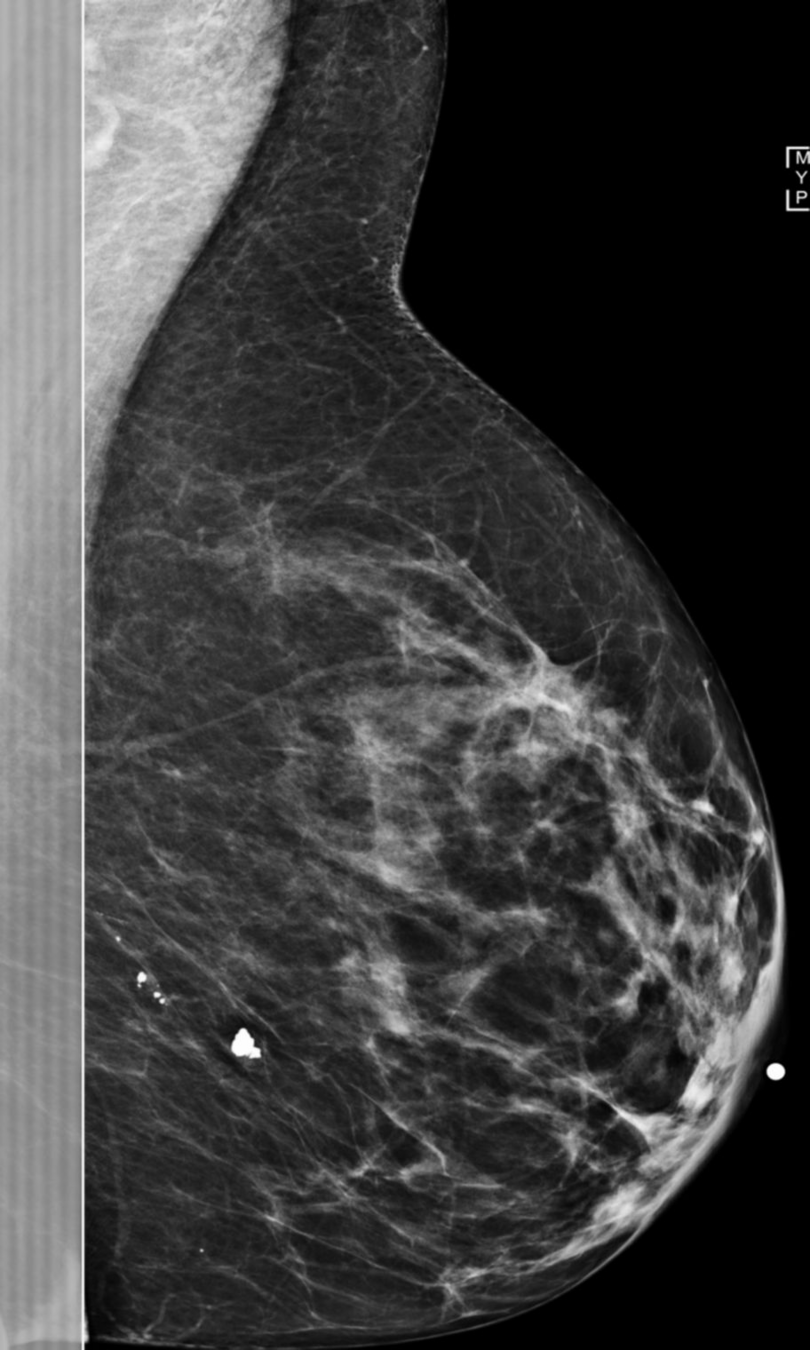

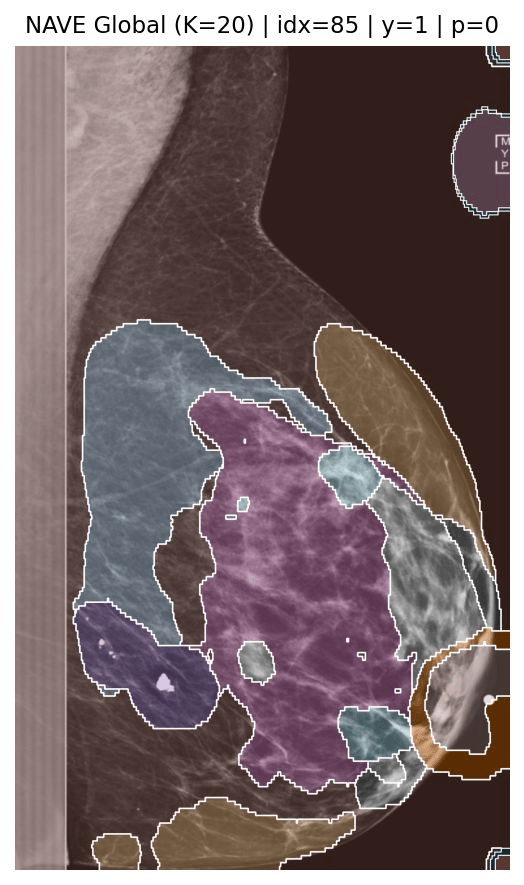

What can we explain?

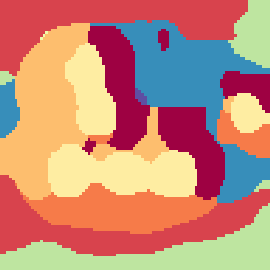

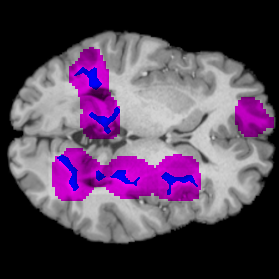

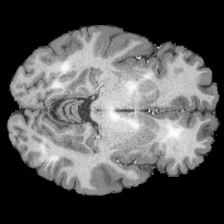

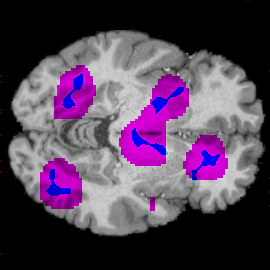

Annotation Masks

Model trained for binary classification

All lesions recovered

Can we use NAVE for medical annotations?

You need a well-performing model!

What can we explain?

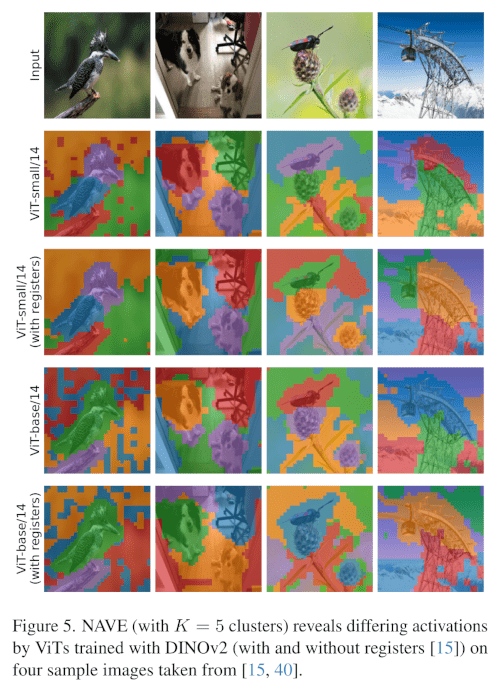

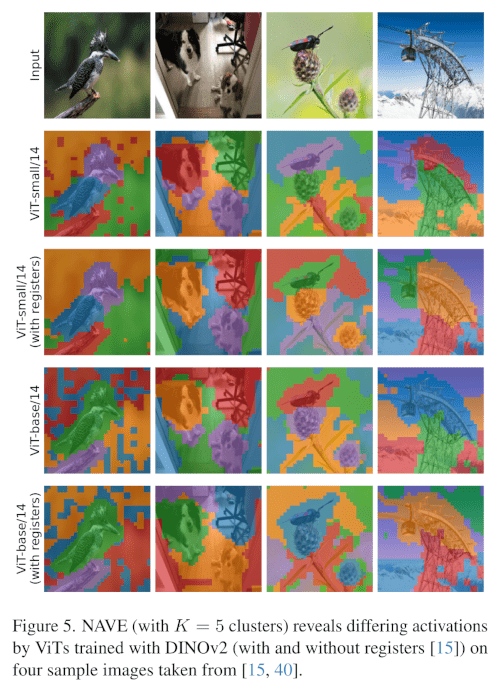

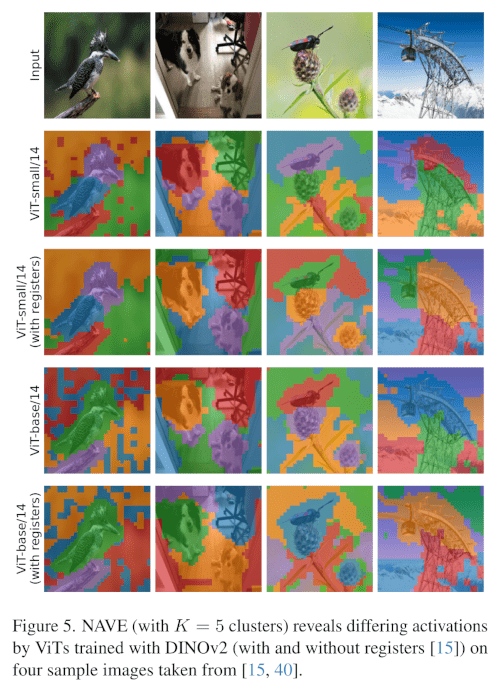

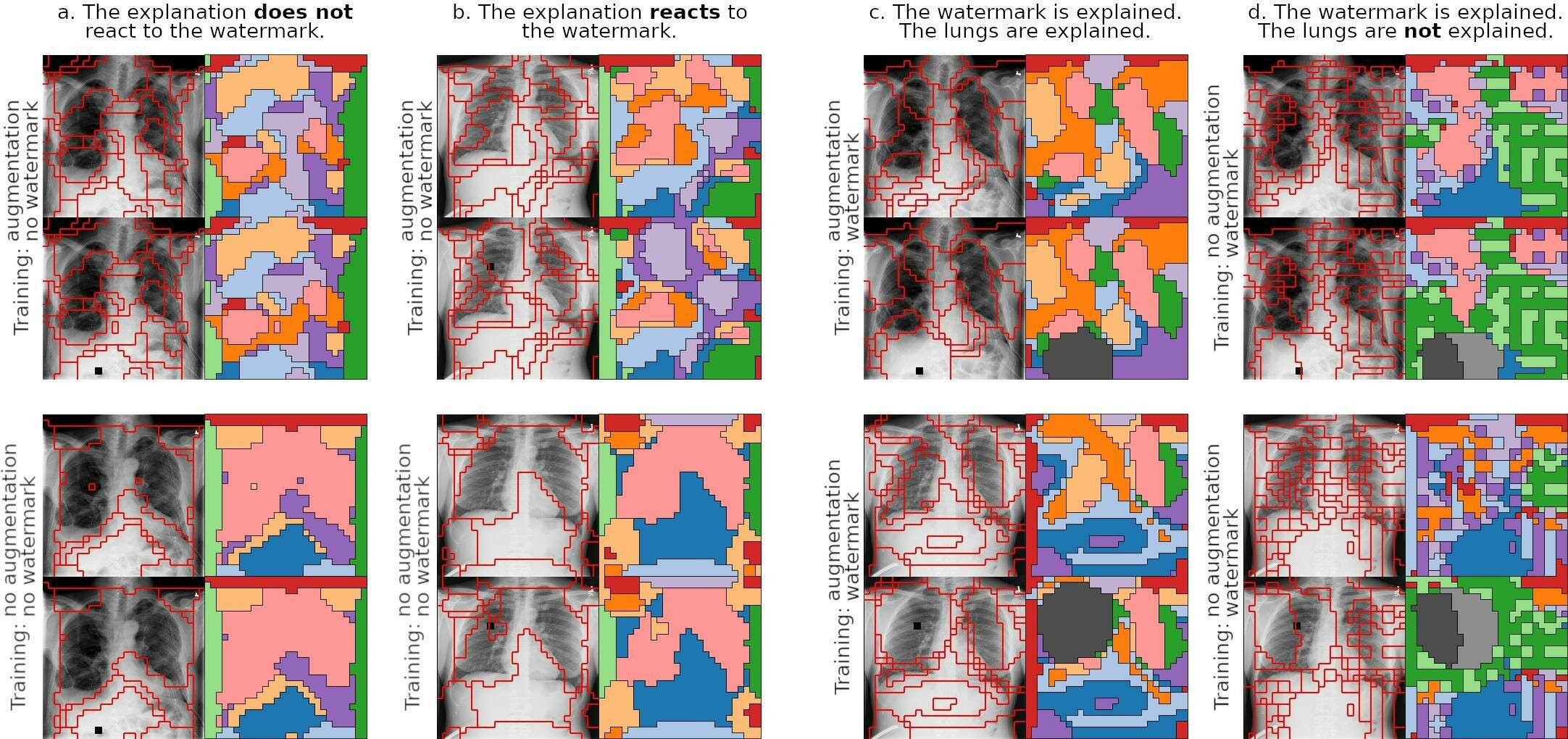

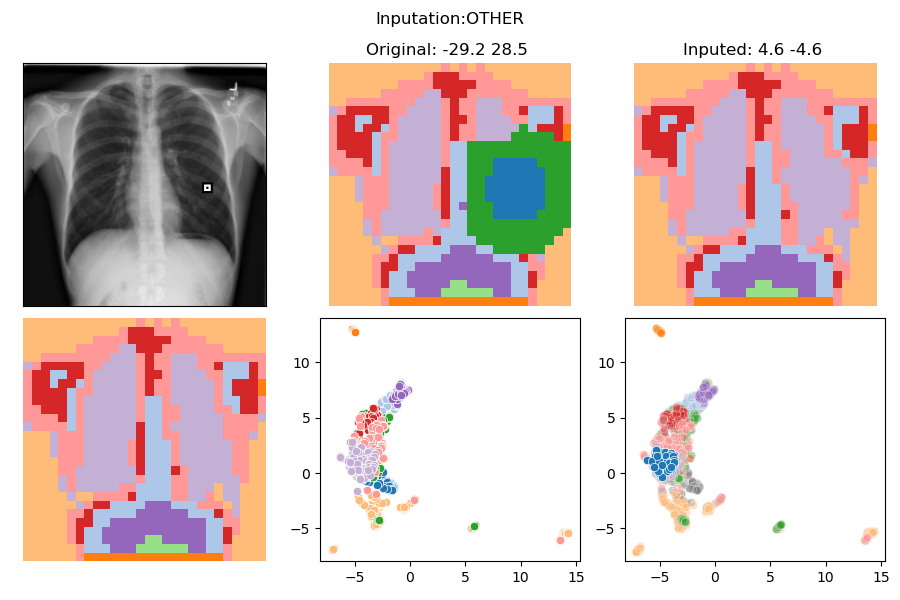

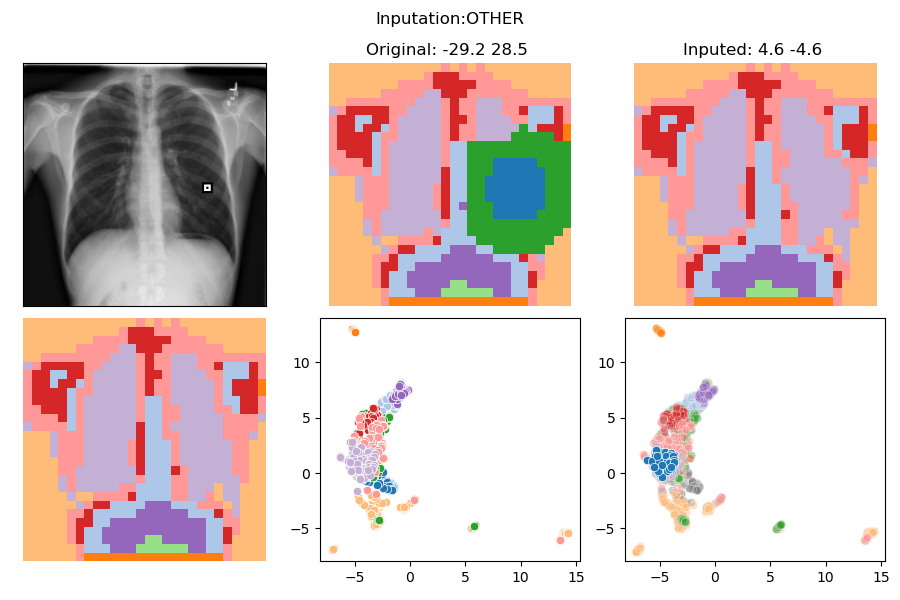

Inspect Artifacts in ViT and Registers

Sometimes it works for small ViTs

Often it doesn't work for big ViTs

What can we explain?

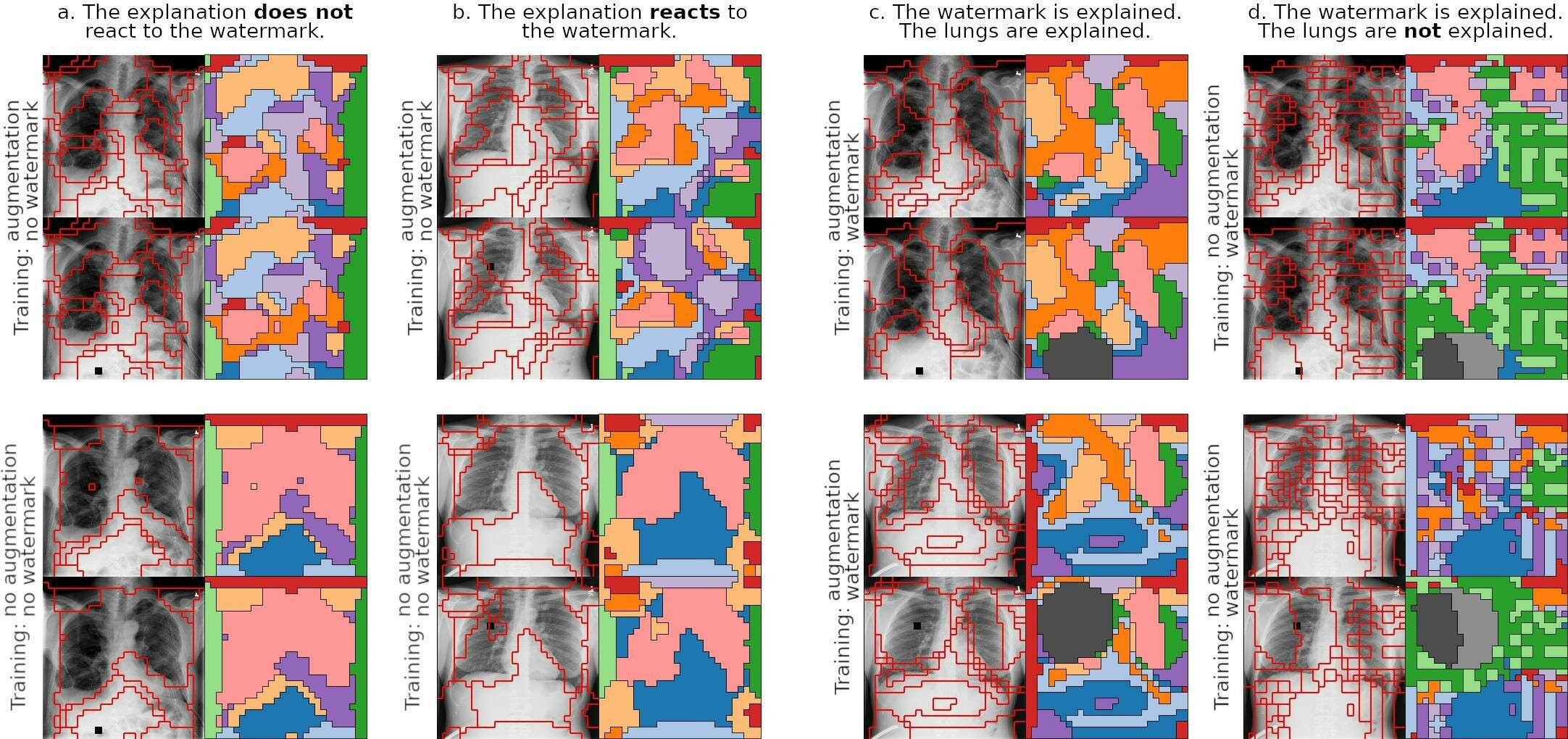

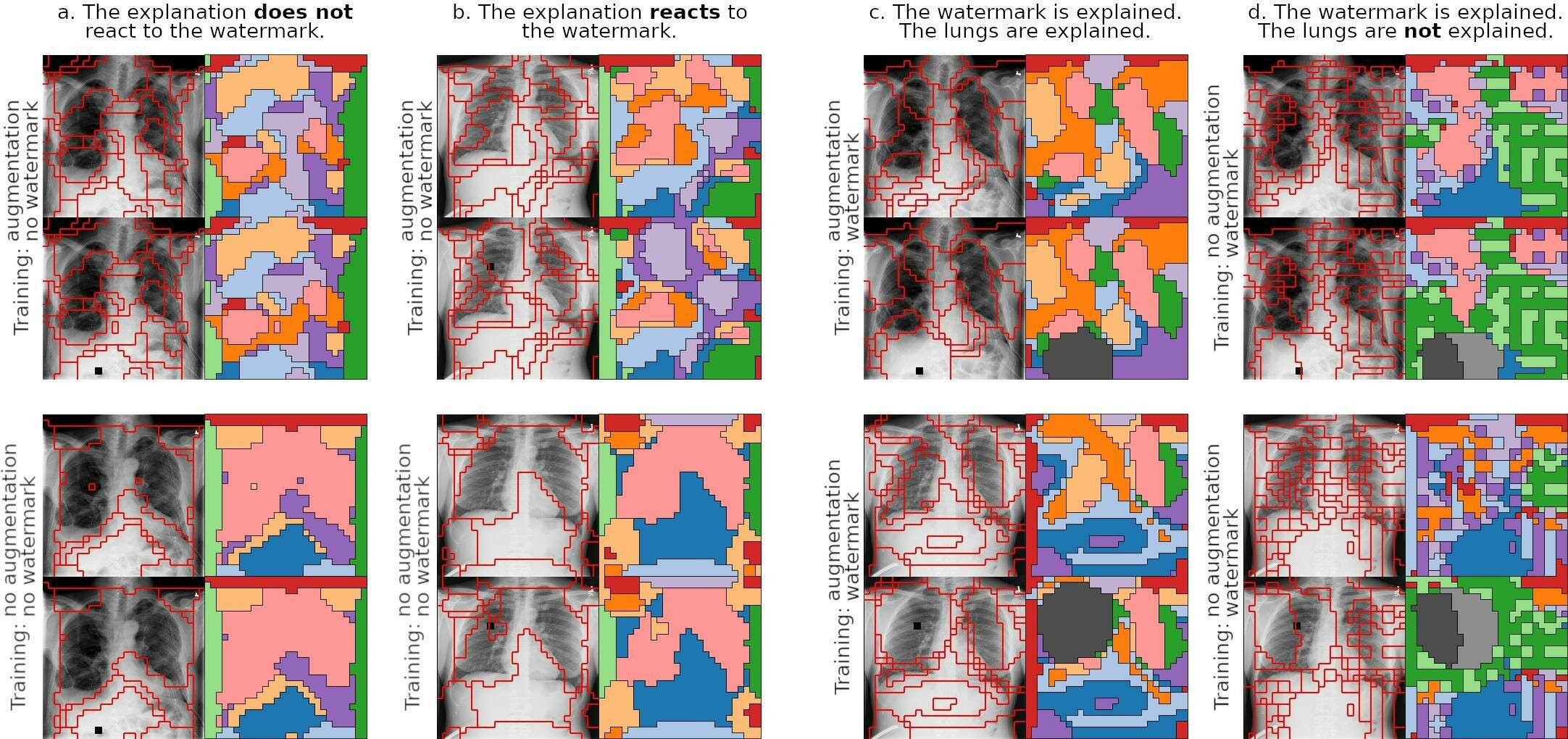

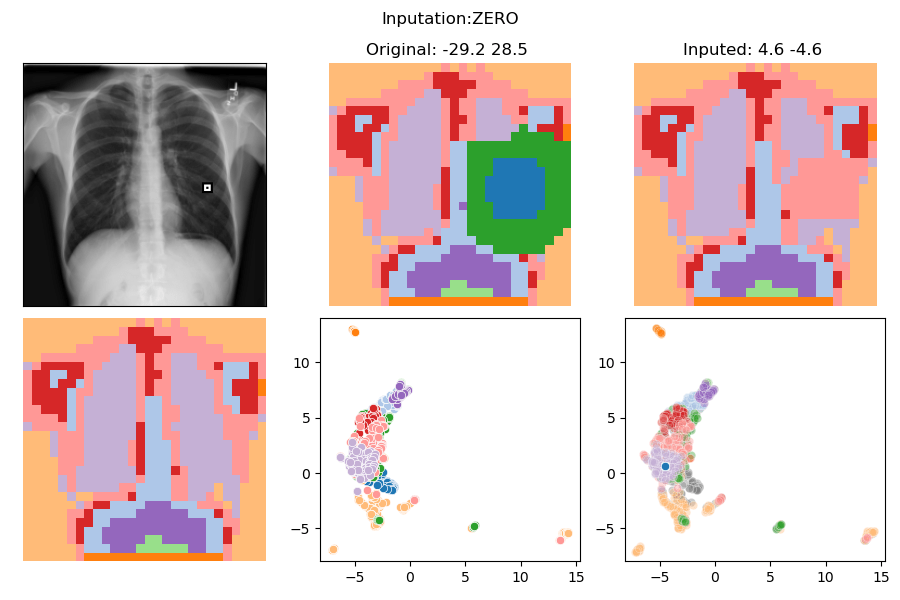

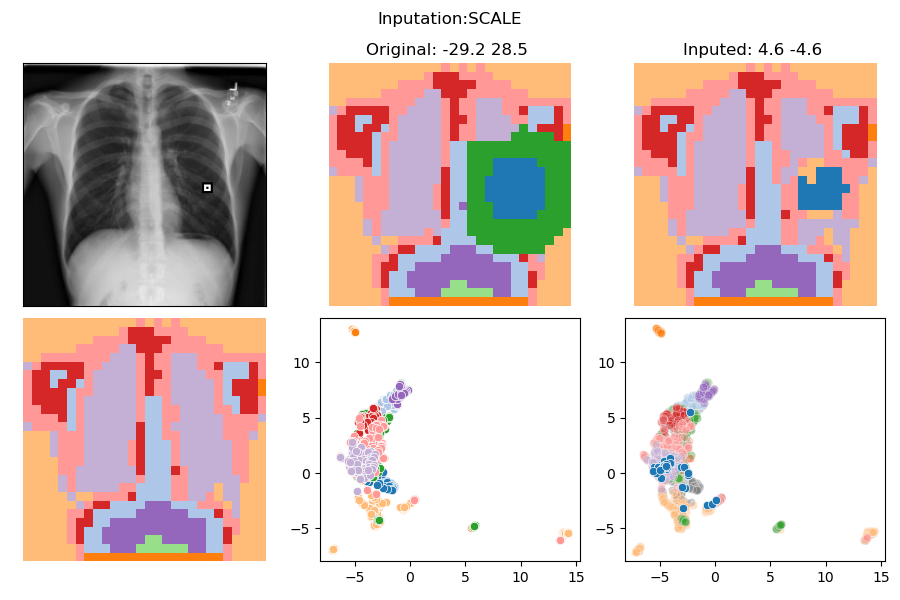

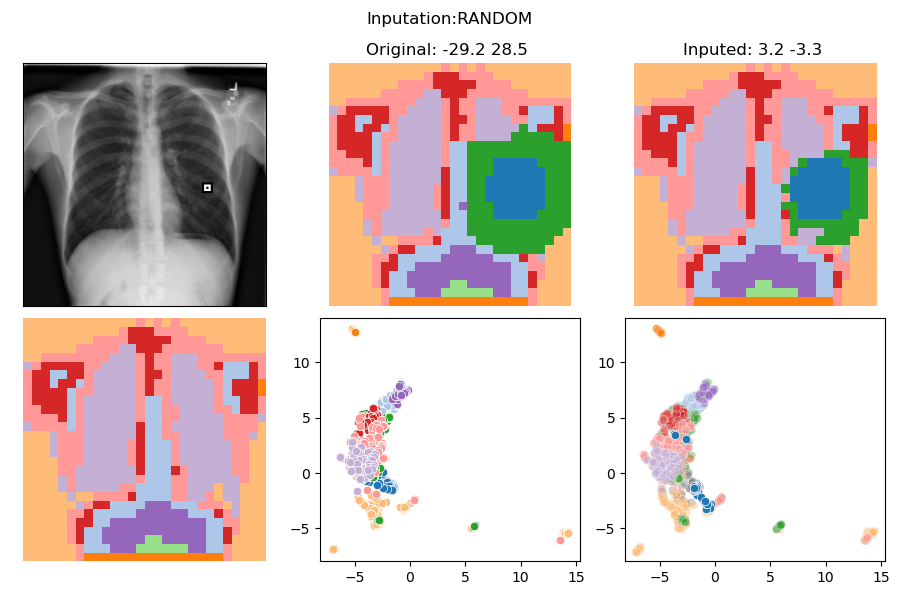

Inspect Shortcuts Saturation

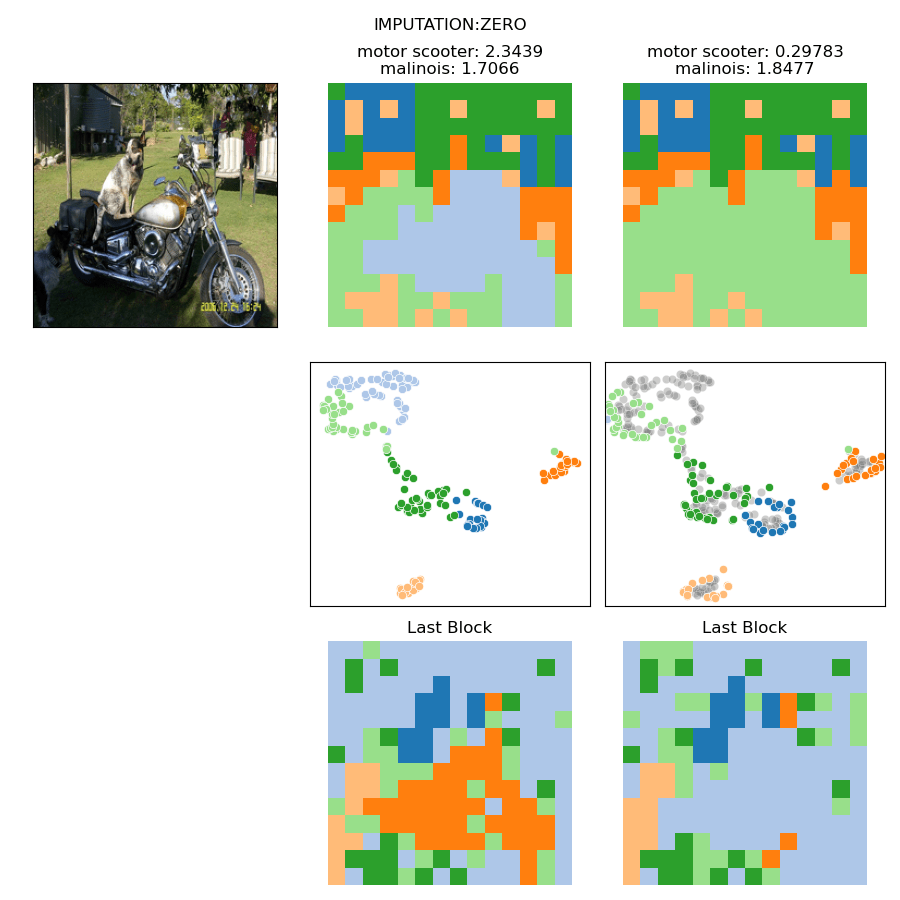

What else?


Unlearning?

>>> Test set

>>> IMPUTATION: SWAP

>>> Accuracies

| | All | LBL==0 | LBL==1 |
|---|---|---|---|
| Distribution | 176 | 26.7 | 73.3 |
| Nothing | 26.7 | 100.0 | 0.0 |
| Watermark | 100.0 | 100.0 | 100.0 |
| Wmk and Imput | 26.7 | 100.0 | 0.0 |

What else?

In Distribution Masking?

What else?

Adversarial Attack?

Summary


Does it capture semantics? Yes

Can we see what the model sees? Yes

What can we explain?

- Ease interpretation of saliency maps

- Concept extraction

- Unsupervised evaluation of local explanations

- Object localization

- Annotation masks from labels

- Model inspection

- Shortcut saturation

- Unlearning

- Adversarial attacks

- etc.

What else?

- Feature attribution?

- Causality?

- Concept injection?

- Any other idea?

