Web Audio

Basics to Beats

Andrew Krawchyk

Senior Web Developer at ISL.

FEWD Instructor at General Assembly.

Not from Belgium.

Thomas Degry

Front-end & iOS developer at ISL.

iOS instructor at General Assembly.

Originally from Belgium!

Luden's® Beatbox

ISL x Luden's®

(shameless plug™)

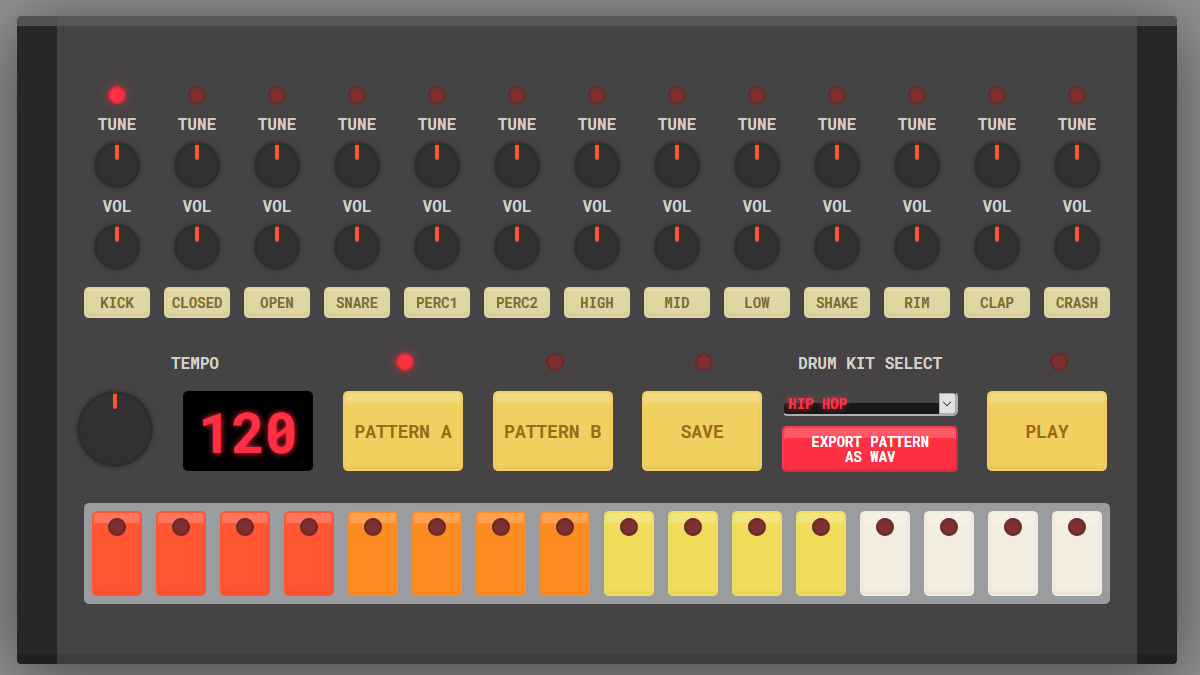

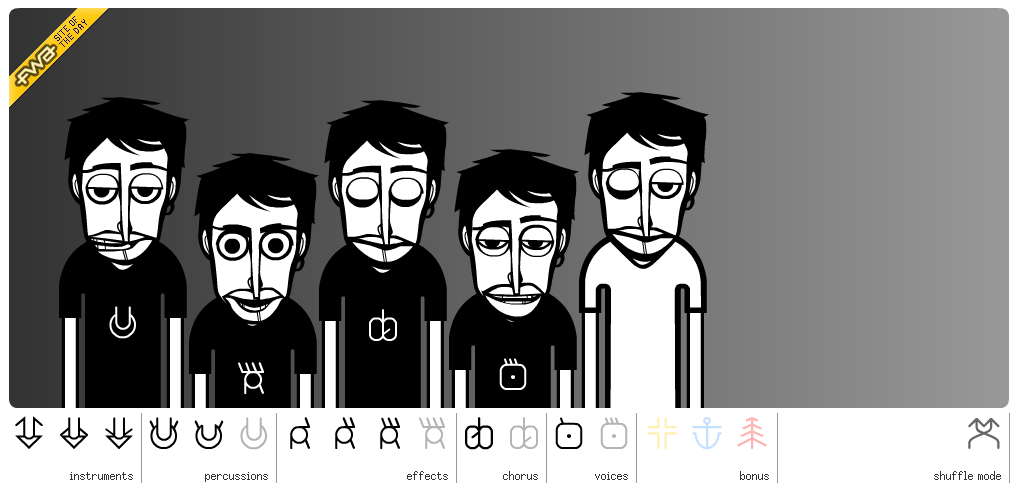

Inspiration

- Too abstract, not enough direction for the user.

- Too many controls, have to be a pro to master it.

- Easy to use, easy to master, lots of possibilities.

- Right amount of magic and easy to use.

- Too limiting, clunky UX.

Browser Support

IE doesn't support Web Audio.

Inconsistencies across major browsers:

- Chrome and Firefox report different durations for the same audio file

- Edge's implementation is incomplete: no AudioContext.suspend()

- Loop `onend` events only fire in some browsers
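Given these gaps, it helps to feature-detect before building any audio graph. A minimal sketch, assuming a hypothetical `detectWebAudio` helper (not from the talk) that takes the global object as a parameter, so it can be checked outside a browser, and treats a missing `suspend` method as the Edge case listed above:

```javascript
// Report which Web Audio features a browser exposes.
// detectWebAudio is a hypothetical helper; pass it `window` in the page.
function detectWebAudio(globalObj) {
  // Older Chrome and Safari builds shipped only the prefixed constructor
  const Ctor = globalObj.AudioContext || globalObj.webkitAudioContext
  if (!Ctor) {
    // No Web Audio at all (e.g. IE): fall back to HTML audio or skip sound
    return { supported: false, canSuspend: false }
  }
  return {
    supported: true,
    // Looks up the prototype chain, so no context has to be created just to test
    canSuspend: typeof Ctor.prototype.suspend === 'function'
  }
}

// In the page:
// const { supported, canSuspend } = detectWebAudio(window)
```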

Syncing Sounds

Playing immediate sounds

Timing sound changes to be on beat

Playing looping sounds

Killing dead sounds

Technical Approach

React + Redux

Web Audio + SoundJS

React

Turns the lights on 🚦

Sends user actions to Redux

Receives state changes from Redux

Updates the DOM based on those state changes

Redux

Stores state and provides pub/sub to state changes

Responds to actions by generating new state and emitting updates

Provides selectors for computing derived data, keeping state management simple
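The React/Redux split can be illustrated with a reducer and a selector. This is only a sketch: the talk does not show its store, so the `TOGGLE_SOUND` action, the `{ playing: [] }` state shape, and the names `soundsReducer` and `selectIsPlaying` are all hypothetical. Because reducers and selectors are plain functions, it runs with or without the Redux library:

```javascript
// Hypothetical action type; the talk does not show its actual actions
const TOGGLE_SOUND = 'TOGGLE_SOUND'

const initialState = { playing: [] }

// Reducer: returns a new state object and never mutates the old one
function soundsReducer(state = initialState, action) {
  switch (action.type) {
    case TOGGLE_SOUND: {
      const { name } = action
      const isPlaying = state.playing.includes(name)
      return {
        ...state,
        playing: isPlaying
          ? state.playing.filter(n => n !== name)
          : [...state.playing, name]
      }
    }
    default:
      return state
  }
}

// Selector: derived data computed from state instead of being stored in it
const selectIsPlaying = (state, name) => state.playing.includes(name)
```

Returning new arrays on every change also lets subscribers detect changes with a cheap reference comparison.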

Web Audio

Provides browser API for processing and synthesizing audio in web applications.

SoundJS

High resolution timer, microsecond precision for accurate scheduling.

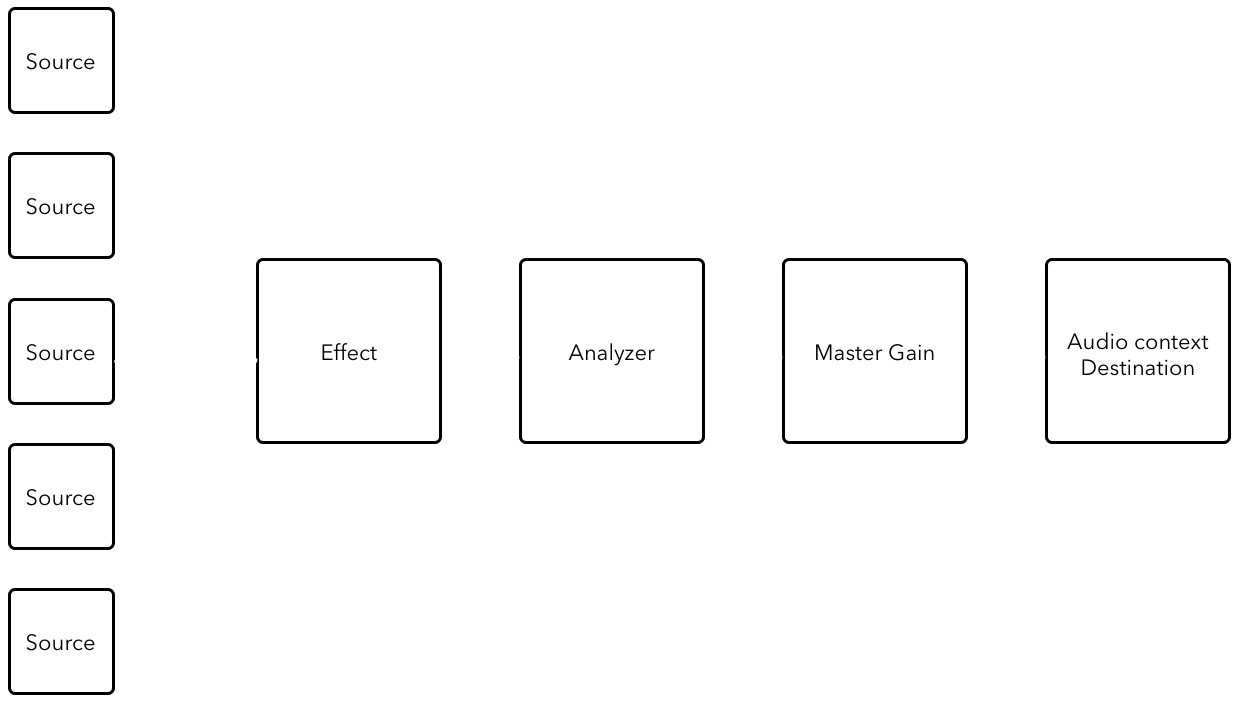

Audio context consists of connected nodes creating an audio routing graph.

Simple API wrapping Web Audio, HTML tag, Flash, and even Cordova audio API's.

Plugins provide support, unfortunately still lowest common denominator problem.

Can't take advantage of advanced new features.
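In raw Web Audio (as opposed to SoundJS's wrapper), the routing graph is built by creating nodes from the context and calling `connect()`. A minimal sketch, assuming a hypothetical `playThroughTrackGain` helper and a `masterGain` node already connected to `ctx.destination`, that gives each sound its own gain node so its volume can change independently of the others:

```javascript
// Build source -> trackGain -> masterGain for one decoded buffer.
// playThroughTrackGain is hypothetical; `ctx` is an AudioContext and
// `masterGain` a GainNode already connected to ctx.destination.
function playThroughTrackGain(ctx, masterGain, buffer, volume = 1) {
  const source = ctx.createBufferSource()
  const trackGain = ctx.createGain()
  source.buffer = buffer
  trackGain.gain.value = volume
  // Two separate connect() calls rather than chaining on the return value
  source.connect(trackGain)
  trackGain.connect(masterGain)
  source.start()
  // Return both nodes so callers can adjust volume or stop the sound later
  return { source, trackGain }
}
```

Keeping a gain node per track means a volume change is a single assignment to `trackGain.gain.value`, with no need to rebuild the graph.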

Application Structure

- Audio is separated from React and Redux in a helper module

- The application has no knowledge of audio contexts or gain nodes

- Stateless changes like volume() and effect() are exposed from the audio helper to React

- Playing-state changes are published through Redux; the audio helper subscribes and changes the audio accordingly
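One way to wire the helper to the store, sketched under assumptions: `connectAudioHelper` and `onPlayingChange` are hypothetical names, and `store` is anything exposing Redux's `getState()` and `subscribe()` methods. The helper reacts only when the playing list changes, so unrelated state updates do not touch playback:

```javascript
// Keep audio out of React: the helper only sees the store and a callback.
// `onPlayingChange` stands in for the audio helper's update function.
function connectAudioHelper(store, onPlayingChange) {
  let lastPlaying = store.getState().playing
  // Sync audio with whatever is playing when the helper starts
  onPlayingChange(lastPlaying)
  // In Redux, subscribe() returns an unsubscribe function
  return store.subscribe(() => {
    const { playing } = store.getState()
    // Reducers return a new array on change, so reference equality is enough
    if (playing !== lastPlaying) {
      lastPlaying = playing
      onPlayingChange(playing)
    }
  })
}
```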

Questions?

First, an Audio Context

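```javascript
// Create the audio context and a master gain node routed to the speakers
const audioCtx = new AudioContext()
const masterGain = audioCtx.createGain()
masterGain.connect(audioCtx.destination)
masterGain.gain.value = 1

// Fetch the MP3, read it as an ArrayBuffer, then decode it into an AudioBuffer.
// Chaining the promises lets network and decode errors both reject `mp3`
// (a try/catch around the callback-based decodeAudioData would miss decode errors).
const mp3 = fetch('https://s3.amazonaws.com/ludens-beta/1_Ludens_Rap.ef7f136e.mp3')
  .then(res => res.arrayBuffer())
  .then(buffer => new Promise((resolve, reject) => {
    // Callback form, for browsers where decodeAudioData does not return a promise
    audioCtx.decodeAudioData(buffer, resolve, reject)
  }))

mp3.then(decodedData => {
  // Source nodes are one-shot: create a new one every time the buffer plays
  const source = audioCtx.createBufferSource()
  source.buffer = decodedData
  source.connect(masterGain)
  source.start()
})
```

Timing Sounds to Play on Beat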
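Starting a sound on beat means waiting for the next loop boundary on the audio clock rather than starting it right away. Assuming every sample has the same fixed length (16 seconds in these examples), a hypothetical `nextBoundary` helper can compute that start time:

```javascript
// Round a time up to the next boundary of a repeating period on the audio clock.
// nextBoundary is hypothetical: `startTime` is when the first loop began,
// `period` is the loop (or bar, or beat) length in seconds.
function nextBoundary(now, startTime, period) {
  const elapsed = now - startTime
  // Before the first loop has started, the first boundary is the start itself
  if (elapsed <= 0) return startTime
  return startTime + Math.ceil(elapsed / period) * period
}

// Usage: start a new sound on the next 16-second loop boundary
// source.start(nextBoundary(audioCtx.currentTime, loopStart, 16))
```

For example, with loops starting at 0 and lasting 16 seconds, a request at 5 seconds is deferred to 16, and a request at exactly 16 starts at 16.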

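```javascript
// assumes previous code (audioCtx, masterGain, mp3)
const sampleDuration = 16 // sec, length of one loop

mp3.then(decodedData => {
  // Schedule the buffer to start at an exact time on the AudioContext clock
  function scheduleSound(when) {
    const source = audioCtx.createBufferSource()
    source.buffer = decodedData
    source.connect(masterGain)
    source.start(when)
  }

  // Times are absolute on the context clock, so measure from "now"
  const now = audioCtx.currentTime
  scheduleSound(now)                  // first loop starts immediately
  scheduleSound(now + sampleDuration) // second loop starts exactly one loop later
})
```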

Playing looping sounds
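The scheduler that follows uses a lookahead pattern: a coarse `setInterval` timer wakes up every 25 ms and hands anything due within the next 0.1 s to the sample-accurate audio clock. The decision of what is due can be pulled out into a pure function; `collectDueStarts` is a hypothetical name for that sketch, not part of the talk's code:

```javascript
// Pure version of the lookahead loop: return every loop start inside the
// scheduling window, plus the updated start time of the following loop.
function collectDueStarts(nextSoundTime, currentTime, scheduleAheadTime, sampleDuration) {
  const due = []
  let next = nextSoundTime
  // Same condition as the scheduler's while loop
  while (next < currentTime + scheduleAheadTime) {
    due.push(next)
    next += sampleDuration
  }
  return { due, next }
}
```

The seed value matters: `collectDueStarts(0, 40, 0.1, 16)` returns starts at 0, 16, and 32, all already in the past, so those sources would all play at once. Seeding `nextSoundTime` with `audioCtx.currentTime` avoids that burst.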

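```javascript
// assumes previous code (audioCtx, masterGain)

// Fetch and decode one file, same steps as the first example
// (bufferLoader is not shown in the talk; this definition is an assumption)
const bufferLoader = url => fetch(url)
  .then(res => res.arrayBuffer())
  .then(buffer => new Promise((resolve, reject) => {
    audioCtx.decodeAudioData(buffer, resolve, reject)
  }))

Promise.all([
  bufferLoader('https://s3.amazonaws.com/ludens-beta/3_Ludens_Bass_slap.904e2cae.mp3'),
  bufferLoader('https://s3.amazonaws.com/ludens-beta/10_Ludens_groove_toypiano.93320ce2.mp3')
]).then(sounds => {
  const scheduleAheadTime = 0.1 // sec, how far ahead audio is scheduled
  const sampleDuration = 16 // sec, length of each loop
  let nextSoundTime = audioCtx.currentTime // sec, start of the next loop
  let currentPlaying = sounds // decoded buffers that should be playing

  // Advance to the start of the following loop
  function nextSound() {
    nextSoundTime += sampleDuration
  }

  // Start every current buffer at the same time on the audio clock
  function scheduleSounds(when) {
    currentPlaying.forEach(decodedData => {
      const source = audioCtx.createBufferSource()
      source.buffer = decodedData
      source.connect(masterGain)
      source.start(when)
    })
  }

  // Schedule every loop start that falls inside the lookahead window
  function scheduler() {
    while (nextSoundTime < audioCtx.currentTime + scheduleAheadTime) {
      scheduleSounds(nextSoundTime)
      nextSound()
    }
  }

  setInterval(scheduler, 25) // ms between scheduler wake-ups
})
```

Playing immediate sounds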
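The offset tells `AudioBufferSourceNode.start(when, offset)` where in the buffer to begin, so a newly added sound joins the loop mid-way instead of waiting for the next boundary. The talk computes it as `sampleDuration - (nextSoundTime - audioCtx.currentTime)`. A sketch of the same arithmetic in a hypothetical `loopOffset` function, with one assumption added: the result is wrapped into [0, sampleDuration), because right after the scheduler fires, `nextSoundTime` may already point one loop ahead, and the plain formula would go negative, which `start()` rejects:

```javascript
// How far into the currently audible loop we are, so a new sound joins in sync.
// loopOffset is hypothetical; it mirrors the talk's offset formula.
function loopOffset(currentTime, nextSoundTime, sampleDuration) {
  // Time since the start of the loop that precedes nextSoundTime
  const elapsed = currentTime - (nextSoundTime - sampleDuration)
  // Wrap into [0, sampleDuration) in case nextSoundTime is already a loop ahead
  return ((elapsed % sampleDuration) + sampleDuration) % sampleDuration
}

// Usage:
// source.start(audioCtx.currentTime,
//   loopOffset(audioCtx.currentTime, nextSoundTime, sampleDuration))
```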

```javascript
// storage for raw audio buffers to be used with AudioBufferSourceNodes
const buffers = {}

// storage for current and future sources, arrays of sources keyed by buffer name
const liveSources = {}

function storeBuffer(name, buffer) {
  buffers[name] = { buffer }
}

// assumes previous code w/modifications: currentPlaying now holds buffer names,
// and isScheduling is true once the scheduler interval is running

function scheduleImmediateSounds() {
  // names in currentPlaying that don't have a live source yet
  const newSounds = diffLiveAndPlaying(currentPlaying) // magic
  // how far into the current loop we are, so new sounds join in sync
  const offset = sampleDuration - (nextSoundTime - audioCtx.currentTime)

  newSounds.forEach(name => {
    // playSoundSource (not shown) starts buffers[name] now, `offset` seconds in
    playSoundSource(name, audioCtx.currentTime, offset)
  })
}

function updateCurrentPlaying(update) {
  currentPlaying = update
  if (isScheduling) {
    scheduleImmediateSounds()
  }
}

updateCurrentPlaying(['rap', 'groove'])
```

Killing dead sounds
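`removeLiveSource` is not shown in the talk. A sketch of what stopping a dead sound can involve, using a hypothetical `stopAndForget(liveSources, name)` that takes the map explicitly (the talk's one-argument `removeLiveSource` could wrap it): every source stored for that name, including ones scheduled for a future loop, is stopped and disconnected, then the entry is dropped:

```javascript
// Stop and forget every source (current and future) stored for one sound name.
// stopAndForget is hypothetical; `liveSources` maps a name to an array of
// AudioBufferSourceNodes, as in the talk.
function stopAndForget(liveSources, name) {
  const sources = liveSources[name] || []
  sources.forEach(source => {
    try {
      // stop() also cancels a source whose scheduled start is still in the future
      source.stop()
    } catch (e) {
      // Some older implementations throw if stop() is called twice; ignore
    }
    // Detach from the graph so the node can be garbage-collected
    source.disconnect()
  })
  delete liveSources[name]
}
```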

```javascript
// assumes previous code w/modifications

function pruneLiveSources() {
  // if we have live sources not in currently playing,
  // kill those sources
  const liveNames = Object.keys(liveSources)
  const deadSources = liveNames.filter(name => currentPlaying.indexOf(name) === -1)
  deadSources.forEach(name => removeLiveSource(name))
}

function nextSound() {
  nextSoundTime += sampleDuration
  pruneLiveSources()
  iterations++ // loop counter kept by the modified scheduler (not shown)
}

function updateCurrentPlaying(update) {
  currentPlaying = update
  if (isScheduling) {
    pruneLiveSources()
    scheduleImmediateSounds()
  }
}

updateCurrentPlaying(['rap', 'groove'])
```

Web Audio: Basics to Beats

By Andrew Krawchyk
