Modeling Transformers in Natural Language Processing Based on Spiking Neural Networks

AmirHossein Ebrahimi

September 2022


School of Mathematics, Statistics and Computer Science

Supervisors

Dr. Mohammad Ganjtabesh

Dr. Morteza Mohammadnori

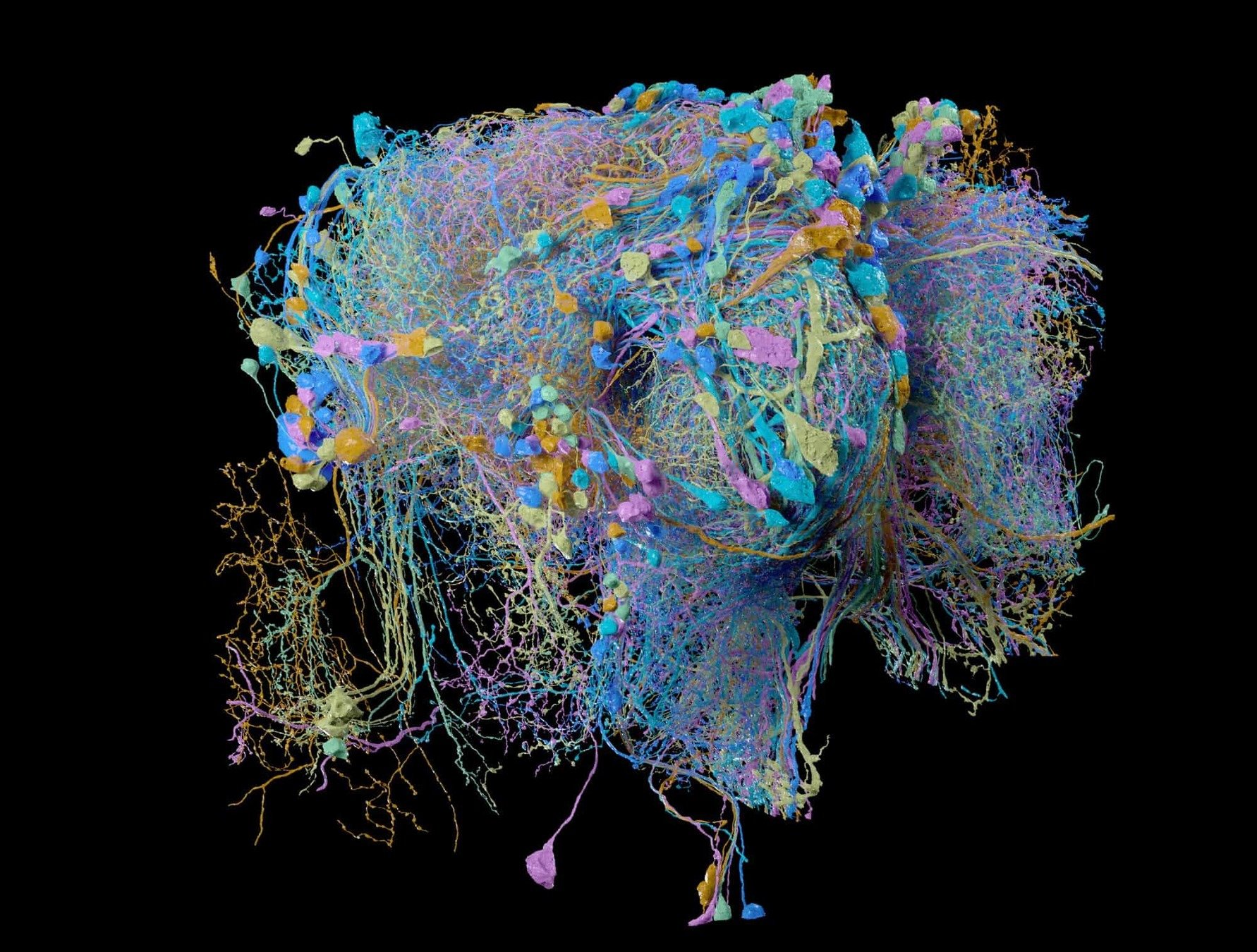

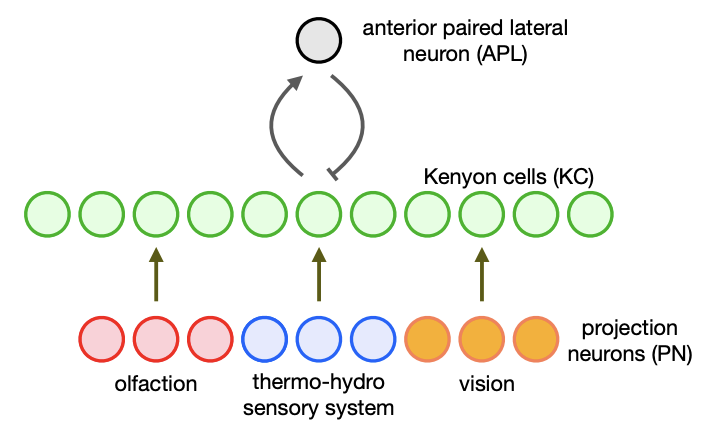

Brain of the fruit fly

It all starts here.

Image credit: The FlyEM team, Janelia Research Campus, HHMI (CC BY 4.0)


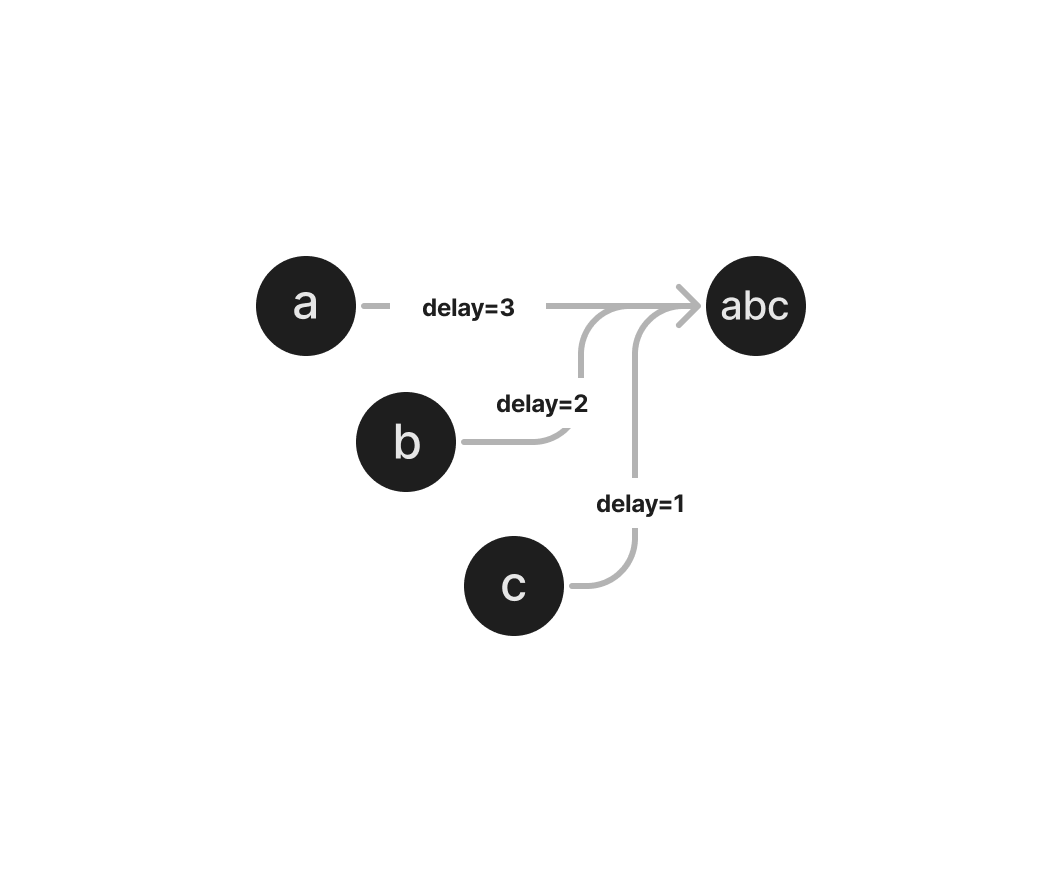

Natural language processing

Transformers

Chemical reaction prediction

Image / video processing


TSNN

Transformer-inspired architecture based on Spiking Neural Networks


Reading Background

5500 years of evolution.


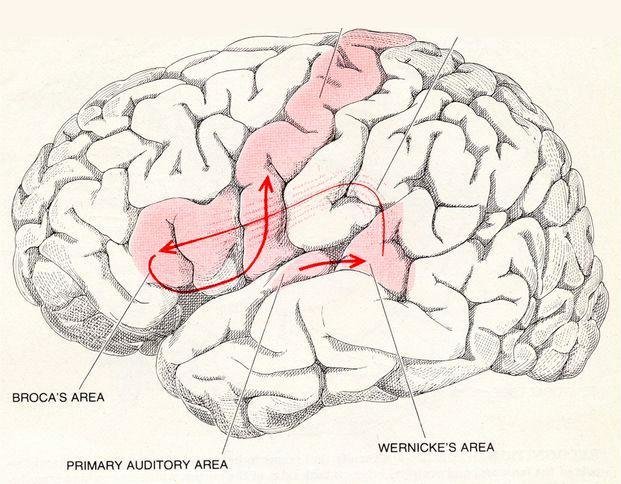

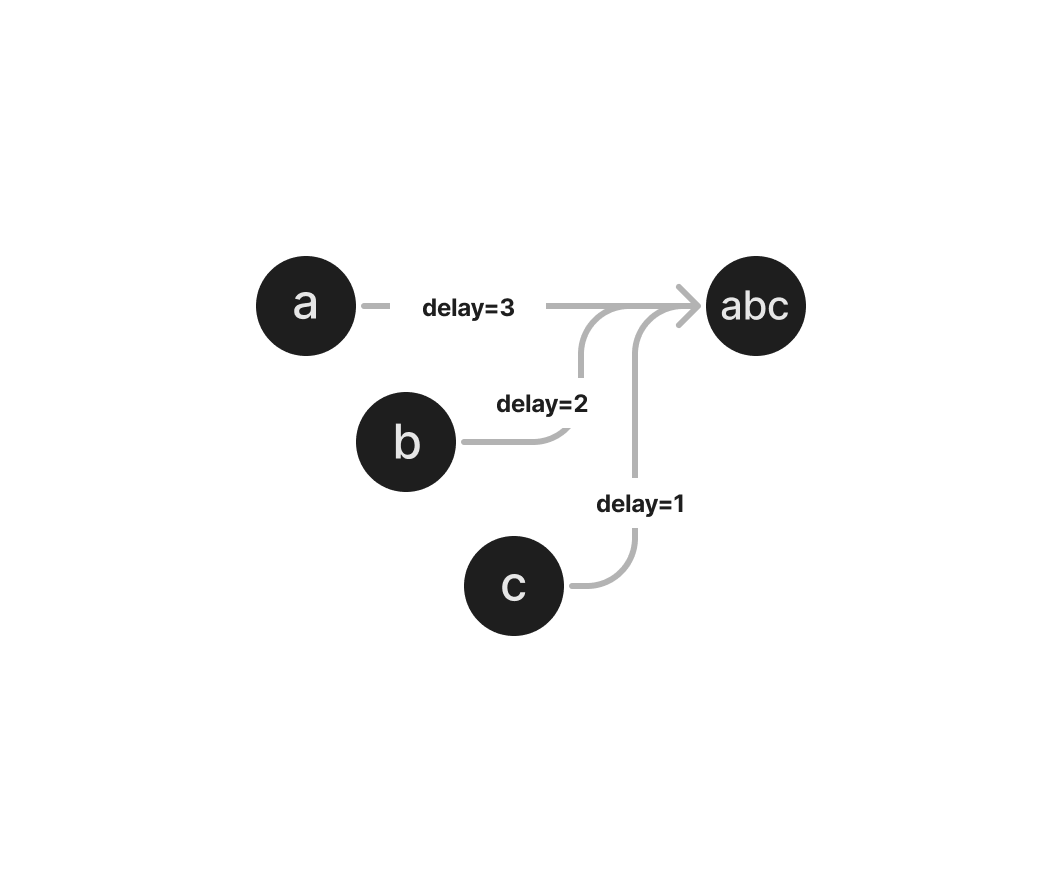

Delay

Find the optimal delay for each connection


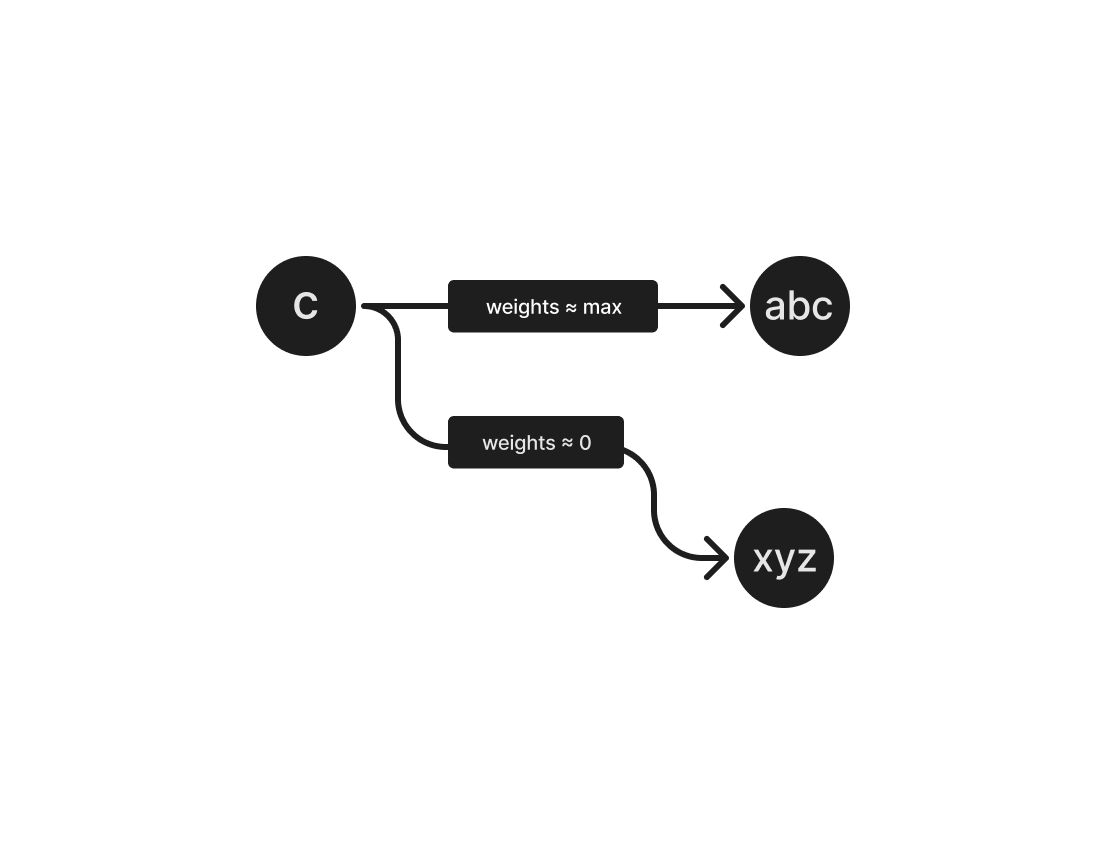

Weight

Find the optimal weight for each connection
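To make the roles of delay and weight concrete, here is a minimal sketch, not the tsnn implementation: a single leaky integrate-and-fire neuron receives spikes through synapses that each carry a weight and an integer delay in time steps. The function name `simulate_lif`, the Euler update, and every parameter value (`tau`, `v_threshold`, the weights) are illustrative assumptions.

```python
def simulate_lif(input_spikes, weights, delays, steps,
                 tau=10.0, v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Return the time steps at which a LIF neuron fires (illustrative sketch).

    input_spikes: one list of spike times per presynaptic neuron.
    weights, delays: per-synapse weight and delay (delay in time steps).
    """
    # Input current per step: a spike sent at time t on synapse i
    # reaches the neuron at t + delays[i] with strength weights[i].
    current = [0.0] * steps
    for i, spike_times in enumerate(input_spikes):
        for t in spike_times:
            arrival = t + delays[i]
            if arrival < steps:
                current[arrival] += weights[i]

    v = v_rest
    fired = []
    for t in range(steps):
        # Euler step of dv/dt = -(v - v_rest) / tau + I(t)
        v += -(v - v_rest) / tau + current[t]
        if v >= v_threshold:
            fired.append(t)
            v = v_reset  # reset the membrane after a spike
    return fired


# Three inputs spike at steps 0, 2 and 4, each with weight 0.4.
print(simulate_lif([[0], [2], [4]], [0.4, 0.4, 0.4], [4, 2, 0], 10))  # delays align arrivals
print(simulate_lif([[0], [2], [4]], [0.4, 0.4, 0.4], [0, 0, 0], 10))  # no delays
```

With delays of 4, 2 and 0 steps, all three spikes arrive together at step 4 and the neuron fires (`[4]`). Without delays, the leak between arrivals keeps the membrane potential just below threshold (about 0.986 at step 4), so it never fires (`[]`). Learning a delay per connection can therefore decide whether the inputs' firing effects accumulate.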


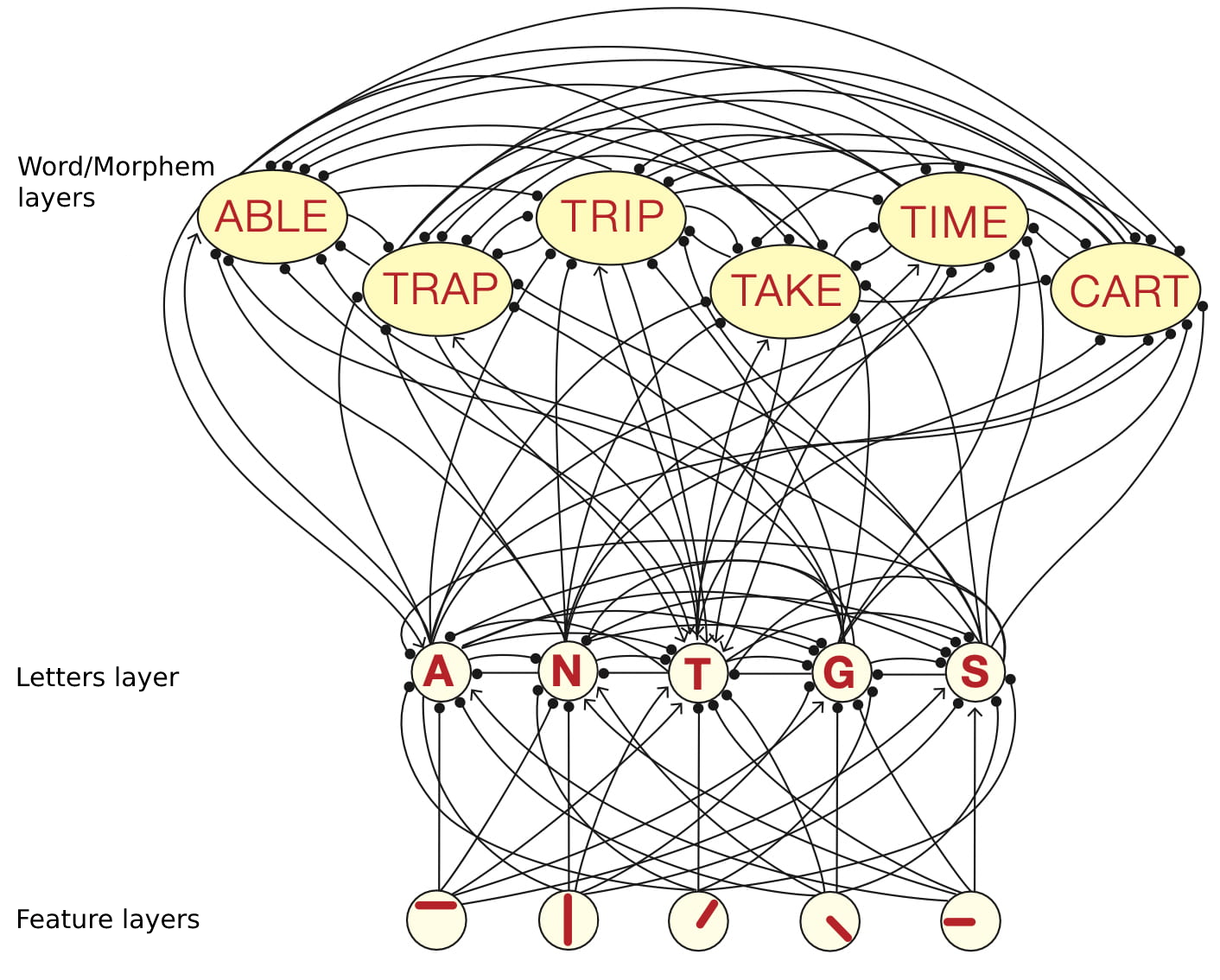

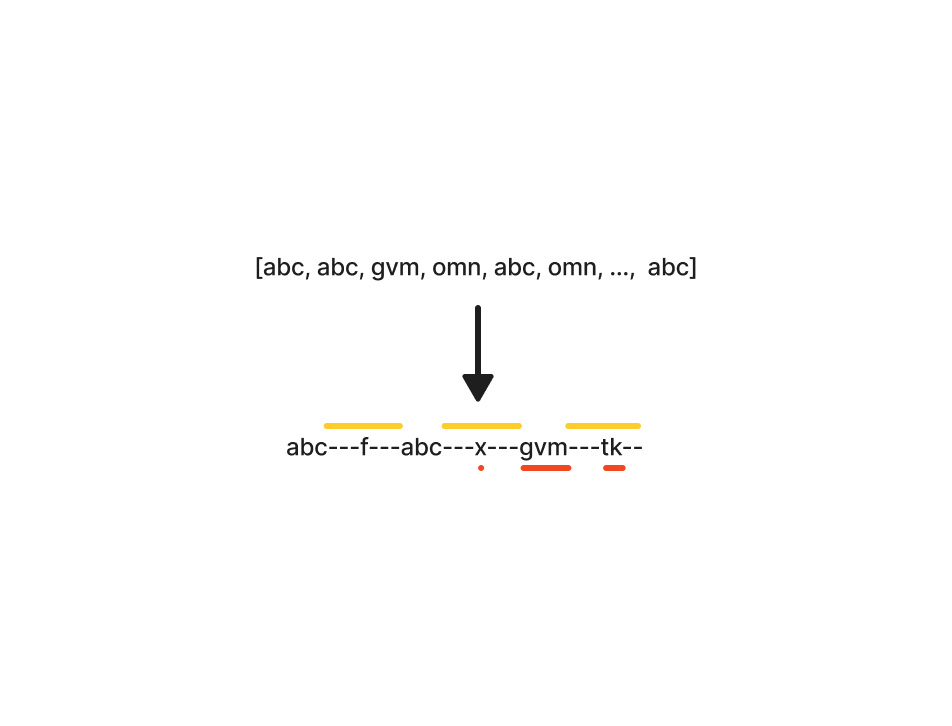

"un·break·able"

– Morpheme Detection


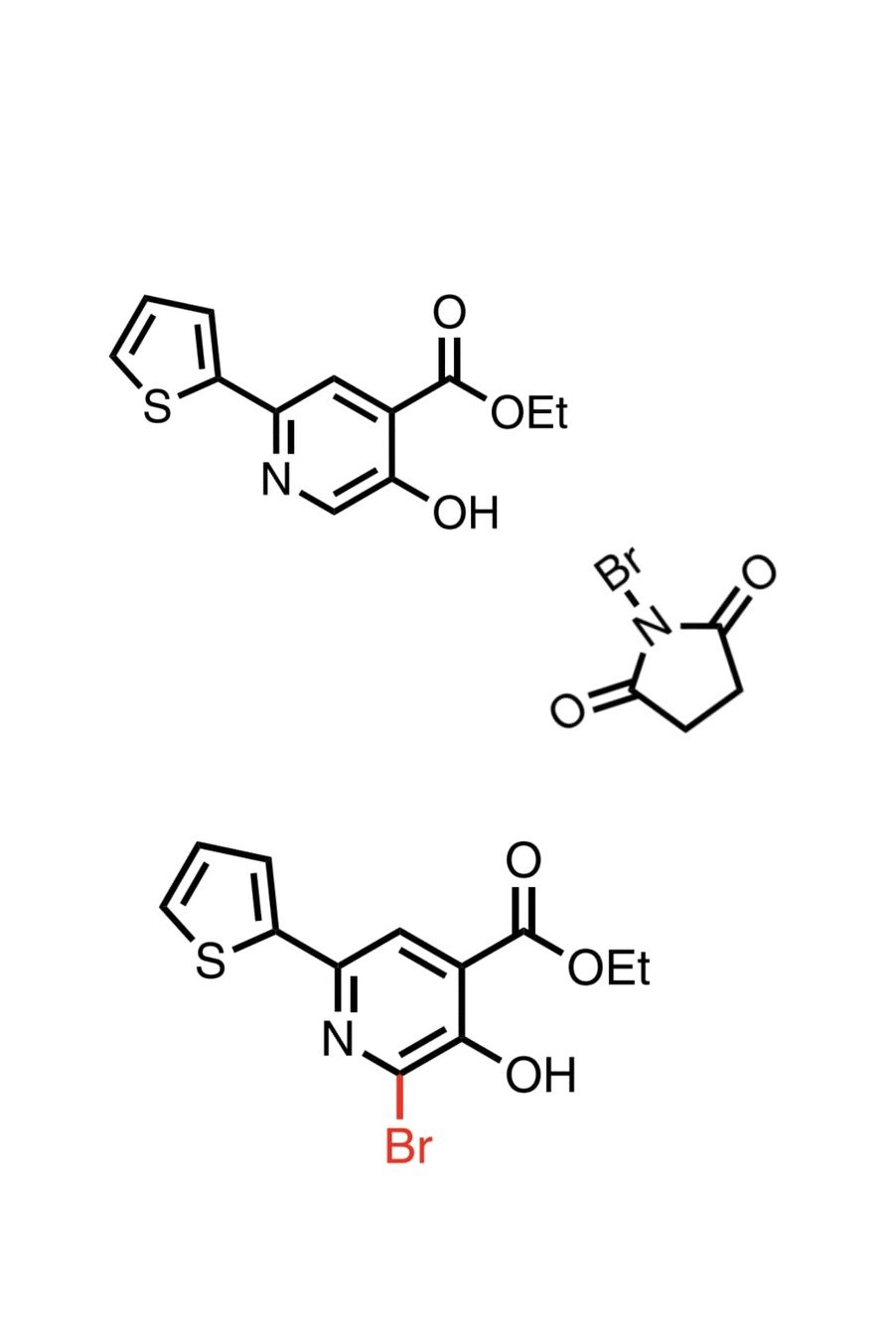

Biological Model

Adapted from Cognitive Neuroscience: The Biology of the Mind, W. W. Norton & Company (2019)


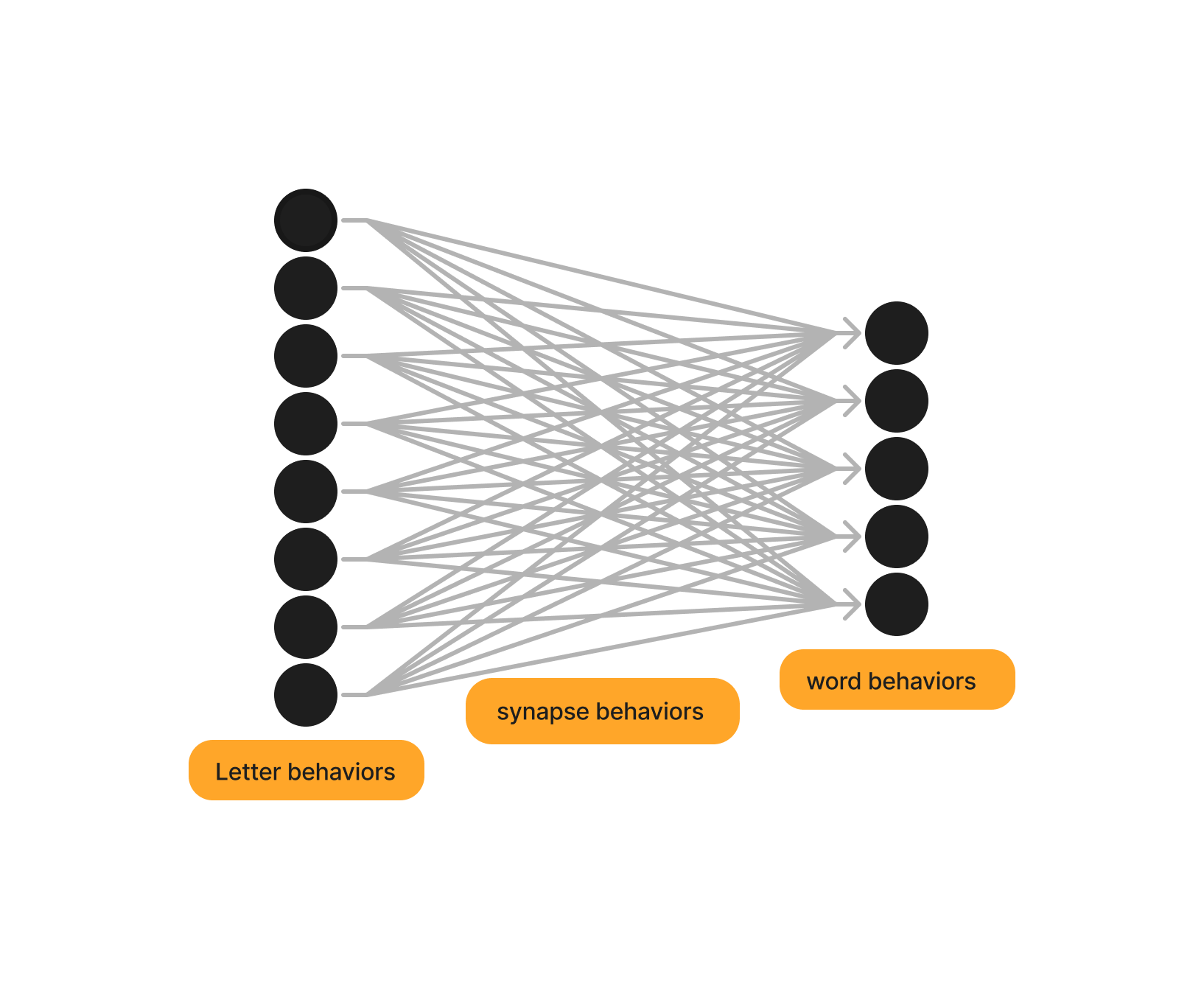

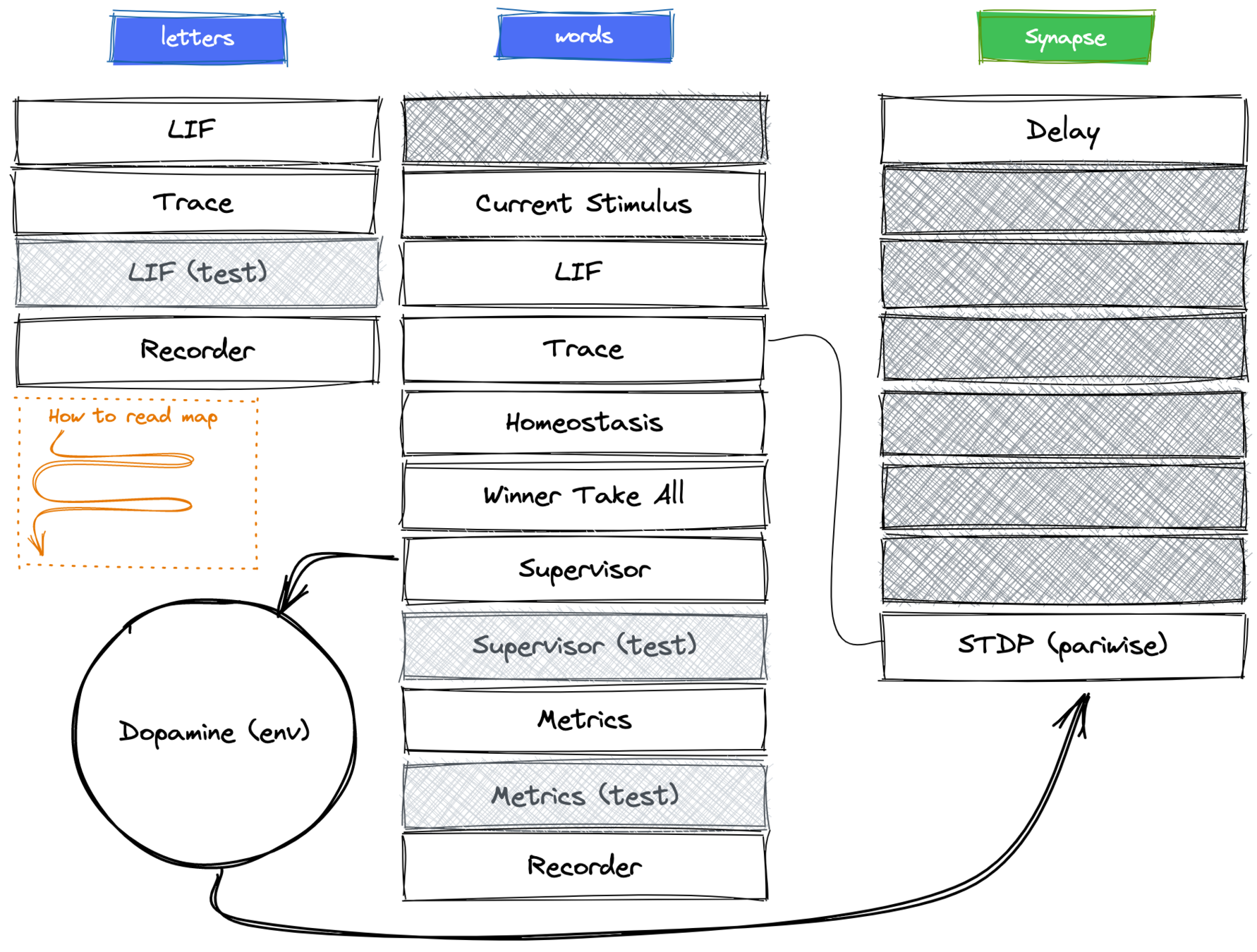

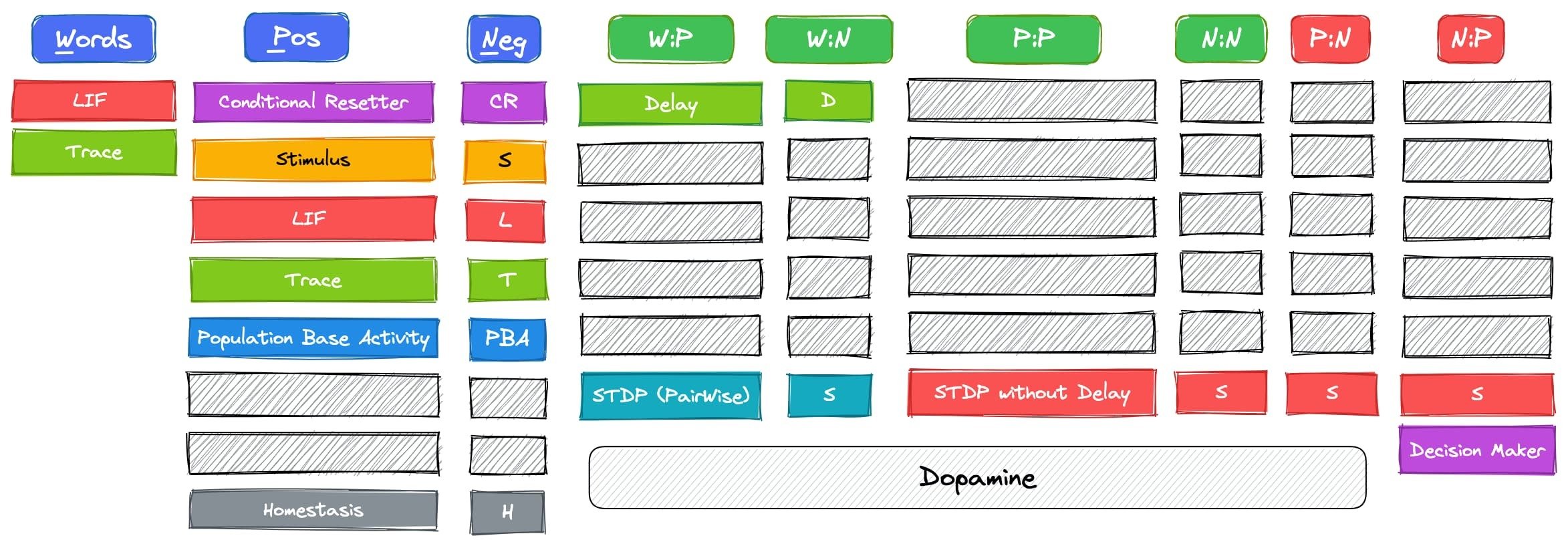

Architecture


Behaviours


1. Leaky integrate-and-fire (LIF) neurons are the building block of our biological model.
2. Delay makes it possible to accumulate the firing effect.
3. Homeostasis makes the stabilization process easier.
4. Winner-take-all simplifies the model and its output.
5. STDP optimizes the weights via the LTD and LTP mechanisms.
6. Dopamine plays an important role in reinforcement learning.
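Behaviours 3 to 6 can be illustrated together with a small sketch. It is one common formulation, not necessarily the one used in tsnn: pair-based STDP (LTP when the input spikes before the output, LTD otherwise), a winner-take-all step over output neurons, a homeostatic threshold that drifts toward a target firing rate, and a dopamine signal that scales the STDP update (reward keeps its sign, punishment reverses it). All function names, the `weights[j][i]` layout, and the constants are hypothetical.

```python
import math

def stdp_dw(t_pre, t_post, a_plus=0.05, a_minus=0.055,
            tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for one pre/post spike pair."""
    dt = t_post - t_pre
    if dt > 0:   # pre fired before post: long-term potentiation (LTP)
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:   # pre fired after post: long-term depression (LTD)
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0

def winner_take_all(potentials, thresholds):
    """Index of the neuron furthest above its threshold, or None if none fires."""
    margins = [v - th for v, th in zip(potentials, thresholds)]
    best = max(range(len(margins)), key=lambda i: margins[i])
    return best if margins[best] >= 0 else None

def homeostasis(thresholds, winner, target_rate=0.1, eta=0.01):
    """Nudge thresholds toward a target rate: the winner's rises, the others' fall."""
    return [th + eta * ((1.0 if j == winner else 0.0) - target_rate)
            for j, th in enumerate(thresholds)]

def reward_modulated_stdp(weights, pre_times, winner, t_post, dopamine,
                          w_min=0.0, w_max=1.0):
    """Dopamine-scaled STDP on the winner's incoming weights (updated in place).

    weights[j][i] is the weight from input i to output neuron j.
    pre_times[i] is the spike time of input i, or None if it was silent.
    dopamine > 0 applies STDP as-is; dopamine < 0 reverses its sign.
    """
    for i, t_pre in enumerate(pre_times):
        if t_pre is None:
            continue
        dw = dopamine * stdp_dw(t_pre, t_post)
        weights[winner][i] = min(w_max, max(w_min, weights[winner][i] + dw))
    return weights


# Usage: neuron 1 wins, then a reward strengthens its causal inputs.
thresholds = [1.0, 1.0, 1.0]
winner = winner_take_all([0.2, 1.3, 0.9], thresholds)
thresholds = homeostasis(thresholds, winner)
weights = [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]]
reward_modulated_stdp(weights, [2, 8], winner, t_post=5, dopamine=1.0)
```

Restricting the update to the winner is what lets winner-take-all simplify learning: only one neuron's synapses change per decision, and the homeostatic threshold keeps a single neuron from winning every time.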


Data Specification

p = 0.9

tau = 7

noise = 0.8

1000 words

Experiments

The longer it takes for the model to converge, the darker it grows.


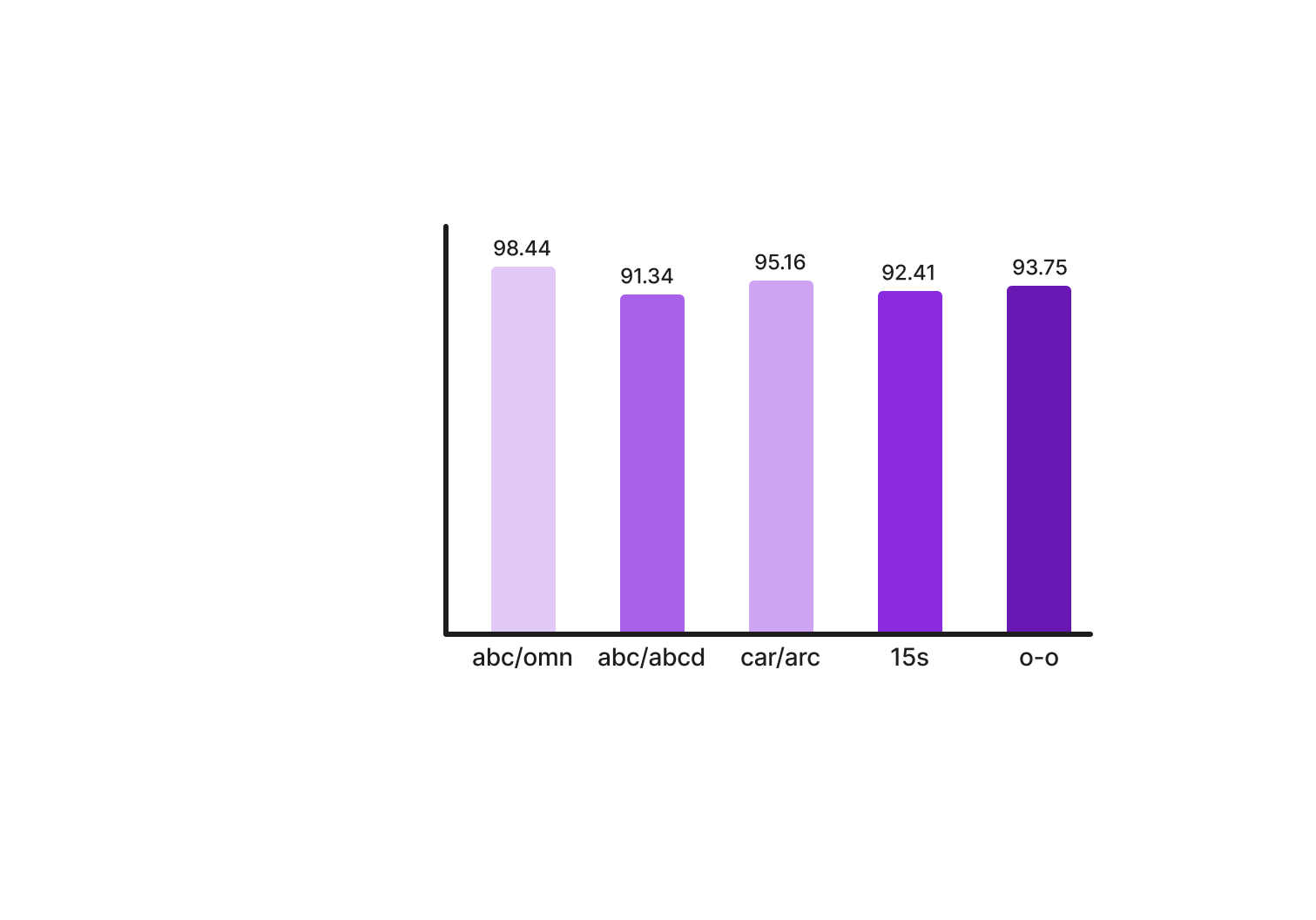

"This show was an amazing, fresh & innovative idea in the 70s when it first aired..."

– Sentiment Analysis


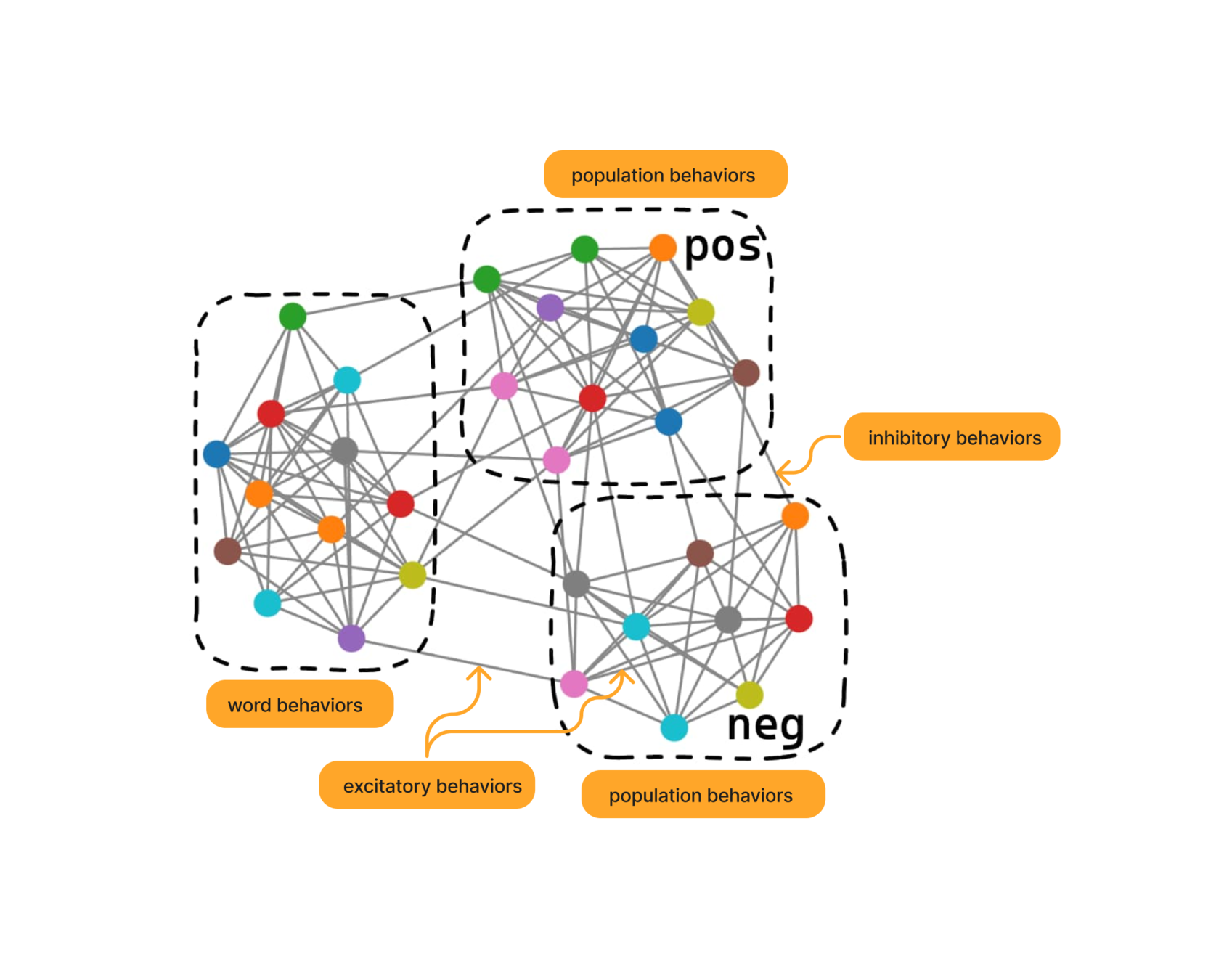

Architecture


Behaviours


1. Population Base Activity regulates the long-term effect on a neuron's decision.
2. Stimulus merges and accumulates the currents of other populations.
3. Trace makes the attention mechanism possible.
4. Population Winner is a strategy for output detection in neuron populations.
5. Condition Reseter separates the simulation into an inference phase and a learning phase.
6. Decision Maker selects the most suitable neuron population.
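As a rough illustration of Trace and Population Winner, the sketch below keeps an exponentially decaying trace per neuron (so recent spikes weigh more than older ones, which is one way a trace can act like attention over a sequence) and then picks the population whose neurons carry the largest summed trace. This is an assumption about how these components could work, not the tsnn code; the function names, `tau`, and the `"positive"`/`"negative"` labels are hypothetical.

```python
import math

def update_traces(traces, spikes, tau=10.0):
    """Decay every trace by exp(-1/tau), then add 1 where a spike occurred."""
    decay = math.exp(-1.0 / tau)
    return [x * decay + (1.0 if s else 0.0) for x, s in zip(traces, spikes)]

def run_population(spike_trains, tau=10.0):
    """Feed a sequence of spike vectors through the traces; return the final traces."""
    traces = [0.0] * len(spike_trains[0])
    for spikes in spike_trains:
        traces = update_traces(traces, spikes, tau)
    return traces

def population_winner(traces_by_population):
    """Label of the population whose neurons have the largest summed trace."""
    return max(traces_by_population,
               key=lambda label: sum(traces_by_population[label]))


# Usage: two populations observed over three time steps.
positive = run_population([[1, 0], [1, 1], [0, 1]])
negative = run_population([[0, 0], [0, 1], [0, 0]])
print(population_winner({"positive": positive, "negative": negative}))  # positive
```

Summing traces rather than raw spike counts is a design choice here: it lets late activity, close to the decision time, dominate the outcome.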


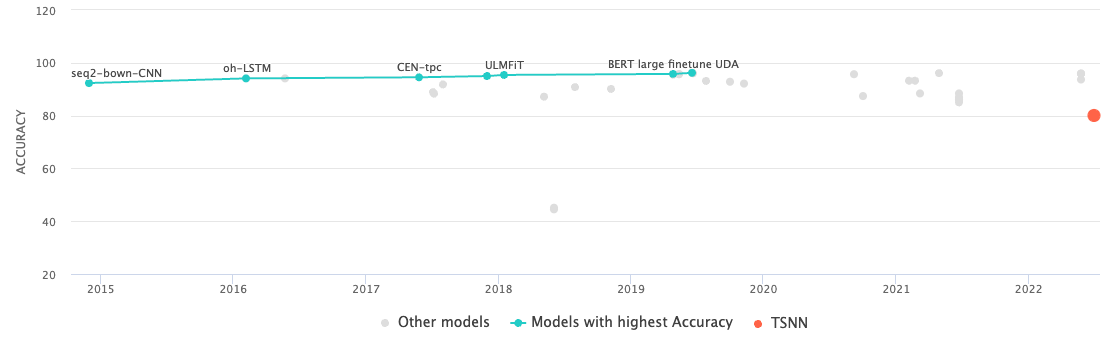

Benchmarks

Adapted from Sentiment Analysis on IMDb benchmark by paperswithcode.com


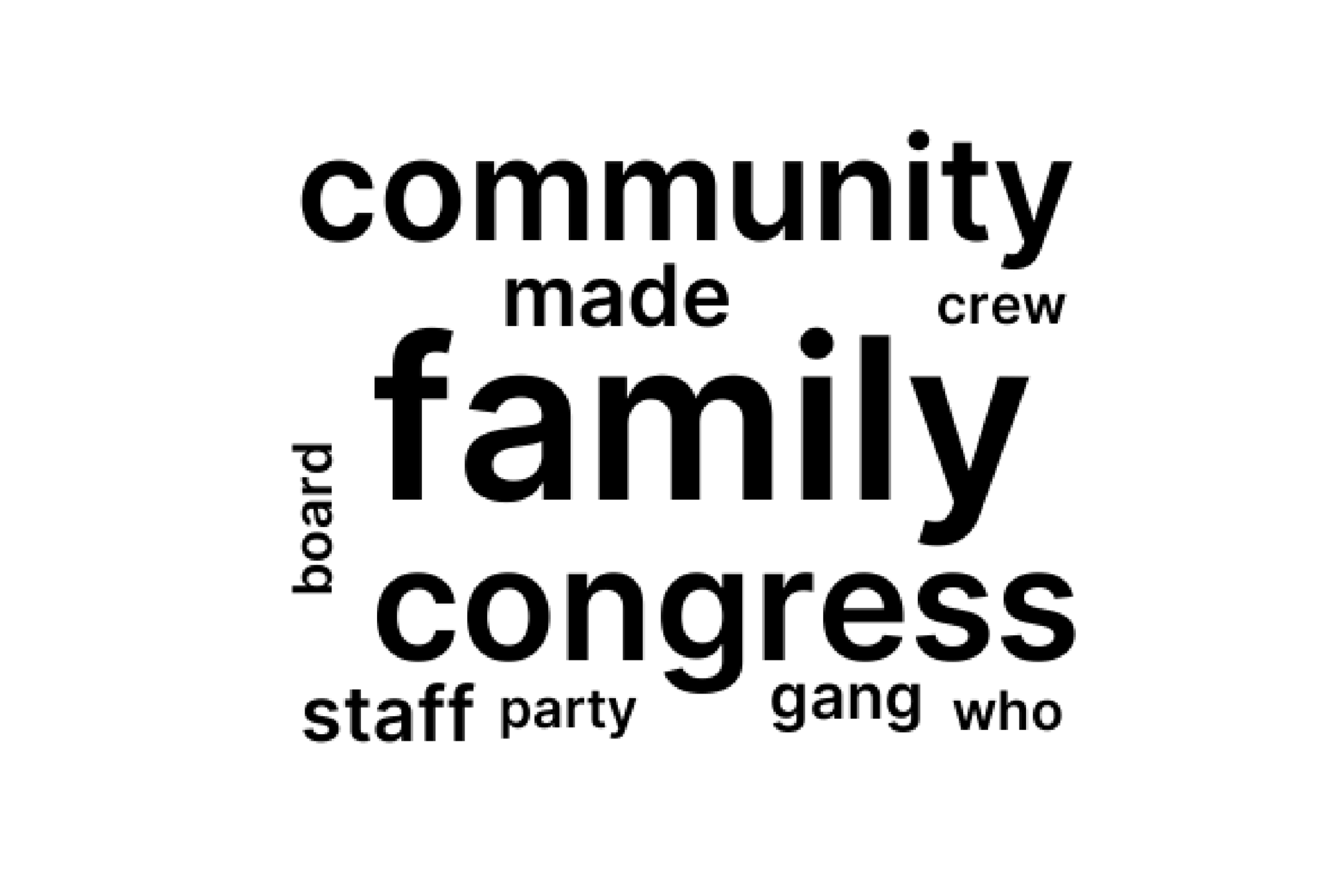

FlyVec

2021


Images credit: https://flyvec.vizhub.ai


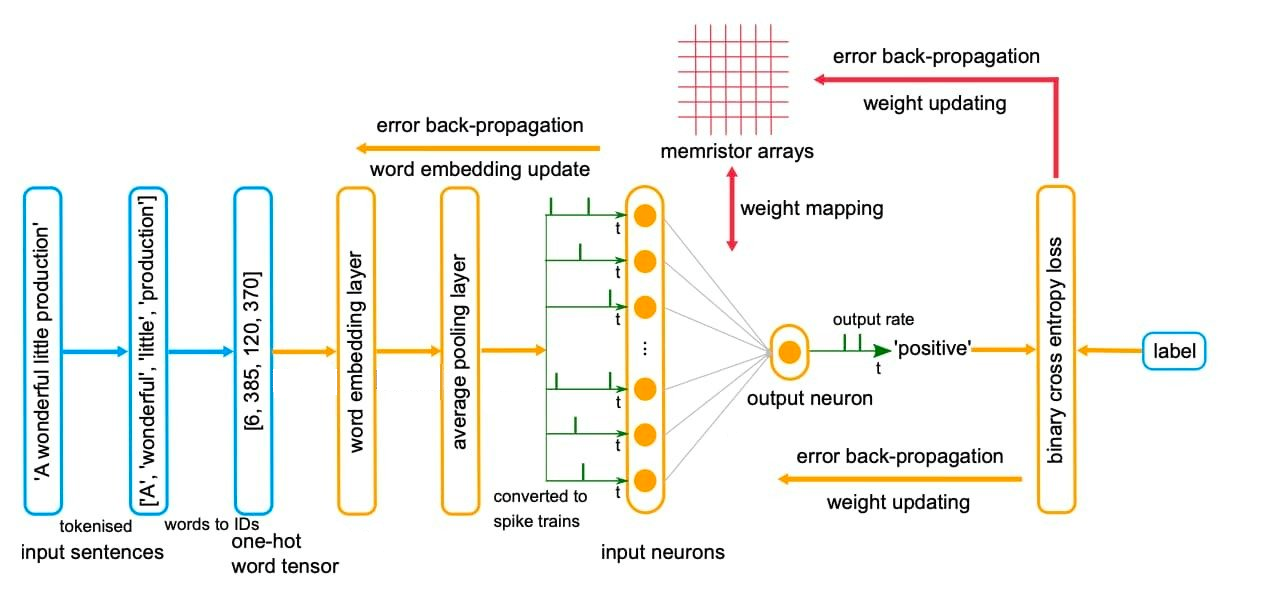

Memristor

2022

Image credit: "Text Classification in Memristor-based Spiking Neural Networks" paper


MODELS & COMPARISONS

Memristor, sentiment analysis model: Partially bio-plausible; Delay Learning; Generality; Scalability

tsnn, transformer-inspired architecture (NEW): Fully bio-plausible; Delay Learning; Generality

FlyVec, word embedding model: Bio-plausible; Delay Learning; Generality; Scalability


Future Work

Model expansion

Increase the number of neurons in the neural population by increasing the model size layer by layer.

Multi-domain

Benchmark the model's performance on additional data domains, such as images (using Gabor filters) or other NLP tasks.

Mapping

Achieve optimal convergence by reordering the mapping function according to the order in which the desired outputs are first seen.


Thanks for your attention

STACK & SOURCE CODE


tsnn

By Amirhossein Ebrahimi
