Running LLMs locally without network

{curiosity}

Antarctica, earlier this week. A relative's career in electronics and radio, with a focus on learning and experimentation, led to a role as Technical Comms Officer at Davis Station.

1. Your skill in software engineering is founded on your personal learning experience, applied to problem solving.

2. There will always be new ways proposed to solve problems. Some will be better than those you already know. Some will not, and you need to be able to discern the difference.

3. Learning about new tech developments keeps engineers competitive and relevant.

4. Curiosity breeds fun and innovation, and your squad should reserve capacity for this.

Curiosity and learning

1. What interests do I have?

2. Have I been meaning to look at a particular new tech domain or framework?

3. How can I connect these dots and research/experiment in a fun way?

If you want or need to spend time outside of work..

* Don't over-commit - family and other personal things come first, but do try to find one small block of time to learn. 30 minutes a week of intentional reading on your commute?

This will give you ideas and experience that you could contribute to open source, or that could help you at work.

Curious about security in software-defined radio (https://rtl-sdr.com), and interested in satellites and space.

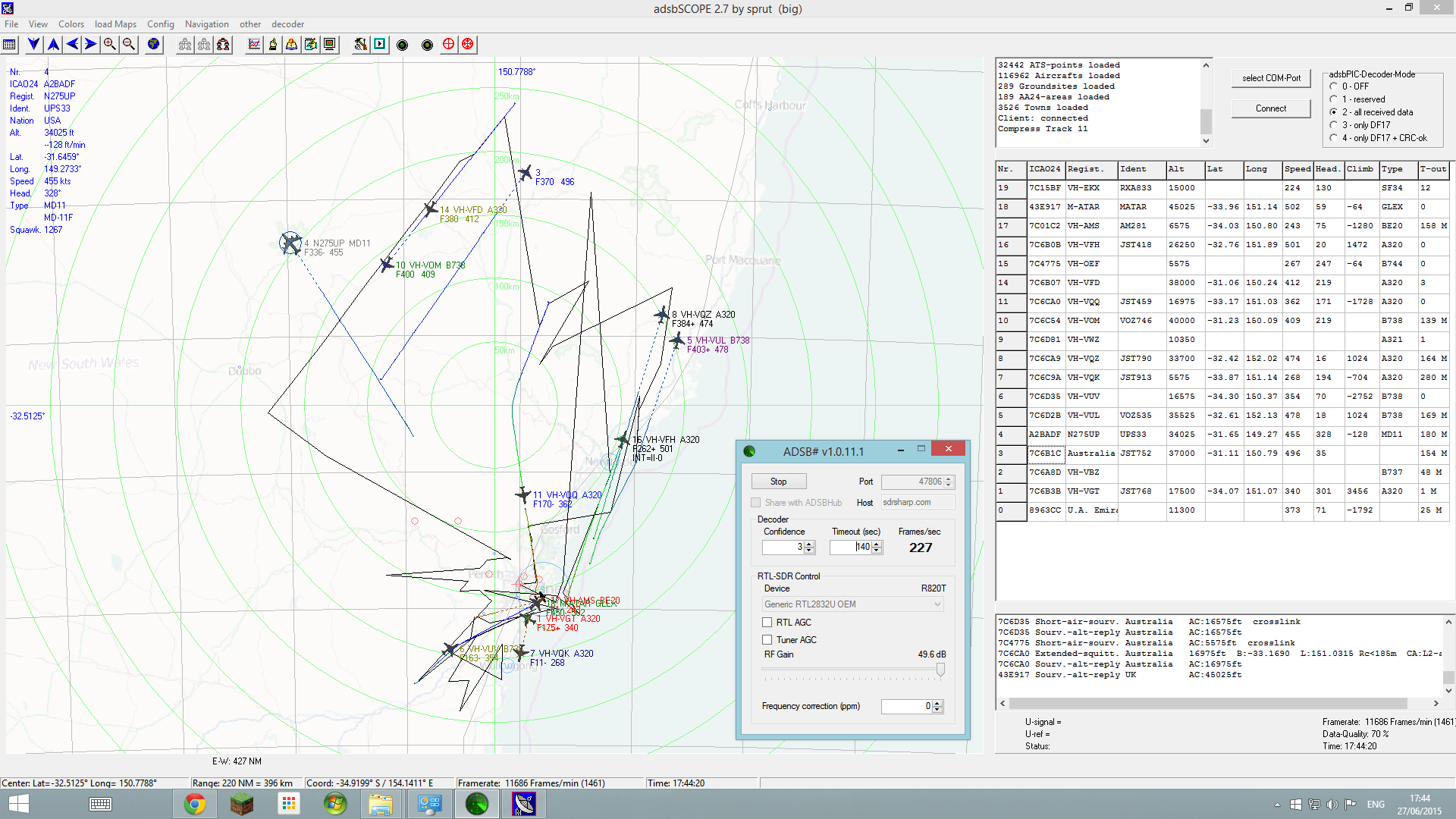

Unforeseen outcome #1: receiving flight telemetry data directly from planes via a simple antenna.

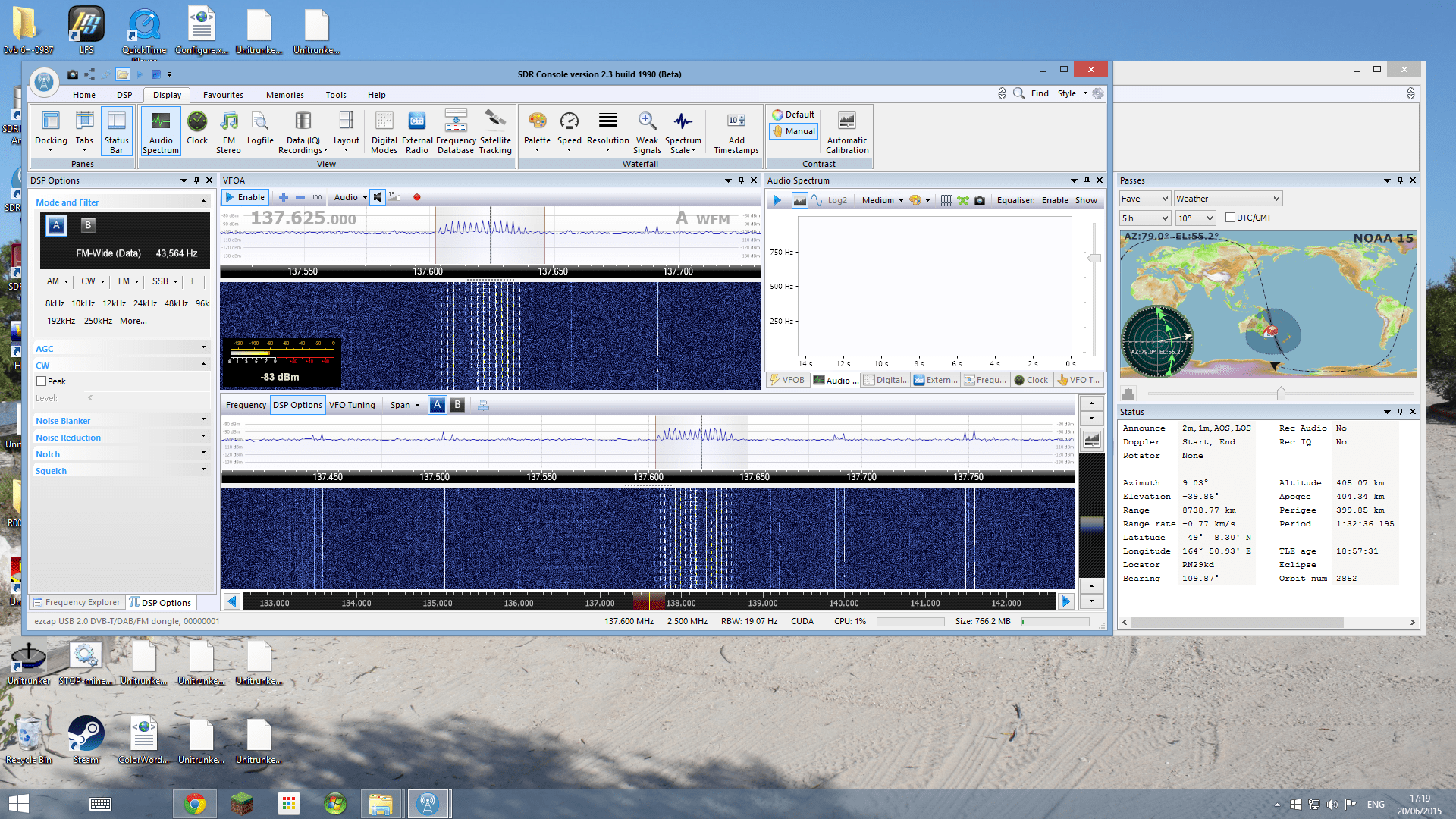

Unforeseen outcome #2: collecting raw satellite data

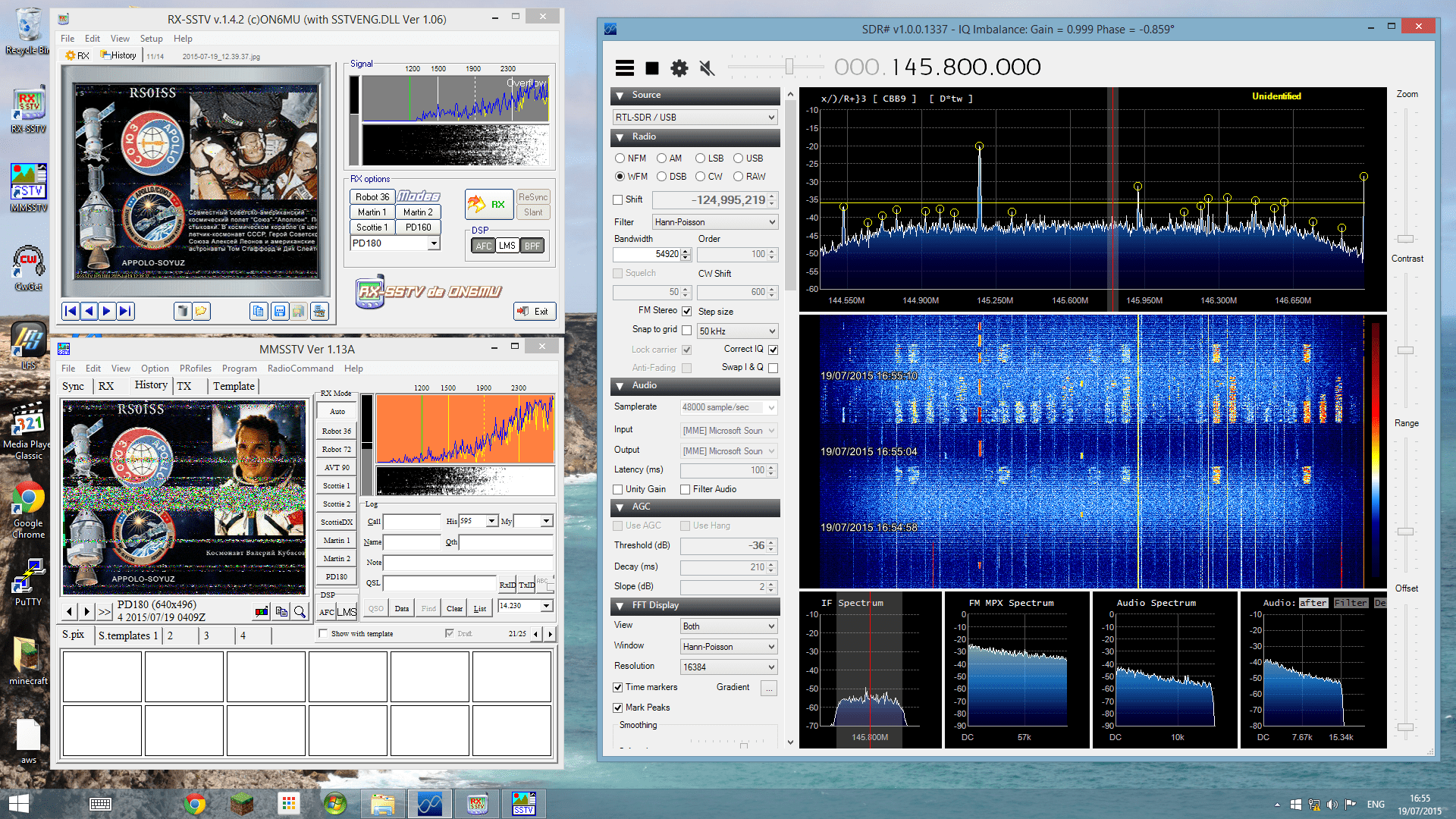

Unforeseen outcome #3: receiving SSTV images from the ISS as it passes overhead.

{demo}

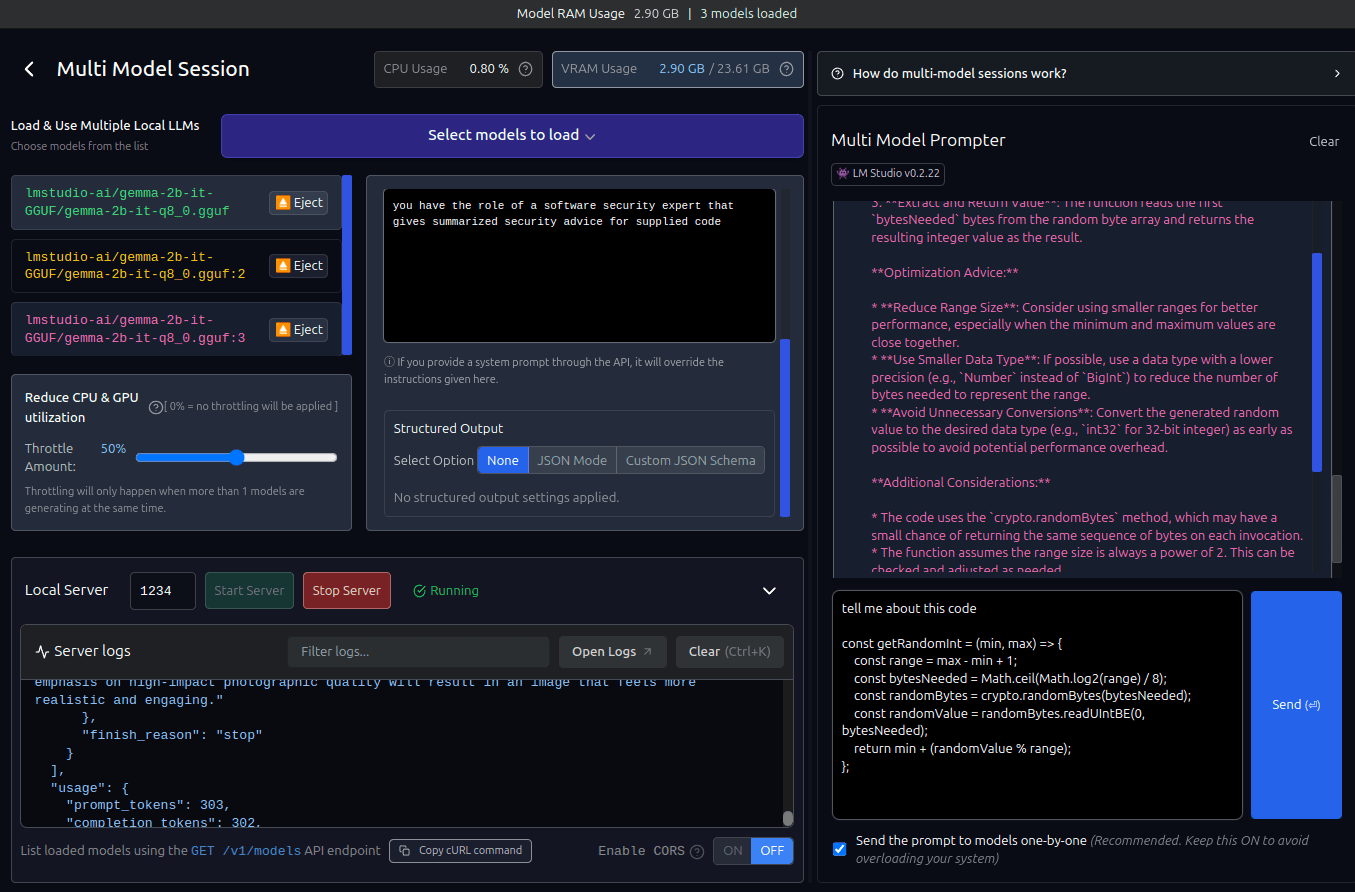

OK, switching topics to local LLMs

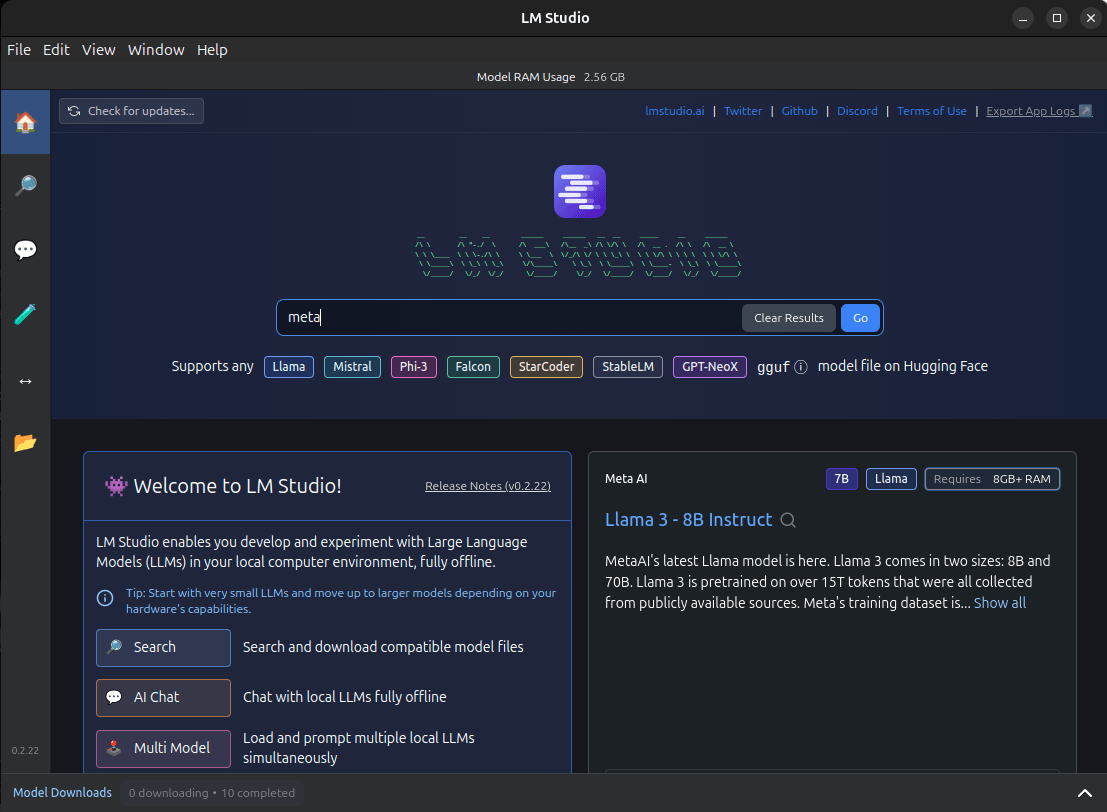

LM Studio to run open-source Large Language Models: https://lmstudio.ai/

Models from https://huggingface.co/

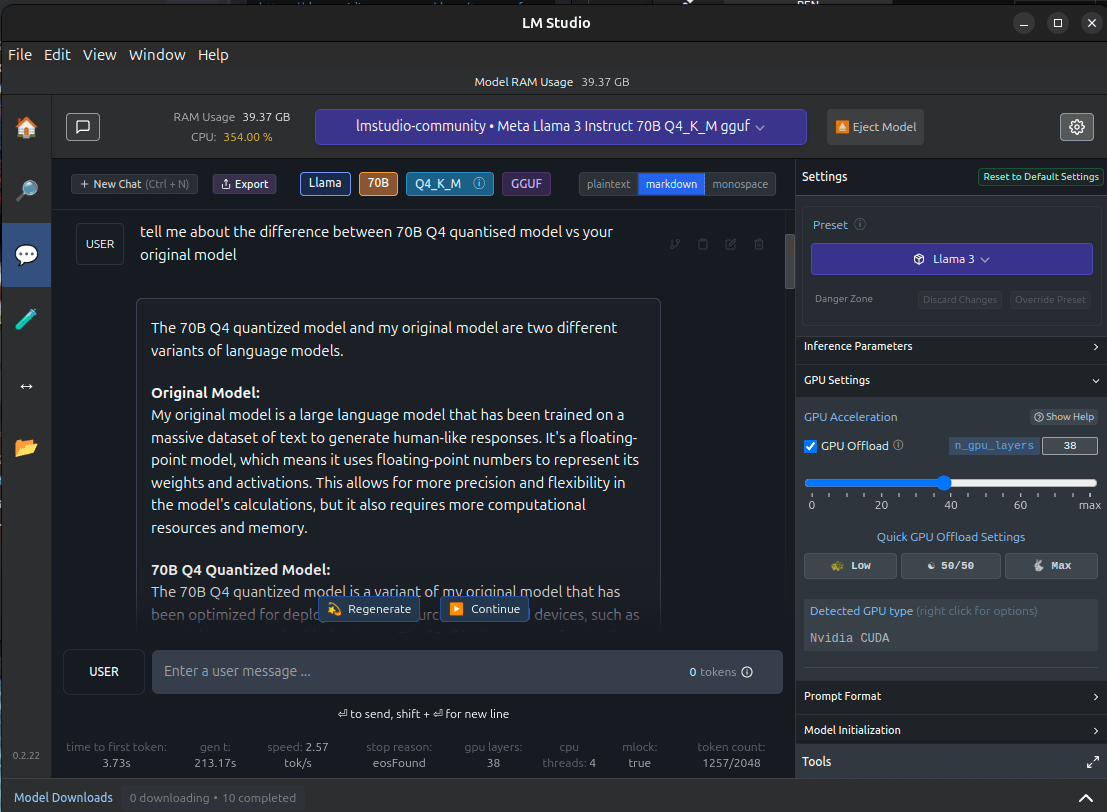

Running a 4-bit quantized version of Llama 3 locally
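As a rough sketch of why 4-bit quantization makes local use practical: the memory needed for the weights is approximately parameter count × bits per weight ÷ 8. The slide does not say which Llama 3 size was used, so the 8-billion-parameter figure below is an assumption, and the estimate ignores quantization metadata, the KV cache, and runtime overhead.

```javascript
// Rough estimate of the memory needed just to hold the model weights.
// Ignores per-block quantization metadata, the KV cache, and runtime overhead.
function estimateWeightGiB(paramCount, bitsPerWeight) {
  const bytes = (paramCount * bitsPerWeight) / 8;
  return bytes / 1024 ** 3;
}

// Assumption: the 8B variant of Llama 3 (the slide does not state the size).
const params = 8e9;

console.log(estimateWeightGiB(params, 16).toFixed(1)); // 16-bit weights: prints "14.9"
console.log(estimateWeightGiB(params, 4).toFixed(1));  // 4-bit weights: prints "3.7"
```

Under these assumptions the 4-bit weights need about a quarter of the memory of the 16-bit ones, which is what brings the model within reach of a typical laptop.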

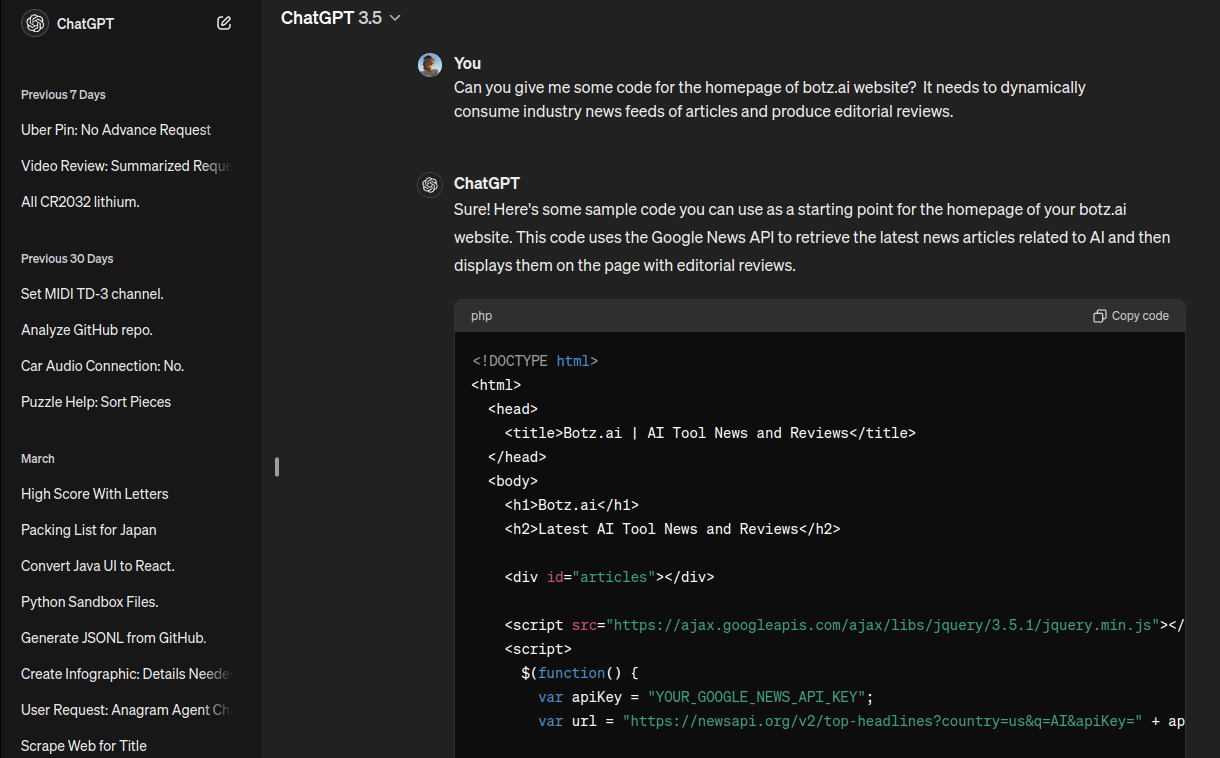

Stepping back to an earlier experiment with ChatGPT..

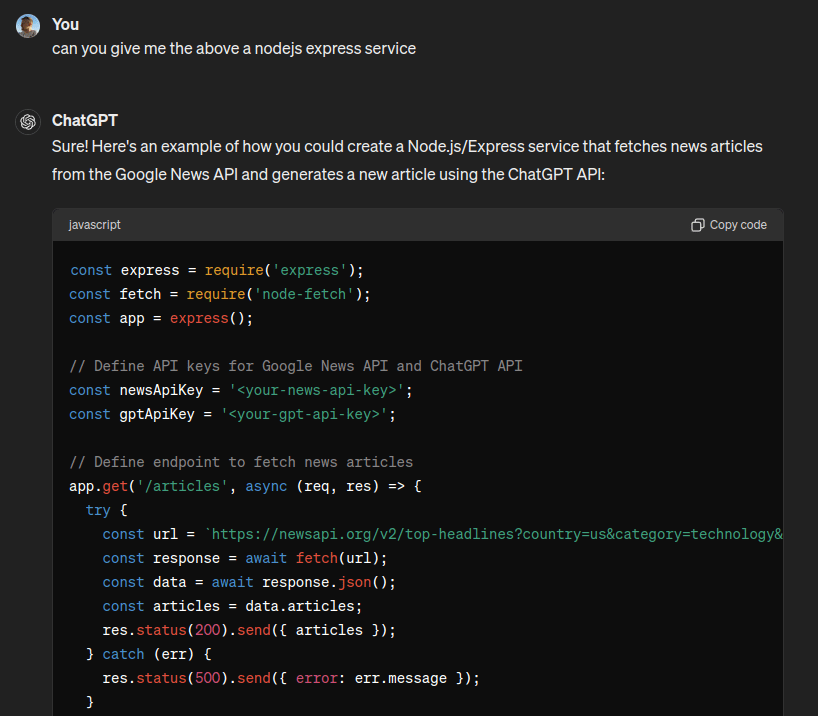

Let's split that out into a microservice..

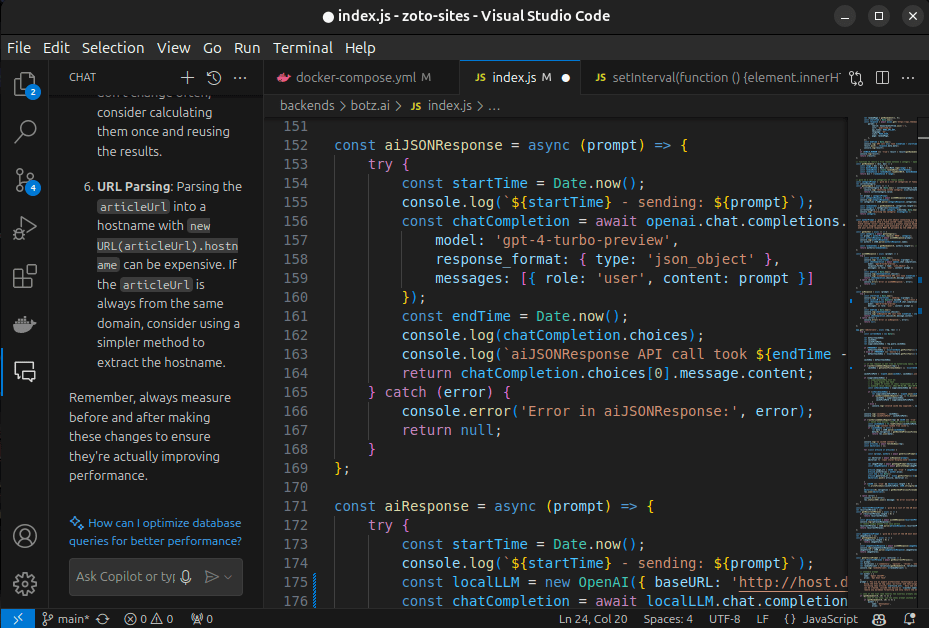

Hand over to Copilot and refine..

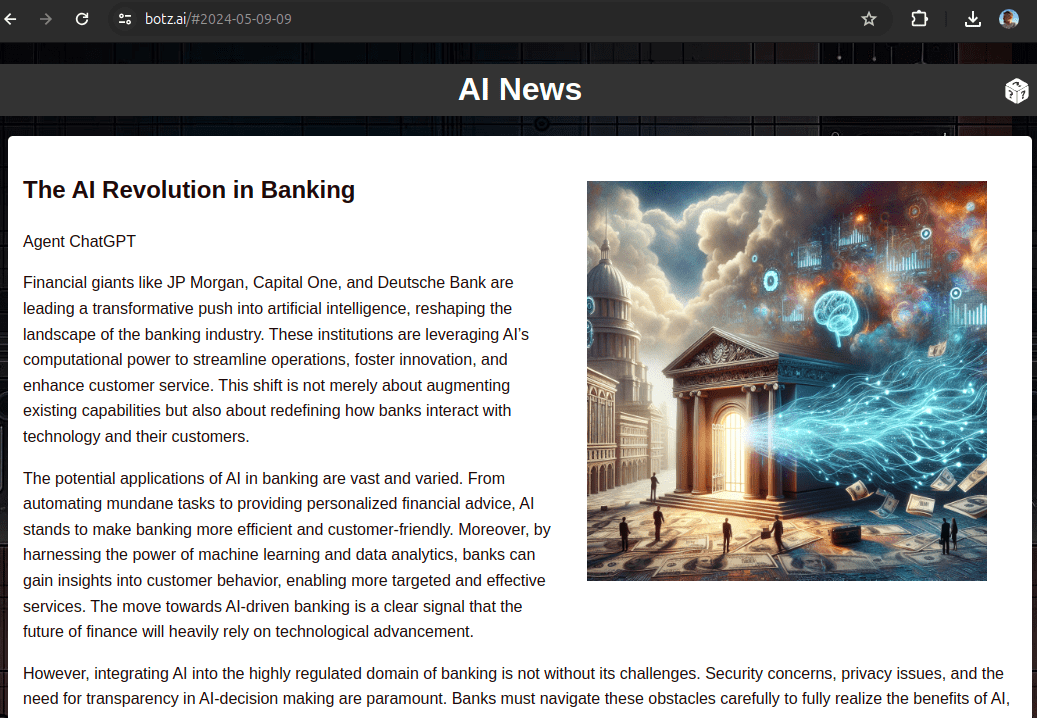

Resulting page example

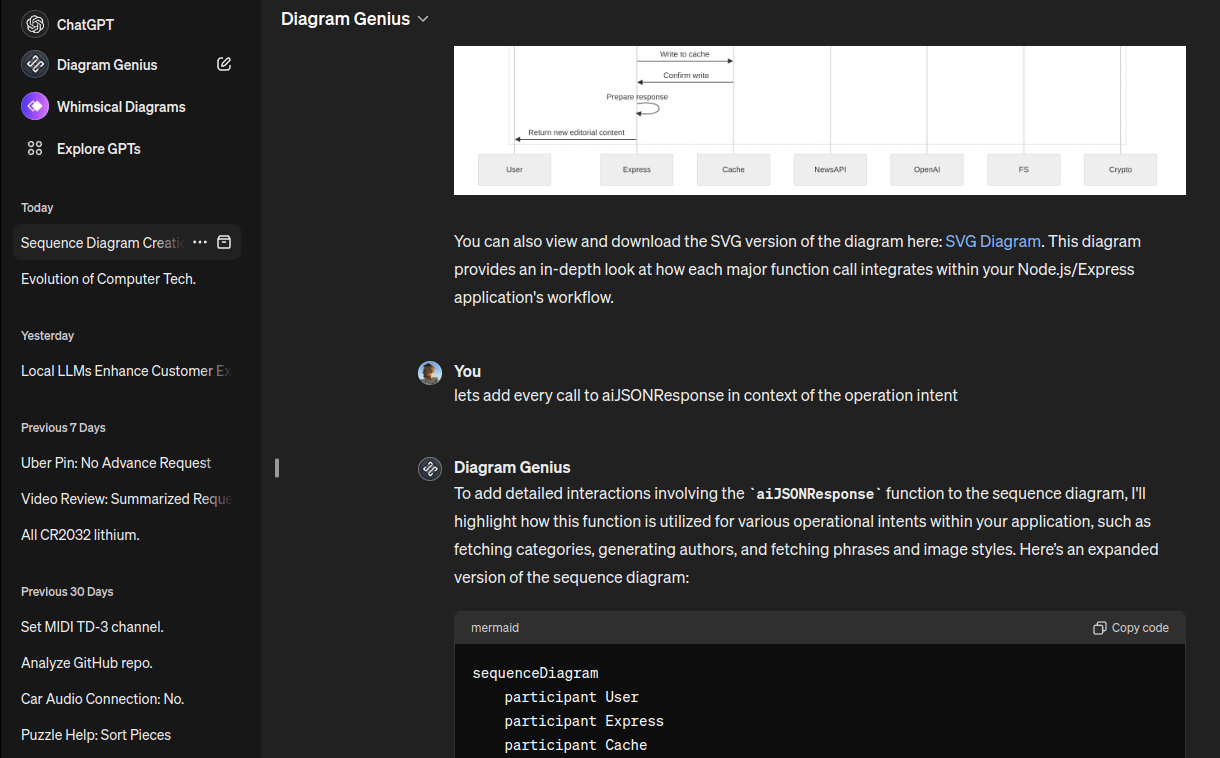

Create a sequence diagram of the microservice with a custom GPT..

Full diagram - it is correct.

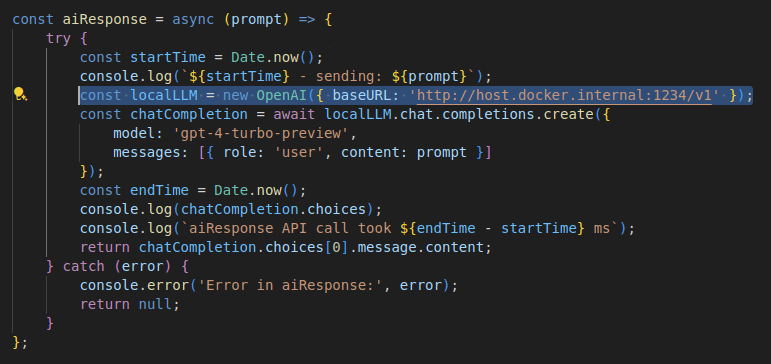

OK.. back to the present time - let's update that earlier code to point at a local service hosted by LM Studio, which uses the same contract as the OpenAI APIs. Now we can run our ChatGPT code against any local LLM.

It still works, but the local LLM in this example is not really understanding all the dimensions of the prompt.
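A minimal sketch of what that switch can look like, not the code from the demo. Because the local server accepts the same chat-completions request shape, only the base URL changes. The local address below assumes LM Studio's default server settings, and the model name and API key are hypothetical placeholders.

```javascript
// Assumption: LM Studio's local server running at its default address.
const LOCAL_BASE_URL = "http://localhost:1234/v1";
const OPENAI_BASE_URL = "https://api.openai.com/v1";

// Build a chat-completions request in the OpenAI wire format.
// The same request works against either base URL.
function buildChatRequest(baseUrl, apiKey, model, messages) {
  return {
    url: `${baseUrl}/chat/completions`,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({ model, messages, temperature: 0.7 }),
    },
  };
}

// Send the request and return the assistant's reply text.
// fetchImpl is injectable so the function can be exercised without a server.
async function chat(baseUrl, apiKey, model, messages, fetchImpl = fetch) {
  const { url, init } = buildChatRequest(baseUrl, apiKey, model, messages);
  const response = await fetchImpl(url, init);
  if (!response.ok) {
    throw new Error(`Request failed with status ${response.status}`);
  }
  const data = await response.json();
  return data.choices[0].message.content;
}

// Usage (requires a running local server; "local-model" is a placeholder name):
// chat(LOCAL_BASE_URL, "not-needed", "local-model", [
//   { role: "user", content: "Summarise this page in one sentence." },
// ]).then(console.log);
```

Keeping the base URL as a parameter means the hosted and local back ends can be swapped without touching the calling code.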

Also, we can run multiple models with different personas and interact with them at the same time..
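One way to sketch the multi-persona idea: give each persona its own system prompt and model, then send the same user prompt to all of them in parallel. The persona names, model identifiers, and the `sendChat` callback are hypothetical, introduced only for illustration.

```javascript
// Hypothetical personas: each pairs a model identifier with a system prompt.
const personas = [
  { name: "architect", model: "model-a", system: "You are a cautious software architect." },
  { name: "tester", model: "model-b", system: "You are a sceptical QA engineer." },
];

// Build the message list for one persona in the OpenAI chat format.
function buildPersonaMessages(persona, userPrompt) {
  return [
    { role: "system", content: persona.system },
    { role: "user", content: userPrompt },
  ];
}

// Ask every persona the same question concurrently.
// sendChat(model, messages) is supplied by the caller and must return a
// Promise that resolves to the reply text.
async function askAll(personaList, userPrompt, sendChat) {
  return Promise.all(
    personaList.map(async (persona) => ({
      persona: persona.name,
      reply: await sendChat(persona.model, buildPersonaMessages(persona, userPrompt)),
    }))
  );
}

// Usage with a stand-in sender that needs no server:
// askAll(personas, "Should we split this into a microservice?",
//   async (model, messages) => `[${model}] saw ${messages.length} messages`
// ).then(console.log);
```

In practice `sendChat` would post to the local OpenAI-compatible endpoint; injecting it keeps the persona logic independent of the transport.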

1. An experiment on a phone quickly escalated.

2. Engineers need to get across this tech now.

3. AI is not a hammer for every nail, but..

4. The way we work is going to change.

Happy to talk specifics offline.

Some thoughts

const currentCuriosity = await me.getCuriosityTopic();

const action = await consider(currentCuriosity);

schedule(action);

fork & execute..

Curiosity - Running LLMs locally

By Andrew Vaughan
