Writing Reusable and Reproducible Pipelines for Training Neural Networks

Andrey Lukyanenko

Senior DS, Careem

About me

- ~4 years as ERP-system consultant

- self-study to switch careers

- DS since 2017

- Led a medical chatbot project

- Led an R&D CV team

- Senior DS in anti-fraud team

Content

- Styles of writing training code

- Reusable pipeline: why do it and how to start

- Functionality of a training pipeline

Styles of writing code

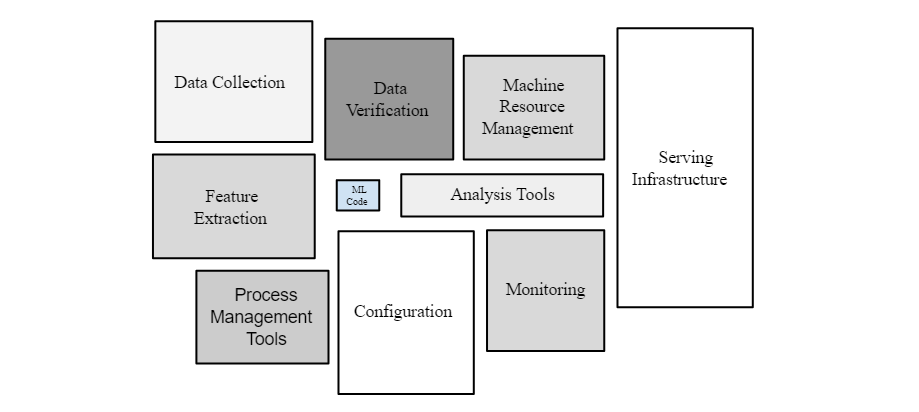

Training pipeline

Reasons for writing a pipeline

- Writing everything from scratch takes time and is error-prone

- You end up repeating the same pieces of code anyway

- Better understanding of how things work

- Standardization among the team

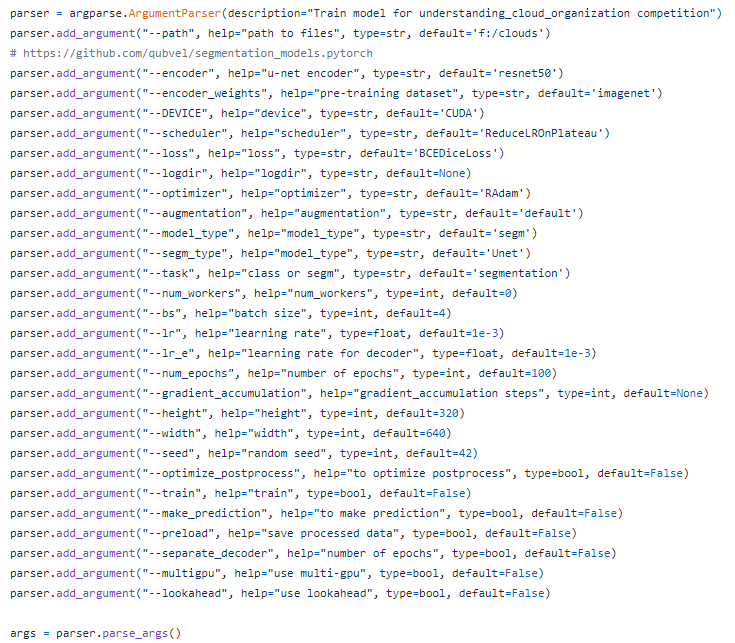

My pipeline

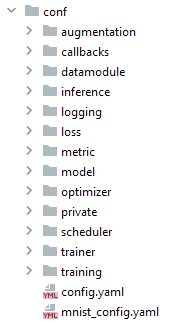

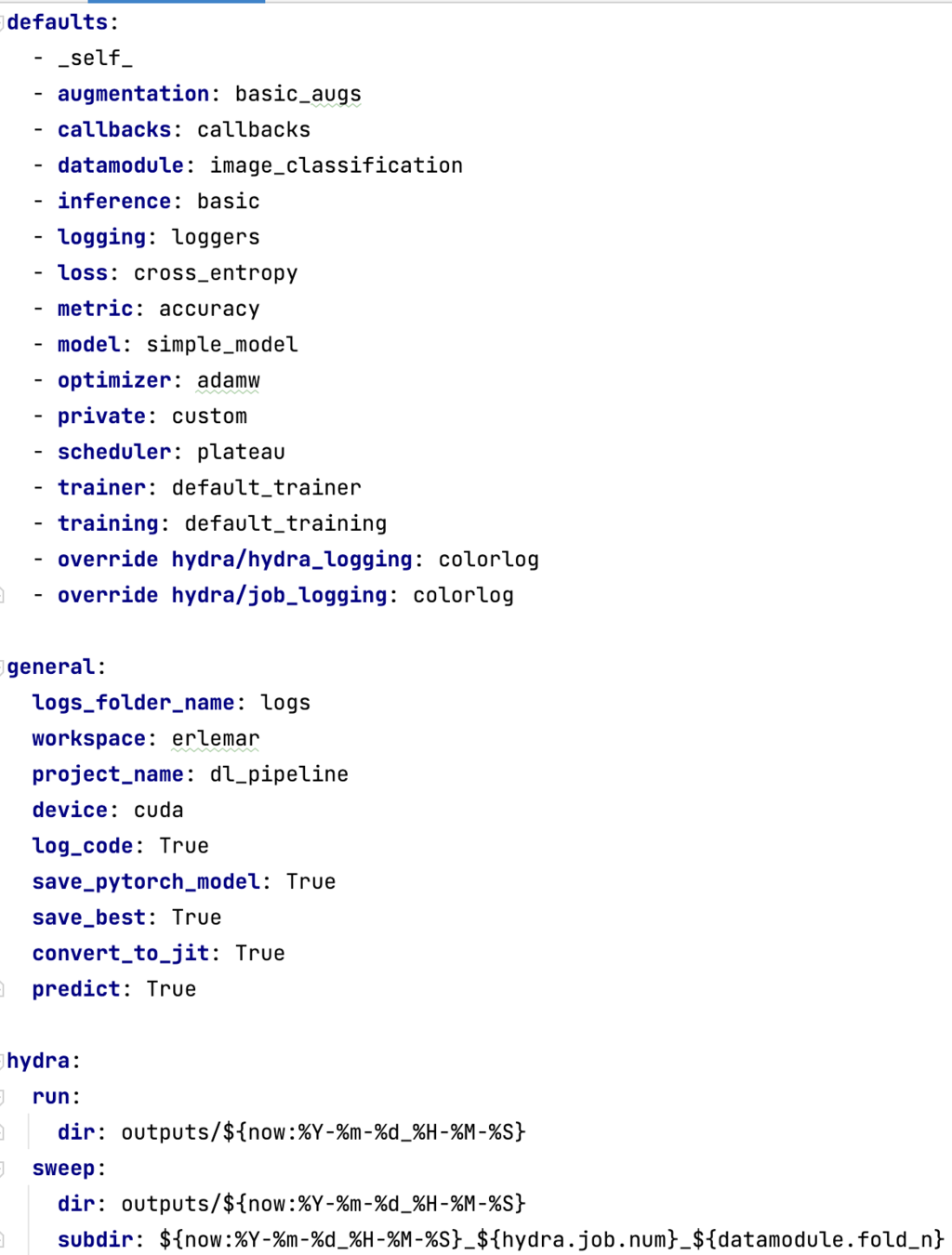

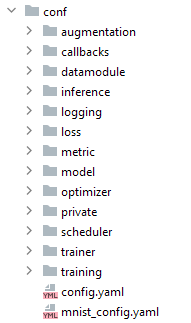

My pipeline: core ideas

- Replaceable modules

- Hydra/OmegaConf for configuration files

- Values in configuration files can be changed from the CLI

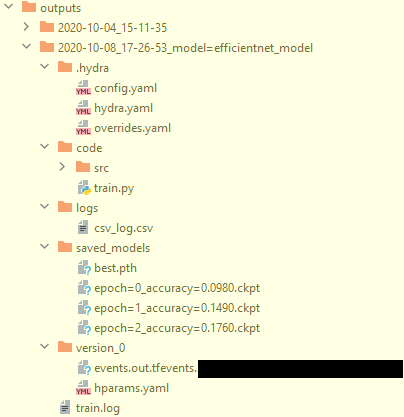

- Logging and reproducibility

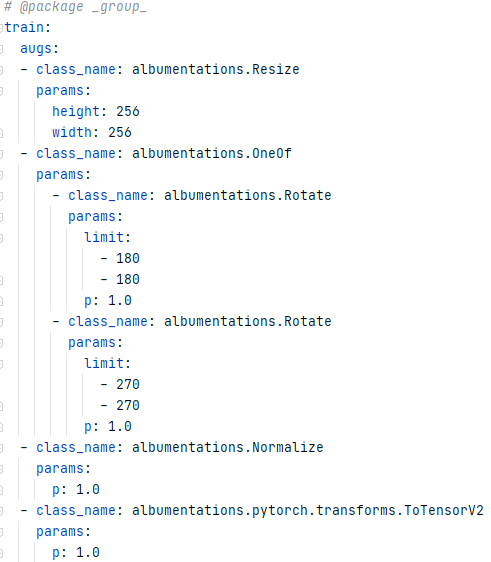
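Hydra composes the config from YAML files and then applies `key.subkey=value` overrides from the command line, using its own override grammar. The core idea can be sketched with the standard library alone on a plain nested dict. `apply_overrides`, `parse_value` and the example keys are hypothetical, and the sketch skips Hydra features such as config groups and typed OmegaConf nodes. Like Hydra, it rejects keys that are not already in the config (Hydra requires a `+` prefix to add a new key).

```python
import ast
import copy
from typing import Any, Dict, List


def parse_value(raw: str) -> Any:
    """Interpret '0.01' as float, '2' as int, 'true' as bool; otherwise keep the string."""
    lowered = raw.lower()
    if lowered in ('true', 'false'):
        return lowered == 'true'
    try:
        return ast.literal_eval(raw)
    except (ValueError, SyntaxError):
        return raw  # e.g. 'resnet34' or 'torch.optim.SGD' stay strings


def apply_overrides(cfg: Dict[str, Any], overrides: List[str]) -> Dict[str, Any]:
    """Return a copy of cfg with each 'a.b.c=value' override applied."""
    result = copy.deepcopy(cfg)  # never mutate the base config
    for override in overrides:
        key, raw_value = override.split('=', 1)
        *parents, leaf = key.split('.')
        node = result
        for part in parents:
            node = node[part]  # KeyError for an unknown section
        if leaf not in node:
            raise KeyError(f'Unknown config key: {key}')
        node[leaf] = parse_value(raw_value)
    return result


# Hypothetical base config, shaped like the keys used on the following slides
base = {
    'model': {'encoder': {'params': {'arch': 'resnet18'}}},
    'optimizer': {'class_name': 'torch.optim.AdamW', 'params': {'lr': 0.001}},
    'trainer': {'gpus': 1},
}
cfg = apply_overrides(base, ['model.encoder.params.arch=resnet34', 'trainer.gpus=2'])
print(cfg['model']['encoder']['params']['arch'], cfg['trainer']['gpus'])  # resnet34 2
```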

My pipeline

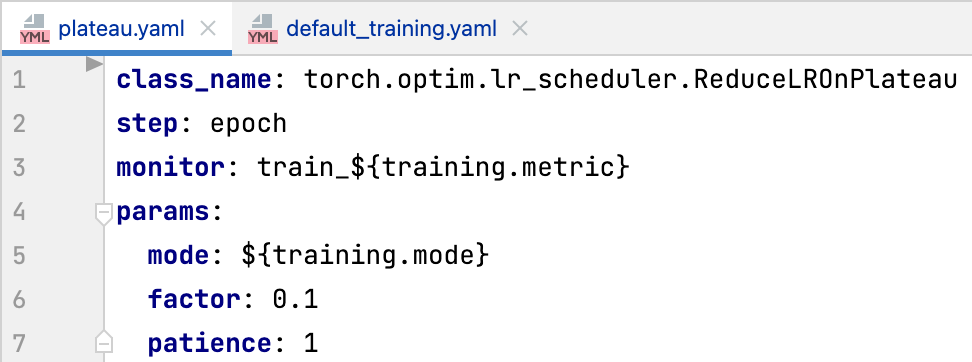

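```python
def configure_optimizers(self):
    # load_obj: project helper that imports a class from its dotted path in the config
    optimizer = load_obj(self.cfg.optimizer.class_name)(self.model.parameters(),
                                                        **self.cfg.optimizer.params)
    scheduler = load_obj(self.cfg.scheduler.class_name)(optimizer,
                                                        **self.cfg.scheduler.params)

    return (
        [optimizer],
        [{'scheduler': scheduler,
          'interval': self.cfg.scheduler.step,      # step the scheduler per 'step' or per 'epoch'
          'monitor': self.cfg.scheduler.monitor}],  # metric watched by e.g. ReduceLROnPlateau
    )
```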
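`load_obj` is not shown on the slide. A minimal hypothetical version only needs `importlib`: split the dotted path into module and attribute, import the module, and return the attribute. With this in place, switching `optimizer.class_name` from `torch.optim.AdamW` to `torch.optim.SGD` in the config changes the optimizer without touching the code. The version in the author's repository may differ; the demo uses `datetime.timedelta` only so that it runs without PyTorch.

```python
import importlib
from typing import Any


def load_obj(obj_path: str) -> Any:
    """Return the object at a dotted path such as 'torch.optim.AdamW'."""
    module_path, _, obj_name = obj_path.rpartition('.')
    if not module_path:
        raise ValueError(f'Expected a dotted path like "package.module.Name", got {obj_path!r}')
    module = importlib.import_module(module_path)
    if not hasattr(module, obj_name):
        raise AttributeError(f'{obj_name!r} cannot be loaded from {module_path!r}')
    return getattr(module, obj_name)


# Config-driven construction, mirroring cfg.optimizer.class_name / cfg.optimizer.params
cfg = {'class_name': 'datetime.timedelta', 'params': {'hours': 1, 'minutes': 30}}
obj = load_obj(cfg['class_name'])(**cfg['params'])
print(obj.total_seconds())  # 5400.0
```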

My pipeline

```shell
python train.py
python train.py optimizer=sgd
python train.py model=efficientnet_model
python train.py model.encoder.params.arch=resnet34
python train.py datamodule.fold_n=0,1,2 -m
```
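With `-m` (`--multirun`), Hydra's default basic sweeper launches one job per combination of the comma-separated values (the cartesian product when several keys are swept), so `datamodule.fold_n=0,1,2 -m` trains three models, one per fold. A stdlib sketch of that expansion; `expand_sweep` is hypothetical and ignores the other sweep syntax Hydra supports, such as `range(...)`.

```python
import itertools
from typing import List


def expand_sweep(overrides: List[str]) -> List[List[str]]:
    """Turn ['a=1,2', 'b=x'] into one override list per job: [['a=1', 'b=x'], ['a=2', 'b=x']]."""
    keys, choices = [], []
    for override in overrides:
        key, values = override.split('=', 1)
        keys.append(key)
        choices.append(values.split(','))
    # Every combination of the swept values becomes a separate job
    return [
        [f'{key}={value}' for key, value in zip(keys, combo)]
        for combo in itertools.product(*choices)
    ]


jobs = expand_sweep(['datamodule.fold_n=0,1,2', 'optimizer=sgd'])
for job_id, job in enumerate(jobs):
    print(job_id, job)
```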

@hydra.main(config_path='conf', config_name='config')

def run_model(cfg: DictConfig) -> None:

os.makedirs('logs', exist_ok=True)

print(cfg.pretty())

if cfg.general.log_code:

save_useful_info()

run(cfg)

if __name__ == '__main__':

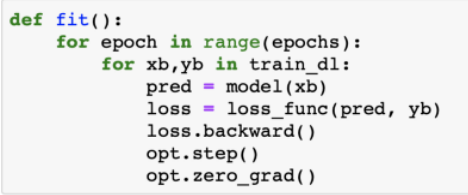

run_model()Training loop
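The `training_step` below handles mixup-style batches: when a batch carries `shuffled_target` and `lam`, the loss receives a `(target, shuffled_target, lam)` tuple. The slides don't show how those keys are produced or how `self.loss` combines them. A NumPy sketch of one common mixup formulation: draw λ from Beta(α, α), blend each input with a randomly paired input from the same batch, and blend the two losses with the same λ. `mixup_batch`, `mixup_loss` and `alpha=0.4` are hypothetical; a real pipeline would do this with PyTorch tensors.

```python
import numpy as np


def mixup_batch(images: np.ndarray, targets: np.ndarray, alpha: float,
                rng: np.random.Generator) -> dict:
    """Blend each sample with a randomly chosen partner from the same batch."""
    lam = float(rng.beta(alpha, alpha))
    perm = rng.permutation(len(images))
    return {
        'image': lam * images + (1 - lam) * images[perm],
        'target': targets,
        'shuffled_target': targets[perm],
        'lam': lam,
    }


def cross_entropy(logits: np.ndarray, targets: np.ndarray) -> float:
    """Mean cross-entropy from raw logits and integer class labels."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(targets)), targets].mean())


def mixup_loss(logits: np.ndarray, target: np.ndarray,
               shuffled_target: np.ndarray, lam: float) -> float:
    """Weight the two losses with the same lam that was used to mix the inputs."""
    return lam * cross_entropy(logits, target) + (1 - lam) * cross_entropy(logits, shuffled_target)


rng = np.random.default_rng(0)
batch = mixup_batch(np.ones((4, 3, 8, 8)), np.array([0, 1, 2, 1]), alpha=0.4, rng=rng)
logits = rng.normal(size=(4, 3))  # stand-in for model output
print(batch['lam'], mixup_loss(logits, batch['target'], batch['shuffled_target'], batch['lam']))
```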

```python
def training_step(self, batch, *args, **kwargs):  # type: ignore
    image = batch['image']
    logits = self(image)
    target = batch['target']
    # Mixing augmentations (e.g. mixup) also provide shuffled targets and the mixing weight
    shuffled_target = batch.get('shuffled_target')
    lam = batch.get('lam')
    if shuffled_target is not None:
        loss = self.loss(logits, (target, shuffled_target, lam)).view(1)
    else:
        loss = self.loss(logits, target)
    self.log('train_loss', loss,
             on_step=True, on_epoch=True, prog_bar=True, logger=True)
    for metric in self.metrics:
        score = self.metrics[metric](logits, target)
        self.log(f'train_{metric}', score,
                 on_step=True, on_epoch=True, prog_bar=True, logger=True)
    return loss
```

Reproducibility
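Seeding only helps if re-running with the same seed actually reproduces the numbers. A minimal check covers only Python's `random` and NumPy's global RNG (the `set_seed` function below also seeds PyTorch and configures cuDNN, which this sketch doesn't touch): seed, draw, reseed, draw again, and compare. `seeded_draws` is a hypothetical helper. Even with every seed fixed, some GPU operations are nondeterministic; PyTorch's `torch.use_deterministic_algorithms(True)` makes such operations raise an error instead.

```python
import random

import numpy as np


def seeded_draws(seed: int) -> tuple:
    """Seed Python and NumPy RNGs, then draw a few numbers from each."""
    random.seed(seed)
    np.random.seed(seed)
    return random.random(), np.random.rand(3).tolist()


first = seeded_draws(42)
second = seeded_draws(42)
print(first == second)  # True: same seed, same sequence
```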

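```python
import os
import random

import numpy as np
import torch


def set_seed(seed: int = 42) -> None:
    np.random.seed(seed)
    random.seed(seed)
    # Only affects subprocesses started after this call, not the running interpreter
    os.environ['PYTHONHASHSEED'] = str(seed)
    # Give up cuDNN autotuning in exchange for deterministic kernel selection
    torch.backends.cudnn.benchmark = False
    torch.backends.cudnn.deterministic = True
    torch.manual_seed(seed)
    torch.cuda.manual_seed(seed)
    torch.cuda.manual_seed_all(seed)
```

Experiment tracking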
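Hydra already saves each run's composed config and CLI overrides (`config.yaml`, `hydra.yaml`, `overrides.yaml`) in a `.hydra` folder inside the run's output directory, and dedicated tracking tools handle metrics and comparisons. A stdlib sketch of the minimum worth recording per run, so that any result can be traced back to the exact settings that produced it; `log_run`, the `run.json` file name and the metric value are hypothetical placeholders.

```python
import json
import tempfile
import time
import uuid
from pathlib import Path
from typing import Any, Dict, List


def log_run(root: Path, cfg: Dict[str, Any], overrides: List[str],
            metrics: Dict[str, float]) -> Path:
    """Save the config, CLI overrides and final metrics of one run as JSON in its own folder."""
    # Timestamp for readability plus a short random suffix so parallel runs never collide
    run_name = f"{time.strftime('%Y-%m-%d_%H-%M-%S')}_{uuid.uuid4().hex[:6]}"
    run_dir = root / run_name
    run_dir.mkdir(parents=True)
    record = {'config': cfg, 'overrides': overrides, 'metrics': metrics}
    (run_dir / 'run.json').write_text(json.dumps(record, indent=2))
    return run_dir


root = Path(tempfile.mkdtemp())
run_dir = log_run(root,
                  cfg={'optimizer': {'class_name': 'torch.optim.SGD', 'params': {'lr': 0.01}}},
                  overrides=['optimizer=sgd'],
                  metrics={'valid_loss': 0.5})  # placeholder value, not a real result
loaded = json.loads((run_dir / 'run.json').read_text())
print(loaded['overrides'])  # ['optimizer=sgd']
```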

Changing hyperparameters

```shell
python train.py optimizer=sgd
python train.py trainer.gpus=2
```

Basic functionality

- Easy to modify for a similar problem

- Make predictions

- Make predictions without the pipeline

- Changing it isn't very complicated

Useful functionality

- Configs, configs everywhere

- Templates of everything

- Training on folds and hyperparameter optimization

- Training with stages

- Using the pipeline for a variety of tasks

- Shareable code and documentation

- Various cool tricks
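For training on folds, the pipeline only needs to know which fold is held out for validation; that is the value an override like `datamodule.fold_n=0,1,2 -m` sweeps over. A stdlib sketch of the splitting logic, assuming a plain (non-stratified) random fold assignment with a fixed seed so that every run sees the same folds. `assign_folds` and `split_for_fold` are hypothetical; real projects often use scikit-learn's `KFold` or `StratifiedKFold` instead.

```python
import random
from typing import List, Tuple


def assign_folds(n_samples: int, n_folds: int, seed: int = 42) -> List[int]:
    """Give every sample a fold id in [0, n_folds), shuffled but reproducible."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # local RNG: does not touch global random state
    folds = [0] * n_samples
    for position, idx in enumerate(indices):
        folds[idx] = position % n_folds
    return folds


def split_for_fold(folds: List[int], fold_n: int) -> Tuple[List[int], List[int]]:
    """Samples in fold_n form the validation set; the rest are used for training."""
    train = [i for i, f in enumerate(folds) if f != fold_n]
    valid = [i for i, f in enumerate(folds) if f == fold_n]
    return train, valid


folds = assign_folds(n_samples=10, n_folds=3)
train_idx, valid_idx = split_for_fold(folds, fold_n=0)
print(len(train_idx), len(valid_idx))  # 6 4
```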

Links

- https://developers.google.com/machine-learning/crash-course/production-ml-system

- https://medium.com/@CodementorIO/good-developers-vs-bad-developers-fe9d2d6b582b

- https://towardsdatascience.com/the-pytorch-training-loop-3c645c56665a

- https://neptune.ai/blog/best-ml-experiment-tracking-tools

- https://github.com/Erlemar/pytorch_tempest

Contacts
