Transfer Learning

What is Transfer Learning?

Transfer learning is the improvement of learning in a new task through the transfer of knowledge from a related task that has already been learned.

http://pages.cs.wisc.edu/~shavlik/abstracts/torrey.handbook09.abstract.html

Why do we care about this?

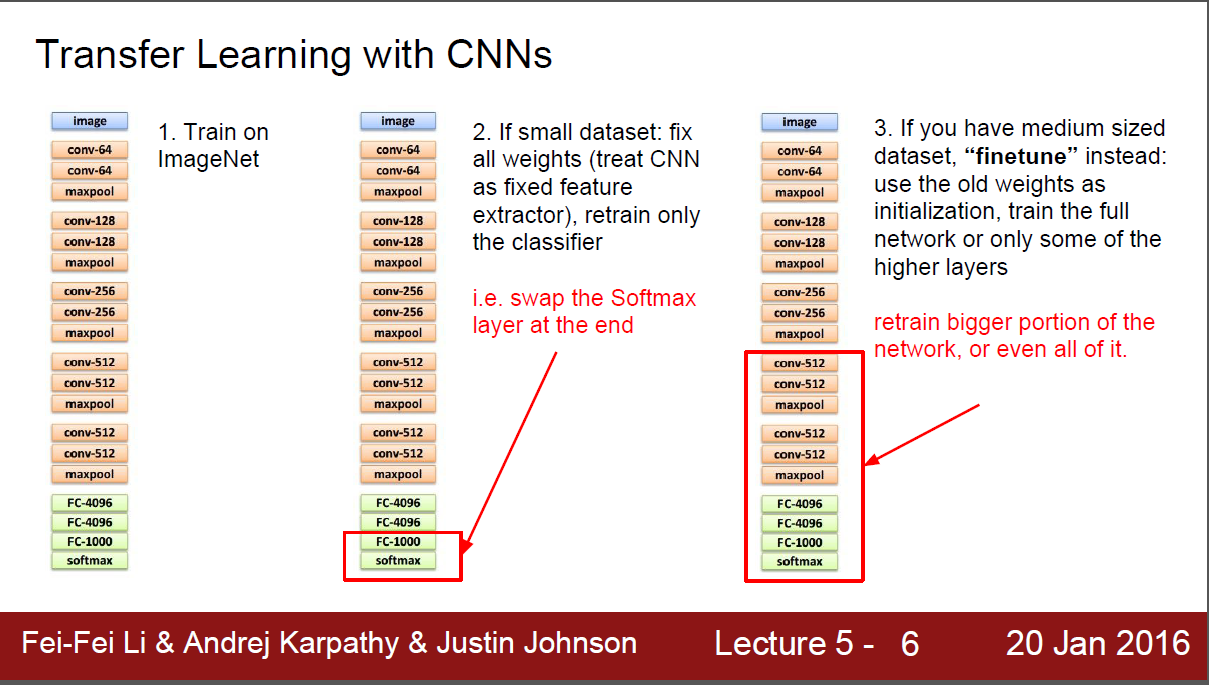

- We rarely have a dataset big enough to train a CNN from scratch

- We can take advantage of ImageNet (1.2 million images and 1000 classes)

- Reduced risk of overfitting

- Less training time

The two types

- CNN as fixed feature extractor

- Fine-tuning the CNN

Feature Extractor

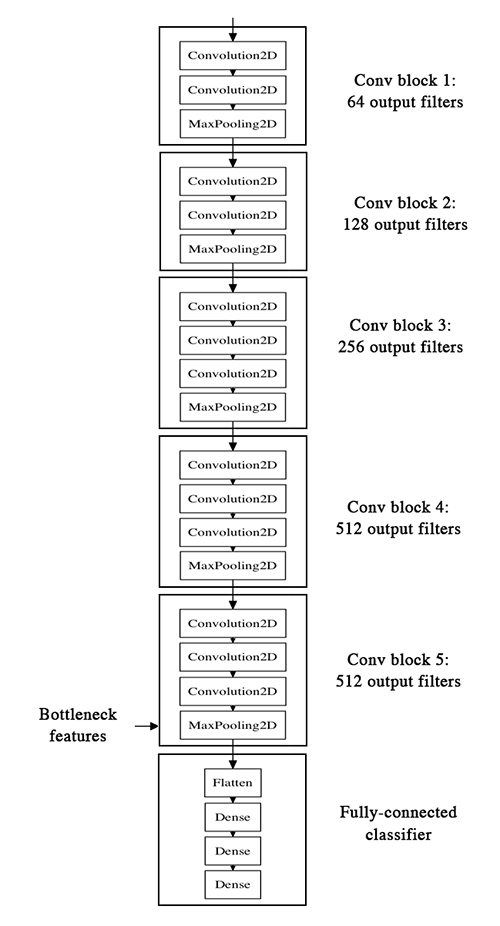

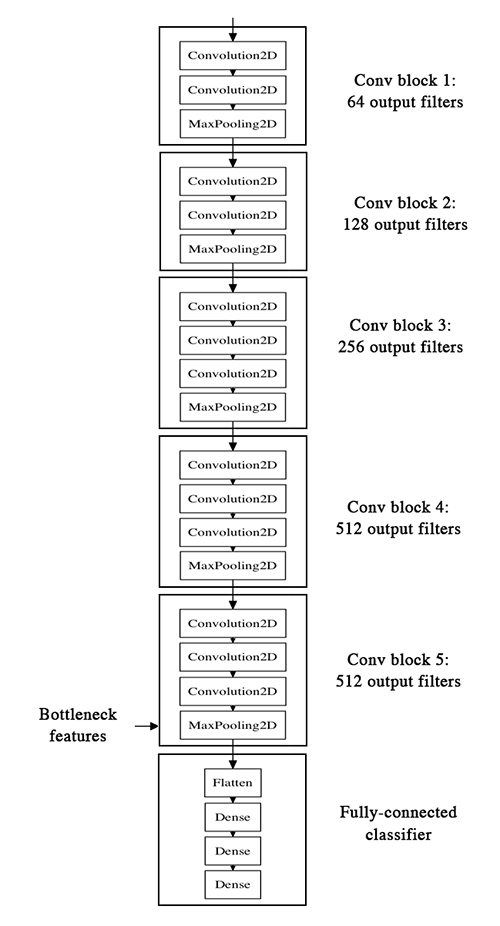

(Diagram labels: VGG16, Top Model)

Feature Extractor

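```python
from keras.applications.vgg16 import VGG16
from keras.preprocessing import image
from keras.applications.vgg16 import preprocess_input
import numpy as np

# Load VGG16 with ImageNet weights, without the fully connected top model
model = VGG16(weights='imagenet', include_top=False)

# Load the image and resize it to the 224x224 input size used by VGG16
img_path = 'elephant.jpg'
img = image.load_img(img_path, target_size=(224, 224))

# Convert to an array, add a batch dimension, and apply VGG16 preprocessing
x = image.img_to_array(img)
x = np.expand_dims(x, axis=0)
x = preprocess_input(x)

# Run the convolutional layers to extract the features
features = model.predict(x)
```

The extracted features can then be fed to a classical classifier:

- SVM(features), or
- XGBoost(features), or
- RandomForest(features)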
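Classifiers such as an SVM or a random forest usually expect one flat vector per image, so it helps to know what shape `features` has. The sketch below works through the arithmetic in plain Python, assuming the standard VGG16 layout of five 2x2 max-pooling stages and 512 channels in the last convolutional block. The helper name `vgg16_feature_shape` is hypothetical and only used for illustration.

```python
def vgg16_feature_shape(height, width, num_pools=5, channels=512):
    """Shape of the VGG16 convolutional features for one image.

    Assumes include_top=False and the standard VGG16 layout: each of the
    five 2x2 max-pooling stages halves the height and the width.
    """
    factor = 2 ** num_pools
    return (height // factor, width // factor, channels)


shape = vgg16_feature_shape(224, 224)
flat_length = shape[0] * shape[1] * shape[2]

print(shape)        # (7, 7, 512)
print(flat_length)  # 25088
```

For a 224x224 input, each image therefore becomes a vector of 25,088 values once flattened, for example with `features.reshape(features.shape[0], -1)`, before it is passed to the classifier.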

Fine-Tuning

Steps for Fine-Tuning

- Initialize the CNN with ImageNet weights, without the top model, and freeze the convolutional layers

- Create the top model (fully connected layers and output layer)

- Train the top model on the dataset

- Attach the top model to the CNN

- Fine-tune the network using a very small learning rate
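The steps above can be sketched in Keras roughly as follows. This is a minimal sketch under several assumptions that are not in the slides: the layer sizes of the top model, the learning rate of 1e-4, the SGD optimizer, and the choice to unfreeze only the last convolutional block (`block5_conv1` onward) during fine-tuning. The names `FINE_TUNE_LR`, `trainable_flags`, `build_top_model`, and `build_fine_tune_model` are hypothetical. Keras is imported inside the functions so the helpers can be defined without loading the framework.

```python
FINE_TUNE_LR = 1e-4  # assumed value for "a very small learning rate"


def trainable_flags(layer_names, unfreeze_from=None):
    """Map each layer name to True (trainable) or False (frozen).

    Layers before `unfreeze_from` stay frozen; that layer and all later
    ones become trainable. With unfreeze_from=None everything stays frozen.
    """
    flags, trainable = {}, False
    for name in layer_names:
        if name == unfreeze_from:
            trainable = True
        flags[name] = trainable
    return flags


def build_top_model(num_classes, feature_shape=(7, 7, 512)):
    """Step 2: fully connected layers plus output layer (sizes are assumed)."""
    from keras.layers import Dense, Dropout, Flatten, Input
    from keras.models import Sequential

    return Sequential([
        Input(shape=feature_shape),  # VGG16 features for a 224x224 image
        Flatten(),
        Dense(256, activation="relu"),
        Dropout(0.5),
        Dense(num_classes, activation="softmax"),
    ])


def build_fine_tune_model(top_model, unfreeze_from="block5_conv1"):
    """Steps 1, 4 and 5: VGG16 base plus trained top model, low learning rate."""
    from keras.applications.vgg16 import VGG16
    from keras.models import Model
    from keras.optimizers import SGD

    # Step 1: ImageNet weights, no top model
    base = VGG16(weights="imagenet", include_top=False,
                 input_shape=(224, 224, 3))

    # Keep the early convolutional layers frozen (assumed: only the last
    # block is unfrozen for fine-tuning)
    flags = trainable_flags([layer.name for layer in base.layers],
                            unfreeze_from)
    for layer in base.layers:
        layer.trainable = flags[layer.name]

    # Step 4: attach the already trained top model to the CNN
    model = Model(inputs=base.input, outputs=top_model(base.output))

    # Step 5: compile with a very small learning rate
    model.compile(optimizer=SGD(learning_rate=FINE_TUNE_LR, momentum=0.9),
                  loss="categorical_crossentropy", metrics=["accuracy"])
    return model
```

In this sketch, step 3 happens between the two builders: the top model returned by `build_top_model` would be compiled and fitted on features extracted by the frozen CNN, and only then passed to `build_fine_tune_model`. Training the top model first keeps large gradients from a randomly initialized classifier from disturbing the pretrained convolutional weights.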

Resources for this

- Using the CPU (Default)

- Using the GPU (Requires Nvidia graphics card and CUDA drivers)

- Using Amazon p2.xlarge instances (about 90 cents/hour and 30x faster than a traditional CPU)

GitHub repo

Transfer Learning

By Antonio Grimaldo
